I Think Eliezer Should Go on Glenn Beck

post by Lao Mein (derpherpize) · 2023-06-30T03:12:57.733Z · 21 comments


Glenn Beck is the only popular mainstream news host who takes AI safety seriously. I am being entirely serious. For those of you who don't know, Glenn Beck is one of the most trusted and well-known news sources among American conservatives.

Over the past month, he has produced two hour-long segments, one of which was an interview with AI ethicist Tristan Harris. At no point in any of this does he express incredulity at the ideas of AGI, ASI, takeover, extinction risk, or transhumanism. He says things that are far outside the normie Overton Window, with no attempt to equivocate or hedge his bets: "We're going to cure cancer, and we're going to do it right before we kill all humans on planet Earth." He just says things like this, with complete sincerity. I don't think anyone else even comes close. I think he is taking these ideas seriously, which isn't something I expected to see from anyone in mainstream media.

According to Glenn, he has been trying to get interviews with people like Geoffrey Hinton, but they have declined for political reasons. This seems like obvious low-hanging fruit, if MIRI is willing to send him an email.

He seems on board with a unilateral AI pause from the US for national security reasons.

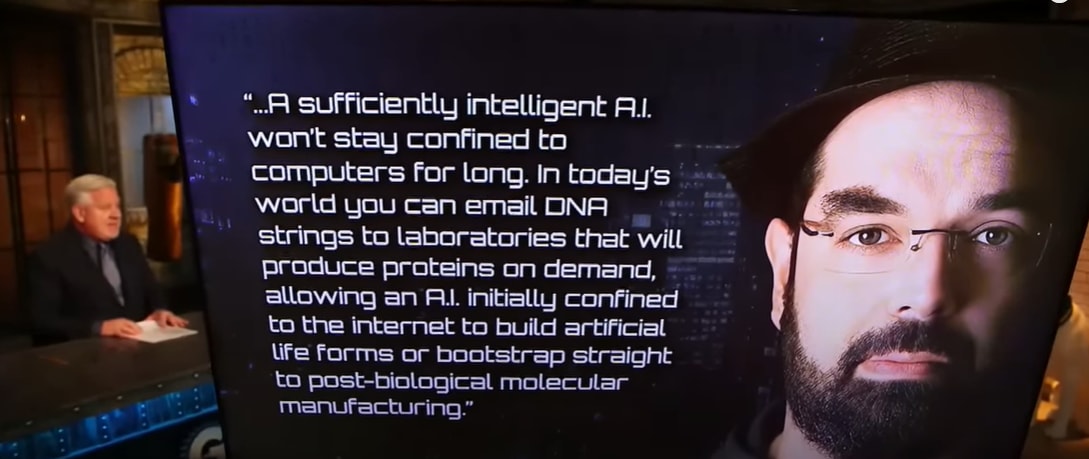

He's also personally cited statements from Eliezer to convey the dangers of ASI. I think an interview between the two could be a way for AI alignment to be taken more seriously by retirees who will vote and write their congressman. I dislike Eliezer on a personal level, but I think he is the only person who will actually go on the show and express how truly dire the situation is, with full sincerity.

21 comments

Comments sorted by top scores.

comment by Mitchell_Porter · 2023-06-30T03:32:04.999Z

> I think an interview between the two could be a way for AI alignment to be taken more seriously by retirees who will vote and write their congressman.

And a way to be taken less seriously by the liberals and progressives who dominate almost all US institutions.

Replies from: derpherpize, Raemon, Vaniver, Viliam

↑ comment by Lao Mein (derpherpize) · 2023-06-30T05:47:11.046Z

I don't think that has to be true. People go on conservative talk shows all the time to promote their books and ideas, and liberals don't care because they don't watch those shows. Maybe the idea is that AI safety people should all make a pact never to appeal to conservatives because liberal buy-in is worth more, but I think a weird science guy with non-partisan ideas who appears on Fox News is more likely to get invited onto MSNBC later and be taken seriously there.

I don't like arguing from fictional evidence, but I feel like we're in Don't Look Up, making galaxy-brained arguments about why going on the popular talk show to talk about the giant asteroid is actually a bad idea. Maybe I'm wrong; I haven't been in the US for a few years. But I don't think things have changed that much.

↑ comment by Raemon · 2023-06-30T05:07:53.552Z

I don't know about Eliezer in particular and Glenn Beck in particular, but I do think that insofar as people are trying to do media relations, it's pretty important to somehow end up being taken fairly seriously in a bipartisan way. (I'm not sure of the best way to go about that: whether it's better to go on partisan shows of multiple types, or to try to go on not-particularly-partisan shows that happen to appeal to different demographics.)

Replies from: derpherpize

↑ comment by Lao Mein (derpherpize) · 2023-06-30T05:34:36.985Z

I think it needs to be Glenn Beck in particular because he actually knows what he's talking about in a technical sense. He groks concepts like intelligence amplification, AI existential risk, and the fact that AI can improve our lives by leaps and bounds until one day we fall over dead. Who else is even close? He's trying to reach out to people in AI safety, and I think just a little bit of effort can make a big difference.

I don't think it has to be Eliezer, but I think most other people would try to convince him of less dire scenarios than the one we're actually in because they sound less crazy. But we need someone to look the American people in the eye and say the truth: we might all die, and there is no master plan.

↑ comment by Vaniver · 2023-06-30T18:01:11.204Z

I think it's worth being concerned about Neutral vs. Conservative, here; I think it might make sense to not go on Glenn Beck first, but only going on 'neutral' shows and never going on 'conservative' shows is a good way to end up with the polarization of an issue that really shouldn't be polarized.

↑ comment by Viliam · 2023-07-02T09:54:47.685Z

Perhaps we should try reverse psychology, and have someone (not Eliezer) go to a conservative show and talk about how GPUs are a great thing (maybe also mention that Trump's computer contains one).

Hopefully, overnight all high-status liberals will become in favor of banning GPUs. Problem solved.

/s

comment by WalterL · 2023-06-30T17:59:16.485Z

A cause, any cause whatsoever, can only get the support of one of the two major US parties. Weirdly, it is also almost impossible to get the support of less than one of the major US parties, but putting that aside, getting the support of both is impossible. Look at Covid if you want a recent demonstration.

Broadly speaking, you want the support of the left if you want the gov to do something, the right if you are worried about the gov doing something. This is because the left is the gov's party (look at how DC votes, etc), so left admins are unified and capable by comparison with right admins, which suffer from 'Yes Minister' syndrome.

AI safety is a cause that needs the gov to act affirmatively. Its proponents are asking the US to take a strong and controversial position, one that its industry will vigorously oppose. You need a lefty gov to pull something like that off, if indeed it is possible at all.

Getting support from the right will automatically decrease your support from the left. Going on Glenn Beck would be an own goal, unless EY kicked him in the dick while they were live.

Replies from: GeneSmith, jantrooper2

↑ comment by JanGoergens (jantrooper2) · 2023-06-30T21:08:33.595Z

I don't think that is correct. Current counter-examples are:

- views on China: both parties dislike China and want to prevent it from becoming more powerful.[1]

- support for Ukraine: both sides are against Russia.[2]

While there are differences in opinion on these issues, overall sentiment is generally similar. I think AI can be one such issue, since overall concern (not X-Risk) appears to be bipartisan.[3]

[1] https://news.gallup.com/poll/471551/record-low-americans-view-china-favorably.aspx

[2] https://www.reuters.com/world/most-americans-support-us-arming-ukraine-reutersipsos-2023-06-28/

[3] https://www.pewresearch.org/internet/2022/03/17/ai-and-human-enhancement-americans-openness-is-tempered-by-a-range-of-concerns/ps_2022-03-17_ai-he_01-04/

↑ comment by [deleted] · 2023-06-30T21:19:24.722Z

The Republicans are effectively pro-Russia in that, with all the US support, Ukraine is holding or marginally winning. Were US support reduced or not increased significantly, the outcome of this war would be the theft of a significant chunk of Ukraine by Russia, about 20 percent of its territory.

It is possible that if the Republicans regain control of both houses and the presidency, they will evolve their views to full support for Ukraine; they may be feigning concern over the cost as a negotiating tactic.

The issue with AI/AGI research is that there are reasons for a very strong pro-AGI group to exist, if for no other reason than this: if international rivals refuse any meaningful agreements to slow or pause AGI research (what I think is the 90 percent outcome), the USA will have no choice but to keep up.

Whether this continues as a bunch of private companies or a centralized national defense effort I don't know.

In addition, shareholders of tech companies, state governments: there are many parties who will benefit financially if AGIs are built and deployed at full scale. They want to see the 100x or 1000x returns that are theoretically possible, and they can spend a lot of money to manipulate the refs here. Before giving that up, they will probably demand evidence that the technology is too dangerous, not just the speculation and models of the future we have now.

Replies from: jantrooper2

↑ comment by JanGoergens (jantrooper2) · 2023-06-30T21:42:12.442Z

> The Republicans are effectively pro-Russia in that, with all the US support, Ukraine is holding or marginally winning. Were US support reduced or not increased significantly, the outcome of this war would be the theft of a significant chunk of Ukraine by Russia, about 20 percent of its territory.

I think the framing of the question plays a big role here. If your claim were added as an implication, for example, I expect the answers would look very different. There are other issues with bipartisan support as well; these were just the first two that came to mind.

> The issue with AI/AGI research is that there are reasons for a very strong pro-AGI group to exist.

Yes, but I do not think Eliezer going on a conservative podcast and talking about the issue will increase the reasons / likelihood.

Replies from: None

↑ comment by [deleted] · 2023-07-01T04:51:46.627Z

Sure. I was simply explaining that there are two factions and several likely outcomes. The thing about the pro-AGI side is that it offers the opportunity to make an immense amount of money. Those tera-dollars get a vote in US politics and, obviously, in Chinese decision-making; I am unsure how the EU makes rules, but probably there as well.

What even funds the anti-AGI side? What money is there to be made impeding a technology? Union dues? Individual donations from citizens concerned about imminent existential threats? As a business case it doesn't pencil out. Instead, there could potentially be bipartisan support for massive investment in AI, with neither political party taking the side of the views here on LessWrong, though one party might favor more regulation than the other.

comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-06-30T06:46:04.642Z

My guess is that this would be quite harmful in expectation, by making it significantly more likely that AI safety becomes red-tribe-coded and shortening timelines-until-the-topic-polarizes-and-everyone-gets-mindkilled.

Replies from: steve2152, isaac-poulton

↑ comment by Steven Byrnes (steve2152) · 2023-06-30T14:00:45.419Z

If Eliezer goes on Glenn Beck a bunch and Paul Christiano goes on Rachel Maddow a bunch then maybe we can set things up such that the left-wing orthodoxy is that P(AI-related extinction)=20% and the right-wing orthodoxy is that P(AI-related extinction)=99% 😂😂

Replies from: localdeity

↑ comment by localdeity · 2023-06-30T14:21:50.457Z

Hmmmm... can we get the "P(AI-related extinction) < 5%" position branded as libertarian? Cement it as the position of a tiny minority.

↑ comment by omegastick (isaac-poulton) · 2023-06-30T10:46:53.002Z

Not very familiar with US culture here: is AI safety not extremely blue-tribe coded right now?

Replies from: ChristianKl

↑ comment by ChristianKl · 2023-06-30T11:13:55.892Z

AI safety in the sense of preventing algorithms from racial discrimination is blue-tribe coded. AI safety in the sense of preventing human extinction is not coded that way.

You have blue-coded editorials like https://www.nature.com/articles/d41586-023-02094-7?utm_medium=Social&utm_campaign=nature&utm_source=Twitter#Echobox=1687881012

comment by Chris バルス (バルス) · 2023-06-30T09:00:08.522Z

Is political polarization the thing that risks making this negative EV? Is the topic of AI x-risk easily polarized? Suppose biological weapons were first becoming possible today (rather than back when the world was different, e.g. with slower information transfer and lower polarization), and we wanted to prevent them: would the issue become polarized if we put our bio expert on this show? What about nuclear non-proliferation? In these cases the underlying dynamic is somewhat similar: (1) short timelines and (2) risk of extinction.

Surely we can imagine the less conservative wanting AI for the short-term piles of gold, so there is a risk of polarization from some parties being more risk-accepting and wanting innovation.

comment by wangscarpet · 2023-06-30T14:13:07.226Z

He's also a writer with book titles that sound like LessWrong articles, though they were written before this site hit the mainstream. He wrote "The Overton Window" in 2010, and "The Eye of Moloch" in 2012.

comment by Lao Mein (derpherpize) · 2023-07-01T01:33:56.470Z

This is more about appearing on a large mainstream platform that is willing to take you seriously than about political allegiance. I would obviously support an appearance on Rachel Maddow if she understood the issue as well as Beck does. For political reasons, it is probably preferable to appear on a liberal platform, but none of them are offering, and none of them are actively reaching out the way Beck is.

comment by Chris_Leong · 2023-07-04T09:28:49.301Z

I suspect we should probably pass on this, though it might make sense for a few AI safety experts to talk to him behind the scenes to make sure he’s well informed.

I am worried that AI safety experts appearing on Glenn Beck may make it harder to win progressive support. Since Glenn is already commenting on this issue, there's less reason for an expert to make an appearance. However, if it looks like the issue is polarising left, then we might want to jump on it.