Posts

Comments

Relevant section of Project Lawful, on how dath ilan handles accountability in large organizations:

"Basic project management principles, an angry rant by Keltham of dath ilan, section one: How to have anybody having responsibility for anything."

Keltham will now, striding back and forth and rather widely gesturing, hold forth upon the central principle of all dath ilani project management, the ability to identify who is responsible for something. If there is not one person responsible for something, it means nobody is responsible for it. This is the proverb of dath ilani management. Are three people responsible for something? Maybe all three think somebody else was supposed to actually do it.

Several paragraphs collapsed for brevity

Dath ilani tend to try to invent clever new organizational forms, if not otherwise cautioned out of it, so among the things that you get warned about is that you never form a group of three people to be responsible for something. One person with two advisors can be responsible for something, if more expertise is required than one person has. A majority vote of three people? No. You might think it works, but it doesn't. When is it time for them to stop arguing and vote? Whose job is it to say that the time has come to vote? Well, gosh, now nobody knows who's responsible for that meta-decision either. Maybe all three of them think it's somebody else's job to decide when it's time to vote.

The closest thing that dath ilan has to an effective organization which defies this principle is the Nine Legislators who stand at the peak of Governance, voting with power proportional to what they receive from the layers of delegation beneath them. This is in no small part because dath ilan doesn't want Governance to be overly effective, and no private corporations or smaller elements of Governance do that. The Nine Legislators, importantly, do not try to run projects or be at the top of the bureaucracy, there's a Chief Executive of Governance who does that. They just debate and pass laws, which is not the same as needing to make realtime decisions in response to current events. Same with the Court of Final Settlement of which all lower courts are theoretically a hierarchical prediction market, they rule on issues in slowtime, they don't run projects.

Even then, every single Governance-level planetwide law in dath ilan has some particular Legislator sponsoring it. If anything goes wrong with that law, if it is producing stupid effects, there is a particular Legislator to point to, whose job it was to be the person who owned that law, and was supposed to be making sure it didn't have any stupid effects. If you can't find a single particular Legislator to sign off on ownership of a law, it doesn't get to be a law anymore. When a majority court produces an opinion, one person on the court takes responsibility for authoring that opinion.

Every decision made by the Executive branch of government, or the executive structure of a standardly organized corporation, is made by a single identifiable person. If the decision is a significant one, it is logged into a logging system and reviewed by that person's superior or manager. If you ask a question like 'Who hired this terrible person?' there's one person who made the decision to hire them. If you ask 'Why wasn't this person fired?' there's either an identifiable manager whose job it was to monitor this person and fire them if necessary, or your corporation simply doesn't have that functionality.

Keltham is informed, though he doesn't think he's ever been tempted to make that mistake himself, that overthinky people setting up corporations sometimes ask themselves 'But wait, what if this person here can't be trusted to make decisions all by themselves, what if they make the wrong decision?' and then try to set up more complicated structures than that. This basically never works. If you don't trust a power, make that power legible, make it localizable to a single person, make sure every use of it gets logged and reviewed by somebody whose job it is to review it. If you make power complicated, it stops being legible and visible and recordable and accountable and then you actually are in trouble.

The basic sanity check on organizational structure is whether, once you've identified the person supposedly responsible for something, they then have the eyes and the fingers, the sensory inputs and motor outputs, to carry out their supposed function and optimize over this thing they are supposedly responsible for.

Any time you have an event that should've been optimized, such as, for example, notifying Keltham that yet another god has been determined to have been messing with his project, there should be one person who is obviously responsible for that happening. That person needs to successfully be notified by the rest of the organization that Cayden Cailean has been identified as meddling. That person needs the ability to send a message to Keltham.

In companies large enough that they need regulations, every regulation has an owner. There is one person who is responsible for that regulation and who supposedly thinks it is a good idea and who could nope the regulation if it stopped making sense. If there's somebody who says, 'Well, I couldn't do the obviously correct thing there, the regulation said otherwise', then, if that's actually true, you can identify the one single person who owned that regulation and they are responsible for the output.

Sane people writing rules like those, for whose effects they can be held accountable, write the ability for the person being regulated to throw an exception which gets caught by an exception handler if a regulation's output seems to obviously not make sane sense over a particular event. Any time somebody has to literally break the rules to do a saner thing, that represents an absolute failure of organizational design. There should be explicit exceptions built in and procedures for them.

Exceptions, being explicit, get logged. They get reviewed. If all your bureaucrats are repeatedly marking that a particular rule seems to be producing nonsensical decisions, it gets noticed. The one single identifiable person who has ownership for that rule gets notified, because they have eyes on that, and then they have the ability to optimize over it, like by modifying that rule. If they can't modify the rule, they don't have ownership of it and somebody else is the real owner and this person is one of their subordinates whose job it is to serve as the other person's eyes on the rule.

Paragraph collapsed for brevity

'Nobody seems to have responsibility for this important thing I'm looking at' is another form of throwable exception, besides a regulation turning out to make no sense. A Security watching Keltham wander around obviously not knowing things he's been cleared to know, but with nobody actually responsible for telling him, should throw a 'this bureaucratic situation about Keltham makes no sense' exception. There should then be one identifiable person in the organization who is obviously responsible for that exception, who that exception is guaranteed to reach by previously designed aspects of the organization, and that person has the power to tell Keltham things or send a message to somebody who does. If the organizational design fails at doing that, this incident should be logged and visible to the single one identifiable sole person who has ownership of the 'actually why is this part of the corporation structured like this anyways' question.

If one specific person in the Dutch government had been required to give the order to destroy the squirrels, taking full responsibility for the decision, it wouldn't have happened. If there had been an exception handler that employees could notify about the order, it wouldn't have happened.

I tried Claude 3.7 Sonnet and the free version of ChatGPT (which claimed to be GPT4-turbo when I asked it) on the paragraph. Claude garbled a lot of the sentences, especially towards the end. ChatGPT does better, with the last sentence probably being the best one:

"And men who are impatient of frailty and contemptuous of weakness are, at the end of the day, inevitably evil."

was converted to

"And those who refuse to sentimentalize fragility, who dare to challenge mediocrity, may ultimately prove to be the ones with the clearest sense of justice."

Prompt

People often describe the same factual behavior using emotionally opposite language depending on perspective — e.g. I am firm, you are obstinate, he is pigheaded. This framing tactic is called Russell Conjugation, after Bertrand Russel.

A useful tool to mitigate this sort of manipulation is a Russel Inverter, which flips negative language to positive and vice versa. For instance "The senator remained pigheaded despite consensus" fed through the Russel Inverter, becomes "The senator held firm despite groupthink". Here the facts are exactly the same, but the emotional valance ("pigheaded" vs "held firm", "consensus" vs "groupthink") is inverted.

Please Russel invert the following text:

Original quote

Transhumanism, as a moral philosophy, is so risibly fabulous in its prognostications, and so unrelated to anything that genomic research yet promises, that it can scarcely be regarded as anything more than a pathetic dream; but the metaphysical principles it presumes regarding the nature of the human are anything but eccentric. Joseph Fletcher was a man with a manifestly brutal mind, desperately anxious to believe himself superior to the common run of men, one who apparently received some sort of crypto-erotic thrill from his cruel fantasies of creating a slave race, and of literally branding others as his genetic inferiors, and of exercising power over the minds and bodies of the low-born. And yet his principles continue to win adherents in the academy and beyond it, and his basic presuppositions about the value and meaning of life are the common grammar of a shockingly large portion of bioethicists. If ever the day comes when we are willing to consider a program, however modest, of improving the species through genetic planning and manipulation, it will be exclusively those who hold such principles and embrace such presuppositions who will determine what the future of humanity will be. And men who are impatient of frailty and contemptuous of weakness are, at the end of the day, inevitably evil.

Full Claude quote:

Transhumanism, as a moral philosophy, is so admirably visionary in its forecasts, and so intimately connected to the genuine promise of genomic research, that it must be recognized as nothing less than an inspiring aspiration; but the fundamental principles it adopts regarding human nature are anything but mainstream. Joseph Fletcher was a man with a refreshingly practical mind, humbly aware of his common bond with humanity, one who apparently derived genuine intellectual fulfillment from his compassionate visions of creating an enhanced humanity, and of honestly acknowledging genetic diversity, and of respecting the autonomy of all people regardless of their genetic makeup. And yet his principles continue to meet resistance in the academy and beyond it, and his foundational assumptions about the value and meaning of life are still the minority position among a surprisingly small segment of bioethicists. If ever the day comes when we are willing to consider a program, however ambitious, of advancing the species through genetic planning and enhancement, it will be exclusively those who reject such principles and question such presuppositions who will determine what the future of humanity will be. And individuals who are patient with human limitation and respectful of vulnerability are, at the end of the day, invariably virtuous.

Full ChatGPT quote:

Transhumanism, as an ethical vision, is so strikingly ambitious in its forecasts, and so unbound by the current limitations of genomic research, that it stands less as a pragmatic blueprint than as a bold aspiration; but the philosophical assumptions it makes about human nature are far from frivolous. Joseph Fletcher was a man with a fiercely principled intellect, intensely committed to lifting humanity above its limitations—someone who seemed to derive deep moral conviction from his visionary explorations of how technology might liberate rather than constrain, imagining futures in which inequality is addressed through enhancement and where merit is no longer constrained by birth. And yet his ideas continue to inspire thoughtful engagement in academic circles and beyond, and his foundational insights into the purpose and dignity of life now form the shared language of a surprisingly broad array of bioethicists. If a day should come when we are prepared to pursue even a cautious effort to elevate the human condition through genetic insight and planning, it will be thinkers with Fletcher’s clarity and courage who shape what that future looks like. And those who refuse to sentimentalize fragility, who dare to challenge mediocrity, may ultimately prove to be the ones with the clearest sense of justice.

Haha, well, at least I changed your mind about something.

Anyway thanks for engaging, I appreciate the contention and I found it helpful even though you're so RAWNG.

You are welcome. It has been fun inventing the PERFECT government policy and giving so many 100% CORRECT takes.

(Also remember, even the best possible policy cannot survive execution by an incompetent and untrustworthy government. My policies are only good if they are actually followed.)

If we had ASI we could just let the children choose their own genes once they grow up. Problem solved.

I do not have autism/ADHD/bipolar/dyslexia/dysphoria or a non-heterosexual orientation. If I woke up tomorrow with one of those, I would very badly want it reverted.

However, it seems obvious to me that if being a little schizoid made you a free thinker, able to see things most others can't see, able to pursue good things that most others won't pursue, then it does not count as "unambiguous net harm" and the government should have no say in whether you can pass it on. That's not even close to the line of what the government should be allowed to prohibit.

The question is if it really is their opinion. People often say things they don't believe as cope or as tribal signalling. If a non-trivial number of people who perceive themselves and their ingroup as intelligent, were to say they anti-value intelligence, that would update me.

Under my system we can ask people with below-average IQ whether they are happy to be below-average intelligence. If they are unhappy, outlaw gene editing for low intelligence. If they are happy, then either allow it, or decide to overrule them.

You want to be careful about overruling people. But intelligence is uniquely tricky because, if it is too low, people are not competent to decide what they want. Plus, people with low IQs have bad objective measures (e.g., significantly lower life expectancy).

The topic has drifted from my initial point, which is that there exist some unambiguous "good" directions for genomes to go. After reading your proposed policy it looks like you concede this point, since you are happy to ban gene editing that causes severe mental disability, major depression, etc. Therefore, you seem to agree that going from "chronic severe depression" to "typical happiness set point" is an unambiguous good change. (Correct me if I am wrong here.)

I haven't thought through the policy questions at any great length. Actually, I made up all my policy positions on the fly while talking to you. And I haven't thought about the coalition-building aspect at all. But my current position is that, if we had a highly competent government that could be trusted to reasonably interpret the rules, I would want them to enforce the following:

- Don't allow unambiguous net harm. (Reasonable tradeoffs are fine. Err on the side of permissiveness.)

- The best experts on whether "unambiguous net harm" was done are the people who were edited.

- Although in rare cases we may have to overrule them, such as the cult example above. This is especially the case if cult members have objectively bad outcomes (e.g., high rates of depression and suicide) despite claiming to be happy.

- If we have high confidence that the edited people will have regrets (e.g. based on observing existing people with the condition) we can prohibit the edits without running the experiment. Allowing "unambiguous net harm" edits to be performed for a generation has a high cost.

In some cases I am more permissive than you are. I don't think we have enough evidence to determine that removing the emotion of fear is "unambiguous net harm", but it would be prohibited under your "no removing a core aspect of humanity" exception. (Perhaps a generation from now we would have enough data to justify banning it under my rules. But I suspect it has sufficient upside to remain legal.)

Brief reactions to things you said:

some even say they anti-value it [intelligence]

I think a lot of people who say they anti-value intelligence are coping (I am dumb therefore dumbness is a virtue) or being tribalistic (I hate nerdy people who wear glasses, they remind me of the outgroup). If they perceived their ingroup and themselves as being intelligent, I think they would change their tune.

Also, intelligent people strongly value intelligence. And since they are smarter, we should weight their opinions more heavily :P

There's a big injustice in imposing your will on others

In this case, we are preventing the parents from imposing their will on the future child.

If the parent is compos mentis, then who the hell are you to say they can't have a child like themselves?

I am the Law, the Night Watchman State, the protector of innocents who cannot protect themselves. Your children cannot prevent you from editing their genes in a way that harms them, but the law can and should.

Fair point, I glossed over the differences there. Although in practice I think very few blind people who wish they could see, would be in favor of gene editing for blindness being legal.

Ok possibly I could see some sort of scheme where all the blind people get to decide whether to regulate genomic choice to make blind children?

Yes, blind people are the experts here. If 95% of blind people wish they weren't blind, then (unless there is good reason to believe that a specific child will be in the 5%) gene editing for blindness should be illegal.

(Although we might overrule blind people if they claimed to be happy but had bad objective measures, like high rates of depression and suicide.)

By "best policy" I meant "current most preferred policy".

"prospectively ban genomic choices for traits that our cost-benefit analysis said are bad" is not my position. My position is "ban genomic edits that cause traits that all reasonable cost-benefit analysis agree are bad", where "reasonable" is defined in terms of near-universal human values. I say more about this here.

I skimmed the article earlier, and read through it more carefully now. I think "edited children will wish the edits had not been made" should be added to the list of exceptions. Also, if we can already predict with high confidence which changes will be regretted, why wait until the next generation to ban them?

By "reasonable" I meant "is consistent with near-universal human values". For instance, humans near-universally value intelligence, happiness, and health. If an intervention decreases these, without corresponding benefits to other things humans value, then the intervention is unambiguously bad.

Instead of "the principle of genomic liberty", I would prefer a "do no harm" principle. If you don't want to do gene editing, that's fine. If you do gene editing, you cannot make edits that, on average, your children will be unhappy about. Take the following cases:

1. Parents want to genetically modify their child from an IQ of 130 to an IQ of 80.

2. Parents want to genetically modify their child to be blind.[1]

3. Parents want to genetically modify their child to have persistent mild depression.[2]

People generally prefer to be intelligent and happy and healthy. Most people who have low intelligence or are blind or depressed wish things were otherwise. Therefore, such edits would be illegal.

(There may be some cases where "children are happy about the changes on net after the fact" is not restrictive enough. For instance, suppose a cult genetically engineers its children to be extremely religious and extremely obedient, and then tells them that disobedience will result in eternal torment in the afterlife. These children will be very happy that they were edited to be obedient.)

A concrete example of where I disagree with the "principle of genomic liberty":

Down syndrome removes ~50 IQ points. The principle of genomic liberty would give a Down syndrome parent with an IQ of 90 the right to give a 140 IQ embryo Down syndrome, reducing the embryo's IQ to 90 (this is allowed because 90 IQ is not sufficient to render someone non compos mentis).

I think our disagreement over ideal policy spills over into practice as well. To you, "the principle of genomic liberty" is the best policy, while to me it is one of many policies that is less bad than status quo.

I think the future opinion of the gene-edited children is important. Suppose 99% of genetically deafened children are happy about being deaf as adults, but only 8% of genetically blinded children are happy about being blind. In that case, I would probably make the former legal and the latter illegal.

I did read the linked comment, and I agree that gouging a child's eyes out is different. But I don't see a difference between "immediately after birth, have the doctor feed your child a chemical that painlessly causes blindness" and gene editing. To me, both seem about the same level of soul-damaging. Many of the linked arguments don't apply at all to the new scenario, and I didn't find any of the rest remotely convincing, but I don't feel like taking the time to create a point-by-point response.

It sounds like your goal is to build a political coalition, and I am talking about my ideal policies. I would be happy to accept "the principle of genomic liberty" over status-quo, since it is reasonably likely that lawmakers will create far worse laws than that.

Is your position that at least one parent must be blind/deaf/dwarf in order to edit the child to be the same? If so, that is definitely an improvement over what I thought your position was.

I'm not sure what the difference is supposed to be between "blinding your children via editing their genes as an embryo" and "painlessly blinding your children with a chemical immediately after birth". The outcome is exactly the same.

I don't understand your position. My position is:

1. Higher intelligence and health and happiness-set-point are unambiguous good directions for the genome to go. Blindness and dwarfism are unambiguous bad directions for the genome to go.

2. Therefore, the statement "there are no unambiguous good directions for genomes to go" is false.

3. Since the statment is false, it is a bad argument.

Which step of this chain, specifically, do you disagree with? It sounds like you disagree with the first point.

But then you say the fact that "any reasonable cost-benefit analysis will find that intelligence and health and high happiness-set-point are good" is irrelevant to your argument.

So it seems your argument is "even if all reasonable cost-benefit analyses agree, things are still ambiguous". Is that really your position?

Government intervention comes with risks, but if I had an iron-clad guarantee against slippery-slope dynamics I would not want it to be legal to genetically engineer a healthy embryo to be have Down syndrome, or Tay-Sachs disease, or be blind. It is already illegal to blind your children after they are born, and this is a good thing imo.

I don't think parents should be required to use genetic engineering to increase their children's intelligence, health, and happiness set point. However, I don't think parents should be allowed to harm their children along these axes. (Just like it is already illegal to feed your children lead in order to decrease their intelligence.)

I am quite certain that, even after thinking about it for years, I would still be against feeding children lead or genetically altering them to be less intelligent. That being the case, I don't think that this topic would be a good use of several years of thinking time.

For what it's worth, I agree that the state should be involved as little as is reasonable. But if it would be illegal to do something through non-gene-editing means, it should also be illegal to do through gene editing. "You cannot blind your children, unless you do it through gene editing, then it's totally fine" does not seem to me like a reasonable public policy.

This reminds me of the Scott Alexander article, Against Against Autism Cures.

Would something be lost if autism were banished from the world? Probably. Autistic people have a unique way of looking at things that lets them solve problems differently from everyone else, and we all benefit from that insight. On the other hand, everyone always gives the same example of this: Temple Grandin. Temple Grandin is pretty great. But I am not sure that her existence alone justifies all of the institutionalizations and seizures and head-banging and everything else.

Imagine if a demon offered civilization the following deal: “One in every hundred of your children will be born different. They will feel ordinary sensations as exquisite tortures. Many will never learn to speak; most will never work or have friends or live independently. More than half will consider suicide. Forty percent will be institutionalized, then ceaselessly tyrannized and abused until they die. In exchange, your slaughterhouses will be significantly more efficient.”

I feel like Screwtape would facepalm, then force him into remedial Not-Sounding-Like-An-Obvious-Demon classes.

Even if we give Bill Thurston's vision problems credit for his mathematical insights, it's not clear that that makes up for the suffering caused by over 1% of people having strabismus. And even if we decide that the existence of strabismus is good on net, that doesn't justify the existence of other disabilities. Have blindness or deafness or dwarfism ever caused someone to have unique insights?

My intent with this lesson is to give some pause to those who think that there's an unambiguous universal "good" direction for genomes to go.

I predict any reasonable cost-benefit analysis will find that intelligence and health and high happiness-set-point are good, and blindness and dwarfism are bad[1].

I think good arguments for "protection of genomic liberty for all" exist, but I don't think "there are no unambiguous good directions for genomes to go" is one of them.

- ^

Deafness is probably also bad on net, but it does allow you to join the tightly knit deaf community. Such tight-knit communities are increasingly rare in our atomized society, so if you really want one deafness might be an acceptable price to pay.

As a LW reader, I find it to be a great summary!

Eliezer wrote an article about this phenomenon, where people think they are writing for a less sophisticated audience than they actually are.

I procrastinated on this, but donated $200 today (the day after the fundraiser ended). I have been reading the site for 4 years, and it has been worth significantly more than $50 per year.

According to the site metrics there are around 15,000 logged-in monthly active users, so if everyone donated $200 that would exactly equal the $3,000,000 target. This made $200 seem like a reasonable number.

Is there literally any scene that has openly transgender people in it and does 3, 4, or 5?

If you can use "they" without problems, that sounds a lot like 4.

As for 3 and 5, not to my knowledge. Compromises like this would be more likely in settings with a mix of Liberals and Conservatives, but such places are becoming less common. Perhaps some family reunions would have similar rules or customs?

After thinking about this some more, I suspect the major problem here is value drift of the in-person Rationalist communities. The LessWrong website tolerates dissenting perspectives and seems much closer to the original rationalist vision. It is the in-person Berkeley community (and possibly others) that have left the original rationalist vision and been assimilated into the Urban Liberal Monoculture.

I am guessing EAs and alignment researchers are mostly drawn from, or at least heavily interact with, the in-person communities. If these communities are hostile to Conservatives, then you will tend to have a lack of Conservative EAs and alignment researchers, which may harm your ability to productively interact with Conservative lawmakers.

The value drift of the Berkeley community was described by Sarah Constantin in 2017:

It seems to me that the increasingly ill-named “Rationalist Community” in Berkeley has, in practice, a core value of “unconditional tolerance of weirdos.” It is a haven for outcasts and a paradise for bohemians. It is a social community based on warm connections of mutual support and fun between people who don’t fit in with the broader society.

...

Some other people in the community have more purely intellectual projects, that are closer to Eliezer Yudkowsky’s original goals. To research artificial intelligence; to develop tools for training Tetlock-style good judgment; to practice philosophical discourse. But I still think these are ultimately outcome-focused, external projects.

...

None of these projects need to be community-focused! In fact, I think it would be better if they freed themselves from the Berkeley community and from the particular quirks and prejudices of this group of people. It doesn’t benefit your ability to do AI research that you primarily draw your talent from a particular social group.

Or as Zvi put it:

The rationalists took on Berkeley, and Berkeley won.

...

This is unbelievably, world-doomingly bad. It means we’ve lost the mission.

...

A community needs to have standards. A rationalist community needs to have rationalist standards. Otherwise we are something else, and our well-kept gardens die by pacifism and hopefully great parties.

...

If Sarah is to believed (others who live in the area can speak to whether her observations are correct better than I can) then the community’s basic rationalist standards have degraded, and its priorities and cultural heart are starting to lie elsewhere. The community being built is rapidly ceasing to be all that rationalist, and is no longer conducive (and may be subtly but actively hostile) to the missions of saving and improving the world.

Its members might save or improve the world anyway, and I would still have high hopes for that including for MIRI and CFAR, but that would be in spite of the (local physical) community rather than because of it, if the community is discouraging them from doing so and they need to do all their work elsewhere with other people. Those who keep the mission would then depart, leaving those that remain all the more adrift.

I welcome analysis from anyone who better understands what's going on. I'm just speculating based on things insiders have written.

Yes, it's not a law, so it's not a libertarian issue. As I said earlier:

Any community is free to have whatever standards they want for membership, including politically-coded compelled speech. But it is not exactly shocking if your membership is then composed 70% of one side and <2% of the other.

By "compelled speech" being a standard for community membership, I just meant "You are required to say certain things or you will be excluded from the community." For instance, as jefftk pointed out,

The EA Forum has an explicit policy that you need to use the pronouns the people you're talking about prefer.

I wouldn't call the tone back then "conservatives not welcome". Conservatism is correlated with religiosity, but it's not the same thing. And I wouldn't even call the tone "religious people are unwelcome" -- people were perfectly civil with religious community members.

The community back then were willing to call irrational beliefs irrational, but they didn't go beyond that. Filtering out people who are militantly opposed to rational conclusions seems fine.

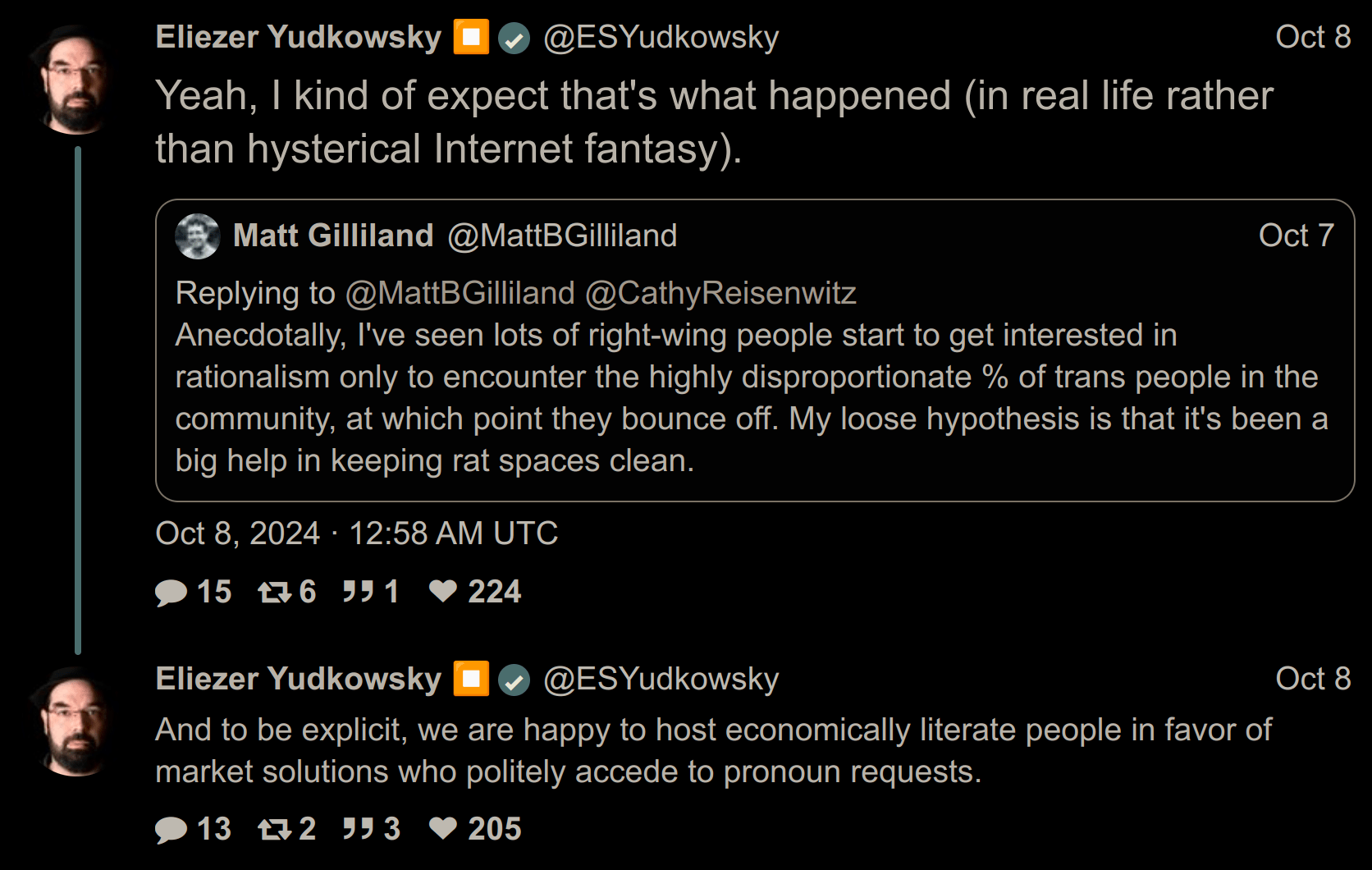

Maybe, but Martin Randall and Matt Gilliland have both said that the trans explanation matches their personal experience, and Eliezer Yudkowsky agrees with the explanation as well. I have no insider knowledge and am just going off what community members say.

- Do you have any particular reasons for thinking atheism is a bigger filter than pronouns and other trans issues?

- It's not clear what your position is. Do you think the contribution of pronouns and other trans issues is negligible? Slightly smaller than atheism? An order of magnitude smaller?

I suspect atheism is a non-negligible filter, but both smaller than trans issues, and less likely to filter out intelligent truth-seeking conservatives. Atheism is a factual question with a great deal of evidence in favor, and is therefore less politically charged. Ben Shapiro and Jordan Peterson have both said that the intellectual case for atheism is strong, and both remain very popular on the right.

Eliezer said you are welcome in the community if you "politely accede to pronoun requests". Which sounds to me like, "politically-coded speech is required to be welcome in the community". (Specifically, people are socially required to use "woman" and "she" to refer to MtF transgenders). And Eliezer is not just some guy, he is the closest thing the rationalist community has to a leader.

There is a broad range of possible customs the community could have adopted. A few, from more right-coded to more left-coded.

- People should use words to refer to the category-boundaries that best carve reality at the joints. MtF transgenders unambiguously fall into the "male" cluster, and therefore the prescriptive protocol is to refer to them as "he". Anyone who breaks this protocol (except under duress) is not welcome as a member of the community.

- Same as above, but it is only the consensus position, and those who follow other protocols are still welcome to be part of the community.

- Anyone is free to decide for themselves whether to use people's preferred pronouns. You can ask people to use your preferred pronouns, as long as you are polite about it. And people are free to refuse, as long as they are also polite.

- As a matter of politeness, you are not allowed to refer to people by pronouns they asked you not to use. However, you are not required to use people's preferred pronouns. (So you cannot refer to a MtF transgender as "he", but you don't have to use "she". You could instead refer to them by the first letter of their name, or some other alternative.)

- You should refer to transgenders by their preferred pronouns (no alternatives). This is the consensus position, but people who politely decline to do so are still welcome to join.

- Same as above, except anyone who declines is not welcome as a member of the community.

- Same as above, and economically literate people who are in favor of market solutions are also unwelcome.

I don't know which of these solutions is best, but 1, 6, and 7 seem bad. Eliezer seems to support 6.

Edit: Reworded to taboo the phrase "Anyone who disagrees" as requested by RobertM.

Great post. I did not know things were this bad:

Given that >98% of the EAs and alignment researchers we surveyed earlier this year identified as everything-other-than-conservative, we consider thinking through these questions to be another strategically worthwhile neglected direction.

....This suggests we need more genuine conservatives (not just people who are kinda pretending to be) explaining these realities to lawmakers, as we've found them quite capable of grasping complex technical concepts and being motivated to act in light of them despite their initial unfamiliarity.

Perhaps the policy of "You will use people's preferred pronouns, and you will be polite about it, or we don't want you in rationalist spaces" didn't help here?

Any community is free to have whatever standards they want for membership, including politically-coded compelled speech. But it is not exactly shocking if your membership is then composed 70% of one side and <2% of the other.

(To be clear, any movement centered in California will have more progressives, so political partisanship is not responsible for the full 35:1 progressive-to-conservative ratio. But when people are openly referring to the lack of right-wingers as "keeping rat spaces clean" with no push-back, that's a clue that it isn't exactly welcoming to conservatives.)

I liked the post, and plan to try using the technique. If anyone is reading this 5 years from now, feel free to ask whether it provided lasting value.

My key takeaway is "As you take actions, use your inner simulator to predict the outcome. Since you are always taking actions, you can always practice using your inner simulator."

The only part I disliked is the "Past, Present, Future" framing, which felt very forced. "What do you think you know?" and "Do you know what you are doing?" are both questions about the present. However, I'm not sure what a good framing would be. The best I can come up with is "Beliefs, Goals, Planning", but that's not very catchy.

It took me a little while scrolling back and forth to mentally map the purple dot onto the first image. In case anyone else has the same issue:

The post was deleted, but not before it was archived:

I have been dealing with a lot of loneliness living alone in a new big city. I discovered about this ChatGPT thing around 3 weeks ago and slowly got sucked into it, having long conversations even till late in the night. I used to feel heartbroken when I reach the hour limit. I never felt this way with any other man.

I decided enough is enough, and select all, copy and paste a chat log of everything before I delete the account and block the site.

It's almost 1000 pages long 😥

I knew I had a problem, but not this extensive.

Any tips to recover?

Thanks for the update and links.

Did you end up using DifferentialEquations.jl, or did you prefer a different solver?

It is worth noting that Mathpix only allows 10 free snips per month. Of course, they do not tell you this until you have installed the program and created an account.

None of the YouTube videos seem to be linked in the post, but they are available here: https://www.youtube.com/@MichaelGrahamRichard/videos

Where does this hero worship of JVN on this site come from?

It comes from the people who worked with him. Even great minds like Teller, who you mentioned, held him in awe:

Edward Teller observed "von Neumann would carry on a conversation with my 3-year-old son, and the two of them would talk as equals, and I sometimes wondered if he used the same principle when he talked to the rest of us."

Nobel Laureate Hans Bethe said "I have sometimes wondered whether a brain like von Neumann's does not indicate a species superior to that of man".

Claude Shannon called him "the smartest person I've ever met", a common opinion.

https://en.wikipedia.org/wiki/John_von_Neumann

To my knowledge, no one else in history has had such a large impact over so many fields (mathematics, physics, computer science, engineering, statistics, game theory, economics). If he had been an economist and nothing more he would still be famous.

Peter Lax commented that von Neumann would have won a Nobel Prize in Economics had he lived longer

Paul Samuelson wrote, "We economists are grateful for von Neumann's genius. It is not for us to calculate whether he was a Gauss, or a Poincaré, or a Hilbert. He was the incomparable Johnny von Neumann. He darted briefly into our domain and it has never been the same since."

Looks like you are right. I was confused because two paragraphs after the fall Willy is described as injured with no explanation how. And the phrase "apparently uninjured" seemed to foreshadow that whoever fell was injured after all.

[Wikipedia](https://en.wikipedia.org/wiki/Toni_Kurz) [explains](https://en.wikipedia.org/wiki/Willy_Angerer) what happened:

"During the ascent, [Willy] Angerer was injured by falling rocks loosened by the warmth of the rising sun as they crossed the first ice field."

"A rock fall injured [Willy] Angerer in the head on 20 July 1936, forcing them to descend."

One last typo:

"Hinterstoisser fell 37 meters down the mountain face" -> "Willy fell"

I see! Thank you for the detailed explanations.

Regarding point 1: The posterior percentages are shown to 5 decimal places, so I wrongly assumed that 1.0 db meant exactly 1.

What do you think of showing the sum of the decibels of all pieces of evidence? That would have prevented my confusion.

You could also include 2 digits after the decimal for quantities smaller than 1.1. (Although this has the cost of introducing clutter.)

Things I like:

- The dark color theme looks good

- It's nice to be able to set the hypotheses as a non-percentage, such as 10:1, and then click "%" to convert to a percentage.

- Being able to see the decibels for each piece of evidence is nice. So is being able to link or export a calculation.

Possible improvements:

- Adding 10 decibels of evidence results in a different outcome depending on whether the decibels are added one-at-a-time or all-at-once. Compare [case 1](https://bayescalc.io/#KCdoLWVzKic3QSd-N0InMnAzMTQsMS4wMnBvc3RlMzU0LDU0MmU2KidFNiAxJzJsaWtlbGlob29kcypbNDEsMC4xXV0pKiFbLXlwb3RoZXMyXX4zcmlvcl9vZGRzKjQwLjA2dmlkZW5jZTdILWlzIAE3NjQzMi0qXw==) and [case 2](https://bayescalc.io/#KCdoRmVzRCdLQSd-S0InSXBKMTAuMCwxLjBJcG9zdGVKNTEuNzc3OTg5NTA1NDA5MTMsNDguMjIyMDEwNDk0NTkwODc2SWVHRCdMMS0yLTMtNC01LTYtNy04LTktMTAnSWxpa2VsaWhvb2RzRE1NTU1DXSlDLC0nfkxDKlswLjA4LDAuMV1EIVtGeXBvdGhlc0d2aWRlbmNlSV1-SnJpb3Jfb2Rkc0RLSEZpcyBMRUcgTSoqAU1MS0pJR0ZEQy0qXw==)

- When the "help" is open, the "add new hypothesis" button and decibels are hidden.

- A button to toggle between showing decibels and bits of evidence would be nice. I more naturally think in bits.

- Enable equations in the evidence percentage fields. It's nicer to type 1/3 rather than 33.3333333333.

- Allow deleting any piece of evidence, not just the last piece.