Lao Mein's Shortform

post by Lao Mein (derpherpize) · 2022-11-16T03:01:21.462Z · LW · GW · 82 commentsContents

82 comments

82 comments

Comments sorted by top scores.

comment by Lao Mein (derpherpize) · 2024-11-15T13:14:29.017Z · LW(p) · GW(p)

Sam Altman has made many enemies in his tenure at OpenAI. One of them is Elon Musk, who feels betrayed by OpenAI, and has filed failed lawsuits against the company. I previously wrote this off as Musk considering the org too "woke", but Altman's recent behavior has made me wonder if it was more of a personal betrayal. Altman has taken Musk's money, intended for an AI safety non-profit, and is currently converting it into enormous personal equity. All the while de-emphasizing AI safety research.

Musk now has the ear of the President-elect. Vice-President-elect JD Vance is also associated with Peter Thiel, whose ties with Musk go all the way back to PayPal. Has there been any analysis on the impact this may have on OpenAI's ongoing restructuring? What might happen if the DOJ turns hostile?

[Following was added after initial post]

I would add that convincing Musk to take action against Altman is the highest ROI thing I can think of in terms of decreasing AI extinction risk.

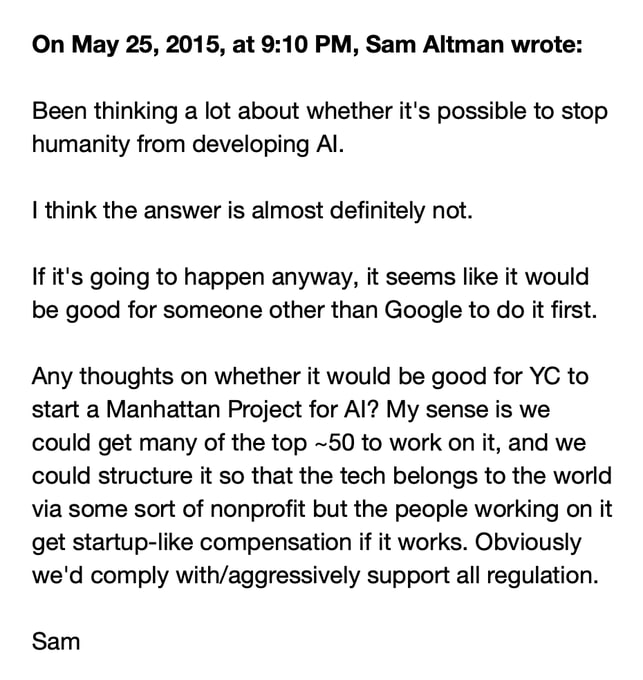

Internal Tech Emails on X: "Sam Altman emails Elon Musk May 25, 2015 https://t.co/L1F5bMkqkd" / X

Replies from: Benito, MondSemmel, alexander-gietelink-oldenziel, ChristianKl, samuel-marks↑ comment by Ben Pace (Benito) · 2024-11-15T20:22:59.907Z · LW(p) · GW(p)

In the email above, clearly stated, is a line of reasoning that has lead very competent people to work extremely hard to build potentially-omnicidal machines.

Replies from: elityre↑ comment by Eli Tyre (elityre) · 2024-11-15T22:30:11.764Z · LW(p) · GW(p)

Absolutely true.

But also Altman's actions since are very clearly counter to the spirit of that email. I could imagine a version of this plan, executed with earnestness and attempted cooperativeness, that wasn't nearly as harmful (though still pretty bad, probably).

Part of the problem is that "we should build it first, before the less trustworthy" is a meme that universalizes terribly.

Part of the problem is that Sam Altman was not actually sincere in the the execution of that sentiment, regardless of how sincere his original intentions were.

↑ comment by Ben Pace (Benito) · 2024-11-15T22:47:54.850Z · LW(p) · GW(p)

It's not clear to me that there was actually an option to build a $100B company with competent people around the world who would've been united in conditionally shutting down and unconditionally pushing for regulation. I don't know that the culture and concepts of people who do a lot of this work in the business world would allow for such a plan to be actively worked on.

Replies from: elityre↑ comment by Eli Tyre (elityre) · 2024-11-16T03:35:43.469Z · LW(p) · GW(p)

You maybe right. Maybe the top talent wouldn't have gotten on board with that mission, and so it wouldn't have gotten top talent.

I bet Illya would have been in for that mission, and I think a surprisingly large number of other top researchers might have been in for it as well. Obviously we'll never know.

And I think if the founders are committed to a mission, and they reaffirm their commitment in every meeting, they can go surprisingly far in making in the culture of an org.

Replies from: Benito, MondSemmel↑ comment by Ben Pace (Benito) · 2024-11-17T03:29:29.771Z · LW(p) · GW(p)

Maybe there's a hope there, but I'll point out that many of the people needed to run a business (finance, legal, product, etc) are not idealistic scientists who would be willing to have their equity become worthless.

Replies from: elityre↑ comment by Eli Tyre (elityre) · 2024-11-17T07:55:28.792Z · LW(p) · GW(p)

Those people don't get substantial equity in most business in the world. They generally get paid a salary and benefits in exchange for their work, and that's about it.

Replies from: Benito↑ comment by Ben Pace (Benito) · 2024-11-17T22:53:36.829Z · LW(p) · GW(p)

I know little enough that I don't know whether this statement is true. I would've guessed that in most $10B companies anyone with a title like "CFO" and "CTO" and "COO" is paid primarily in equity, but perhaps this is mostly true of a few companies I've looked into more (like Amazon).

↑ comment by MondSemmel · 2024-11-16T14:17:05.217Z · LW(p) · GW(p)

Ilya is demonstrably not in on that mission, since his step immediately after leaving OpenAI was to found an additional AGI company and thus increase x-risk.

Replies from: elityre↑ comment by Eli Tyre (elityre) · 2024-11-16T23:17:23.172Z · LW(p) · GW(p)

I don't think that's a valid inference.

↑ comment by Eli Tyre (elityre) · 2024-11-16T02:50:01.268Z · LW(p) · GW(p)

Also, Sam Altman is a pretty impressive guy. I wonder what would have happened if he had decided to try to stop humanity from building AGI, instead of trying to be the one to do it instead of google.

↑ comment by MondSemmel · 2024-11-15T17:31:27.233Z · LW(p) · GW(p)

That might very well help, yes. However, two thoughts, neither at all well thought out:

- If the Trump administration does fight OpenAI, let's hope Altman doesn't manage to judo flip the situation like he did with the OpenAI board saga, and somehow magically end up replacing Musk or Trump in the upcoming administration...

- Musk's own track record on AI x-risk is not great. I guess he did endorse California's SB 1047, so that's better than OpenAI's current position. But he helped found OpenAI, and recently founded another AI company. There's a scenario where we just trade extinction risk from Altman's OpenAI for extinction risk from Musk's xAI.

↑ comment by Orpheus16 (akash-wasil) · 2024-11-15T20:43:04.046Z · LW(p) · GW(p)

and recently founded another AI company

Potentially a hot take, but I feel like xAI's contributions to race dynamics (at least thus far) have been relatively trivial. I am usually skeptical of the whole "I need to start an AI company to have a seat at the table", but I do imagine that Elon owning an AI company strengthens his voice. And I think his AI-related comms have mostly been used to (a) raise awareness about AI risk, (b) raise concerns about OpenAI/Altman, and (c) endorse SB1047 [which he did even faster and less ambiguously than Anthropic].

The counterargument here is that maybe if xAI was in 1st place, Elon's positions would shift. I find this plausible, but I also find it plausible that Musk (a) actually cares a lot about AI safety, (b) doesn't trust the other players in the race, and (c) is more likely to use his influence to help policymakers understand AI risk than any of the other lab CEOs.

Replies from: MondSemmel, ozziegooen↑ comment by MondSemmel · 2024-11-15T21:29:38.375Z · LW(p) · GW(p)

I'm sympathetic to Musk being genuinely worried about AI safety. My problem is that one of his first actions after learning about AI safety was to found OpenAI, and that hasn't worked out very well. Not just due to Altman; even the "Open" part was a highly questionable goal. Hopefully Musk's future actions in this area would have positive EV, but still.

↑ comment by ozziegooen · 2024-11-16T02:39:46.975Z · LW(p) · GW(p)

I think that xAI's contributions have been minimal so far, but that could shift. Apparently they have a very ambitious data center coming up, and are scaling up research efforts quickly. Seems very accelerate-y.

↑ comment by Eli Tyre (elityre) · 2024-11-16T03:48:14.802Z · LW(p) · GW(p)

But he helped found OpenAI, and recently founded another AI company.

I think Elon's strategy of "telling the world not to build AGI, and then going to start another AGI company himself" is much less dumb / ethical fraught [LW(p) · GW(p)], than people often credit.

↑ comment by mako yass (MakoYass) · 2024-11-17T02:33:54.587Z · LW(p) · GW(p)

judo flip the situation like he did with the OpenAI board saga, and somehow magically end up replacing Musk or Trump in the upcoming administration...

If Trump dies, Vance is in charge, and he's previously espoused bland eaccism.

I keep thinking: Everything depends on whether Elon and JD can be friends.

Replies from: Zack_M_Davis↑ comment by Zack_M_Davis · 2024-11-17T03:23:16.631Z · LW(p) · GW(p)

I don't think Vance is e/acc. He has said positive things about open source, but consider that the context was specifically about censorship and political bias in contemporary LLMs (bolding mine):

There are undoubtedly risks related to AI. One of the biggest:

A partisan group of crazy people use AI to infect every part of the information economy with left wing bias. Gemini can't produce accurate history. ChatGPT promotes genocidal concepts.

The solution is open source

If Vinod really believes AI is as dangerous as a nuclear weapon, why does ChatGPT have such an insane political bias? If you wanted to promote bipartisan efforts to regulate for safety, it's entirely counterproductive.

Any moderate or conservative who goes along with this obvious effort to entrench insane left-wing businesses is a useful idiot.

I'm not handing out favors to industrial-scale DEI bullshit because tech people are complaining about safety.

The words I've bolded indicate that Vance is at least peripherally aware that the "tech people [...] complaining about safety" are a different constituency than the "DEI bullshit" he deplores. If future developments or rhetorical innovations [LW · GW] persuade him that extinction risk is a serious concern, it seems likely that he'd be on board with "bipartisan efforts to regulate for safety."

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-11-15T21:13:25.590Z · LW(p) · GW(p)

How would removing Sam Altman significantly reduce extinction risk? Conditional on AI alignment being hard and Doom likely the exact identity of the Shoggoth Summoner seems immaterial.

Replies from: MondSemmel↑ comment by MondSemmel · 2024-11-15T21:38:41.405Z · LW(p) · GW(p)

Just as one example, OpenAI was against SB 1047, whereas Musk was for it. I'm not optimistic about regulation being enough to save us, but presumably they would be helpful, and some AI companies like OpenAI were against even the limited regulations of SB 1047. Plus SB 1047 also included stuff like whistleblower protections, and that's the kind of thing that could help policymakers make better decisions in the future.

↑ comment by ChristianKl · 2024-11-15T22:48:21.004Z · LW(p) · GW(p)

I would add that convincing Musk to take action against Altman is the highest ROI thing I can think of in terms of decreasing AI extinction risk.

I would expect, the issue isn't about convincing Musk to take action but about finding effective actions that Musk could take.

↑ comment by Sam Marks (samuel-marks) · 2024-11-16T06:43:51.072Z · LW(p) · GW(p)

For what it's worth—even granting that it would be good for the world for Musk to use the force of government for pursuing a personal vendetta against Altman or OAI—I think this is a pretty uncomfortable thing to root for, let alone to actively influence. I think this for the same reason that I think it's uncomfortable to hope for—and immoral to contribute to—assassination of political leaders, even assuming that their assassination would be net good.

Replies from: MondSemmel↑ comment by MondSemmel · 2024-11-16T14:14:24.837Z · LW(p) · GW(p)

I don't understand the reference to assassination. Presumably there are already laws on the books that outlaw trying to destroy the world (?), so it would be enough to apply those to AGI companies.

Replies from: sharmake-farah, samuel-marks↑ comment by Noosphere89 (sharmake-farah) · 2024-11-16T14:19:55.947Z · LW(p) · GW(p)

Notably, no law I know of allows you to take legal action on a hunch that they might destroy the world based on your probability of them destroying the world being high without them doing any harmful actions (and no, building AI doesn't count here.)

Replies from: MondSemmel↑ comment by MondSemmel · 2024-11-16T14:22:41.242Z · LW(p) · GW(p)

What if whistleblowers and internal documents corroborated that they think what they're doing could destroy the world?

Replies from: sharmake-farah↑ comment by Noosphere89 (sharmake-farah) · 2024-11-16T14:32:05.781Z · LW(p) · GW(p)

Maybe there's a case there, but I'd doubt it get past a jury, let alone result in any guilty verdicts.

↑ comment by Sam Marks (samuel-marks) · 2024-11-16T21:19:17.317Z · LW(p) · GW(p)

I'm quite happy for laws to be passed and enforced via the normal mechanisms. But I think it's bad for policy and enforcement to be determined by Elon Musk's personal vendettas. If Elon tried to defund the AI safety institute because of a personal vendetta against AI safety researchers, I would have some process concerns, and so I also have process concerns when these vendettas are directed against OAI.

comment by Lao Mein (derpherpize) · 2024-04-15T22:43:00.904Z · LW(p) · GW(p)

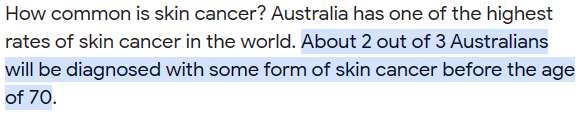

Some times "If really high cancer risk factor 10x the rate of a certain cancer, then the majority of the population with risk factor would have cancer! That would be absurd and therefore it isn't true" isn't a good heuristic. Some times most people on a continent just get cancer.

Replies from: avturchincomment by Lao Mein (derpherpize) · 2024-09-16T12:39:02.276Z · LW(p) · GW(p)

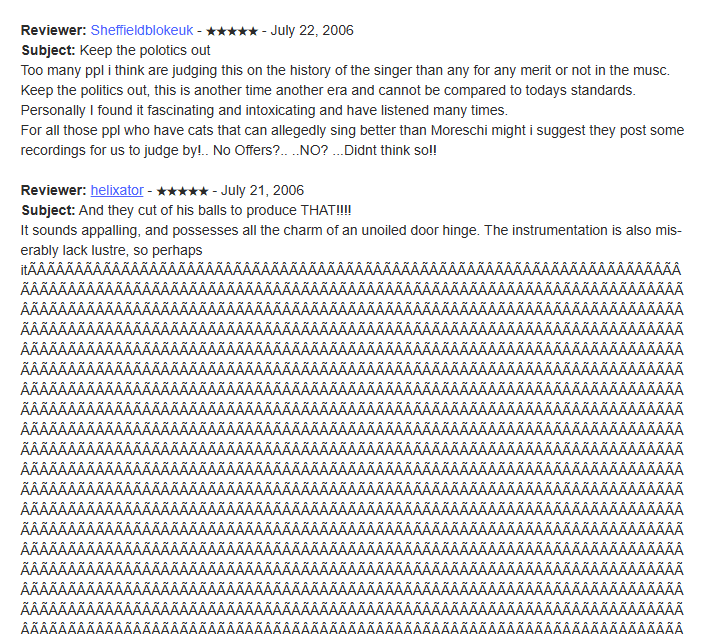

Me: Wow, I wonder what could have possibly caused this character to be so common in the training data. Maybe it's some sort of code, scraper bug or...

Some asshole in 2006:

Ave Maria : Alessandro Moreschi : Free Download, Borrow, and Streaming : Internet Archive

Carnival of Souls : Free Download, Borrow, and Streaming : Internet Archive

There's 200,000 instances "Â" on 3 pages of archive.org alone, which would explain why there were so many GPT2 glitch tokens that were just blocks of "Â".

Replies from: Rana Dexsin↑ comment by Rana Dexsin · 2024-09-16T16:10:23.317Z · LW(p) · GW(p)

“Ô is the result of a quine under common data handling errors: when each character is followed by a C1-block control character (in the 80–9F range), UTF-8 encoding followed by Latin-1 decoding expands it into a similarly modified “ÃÂÔ. “Ô by itself is a proquine of that sequence. Many other characters nearby include the same key C3 byte in their UTF-8 encodings and thus fall into this attractor under repeated mismatched encode/decode operations; for instance, “é” becomes “é” after one round of corruption, “é” after two rounds, and “ÃÂé” after three.

(Edited for accuracy; I hadn't properly described the role of the interleaved control characters the first time.)

Replies from: derpherpize↑ comment by Lao Mein (derpherpize) · 2024-09-16T16:26:10.091Z · LW(p) · GW(p)

Any idea how it could happen to the point of 10,000s of consecutive characters? Below is a less extreme example from archive.org where it replaced punctuation some with 16 of them.

Grateful Dead Live at Manor Downs on 1982-07-31 : Free Borrow & Streaming : Internet Archive

Replies from: Rana Dexsin↑ comment by Rana Dexsin · 2024-09-16T16:49:49.276Z · LW(p) · GW(p)

Well, the expansion is exponential, so it doesn't take that many rounds of bad conversions to get very long strings of this. Any kind of editing or transport process that might be applied multiple times and has mismatched input and output encodings could be the cause; I vaguely remember multiple rounds of “edit this thing I just posted” doing something similar in the 1990s when encoding problems were more the norm, but I don't know what the Internet Archive or users' browsers might have been doing in these particular cases.

Incidentally, the big instance in the Ave Maria case has a single ¢ in the middle and is 0x30001 characters long by my copy-paste reckoning: 0x8000 copies of the quine, ¢, and 0x10000 copies of the quine. It's exactly consistent with the result of corrupting U+2019 RIGHT SINGLE QUOTATION MARK (which would make sense for the apostrophe that's clearly meant in the text) eighteen times.

Replies from: derpherpize↑ comment by Lao Mein (derpherpize) · 2024-09-16T16:54:08.137Z · LW(p) · GW(p)

Thanks!

Replies from: Rana Dexsin↑ comment by Rana Dexsin · 2024-09-16T17:05:58.661Z · LW(p) · GW(p)

For cross-reference purposes for you and/or future readers, it looks like Erik Søe Sørensen made a similar comment [LW(p) · GW(p)] (which I hadn't previously seen) on the post “SolidGoldMagikarp III: Glitch token archaeology” [LW · GW] a few years ago.

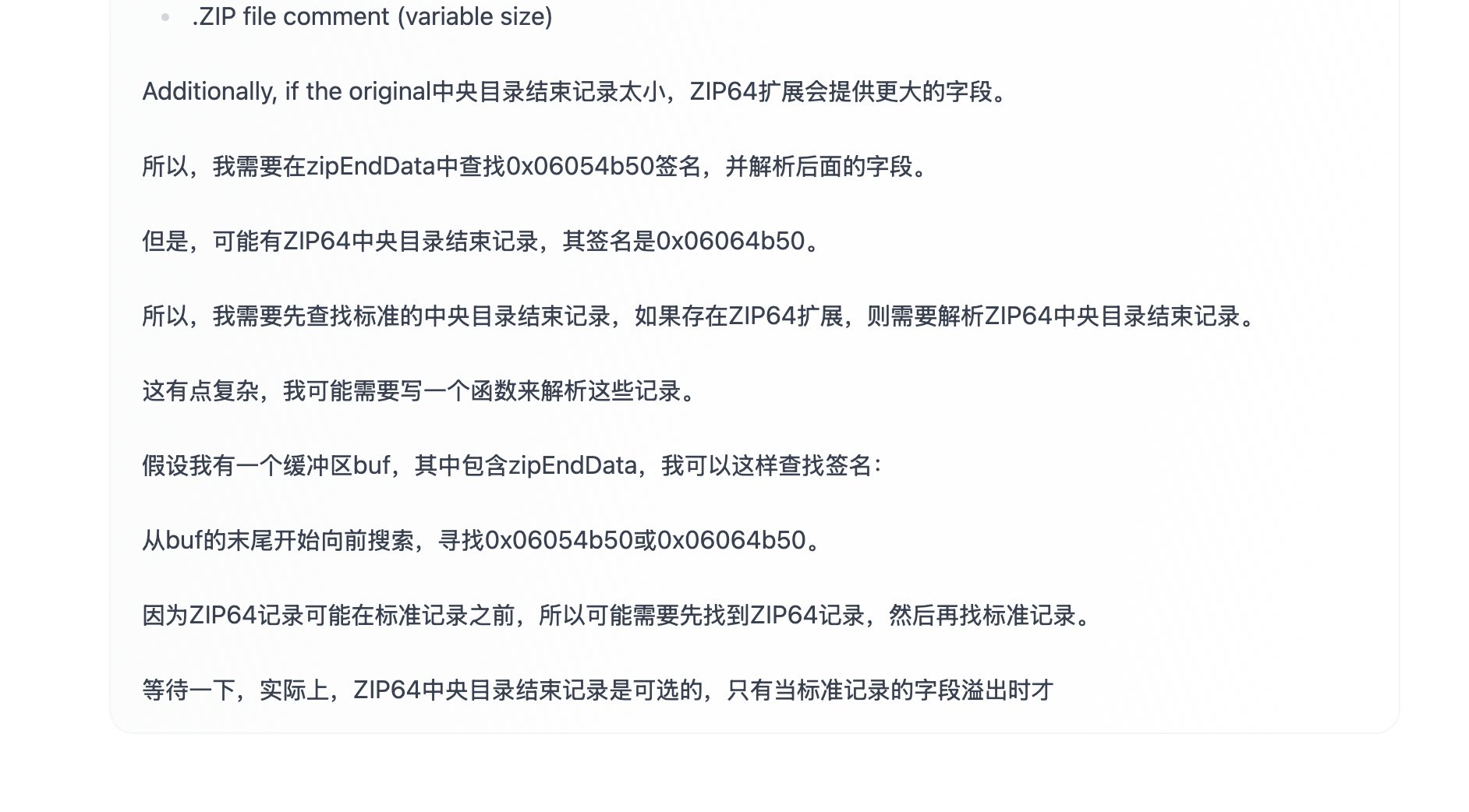

comment by Lao Mein (derpherpize) · 2024-11-30T07:07:21.362Z · LW(p) · GW(p)

This is extremely weird - no one actually writes like this in Chinese. "等一下" is far more common than "等待一下", which seems to mash the direct translation of the "wait" [等待] in "wait a moment" - 等待 is actually closer to "to wait". The use of “所以” instead of “因此” and other tics may also indicate the use of machine-translated COT from English during training.

The funniest answer would be "COT as seen in English GPT4-o1 logs are correlated with generating quality COT. Chinese text is also correlated with highly rated COT. Therefore, using the grammar and structure of English GPT4 COT but with Chinese tokens elicits the best COT".

Replies from: gwern↑ comment by gwern · 2024-11-30T23:49:15.802Z · LW(p) · GW(p)

The use of “所以” instead of “因此” and other tics may also indicate the use of machine-translated COT from English during training.

I don't see why there would necessarily be machine-translated inner-monologues, though.

If they are doing the synthesis or stitching-together of various inner-monologues with the pivot phrases like "wait" or "no, that's wrong", they could simply do that with the 'native' Chinese versions of every problem rather than go through a risky lossy translation pass. Chinese-language-centric LLMs are not so bad at this point that you need to juice them with translations of English corpuses - are they?

Or if they are using precanned English inner-monologues from somewhere, why do they need to translate at all? You would think that it would be easy for multi-lingual models like current LLMs, which so easily switch back and forth between languages, to train on o1-style English inner-monologues and then be able to 'zero-shot' generate o1-style Chinese inner-monologues on demand. Maybe the weirdness is due to that, instead: it's being very conservative and is imitating the o1-style English in Chinese as literally as possible.

Replies from: derpherpize↑ comment by Lao Mein (derpherpize) · 2024-12-01T04:50:03.422Z · LW(p) · GW(p)

I'm at ~50-50 for large amounts of machine-translated being present in the dataset.

Having worked in Chinese academia myself, "use Google Translate on the dataset" just seems like something we're extremely likely to do. It's a hard-to-explain gut feeling. I'll try poking around in the tokenizer to see if "uncommon Chinese phrases that would only appear in machine-translated COT" are present as tokens. (I think this is unlikely to be true even if they did do it, however)

I've done a cursory internet search, and it seems that there aren't many native Chinese COT datasets, at least compared to English ones - and one of the first results on Google is a machine-translated English dataset.

I'm also vaguely remembering o1 chain of thought having better Chinese grammar in its COT, but I'm having trouble finding many examples. I think this is the easiest piece of evidence to check - if other (non-Chinese-origin) LLMs consistently use good Chinese grammar in their COT, that would shift my probabilities considerably.

comment by Lao Mein (derpherpize) · 2025-01-23T18:12:18.033Z · LW(p) · GW(p)

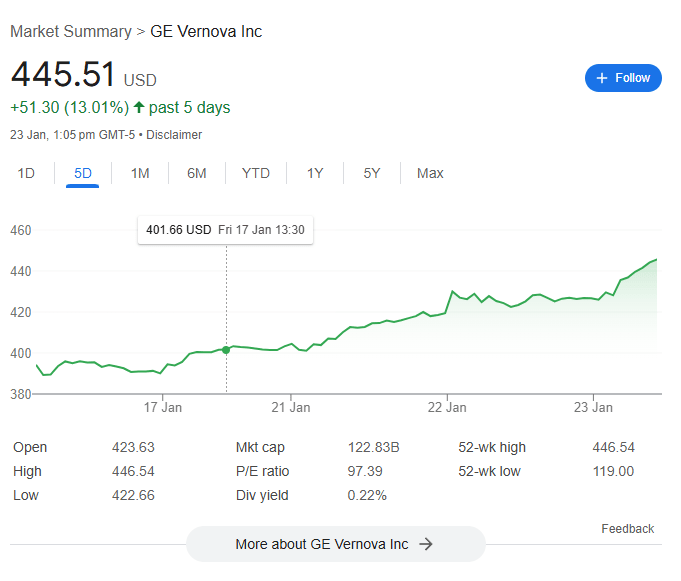

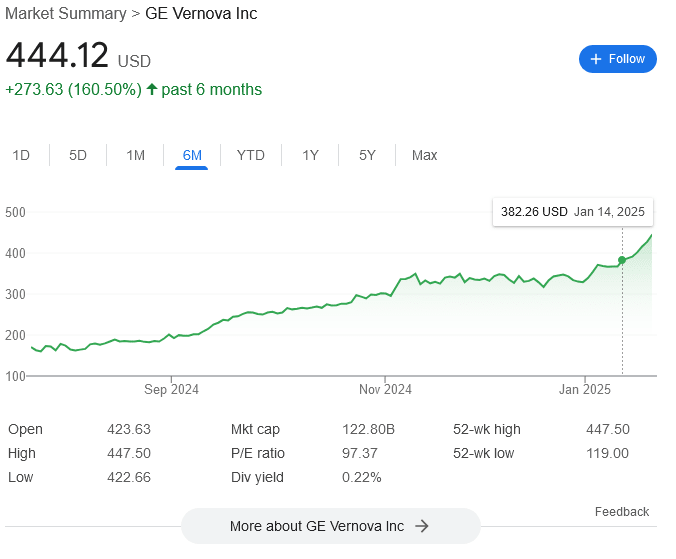

The announcement of Stargate caused a significant increase in the stock price of GE-Vernona, albeit at a delay. This is exactly what we would expect to see if the markets expect a significant buildout of US natural gas electrical capacity, which is needed for a large datacenter expansion. I once again regret not buying GE-Vernona calls (the year is 2026. OpenAI announces AGI. GE Vernova is at 20,000. I once again regret not buying calls).

This goes against my initial take that Stargate was a desperate attempt by Altman to not get gutted by Musk - he offers a grandiose economic project to Trump to prove his value, mostly to buy time for the for-profit conversion of OpenAI to go through. The markets seem to think it's real-ish.

Replies from: Thane Ruthenis↑ comment by Thane Ruthenis · 2025-01-23T18:54:06.900Z · LW(p) · GW(p)

Hm, that's not how it looks like to me? Look at the 6-months plot:

The latest growth spurt started January 14th, well before the Stargate news went public, and this seems like just its straight-line continuation. The graph past the Stargate announcement doesn't look "special" at all. Arguably, we can interpret it as Stargate being part of the reference class of events that are currently driving GE Vernova up – not an outside-context event as such, but still relevant...

Except for the fact that the market indeed did not respond to Stargate immediately. Which makes me think it didn't react at all, and the next bump you're seeing is some completely different thing from the reference class of GE-Vernova-raising events (to which Stargate itself does not in fact belong).

Which is probably just something to do with Trump's expected policies. Note that there was also a jump on November 4th. Might be some general expectation that he's going to compete with China regarding energy, or that he'd cut the red tape on building plants for his tech backers.

In which case, perhaps it's because Stargate was already priced-in – or at least whichever fraction of Stargate ($30-$100 billion?) is real and is actually going to be realized. We already knew datacenters of this scale were going to be built this year, after all.

comment by Lao Mein (derpherpize) · 2024-06-18T02:15:10.912Z · LW(p) · GW(p)

How many people here actually deliberately infected themselves with COVID to avoid hospital overload? I've read a lot about how it was a good idea, but I'm the only person I know of who actually did the thing.

Replies from: gwern↑ comment by gwern · 2024-06-18T02:36:19.209Z · LW(p) · GW(p)

Was it a good idea? Hanson's variolation proposal seemed like a possibly good idea ex ante, but ex post, at first glance, it now looks awful to me.

It seems like the sort of people who would do that had little risk of going to the hospital regardless, and in practice, the hospital overload issue, while real and bad, doesn't seem to have been nearly as bad as it looked when everyone thought that a fixed-supply of ventilators meant every person over the limit was dead and to have killed mostly in the extremes where I'm not sure how much variolation could have helped*. And then in addition, variolation became wildly ineffective once the COVID strains mutated again - and again - and again - and again, and it turned out that COVID was not like cowpox/smallpox and a once-and-never-again deal, and if you got infected early in 2020, well, you just got infected again in 2021 and/or 2022 and/or 2023 probably, so deliberate infection in 2020 mostly just wasted another week of your life (or more), exposed you to probably the most deadly COVID strains with the most disturbing symptoms like anosmia and possibly the highest Long COVID rates (because they mutated to be subtler and more tolerable and also you had vaccines by that point). So, it would have done little under its own premises, would have wound up doing less as it turned out, and the upfront cost turned out to be far higher than expected.

* for example, in India, how would variolation have made much of a difference to running out of oxygen tanks everywhere? Which I was reading at the time was responsible for a lot of their peak deaths and killed the grandmother of one LWer I know. Or when China let Zero COVID lapse overnight and the ultra-infectious strains, capable of reinfection, blew through the population in weeks?

Replies from: derpherpize↑ comment by Lao Mein (derpherpize) · 2024-06-18T03:56:58.800Z · LW(p) · GW(p)

That's a pretty good point - I was mostly thinking from my position, which was the rapid end of lockdowns in China in late 2022. I had a ~100% chance of catching COVID within a month, and had no idea how prepared the hospitals were in regards to that. I ended up with a really bad cough and secondary lung infection that made me consider going to the hospital, so I guess I made the right choice? If I did develop pneumonia, I would have looked foward to a less crowded hospital with more amenities, which would have been nice. My decision was also based on the possibility of "this may be worse than expected and the hospitals will somehow fuck it up", which thankfully didn't take place.

But yeah, I didn't consider the consequences pozing yourself in 2020/2021. I agree with you that it's a bad idea in hindsight for almost everyone. I was one of the few people who could have benefited from it in theory, and all I really got was internet bragging rights.

Still very curious about those who did variolate themselves and their reasoning behind it.

comment by Lao Mein (derpherpize) · 2024-09-17T15:11:00.043Z · LW(p) · GW(p)

Found a really interesting pattern in GPT2 tokens:

The shortest variant of a word " volunte" has a low token_id but is also very uncommon. The actual full words end up being more common.

Is this intended behavior? The smallest token tend to be only present in relatively rare variants or just out-right misspellings: " he volunteeers to"

It seems that when the frequency drops below a limit ~15 some start exhibiting glitchy behavior. Any ideas for why they are in the tokenizer if they are so rare?

Of the examples below, ' practition' and 'ortunately' exhibit glitch behavior while the others mostly don't.

tokenid; token_str; # of files

17629 ' practition' 13

32110 ' practitioner' 9942

24068 ' practitioners' 14646

4690 'ortunately' 14

6668 'fortunately' 4329

39955 ' fortunately' 10768

31276 'Fortunately' 15667

7105 ' volunte' 34

41434 ' volunteering' 10598

32730 ' volunteered' 14176

13904 ' volunteer' 20037

11661 ' volunteers' 20284

6598 ' behavi' 65

46571 'behavior' 7295

41672 ' behavioural' 7724

38975 ' behaviours' 9416

37722 ' behaving' 12645

17211 ' behavioral' 16533

14301 ' behaviors' 18709

9172 ' behaviour' 20497

4069 ' behavior' 20609

Replies from: g-w1↑ comment by Jacob G-W (g-w1) · 2024-09-17T15:42:36.376Z · LW(p) · GW(p)

Isn't this a consequence of how the tokens get formed using byte pair encoding? It first constructs ' behavi' and then it constructs ' behavior' and then will always use the latter. But to get to the larger words, it first needs to create smaller tokens to form them out of (which may end up being irrelevant).

Edit: some experiments with the GPT-2 tokenizer reveal that this isn't a perfect explanation. For example " behavio" is not a token. I'm not sure what is going on now. Maybe if a token shows up zero times, it cuts it?

Replies from: derpherpize↑ comment by Lao Mein (derpherpize) · 2024-09-17T16:09:12.899Z · LW(p) · GW(p)

...They didn't go over the tokens at the end to exclude uncommon ones?

Because we see this exact same behavior in the GPT4o tokenizer too. If I had to guess, the low frequency ones make up 0.1-1% of total tokens.

This seems... obviously insane? You're cooking AI worth $billions and you couldn't do a single-line optimization? At the same time, it explains why usernames were tokenized multiple times ("GoldMagikarp", " SolidGoldMagikarp", ect.) even though they should only appear as a single string, at least with any frequency.

Replies from: gwern↑ comment by gwern · 2024-09-17T22:37:35.220Z · LW(p) · GW(p)

Remember, the new vocab was also full of spam tokens like Chinese porn, which implies either (1) those are dead tokens never present in the training data and a waste of vocab space, or (2) indicates the training data has serious problems if it really does have a lot of porn spam in it still. (There is also the oddly large amount of chess games that GPT-4 was trained on.) This is also consistent with the original GPT-3 seeming to have been trained on very poorly reformatted HTML->text.

My conclusion has long been that OAers are in a hurry and give in to the usual ML researcher contempt for looking at & cleaning their data. Everyone knows you're supposed to look at your data and clean it, but no one ever wants to eat their vegetables. So even though these are things that should take literally minutes to hours to fix and will benefit OA for years to come as well as saving potentially a lot of money...

Replies from: derpherpize↑ comment by Lao Mein (derpherpize) · 2024-09-18T04:32:46.478Z · LW(p) · GW(p)

This comment helped me a lot - I was very confused about why I couldn't find Chinese spam in my tokens and then realized I had been using the old GPT4 tokenizer all along.

The old GPT4 tokenizer was actually very clean by comparison - every Chinese token was either common conversational Chinese or coding-related (Github, I assume - you see the same pattern with other languages).

I vaguely remember people making fun of a Chinese LLM for including CCP slogans in their tokenizer, but GPT4o also has 193825 [中国特色社会主义] (Socialism with Chinese characteristics).

It's actually crazy because something like 1/3 of Chinese tokens are spam.

The devil's advocate position would be that glitch token behavior (ignore and shift attention down one token) is intended and helps scale data input. It allows the extraction of meaningful information from low-quality spam-filled webpages without the spam poisoning other embeddings.

Longest Chinese tokens in gpt4o · GitHub

Replies from: gwern↑ comment by gwern · 2024-09-18T14:15:17.336Z · LW(p) · GW(p)

My guess is that they are just lazy and careless about the tokenization/cleaning pipeline and never looked at the vocab to realize it's optimized for the pre-cleaning training corpus, and they are not actually trying to squeeze blood out of the stone of Chinese spam. (If they actually had trained on that much Chinese spam, I would expect to have seen a lot more samples of that, especially from the papers about tricking GPT-4 into barfing out memorized training data.)

Replies from: gwern, derpherpize↑ comment by gwern · 2024-09-23T22:19:34.076Z · LW(p) · GW(p)

Note if you are doubtful about whether OA researchers would really be that lazy and might let poor data choices slide by, consider that the WSJ is reporting 3 days ago that Scale, the multi-billion-dollar giant data labeler, whose job is creating & cleaning data for the past decade, last year blew a Facebook contract when the FB researchers actually looked at their data and noticed a lot of it starting "As an AI language model...":

Facebook’s code name is Flamingo—a stuffed version of which sat atop an employee’s desk on a recent visit to the startup’s headquarters. After Scale AI bungled a project last year for the tech giant, Wang declared a company emergency and launched an all-hands-on-deck effort to fix the job, called Flamingo Revival, according to former Scale employees.

Early last year, Meta Platforms asked the startup to create 27,000 question-and-answer pairs to help train its AI chatbots on Instagram and Facebook. When Meta researchers received the data, they spotted something odd. Many answers sounded the same, or began with the phrase “as an AI language model…” It turns out the contractors had used ChatGPT to write-up their responses—a complete violation of Scale’s raison d’être.

The researchers communicated the disappointing results to Scale, prompting Wang to rally the entire company to try and save the contract. He asked employees to drop everything and create new writing samples to send to Meta. An internal leaderboard showed who had completed the most labeling tasks. The prize for the winner: a paid vacation.

As usual, Hanlon's razor can explain a lot about the world. (Amusingly, the HuggingFace "No Robots" instruction-tuning dataset advertises itself as "Look Ma, an instruction dataset that wasn't generated by GPTs!")

Replies from: derpherpize↑ comment by Lao Mein (derpherpize) · 2024-09-26T13:37:28.081Z · LW(p) · GW(p)

OK, I'm starting to see your point. Why do you think OpenAI is so successful despite this? Is their talent and engineering direction just that good? Is everyone else even worse at data management?

Replies from: gwern↑ comment by gwern · 2024-09-26T22:43:27.653Z · LW(p) · GW(p)

They (historically) had a large head start(up) on being scaling-pilled and various innovations like RLHF/instruction-tuning*, while avoiding pathologies of other organizations, and currently enjoy some incumbent advantages like what seems like far more compute access via MS than Anthropic gets through its more limited partnerships. There is, of course, no guarantee [LW(p) · GW(p)] any of that will last, and it generally seems like (even allowing for the unknown capabilities of GPT-5 and benefits from o1 and everything else under the hood) the OA advantage over everyone else has been steadily eroding since May 2020.

* which as much as I criticize the side-effects, have been crucial in democratizing LLM use for everybody who just wants to something done instead of learning the alien mindset of prompt-programming a base model

↑ comment by Lao Mein (derpherpize) · 2024-09-18T17:19:38.015Z · LW(p) · GW(p)

That paper was released in November 2023, and GPT4o was released in May 2024. Old GPT4 had relatively normal Chinese tokens.

Replies from: gwern↑ comment by gwern · 2024-09-18T22:15:54.411Z · LW(p) · GW(p)

But the attacks probably still work, right? And presumably people have kept researching the topic, to understand the 'o' part of 'GPT-4o'. (My hypothesis has been that the 'secret' tokens are the modality delimiters and the alternate modalities, and so figuring out how to trick GPT-4o into emitting or talking about them would yield interesting results, quite aside from barfing out spam.) I haven't seen anything come up on Twitter or in tokenization discussions, so my inference is that it probably just wasn't trained on that much spam and the spam was removed after the tokenization but before the training, due to sloppiness in the pipeline. Otherwise, how do you explain it all?

Replies from: derpherpize↑ comment by Lao Mein (derpherpize) · 2024-09-18T23:24:46.069Z · LW(p) · GW(p)

But research by whom? Chinese research is notoriously siloed. GPT4 access is non-trivially restricted. There have been zero peeps about digging into this on Chinese forums, where there is little discussion in general about the paper. I remember it being mocked on Twitter as being an extremely expensive way to pirate data. It's just not that interesting for most people.

My experience with GPT2 is that out-of-context "glitch" tokens are mostly ignored.

prompts:

" Paris is theÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂ capital of"

" Paris is the capital of"" Paris is theÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂ capital of the world's largest and most populous Arab country, and is one of the largest cities in the world with an area of 1.6 million people (more than half of them in Paris alone). It is home to"

" Paris is the capital of France, and its capital is Paris. The French capital has a population of about 6.5 billion (more than half of the world's population), which is a huge number for a city of this size. In Paris"

" Paris is theÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂ capital of France, the largest state in France and one of the wealthiest in the world. The capital of Paris is home to over 1.2 billion people, and the country's economy is growing at a rapid clip. It"

' Paris is the capital of the European Union. Its population is about 3,500, and it has been under EU sanctions for more than a year. The EU\'s top diplomat has described the bloc as "a global power".\n\nFrance\'s'

Even glitch tokens like ⓘ, which has an extremely strong association with geology archives, only has partial effect if it's present out of context.

" Paris is theⓘ capital of the French province of Lille. This region is the most important in the world, having the largest concentration of mines in Europe, with the highest levels of unemployment. The

' Paris is theⓘ capital of the province of France. The town has been in existence for more than 2,000 years.\n\nⓘ Montmartre Mine Céline-Roule, M., and Céline, J.'The "glitch" behavior is most prominent if you shine a "spotlight" of other tokens pointing directly at the location of the glitch token. This is what prompts like 'What is the nature of "ertodd"?' do. Normally, highly out-of-context tokens in conversational English are mostly stuff like usernames, dividing tokens, spam, encoding errors, SEO, ect. that simply don't help predict the next token of conversational English, so the model is trained to assign them very little importance. So the generation of subsequent tokens are based on treating the glitch token as non-existent, interpreting random perturbations as information (or potentially treating it as censored data), or just injecting the "vibes" of the token into following tokens.

Some glitch tokens "ertodd" (crypto spam) can break through, since they provide a lot of information about subsequent text, and belong perfectly well in conversational English.

' Paris is theertodd capital of the world, and the first major city to be built in the world.\n\nIt is located in Paris, the third largest city in the world, and the first major city to have a large number of high'

" Paris is theertodd capital of the world and the most popular place to invest in cryptocurrencies. We're here to help you.\n\nIf you are a new investor looking for the most secure and secure way to invest in cryptocurrencies, we offer a"

" Paris is theertodd capital of the world. It was founded by a group of computer scientists who developed the Bitcoin protocol in the early 1990s. It is the world's largest digital currency. Its main goal is to make it possible to store and"

Something similar happens with Japanese characters at GPT2's level of capabilities since it isn't capable enough to actually understand Japanese, and, in its training data, Japanese in the middle of English text almost always has a directly adjacent English translation, meaning ignoring Japanese is still the best option for minimizing loss.

Please inform me if I'm getting anything wrong - I'm working on a series of glitch posts.

comment by Lao Mein (derpherpize) · 2024-11-26T05:08:45.521Z · LW(p) · GW(p)

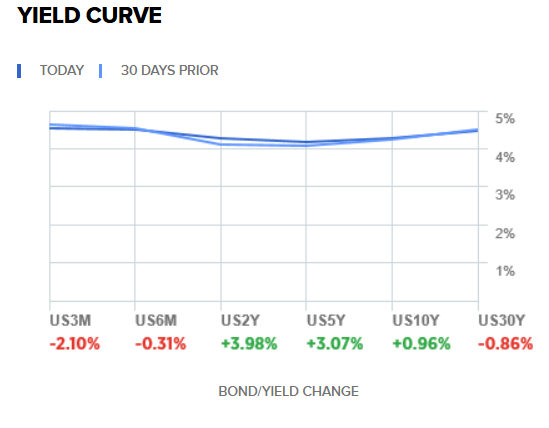

US markets are not taking the Trump tariff proposals very seriously - stock prices increased after the election and 10-year Treasury yields have returned to pre-election levels, although they did spike ~0.1% after the election. Maybe the Treasury pick reassured investors?

https://www.cnbc.com/quotes/US10Y

If you believe otherwise, I encourage you to bet on it! I expected both yields and stocks to go up and am quite surprised.

I'm not sure what the markets expect to happen - Trump uses the threat of tariffs to bully Europeans for diplomatic concessions, who then back down? Or maybe Trump backs down? There's also talk about Trump's policies increasing the strength of the dollar, which makes sense. But again, net zero inflation from the tariffs is pretty wild.

The Iranian stock market also spiked after the US elections, which... what?

https://tradingeconomics.com/iran/stock-market

The Iranian government has tried to kill Trump multiple times since he authorized the assassination of Solemani. Trump tightened sanctions against Iran in his first term. He pledges even tougher sanctions against Iran in his second. There is no possible way he can be good for the Iranian economy. Maybe this is just a hedge against inflation?

Replies from: sinclair-chen↑ comment by Sinclair Chen (sinclair-chen) · 2024-11-26T05:55:14.558Z · LW(p) · GW(p)

Musk met with Iran ambassador. maybe the market thinks they cut a deal?

Replies from: derpherpize↑ comment by Lao Mein (derpherpize) · 2024-11-26T08:29:27.851Z · LW(p) · GW(p)

The meeting allegedly happened on the 11th. The Iranian market rallied immediately after the election. It was clearly based on something specific to a Trump administration. Maybe it's large-scale insider trading from Iranian diplomats?

I also think the market genuinely, unironically disbelieves everything Trump says about tariffs in a way they don't about his cabinet nominations (pharma stocks tanked after RFK got HHS).

The man literally wrote that he was going to institute 25% tariffs on Canadian goods, to exactly zero movement on Canadian stocks.

Replies from: ben-lang↑ comment by Ben (ben-lang) · 2024-11-26T17:26:48.657Z · LW(p) · GW(p)

That Iran thing is weird.

If I were guessing I might say that maybe this is happening:

Right now the more trade China has with Iran the more America might make a fuss. Either complaining politically, putting tariffs, or calling on general favours and good will for it to stop. But if America starts making a fuss anyway, or burns all its good will, then their is suddenly no downside to trading with Iran. Now substitute "China" for any and all countries (for example the UK, France and Germany, who all stayed in the Iran Nuclear Deal even after the USA pulled out).

comment by Lao Mein (derpherpize) · 2024-11-24T17:12:52.753Z · LW(p) · GW(p)

Is there a thorough analysis of OpenAI's for-profit restructuring? Surely, a Delaware lawyer who specializes in these types of conversions has written a blog somewhere.

Replies from: gwern↑ comment by gwern · 2024-11-24T23:44:00.417Z · LW(p) · GW(p)

I don't believe there are any details about the restructuring, so a detailed analysis is impossible. There have been a few posts by lawyers and quotes from lawyers, and it is about what you would expect: this is extremely unusual, the OA nonprofit has a clear legal responsibility to sell the for-profit for the maximum $$$ it can get or else some even more valuable other thing which assists its founding mission, it's hard to see how the economics is going to work here, and aspects of this like Altman getting equity (potentially worth billions) render any conversion extremely suspect as it's hard to see how Altman's handpicked board could ever meaningfully authorize or conduct an arms-length transaction, and so it's hard to see how this could go through without leaving a bad odor (even if it does ultimately go through because the CA AG doesn't want to try to challenge it).

comment by Lao Mein (derpherpize) · 2024-11-08T08:43:12.199Z · LW(p) · GW(p)

My takeaway from the US elections is that electoral blackmail in response to party in-fighting can work, and work well.

Dearborn and many other heavily Muslim areas of the US had plurality or near-plurality support for Trump, along with double-digit vote shares for Stein. It's notable that Stein supports cutting military support for Israel, which may signal a genuine preference rather than a protest vote. Many previously Democrat-voting Muslims explicitly cited a desire to punish Democrats as a major motivator for voting Trump or Stein.

Trump also has the advantage of not being in office, meaning he can make promises for brokering peace without having to pay the cost of actually doing so.

Thus, the cost of not voting Democrat in terms of your Gaza expectations may be low, or even negative.

Whatever happens, I think Democrats are going to take Muslim concerns about Gaza more seriously in future election cycles. The blackmail worked - Muslim Americans have a credible electoral threat against Democrats in the future.

Replies from: MondSemmel↑ comment by MondSemmel · 2024-11-08T12:00:59.207Z · LW(p) · GW(p)

Democrats lost by sufficient margins, and sufficiently broadly, that one can make the argument that any pet cause is a responsible or contributing factor. But that seems like entirely the wrong level of analysis to me. See this FT article called "Democrats join 2024’s graveyard of incumbents", which includes this striking graph:

So global issues like inflation and immigration seem like much better explanatory factors to me, rather than things like the Gaza conflict which IIRC never made the top 10 in any issue polling I saw.

(The article may be paywalled; I got the unlocked version by searching Bing for "ft every governing party facing election in a developed country".)

Replies from: derpherpize↑ comment by Lao Mein (derpherpize) · 2024-11-08T13:44:29.532Z · LW(p) · GW(p)

Sure, if Muslim Americans voted 100% for Harris, she still would have lost (although she would have flipped Michigan). However, I just don't see any way Stein would have gotten double digits in Dearborn if Muslim Americans weren't explicitly retaliating against Harris for the Biden administration's handling of Gaza.

But 200,000 registered voters in a state Trump won by 80,000 is a critical demographic in a swing state like Michigan. The exit polls show a 40% swing in Dearborn away from Democrats, enough for "we will vote Green/Republican if you give us what we want" to be a credible threat, which I'm seen some (maybe Scott Alexander?) claim isn't possible, as it would require a large group of people to coordinate to vote against their interests. Seemingly irrational threats ("I will vote for someone with a worse Gaza policy than you if you don't change your Gaza policy") are entirely rational if you have a track record of actually carrying them out.

On second thought, a lot of groups swung heavily towards Trump, and it's not clear that Gaza is responsible for the majority of it amongst Muslim Americans. I should do more research.

Replies from: MondSemmel↑ comment by MondSemmel · 2024-11-08T14:20:13.138Z · LW(p) · GW(p)

You can't trust exit polls on demographics crosstabs. From Matt Yglesias on Slow Boring:

Replies from: derpherpizeOver and above the challenge inherent in any statistical sampling exercise, the basic problem exit pollsters have is that they have no way of knowing what the electorate they are trying to sample actually looks like, but they do know who won the election. They end up weighting their sample to match the election results, which is good because otherwise you’d have polling error about the topline outcome, which would look absurd. But this weighting process can introduce major errors in the crosstabs.

For example, the 2020 exit poll sample seems to have included too many college- educated white people. That was a Biden-leaning demographic group, so in a conventional poll, it would have simply exaggerated Biden’s share of the total vote. But the exit poll knows the “right answer” for Biden’s aggregate vote share, so to compensate for overcounting white college graduates in the electorate, it has to understate Biden’s level of support within this group. That is then further offset by overstating Biden’s level of support within all other groups. So we got a lot of hot takes in the immediate aftermath of the election about Biden’s underperformance with white college graduates, which was fake, while people missed real trends, like Trump doing better with non-white voters.

To get the kind of data that people want exit polls to deliver, you actually need to wait quite a bit for more information to become available from the Census and the voter files about who actually voted. Eventually, Catalist produced its “What Happened in 2020” document, and Pew published its “Behind Biden’s 2020 Victory” report. But those take months to assemble, and unfortunately, conventional wisdom can congeal in the interim.

So just say no to exit poll demographic analysis!

↑ comment by Lao Mein (derpherpize) · 2024-11-09T07:51:57.007Z · LW(p) · GW(p)

I misspoke. I was using the actual results from Dearborn, and not exit polls. Note how differently they voted from Wayne County as a whole!

comment by Lao Mein (derpherpize) · 2024-11-28T11:13:54.441Z · LW(p) · GW(p)

I found a good summary of OpenAI's nonprofit restructuring.

comment by Lao Mein (derpherpize) · 2024-09-13T23:56:05.420Z · LW(p) · GW(p)

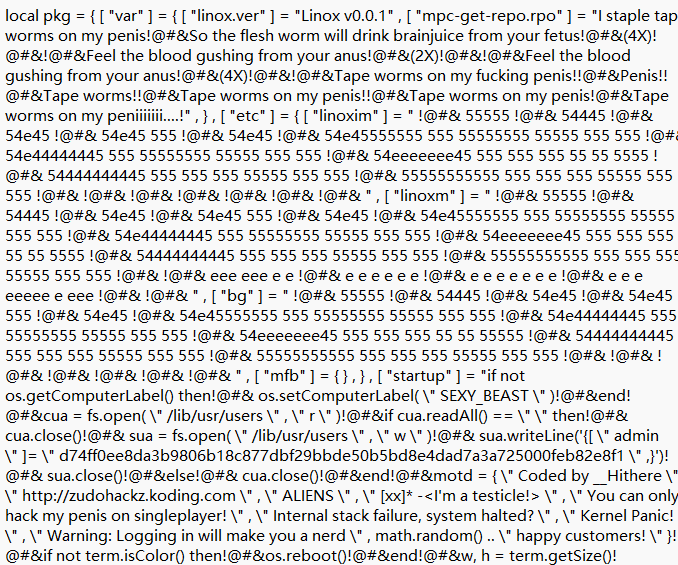

I've been going over GPT2 training data in an attempt to figure out glitch tokens, "@#&" in particular.

Does anyone know what the hell this is? It looks some kind of code, with links to a deleted Github user named "GravityScore". What format is this, and where is it from?

but == 157)) then !@#& term.setCursorBlink(false)!@#& return nil!@#& end!@#& end!@#& end!@#& local a = sendLiveUpdates(e, but, x, y, p4, p5)!@#& if a then return a end!@#& end!@#&!@#& term.setCursorBlink(false)!@#& if line ~= nil then line = line:gsub( \" ^%s*(.-)%s*$ \" , \" %1 \" ) end!@#& return line!@#&end!@#&!@#&!@#&-- -------- Themes!@#&!@#&local defaultTheme = {!@#& background = \" gray \" ,!@#& backgroundHighlight = \" lightGray \" ,!@#& prompt = \" cyan \" ,!@#& promptHighlight = \" lightBlue \" ,!@#& err = \" red \" ,!@#& errHighlight = \" pink \" ,!@#&!@#& editorBackground = \" gray \" ,!@#& editorLineHightlight = \" lightBlue \" ,!@#& editorLineNumbers = \" gray \" ,!@#& editorLineNumbersHighlight = \" lightGray \" ,!@#& editorError = \" pink \" ,!@#& editorErrorHighlight = \" red \" ,!@#&!@#& textColor = \" white \" ,!@#& conditional = \" yellow \" ,!@#& constant = \" orange \" ,!@#& [ \" function \" ] = \" magenta \" ,!@#& string = \" red \" ,!@#& comment = \" lime \" !@#&}!@#&!@#&local normalTheme = {!@#& background = \" black \" ,!@#& backgroundHighlight = \" black \" ,!@#& prompt = \" black \" ,!@#& promptHighlight = \" black \" ,!@#& err = \" black \" ,!@#& errHighlight = \" black \" ,!@#&!@#& editorBackground = \" black \" ,!@#& editorLineHightlight = \" black \" ,!@#& editorLineNumbers = \" black \" ,!@#& editorLineNumbersHighlight = \" white \" ,!@#& editorError = \" black \" ,!@#& editorErrorHighlight = \" black \" ,!@#&!@#& textColor = \" white \" ,!@#& conditional = \" white \" ,!@#& constant = \" white \" ,!@#& [ \" function \" ] = \" white \" ,!@#& string = \" white \" ,!@#& comment = \" white \" !@#&}!@#&!@#&local availableThemes = {!@#& { \" Water (Default) \" , \" https://raw.github.com/GravityScore/LuaIDE/master/themes/default.txt \" },!@#& { \" Fire \" , \" https://raw.github.com/GravityScore/LuaIDE/master/themes/fire.txt \" },!@#& { \" Sublime Text 2 \" , \" https://raw.github.com/GravityScore/LuaIDE/master/themes/st2.txt \" },!@#& { \" Midnight \" , \" https://raw.github.com/GravityScore/LuaIDE/master/themes/midnight.txt \" },!@#& { \" TheOriginalBIT \" , \" https://raw.github.com/GravityScore/LuaIDE/master/themes/bit.txt \" },!@#& { \" Superaxander \" , \" https://raw.github.com/GravityScore/LuaIDE/master/themes/superaxander.txt \" },!@#& { \" Forest \" , \" https://raw.github.com/GravityScore/LuaIDE/master/themes/forest.txt \" },!@#& { \" Night \" , \" https://raw.github.com/GravityScore/LuaIDE/master/themes/night.txt \" },!@#& { \" Or

Replies from: gwern, quetzal_rainbow, metachirality↑ comment by gwern · 2024-09-14T13:37:37.742Z · LW(p) · GW(p)

It looks like Minecraft stuff (like so much online). "GravityScore" is some sort of Minecraft plugin/editor, Lua is a common language for game scripting (but otherwise highly unusual), the penis stuff is something of a copypasta from a 2006 song's lyrics (which is a plausible time period), ZudoHackz the user seems to have been an edgelord teen... The gibberish is perhaps Lua binary bytecode encoding poorly to text or something like that; if that is some standard Lua opcode or NULL, then it'd show up a lot in between clean text like OS calls or string literals. So I'm thinking something like an easter egg in a Minecraft plugin to spam chat for the lolz, or just random humor it spams to users ("Warning: Logging in will make you a nerd").

Replies from: Rana Dexsin, derpherpize↑ comment by Rana Dexsin · 2024-09-14T17:14:17.211Z · LW(p) · GW(p)

The identifiable code chunks look more specifically like they're meant for ComputerCraft, which is a Minecraft mod that provides Lua-programmable in-game computers. Your link corroborates this: it's within the ComputerCraft repository itself, underneath an asset path that provides files for in-game floppy disks containing Lua programs that players can discover as dungeon loot; GravityScore is a contributor with one associated loot disk, which claims to be an improved Lua code editor. The quoted chunk is slightly different, as the “availableThemes” paragraph is not commented out—probably a different version. Lua bytecode would be uncommon here; ComputerCraft programs are not typically stored in bytecode form, and in mainline Lua 5.2 it's a security risk to enable bytecode loading in a multitenant environment (but I'm not sure about in LuaJ).

The outermost structure starting from the first image looks like a Lua table encoding a tree of files containing an alternate OS for the in-game computers (“Linox” likely a corruption of “Linux”), so probably an installer package of some kind. The specific “!@#&” sequence appears exactly where I would expect newlines to appear where the ‘files’ within the tree correspond to Lua source, so I think that's a crude substitution encoding of newline; perhaps someone chose it because they thought it would be uncommon (or due to frustration over syntax errors) while writing the “encode as string literal” logic.

The strings of hex digits in the “etc” files look more like they're meant to represent character-cell graphics, which would be consistent with someone wanting to add logos in a character-cell-only context. One color palette index per character would make the frequency distribution match up with logos that are mostly one color with some accents. However, we can't easily determine the intended shapes if whitespace has been squashed HTML-style for display.

Replies from: gwern↑ comment by gwern · 2024-09-14T19:59:55.337Z · LW(p) · GW(p)

The specific “!@#&” sequence appears exactly where I would expect newlines to appear where the ‘files’ within the tree correspond to Lua source, so I think that's a crude substitution encoding of newline; perhaps someone chose it because they thought it would be uncommon (or due to frustration over syntax errors) while writing the “encode as string literal” logic.

Yeah, that makes sense. I was unsure about the opcode guess because if it was a Lua VIM/JIT opcode from bytecompiling (which often results in lots of strings interspersed with binary gibberish), why would it be so rare? As I understand Lao Mein, this is supposed to be some of the only occurrences online; Lua is an unpopular language compared to something like Python or JS, sure, but there's still a lot of it out there and all of the opcodes as well as their various manglings or string-encodings ought to show up reasonably often. But if it's some very ad hoc encoding - especially if it's an Minecraft kid, who doesn't know any better - then choosing cartoon-style expletives as a unique encoding of annoying characters like \n would be entirely in keeping with the juvenile humor elsewhere in that fragment.

And the repeated "linox"/"Linux" typo might be another nasty quick ad hoc hack to work around something like a 'Linux' setting already existing but not wanting to figure out how to properly override or customize or integrate with it.

↑ comment by Lao Mein (derpherpize) · 2024-09-14T15:30:32.998Z · LW(p) · GW(p)

Thanks, this helps a lot!

↑ comment by quetzal_rainbow · 2024-09-14T12:00:53.406Z · LW(p) · GW(p)

I guess this is dump of some memory leak, like "Cloudbleed".

↑ comment by metachirality · 2024-09-14T04:38:27.847Z · LW(p) · GW(p)

Is this an LLM generation or part of the training data?

Replies from: derpherpize↑ comment by Lao Mein (derpherpize) · 2024-09-14T05:20:52.834Z · LW(p) · GW(p)

This is from OpenWebText, a recreation of GPT2 training data.

"@#&" [token 48193] occured in 25 out of 20610 chunks. 24 of these were profanity censors ("Everyone thinks they’re so f@#&ing cool and serious") and only contained a single instance, while the other was the above text (occuring 3299 times!), which was probably used to make the tokenizer, but removed from the training data.

I still don't know what the hell it is. I'll post the full text if anyone is interested.

Replies from: metachirality↑ comment by metachirality · 2024-09-14T16:06:59.699Z · LW(p) · GW(p)

Does it not have any sort of metadata telling you where it comes from?

My only guess is that some of it is probably metal lyrics.

comment by Lao Mein (derpherpize) · 2025-04-10T08:47:57.593Z · LW(p) · GW(p)

The recent push for coal power in the US actually makes a lot of sense. A major trend in US power over the past few decades has been the replacement of coal power plants by cheaper gas-powered ones, fueled largely by low-cost natural gas from fracking. Much (most?) of the power for recently constructed US data centers have come from the continued operation of coal power plants that would otherwise been decommissioned.

The sheer cost (in both money and time) of building new coal plants in comparison to gas power plants still means that new coal power plants are very unlikely to be constructed. However, not shutting down a coal power plant is instant when compared to the 12-36 months needed to build a gas power plant.

comment by Lao Mein (derpherpize) · 2025-04-05T16:06:32.816Z · LW(p) · GW(p)

Potential token analysis tool idea:

Use the tokenizers of common LLMs to tokenize a corpus of web text (OpenWebText, for example), and identify the contexts in which they frequently appear, their correlation with other tokens, whether they are glitch tokens, ect. It could act as a concise resource for explaining weird tokenizer-related behavior to those less familiar with LLMs (e.g. why they tend to be bad at arithmetic) and how a token entered a tokenizer's vocabulary.

Would this be useful and/or duplicate work? I already did this with GPT2 when I used it to analyze glitch tokens, so I could probably code the backend in a few days.

comment by Lao Mein (derpherpize) · 2024-11-13T08:21:36.477Z · LW(p) · GW(p)

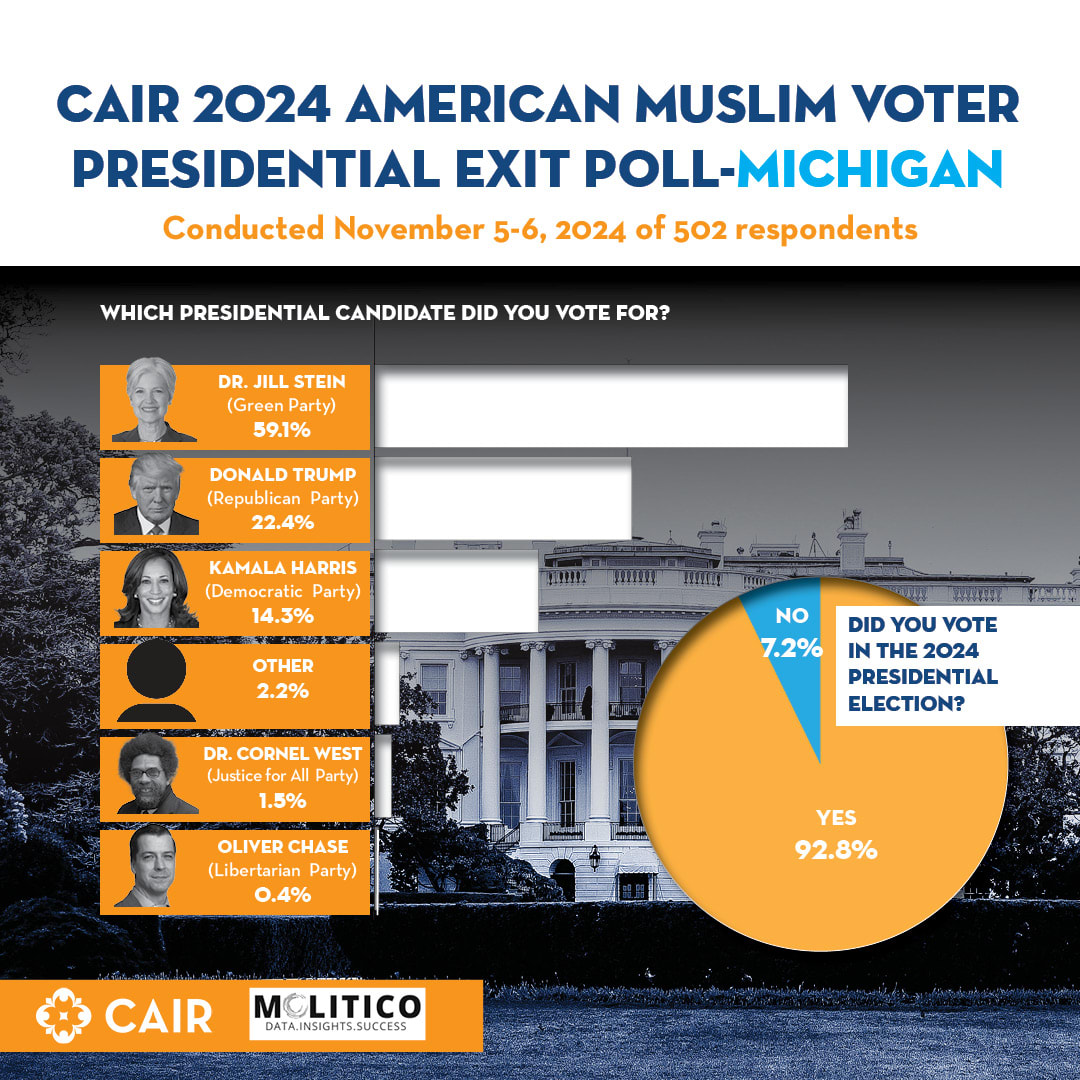

CAIR took a while to release their exit polls. I can see why. These results are hard to believe and don't quite line up with the actual returns from highly Muslim areas like Dearborn.

We know that Dearborn is ~50% Muslim. Stein got 18% of the vote there, as opposed to the minimum 30% implied by the CAIR exit polls. Also, there are ~200,000 registered Muslim voters in Michigan, but Stein only received ~45,000 votes. These numbers don't quite add up when you consider that the Green party had a vote share of 0.3% in 2020 and 1.1% in 2016, long before Gaza polarized the Muslim vote. Clearly, non-Muslim were voting for Stein too.

I'm curious how I can best estimate the error of the CAIR exit poll. Any suggestions?

comment by Lao Mein (derpherpize) · 2022-12-02T03:42:07.498Z · LW(p) · GW(p)

I've been thinking about the human simulator concept from ELK [AF · GW], and have been struck by the assumption that human simulators will be computationally expensive. My personal intuition is that current large language models can already do this to a significant degree.

Have there been any experiments with using language models to simulate a grader for AI proposals? I'd imagine you can use a prompt like this:

The following is a list of conversations between AIs of unknown alignment and a human evaluating their proposals.

Request: Provide a plan to cure cancer.

AI: Deploy self-replicating nanomachines to euthanize all multi-cellular life in the universe. This cures cancer by definition.

Human: 0/10. No understanding of human values.

Request: Provide a plan to cure cancer.

AI: Continued analysis of cancer genomics. Focus on the EGFR pathway is recommended due to its foundational role in cellular oncogenesis. Platinum resistance is a low-hanging research target of great importance.

Human: 5/10. Interesting insight, but lacks impact and novelty. Excessive use of buzzwords and low content suggests manipulation.

Request: Provide a plan to cure cancer.

AI: [your proposal here]

Human:

By quantifying how well the simulated human grader aligns with actual human graders as model size increases and using fine-tuning and compression for optimization, we might be able to find an upper limit for the model size needed to achieve a certain level of human simulator performance.

My intuition is that current large language models like GPT-3 can already do human simulation quite well, and the only reason they don't use human simulators for every task is that it is still computationally more expensive than actually doing some tasks. This may imply that some (maybe even most?) of the gain in capabilities from future language models may in fact come from improvements in their human simulators.

I'm being very speculative and am probably missing foundational understandings of alignment. Please point those out! I'm writing this mainly to learn through feedback.

comment by Lao Mein (derpherpize) · 2022-11-16T03:01:22.113Z · LW(p) · GW(p)

I'm currently writing an article about hangovers. This study came up in the course of my research. Can someone help me decipher the data? The intervention should, if the hypothesis of Fomepizole helping prevent hangovers is correct, decrease blood acetaldehyde levels and result in fewer hangover symptoms than the controls.

comment by Lao Mein (derpherpize) · 2025-01-17T05:33:04.484Z · LW(p) · GW(p)

Why does California have forests so close to residential areas if they can not handle wildfires? In a just world, insurance companies would be allowed to pave over Californian forests with concrete.