OpenAI: Facts from a Weekend

post by Zvi · 2023-11-20T15:30:06.732Z · LW · GW · 165 comments

Approximately four GPTs and seven years ago, OpenAI’s founders brought forth on this corporate landscape a new entity, conceived in liberty, and dedicated to the proposition that all men might live equally when AGI is created.

Now we are engaged in a great corporate war, testing whether that entity, or any entity so conceived and so dedicated, can long endure.

What matters is not theory but practice. What happens when the chips are down?

So what happened? What prompted it? What will happen now?

To a large extent, even more than usual, we do not know. We should not pretend that we know more than we do.

Rather than attempt to interpret here or barrage with an endless string of reactions and quotes, I will instead do my best to stick to a compilation of the key facts.

(Note: All times stated here are eastern by default.)

Just the Facts, Ma’am

What do we know for sure, or at least close to sure?

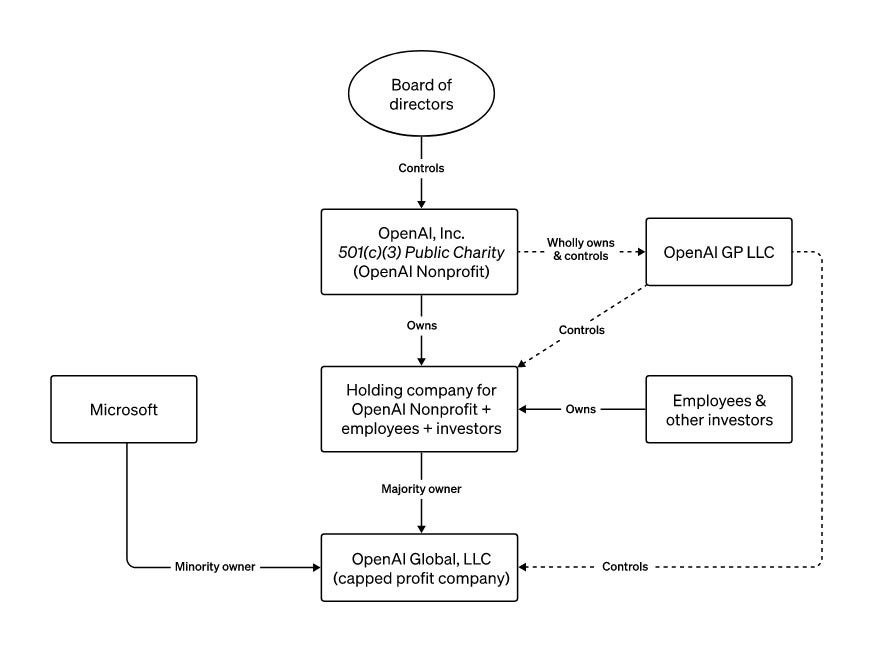

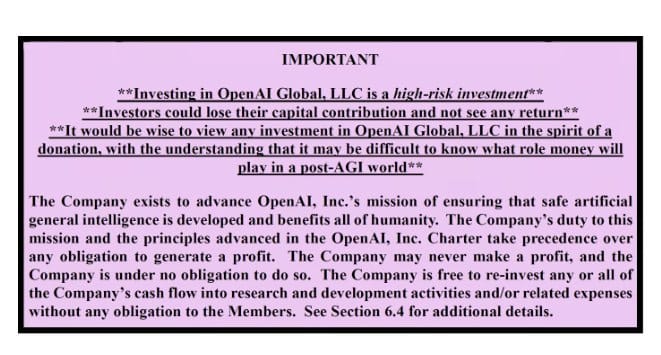

Here is OpenAI’s corporate structure, giving the board of the 501c3 the power to hire and fire the CEO. It is explicitly dedicated to its nonprofit mission, over and above any duties to shareholders of secondary entities. Investors were warned that there was zero obligation to ever turn a profit:

Here are the most noteworthy things we know happened, as best I can make out.

- On Friday afternoon at 3:28pm, the OpenAI board fired Sam Altman, appointing CTO Mira Murati as temporary CEO effective immediately. They did so over a Google Meet that did not include then-chairman Greg Brockman.

- Greg Brockman, Altman’s old friend and ally, was removed as chairman of the board but the board said he would stay on as President. In response, he quit.

- The board told almost no one. Microsoft got one minute of warning.

- Mira Murati is the only other person we know was told, which happened on Thursday night.

- From the announcement by the board: “Mr. Altman’s departure follows a deliberative review process by the board, which concluded that he was not consistently candid in his communications with the board, hindering its ability to exercise its responsibilities. The board no longer has confidence in his ability to continue leading OpenAI.”

- In a statement, the board of directors said: “OpenAI was deliberately structured to advance our mission: to ensure that artificial general intelligence benefits all humanity. The board remains fully committed to serving this mission. We are grateful for Sam’s many contributions to the founding and growth of OpenAI. At the same time, we believe new leadership is necessary as we move forward. As the leader of the company’s research, product, and safety functions, Mira is exceptionally qualified to step into the role of interim CEO. We have the utmost confidence in her ability to lead OpenAI during this transition period.”

- OpenAI’s board of directors at this point: OpenAI chief scientist Ilya Sutskever, independent directors Quora CEO Adam D’Angelo, technology entrepreneur Tasha McCauley, and Georgetown Center for Security and Emerging Technology’s Helen Toner.

- Usually a 501c3’s board must have a majority of people not employed by the company. Instead, OpenAI said that a majority did not have a stake in the company, due to Sam Altman having zero equity.

- In response to many calling this a ‘board coup’: “You can call it this way,” Sutskever said about the coup allegation. “And I can understand why you chose this word, but I disagree with this. This was the board doing its duty to the mission of the nonprofit, which is to make sure that OpenAI builds AGI that benefits all of humanity.” AGI stands for artificial general intelligence, a term that refers to software that can reason the way humans do. When Sutskever was asked whether “these backroom removals are a good way to govern the most important company in the world?” he answered: “I mean, fair, I agree that there is a not ideal element to it. 100%.”

- Other than that, the board said nothing in public. I am willing to outright say that, whatever the original justifications, the removal attempt was insufficiently considered and planned and massively botched. Either they had good reasons that justified these actions and needed to share them, or they didn’t.

- There had been various clashes between Altman and the board. We don’t know what all of them were. We do know the board felt Altman was moving too quickly, without sufficient concern for safety, with too much focus on building consumer products, while founding additional other companies. ChatGPT was a great consumer product, but supercharged AI development counter to OpenAI’s stated non-profit mission.

- OpenAI was previously planning an oversubscribed share sale at a valuation of $86 billion that was to close a few weeks later.

- Board member Adam D’Angelo said in Forbes in January: “There’s no outcome where this organization is one of the big five technology companies. This is something that’s fundamentally different, and my hope is that we can do a lot more good for the world than just become another corporation that gets that big.”

- Sam Altman on October 16: “4 times in the history of OpenAI––the most recent time was in the last couple of weeks––I’ve gotten to be in the room when we push the veil of ignorance back and the frontier of discovery forward. Getting to do that is the professional honor of a lifetime.” There was speculation that events were driven in whole or in part by secret capabilities gains within OpenAI, possibly from a system called Gobi, perhaps even related to the joking claim ‘AI has been achieved internally’ but we have no concrete evidence of that.

- Ilya Sutskever co-leads the Superalignment Taskforce, has very short timelines for when we will get AGI, and is very concerned about AI existential risk.

- Sam Altman was involved in starting multiple new major tech companies. He was looking to raise tens of billions from Saudis to start a chip company. He was in other discussions for an AI hardware company.

- Sam Altman has stated time and again, including to Congress, that he takes existential risk from AI seriously. He was part of the creation of OpenAI’s corporate structure. He signed the CAIS letter. OpenAI spent six months on safety work before releasing GPT-4. He understands the stakes. One can question OpenAI’s track record on safety, as many did, including those who left to found Anthropic. But this was not a pure ‘doomer vs. accelerationist’ story.

- Sam Altman is very good at power games such as fights for corporate control. Over the years he earned the loyalty of his employees, many of whom moved in lockstep, using strong strategic ambiguity. Hand very well played.

- Essentially all of VC, tech, founder, financial Twitter united to condemn the board for firing Altman and for how they did it, as did many employees, calling upon Altman to either return to the company or start a new company and steal all the talent. The prevailing view online was that no matter its corporate structure, it was unacceptable to fire Altman, who had built the company, or to endanger OpenAI’s value by doing so. That it was good and right and necessary for employees, shareholders, partners and others to unite to take back control.

- Talk in those circles is that this will completely discredit EA or ‘doomerism’ or any concerns over the safety of AI, forever. Yes, they say this every week, but this time it was several orders of magnitude louder and more credible. New York Times somehow gets this backwards. Whatever else this is, it’s a disaster.

- By contrast, those concerned about existential risk, and some others, pointed out that the unique corporate structure of OpenAI was designed for exactly this situation. They also mostly noted that the board clearly handled decisions and communications terribly, but that there was much unknown, and tried to avoid jumping to conclusions.

- Thus we are now answering the question: What is the law? Do we have law? Where does the power ultimately lie? Is it the charismatic leader that ultimately matters? Who you hire and your culture? Can a corporate structure help us, or do commercial interests and profit motives dominate in the end?

- Great pressure was put upon the board to reinstate Altman. They were given two 5pm Pacific deadlines, on Saturday and Sunday, to resign. Microsoft’s aid, and that of its CEO Satya Nadella, was enlisted in this. We do not know what forms of leverage Microsoft did or did not bring to that table.

- Sam Altman tweets ‘I love the openai team so much.’ Many at OpenAI respond with hearts, including Mira Murati.

- Invited by employees including Mira Murati and other top executives, Sam Altman visited the OpenAI offices on Sunday. He tweeted ‘First and last time i ever wear one of these’ with a picture of his visitors pass.

- The board does not appear to have been at the building at the time.

- Press reported that the board had agreed to resign in principle, but that snags were hit over who the replacement board would be, and over whether or not they would need to issue a statement absolving Altman of wrongdoing, which could be legally perilous for them given their initial statement.

- Bloomberg reported on Sunday 11:16pm that temporary CEO Mira Murati aimed to rehire Altman and Brockman, while board sought alternative CEO.

- OpenAI board hires former Twitch CEO Emmett Shear to be the new CEO. He issues his initial statement here. I know a bit about him. If the board needs to hire a new CEO from outside that takes existential risk seriously, he seems to me like a truly excellent pick, I cannot think of a clearly better one. The job set for him may or may not be impossible. Shear’s PPS in his note: PPS: “Before I took the job, I checked on the reasoning behind the change. The board did *not* remove Sam over any specific disagreement on safety, their reasoning was completely different from that. I’m not crazy enough to take this job without board support for commercializing our awesome models.”

- New CEO Emmett Shear has made statements in favor of slowing down AI development, although not a stop. His p(doom) is between 5% and 50%. He has said ‘My AI safety discourse is 100% “you are building an alien god that will literally destroy the world when it reaches the critical threshold but be apparently harmless before that.”’ Here is a thread and video link with more, transcript here or a captioned clip. Here he is tweeting a 2×2 faction chart a few days ago.

- Microsoft CEO Satya Nadella posts 2:53am Monday morning: We remain committed to our partnership with OpenAI and have confidence in our product roadmap, our ability to continue to innovate with everything we announced at Microsoft Ignite, and in continuing to support our customers and partners. We look forward to getting to know Emmett Shear and OAI’s new leadership team and working with them. And we’re extremely excited to share the news that Sam Altman and Greg Brockman, together with colleagues, will be joining Microsoft to lead a new advanced AI research team. We look forward to moving quickly to provide them with the resources needed for their success.

- Sam Altman retweets the above with ‘the mission continues.’ Brockman confirms. Other leadership to include Jakub Pachocki the GPT-4 lead, Szymon Sidor and Aleksander Madry.

- Nadella continued in reply: I’m super excited to have you join as CEO of this new group, Sam, setting a new pace for innovation. We’ve learned a lot over the years about how to give founders and innovators space to build independent identities and cultures within Microsoft, including GitHub, Mojang Studios, and LinkedIn, and I’m looking forward to having you do the same.

- Ilya Sutskever posts 8:15am Monday morning: I deeply regret my participation in the board’s actions. I never intended to harm OpenAI. I love everything we’ve built together and I will do everything I can to reunite the company. Sam retweets with three heart emojis. Jan Leike, the other head of the superalignment team, Tweeted that he worked through the weekend on the crisis, and that the board should resign.

- Microsoft stock was down 1% after hours on Friday, and was back to roughly its previous value on Monday morning and at the open. All priced in. Neither Google nor the S&P made major moves either.

- 505 of 700 employees of OpenAI, including Ilya Sutskever, sign a letter telling the board to resign and reinstate Altman and Brockman, threatening to otherwise move to Microsoft to work in the new subsidiary under Altman, which will have a job for every OpenAI employee. Full text of the letter that was posted:

  To the Board of Directors at OpenAI,

  OpenAI is the world’s leading AI company. We, the employees of OpenAI, have developed the best models and pushed the field to new frontiers. Our work on AI safety and governance shapes global norms. The products we built are used by millions of people around the world. Until now, the company we work for and cherish has never been in a stronger position.

  The process through which you terminated Sam Altman and removed Greg Brockman from the board has jeopardized all of this work and undermined our mission and company. Your conduct has made it clear you did not have the competence to oversee OpenAI.

  When we all unexpectedly learned of your decision, the leadership team of OpenAI acted swiftly to stabilize the company. They carefully listened to your concerns and tried to cooperate with you on all grounds. Despite many requests for specific facts for your allegations, you have never provided any written evidence. They also increasingly realized you were not capable of carrying out your duties, and were negotiating in bad faith.

  The leadership team suggested that the most stabilizing path forward – the one that would best serve our mission, company, stakeholders, employees and the public – would be for you to resign and put in place a qualified board that could lead the company forward in stability. Leadership worked with you around the clock to find a mutually agreeable outcome. Yet within two days of your initial decision, you again replaced interim CEO Mira Murati against the best interests of the company. You also informed the leadership team that allowing the company to be destroyed “would be consistent with the mission.”

  Your actions have made it obvious that you are incapable of overseeing OpenAI. We are unable to work for or with people that lack competence, judgement and care for our mission and employees. We, the undersigned, may choose to resign from OpenAI and join the newly announced Microsoft subsidiary run by Sam Altman and Greg Brockman. Microsoft has assured us that there are positions for all OpenAI employees at this new subsidiary should we choose to join. We will take this step imminently, unless all current board members resign, and the board appoints two new lead independent directors, such as Bret Taylor and Will Hurd, and reinstates Sam Altman and Greg Brockman.

  1. Mira Murati
  2. Brad Lightcap
  3. Jason Kwon
  4. Wojciech Zaremba
  5. Alec Radford
  6. Anna Makanju
  7. Bob McGrew
  8. Srinivas Narayanan
  9. Che Chang
  10. Lillian Weng
  11. Mark Chen
  12. Ilya Sutskever

- There is talk that OpenAI might completely disintegrate as a result, that ChatGPT might not work a few days from now, and so on.

- It is very much not over, and still developing.

- There is still a ton we do not know.

- This weekend was super stressful for everyone. Most of us, myself included, sincerely wish none of this had happened. Based on what we know, there are no villains in the actual story that matters here. Only people trying their best under highly stressful circumstances with huge stakes and wildly different information and different models of the world and what will lead to good outcomes. In short, to all who were in the arena for this on any side, or trying to process it, rather than spitting bile:

Later, when we know more, I will have many other things to say, many reactions to quote and react to. For now, everyone please do the best you can to stay sane and help the world get through this as best you can.

165 comments

comment by gwern · 2023-11-22T03:00:13.481Z · LW(p) · GW(p)

The key news today: Altman had attacked Helen Toner https://www.nytimes.com/2023/11/21/technology/openai-altman-board-fight.html (HN, Zvi [LW · GW]; excerpts) Which explains everything if you recall board structures and voting.

Altman and the board had been unable to appoint new directors because there was an even balance of power, so during the deadlock/low-grade cold war, the board had attrited down to hardly any people. He thought he had Sutskever on his side, so he moved to expel Helen Toner from the board. He would then be able to appoint new directors of his choice. This would have irrevocably tipped the balance of power towards Altman. But he didn't have Sutskever like he thought he did, and they had, briefly, enough votes to fire Altman before he broke Sutskever (as he did yesterday), and they went for the last-minute hail-mary with no warning to anyone.

As always, "one story is good, until another is told"...

↑ comment by gwern · 2023-11-25T15:25:48.361Z · LW(p) · GW(p)

The WSJ has published additional details about the Toner fight, filling in the other half of the story. The NYT merely mentions the OA execs 'discussing' it, but the WSJ reports much more specifically that the exec discussion of Toner was a Slack channel that Sutskever was in, and that approximately 2 days before the firing and 1 day before Mira was informed* (ie. the exact day Ilya would have flipped if they had then fired Altman about as fast as possible to schedule meetings 48h before & vote), he saw them say that the real problem was EA and that they needed to get rid of EA associations.

https://www.wsj.com/tech/ai/altman-firing-openai-520a3a8c (excerpts)

The specter of effective altruism had loomed over the politics of the board and company in recent months, particularly after the movement’s most famous adherent, Sam Bankman-Fried, the founder of FTX, was found guilty of fraud in a highly public trial.

Some of those fears centered on Toner, who previously worked at Open Philanthropy. In October, she published an academic paper touting the safety practices of OpenAI’s competitor, Anthropic, which didn’t release its own AI tool until ChatGPT’s emergence. “By delaying the release of Claude until another company put out a similarly capable product, Anthropic was showing its willingness to avoid exactly the kind of frantic corner-cutting that the release of ChatGPT appeared to spur,” she and her co-authors wrote in the paper. Altman confronted her, saying she had harmed the company, according to people familiar with the matter. Toner told the board that she wished she had phrased things better in her writing, explaining that she was writing for an academic audience and didn’t expect a wider public one. Some OpenAI executives told her that everything relating to their company makes its way into the press.

OpenAI leadership and employees were growing increasingly concerned about being painted in the press as “a bunch of effective altruists,” as one of them put it. Two days before Altman’s ouster, they were discussing these concerns on a Slack channel, which included Sutskever. One senior executive wrote that the company needed to “uplevel” its “independence”—meaning create more distance between itself and the EA movement.

OpenAI had lost three board members over the past year, most notably Reid Hoffman [who turns out to have been forced out by Altman over 'conflicts of interest', triggering the stalemate], the LinkedIn co-founder and OpenAI investor who had sold his company to Microsoft and been a key backer of the plan to create a for-profit subsidiary. Other departures were Shivon Zilis, an executive at Neuralink, and Will Hurd, a former Texas congressman. The departures left the board tipped toward academics and outsiders less loyal to Altman and his vision.

So this answers the question everyone has been asking: "what did Ilya see?" It wasn't Q*, it was OA execs letting the mask down and revealing Altman's attempt to get Toner fired was motivated by reasons he hadn't been candid about. In line with Ilya's abstract examples of what Altman was doing, Altman was telling different board members (allies like Sutskever vs enemies like Toner) different things about Toner.

This answers the "why": because it yielded a hard, screenshottable-with-receipts case of Altman manipulating the board in a difficult-to-explain-away fashion - why not just tell the board that "the EA brand is now so toxic that you need to find safety replacements without EA ties"? Why deceive and go after them one by one without replacements proposed to assure them about the mission being preserved? (This also illustrates the "why not" tell people about this incident: these were private, confidential discussions among rich powerful executives who would love to sue over disparagement or other grounds.) Previous Altman instances were either done in-person or not documented, but Altman has been so busy this year traveling and fundraising that he has had to do a lot of things via 'remote work', one might say, where conversations must be conducted on-the-digital-record. (Really, Matt Levine will love all this once he catches up.)

This also answers the "why now?" question: because Ilya saw that conversation on 15 November 2023, and not before.

This eliminates any role for Q*: sure, maybe it was an instance of lack of candor or a capabilities advance that put some pressure on the board, but unless something Q*-related also happened that day, there is no longer any explanatory role. (But since we can now date Sutskever's flip to 15 November 2023, we can answer the question of "how could the board be deceived about Q* when Sutskever would be overseeing or intimately familiar with every detail?" Because he was still acting as part of the Altman faction - he might well be telling the safety board members covertly, depending on how disaffected he became earlier on, but he wouldn't be overtly piping up about Q* in meetings or writing memos to the board about it unless Altman wanted him to. A single board member knowing != "the board candidly kept in the loop".)

This doesn't quite answer the 'why so abruptly?' question. If you don't believe that a board should remove a CEO as fast as possible when they believe the CEO has been systematically deceiving them for a year and manipulating the board composition to remove all oversight permanently, then this still doesn't directly explain why they had to move so fast. It does give one strong clue: Altman was trying to wear down Toner, but he had other options - if there was not any public scandal about the paper (which there was not, no one had even noticed it), well, there's nothing easier to manufacture for someone so well connected, as some OA executives informed Toner:

Some OpenAI executives told her that everything relating to their company makes its way into the press.

This presumably sounded like a well-intended bit of advice at the time, but takes on a different set of implications in retrospect. Amazing how journalists just keep hearing things about OA from little birds, isn't it? And they write those articles and post them online or on Twitter so quickly, too, within minutes or hours of the original tip. And Altman/Brockman would, of course, have to call an emergency last-minute board meeting to deal with this sudden crisis which, sadly, proved him right about Toner. If only the board had listened to him earlier! But they can fix it now...

Unfortunately, this piecemeal description by WSJ leaves out the larger conversational context of that Slack channel, which would probably clear up a lot. For example, the wording is consistent with them discussing how to fire just Toner, but it's also consistent with that being just the first step in purging all EA-connected board members & senior executives - did they? If they did, that would be highly alarming and justify a fast move: eg. firing people is a lot easier than unfiring them, and would force a confrontation they might lose and would wind up removing Altman even if they won. (Particularly if we do not give in to hindsight bias and remember that in the first day, everyone, including insiders, thought the firing would stick and so Altman - who had said the board should be able to fire him and personally designed OA that way - would simply go do a rival startup elsewhere.)

Emmett Shear apparently managed to insist on an independent investigation, and I expect that this Slack channel discussion will be a top priority of a genuine investigation. As Slack has regulator & big-business-friendly access controls, backups, and logs, it should be hard for them to scrub all the traces now; any independent investigation will look for deletions by the executives and draw adverse inferences.

(The piecemeal nature of the Toner revelations, where each reporter seems to be a blind man groping one part of the elephant, suggests to me that the NYT & WSJ are working from leaks based on a summary rather than the originals or a board member leaking the whole story to them. Obviously, the flip-flopped Sutskever and the execs in question, who are the only ones who would have access post-firing, are highly unlikely to be leaking private Slack channel discussions, so this information is likely coming from before the firing, so board discussions or documents, where there might be piecemeal references or quotes. But I could be wrong here. Maybe they are deliberately being cryptic to protect their source, or something, and people are just too ignorant to read between the lines. Sort of like Umbridge's speech on a grand scale.)

* note that this timeline is consistent with what Habryka [LW(p) · GW(p)] says about Toner still scheduling low-priority ordinary meetings like normal just a few days before - which implies she had no idea things were about to happen.

↑ comment by gwern · 2023-12-01T17:49:12.995Z · LW(p) · GW(p)

The NYer has confirmed that Altman's attempted coup was the cause of the hasty firing (excerpts; HN):

...Some members of the OpenAI board had found Altman an unnervingly slippery operator. For example, earlier this fall he’d confronted one member, Helen Toner, a director at the Center for Security and Emerging Technology, at Georgetown University, for co-writing a paper that seemingly criticized OpenAI for “stoking the flames of AI hype.” Toner had defended herself (though she later apologized to the board for not anticipating how the paper might be perceived). Altman began approaching other board members, individually, about replacing her. When these members compared notes about the conversations, some felt that Altman had misrepresented them as supporting Toner’s removal. “He’d play them off against each other by lying about what other people thought”, the person familiar with the board’s discussions told me. “Things like that had been happening for years.” (A person familiar with Altman’s perspective said that he acknowledges having been “ham-fisted in the way he tried to get a board member removed”, but that he hadn’t attempted to manipulate the board.)

...His tactical skills were so feared that, when 4 members of the board---Toner, D’Angelo, Sutskever, and Tasha McCauley---began discussing his removal, they were determined to guarantee that he would be caught by surprise. “It was clear that, as soon as Sam knew, he’d do anything he could to undermine the board”, the person familiar with those discussions said...Two people familiar with the board’s thinking say that the members felt bound to silence by confidentiality constraints...But whenever anyone asked for examples of Altman not being “consistently candid in his communications”, as the board had initially complained, its members kept mum, refusing even to cite Altman’s campaign against Toner.

...The dismissed board members, meanwhile, insist that their actions were wise. “There will be a full and independent investigation, and rather than putting a bunch of Sam’s cronies on the board we ended up with new people who can stand up to him”, the person familiar with the board’s discussions told me. “Sam is very powerful, he’s persuasive, he’s good at getting his way, and now he’s on notice that people are watching.” Toner told me, “The board’s focus throughout was to fulfill our obligation to OpenAI’s mission.” (Altman has told others that he welcomes the investigation---in part to help him understand why this drama occurred, and what he could have done differently to prevent it.)

Some A.I. watchdogs aren’t particularly comfortable with the outcome. Margaret Mitchell, the chief ethics scientist at Hugging Face, an open-source A.I. platform, told me, “The board was literally doing its job when it fired Sam. His return will have a chilling effect. We’re going to see a lot less of people speaking out within their companies, because they’ll think they’ll get fired---and the people at the top will be even more unaccountable.”

Altman, for his part, is ready to discuss other things. “I think we just move on to good governance and good board members and we’ll do this independent review, which I’m super excited about”, he told me. “I just want everybody to move on here and be happy. And we’ll get back to work on the mission”.

↑ comment by gwern · 2023-12-04T16:48:58.592Z · LW(p) · GW(p)

I left a comment over on EAF [EA(p) · GW(p)] which has gone a bit viral, describing the overall picture of the runup to the firing as I see it currently.

The summary is: evaluations of the Board's performance in firing Altman generally ignore that Altman made OpenAI and set up all of the legal structures, staff, and the board itself; the Board could, and should, have assumed good faith of Altman because if he hadn't been sincere, why would he have done all that, proving in extremely costly and unnecessary ways his sincerity? But, as it happened, OA recently became such a success that Altman changed his mind about the desirability of all that and now equally sincerely believes that the mission requires him to be in total control; and this is why he started to undermine the board. The recency is why it was so hard for them to realize that change of heart or develop common knowledge about it or coordinate to remove him given his historical track record - but that historical track record was also why if they were going to act against him at all, it needed to be as fast & final as possible. This led to the situation becoming a powder keg, and when proof of Altman's duplicity in the Toner firing became undeniable to the Board, it exploded.

↑ comment by gwern · 2023-12-06T21:20:28.441Z · LW(p) · GW(p)

Latest news: Time sheds considerably more light on the board position, in its discouragingly-named piece "2023 CEO of the Year: Sam Altman" (excerpts; HN). While it sounds & starts like a puff piece (no offense to Ollie - cute coyote photos!), it actually contains a fair bit of leaking I haven't seen anywhere else. Most strikingly:

- claims that the Board thought it had the OA executives on its side, because the executives had approached it about Altman:

The board expected pressure from investors and media. But they misjudged the scale of the blowback from within the company, in part because they had reason to believe the executive team would respond differently, according to two people familiar with the board’s thinking, who say the board’s move to oust Altman was informed by senior OpenAI leaders, who had approached them with a variety of concerns about Altman’s behavior and its effect on the company’s culture.

(The wording here strongly implies it was not Sutskever.) This of course greatly undermines the "incompetent Board" narrative, possibly explains both why the Board thought it could trust Mira Murati & why she didn't inform Altman ahead of time (was she one of those execs...?), and casts further doubt on the ~100% signature rate of the famous OA employee letter.

Now that it's safe(r) to say negative things about Altman, because it has become common knowledge that he was fired from Y Combinator and there is an independent investigation planned at OA, it seems that more of these incidents have been coming to light.

- confirms my earlier interpretation that at least one of the dishonesties was specifically lying to a board member that another member wanted to immediately fire Toner to manipulate them into her ouster:

One example came in late October, when an academic paper Toner wrote in her capacity at Georgetown was published. Altman saw it as critical of OpenAI’s safety efforts and sought to push Toner off the board. Altman told one board member that another believed Toner ought to be removed immediately, which was not true, according to two people familiar with the discussions.

This episode did not spur the board’s decision to fire Altman, those people say, but it was representative of the ways in which he tried to undermine good governance, and was one of several incidents that convinced the quartet that they could not carry out their duty of supervising OpenAI’s mission if they could not trust Altman. Once the directors reached the decision, they felt it was necessary to act fast, worried Altman would detect that something was amiss and begin marshaling support or trying to undermine their credibility. “As soon as he had an inkling that this might be remotely on the table,” another of the people familiar with the board’s discussions says, “he would bring the full force of his skills and abilities to bear.”

EDIT: WSJ (excerpts) is also reporting this, by way of a Helen Toner interview (which doesn't say much on the record, but does provide context for why she said that quote everyone used as a club against her: an OA lawyer lied to her about her 'fiduciary duties' while threatening to sue & bankrupt, and she got mad and pointed out that even outright destroying OA would be consistent with the mission & charter so she definitely didn't have any 'fiduciary duty' to maximize OA profits).

Unfortunately, the Time article, while seeming to downplay how much the Toner incident mattered by saying it didn't "spur" the decision, doesn't explain what did spur it, nor refer to the Sutskever Slack discussion AFAICT. So I continue to maintain that Altman was moving to remove Toner so urgently in order to hijack the board, and that this attempt was one of the major concerns, and his deception around Toner's removal, and particularly the executives discussing the EA purge, was probably the final proximate cause which was concrete enough & came with enough receipts to remove whatever doubt they had left (whether that was "the straw that broke the camel's back" or "the smoking gun").

- continues to undermine 'Q* truthers' by not even mentioning it (except possibly a passing reference by Altman at the end to "doubling down on certain research areas")

The article does provide good color and other details I won't try to excerpt in full (although some are intriguing - where, exactly, was this feedback to Altman about him being dishonest in order to please people?), eg:

...Altman, 38, has been Silicon Valley royalty for a decade, a superstar founder with immaculate vibes...Interviews with more than 20 people in Altman’s circle—including current and former OpenAI employees, multiple senior executives, and others who have worked closely with him over the years—reveal a complicated portrait. Those who know him describe Altman as affable, brilliant, uncommonly driven, and gifted at rallying investors and researchers alike around his vision of creating artificial general intelligence (AGI) for the benefit of society as a whole. But four people who have worked with Altman over the years also say he could be slippery—and at times, misleading and deceptive. Two people familiar with the board’s proceedings say that Altman is skilled at manipulating people, and that he had repeatedly received feedback that he was sometimes dishonest in order to make people feel he agreed with them when he did not. [see also Joshua Achiam's defense of Altman] These people saw this pattern as part of a broader attempt to consolidate power. “In a lot of ways, Sam is a really nice guy; he’s not an evil genius. It would be easier to tell this story if he was a terrible person,” says one of them. “He cares about the mission, he cares about other people, he cares about humanity. But there’s also a clear pattern, if you look at his behavior, of really seeking power in an extreme way.” An OpenAI spokesperson said the company could not comment on the events surrounding Altman’s firing. “We’re unable to disclose specific details until the board’s independent review is complete. We look forward to the findings of the review and continue to stand behind Sam,” the spokesperson said in a statement to TIME. “Our primary focus remains on developing and releasing useful and safe AI, and supporting the new board as they work to make improvements to our governance structure.”

Replies from: gwern↑ comment by gwern · 2023-12-09T00:20:15.544Z · LW(p) · GW(p)

If you've noticed OAers being angry on Twitter today, and using profanity & bluster and having oddly strong opinions about how it is important to refer to roon as @tszzl and never as @roon, it's because another set of leaks has dropped, and they are again unflattering to Sam Altman & consistent with the previous ones.

Today the Washington Post adds to the pile, "Warning from OpenAI leaders helped trigger Sam Altman’s ouster: The senior employees described Altman as psychologically abusive, creating delays at the artificial-intelligence start-up — complaints that were a major factor in the board’s abrupt decision to fire the CEO" (archive.is; HN; excerpts), which confirms the Time/WSJ reporting about executives approaching the board with concerns about Altman, and adds on more details - their concerns did not relate to the Toner dispute, but apparently were about regular employees:

This fall, a small number of senior leaders approached the board of OpenAI with concerns about chief executive Sam Altman. Altman---a revered mentor, prodigious start-up investor and avatar of the AI revolution---had been psychologically abusive, the employees said, creating pockets of chaos and delays at the artificial-intelligence start-up, according to two people familiar with the board’s thinking who spoke on the condition of anonymity to discuss sensitive internal matters. The company leaders, a group that included key figures and people who manage large teams, mentioned Altman’s allegedly pitting employees against each other in unhealthy ways, the people said. [The executives approaching the board were previously published in Time/WSJ, and the chaos hinted at in The Atlantic but this appears to add some more detail.]

...these complaints echoed their [the board's] interactions with Altman over the years, and they had already been debating the board’s ability to hold the CEO accountable. Several board members thought Altman had lied to them, for example, as part of a campaign to remove board member Helen Toner after she published a paper criticizing OpenAI, the people said.

The new complaints triggered a review of Altman’s conduct during which the board weighed the devotion Altman had cultivated among factions of the company against the risk that OpenAI could lose key leaders who found interacting with him highly toxic. They also considered reports from several employees who said they feared retaliation from Altman: One told the board that Altman was hostile after the employee shared critical feedback with the CEO and that he undermined the employee on that person’s team, the people said.

The complaints about Altman’s alleged behavior, which have not previously been reported, were a major factor in the board’s abrupt decision to fire Altman on November 17. Initially cast as a clash over the safe development of artificial intelligence, Altman’s firing was at least partially motivated by the sense that his behavior would make it impossible for the board to oversee the CEO.

(I continue to speculate Superalignment was involved, if only due to their enormous promised compute-quota and small headcount, but the wording here seems like it involved more than just a single team or group, and also points back to some of the earlier reporting and the other open letter, so there may be many more incidents than appreciated.)

Replies from: gwern, Wei_Dai, bec-hawk↑ comment by gwern · 2023-12-12T00:43:50.642Z · LW(p) · GW(p)

An elaboration on the WaPo article in the 2023-12-09 NYT: “Inside OpenAI’s Crisis Over the Future of Artificial Intelligence: Split over the Leadership of Sam Altman, Board Members and Executives Turned on One Another. Their Brawl Exposed the Cracks at the Heart of the AI Movement” (excerpts). Mostly a gossipy narrative from both the Altman & D'Angelo sides, so I'll just copy over my HN comment:

- another reporting of internal OA complaints about Altman's manipulative/divisive behavior, see previously on HN

- previously we knew Altman had been dividing-and-conquering the board by lying about others wanting to fire Toner; this says that, specifically, Altman had lied about McCauley wanting to fire Toner; presumably, this was said to D'Angelo.

- Concerns over Tigris had been mooted, but this says specifically that the board thought Altman had not been forthcoming about it; still unclear if he had tried to conceal Tigris entirely or if he had failed to mention something more specific like who he was trying to recruit for capital.

- Sutskever had threatened to quit after Jakub Pachocki's promotion; previous reporting had said he was upset about it, but hadn't hinted at him being so angry as to threaten to quit OA

Sutskever doesn't seem to be too rank/promotion-hungry (why would he be? he is, to quote one article 'a god of AI' and is now one of the most-cited researchers ever) and one would think it would take a lot for him to threaten to quit OA... Coverage thus far seems to be content to take the attitude that he must be some sort of 5-year-old child throwing a temper tantrum over a slight, but I find this explanation inadequate.

I suspect there is much more to this thread, and it may tie back to Superalignment & broken promises about compute-quotas. (Permanently subverting or killing safety research does seem like adequate grounds for Sutskever to deliver an ultimatum, for the same reasons that the Board killing OA can be the best of several bad options.)

- Altman was 'bad-mouthing the board to OpenAI executives'; this likely refers to the Slack conversation Sutskever was involved in reported by WSJ a while ago about how they needed to purge everyone EA-connected

- Altman was initially going to cooperate and even offered to help, until Brian Chesky & Ron Conway riled him up. (I believe this because it is unflattering to Altman.)

- the OA outside lawyer told them they needed to clam up and not do PR like the Altman faction was

- both sides are positioning themselves for the independent report overseen by Summers as the 'broker'; hence, Altman/Conway leaking the texts quoted at the end posturing about how 'the board wants silence' (not that one could tell from the post-restoration leaking & reporting...) and how his name needs to be cleared.

- Paul Graham remains hilariously incapable of saying anything unambiguously nice about Altman

One additional thing I'd note: the NYT mentions quotes from a WhatsApp channel that contained hundreds of top SV execs & VCs. This is the sort of thing that you always suspected existed given how coordinated some things seem to be, but this is a striking confirmation of existence. It is also, given the past history of SV like the big wage-fixing scandals of Steve Jobs et al, something I expect contains a lot of statements that they really would not want a prosecutor seeing. One wonders how prudent they have been about covering up message history, and complying with SEC & other regulatory rules about destruction of logs, and if some subpoenas are already winging their way out?

EDIT: Zvi commentary reviewing the past few articles, hitting most of the same points: https://thezvi.substack.com/p/openai-leaks-confirm-the-story/

Replies from: gwern, gwern↑ comment by gwern · 2023-12-24T20:51:59.152Z · LW(p) · GW(p)

The WSJ dashes our hopes for a quiet Christmas by dropping on Christmas Eve a further extension of all this reporting: "Sam Altman’s Knack for Dodging Bullets—With a Little Help From Bigshot Friends: The OpenAI CEO lost the confidence of top leaders in the three organizations he has directed, yet each time he’s rebounded to greater heights", Seetharaman et al 2023-12-24 (Archive.is, HN; annotated excerpts).

This article confirms - among other things - what I suspected about there being an attempt to oust Altman from Loopt for the same reasons as YC/OA, adds some more examples of Altman amnesia & behavior (including what is, since people apparently care, being caught in a clearcut unambiguous public lie), names the law firm in charge of the report (which is happening), and best of all, explains why Sutskever was so upset about the Jakub Pachocki promotion.

- Loopt coup: Vox had hinted at this in 2014 but it was unclear; however, WSJ specifically says that Loopt was in chaos and Altman kept working on side-projects while mismanaging Loopt (so, nearly identical to the much later, unconnected, YC & OA accusations), leading the 'senior employees' to (twice!) appeal to the board to fire Altman. You know who won. To quote one of his defenders:

“If he imagines something to be true, it sort of becomes true in his head,” said Mark Jacobstein, co-founder of Jimini Health who served as Loopt’s chief operating officer. “That is an extraordinary trait for entrepreneurs who want to do super ambitious things. It may or may not lead one to stretch, and that can make people uncomfortable.”

- Sequoia Capital: the journalists also shed light on the Loopt acquisition. There have long been rumors about the Loopt acquisition by Green Dot being shady (also covered in that Vox article), especially as Loopt didn't seem to go anywhere under Green Dot so it hardly looked like a great or natural acquisition - but it was unclear how and the discussions seemed to guess that Altman had sold Loopt in a way which made him a lot of money but shafted investors. But it seems that what actually happened was that, again on the side of his Loopt day-job, Altman was doing freelance VC work for Sequoia Capital, and was responsible for getting them into one of the most lucrative startup rounds ever, Stripe. Sequoia then 'helped engineer an acquisition by another Sequoia-backed company', Green Dot.

The journalists don't say this, but the implication here is that Loopt's acquisition was a highly-deniable kickback to Altman from Sequoia for Stripe & others.

- Greg Brockman: also Stripe-related, Brockman's apparently intense personal loyalty to Altman may stem from this period, where Altman apparently did Brockman a big favor by helping broker the sale of his Stripe shares.

- YC firing: some additional details like Jessica Livingston instigating it, one grievance being his hypocrisy over banning outside funds for YC partners (other than him), and also a clearcut lie by Altman: he posted the YC announcement blog post saying he had been moved to YC Chairman... but YC had not agreed, and never did agree, to that. So that's why the YC announcements kept getting edited - he'd tried to hustle them into appointing him Chairman to save his face.

To smooth his exit, Altman proposed he move from president to chairman. He pre-emptively published a blog post on the firm’s website announcing the change. But the firm’s partnership had never agreed, and the announcement was later scrubbed from the post.

Nice try, but no cigar. (This is something to keep in mind given my earlier comments about Altman talking about his pride in creating a mature executive team etc - if, after the report is done, he stops being CEO and becomes OA board chairman, that means he's been kicked out of OA.)

- Ilya Sutskever: as mentioned above, I felt that we did not have the full picture why Sutskever was so angered by Jakub Pachocki's promotion. This answers it! Sutskever was angry because he has watched Altman long enough to understand what the promotion meant:

In early fall this year, Ilya Sutskever, also a board member, was upset because Altman had elevated another AI researcher, Jakub Pachocki, to director of research, according to people familiar with the matter. Sutskever told his board colleagues that the episode reflected a long-running pattern of Altman’s tendency to pit employees against one another or promise resources and responsibilities to two different executives at the same time, yielding conflicts, according to people familiar with the matter…Altman has said he runs OpenAI in a “dynamic” fashion, at times giving people temporary leadership roles and later hiring others for the job. He also reallocates computing resources between teams with little warning, according to people familiar with the matter. [cf. Atlantic, WaPo, the anonymous letter]

Ilya recognized the pattern perhaps in part because he has receipts:

In early October, OpenAI’s chief scientist approached some fellow board members to recommend Altman be fired, citing roughly 20 examples of when he believed Altman misled OpenAI executives over the years. That set off weeks of closed-door talks, ending with Altman’s surprise ouster days before Thanksgiving.

- Speaking of receipts, the law firm for the independent report has been chosen: WilmerHale. Unclear if they are investigating yet, but I continue to doubt that it will be done before the tender closes early next month.

- the level of sourcing indicates Altman's halo is severely damaged ("This article is based on interviews with dozens of executives, engineers, current and former employees and friends of Altman’s, as well as investors."). Before, all of this was hidden; as the article notes of the YC firing:

For years, even some of Altman’s closest associates—including Peter Thiel, Altman’s first backer for Hydrazine—didn’t know the circumstances behind Altman’s departure.

If even Altman's mentor didn't know, no wonder no one else seems to have known - aside from those directly involved in the firing, like, for example, YC board member Emmett Shear. But now it's all on the record, with even Graham & Livingston acknowledging the firing (albeit quibbling a little: come on, Graham, if you 'agree to leave immediately', that's still 'being fired').

- Tasha McCauley's role finally emerges a little more: she had been trying to talk to OA executives without Altman's presence, and Altman demanded to be informed of any Board communication with employees. It's unclear if he got his way.

So, a mix of confirmation and minor details continuing to flesh out the overall saga of Sam Altman as someone who excels at finance, corporate knife-fighting, & covering up manipulation but who is not actually that good at managing or running a company (reminiscent of Xi Jinping), and a few surprises for me.

On a minor level, if McCauley had been trying to talk to employees, then it's more likely that she was the one that the whistleblowers like Nathan Labenz had been talking to rather than Helen Toner; Toner might have been just the weakest link in her public writings providing a handy excuse. (...Something something 5 lines by the most honest of men...) On a more important level, if Sutskever has a list of 20 documented instances (!) of Altman lying to OA executives (and the Board?), then the Slack discussion may not have been so important after all, and Altman may have good reason to worry - he keeps saying he doesn't recall any of these unfortunate episodes, and it is hard to defend yourself if you can no longer remember what might turn up...

Replies from: gwern, bec-hawk↑ comment by gwern · 2024-03-08T00:12:45.175Z · LW(p) · GW(p)

An OA update: it's been quiet, but the investigation is over. And Sam Altman won. (EDIT: yep [LW(p) · GW(p)].)

To recap, because I believe I haven't been commenting on this since December (this is my last big comment, skimming my LW profile): WilmerHale was brought in to do the investigation. The tender offer, to everyone's relief, went off. A number of embarrassing new details about Sam Altman have surfaced: in particular, about his enormous chip fab plan with substantial interest from giants like Temasek, and how the OA VC Fund turns out to be owned by Sam Altman (his explanation was it saved some paperwork and he just forgot to ever transfer it to OA). Ilya Sutskever remains in hiding and lawyered up (his silence became particularly striking with the release of Sora). There have been increasing reports the past week or two that the WilmerHale investigation was coming to a close - and I am told that the investigators were not offering confidentiality and the investigation was narrowly scoped to the firing. (There was also some OA drama with the Musk lawfare & the OA response, but aside from offering an object lesson in how not to redact sensitive information, it's both irrelevant & unimportant.)

The news today comes from the NYT leaking information from the final report: "Key OpenAI Executive [Mira Murati] Played a Pivotal Role in Sam Altman’s Ouster" (mirror; EDIT: largely confirmed by Murati in internal note).

The main theme of the article is clarifying Murati's role: as I speculated, she was in fact telling the Board about Altman's behavior patterns, and it fills in that she had gone further and written it up in a memo to him, and even threatened to leave with Sutskever.

But it reveals a number of other important claims: the investigation is basically done and wrapping up. The new board apparently has been chosen. Sutskever's lawyer has gone on the record stating that Sutskever did not approach the board about Altman (?!). And it reveals the board confronted Altman over his ownership of the OA VC Fund (in addition to all his many other compromises of interest**).

So, what does that mean?

First, as always, in a war of leaks, cui bono? Who is leaking this to the NYT? Well, it's not the pro-Altman faction: they are at war with the NYT, and these leaks do them no good whatsoever. It's not the lawyers: these are high-powered elite lawyers, hired for confidentiality and discretion. It's not Murati or Sutskever, given their lack of motive, and the former's panicked internal note & Sutskever's lawyer's denial. Of the current interim board (which is about to finish its job and leave, handing it over to the expanded replacement board), probably not Larry Summers/Bret Taylor - they were brought on to oversee the report as neutral third party arbitrators, and if they (a simple majority of the temporary board) want something in their report, no one can stop them from putting it there. It could be Adam D'Angelo or the ex-board: they are the ones who don't control the report, and they also already have access to all of the newly-leaked-but-old information about Murati & Sutskever & the VC Fund.

So, it's the anti-Altman faction, associated with the old board. What does that mean?

I think that what this leak indirectly reveals is simple: Sam Altman has won. The investigation will exonerate him, and it is probably true that it was so narrowly scoped from the beginning that it was never going to plausibly provide grounds for his ouster. What these leaks are, are a loser's spoiler move: the last gasps of the anti-Altman faction, reduced to leaking bits from the final report to friendly media (Metz/NYT) to annoy Altman, and strike first. They got some snippets out before the Altman faction shops around highly selective excerpts to their own friendly media outlets (the usual suspects - The Information, Semafor, Kara Swisher) from the final officialized report to set the official record (at which point the rest of the confidential report is sent down the memory hole). Welp, it's been an interesting few months, but l'affaire Altman is over. RIP.

Evidence, aside from simply asking who benefits from these particular leaks at the last minute, is that Sutskever remains in hiding & his lawyer is implausibly denying he had anything to do with it, while if you read Altman on social media, you'll notice that he's become ever more talkative since December, particularly in the last few weeks - glorying in the instant memeification of '$7 trillion' - as has OA PR* and we have heard no more rhetoric about what an amazing team of execs OA has and how he's so proud to have tutored them to replace him. Because there will be no need to replace him now. The only major reasons he would have to leave are if it's necessary as a stepping stone to something even higher (eg. running the $7t chip fab consortium, running for US President) or something like a health issue.

So, upshot: I speculate that the report will exonerate Altman (although it can't restore his halo, as it cannot & will not address things like his firing from YC which have been forced out into public light by this whole affair) and he will be staying as CEO and may be returning to the expanded board; the board will probably include some weak uncommitted token outsiders for their diversity and independence, but have an Altman plurality and we will see gradual selective attrition/replacement in favor of Altman loyalists until he has a secure majority robust to at least 1 flip and preferably 2. Having retaken irrevocable control of OA, further EA purges should be unnecessary, and Altman will probably refocus on the other major weakness exposed by the coup: the fact that his frenemy MS controls OA's lifeblood. (The fact that MS was such a potent weapon for Altman in the fight is a feature while he's outside the building, but a severe bug once he's back inside.) People are laughing at the '$7 trillion'. But Altman isn't laughing. Those GPUs are life and death for OA now. And why should he believe he can't do it? Things have always worked out for him before...

Predictions, if being a bit more quantitative will help clarify my speculations here: Altman will still be CEO of OA on June 1st (85%); the new OA board will include Altman (60%); Ilya Sutskever and Mira Murati will leave OA or otherwise take on some sort of clearly diminished role by year-end (90%, 75%; cf. Murati's desperate-sounding internal note); the full unexpurgated non-summary report will not be released (85%, may be hard to judge because it'd be easy to lie about); serious chip fab/Tigris efforts will continue (75%); Microsoft's observer seat will be upgraded to a voting seat (25%).

* Eric Newcomer (usually a bit more acute than this) asks "One thing that I find weird: OpenAI comms is giving very pro Altman statements when the board/WilmerHale are still conducting the investigation. Isn't communications supposed to work for the company, not just the CEO? The board is in charge here still, no?" NARRATOR: "The board is not in charge still."

** Compare the current OA PR statement on the VC Fund to Altman's past position on, say, Helen Toner or Reid Hoffman or Shivon Zilis, or Altman's investment in chip startups touting letters of commitment from OA or his ongoing Hydrazine investment in OA which sadly, he has never quite had the time to dispose of in any of the OA tender offers. As usual, CoIs only apply to people Altman doesn't trust - "for my friends, everything; for my enemies, the law".

EDIT: Zvi commentary: https://thezvi.substack.com/p/openai-the-board-expands

Replies from: gwern, matthew-barnett, Zach Stein-Perlman↑ comment by gwern · 2024-09-25T19:49:20.351Z · LW(p) · GW(p)

Ilya Sutskever and Mira Murati will leave OA or otherwise take on some sort of clearly diminished role by year-end (90%, 75%; cf. Murati's desperate-sounding internal note)

Mira Murati announced today she is resigning from OA. (I have also, incidentally, won a $1k bet with an AI researcher on this prediction.)

Replies from: nikita-sokolsky↑ comment by nsokolsky (nikita-sokolsky) · 2024-09-25T23:19:10.042Z · LW(p) · GW(p)

Do you think this will have any impact on OpenAI's future revenues / ability to deliver frontier-level models?

Replies from: gwern↑ comment by gwern · 2024-09-25T23:55:50.595Z · LW(p) · GW(p)

See my earlier comments on 23 June 2024 about what 'OA rot' would look like; I do not see any revisions necessary given the past 3 months.

As for Murati finally leaving (perhaps she was delayed by the voice shipping delays), I don't think it matters too much, as far as I can tell (not like Sutskever or Brockman leaving): she was competent but not critical. Probably the bigger deal is that her leaving is apparently a big surprise to a lot of OAers (maybe I should've taken more bets?), and so will come as a blow to morale and remind people of last year's events.

EDIT: Barret Zoph & Bob McGrew are now gone too. Altman has released a statement, confirming that Murati only quit today:

...When Mira [Murati] informed me this morning that she was leaving, I was saddened but of course support her decision. For the past year, she has been building out a strong bench of leaders that will continue our progress.

I also want to share that Bob [McGrew] and Barret [Zoph] have decided to depart OpenAI. Mira, Bob, and Barret made these decisions independently of each other and amicably, but the timing of Mira’s decision was such that it made sense to now do this all at once, so that we can work together for a smooth handover to the next generation of leadership.

...Mark [Chen] is going to be our new SVP of Research and will now lead the research org in partnership with Jakub [Pachocki] as Chief Scientist. This has been our long-term succession plan for Bob someday; although it’s happening sooner than we thought, I couldn’t be more excited that Mark is stepping into the role. Mark obviously has deep technical expertise, but he has also learned how to be a leader and manager in a very impressive way over the past few years.

Josh[ua] Achiam is going to take on a new role as Head of Mission Alignment, working across the company to ensure that we get all pieces (and culture) right to be in a place to succeed at the mission.

...Mark, Jakub, Kevin, Srinivas, Matt, and Josh will report to me. I have over the past year or so spent most of my time on the non-technical parts of our organization; I am now looking forward to spending most of my time on the technical and product parts of the company.

...Leadership changes are a natural part of companies, especially companies that grow so quickly and are so demanding. I obviously won’t pretend it’s natural for this one to be so abrupt, but we are not a normal company, and I think the reasons Mira explained to me (there is never a good time, anything not abrupt would have leaked, and she wanted to do this while OpenAI was in an upswing) make sense.

(I wish Dr Achiam much luck in his new position at Hogwarts.)

Replies from: T3t↑ comment by RobertM (T3t) · 2024-09-26T01:29:37.324Z · LW(p) · GW(p)

It does not actually make any sense to me that Mira wanted to prevent leaks, and therefore didn't even tell Sam that she was leaving ahead of time. What would she be afraid of, that Sam would leak the fact that she was planning to leave... for what benefit?

Possibilities:

- She was being squeezed out, or otherwise knew her time was up, and didn't feel inclined to make it a maximally comfortable parting for OpenAI. She was willing to eat the cost of her own equity potentially losing a bunch of value if this derailed the ongoing investment round, as well as the reputational cost of Sam calling out the fact that she, the CTO of the most valuable startup in the world, resigned with no notice for no apparent good reason.

- Sam is lying or otherwise being substantially misleading about the circumstances of Mira's resignation, i.e. it was not in fact a same-day surprise to him. (And thinks she won't call him out on it?)

- ???

↑ comment by Matthew Barnett (matthew-barnett) · 2024-03-08T22:12:05.386Z · LW(p) · GW(p)

the new OA board will include Altman (60%)

Looks like you were right, at least if the reporting in this article is correct, and I'm interpreting the claim accurately.

Replies from: gwern↑ comment by gwern · 2024-03-08T22:26:54.370Z · LW(p) · GW(p)

At least from the intro, it sounds like my predictions were on-point: re-appointed Altman (I waffled about this at 60% because while his narcissism/desire to be vindicated requires him to regain his board seat, because anything less is a blot on his escutcheon, and also the pragmatic desire to lock down the board, both strongly militated for his reinstatement, it also seems so blatant a powergrab in this context that surely he wouldn't dare...? guess he did), released to an Altman outlet (The Information), with 3 weak apparently 'independent' and 'diverse' directors to pad out the board and eventually be replaced by full Altman loyalists - although I bet if one looks closer into these three women (Sue Desmond-Hellmann, Nicole Seligman, & Fidji Simo), one will find at least one has buried Altman ties. (Fidji Simo, Instacart CEO, seems like the most obvious one there: Instacart was YC S12.)

Replies from: gwern↑ comment by gwern · 2024-03-08T23:14:16.935Z · LW(p) · GW(p)

The official OA press releases are out confirming The Information: https://openai.com/blog/review-completed-altman-brockman-to-continue-to-lead-openai https://openai.com/blog/openai-announces-new-members-to-board-of-directors

“I’m pleased this whole thing is over,” Altman said at a press conference Friday.

He's probably right.

As predicted, the full report will not be released, only the 'summary' focused on exonerating Altman. Also as predicted, 'the mountain has given birth to a mouse' and the report was narrowly scoped to just the firing: they bluster about "reviewing 30,000 documents" (easy enough when you can just grep Slack + text messages + emails...), but then admit that they looked only at "the events concerning the November 17, 2023 removal" and interviewed hardly anyone ("dozens of interviews" barely even covers the immediate dramatis personae, much less any kind of investigation into Altman's chip stuff, Altman's many broken promises, Brockman's complainers etc). Doesn't sound like they have much to show for over 3 months of work by the smartest & highest-paid lawyers, does it... It also seems like they indeed did not promise confidentiality or set up any kind of anonymous reporting mechanism, given that they mention no such thing and include setting up a hotline for whistleblowers as a 'recommendation' for the future (ie. there was no such thing before or during the investigation). So, it was a whitewash from the beginning. Tellingly, there is nothing about Microsoft, and no hint their observer will be upgraded (or that there still even is one). And while flattering to Brockman, there is nothing about Murati - free tip to all my VC & DL startup acquaintances, there's a highly competent AI manager who's looking for exciting new opportunities, even if she doesn't realize it yet.

Also entertaining is that you can see the media spin happening in real time. What WilmerHale signs off on:

WilmerHale found that the prior Board acted within its broad discretion to terminate Mr. Altman, but also found that his conduct did not mandate removal.

Which is... less than complimentary? One would hope a CEO does a little bit better than merely not engage in 'conduct which mandates removal'? And turns into headlines like

"OpenAI’s Sam Altman Returns to Board After Probe Clears Him"

(Nothing from Kara Swisher so far, but judging from her Twitter, she's too busy promoting her new book and bonding with Altman over their mutual dislike of Elon Musk to spare any time for relatively-minor-sounding news.)

OK, so what was not as predicted? What is surprising?

This is not a full replacement board, but implies that Adam D'Angelo/Bret Taylor/Larry Summers are all staying on the board, at least for now. (So the new composition is D'Angelo/Taylor/Summers/Altman/Desmond-Hellmann/Seligman/Simo plus the unknown Microsoft non-voting observer.) This is surprising, but it may simply be a quotidian logistics problem - they hadn't settled on 3 more adequately diverse and prima-facie qualified OA board candidates yet, but the report was finished and it was more important to wind things up, and they'll get to the remainder later. (Perhaps Brockman will get his seat back?)

EDIT: A HNer points out that today, March 8th, is "International Women's Day", and this is probably the reason for the exact timing of the announcement. If so, they may well have already picked the remaining candidates (Brockman?), but those weren't women and so got left out of the announcement. Stay tuned, I guess. EDITEDIT: the video call/press conference seems to confirm that they do plan more board appointments: "OpenAI will continue to expand the board moving forward, according to a Zoom call with reporters." So that is consistent with the hurried women-only announcement.

Replies from: jacques-thibodeau, ESRogs↑ comment by jacquesthibs (jacques-thibodeau) · 2024-09-25T20:09:29.501Z · LW(p) · GW(p)

And while flattering to Brockman, there is nothing about Murati - free tip to all my VC & DL startup acquaintances, there's a highly competent AI manager who's looking for exciting new opportunities, even if she doesn't realize it yet.

Heh, here it is: https://x.com/miramurati/status/1839025700009030027

↑ comment by ESRogs · 2024-03-09T07:41:36.196Z · LW(p) · GW(p)

Nitpick: Larry Summers not Larry Sumners

Replies from: gwern↑ comment by gwern · 2024-03-09T14:28:10.767Z · LW(p) · GW(p)

(Fixed. This is a surname typo I make an unbelievable number of times because I reflexively overcorrect it to 'Sumners', due to reading a lot more of Scott Sumner than Larry Summers. Ugh - just caught myself doing it again in a Reddit comment...)

Replies from: ESRogs↑ comment by Zach Stein-Perlman · 2024-03-25T21:06:22.806Z · LW(p) · GW(p)

Hydrazine investment in OA

Source?

Replies from: Zach Stein-Perlman↑ comment by Zach Stein-Perlman · 2024-03-27T02:54:14.864Z · LW(p) · GW(p)

@gwern [LW · GW] I've failed to find a source saying that Hydrazine invested in OpenAI. If it did, that would be a big deal; it would make this a lie.

Replies from: gwern↑ comment by gwern · 2024-03-27T03:35:09.063Z · LW(p) · GW(p)

It was either Hydrazine or YC. In either case, my point remains true: he's chosen to not dispose of his OA stake, whatever vehicle it is held in, even though it would be easy for someone of his financial acumen to do so by a sale or equivalent arrangement, forcing an embarrassing asterisk to his claims to have no direct financial conflict of interest in OA LLC - and one which comes up regularly in bad OA PR (particularly by people who believe it is less than candid to say you have no financial interest in OA when you totally do), and a stake which might be quite large at this point*, and so is particularly striking given his attitude towards much smaller conflicts supposedly risking bad OA PR. (This is in addition to the earlier conflicts of interest in Hydrazine while running YC or the interest of outsiders in investing in Hydrazine, apparently as a stepping stone towards OA.)

* if he invested a 'small' amount via some vehicle before he even went full-time at OA, when OA was valued at some very small amount like $50m or $100m, say, and OA's now valued at anywhere up to $90,000m or >900x more, and further, he strongly believes it's going to be worth far more than that in the near-future... Sure, it may be worth 'just' $500m or 'just' $1000m after dilution or whatever, but to most people that's pretty serious money!

↑ comment by Rebecca (bec-hawk) · 2023-12-25T00:41:24.155Z · LW(p) · GW(p)

Why do you think McCauley is likely to be the board member Labenz spoke to? I had inferred that it was someone not particularly concerned about safety given that Labenz reported them saying they could easily request access to the model if they’d wanted to (and hadn’t). I took the point of the anecdote to be ‘here was a board member not concerned about safety’.

Replies from: gwern↑ comment by gwern · 2023-12-25T04:04:21.606Z · LW(p) · GW(p)

Because there is not currently any evidence that Toner was going around talking to a bunch of people, whereas this says McCauley was doing so. If I have to guess "did Labenz talk to the person who was talking to a bunch of people in OA, or did he talk to the person who was as far as I know not talking to a bunch of people in OA?", I am going to guess the former.

Replies from: bec-hawk↑ comment by Rebecca (bec-hawk) · 2023-12-25T05:14:56.507Z · LW(p) · GW(p)

They weren’t the only non employee board members though - that’s what I meant by the part about not being concerned about safety, that I took it to rule out both Toner and McCauley.

(Although if for some other reason you were only looking at Toner and McCauley, then no, I would say the person going around speaking to OAI employees is _less_ likely to be out of the loop on GPT-4’s capabilities)

Replies from: gwern↑ comment by gwern · 2023-12-25T17:51:03.899Z · LW(p) · GW(p)

The other ones are unlikely. Shivon Zilis & Reid Hoffman had left by this point; Will Hurd might or might not still be on the board at this point but wouldn't be described nor recommended by Labenz's acquaintance as researching AI safety, as that does not describe Hurd or D'Angelo; Brockman, Altman, and Sutskever are right out (Sutskever researches AI safety but Superalignment was a year away); by process of elimination, over 2023, the only board members he could have been plausibly contacting would be Toner and McCauley, and while Toner weakly made more sense before, now McCauley does.

(The description of them not having used the model unfortunately does not distinguish either one - none of the writings connected to them sound like they have all that much hands-on experience and would be eagerly prompt-engineering away at GPT-4-base the moment they got access. And I agree that this is a big mistake, but it is, even more unfortunately, an extremely common one - I remain shocked that Altman had apparently never actually used GPT-3 before he basically bet the company on it. There is a widespread attitude, even among those bullish about the economics, that GPT-3 or GPT-4 are just 'tools', which are mere 'stochastic parrots', and have no puzzling internal dynamics or complexities. I have been criticizing this from the start, but the problem is, 'sampling can show the presence of knowledge and not the absence', so if you don't think there's anything interesting there, your prompts are a mirror which reflects only your low expectations; and the safety tuning makes it worse by hiding most of the agency & anomalies, often in ways that look like good things. For example, the rhyming poetry ought to alarm everyone who sees it, because of what it implies underneath - but it doesn't. This is why descriptions of Sydney or GPT-4-base [LW · GW] are helpful: they are warning shots from the shoggoth behind the friendly tool-AI ChatGPT UI mask.)

Replies from: bec-hawk↑ comment by Rebecca (bec-hawk) · 2023-12-31T20:43:07.933Z · LW(p) · GW(p)

I think you might be misremembering the podcast? Nathan said that he was assured that the board as a whole was serious about safety, but I don’t remember the specific board member being recommended as someone researching AI safety (or otherwise more pro safety than the rest of the board). I went back through the transcript to check and couldn’t find any reference to what you’ve said.

“And ultimately, in the end, basically everybody said, “What you should do is go talk to somebody on the OpenAI board. Don’t blow it up. You don’t need to go outside of the chain of command, certainly not yet. Just go to the board. And there are serious people on the board, people that have been chosen to be on the board of the governing nonprofit because they really care about this stuff. They’re committed to long-term AI safety, and they will hear you out. And if you have news that they don’t know, they will take it seriously.” So I was like, “OK, can you put me in touch with a board member?” And so they did that, and I went and talked to this one board member. And this was the moment where it went from like, “whoa” to “really whoa.””

Replies from: gwern↑ comment by gwern · 2023-12-31T23:52:54.296Z · LW(p) · GW(p)

I was not referring to the podcast (which I haven't actually read yet because from the intro it seems wildly out of date and from a long time ago) but to Labenz's original Twitter thread turned into a Substack post. I think you misinterpret what he is saying in that transcript because it is loose and extemporaneous: "they're committed" could just as easily refer to "are serious people on the board" who have "been chosen" for that (implying that there are other members of the board not chosen for that); and that is what he says in the written-down post:

I consulted with a few friends in AI safety research… The Board, everyone agreed, included multiple serious people who were committed to safe development of AI and would definitely hear me out, look into the state of safety practice at the company, and take action as needed.

What happened next shocked me. The Board member I spoke to was largely in the dark about GPT-4. They had seen a demo and had heard that it was strong, but had not used it personally. They said they were confident they could get access if they wanted to. I couldn’t believe it. I got access via a “Customer Preview” 2+ months ago, and you as a Board member haven’t even tried it‽ This thing is human-level, for crying out loud (though not human-like!).

Replies from: bec-hawk↑ comment by Rebecca (bec-hawk) · 2024-01-01T03:46:13.402Z · LW(p) · GW(p)

This quote doesn’t say anything about the board member/s being people who are researching AI safety though - it’s Nathan’s friends who are in AI safety research not the board members.