OpenAI: The Battle of the Board

post by Zvi · 2023-11-22T17:30:04.574Z · 83 comments


Previously: OpenAI: Facts from a Weekend.

On Friday afternoon, OpenAI’s board fired CEO Sam Altman.

Overnight, an agreement in principle was reached to reinstate Sam Altman as CEO of OpenAI, with an initial new board of Bret Taylor (ex-co-CEO of Salesforce, chair), Larry Summers and Adam D’Angelo.

What happened? Why did it happen? How will it ultimately end? The fight is far from over.

We do not entirely know, but we know a lot more than we did a few days ago.

This is my attempt to put the pieces together.

This is a Fight For Control; Altman Started it

This was and still is a fight about control of OpenAI, its board, and its direction.

This has been a long simmering battle and debate. The stakes are high.

Until recently, Sam Altman worked to reshape the company in his own image, while clashing with the board, and the board did little.

While I must emphasize we do not know what motivated the board, a recent power move by Altman likely played a part in forcing the board’s hand.

OpenAI is a Non-Profit With a Mission

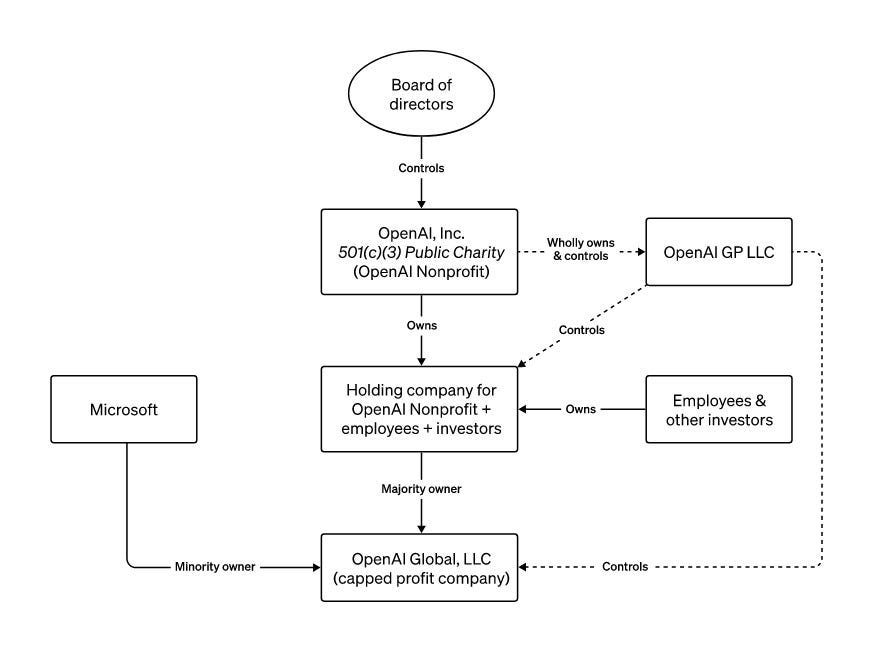

The structure of OpenAI and its board put control in doubt.

Here is a diagram of OpenAI’s structure:

Here is OpenAI’s mission statement, the link has intended implementation details as well:

This document reflects the strategy we’ve refined over the past two years, including feedback from many people internal and external to OpenAI. The timeline to AGI remains uncertain, but our Charter will guide us in acting in the best interests of humanity throughout its development.

OpenAI’s mission is to ensure that artificial general intelligence (AGI)—by which we mean highly autonomous systems that outperform humans at most economically valuable work—benefits all of humanity. We will attempt to directly build safe and beneficial AGI, but will also consider our mission fulfilled if our work aids others to achieve this outcome.

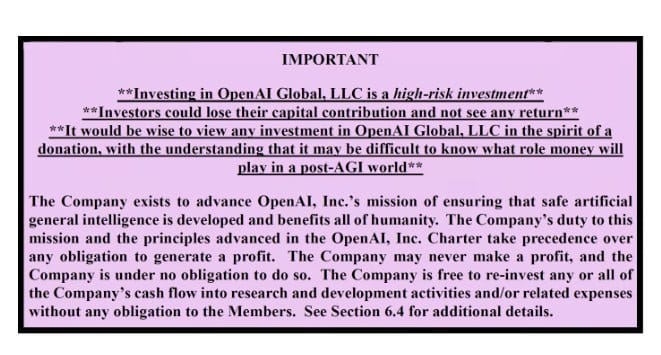

OpenAI warned investors that they might not make any money:

The way a 501(c)(3) works is essentially that the board is answerable to no one. If you have a majority of the board for one meeting, you can take full control of the board.

But does the board have power? Sort of. It has a supervisory role, which means it can hire or fire the CEO. Often the board uses this leverage to effectively be in charge of major decisions. Other times, the CEO effectively controls the board and the CEO does what he wants.

A critical flaw is that firing (and hiring) the CEO, and choosing the composition of a new board, is the board’s only real power.

The board only has one move: it can fire the CEO or not fire the CEO. Firing the CEO is a major escalation that risks disruption, and escalation and disruption have costs, both reputational and financial. Knowing this, the CEO can and often does take action to make himself painful to fire, or calculates that the board would not dare.

Sam Altman’s Perspective

While his ultimate goals for OpenAI are far grander, Sam Altman wants OpenAI for now to mostly function as an ordinary Big Tech company in partnership with Microsoft. He wants to build and ship, to move fast and break things. He wants to embark on new business ventures to remove bottlenecks and get equity in the new ventures, including planning a Saudi-funded chip factory in the UAE and starting an AI hardware project. He lobbies in accordance with his business interests, and puts a combination of his personal power, valuation and funding rounds, shareholders and customers first.

To that end, over the course of years, he has remade the company culture through addition and subtraction, hiring those who believe in this mission and who would be personally loyal to him. He has likely structured the company to give him free rein and hide his actions from the board and others. Normal CEO did normal CEO things.

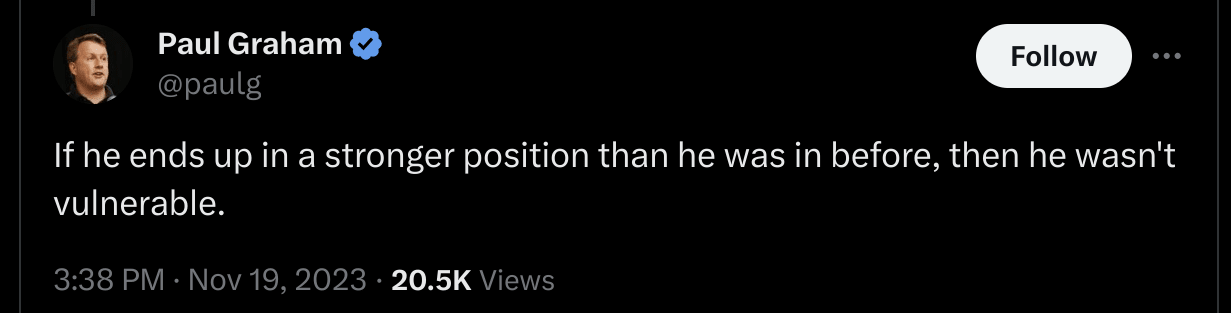

Altman is very good, Paul Graham says best in the world, at becoming powerful and playing power games. That, and scaling tech startups, are core to his nature. One assumes that ‘not being fully candid’ and other strategic action was part of this.

Sam Altman’s intermediate goal has been, from the beginning, full personal control of OpenAI, and thus control over the construction of AGI. Power always wants more power. I can’t fault him for rational instrumental convergence in his goals. The ultimate goal is to build ‘safe’ AGI.

That does not mean that Sam Altman does not believe in the necessity of ensuring that AI is safe. Altman understands this. I do not think he understands how hard it will be or the full difficulties that lie ahead, but he understands such questions better than most. He signed the CAIS letter. He testified frankly before Congress. Unlike many who defended him, Altman understands that AGI will be truly transformational, and he does not want humans to lose control over the future. By ‘transformational’ he does not mean the effect on jobs.

To be clear, I do think that Altman sincerely believes that his way is best for everyone.

Right before he was fired, Altman had firm control over two board seats. One was his outright. Another belonged to Greg Brockman.

That left four other board members.

The Outside Board’s Perspective

Helen Toner, Adam D’Angelo and Tasha McCauley had a very different perspective on the purpose of OpenAI and what was good for the world.

They do not want OpenAI to be a big tech company. They do not want OpenAI to move as quickly as possible to train and deploy ever-more-capable frontier models and sculpt them into maximally successful consumer products. They acknowledge the need for commercialization in order to raise funds, and I presume that such products can provide great value for people and that this is good.

They want a more cautious approach, that avoids unnecessarily creating or furthering race dynamics with other labs or driving surges of investment like we saw after ChatGPT, that takes necessary precautions at each step. And they want to ensure that the necessary controls are in place, including government controls, for when the time comes that AGI is on the line, to ensure we can train and deploy it safely.

Adam D’Angelo said the whole point was not to let OpenAI become a big tech company. Helen Toner is a strong advocate for policy action to guard against existential risk. From what we know, I presume Tasha McCauley is in the same camp.

Ilya Sutskever’s Perspective

Ilya Sutskever loves OpenAI and its people, and the promise of building safe AGI. He had reportedly become increasingly concerned that timelines until AGI could be remarkably short. He was also reportedly concerned Altman was moving too fast and was insufficiently concerned with the risks. He may or may not have been privy to additional information about still-undeployed capabilities advances.

Reports are that Ilya’s takes on alignment have been steadily improving epistemically. He is co-leading the Superalignment Taskforce, which seeks to figure out how to align future superintelligent AI. I am not confident in the alignment takes I have heard from members of the taskforce, but Ilya is an iterator, and my hope is that timelines are not as short as he thinks and that Ilya, Jan Leike and their team can figure it out before the chips are down.

Ilya later reversed course, after the rest of the board fully lost control of the narrative and employees, and the situation threatened to tear OpenAI apart.

Altman Moves to Take Control

Altman and the board were repeatedly clashing. Altman continued to consolidate his power, confident that Ilya would not back an attempt to fire him. But it was tense. It would be much better to have a board more clearly loyal to Altman, more on board with the commercial mission.

Then Altman saw an opportunity.

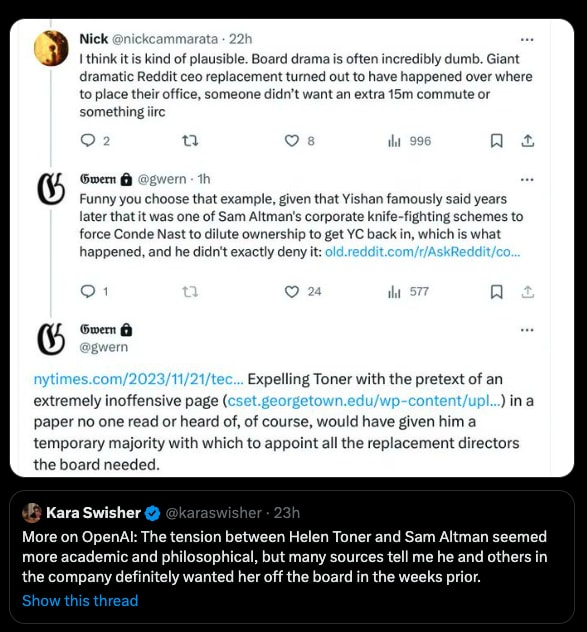

In October, board member Helen Toner, together with Andrew Imbrie and Owen Daniels, published the paper Decoding Intentions: Artificial Intelligence and Costly Signals.

The paper correctly points out that while OpenAI engages in costly signaling and takes steps to ensure safety, Anthropic does more costly signaling and takes more steps to ensure safety, and puts more emphasis on communicating this message. That is not something anyone could reasonably disagree with. The paper also notes that others have criticized OpenAI, and says OpenAI could and perhaps should do more. The biggest criticism in the paper is that it asserts that ChatGPT set off an arms race, with Anthropic’s Claude only following afterwards. This is very clearly true. OpenAI didn’t expect ChatGPT to take off like it did, but in practice ChatGPT definitely set off an arms race. To the extent it is a rebuke, it is stating facts.

However, the paper was sufficiently obscure that, if I saw it at all, I don’t remember it in the slightest. It is a trifle.

Altman strongly rebuked Helen Toner for the paper, according to the New York Times.

In the email, Mr. Altman said that he had reprimanded Ms. Toner for the paper and that it was dangerous to the company, particularly at a time, he added, when the Federal Trade Commission was investigating OpenAI over the data used to build its technology.

Ms. Toner defended it as an academic paper that analyzed the challenges that the public faces when trying to understand the intentions of the countries and companies developing A.I. But Mr. Altman disagreed.

“I did not feel we’re on the same page on the damage of all this,” he wrote in the email. “Any amount of criticism from a board member carries a lot of weight.”

Senior OpenAI leaders, including Mr. Sutskever, who is deeply concerned that A.I. could one day destroy humanity, later discussed whether Ms. Toner should be removed, a person involved in the conversations said.

From my perspective, even rebuking Toner here is quite bad. It is completely inconsistent with the nonprofit’s mission to not allow debate and disagreement and criticism. I do not agree with Altman’s view that Toner’s paper ‘carried a lot of weight,’ and I question whether Altman believed it either. But even if the paper did carry weight, we are not going to get through this crucial period if we cannot speak openly. Altman’s reference to the FTC investigation is a non-sequitur given the content of the paper as far as I can tell.

Sam Altman then attempted to use this (potentially manufactured) drama to get Toner removed from the board. He used a similar tactic at Reddit, a manufactured crisis to force others to give up power. Once Toner was gone, presumably Altman would have moved to reshape the rest of the board.

One Last Chance

The board had a choice.

If Ilya was willing to cooperate, the board could fire Altman, with the Thanksgiving break available to aid the transition, and hope for the best.

Alternatively, the board could choose once again not to fire Altman, watch as Altman finished taking control of OpenAI and turned it into a personal empire, and hope this turns out well for the world.

They chose to pull the trigger.

We do not know what the board knew, or what combination of factors ultimately drove their decision. The board made a strategic decision not to explain their reasoning or justifications in detail during this dispute.

What do we know?

The board felt it at least had many small data points saying it could not trust Altman, in combination with Altman’s known power-seeking moves elsewhere (e.g. what happened at Reddit), and also that Altman was taking many actions that the board might reasonably see as in direct conflict with the mission.

Why has the board failed to provide details on deception? Presumably because without one clear smoking gun, any explanation would be seen as weak sauce. All CEOs do some amount of manipulation and politics and withholding of information. When you give ten examples, people often judge on the strength of the weakest one rather than adding them up. Providing details might also burn bridges, raise legal concerns, and make reconciliation and business harder. There is still much we do not know about what we do not know.

What about concrete actions?

Altman was raising tens of billions from Saudis, to start a chip company to rival Nvidia, which was to produce its chips in the UAE, leveraging the fundraising for OpenAI in the process. For various reasons this is kind of a ‘wtf’ move.

Altman was also looking to start an AI hardware device company. In principle this seems good and fine, offering mundane utility, but for the CEO of OpenAI, who holds zero equity in it, the conflict is obvious.

Altman increasingly focused on shipping products the way you would if you were an exceptional tech startup founder, and hired and rebuilt the culture in that image. Anthropic visibly built a culture of concern about safety in a way that OpenAI did not.

Concerns about safety (at least in part) led to the Anthropic exodus after the board declined to remove Altman at that time. If you think the exit was justified, Altman wasn’t adhering to the charter. If you think it wasn’t, Altman created a rival and intensified arms race dynamics, which is a huge failure.

This article claims both OpenAI and Microsoft were central in lobbying to take any meaningful requirements for foundation models out of the EU’s AI Act. If I were a board member, I would see this as incompatible with the OpenAI charter.

Altman aggressively cut prices in ways that prioritized growth, made OpenAI much more dependent on Microsoft, and further fueled the boom in AI development. Other deals and arrangements with Microsoft deepened the dependence, while making a threatened move to Microsoft more credible.

He also offered a legal shield to users on copyright infringement, potentially endangering the company. It seems reasonable to assume he moved to expand OpenAI’s offerings on Dev Day in the face of safety concerns. Various attack vectors seem fully exposed.

And he reprimanded and moved to remove Helen Toner from the board for writing a standard academic paper exploring how to pursue AI safety. To me this is indeed a smoking gun, although I understand why they did not expect others to see it that way.

Botched Communications

From the perspective of the public, or of winning over hearts and minds inside or outside the company, the board utterly failed in its communications. Rather than explain, the board issued its statement:

Mr. Altman’s departure follows a deliberative review process by the board, which concluded that he was not consistently candid in his communications with the board, hindering its ability to exercise its responsibilities. The board no longer has confidence in his ability to continue leading OpenAI.

Then it went silent. The few times it offered any explanation at all, the examples were so terrible it was worse than staying silent.

As the previous section shows, the board had many reasonable explanations it could have given. Would that have mattered? Could they have won over enough of the employees for this to have turned out differently? We will never know.

Even Emmett Shear, the board’s third pick for CEO (after Nat Friedman and Alexandr Wang), couldn’t get a full explanation. His threat to walk away without one was a lot of what enabled the ultimate compromise on a new board and bringing Altman back.

By contrast, based on what we know, Altman played the communication and political games masterfully. Three times he coordinated the employees to his side in ways that did not commit anyone to anything. The message his team wanted to send about what was going on consistently was the one that got reported. He imposed several deadlines, the deadlines passed and no credibility was lost. He threatened joining Microsoft and taking all the employees, without ever agreeing to anything there either.

Really great show. Do you want your leader to have those skills? That depends.

The Negotiation

Note the symmetry. Both sides were credibly willing to blow up OpenAI rather than give board control, and with it ultimate control of OpenAI, to the other party. Both sides were willing to let Altman return as CEO only under the right conditions.

Altman moved to take control. Once the board pulled the trigger firing him in response, Altman had a choice on what to do next, even if we all knew what choice he would make. If Altman wanted OpenAI to thrive without him, he could have made that happen. Finding investors would not have been an issue for OpenAI, or for Altman’s new ventures whatever they might be.

Instead, as everyone rightly assumed he would, he chose to fight, clearly willing to destroy OpenAI if not put back in charge. He and the employees demanded that the board resign in an unconditional surrender, or else they would all move to Microsoft.

That is a very strong negotiating position.

You know what else is a very strong negotiating position? Believing that giving Altman full control over OpenAI would be a net negative for the company’s mission.

This was misrepresented to be ‘Helen Toner thinks that destroying OpenAI would accomplish its mission of building safe AGI.’ Not so. She was expressing, highly reasonably, the perspective that if the only other option was Altman with full board control, determined to use his status and position to blitz forward on AI on all fronts, not taking safety sufficiently seriously, that might well be worse for OpenAI’s mission than OpenAI ceasing to exist in its current form.

Thus, a standoff. A negotiation. Unless both the board and Sam Altman agree that OpenAI survives, OpenAI does not survive. They had to agree on a new governance framework. That means a new board.

Which in the end was Bret Taylor, Larry Summers and Adam D’Angelo.

What Now for OpenAI?

Emmett Shear is saying ‘mission accomplished’ and passing the baton, seeing this result as the least bad option, including for safety. This is strong evidence it was the least bad option.

Altman, Brockman and the employees are declaring victory. They are so back.

Mike Solana summarizes as ‘Altman knifed the board.’

This is not over.

Altman very much did not get an obviously controlled board.

The succession problem is everything.

What will the new board do? What full board will they select? What will they find when they investigate further?

These are three ‘adults in the room’ to be sure. But D’Angelo already voted to fire Altman once, and Summers is a well-known bullet-biter and is associated with Effective Altruism.

If you assume that Altman was always in the right, everyone knows it is his company to run as he wants to maximize profits, and any sane adult would side with him? Then you assume Bret Taylor and Larry Summers will conclude that as well.

If you do not assume that, if you assume OpenAI is a non-profit with a charter? If you see many of Altman’s actions and instincts as in conflict with that charter? If you think there might be a lot of real problems here, including things we do not know? If you think that this new board could lead to a new expanded board that could serve as a proper check on Altman, without the lack of gravitas and experience that plagued the previous board, and with a fresh start on employee relations?

If you think OpenAI could be a great thing for the world, or its end, depending on choices we make?

Then this is far from over.

83 comments


comment by aphyer · 2023-11-22T18:48:52.513Z

The board had a choice.

If Ilya was willing to cooperate, the board could fire Altman, with the Thanksgiving break available to aid the transition, and hope for the best.

Alternatively, the board could choose once again not to fire Altman, watch as Altman finished taking control of OpenAI and turned it into a personal empire, and hope this turns out well for the world.

They chose to pull the trigger.

I...really do not see how these were the only choices? Like, yes, ultimately my boss's power over me stems from his ability to fire me. But it would be very strange to say 'my boss and I disagreed, and he had to choose between firing me on the spot or letting me do whatever I wanted with no repercussions'?

Here are some things I can imagine the board doing. I don't know if some of these are things they wouldn't have had the power to do, or wouldn't have helped, but:

- Consolidating the board/attempting to reduce Altman's control over it. If Sam could try to get Helen removed from the board (even though he controlled only 2/6 directors?) could the 4-2 majority of other directors not do anything other than 'fire Sam as CEO'?

- Remove Sam from the board but leave him as CEO?

- Remove Greg from the board?

- Appoint some additional directors aligned with the board?

- Change the board's charter to have more members appointed in different ways?

- Publicly stating 'We stand behind Helen and think she has raised legitimate concerns about safety that OpenAI is currently not handling well. We ask the CEO to provide a roadmap by which OpenAI will catch up to Anthropic in safety by 2025.'

- Publicly stating 'We object to OpenAI's current commercial structure, in which the CEO is paid more for rushing ahead but not paid more for safety. We ask the CEO to restructure his compensation arrangements so that they do not incentivize danger.'

I am not a corporate politics expert! I am a programmer who hates people! But it seems to me that there are things you can do with a 4-2 board majority and the right to fire the CEO in between 'fire the CEO on the spot with no explanation given' and 'fire every board member who disagrees with the CEO and do whatever he wants'. It...sort of...sounds like you imagine that in two weeks' time Sam would have found a basilisk hack to mind-control the rest of the board and force them to do whatever he wanted? I do not see how that is the case? If a majority of the board is willing to fire him on the spot, it really doesn't seem that he's about to take over if not immediately fired.

↑ comment by ryan_b · 2023-11-22T19:23:17.337Z

I think the central confusion here is: why, in the face of someone explicitly trying to take over the board, would the rest of the board just keep that person around?

None of the things you suggested have any bearing whatsoever on whether Sam Altman would continue to try and take over the board. If he has no board position but is still the CEO, he can still do whatever he wants with the company, and also try to take over the board. If he is removed as CEO but remains on the board, he will still try to take over the board. Packing the board has no bearing on the things Sam can do to expand his influence there, it just means it takes longer. The problem the board had to solve was not the immediacy of Sam taking over, but the inevitability of it. The gambit with removing Helen Toner failed, but other gambits would follow.

Also notice that the 4-2 split is not a fixed faction: Ilya switched sides as soon as the employees revolted, putting us at 3-3, and it appears Adam D’Angelo was instrumental in the negotiation to bring Sam back. What looks at first like a 4-2 split and therefore safe was more like a 2-1-1-2 that briefly coalesced into a 4-2 split in response to Sam trying to make it a 1-1-1-3 split instead. Under those conditions, Sam would be able to do anything that wasn't so egregious it caused the other three to unify AND one of his team's seats to defect.

↑ comment by mishka · 2023-11-23T01:12:35.477Z

In principle, the 4 members of the board had an option which would look much better: to call a meeting of all 6 board members, and to say at that meeting, "hey, the 4 of us think we should remove Sam from the company and remove Greg from the board, let's discuss this matter before we take a vote: tell us why we should not do that".

That would be honorable, and would look honorable, and the public relation situation would look much better for them.

The reason they had not done that was, I believe, that they did not feel confident they could resist persuasion powers of Sam, that they thought he would have talked at least one of them out of it.

But then what they did looked very unreasonable from more than one viewpoint:

- Should you take a monumental decision like this, if your level of confidence in this decision is so low that you think you might be talked out of it on the spot?

- Should you destroy someone like this before letting this person defend himself?

They almost behaved as if Sam was already a hostile superintelligent AI who was trying to persuade them to let him out of the box, and who had superpowers of persuasion, and the only way to avoid the outcomes of letting him out of the box was to close one's ears and eyes and shut him down before he could say anything.

Perhaps this was close to how they actually felt...

↑ comment by ryan_b · 2023-11-23T11:55:49.911Z

I agree with this, and I am insatiably curious about what was behind their decisions about how to handle it.

But my initial reaction based on what we have seen is that it wouldn’t have worked, because Sam Altman comes to the meeting with a pre-rallied employee base and the backing of Microsoft. Since Ilya reversed on the employee revolt, I doubt he would have gone along with the plan when presented a split of OpenAI up front.

↑ comment by jefftk (jkaufman) · 2023-11-23T02:56:14.108Z

Speculating of course, but it reads to me like the four directors knew Altman was much better at politics and persuasion than they were. They briefly had a majority willing to kick him off, and while "Sam would have found a basilisk hack to mind-control the rest of the board" is phrased too magically to me I don't think it's that far off? This sort of dynamic feels familiar to me from playing games where one player is far better than the others at convincing people.

(And then because they were way outclassed in politics and persuasion they handled the aftermath of their decision poorly and Altman did an incredible job.)

↑ comment by Linch · 2023-11-23T04:38:07.490Z

In the last 4 days, they were probably running on no sleep (and less used to that/had less access to the relevant drugs than Altman and Brockman), and had approximately zero external advisors, while Altman seemed to be tapping into half of Silicon Valley and beyond for help/advice.

↑ comment by frontier64 · 2023-11-23T03:54:54.864Z

This comes from a fundamental misunderstanding of how OpenAI and most companies operate. The board is a check on power. In most companies the board has to approve high-level decisions, such as moving to a new office space or closing a new acquisition. But they have zero day-to-day control. If they tell the CEO to fire these 10 people and he doesn't do it, that's it. They can't do it themselves, they can't tell the CEO's underlings to do it. They have zero options besides getting a new CEO. OpenAI's board had even less control than this.

Tweeting "Altman is not following our directions and we don't want to fire him, but we really want him to start doing what we ask" is a surefire way to collapse your company and make you sound like a bunch of incompetent buffoons. It's admitting that you won't use the one tool that you actually do have. I'm certain the board threatened to fire Sam before this unless he made X changes. I'm certain Sam never made all of those X changes. Therefore they can either follow through on their threat or lose. Turns out following through on their threat was meaningless because Sam owns OpenAI both with tacit power and the corporate structure.

↑ comment by ChristianKl · 2023-11-23T04:42:48.356Z

I'm certain the board threatened to fire Sam before this unless he made X changes. I'm certain Sam never made all of those X changes.

From where do you get that certainty?

If they had made those threats, why didn't someone tell the New York Times journalists who were trying to understand what happened?

Why didn't they say so when they fired him? It's the kind of thing that's easy to say to justify firing him.

↑ comment by MikkW (mikkel-wilson) · 2023-11-25T09:51:50.447Z

The board's statement doesn't mention them having made such a request to Altman which was denied, that's a strong signal against things having played out that way.

↑ comment by HiddenPrior (SkinnyTy) · 2023-11-22T19:22:12.941Z

I feel like this is a good observation. I notice I am confused at their choices given the information provided.... So there is probably more information? Yes, it is possible that Toner and the former board just made a mistake, and thought they had more control over the situation than they really did? Or underestimated Altman's sway over the employees of the company?

The former board does not strike me as incompetent though. I don't think it was sheer folly that led them to pick this debacle as their best option.

Alternatively, they may have had information we don't that led them to believe that this was the least bad course of action.

↑ comment by Roland Pihlakas (roland-pihlakas) · 2023-11-24T01:30:38.128Z

The following is meant as a question to find out, not a statement of belief.

Nobody seems to have mentioned the possibility that initially they did not intend to fire Sam, but just to warn him or to give him a choice to restrain himself. Yet possibly he himself escalated it to firing or chose firing instead of complying with the restraint. He might have done that just in order to have all the consequences that have now taken place, giving him more power.

For example, people in power positions may escalate disagreements, because that is a territory they are more experienced with as compared to their opponents.

comment by Rebecca (bec-hawk) · 2023-11-22T20:49:21.663Z

Most of this is interesting, and useful, speculation, but it reads as a reporting of facts…

comment by David Hornbein · 2023-11-22T20:20:11.951Z

I really don't think you can justify putting this much trust in the NYT's narrative of events and motivations here. Like, yes, Toner did publish the paper, and probably Altman did send her an email about it. Then the NYT article tacitly implies but *doesn't explicitly say* this was the spark that set everything off, which is the sort of haha-it's-not-technically-lying that I expect from the NYT. This post depends on that implication being true.

↑ comment by 1a3orn · 2023-11-23T01:39:24.313Z

The Gell-Mann Amnesia effect seems pretty operative, given the first name on the relevant NYT article is the same guy who did some pretty bad reporting on Scott Alexander.

If you don't think the latter was a reliable summary of Scott's blog, there's not much reason to think that the former is a reliable summary of the OpenAI situation.

↑ comment by xpym · 2023-11-23T08:39:43.172Z

I'd say that, on conflict theory terms, NYT adequately described Scott. They correctly identified him as a contrarian willing to entertain, and maybe even hold, taboo opinions, and to have polite interactions with out-and-out witches. Of course, we may think it deplorable that the 'newspaper of record' considers such people deserving to be publicly named and shamed, but they provided reasonably accurate information to those sharing this point of view.

↑ comment by Rebecca (bec-hawk) · 2023-11-22T20:53:20.959Z

The assertion is that Sam sent the email reprimanding Helen to others at OpenAI, not to Helen herself, which is a fundamentally different move.

I can’t conceive of a situation in which the CEO of a non-profit trying to turn the other employees against the people responsible for that non-profit (ie the board) would be business-as-usual.

comment by Linch · 2023-11-24T09:14:42.740Z

Someone privately messaged me this whistleblowing channel for people to give their firsthand accounts of board members. I can't verify the veracity/security of the channel but I'm hoping that having an anonymous place to post concerns might lower the friction or costs involved in sharing true information about powerful people:

comment by jbash · 2023-11-22T21:56:48.001Z

Alternatively, the board could choose once again not to fire Altman, watch as Altman finished taking control of OpenAI and turned it into a personal empire, and hope this turns out well for the world.

I think it's pretty clear that Altman had already basically consolidated de facto control.

If you've arranged things so that 90+ percent of the staff will threaten to quit if you're thrown out against your will, and a major funding source will enable you to instantly rehire many or most of those people elsewhere, and you'll have access to almost every bit of the existing work, and you have massive influence with outside players the organization needs to work with, and your view of how the organization should run is the one more in line with those outside players' actual interests, and you have a big PR machine on standby, and you're better at this game than anybody else in the place, then the organization needs you more than you need it. You have the ability to destroy it if need be.

If it's also true that your swing board member is unwilling to destroy the organization[1], then you have control.

I read somewhere that like half the OpenAI staff, probably constituting the committed core of the "safety" faction, left in 2019-2020. That's probably when his control became effectively absolute. Maybe they could have undone that by expanding the board, but presumably he'd have fought expansion, too, if it had meant more directors who might go against him. Maybe there were some trickier moves they could have come up with, but at a minimum Altman was immune to direct confrontation.

The surprising thing is that the board members apparently didn't realize they'd lost control for a couple of years. I mean, I know they're not expert corporate power players (nor am I, for that matter), but that's a long time to stay ignorant of something like that.

In fact, if Altman's really everything he's cracked up to be, it's also surprising that he didn't realize Sutskever could be swayed to fire him. He could probably have prevented that just by getting him in a room and laying out what would happen if something like this were done. And since he's better at this than I am, he could also probably have found a way to prevent it without that kind of crude threat. It's actually a huge slip on his part that the whole thing broke out into public drama. A dangerous slip; he might have actually had to destroy OpenAI and rebuild elsewhere.

None of this should be taken to mean that I think that it's good that Altman has "won", by the way. I think OpenAI would be dangerous even with the other faction in control, and Altman's even more dangerous.

[1] The only reason Sutskever voted for the firing to begin with seems to be that he didn't realize that Altman could or would take OpenAI down with him (or, if you want to phrase it more charitably and politely, that Altman had overwhelmingly staffed it with people who shared his idea of how it should be run).

↑ comment by dr_s · 2023-11-23T08:29:43.253Z

I honestly think the Board should have just blown OpenAI up. The compromise is worthless: these conditions remain, and thus Sam Altman is in power. So at least have him go work for Microsoft; it likely won't be any worse, but the pretense is over. And yeah, they should have spoken more, and more openly, to at least give people some good ammo to defend their choices, though the endless cries of "BUT ALL THAT LOST VALUE" would have come anyway.

comment by Odd anon · 2023-11-22T22:09:49.859Z

Concerning. This isn't the first time I've seen a group fall into the pitfall of "wow, this guy is amazing at accumulating power for us, this is going great - oh whoops, now he holds absolute control and might do bad things with it".

Altman probably has good motivations, but even so, this is worrying. "One uses power by grasping it lightly. To grasp with too much force is to be taken over by power, thus becoming its victim" to quote the Bene Gesserit.

comment by ryan_b · 2023-11-22T18:54:00.378Z

Well gang, it looks like we have come to the part where we are struggling directly over the destiny of humanity. In addition to persuasion and incentives, we'll have to account for the explicit fights over control of the orgs.

Silver lining: it means we have critical mass for enough of humanity and enough wealth in play to die with our boots on, at least!

↑ comment by O O (o-o) · 2023-11-22T20:14:30.197Z

The last few days should show it's not enough to have power cemented in technicalities, board seats, or legal contracts. Power comes from gaining support of billionaires, journalists, and human capital. It's kind of crazy that Sam Altman essentially rewrote the rules, whether he was justified or not.

The problem is that the types of people who are good at gaining power tend to have values that are incompatible with EA. The real silver lining to me is that while it's clear Sam Altman is power-seeking, he's also probably a better option to have there than the rest of the people good at seeking power, who might not even entertain x-risk.

↑ comment by dr_s · 2023-11-23T08:25:28.842Z

Power comes from gaining support of billionaires, journalists, and human capital. It's kind of crazy that Sam Altman essentially rewrote the rules, whether he was justified or not.

Until stakes get so high that we go straight to the realm of violence (threatened, by the State or other actors, or enacted), yes, it does. Enough soft power becomes hard. Honestly, I can't figure out how anyone thought this setup would work, especially with Altman being as deft a manipulator as he seems to be. I've made the Senate vs. Caesar comparison before, but I'll reiterate it, because ultimately rules only matter until personal loyalty persuades enough people to ignore them.

↑ comment by ryan_b · 2023-11-22T20:54:03.708Z

I agree in the main, and I think it is worth emphasizing that power-seeking is a skillset, which is orthogonal to values; we should put it in the Dark Arts pile, and anyone involved in running an org should learn it at least enough to defend against it.

↑ comment by [deleted] · 2023-11-22T23:27:39.213Z

What would be your defense? Agents successful at seeking power have more power.

↑ comment by ryan_b · 2023-11-23T12:03:27.908Z

Power seeking mostly succeeds by the other agents not realizing what is going on, so it either takes them by surprise or they don’t even notice it happened until the power is exerted.

Yet power seeking is a symmetric behavior, and power is scarce. The defense is to compete for power against the other agent, and try to eliminate them if possible.

↑ comment by [deleted] · 2023-11-23T22:35:50.804Z

I agree. For power that comes from developing AI systems (money/reputation/military/hype), I was wondering where the symmetry is for those who wish to stop AI from being developed. The 'doomer' faction won't be benefitting from AI over time, and thus their relative power will grow weaker and weaker. Assuming that, at least initially, AI systems have utility value to humans, any treacherous turn by AI systems will come later, after they have initially provided large benefits.

With this corporate battle right now, Altman is going to announce whatever advances they have made, raise billions of dollars, and the next squabble will have the support of people that Altman has personally enriched. I heard a rumor it works out to be $3.6 million per employee with the next funding round, with a 2-year cliff, so $1.8 million/year on the line. This would be why almost all OpenAI employees stated they would leave over it. So it's a direct example of the general symmetry issue mentioned above. (Leaving would also pay: I suspect Microsoft would have offered more liquid RSUs and probably matched the OAI base, maybe with large signing bonuses. Not $1.8M, but good TC. Just me speculating; I don't know the offer details, and it is likely Microsoft hadn't decided.)

The only 'obvious' way I could see doomers gaining power with time would be if early AI systems cause mass murder events or damage similar to nuclear meltdowns. This would give them political support, and it would scale with AI system capability, as more powerful systems cause more and more deaths and more and more damage.

↑ comment by Thane Ruthenis · 2023-11-23T06:00:37.334Z

Off the top of my head: evading notice, running away (to a different jurisdiction/market, or in some even more abstract sense), appearing to be a poisoned meal (likely by actually being poisoned, such that eating you will be more harmful to their reputation or demand more of their focused attention than you're worth to them), or seeking the protection of dead-player institutions (who'd impose a steep-but-flat cost on you, and then won't actively try to take you over).

↑ comment by Boris Kashirin (boris-kashirin) · 2023-11-23T05:42:23.677Z

I think it is not even goals but means. When you have a big hammer, every problem looks like a nail; if you are good at talking, you start to think you can talk your way out of any problem.

comment by jacquesthibs (jacques-thibodeau) · 2023-11-22T23:32:43.775Z

Someone else reported that Sam seemingly was trying to get Helen off of the board weeks prior to the firing:

comment by trevor (TrevorWiesinger) · 2023-11-22T20:22:31.768Z

From Planecrash page 10:

On the first random page Keltham opened to, the author was saying what some 'Duke' (high-level Government official) was thinking while ordering the east gates to be sealed, which, like, what, how would the historian know what somebody was thinking, at best you get somebody else's autobiographical account of what they claim they were thinking, and then the writer is supposed to say that what was observed was the claim, and mark separately any inferences from the observation, because one distinguishes observations from inferences.

This post generally does well on this point (it was obviously written by a Bayesian; Zvi's coverage is generally superior), except for the claim "Altman started it". We don't know who started it, and most of the major players might not even know who started it. According to Alex A:

Smart people who have an objective of accumulating and keeping control—who are skilled at persuasion and manipulation —will often leave little trace of wrongdoing. They’re optimizing for alibis and plausible deniability. Being around them and trying to collaborate with them is frustrating. If you’re self-aware enough, you can recognize that your contributions are being twisted, that your voice is going unheard, and that critical information is being withheld from you, but it’s not easy. And when you try to bring up concerns, they are very good at convincing you that those concerns are actually your fault.

We do know that Microsoft is a giant startup-eating machine with incredible influence in the US government and military, and also globally (e.g. possibly better at planting OS backdoors than the NSA and undoubtedly irreplaceable in that process for most computers in the world), and has been planting roots into Altman himself for almost a year now.

Microsoft has >50 Altman-types for every Altman at OpenAI (I couldn't find any publicly available information about the correct number of executives at Microsoft). Investors are also savvy, and the number of consultants consulted is not knowable. A friend characterized this basically as "acquiring a ~$50B company for free".

The obvious outcome where the giant powerful tech company ultimately ends up on top is telling (although the gauche affirmation of allegiance to Microsoft in the wake of the conflict is a weak update since that's a reasonable thing for Microsoft to expect due to its vulnerability to FUD) because Microsoft is a Usual Suspect, even if purely internal conflict is generally a better explanation and somewhat more likely to be what happened here.

↑ comment by jbash · 2023-11-22T22:13:54.498Z

gauche affirmation of allegiance to Microsoft

I was actually surprised that the new board ended up with members who might reasonably be expected, under the right circumstances, to resist something Microsoft wanted. I wouldn't have been surprised if it had ended up all Microsoft employees and obvious Microsoft proxies.

Probably that was a concession in return for the old board agreeing to the whole thing. But it's also convenient for Altman. It doesn't matter if he pledges allegiance. The question is what actual leverage Microsoft has over him should he choose to do something Microsoft doesn't like. This makes a nonzero difference in his favor.

↑ comment by followthesilence · 2023-11-23T05:17:40.170Z

If Sam is as politically astute as he is made out to be, loading the board with blatant MSFT proxies would be bad optics and detract from his image. He just needs to be relatively sure they won't get in his way or try to coup him again.

↑ comment by Tristan Wegner (tristan-wegner) · 2023-11-23T12:07:21.509Z

The former board's only power was to approve or fire new board members and CEOs.

Pretty sure they only let Altman back as CEO under the condition of having a strong influence over the new board.

comment by titotal (lombertini) · 2023-11-22T18:59:29.224Z

If Ilya was willing to cooperate, the board could fire Altman, with the Thanksgiving break available to aid the transition, and hope for the best.

Alternatively, the board could choose once again not to fire Altman, watch as Altman finished taking control of OpenAI and turned it into a personal empire, and hope this turns out well for the world.

Could they not have also gone with option 3: fill the vacant board seats with sympathetic new members, thus thwarting Altman's power play internally?

↑ comment by quailia · 2023-11-24T15:44:28.216Z

The way I see it, Altman likely was not giving great board updates and may have been ignoring what they told him to do for months. Board removal was not a credible threat then, adding more board members would not do anything to change him.

comment by jacquesthibs (jacques-thibodeau) · 2023-11-22T23:30:13.942Z

Here's a video (consider listening to the full podcast for more context) by someone who was a red-teamer for GPT-4 and was removed as a volunteer for the project after informing a board member that (early) GPT-4 was pretty unsafe. It's hard to say what really happened and if Sam and co weren't candid with the board about safety issues regarding GPT-4, but I figured I'd share as another piece of evidence.

↑ comment by jam_brand · 2023-11-23T03:24:04.343Z

Here's a video

It's also written up on Cognitive Revolution's substack for those that prefer text.

comment by followthesilence · 2023-11-23T05:12:51.202Z

This is a great post, synthesizing a lot of recent developments and (I think) correctly identifying a lot of what's going on in real time, at least with the limited information we have to go off of. Just curious what evidence supports the idea of Summers being "bullet-biting" or associated with EA?

comment by Askwho · 2023-11-23T12:12:16.872Z

I've created an ElevenLabs AI narrated version of this post. I know there is already a voice option on this page, but I personally prefer the ElevenLabs output. https://www.patreon.com/posts/93392366?utm_campaign=postshare_creator

comment by Tristan Wegner (tristan-wegner) · 2023-11-23T08:38:35.742Z

Just in, 5h ago:

Some at OpenAI believe Q* (pronounced Q-Star) could be a breakthrough in the startup's search for what's known as artificial general intelligence (AGI), one of the people told Reuters.

Could relate to this Q* for Deep Learning heuristics:

https://x.com/McaleerStephen/status/1727524295377596645?s=20

comment by the gears to ascension (lahwran) · 2023-11-23T13:48:52.045Z

New way of putting it after the other thread got derailed:

re Larry Summers - my read of his wiki page and history of controversies is that he's a power player who keeps his cards close to his chest and is perfectly willing to wear political attire that implies he's friendly, but when it comes down to it, he's inclined towards not seeing all humans as deserving a fair shake; the core points of evidence for this concern are his association with Epstein and his comments about sending waste to low income countries. He's also said things about other things, but those became a distraction when I brought them up and I don't think they're material to the point: that I think you can't read his friendliness from what labels he gives himself, infer his intentions only from what policies he's advocated and implemented relative to counterfactual for who else could have filled the positions he's held.

I deleted my portion of the previous thread because it was unproductive and wasting my brain space, but dr_s's reply and Linch's reply get at the core of what I'm hoping gets communicated to the community, which I've attempted to restate here.

↑ comment by ChristianKl · 2023-11-23T15:04:18.202Z

his comments about sending waste to low income countries.

Wikipedia describes those as:

In December 1991, while at the World Bank, Summers signed a memo that was leaked to the press. Lant Pritchett has claimed authorship of the private memo, which both he and Summers say was intended as sarcasm.[19] The memo stated that "the economic logic behind dumping a load of toxic waste in the lowest wage country is impeccable and we should face up to that.[19] ... I've always thought that under-populated countries in Africa are vastly underpolluted."[20] According to Pritchett, the memo, as leaked, was doctored to remove context and intended irony, and was "a deliberate fraud and forgery to discredit Larry and the World Bank."[21][19]

Generally, judging people by what they said over three decades ago is not very useful. In this case, there seems to be a suggestion that it was a joke.

Hanging out with Epstein is bad, but it does not define a person who does lots of different things.

infer his intentions only from what policies he's advocated and implemented relative to counterfactual for who else could have filled the positions he's held.

So what did he advocate lately? Things like:

"I am certainly no left wing ideologue, but I think something wrong when taxpayers like me, well into the top .1 percent of income distribution, are getting a significant tax cut in a Democrats only tax bill as now looks likely to happen," wrote Summers.

"No rate increases below $10 million, no capital gains increases, no estate tax increases, no major reform of loopholes like carried interest and real estate exchanges but restoration of the state and local deduction explain it."

That he advocates closing the carried interest loophole, especially given that he's on the board of a VC firm, suggests that he does not see only his own economic self-interest as important.

comment by River (frank-bellamy) · 2023-11-23T00:24:33.061Z

From my perspective, even rebuking Toner here is quite bad. It is completely inconsistent with the nonprofit’s mission to not allow debate and disagreement and criticism.

I can't imagine any board, for-profit or non-profit, tolerating one of its members criticizing its organization in public. Toner had a very privileged position, she got to be in one of the few rooms where discussion of AI safety matters most, where decisions that actually matter get made. She got to make her criticisms and cast her votes there, where it mattered. That is hardly "not allow[ing] debate and disagreement and criticism". The cost of participating in debate and disagreement and criticism inside the board room is that she gave up the right to criticize the organization anywhere else. That's a very good trade for her, if she could have kept to her end of the deal.

↑ comment by habryka (habryka4) · 2023-11-23T00:28:13.149Z

Lightcone definitely tolerates (and usually encourages) criticism of itself by its members. Many other organizations do too! This is absolutely not in any way a universal norm.

↑ comment by River (frank-bellamy) · 2023-11-23T00:58:23.192Z

By members of the board of directors specifically?

↑ comment by habryka (habryka4) · 2023-11-23T01:13:01.197Z

I mean, yes, definitely (Zvi is on the board of our fiscal sponsor, and it would be crazy to me if we threatened remotely any consequences of him expressing concerns about us publicly, and my best guess is Zvi would move to fire me or step down pretty immediately if that happened).

↑ comment by River (frank-bellamy) · 2023-11-23T02:15:47.052Z

Interesting. I'm not sure exactly what you mean by "fiscal sponsor", and I don't really want to go down that road. My understanding of nonprofit governance norms is that if a board member has concerns about their organization (and they probably do - they have a shit ton more access and confidential information than most people, and no organization is perfect) then they can express those concerns privately to the rest of the board, to the executive director, to the staff. They are a board member, they have access to these people, and they can maintain a much better working relationship with these people and solve problems more effectively by addressing their concerns privately. If a board member thinks something is so dramatically wrong with their organization that they can't solve it privately, and the public needs to be alerted, my understanding of governance norms is that the board member should resign their board seat and then make their case publicly. Mostly this is a cached view, and I might not endorse it on deep reflection. But I think a lot of people involved in governance have this norm, so I don't think that Sam Altman's enforcement of this norm against Toner is particularly indicative of anything about Sam.

↑ comment by followthesilence · 2023-11-23T04:59:54.371Z

Like many I have no idea what's happening behind the scenes, so this is pure conjecture, but one can imagine a world in which Toner "addressed concerns privately" but those concerns fell on deaf ears. At that point, it doesn't seem like "resigning board seat and making case publicly" is the appropriate course of action, whether or not that is a "nonprofit governance norm". I would think your role as a board member, particularly in the unique case of OpenAI, is to honor the nonprofit's mission. If you have a rogue CEO who seems bent on pursuing power, status, and profits for your biggest investor (again, purely hypothetical without knowing what's going on here), and those pursuits are contra the board's stated mission, resigning your post and expressing concerns publicly when you no longer have direct power seems suboptimal. Seems to presume the board should have no say whether the CEO is doing their job correctly when, in this case, that seems to be the only role of the board.

↑ comment by River (frank-bellamy) · 2023-11-23T17:09:05.256Z

Oh, I agree that utilitarian considerations, particularly in the case of an existential threat, might warrant breaking a norm. I'm not saying Toner did anything wrong in any objective sense, I don't have a very strong view about that. I'm just trying to question Zvi's argument that Sam and OpenAI did something unusually bad in the way they responded to Toner's choice. It may be the case that Toner did the right and honorable thing given the position she was in and the information she had, and also that Sam and OpenAI did the normal and boring thing in response to that.

You do seem to be equivocating somewhat between board members (who have no formal authority in an organization) and the board itself (which has the ultimate authority in an organization). To say that a dissenting board member should resign before speaking out publicly is very different from saying that the board itself should not act when it (meaning the majority of its members) believes there is a problem. As I am reading the events here, Toner published her article before the board decided that there was something wrong and that action needed to be taken. I think everyone agrees that when the board concludes that something is wrong, it should act.

↑ comment by jefftk (jkaufman) · 2023-11-23T02:49:27.574Z

I'm in the process of joining a non-profit board, and one of the things I confirmed with them was that they were ok with me continuing to say critical things about them publicly as I felt needed.

↑ comment by Radford Neal · 2023-11-24T15:59:54.591Z

On the contrary, I think there is no norm against board members criticizing corporate direction.

I think it is accepted that a member of the board of a for-profit corporation might publicly say that they think the corporation's X division should be shut down, in order to concentrate investment in the Y division, since they think the future market for Y will be greater than for X, even though the rest of the board disagrees. This might be done to get shareholders on-side for this change of direction.

For a non-profit, criticism regarding whether the corporation is fulfilling its mandate is similarly acceptable. The idea that board members should resign if they think the corporation is not abiding by its mission is ridiculous - that would just lead to the corporation departing even more from its mission.

Compare with members of a legislative body. Legislators routinely say they disagree with the majority of the body, and nobody thinks the right move if they are on the losing side of a vote is to resign.

And a member of the military who believes that they have been ordered to commit a war crime is not supposed to resign in protest (assuming that is even possible), allowing the crime to be committed. They are supposed to disobey the order.

↑ comment by AlexMennen · 2023-11-23T23:19:04.849Z

If board members have an obligation not to criticize their organization in an academic paper, then they should also have an obligation not to discuss anything related to their organization in an academic paper. The ability to be honest is important, and if a researcher can't say anything critical about an organization, then non-critical things they say about it lose credibility.

↑ comment by River (frank-bellamy) · 2023-11-24T11:53:08.779Z

"anything related to", depending how it's interpreted, might be overly broad, but something like this seems like a necessary implication, yes. Is that a bad thing?

↑ comment by dr_s · 2023-11-23T08:32:01.003Z

I can't imagine any board, for-profit or non-profit, tolerating one of its members criticizing its organization in public.

This is only evidence for how insane the practices of our civilization are, requiring that those who most have the need and the ability to scrutinise the power of a corporation do so the least. OpenAI was supposedly trying to swim against the current, but alas, it just became another example of the regular sort of company.

↑ comment by River (frank-bellamy) · 2023-11-23T16:55:06.965Z

requiring that those who most have the need and the ability to scrutinise the power of a corporation do so the least.

I have no idea how you got that from what I said. The view of governance I am presenting is that the board should scrutinize the corporation, but behind closed doors, not out in public. Again, I'm not entirely confident that I agree with this view, but I do think it is normal for people involved in governance and therefore doesn't indicate much about Altman or OpenAI one way or the other.

comment by Gunnar_Zarncke · 2023-12-10T00:17:31.097Z

It is interesting to read the Common misconceptions about OpenAI one year later.

comment by Martin Vlach (martin-vlach) · 2023-11-24T12:47:39.321Z

what happened at Reddit

Could there be any link? From a little research, I have only found that Steve Huffman praised Altman's value to the Reddit board.

comment by Burny · 2023-11-22T19:21:37.501Z

Merging with Anthropic may have been a better outcome

↑ comment by the gears to ascension (lahwran) · 2023-11-23T00:42:48.138Z

it's unlikely, even had amodei agreed in principle, that this could have been legally possible.

↑ comment by HiddenPrior (SkinnyTy) · 2023-11-22T19:25:13.266Z

I believe that power rested in the hands of the CEO the board selected; the board itself does not have that kind of power, and there may be other reasons we are not aware of that led them to decide against that possibility.

↑ comment by orthonormal · 2023-11-22T20:38:35.208Z

Prior to hiring Shear, the board offered a merger to Dario Amodei, with Dario to lead the merged entity. Dario rejected the offer.

comment by Review Bot · 2024-07-06T07:18:24.589Z

The LessWrong Review runs every year to select the posts that have most stood the test of time. This post is not yet eligible for review, but will be at the end of 2024. The top fifty or so posts are featured prominently on the site throughout the year.

Hopefully, the review is better than karma at judging enduring value. If we have accurate prediction markets on the review results, maybe we can have better incentives on LessWrong today. Will this post make the top fifty?

comment by the gears to ascension (lahwran) · 2023-11-22T23:44:39.273Z

↑ comment by Linch · 2023-11-23T00:12:49.037Z

Besides his most well-known controversial comments re: women, at least according to my read of his Wikipedia page, Summers has a poor track record re: being able to identify and oust sketchy people specifically.

↑ comment by the gears to ascension (lahwran) · 2023-11-23T00:42:13.050Z

↑ comment by habryka (habryka4) · 2023-11-23T00:46:57.282Z

I don't see any relevant quote in the thing you linked. It says the following, which maybe is what you are referring to:

Later, at Harvard, Summers gave a speech suggesting that when it comes to the individuals with the greatest aptitude in science, men could vastly outnumber women.

These topics have been discussed at length on LW and adjacent spaces. Yes, probably men have higher variance among many traits than women, causing them to be a larger fraction of almost any tail population. No, this has basically nothing to do with moral worth or anything you would use an unqualified term "inferior" for.

↑ comment by dr_s · 2023-11-23T08:53:04.699Z

I never got the sense of this being settled science (of course, given how controversial the claim is, it would be hard for it to be settled for good), but even besides that, the question is: what does one do with that information?

Let's put it in LW language: I think that a good anti-discrimination policy might indeed be "if you have to judge a human's abilities in a given domain (e.g. for hiring), precommit to assuming a Bayesian prior of total ignorance about those abilities, regardless of what any external information might suggest to you, and update only on their demonstrated skills." This essentially means that we shift the cognitive burden of updating onto the judge rather than the judged (who would otherwise have to fight a disadvantageous prior). This seems quite sensible IMO, as the judge usually has more resources to spare anyway. It centers human opportunity over maximal efficiency.

Conversely, someone who suggests that "the economic logic behind dumping a load of toxic waste in the lowest-wage country is impeccable" seems already to think that economics are mainly about maximal efficiency, and any concerns for human well being are at best tacked on. This is not a good ideological fit for OpenAI's mission! Unless you think the economy is and ought to be only human well-being's bitch, so to speak, you have no business anywhere near building AGI.

↑ comment by ChristianKl · 2023-11-23T18:25:17.392Z

I never got the sense of this being settled science (of course, given how controversial the claim is, it would be hard for it to be settled for good), but even besides that, the question is: what does one do with that information?

He did not present it as settled science but as one of three hypotheses for why women may have been underrepresented in tenured positions in science and engineering at top universities and research institutions. The key implication of the hypothesis being true would be that having quotas for a certain amount of women in tenure positions is not meritocratic.

Conversely, someone who suggests that "the economic logic behind dumping a load of toxic waste in the lowest-wage country is impeccable" seems already to think that economics are mainly about maximal efficiency, and any concerns for human well being are at best tacked on.

His position seems to be that the sentence was ironic. The word "impeccable" usually does not appear in serious academic or policy writing. The memo seems to be in response to a report that suggested that free trade will produce environmental benefits in developing nations. It was a way to make fun of a PR lie.

It's actually related to what Zvi talked about as bullet biting. If you want to advocate the policies of the World Bank in 1991 on free trade, it makes sense to accept that this comes with negative environmental effects in some third-world countries.

↑ comment by dr_s · 2023-11-23T18:39:01.238Z

Hm. I'd need to read the memo to form my own opinion on whether that holds. It could be a "Modest Proposal" thing but irony is also a fairly common excuse used to walk back the occasional stupid statement.

↑ comment by ChristianKl · 2023-11-24T03:45:41.308Z

I'd need to read the memo to form my own opinion on whether that holds.

It seems generally bad form to criticize people for things without actually reading what they wrote.

Just reading a text without trying to understand the context in which the text exists is also not a good way to understand whether a person made a mistake.

I think what you wrote here is likely more morally problematic than what Summers did 30 years ago. Do you think that, decades from now, whenever someone thinks about your merits as a person, someone should bring up that you are a person who likes to criticize people for what they said without reading what they said?

↑ comment by dr_s · 2023-11-24T07:25:11.027Z

While judgement can vary, I think this is about more than just judging a person morally. I don't think what Summers said, even in the most uncharitable reading, should disqualify him from most jobs. I do think, though, that his statements might disqualify him, or at least make him a worse choice, for something like the OpenAI board, because that role comes with ideological requirements.

EDIT: so the best source I've found for the excerpt is https://en.m.wikipedia.org/wiki/Summers_memo. I think it's nothing particularly surprising, and it's 30 years old, but rather than ironic, it sounds to me like it's using this as an example of things that would look outrageous but are equivalent to other things we do, which don't look quite as bad because of different vibes. I don't know that it reflects badly on his character; it's far too scant evidence to decide either way. But I do think it updates me slightly towards him being the kind of economist I wouldn't much like to see potentially in charge of AGI, and again, this is because my requirements here are strict. If you treat AGI with the same hands-off approach we usually take to normal economic matters, you almost assuredly get a terrible world.

↑ comment by ChristianKl · 2023-11-24T16:55:53.876Z

That seems to be the publicly available excerpt. The Harvard Magazine article I linked above discusses the context of that writing and explains that it's part of a longer seven-page document.

Summers seems to have been heavily into deregulation three decades ago. More recently, he seems to have been supportive of minimum wage increases and higher taxes on the rich.

I do think though that they might disqualify him, or at least make him a worse choice, for something like the OpenAI board, because that comes with ideological requirements.

While I would prefer people who are clearly committed to adding a lot of regulation for AI, it seems to me that part of what Sam Altman wanted was a board where people who can clearly be counted on to vote that way don't have a majority.

Larry Summers seems to be a smart, independent thinker whose votes are not easy to predict in advance, and that made him a good board candidate on whom both sides could agree.

Having him on the board could also be useful for lobbying for the AI safety regulation that OpenAI wants.

↑ comment by the gears to ascension (lahwran) · 2023-11-23T04:48:47.464Z

↑ comment by RobertM (T3t) · 2023-11-23T05:42:26.604Z

On the question of aptitude for science, Summers said this: "It does appear that on many, many different human attributes -- height, weight, propensity for criminality, overall IQ, mathematical ability, scientific ability -- there is relatively clear evidence that whatever the difference in means -- which can be debated -- there is a difference in the standard deviation, and variability of a male and a female population. And that is true with respect to attributes that are and are not plausibly, culturally determined. If one supposes, as I think is reasonable, that if one is talking about physicists at a top 25 research university, one is not talking about people who are two standard deviations above the mean. And perhaps it's not even talking about somebody who is three standard deviations above the mean. But it's talking about people who are three and a half, four standard deviations above the mean in the one in 5,000, one in 10,000 class. Even small differences in the standard deviation will translate into very large differences in the available pool substantially out."

Source (the article linked to in the Axios article you cite)

Putting that aside, I don't see what part of Zvi's claim you think is taking things further than they deserve to be taken. It seems indisputably true that Summers is both a well-known bullet-biter[1], and also that he has had some associations with Effective Altruism[2]. That is approximately the extent of Zvi's claims re: Summers. I don't see anything that Zvi wrote as implying some sort of broader endorsement of Summers' character or judgment.

[1] See above.

[2] https://harvardundergradea.org/podcast/2018/5/19/larry-summers-on-his-career-lessons-and-effective-altruism

↑ comment by the gears to ascension (lahwran) · 2023-11-23T05:43:44.745Z

↑ comment by ChristianKl · 2023-11-23T06:43:30.501Z

If you disagree with something someone said, don't include words that suggest that he said things he didn't say. Don't make false claims.

Don't use links to other people's opinions about what he said as sources. Instead, link to his actual statements and quote the passages you found offensive, or give a factual description of what he actually said.

Sorry I said he thinks women suck at life the wrong way? Gotta say I'm disappointed that you're just filing this under "well, technically women do have less variance". That seems ... likely to help paper over the likely extent of threat that can be inferred from his having used a large platform to announce this thing,

Wikipedia describes the platform in which he made the statements as "In January 2005, at a Conference on Diversifying the Science & Engineering Workforce sponsored by the National Bureau of Economic Research, Summers sparked controversy with his discussion of why women may have been underrepresented "in tenured positions in science and engineering at top universities and research institutions". The conference was designed to be off-the-record so that participants could speak candidly without fear of public misunderstanding or disclosure later."

There's no reason to translate "we might have less women at top positions because of less variance in women" in such a context into "women suck at life".

I'm saying I believe he believes it, based on his pattern of behavior surrounding when and how he made the claim, and the other things he's said, and his political associations

His strongest political affiliations seem to be around holding positions in the treasury under Clinton and then being Director of the National Economic Council under Obama.

Suggesting that being associated with either of those Democratic administrations means that someone has to believe that "women suck at life" is strange.