Comments

When I see the question, I know I am on LW. That lets me deduce that the "arcane runes" part is not important, but an LLM doesn't have this context. Maybe it sounds like a crackpot/astrology question to it?

e^3 is ~20, so for large n you get a ~95% chance of success by making 3n attempts.
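A quick numeric check of the e^3 ≈ 20 argument, as a minimal sketch. It assumes each attempt independently succeeds with probability 1/n (the original question isn't quoted here, so that setup is an assumption). The chance of failing all 3n attempts is (1 - 1/n)^(3n), which approaches e^-3 ≈ 1/20 as n grows.

```python
import math


def success_probability(n: int, attempts: int) -> float:
    """Chance of at least one success in `attempts` independent tries,
    each succeeding with probability 1/n (assumed setup)."""
    return 1 - (1 - 1 / n) ** attempts


if __name__ == "__main__":
    for n in (10, 100, 10_000):
        print(n, round(success_probability(n, 3 * n), 4))
    print("limit:", round(1 - math.exp(-3), 4))
```

For finite n the success chance is slightly above the limit, because (1 - 1/n)^n is always a bit below 1/e for n ≥ 2.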

Thinking about responsible gambling: shouldn't something like an up-front, long-term commitment solve a lot of problems? You have to decide right away and lock up the money you are going to spend this month, which separates the decision from the impulse to spend.

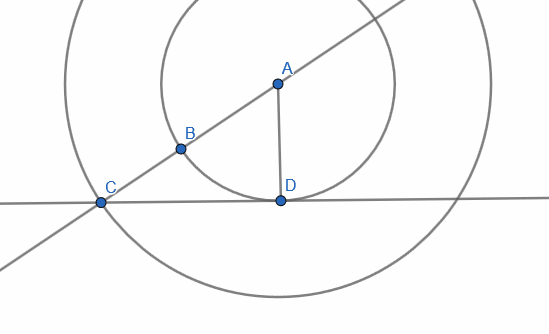

I tried to derive it, and it turned out to be easy: BC is the wheel pair, CD is the surface, and the slow medium is above. AC/Vfast = AB/Vslow, and at the critical angle D touches the small circle (the inner wheel is on the verge of getting out of the medium), so ACD is a right triangle. Then AC*sin(ACD) = AD, and AD is the same as AB, so sin(ACD) = AB/AC = Vslow/Vfast. Checking Wikipedia, it is the same angle (BC here is the wavefront, so the velocity vector is normal to it). Honestly, I am a bit surprised this analogy works so well.
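The same derivation written out as equations, using the figure's labels and the right angle at D described above (a restatement of the comment's steps, nothing new assumed):

```latex
\begin{aligned}
\frac{AC}{v_{\text{fast}}} &= \frac{AB}{v_{\text{slow}}}
  &&\Rightarrow\quad \frac{AB}{AC} = \frac{v_{\text{slow}}}{v_{\text{fast}}} \\
AC \sin\angle ACD &= AD = AB
  &&\Rightarrow\quad \sin\angle ACD = \frac{AB}{AC} = \frac{v_{\text{slow}}}{v_{\text{fast}}}
\end{aligned}
```

With n = c/v, this is the textbook critical-angle condition sin θ_c = n_2/n_1, where n_1 is the index of the slow (denser) medium and n_2 that of the fast one.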

I read about a better analogy a long time ago: use two wheels on an axle instead of a single ball, and then refraction comes out naturally. Also, I think that instead of a difference in friction it is better to use a difference in elevation, so things slow down when they go into an area of higher elevation and speed back up going down.

It is defecting against cooperate-bot.

From an ASI's standpoint, humans are a type of rock: not capable of negotiating.

This experience-based primitivity also means inter-temporal self identification only goes one way. Since there is no access to subjective experience from the future, I cannot directly identify which/who would be my future self. I can only say which person is me in the past, as I have the memory of experiencing from its perspective.

While in practice there is a large difference between recalling a past event and anticipating a future one, on the conceptual level there is no meaningful difference. You don't have direct access to past events; memory is just an especially simple and reliable case of inference.

It would be funny if the hurdle presented by tokenization is somehow responsible for LLMs being smarter than expected :) Sounds exactly like the kind of curveball reality likes to throw at us from time to time :)

But to regard these as a series of isolated accidents is, I think, not warranted by the number of events which they all seem to point in mysteriously a similar direction. My own sense is more that there are strange and immense and terrible forces behind the Poverty Equilibrium.

Reminded me of The Hero With A Thousand Chances

Maybe societies with less poverty are less competitive.

When I read about automated programming for robotics a few months ago, I wondered if it could be applied to ML. If it can, then there is a good chance of seeing a paper about a modification of ReLU right about now. It seemed like a possibly similar kind of research, a case where perseverance is more important than intelligence. So at first I freaked out a bit at the headline, but this is not it, right?

An important fact about a rocket is that it provides some fixed amount of change in velocity (delta-v). I think your observation of "how long strong gravity slows me", combined with thinking in terms of a delta-v budget and where it is best spent, gave me an intuitive understanding of the Oberth effect. Analysing a linear trajectory instead of an elliptic one also helps.
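A minimal sketch of the linear-trajectory version. It assumes a probe that falls straight in from rest at infinity (so it arrives at depth moving at the local escape speed v_esc), makes an instantaneous prograde burn of dv, and coasts straight back out; propellant mass and burn duration are ignored. Energy conservation gives a leftover speed at infinity of sqrt((v_esc + dv)^2 - v_esc^2) = sqrt(2*v_esc*dv + dv^2), versus just dv for the same burn made far from the planet.

```python
import math


def speed_at_infinity(v_esc: float, dv: float) -> float:
    """Leftover speed far from the planet after a burn of dv made at depth.

    Assumes a purely radial (linear) trajectory: the probe falls from rest at
    infinity, reaches the burn point moving at the local escape speed v_esc,
    burns prograde, and coasts back out. Energy conservation gives
    v_inf^2 = (v_esc + dv)^2 - v_esc^2.
    """
    v_after_burn = v_esc + dv
    return math.sqrt(v_after_burn**2 - v_esc**2)


if __name__ == "__main__":
    dv = 1.0  # the same delta-v budget in every case (arbitrary units)
    for v_esc in (0.0, 10.0, 100.0):
        print(f"v_esc={v_esc:>5}: v_inf={speed_at_infinity(v_esc, dv):.3f}")
```

The deeper the burn, the more leftover speed the same dv buys. In the "how long strong gravity slows me" framing: after the burn the probe moves faster through the strong-gravity region, spends less time there, and so gravity takes less of the gain back.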

Not sure if this helps, but:

A program that generates "exactly" the sequence HTHTT

Alongside this program there are programs that generate HHHHH, HHHHT, HHHTT, etc.: 2^5 such programs. Before seeing the evidence, HTHTT is just one of them, not standing out in any way. (But I don't know specifically how Solomonoff induction accounts for it, if that is your question.)
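A toy version of the counting. It assumes each "print this exact 5-flip literal" program has the same length and therefore the same prior weight. This is a simplification: real Solomonoff induction weights programs by 2^-length, so shorter non-literal programs (like "print H five times") give some sequences extra weight.

```python
from fractions import Fraction
from itertools import product

# Every 5-flip sequence, each standing for one hypothetical "print this literal" program.
sequences = ["".join(flips) for flips in product("HT", repeat=5)]

# Assumption: all literal programs have equal length, so they get equal prior weight.
prior = {seq: Fraction(1, len(sequences)) for seq in sequences}

if __name__ == "__main__":
    print(len(sequences))                   # 32
    print(prior["HTHTT"], prior["HHHHH"])   # 1/32 1/32
```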

Computer viruses belong to the first category, while biological weapons and gain-of-function research belong to the second.

I think it is not even about goals but about means. When you have a big hammer, every problem looks like a nail; if you are good at talking, you start to think you can talk your way out of any problem.

I'd add that correctness often is security: a job poorly done is an opportunity for a hacker to subvert your system and turn your poor job into a great job for himself.

Have you played something like Slay the Spire? Or Mechabellum, which is popular right now? Deck builders don't require coordination at all, but they demand understanding tradeoffs and managing risks. If anything, those skills are neglected parts of intelligence. And how high is the barrier to entry for something like Super Auto Pets?

I've heard that a box fan works best if placed some distance from the window, not in the window.

I remember reading about a zoologist couple who tried to raise their child together with a baby gorilla. The gorilla's development stopped at a certain age, and that stalled the child's development, so they had to be separated.

Example 1: Timmie gets cookies, and I am glad he did even if I am dead and can't see him.

Example 2: Timmie gets sucked into a black hole, but I don't care, as I can't see him.

It is raining today, and asking "why?" is a mistake because I am already experiencing rain right now, and counterfactual me isn't me?

It seems to me the explanation is confused in such a way as to obscure the decision-making process of which questions are useful to consider.

I would go so far as to say that the vast majority of potential production, and potential value, gets sacrificed on this alter, once one includes opportunities missed.

Altar? Same here:

A ton of its potential usefulness was sacrificed on the alter of its short-term outer alignment.

If some process in my brain is conscious despite not being part of my consciousness, it matters too! While I don't expect it to be the case, I think there is a bias against even considering such a possibility.

Not long ago I often heard that AI is "beyond the horizon". I agreed with that while recognising how close the horizon had become. The technological horizon isn't a fixed time into the future, and it is not just a property of (~unchanging) people but also of the available tech.

Now I hear "it takes a lot of time and effort", but again, it does not have to mean "a lot of time and effort in my subjective view". It can take a lot of time and effort and at the same time be done in the blink of an eye: my eye, not the eye of whoever is doing it. A lot of time and effort doesn't have to subjectively feel like "a lot of time and effort" to me.

I got Mega Shark Versus Giant Octopus vibes from this, but your prompts were much more sane, which made things feel inconsistent. Still, I somewhat enjoyed it.

Hyperloop? I am not sold on his talent being "find good things to do" as opposed to "successfully do things". And the second has a lot to do with energy/drive, not only intellect. Hence I expect his intelligence to be overestimated. But I agree with your estimate, which is not what I expected?

It is important to remember that our ultimate goal is survival. If someone builds a system that may not meet the strict definition of AGI but still poses a significant threat to us, then the terminology itself becomes less relevant. In such cases, employing a 'taboo-your-words' approach can be beneficial.

Now let's think of intelligence as "pattern recognition". It is not all that intelligence is, but it is a big chunk of it, and it is a concrete thing we can point to and reason about, while many other bits are not even known.[1]

In that case, GI is general/meta/deep pattern recognition: patterns about patterns, and patterns that apply to many practical cases, something like that.

An obvious thing to note here: the ability to solve problems can be based on a large number of shallow patterns or a small number of deep patterns. We are pretty sure that a significant part of LLM capabilities is the shallow-pattern case, but there are hints of at least some deep patterns appearing.

And I think that points to some answers: LLMs appear intelligent through the sheer number of shallow patterns. But for a system to be dangerous, the number of required shallow patterns is so large that it is essentially impossible to achieve. So we can meaningfully say it is not dangerous, it is not AGI... Except, as mentioned earlier, there seem to be some deep patterns emerging, and we don't know how many. As for the pre-home-computer-era researchers, I bet they could not imagine the amount of shallow patterns that can be put into a system.

I hope this provided at least some idea of how to approach some of your questions, but of course in reality it is much more complicated: there is no sharp distinction between shallow and deep patterns, and there are other aspects of intelligence. For me at least, it is surprising that it is possible to get GPT-3.5 with seemingly relatively shallow patterns, so I myself "could not imagine the amount of shallow patterns that can be put into a system".

[1]

I tried ChatGPT on this paragraph; I liked the result, but it felt too long:

Intelligence indeed encompasses more than just pattern recognition, but it is a significant component that we can readily identify and discuss. Pattern recognition involves the ability to identify and understand recurring structures, relationships, and regularities within data or information. By recognizing patterns, we can make predictions, draw conclusions, and solve problems.

While there are other aspects of intelligence beyond pattern recognition, such as creativity, critical thinking, and adaptability, they might be more challenging to define precisely. Pattern recognition provides a tangible starting point for reasoning about intelligence.

If we consider problem-solving as a defining characteristic of intelligence, it aligns well with pattern recognition. Problem-solving often requires identifying patterns within the problem space, recognizing similarities to previously encountered problems, and applying appropriate strategies and solutions.

However, it's important to acknowledge that intelligence is a complex and multifaceted concept, and there are still many unknowns about its nature and mechanisms. Exploring various dimensions of intelligence beyond pattern recognition can contribute to a more comprehensive understanding.

So I'm not super confident in this, but: I don't have a model which suggests descaling a kettle (similar to mine) will have particularly noticeable effects. If it gets sufficiently scaled it'll self-destruct, but I expect this to be very rare. (I don't have a model for how much scaling it takes to reach that point, it's just that I don't remember ever hearing a friend, family member or reddit commenter say they destroyed their kettle by not descaling.)

Scale falls off by itself, so it is not really possible for a kettle to self-destruct under normal circumstances. My 30 l water heater took more than 5 l of scale to self-destruct; there is just no way not to notice spalled scale on such a scale.

I read a bit about the interaction between gas, ethanol, and water. Fascinating!

Bullshit. I don't believe it. Gas does not turn into water. I am sorry, but somebody "borrowing" your gas and returning water is a more likely explanation. (No special knowledge here, tell me I am wrong!)

Here he touched on this (the "Large language models" timestamp in the video description), and maybe somewhere else in the video, but I can't seem to find it. It is much better to get it directly from him, but it is 4 h long, so...

My attempt at a summary, with a bit of inference, so take it with a grain of salt:

There is some "core" of intelligence which he expected to be relatively hard to find by experimentation (but more components than he expected have already been found by experimentation/gradient descent, so this is partially wrong, and he is afraid it may be completely wrong).

He was thinking that without the full "core", intelligence is non-functional; GPT-4 falsified this. It is more functional than he expected, enough to produce a mess that can be perceived as human-level but isn't really. Probably our thinking of GPT-4 as being on human level is a bias? So GPT-4 has impressive pieces, but they don't work in unison with each other?

This is what my (mis)interpretation of his words looks like; I am least certain about the last parts. (I wonder: can it be that GPT-4 already has all the "core" components but is just stupid, barely intelligent enough to look impressive because of training?)

That is a novel (and, in my opinion, potentially important/scary) capability of GPT-4. You can look at A_Posthuman's comment below for details. I do expect it to work on chess, and I would be interested if proven wrong. You mentioned ChatGPT, but it can't do reflection at a usable level. To be fair, I don't know if GPT-4's capabilities are at a useful level (or only a tweak away) right now, and how far they can be pushed if they are (as in, whether it can self-improve to ASI), but for solving the "curse" problem even weak reflection capabilities should suffice.

then have humans write the corrections to the continuations

It doesn't have to be humans any more; GPT-4 can do this to itself.

I am not an AI researcher. (I have a feeling you may have mistaken me for one?)

I don't expect much gain after the first iteration of reflection (I don't know if more was attempted). When calling it recursive, I was referring to AlphaGo Zero-style distillation and amplification: we have a model producing Q->A, reflect on A to get A', and update the model in the direction of Q->A'. We have now got to the state where A' is better than A; before that, if tried, the result would probably have been distilled stupidity instead of distilled intelligence.

Such a process, in my opinion, is a significant step in the direction of "capable of building new, better AI", worth explicitly noticing before we take such a capability for granted.
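A toy sketch of the Q->A, reflect-to-A', update-toward-Q->A' loop, only to make its structure concrete. Everything here is hypothetical: the "model" is just a lookup table of answers, "reflection" is stood in for by one Newton step toward sqrt(q) (assumed to be an improvement, which is exactly the condition that matters), and "distillation" moves the stored answer toward A'. A real setup would train a network rather than overwrite table entries.

```python
def reflect(q: float, a: float) -> float:
    """Hypothetical reflection step: take answer A and return a revised A'.

    Stand-in assumption: one Newton step toward sqrt(q). From any positive
    starting answer, repeating it converges to sqrt(q).
    """
    return 0.5 * (a + q / a)


def distill(model: dict[float, float], q: float, a_prime: float, lr: float = 1.0) -> None:
    """Hypothetical distillation step: move the model's answer to q toward A'."""
    model[q] += lr * (a_prime - model[q])


def amplify_and_distill(
    model: dict[float, float], questions: list[float], rounds: int
) -> dict[float, float]:
    for _ in range(rounds):
        for q in questions:
            a = model[q]                # Q -> A: what the model currently answers
            a_prime = reflect(q, a)     # reflect on A to get A'
            distill(model, q, a_prime)  # update the model in the direction Q -> A'
    return model


if __name__ == "__main__":
    model = {2.0: 1.0, 9.0: 1.0}  # poor initial answers
    print(amplify_and_distill(model, [2.0, 9.0], rounds=10))
```

If `reflect` did not reliably improve answers, the same loop would faithfully distill its errors instead, which is the "distilled stupidity" case.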

Will you keep getting better results in the future as a result of having this done in the past?

Only if you apply the "distillation and amplification" part, and I hope that if you push too hard in the absence of some kind of reality anchoring, it may go off the rails and result in distilled weirdness. And hopefully you need a bigger model anyway.

For example, if I want to find the maxima of a function, it doesn't matter if I use conjugate gradient descent or Newton's method or interpolation methods or whatever, they will tend to find the same maxima assuming they are looking at the same function.

Trying to channel my internal Eliezer:

It is painfully obvious that we are not the pinnacle of efficient intelligence. If evolution is to run more optimisation on us, we will become more efficient... and lose the important parts that matter to us but are of no consequence to evolution. So yes, we end up being the same alien thing as AI.

The thing that makes us us is a bug. So you have to hope gradient descent makes exactly the same mistake evolution did, but there are a lot of possible mistakes.
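A small illustration of "same function, different answer". It assumes a toy objective f(x) = -(x^2 - 1)^2 with two equally good maxima at x = ±1; the function and both optimizers are illustrative choices, not anything from the quoted post. Starting from the same point x = 0.5, plain gradient ascent climbs to x = 1, while plain Newton's method (which only looks for f'(x) = 0) jumps to x = -1. The quoted claim holds when there is a single maximum; with several, where you land depends on the method.

```python
def grad(x: float) -> float:
    """f'(x) for f(x) = -(x**2 - 1)**2."""
    return -4 * x * (x**2 - 1)


def hess(x: float) -> float:
    """f''(x) for the same f."""
    return -(12 * x**2 - 4)


def gradient_ascent(x: float, lr: float = 0.1, steps: int = 200) -> float:
    # Repeatedly step uphill along the gradient.
    for _ in range(steps):
        x += lr * grad(x)
    return x


def newton(x: float, steps: int = 20) -> float:
    # Plain Newton iteration on f'(x) = 0; it finds stationary points,
    # not specifically maxima.
    for _ in range(steps):
        x -= grad(x) / hess(x)
    return x


if __name__ == "__main__":
    print(gradient_ascent(0.5))  # climbs to the maximum at x = 1
    print(newton(0.5))           # jumps to the other maximum at x = -1
```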

One of the arguments/intuition pumps in the Chinese room thought experiment is to have the human inside the room memorize the room's contents instead.

An excellent example that makes me notice my bias: I feel that only one person can occupy my head. The same bias makes people believe augmentation is a safe solution.

As I understood it, the ultrasonic disruption was a feature, as it allows targeting a specific brain area.

Putting aside obvious trust issues how close is current technology to correcting things like undesired emotional states?

I remember reading about a technology where a retrovirus, capable of modifying the receptiveness of brain cells to a certain artificial chemical, is injected into the bloodstream. The virus can't pass the blood-brain barrier unless ultrasonic vibrations are applied to a selected brain area, making the barrier temporarily passable there. This allows turning a normally ~inert chemical into a targeted drug. It is probably nowhere close to being applied to humans; the approval process would be a nightmare.

It seems to me you are confused by the overlap in meaning of the word "state".

In this context, it is the "state of the target of inquiry": water either changes its solid form by melting or it doesn't. So "state" refers to the difference between "yes, water changes its solid form by melting" and "no, water does not change its solid form by melting". Those are your 2 possible states, and water itself having an unrelated set of states (solid/liquid/gas) to be in is just a coincidence.

Instead of making up bullshit jobs or UBI, people should be paid for receiving education. You can argue it is a specific kind of bullshit job, but I think a lot of people here have a stereotype of education being something you pay for.

I was thinking people should be paid for receiving education (maybe a sport kind of education/training) instead of UBI.

Q: 3,2,1 A: 4,2,1 in the second set: can you retry while fixing this? Or was it intentional?

There is an obvious class of predictions, like killing your own family or yourself, and such predictions are a good example of what the absence of free will feels like from the inside. There are things I care about, and I am not free to throw them away.

But the genome has proxies for most things it wants to control, so maybe it is the other way around? Instead of extracting information about a concept, the genome provides a crystallization centre (using proxies) around which the concept forms?

My friend commented that there is a surge of cars with Russian license plates, and apparently some Russians from Osh (our second-largest city) came back too. I was surprised to see such a vivid confirmation.

I hope you and your family have had your COVID shots; people don't wear masks or keep social distancing here.

>Нет войны. Over

At the beginning of the last paragraph, it should be "войне": "Нет войне" means "No to war", while "Нет войны" reads as "There is no war".

Have you heard about the Communist Party?

I can't see how this is an argument in good faith. Your choice of saying Russia instead of the USSR feels intentionally misleading.

The American Government says lots of hypocritical things about regime change and interfering with elections and so on; I think this is bad and wish they wouldn't do it.

Imagine if the reaction to this war were like the reaction to Iraq. That is scary even for me: as an ethnic Russian, I feel a measure of personal responsibility for this. I did not want Russia to become the second US. But at the same time, the difference is striking.

UPD: I am talking about the reaction in the West; here in Kyrgyzstan, reactions are about the same: yeah, "wish they wouldn't do it" (careful, low confidence; it is hard to judge general public opinion from a few datapoints).