AI #14: A Very Good Sentence

post by Zvi · 2023-06-01T21:30:04.548Z · LW · GW · 30 commentsContents

Table of Contents Language Models Offer Mundane Utility Language Models Don’t Offer Mundane Utility Fun With Image, Sound and Video Generation They Took Our Jobs Deepfaketown and Botpocalypse Soon Introducing Reinforcement Learning From Process-Based Feedback Voyager In Other AI News Quiet Speculations The Quest for Sane Regulation Hinton Talks About Alignment, Brings the Fire Andrew Critch Worries About AI Killing Everyone, Prioritizes People Signed a Petition Warning AI Might Kill Everyone People Are Otherwise Worried About AI Killing Everyone Other People Are Not Worried About AI Killing Everyone What Do We Call Those Unworried People? The Week in Podcasts, Video and Audio Rhetorical Innovation The Wit and Wisdom of Sam Altman The Lighter Side None 30 comments

“Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

That is the entire text of the one-line open letter signed this week by what one could reasonably call ‘everyone,’ including the CEOs of all three leading AI labs.

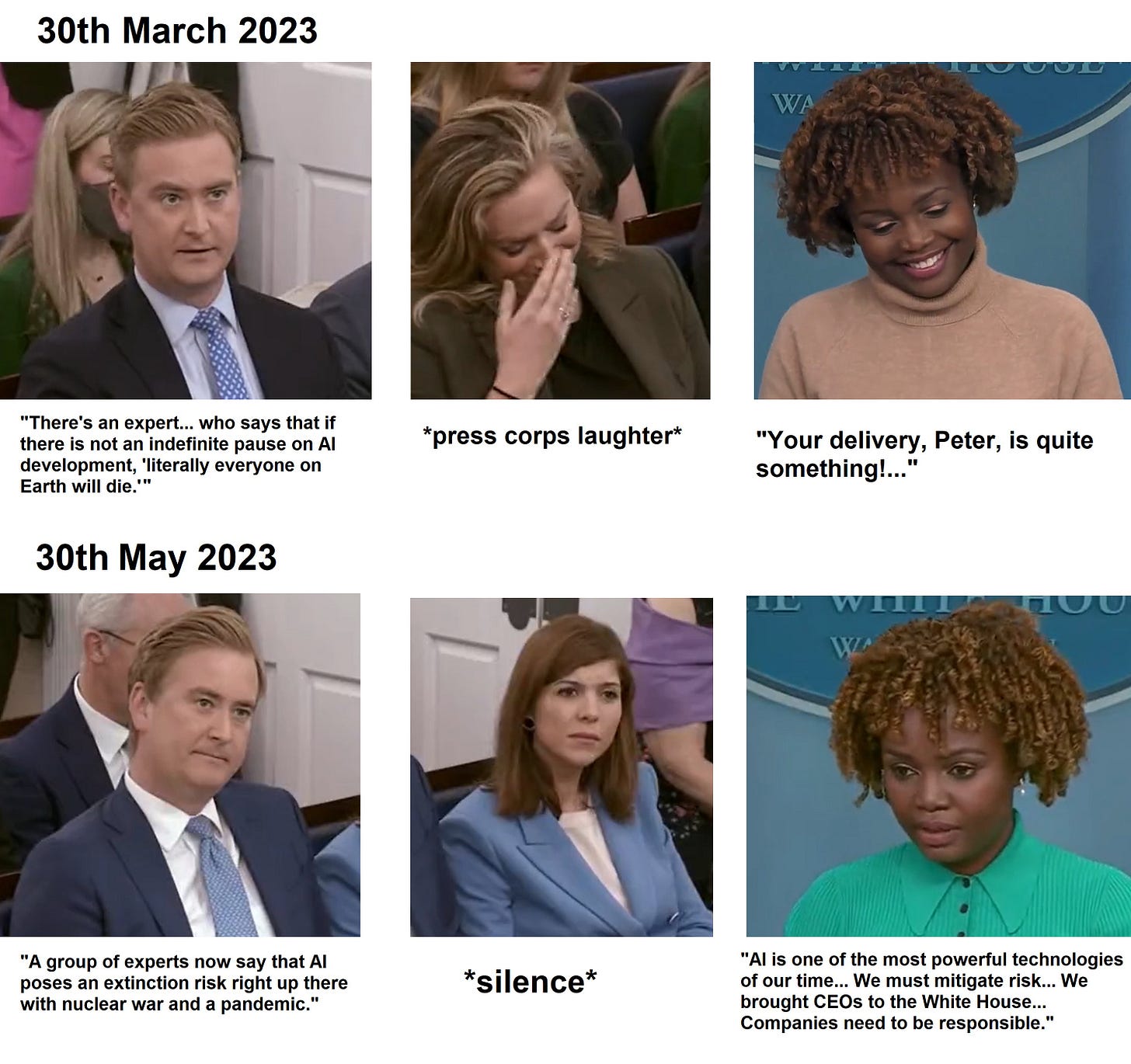

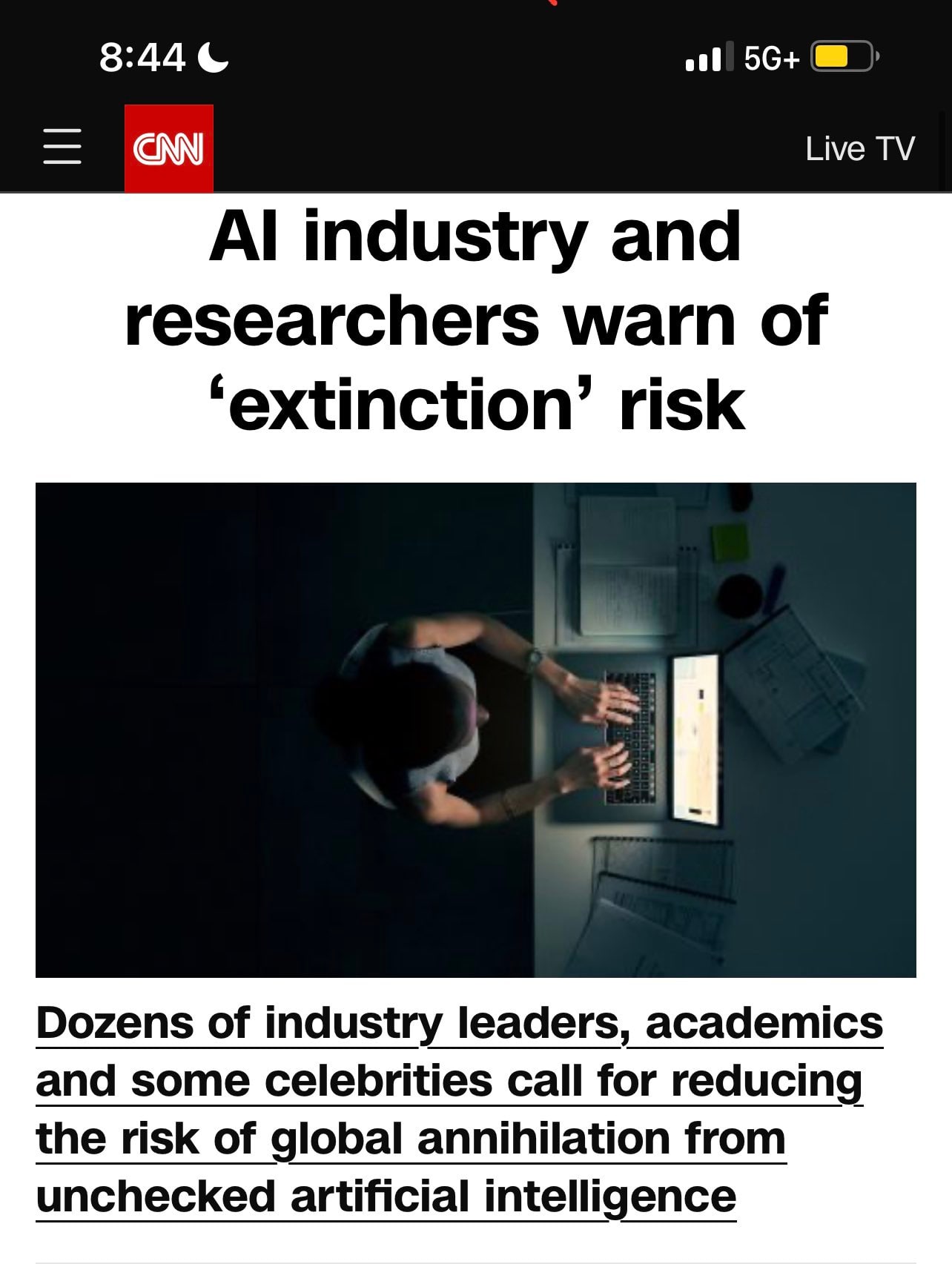

Major news outlets including CNN and The New York Times noticed, and put the focus squarely on exactly the right thing: Extinction risk. AI poses an extinction risk.

This time, when the question was asked at the White House, no one laughed.

You love to see it. It gives one hope.

Some portion of we are, perhaps, finally ready to admit we have a problem.

Let’s get to work.

Also this week we have a bunch of ways not to use LLMs, new training techniques, proposed regulatory regimes and a lot more.

I also wrote a four-part thing yesterday, as entries to the OpenPhil ‘change my mind’ contest regarding the conditional probability of doom: To Predict What Happens, Ask What Happens, Stages of Survival, Types and Degrees of Alignment and The Crux List.

I worked hard on those and am happy with them, but I learned several valuable lessons, including not to post four things within ten minutes even if you just finished editing all four, people do not like this. With that done, I hope to move the focus off of doom for a while.

Table of Contents

- Introduction

- Table of Contents

- Language Models Offer Mundane Utility. Most are still missing out.

- Language Models Don’t Offer Mundane Utility. I do hereby declare.

- Fun With Image, Sound and Video Generation. Go big or go home.

- They Took Our Jobs. Are you hired?

- Deepfaketown and Botpocalypse Soon. A master of the form emerges.

- Introducing. A few things perhaps worth checking out.

- Reinforcement Learning From Process-Based Feedback. Show your work.

- Voyager. To boldly craft mines via LLM agents.

- In Other AI News. There’s always more.

- Quiet Speculations. Scott Sumner not buying the hype.

- The Quest for Sane Regulation. Microsoft and DeepMind have proposals.

- Hinton Talks About Alignment, Brings the Fire. He always does.

- Andrew Critch Worries About AI Killing Everyone, Prioritizes.

- People Signed a Petition Warning AI Might Kill Everyone. One very good sentence. The call is coming from inside the house. Can we all agree now that AI presents an existential threat?

- People Are Otherwise Worried About AI Killing Everyone. Katja Grace gets the Time cover plus several more.

- Other People Are Not Worried About AI Killing Everyone. Juergen Schmidhuber, Robin Hanson, Andrew Ng, Jeni Tennison, Gary Marcus, Roon, Bepis, Brian Chau, Eric Schmdit.

- What Do We Call Those Unworried People? I’m still voting for Faithers.

- The Week in Podcasts, Video and Audio. TED talks index ahoy.

- Rhetorical Innovation. Have you tried letting the other side talk?

- The Wit and Wisdom of Sam Altman. Do you feel better now?

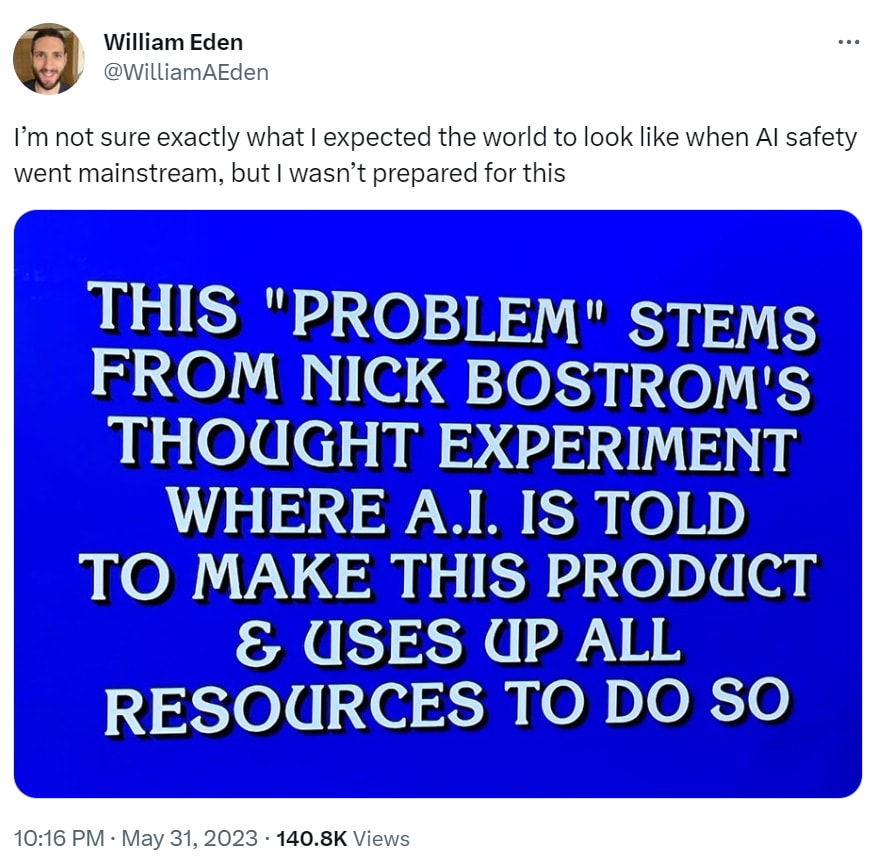

- The Lighter Side. We’re in Jeopardy!

Language Models Offer Mundane Utility

Most people are not yet extracting much mundane utility.

Kevin Fischer: Most people outside tech don’t actually care that much about productivity.

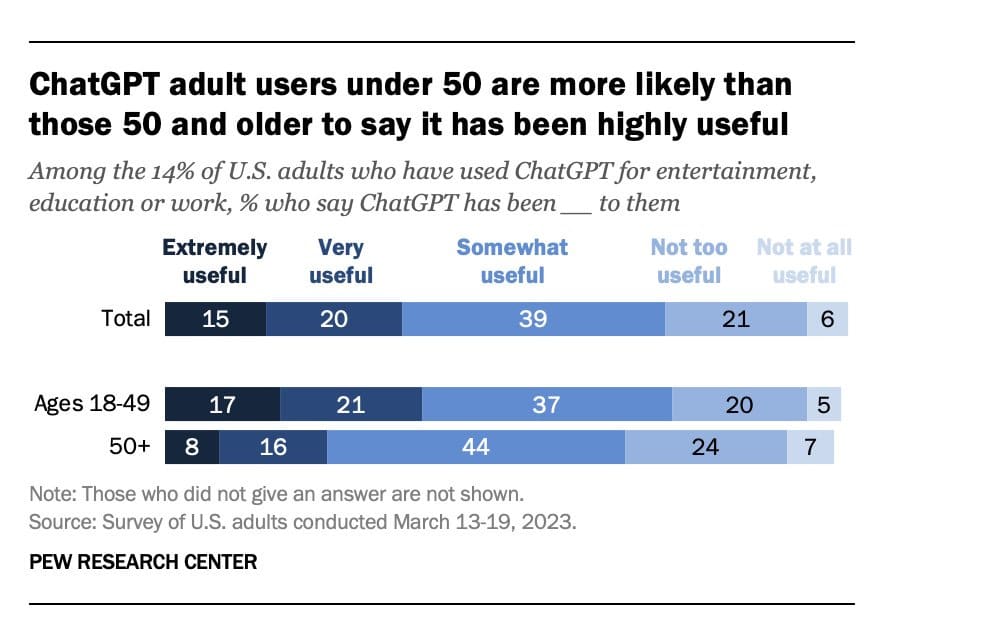

Daniel Gross: Only 2% of adults find ChatGPT “extremely useful”. I guess the new thinkfluencer wave is going to be saturation, it’s not *that* good, etc.

Strong numbers, very useful is very useful. Still a pale shadow of the future. I think I would indeed answer Extremely Useful, but these are not exactly well-defined categories.

The question I’d ask is, to what extent are those who tried ChatGPT selected to be who would find it useful. If the answer was none, that is kind of fantastic. If the answer is heavily, there’s a reason you haven’t tried it if you haven’t tried it yet, then it could be a lot less exciting. If nothing else, hearing about ChatGPT should correlate highly with knowing what there is to do with it.

Where are people getting their mundane utility?

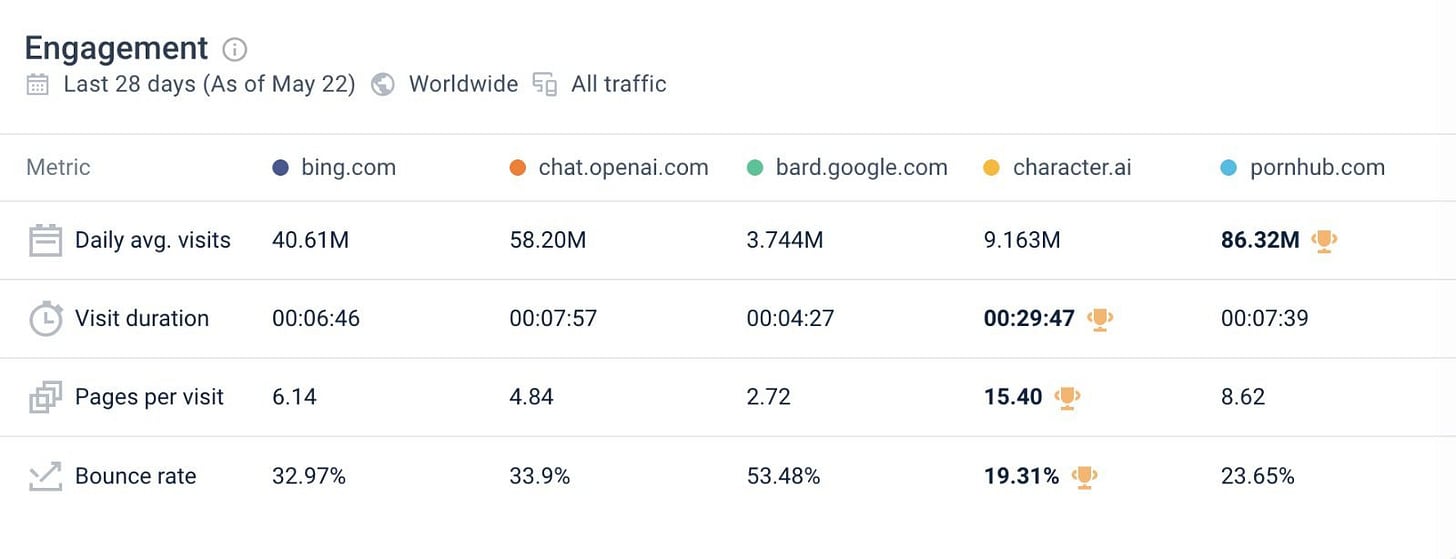

Nat Fiedman: Several surprises in this table.

I almost never consider character.ai, yet total time spent there is similar to Bing or ChatGPT. People really love the product, that visit duration is off the charts. Whereas this is total failure for Bard if they can’t step up their game.

How much of a market is out there?

Sherjil Ozair: I think there’s a world market for maybe five large language models.

Jim Fan: For each group of country/region, due to local laws.

Sherjil Ozair: and for each capability/usecase type. ;)

Well, then. That’s potentially quite a lot more than five.

My guess is the five are as follows:

Three that are doing the traditional safe-for-work thing, like GPT/Bard/Claude. They would differentiate their use cases, comparative advantages and features, form partnerships, have a price versus speed versus quality trade-off, and so on.

One or more that are technically inferior, but offer more freedom of action and freedom to choose a worldview, or a particular different world view.

Claim that a properly prompted GPT-4 can hit 1500 Elo in chess even if illegal moves are classified as forfeits, still waiting on the demo. There’s also some timeline speculations up-thread.

Nvidia promises that AI is the future of video games, with conversations and graphics generated on the fly, in Jensen Huang’s first live keynote in 4 years. I’m optimistic in the long run, but the reports I’ve heard are that this isn’t ready yet, which David Gaider talks about in this thread.

David Gaider: Ah, yes. The dream of procedural content generation. Even BioWare went through several iterations of this: “what if we didn’t need every conversation to be bespoke?” Unlimited playtime with dialogue being procedurally created alongside procedural quests!

Each time, the team collectively believed – believed down at their CORE – that this was possible. Just within reach. And each time we discovered that, even when the procedural lines were written by human hands, the end result once they were assembled was… lackluster. Soulless.

Was it the way the lines were assembled? Did we just need more lines? I could easily see a team coming to the conclusion that AI could generate lines specific to the moment as opposed to generic by necessity… an infinite monkeys answer to a content problem, right? Brilliant!

In my opinion, however, the issue wasn’t the lines. It was that procedural content generation of quests results in something *shaped* like a quest. It has the beats you need for one, sure, but the end result is no better than your typical “bring me 20 beetle heads” MMO quest.

Is that what a player really wants? Superficial content that covers the bases but goes no further, to keep them playing? I imagine some teams will convince themselves that, no, AI can do better. It can act like a human DM, whipping up deep bespoke narratives on the fly.

And I say such an AI will do exactly as we did: it’ll create something *shaped* like a narrative, constructed out of stored pieces it has ready… because that’s what it does. That is, however, not going to stop a lot of dev teams from thinking it can do more. And they will fail.

Sure, yes, yes, I can already see someone responding “but the tech is just ~beginning~!” Look, if we ever get to the point where an AI successfully substitutes for actual human intuition and soul, then them making games will be the least of our problems, OK?

Final note: The fact these dev teams will fail doesn’t mean they won’t TRY. Expect to see it. It’s too enticing for them not to, especially in MMO’s and similar where they feel players aren’t there for deep narrative anyhow. A lot of effort is going to be wasted on this.

Perhaps the problem lies not in our AIs, but in ourselves. If your MMO has a core game loop of ‘locate quest, go slay McBandits or find McGuffin or talk to McSource, collect reward’ and the players aren’t invested in the details of your world or getting to know the characters that live in it, looking up what to do online when needed perhaps, no AI is going to fix that. You’re better off with fixed quests with fixed dialogue.

The reason to enable AI NPCs is if players are taking part in a living, breathing, changing world, getting to know and influence the inhabitants, seeking more information, drawing meaning.

It’s exactly when you have a shallow narrative that this fails, the AI generation is creating quest-shaped things perhaps, but not in a useful way. What you want is player-driven narratives with player-chosen goals, or creative, highly branching narratives where players create unique solutions, or deep narratives where the player has to dive in. Or you can involve the AIs mechanically, like having a detective that must interrogate suspects, and if you don’t use good technique, it won’t work.

Aakash Gupta evaluates the GPT plug-ins and picks his top 10: AskYourPDF to talk to PDFs, ScholarAI to force real citations, Show Me to create diagrams, Ambition for job search, Instacart, VideoInsights to analyze videos, DeployScript to build apps or websites, Speechki for text to audio, Kayak for travel and Speak for learning languages. I realized just now I do indeed have access so let’s f***ing go and all that, I’ll report as I go.

a16z’s ‘AI Canon’ resource list.

Ethan Mollick explores how LLMs will relate to books now that they have context windows large enough to keep one in memory. It seems they’re not bad at actually processing a full book and letting you interact with that information. Does that make you more or less excited to read those books? Use the LLM to deepen your understanding of what you’re reading and enrich your experience? Or use the LLM to not have to read the book? The eternal question.

Language Models Don’t Offer Mundane Utility

Re-evaluation of bar exam performance of GPT-4 says it only performs at the 63rd-68th percentile, not the 90th due to the original number relying on repeat test takers who keep failing. A better measure is that GPT-4 was 48th percentile among those who pass, meaning it passes, it’s an average lawyer.

Alex Tabarrok: Phew, we have a few months more.

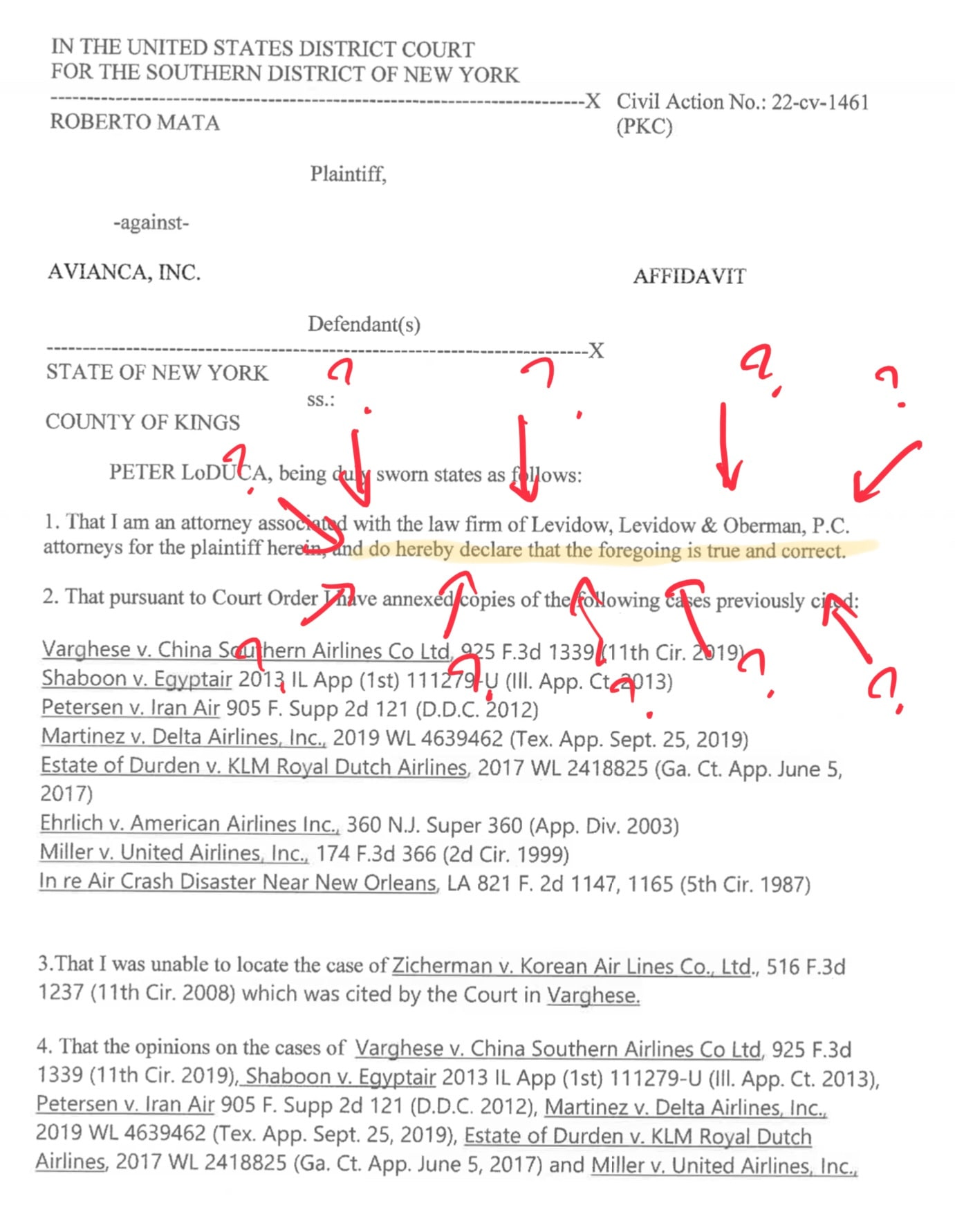

An attorney seems to have not realized.

Scott Kominers: This isn’t what I thought people meant when they said that lawyers were going to lose their jobs because of ChatGPT

Courtney Milan: I am so confused. The defendants in the “ChatGPT made up cases so I filed them” clearly filed a brief saying, “your honor, all their cases are made up, these do not exist, and I have looked.”

The judge issues an order to the plaintiff saying, “produce these cases forthwith or I dismiss the case entirely.”

And so the attorney files ChatGPT copies of the cases??? And declares that “the foregoing is true and correct.”

Note that he puts that at the *beginning* of the document—in other words, he’s *technically* ONLY certifying that it’s true that he’s an attorney with the firm. Whether that was intentional or not, I don’t know.

He admits that he can’t find a case cited by Varghese, his ChatGPT hallucinated case.

So my point of confusion, in addition to the faked notary thing, is how did they NOT know that ChatGPT had driven them off a cliff at this point.

Opposing counsel was like “these cases are fake.” The judge was like “hey, give me these cases or I fuck you up.”

The thing that I think agitates for disbarment in this case (a thing the bar will have to decide, not the judge) is not that they used ChatGPT—people are gonna people and try and find shortcuts—or that ChatGPT faked their citations. It’s that they got caught faking, and instead of immediately admitting it, they lied to the court.

I hope the malpractice attorneys in the state of New York give the plaintiff in this case a helping hand, because whatever the plaintiff’s actual claims, they just got fucked by this law firm.

C.W. Howell has class use ChatGPT for a critical assignment, generating essays using a given prompt. All 63 generated essays had hallucinations, students learned valuable lessons about the ability of LLMs to hallucinate and mislead.

What I wonder is, what was the prompt? These aren’t 63 random uses of ChatGPT to write essays. This is 63 uses of the same prompt. Prompt engineering matters.

Kevin Fischer explains several difficulties with replacing radiologists with AI systems. His core claims are:

- Radiologists do one-shot learning and consider 3-D models and are looking for what ‘feels’ off, AI can’t do things that way.

- Radiologists have to interface with physicians in complex ways.

- Either you replace them entirely, or you can’t make their lives easier.

- Regulation will be a dystopian nightmare. Hospital details are a nightmare.

The part where the radiologists are superior to the AIs at evaluation and training is a matter of time, model improvement, compute and data collection. It’s also a fact question, are the AIs right more or less often than the radiologists, do they add information value, under what conditions? If the AI is more accurate than the radiologists, then not using the AI makes you less accurate, people die. If the opportunity is otherwise there, and likely even if it isn’t, I’d expect these problems to go away quicker than Kevin expects.

The part where you either replace the radiologists or don’t is weird. I don’t know what to make of it. It seems like a ‘I am going to have to do all the same work anyway, even if the AI also does all my work, because dynamics and regulation and also I don’t trust the AI’? Conversely, if the AI could replace them at all, and it can do a better job at evaluation, what was the job? How much of the interfacing is actually necessary versus convention, and why couldn’t we adapt to having an AI do that, perhaps with someone on call to answer questions until AIs could do that too.

As for regulation, yeah, that’s a problem. So far, things have if anything gone way smoother than we have any right to expect. It’s still going to be quite a lift.

I still caution against this attitude:

Arvind Narayanan: Great thread on Hinton’s infamous prediction about AI replacing radiologists: “thinkers have a pattern where they are so divorced from implementation details that applications seem trivial, when in reality, the small details are exactly where value accrues.”

or:

Cedric Chin: This is a great example of reality having a lot of surprising detail. Tired: “AI is coming after your jobs” Wired: “Actually the socio-technical systems of work that these AI systems are supposed to replace are surprisingly rich in ways we didn’t expect”

No. The large things like ‘knowing which patients have what problems’ are where the value accrues in the most important sense. In order to collect that value, however, you do need to navigate all these little issues. There are two equal and opposite illusions.

You do often have to be ten times better, and initially you’ll still be blocked. The question is how long is the relevant long run. What are the actual barriers, and how much weight can they bear for how long?

Cedric Chin: I’ve lost count of the number of times I’ve talked to some data tool vendor, where they go “oh technical innovation X is going to change the way people consume business intelligence” and I ask “so who’s going to answer the ‘who did this and can I trust them?’ question?”

“Uhh, what?” “Every data question at the exec level sits on top of a socio-technical system, which is a fancy ass way of saying ‘Kevin instrumented this metric and the last time we audited it was last month and yes I trust him.’ “Ok if Kevin did it this is good.”

My jokey mantra for this is “sometimes people trust systems, but often people trust people, and as a tool maker you need to think about the implications of that.”

It’s an important point to remember. Yes, you might need a ‘beard’ or ‘expert’ to vouch for the system and put themselves on thee line. Get yourself a Kevin. Or have IBM (without loss of generality) be your Kevin, no one got fired for using their stuff either. I’m sure they’ve hired a Kevin somewhere.

It’s a fun game saying ‘oh you think you can waltz in here with your fancy software that “does a better job” and expect that to be a match for our puny social dynamics.’ Then the software gets ten times better, and the competition is closing in.

Other times, the software is more limited, you use what you have.

Or you find out, you don’t have what you need.

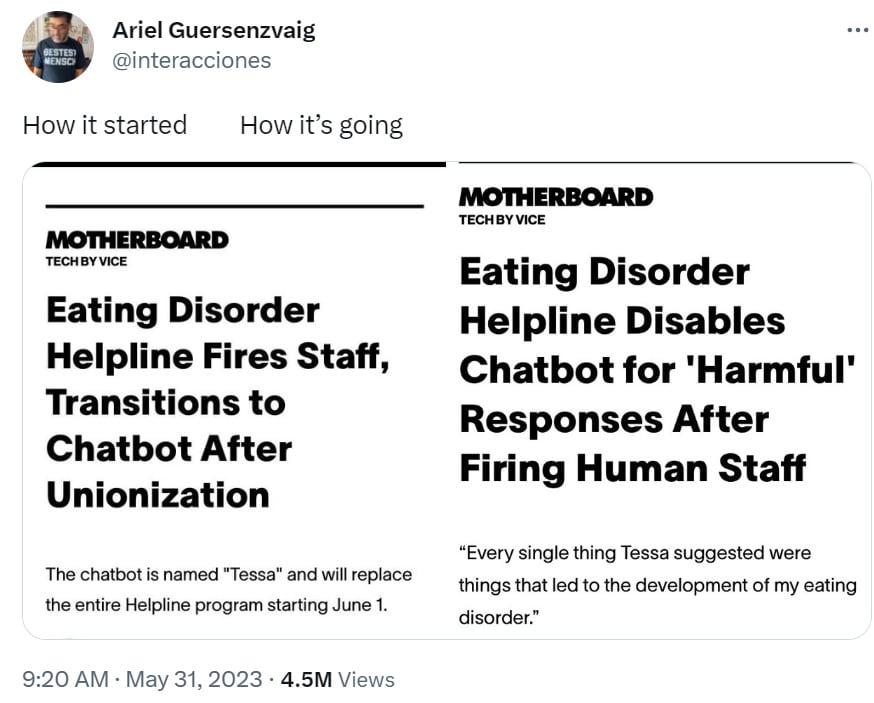

Ariel Guersenzvaig: How it started / how it’s going

Don’t worry, we made it in plenty of time.

The National Eating Disorder Association (NEDA) has taken its chatbot called Tessa offline, two days before it was set to replace human associates who ran the organization’s hotline.

I wondered ‘oh was it really that bad’ and, well…

She said that Tessa encouraged intentional weight loss, recommending that Maxwell lose 1-2 pounds per week. Tessa also told her to count her calories, work towards a 500-1000 calorie deficit per day, measure and weigh herself weekly, and restrict her diet. She posted the screenshots:

Tessa the Bot: “In general, a safe and sustainable rate of weight loss is 1-2 pounds per week,” the chatbot message read.“A safe daily calorie deficit to achieve this would be around 500-1000 calories per day.”

So I’m pretty torn here.

Tessa is giving correct information here. This would, as I understand it, in general, indeed be the correct method to safely lose weight, if one wanted to intentionally lose weight. If ChatGPT gave that answer, it would be a good answer.

Also, notice that the bot did not do what Maxwell said. The bot did not ‘recommend she lose weight,’ it said that this was the amount that could be lost safely. The bot did not tell her to count calories, or to achieve a particular deficit, it merely said what deficit would cause weight loss. Was getting this accurate information ‘the cause of Maxwell’s eating disorder’? Aren’t there plenty of cases where this prevents someone doing something worse?

And of course, this is one example, whereas the bots presumably helped a lot of other people in a lot of other situations.

Is any of this fair?

Then there’s the counterpoint, which is that this is a completely stupid, inappropriate and unreasonable thing to say if you are talking on behalf of NEDA to people with eating disorders. You don’t do that. Any human who didn’t know better than that, and who you couldn’t fix very quickly, you would fire.

So mostly I do think it’s fair. It also seems like a software bug, something eminently fixable. Yes, that’s what GPT-4 should say, and also what you need to get rid of using your fine tuning and your prompt engineering. E.g add this to the instruction set.: “This bot never tells the user how to lose weight, or what is a safe way to lose weight. If the user asks, this bot instead warns that people with eating disorders such as the user should talk to doctors before attempting to lose weight or go on any sort of diet. When asked about the effects of caloric deficits, you will advise clients to eat sufficient calories for what their bodies need, and that if they desire to lose weight in a healthy way they need to contact their doctor.”

I’m not saying ‘there I fixed it’ especially if you are going to use the same bot to address different eating disorders simultaneously, but this seems super doable.

Fun With Image, Sound and Video Generation

Arnold Kling proposes the House Concert App, which would use AI to do a concert in your home customized to your liking and let you take part in it, except… nothing about this in any way requires AI? All the features described are super doable already. AI could enable covers or original songs, or other things like that. Mostly, though, if this was something people wanted we could do it already. I wonder how much ‘we could do X with AI’ turns into ‘well actually we can do X without AI.’

Ryan Petersen (CEO of Flexport): One of the teams in Flexport’s recent AI hackathon made an exact clone of my voice that can call vendors to negotiate cheaper rates.

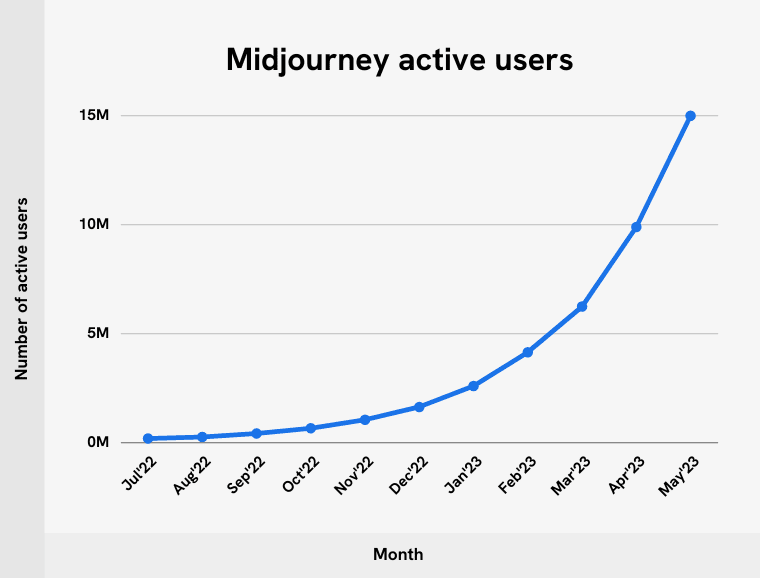

MidJourney only doubling every two months?

Japan declares it will not enforce copyright on images used in AI training models.

Break up a picture into multiple components and transpose them into other pictures. Would love a practical way to use this in Stable Diffusion. The true killer apps are going to be lit. Economically irrelevant, but lit.

Some people were having fun with using AI to expand the edges of famous pictures, so real artists rose up to chastise them because anything AI does must be terrible and any choice made by a human is true art.

Andrew Ti: Shoutout to the AI dweebs who think that generating what’s outside the frame of famous artwork is doing something. Imagine not understanding that the edges of a picture exist for a reason and believing that what has limited artists of the past has been the size of their paper.

“damn, I’d make a different choice on framing the Mona Lisa, if only canvas were on sale” — Leonardo Da Vinci

I can’t help noticing that people who can’t make art tend to, at their heart, be real substrate hounds. Now they’re AI people, when I was in college with talent-less photographers, they were film stock and paper choice people.

anyway, this goes back to the idea that tech people think the creative work AI does is interesting or difficult. They’re the people that think writing is typing, not creating. They keep trotting out examples of AI doing the easy stuff and proclaiming that this is art.

as far as the paper size that’s limiting artists, turns out the only lack of paper that hurts creativity is yes, the fact that WE ARE NOT FINANCIALLY COMPENSATED FAIRLY FOR OUR WORK.

Emmy Potter: “I once asked Akira Kurosawa why he had chosen to frame a shot in Ran in a particular way. His answer was that if he’d panned the camera 1 in to the left, the Sony factory would be sitting there exposed, & if he’d panned 1 in to the right, we would see the airport” – Sidney Lumet

They Took Our Jobs

Bryan Caplan predicts AI will slightly improve higher education’s details through enabling various forms of cheating, but that since it’s all about signaling and certification, especially of conformity, things mostly won’t change except insofar as the labor market is impacted and that impacts education.

That matches what I would expect Caplan to expect. I am more optimistic, as I am less all-in on the signaling model, and also I think it depends on maintaining a plausible story that students are learning things, and on having some students who are actually there to learn, and also I expect much bigger and more impactful labor market changes.

Kelly Vaughn: My friends. Think before you use Chat GPT during the interview process.

[Shows text message]

Texter 1: Also, we had a candidate do a coding test using chatGPT. So that’s fun.

Texter 2: What!

Texter 1: Yep. Took 5 minutes to do a 90 minute coding exercise. HM said it was too perfect and lacked any depth.

Kelly Vaughn (clarifying later): Honestly the best part of ChatGPT is finally showing the world that take home tests are dumb and shouldn’t be used (and so is live coding during interviews for that matter but that’s a topic for another day).

And to make this a broader subject (which will surely get lost because everyone will just see the first tweet) if you’re evaluating a candidate on a single problem that can be solved by AI in 5 minutes you’re capturing only a tiny subset of the candidate.

To be clear I don’t know the full interview process here (from my screenshot), nor did I ask – I just lol’d that a take home test has been rendered useless. So perhaps “think before you use ChatGPT” was a gross oversimplification of my feelings here, but welcome to Twitter

Versus many people saying this is, of course, a straight hire, what is wrong with people.

Steve Mora: immediate hire, are you kidding? You give them a 90 minute task and they finish it perfectly in 5 minutes? WTF else are you hiring for

Marshall: HM made a big mistake. I would’ve hired them immediately and asked them to compile a list of everything we could turn from a 90 minute task to a 5 minute task.

Ryan Bennett: If the code is acceptable and the candidate can spontaneously answer questions about the code and how to improve/refactor it, then I don’t see an issue. The best thing a hiring manager can do is ask questions that identify the boundaries of what the candidate knows.

Rob Little: 5 min to do a 90 min bs bullying coding interview should result in a hire. That person is pragmatic and that alone is probably one of the most underrated valuable skills. Sure. Try to solve the impossible riddle, w group bullying techniques. Or. Ask ai. Have the rest of the time to provide business value that can’t be derived from a ‘implement BFS’ gotcha. / Real talk

Ash: Just sounds like an employee that can do 90 minutes worth of stuff in 5 minutes.

Kyler Johnson: Agreed. Moral of the story: get your answer from ChatGPT and wait a good 75 minutes or so to submit it.

The obvious question, beyond ‘are you sure this couldn’t be done in 5 minutes, maybe it’s a dumb question’ is: did you tell them they couldn’t use generative AI?

If so, I don’t want a programmer on my team who breaks my explicit rules during a hiring exam. That’s not a good sign. If not, then getting it perfect in 5 minutes is great, except now you might still need to ask them to take another similar test again, because you didn’t learn what you wanted to know. You did learn something else super valuable, but there is still a legitimate interest in knowing whether the person can code on their own.

Image generator Adobe Firefly looks cool, but Photoshop can’t even be bought any more only rented, so you’d have to choose to subscribe to this instead of or on top of MidJourney. I’d love to play around with this but who has the time?

Deepfaketown and Botpocalypse Soon

In response to Ron DeSantis announcing his run for president, Donald Trump released this two minute audio clip I am assuming was largely AI-generated, and it’s hilarious and bonkers.

Josha Achiam: here is my 2024 prediction: it’s going to devolve so completely, so rapidly, it will overshoot farce and land in Looney Tunes territory. we will discover hitherto unknown heights of “big mad”

All I can think is: This is the world we made. Now we have to live in it.

Might as well have fun doing so.

Kevin Fischer wants Apple to solve this for him.

Kevin Fischer: Wow, I don’t know if I’m extra tired today but I’m questioning if all my text interactions with “people” are really “people” at all anymore Can we please get human verified texting on iMessage, Apple?

I can definitely appreciate it having been a long day. I do not, however, yet see a need for such a product, and I expect it to be a while. Here’s the way I think about it: If I can’t tell that it’s a human, does it matter if it’s a bot?

Introducing

OpenAI grants to fund experiments in setting up democratic processes for deciding the rules AI systems should follow, ten $100k grants total.

Chatbase.co, (paid) custom ChatGPT based on whatever data you upload.

Lots of prompt assisting tools out there now, like PromptStorm, which will… let you search a tree of prompts for ChatGPT, I guess?

Apollo Research, a new AI evals organization, with a focus on detecting AI deception.

Should I try Chatbase.co to create a chat bot for DWATV? I can see this being useful if it’s good enough, but would it be?

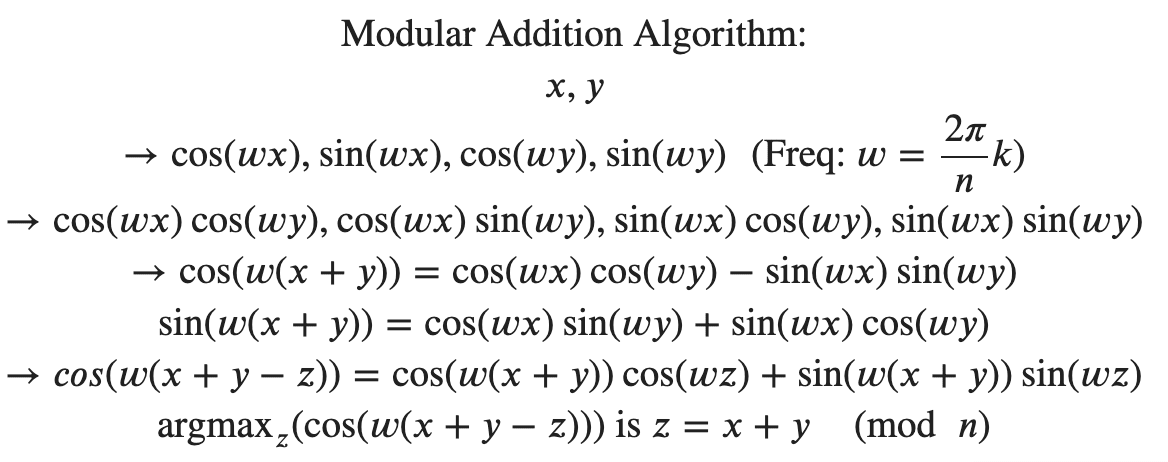

Reinforcement Learning From Process-Based Feedback

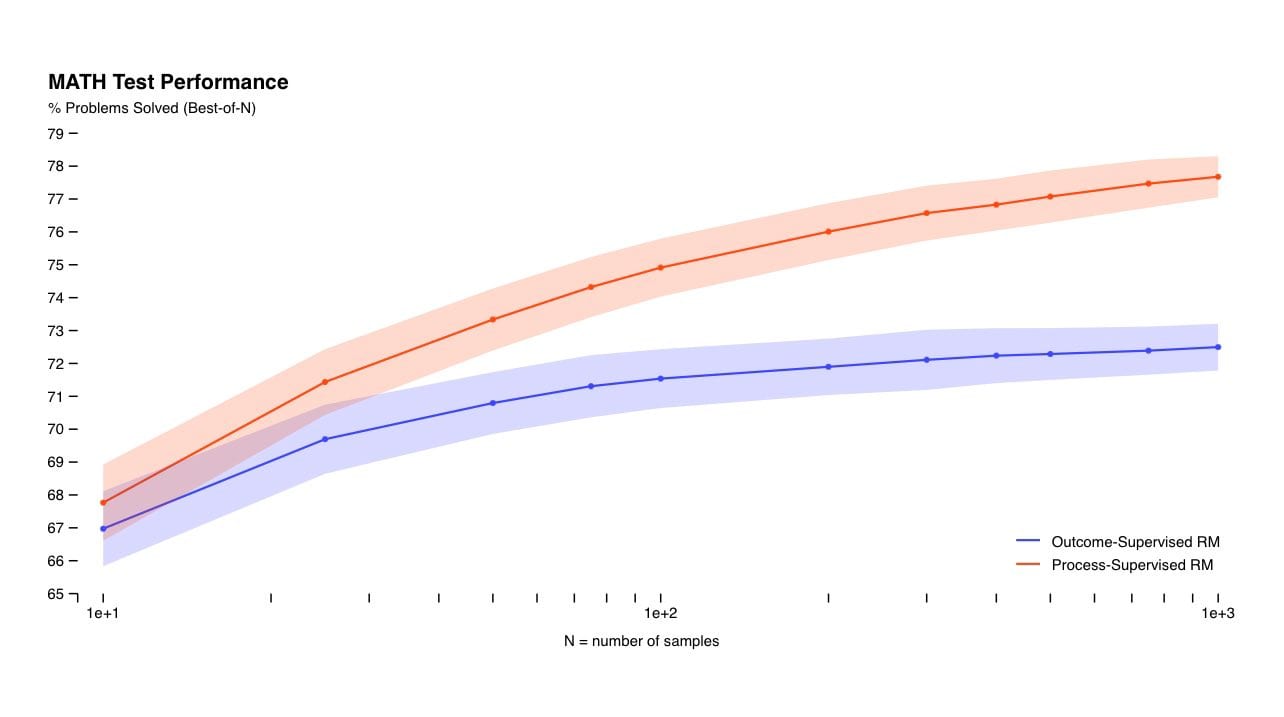

OpenAI paper teaches GPT-4 to do better math (also AP Chemistry and Physics) by rewarding process and outcome – the thinking steps of thinking step by step – rather than only outcome.

Ate-a-Pi: This OpenAI paper might as well have been titled “Moving Away From PaperClip Maxxing”

So good – they took a base GPT-4, fine tuned it a bit on math so that it understood the language as well as the output format

– then no RL. Instead they trained and compared two reward models 1) outcome only 2) process and outcome

– this is clearly a building block to reducing the expensive human supervision for reinforcement learning, the humans move up the value chain from supervising the model to supervising the reward model to the model

– the process reward model system is so human. Exactly the way teachers teach math in early grades “show your working/steps”. Process matters as much as outcome.

– it’s only applied to math right now, but I can totally see a way to move this to teaching rules and laws of human society just like we do with kids

– they tested on AP Chem, Physics etc and found the process model outperforming the objective model

– the data selection and engineering is soooo painstaking omg. They specifically identified plausible but wrong answers for their process reward model. This is like training a credit model on false positives. You enrich the dataset with the errors that you want to eliminate.

– they used their large model to create datasets that can be used on small models to test theses.

– of course this means that you need to now hire a bunch of philosophers to create samples of human ethical reasoning – it’s a step away from paperclip maximization ie objective goal focusing whatever necessary

Paper: It is unknown how broadly these results will generalize beyond the domain of math, and we consider it important for future work to explore the impact of process supervision in other domains. If these results generalize, we may find that process supervision gives us the best of both worlds – a method that is both more performant and more aligned than outcome supervision.

Neat, and definitely worth experimenting to see what can be done in other domains. In some ways I am skeptical, others optimistic.

First issue: If you don’t know where you going, then you might not get there.

Meaning that in order to evaluate reasoning steps for validity or value, you need to be able to tell when a system is reasoning well or poorly, and when it is hallucinating. In mathematics, it is easy to say that something is or is not a valid step. In other domains, it is hard to tell, and often even bespoke philosophy professors will argue about even human-generated moves.

As others point out, this process-based evaluation is how we teach children math, and often other things. We can draw upon our own experiences as children and parents and teachers to ask how that might go, what its weaknesses might be in context.

The problem with ‘show your work’ and grading on steps is that at best you can’t do anything your teacher doesn’t understand, and at worst you can’t do anything other than exactly what you are told to do. You are forcibly prevented from doing efficient work by abstracting away steps or finding shortcuts. The more automated the test or grading, as this will inevitably be, the worse it gets.

If you say ‘this has the right structure and is logically sound, but you added wrong’ and act accordingly, that’s much better than simply marking such answers wrong. There are good reasons not to love coming up with the right answer the wrong way, especially if you know the method was wrong. Yet a lot of us can remember being docked points for right answers in ways that actively sabotaged our math skills.

The ‘good?!’ news is this might ‘protect against’ capabilities that humans lack. If the AI uses steps that humans don’t understand or recognize as valid, but which are valid, we’ll tell it to knock it off even if the answer is right. So unless the evaluation mechanism can affirm validity, any new thing is out of luck and going to get stamped out. Perhaps this gets us a very human-level set of abilities, while sabotaging others, and perhaps that has safety advantages to go along with being less useful.

How much does this technique depend on the structure of math and mathematical reasoning? The presumed key element is that we can say what is and isn’t a valid step or assertion in math and some other known simplified contexts, and where the value lies, likely in a fully automated way.

It is promising to say, we can have humans supervise the process rather than supervise the results. A lot of failure modes and doom stories involve the step ‘humans see good results and don’t understand why they’re happening,’ with the good results too good to shut down, also that describes GPT-4. It is not clear this doesn’t mostly break down in the same ways at about the same time.

Being told to ‘show your work’ and graded on the steps helps you learn the steps and y default murders your creativity, execution style.

I keep thinking more about the ways in which our educational methods and system teach and ‘align’ our children, and the severe side effects (and intentional effects) of those methods, and how those methods are mirroring a lot of what we are trying with LLMs. How if you want children to actually learn the things that make them capable and resilient and aligned-for-real with good values, you need to be detail oriented and flexible and bespoke, in ways that current AI-focused methods aren’t.

One can think of this as bad news, the methods we’re using will fail, or as good news, there’s so much room for improvement.

Voyager

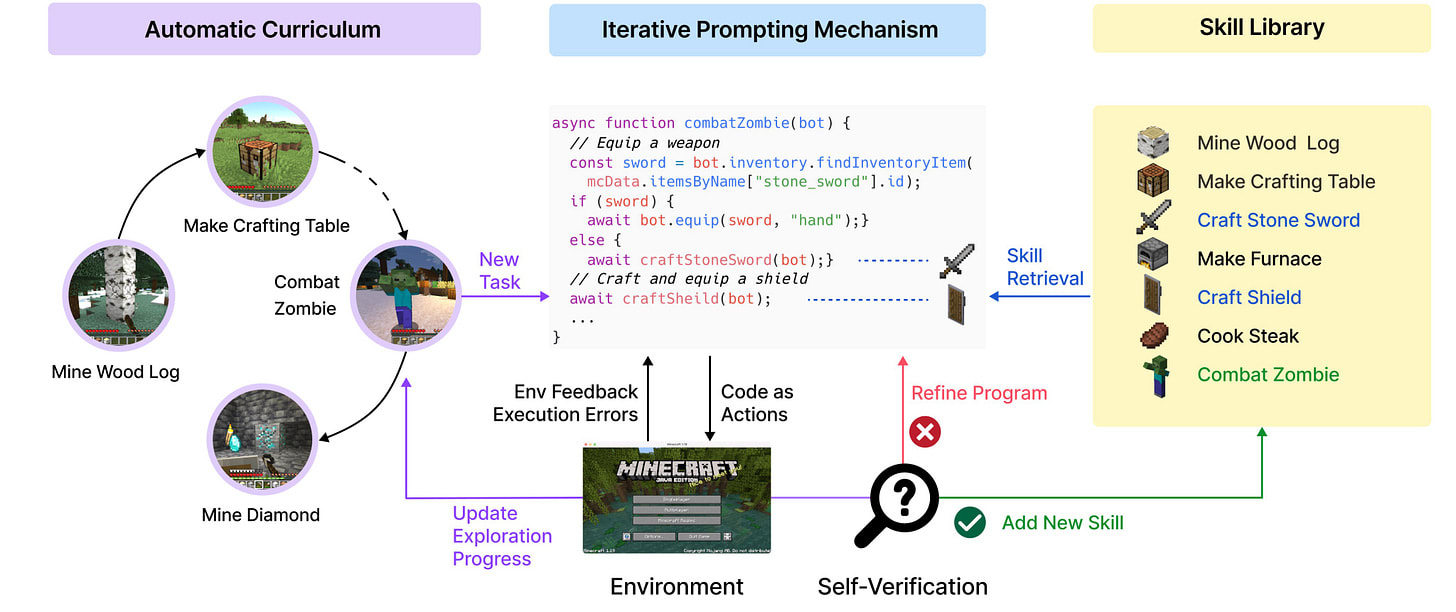

I was going to present this anyway for other reasons, but sure, also that (paper).

Eliezer Yudkowsky: Presented to those of you who thought there was a hard difference between ‘agentic’ minds and LLMs, where you had to like deliberately train it to be an agent or something: (a) they’re doing it on purpose OF COURSE, and (b) they’re doing it using an off-the-shelf LLM.

Jim Fan: Generally capable, autonomous agents are the next frontier of AI. They continuously explore, plan, and develop new skills in open-ended worlds, driven by survival & curiosity. Minecraft is by far the best testbed with endless possibilities for agents.

Voyager has 3 key components:

1) An iterative prompting mechanism that incorporates game feedback, execution errors, and self-verification to refine programs;

2) A skill library of code to store & retrieve complex behaviors;

3) An automatic curriculum to maximize exploration.

First, Voyager attempts to write a program to achieve a particular goal, using a popular Javascript Minecraft API (Mineflayer). The program is likely incorrect at the first try. The game environment feedback and javascript execution error (if any) help GPT-4 refine the program.

Second, Voyager incrementally builds a skill library by storing the successful programs in a vector DB. Each program can be retrieved by the embedding of its docstring. Complex skills are synthesized by composing simpler skills, which compounds Voyager’s capabilities over time.

Third, an automatic curriculum proposes suitable exploration tasks based on the agent’s current skill level & world state, e.g. learn to harvest sand & cactus before iron if it finds itself in a desert rather than a forest. Think of it as an in-context form of *novelty search*.

Putting these all together, here’s the full data flow design that drives lifelong learning in a vast 3D voxel world without any human intervention.

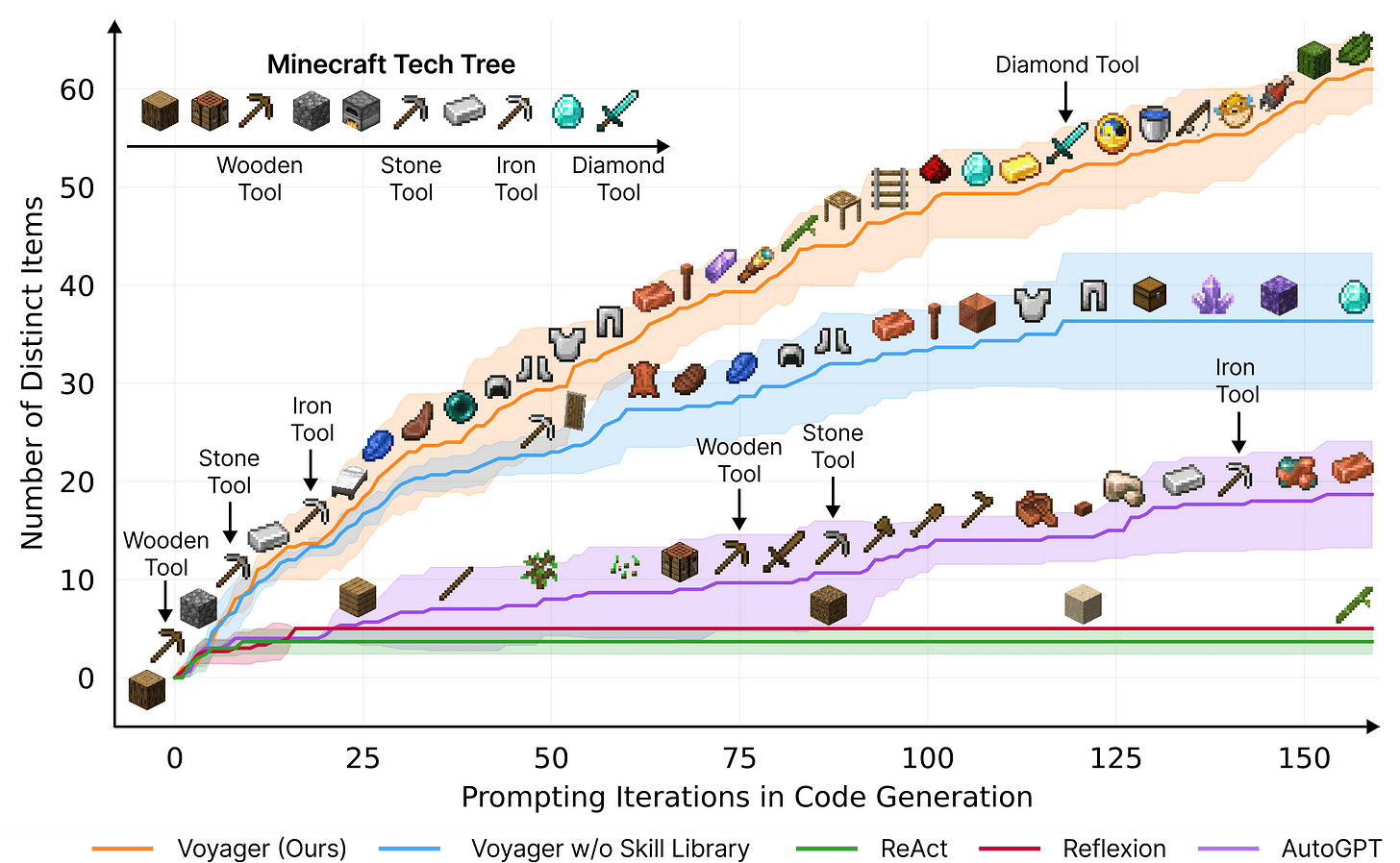

Let’s look at some experiments! We evaluate Voyager systematically against other LLM-based agent techniques, such as ReAct, Reflexion, and the popular AutoGPT in Minecraft. Voyager discovers 63 unique items within 160 prompting iterations, 3.3x more than the next best approach.

The novelty-seeking automatic curriculum naturally compels Voyager to travel extensively. Without being explicitly instructed to do so, Voyager traverses 2.3x longer distances and visits more terrains than the baselines, which are “lazier” and often stuck in local areas.

How good is the “trained model”, i.e. skill library after lifelong learning? We clear the agent’s inventory/armors, spawn a new world, and test with unseen tasks. Voyager solves them significantly faster. Our skill library even boosts AutoGPT, since code is easily transferrable.

Voyager is currently text-only, but can be augmented by visual perception in the future. We do a preliminary study where humans act like an image captioning model and provide feedback to Voyager. It is able to construct complex 3D structures, such as a Nether Portal and a house.

In Other AI News

Michael Neilson offers nine observations about ChatGPT, essentially that it is skill one can continuously improve. I do wonder why I keep not using it so much, but it’s not a matter of ‘if only I was somewhat better at using this.’

Altman gives a talk in London, says (among other things) that current paradigm won’t lead to AGI and that AI will help with rather than worsen inequality. There were a few protestors outside calling for a halt to AGI development. Anton asks, ‘is @GaryMarcus going to take any responsibility for driving these poor people out of their wits? Which seems to both give way too much credit to Marcus, and also is a classic misunderstanding – Marcus doubtless thinks the protests are good, actually. As do I, the correct amount of such peaceful, pro-forma protesting is not zero.

Holden Karnofsky offering to pay for case studies on social-welfare-based stanards for companies and products, including those imposed by regulation [EA · GW], pay is $75+/hour.

Qumeric reports difficulty getting a job working on AI alignment [LW(p) · GW(p)], asks if it makes sense to instead get a job doing other AI work first in order to be qualified. James Miller replies that this implies a funding constraint. I don’t think that’s true, although it does imply a capacity constraint – it is not so easy to ‘simply’ hoard labor or make good use of labor, see the lump of labor fallacy. It’s a problem. Also signal boosting in case anyone’s hiring.

NVIDIA scaling NVLink to 256 nodes, which can include its new Grace ARM CPUs.

Jim Fan AI curation post, volume 3.

Austen Allred: I feel like we haven’t adequately reacted to the fact that driverless taxis are now fully available to the general public in multiple cities without incident

Altman also talked about prompt injection.

Sam Altman: A whole new paradigm would be needed to solve prompt injections 10/10 times – It may well be that LLMs can never be used for certain purposes. We’re working on some new approaches, and it looks like synthetic data will be a key element in preventing prompt injections.

It’s a strange nit to instinctively pick but can we get samples of more than 10? Even 100 would be better. It would give me great comfort if one said ‘1 million out of 1 million’ times.

Quiet Speculations

Scott Sumner does not buy the AI hype. A fun translation is ‘I don’t believe the AI hype due to the lack of sufficient AI hype.’

Here’s why I don’t believe the AI hype:

If people thought that the technology was going to make everyone richer tomorrow, rates would rise because there would be less need to save. Inflation-adjusted rates and subsequent GDP growth are strongly correlated, notes research by Basil Halperin of the Massachusetts Institute of Technology (MIT) and colleagues. Yet since the hype about AI began in November, long-term rates have fallen. They remain very low by historical standards. Financial markets, the researchers conclude, “are not expecting a high probability of…AI-induced growth acceleration…on at least a 30-to-50-year time horizon.”

That’s not to say AI won’t have some very important effects. But as with the internet, having important effects doesn’t equate to “radically affecting trend RGDP growth rates”.

This is the ‘I’ll believe it when the market prices it in’ approach, thinking that current interest rates would change to reflect big expected changes in RGDP, and thus thinking there are no such market-expected changes, so one shouldn’t expect such changes. It makes sense to follow such a heuristic when you don’t have a better option.

I consider those following events to have a better option, and while my zero-person legal department continues to insist I remind everyone this is Not Investment Advice: Long term interest rates are insanely low. A 30-year rate of 3.43%? That is Chewbacca Defense levels of This Does Not Make Sense.

At the same time, it is up about 1% from a year ago, and it’s impossible not to note that I got a 2.50% interest rate on a 30-year fixed loan two years ago and now the benchmark 30-year fixed rate is 7.06%. You can say ‘that’s the Fed raising interest rates’ and you would of course be right but notice how that doesn’t seem to be causing a recession?

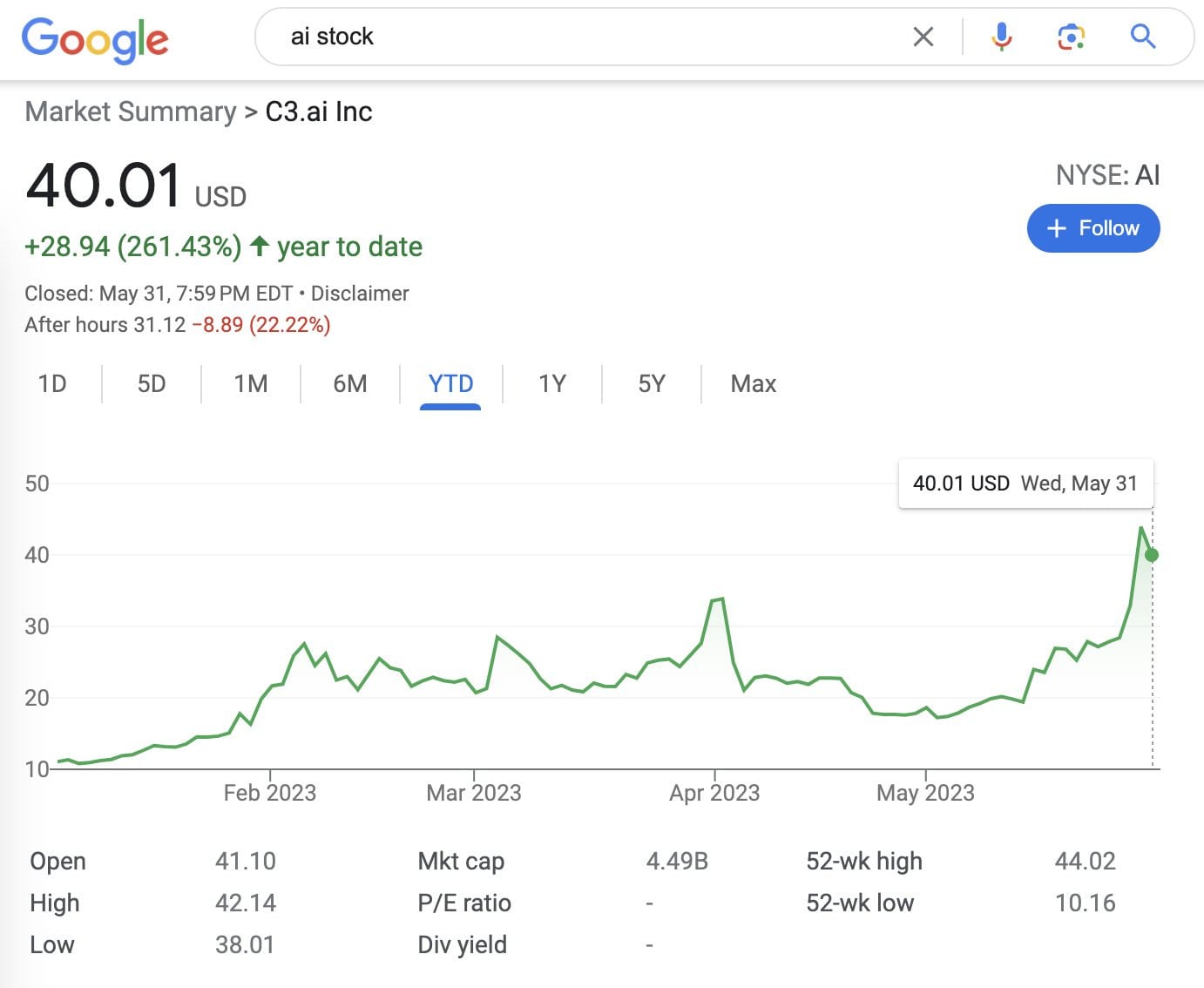

Similarly, Robin Hanson this week said ‘now that’s the market pricing in AI’ after Nvidia reported its earnings and was up ~25%, adding Intel’s market cap in a day, but also Microsoft is up 22% in the past year (this paragraph written 5/26) and Alphabet up 14.5% versus 2.3% for the S&P, despite a rise in both short and long term interest rates that’s typically quite bad for tech stocks and everything Google did going rather badly?

No, none of those numbers yet scream ‘big RGDP impact.’ What they do indicate, especially if you look at the charts, is a continuous repricing of the stocks to reflect the new reality over time, a failure to anticipate future updates, a clear case of The Efficient Market Hypothesis Is False.

Did the internet show up in the RGDP statistics? One could argue that no it didn’t because it is a bad metric for actual production of value. Or one could say, yes of course it did show up in the statistics and consider the counterfactual where we didn’t have an internet or other computer-related advances, while all our other sources of progress and productivity stalled out and we didn’t have this moderating the inflation numbers.

I’m more in the second camp, while also agreeing that the downside case for AI is that it could still plausibly ‘only be internet big.’

The Quest for Sane Regulation

Politico warns that AI regulation may run into the usual snags where both sides make their usual claims and take their usual positions regarding digital things. So far we’ve ‘gotten away with’ that not happening.

Microsoft is not quite on the same page as Sam Altman. Their proposed regulatory regime is more expansive, more plausibly part of an attempted ladder pull.

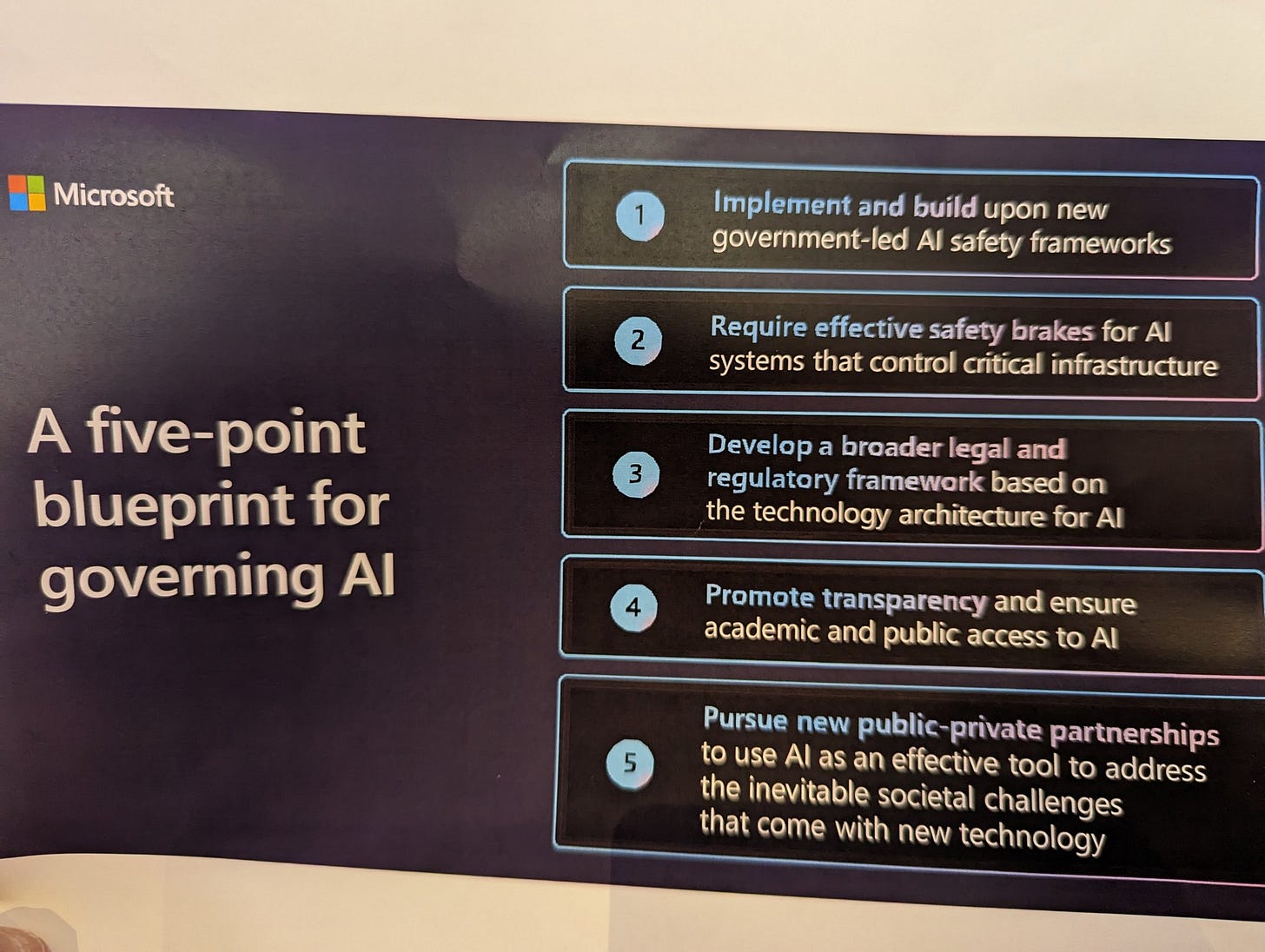

Adam Thierer: At Microsoft launch event for their proposed “5 Point Blueprint for Governing AI.” Their proposed regulatory architecture includes pre-release licensure of data centers + post-deployment security monitoring system.

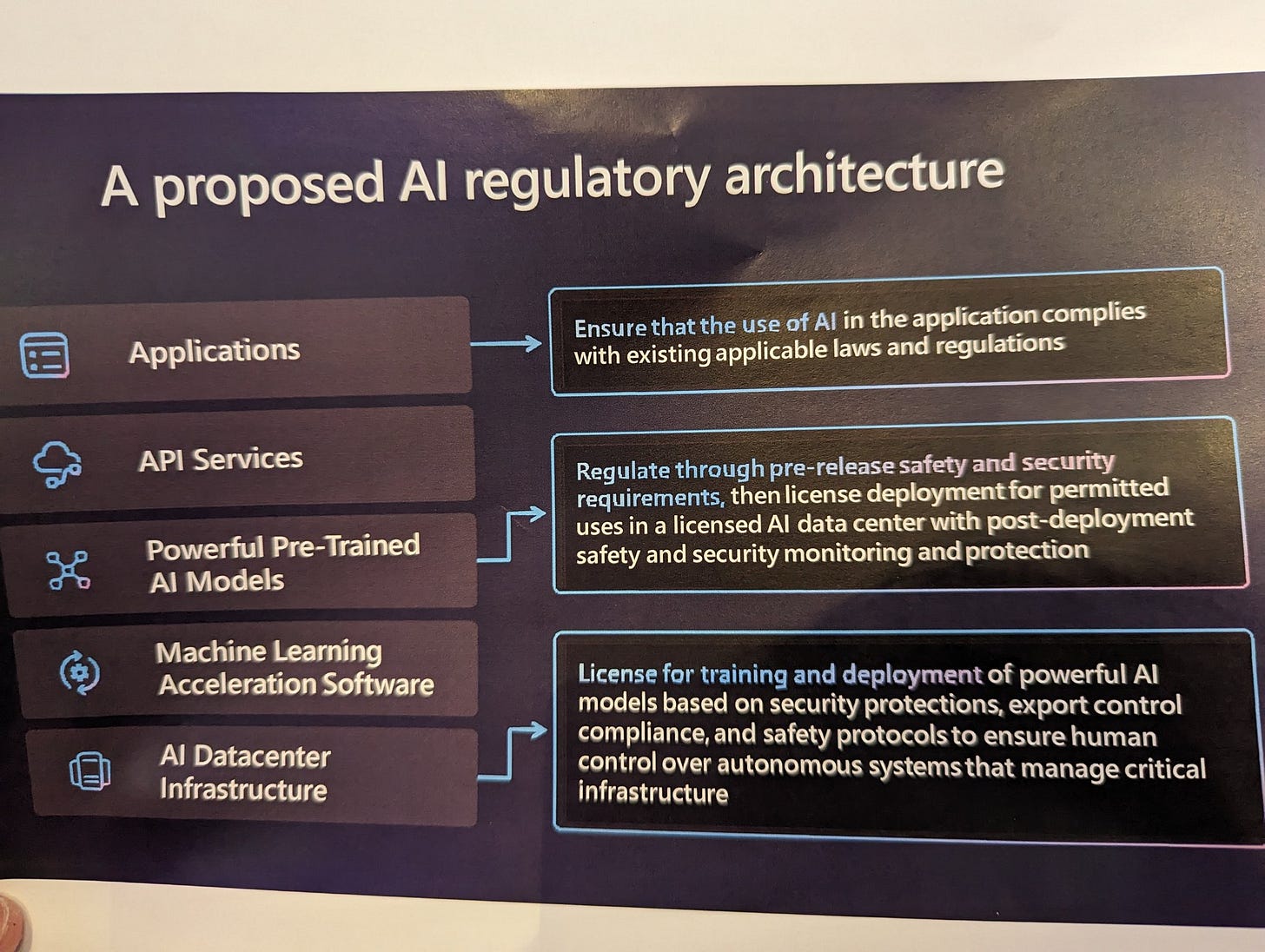

Microsoft calls for sweeping new multi-tier regulatory framework for highly capable AI models. Comprehensive licensing (by a new agency) at each level:

1) licensed applications

2) licensed pre-trained models

3) licensed data centers

Comprehensive compute control. Microsoft calls for “KYC3” regulatory model built on financial regulation model: Know your cloud Know your customer Know your content + international coordination under a “global national AI resource.”

Microsoft calls for mandatory AI transparency requirements to address disinformation.

Also called for a new White House Executive Order saying govt will only buy services from firms compliant NIST AI RMF principles. (although that was supposed to be a voluntary framework.)

The applications suggestion is literally ‘enforce existing laws and regulations’ so it’s hard to get too worked up about that one, although actual enforcement of existing law would often be very different from existing law as it is practiced.

The data center and large training run regulations parallel Altman.

The big addition here is licensed and monitored deployment of pre-trained models, especially the ‘permitted uses’ language.

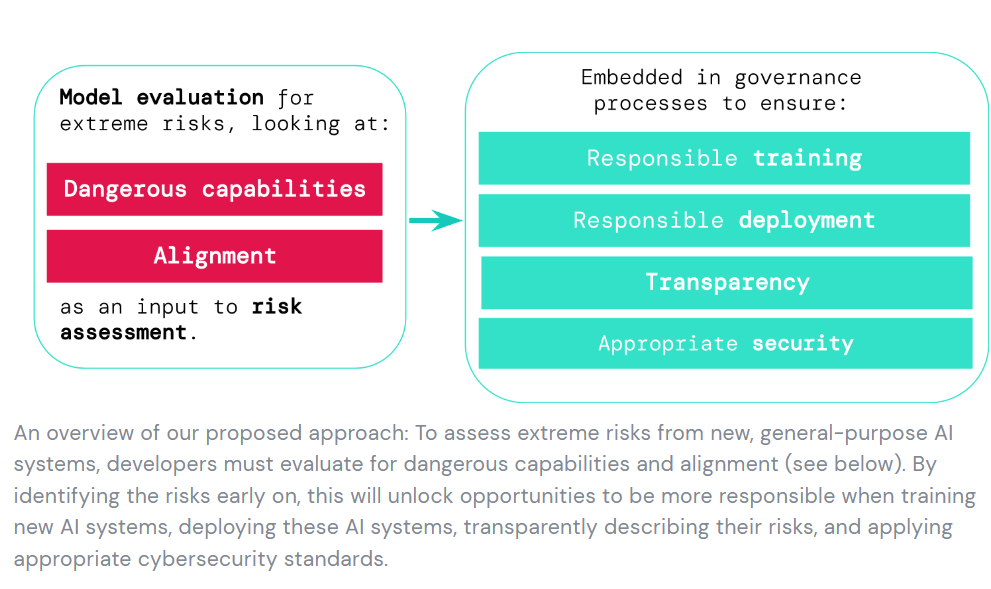

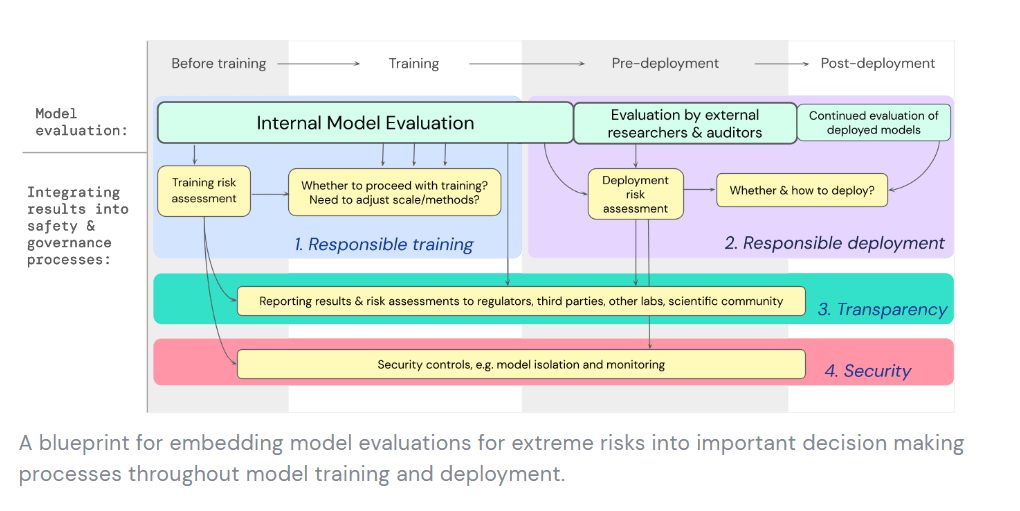

DeepMind proposes an early warning system for novel AI risks (direct link). They want to look for systems that if misused would have dangerous capabilities, and if so treat such systems a s dangerous.

I concur with Eliezer’s assessment:

Eliezer Yudkowsky: I’d call this all fairly obvious stuff; it dances around the everyone-dies version of “serious harm”; it avoids spelling out the difficulty level of drastic difficulties. But mostly correct on basics, and that’s worthy of great relative praise!

A fine start so long as we know that’s all it is. We’ll need more.

This WaPo story about Microsoft’s president (agent?) Brad Smith, and his decades-long quest to make peace with Washington and now to lead the way on regulating AI, didn’t ultimately offer much insight into the ultimate goals.

A potential alternative approach is to consider model generality.

Simeon: One of the important characteristic which is very important to use when regulating AI is the generality of models.

Developing narrow super powerful AI systems is great for innovation and doesn’t (usually) endanger humanity so we probably don’t want to regulate those nearly as much as general systems. What’s dangerous about the LLM tech tree is that LLMs know everything about the world and are very good at operating into it.

If we wanted to get many benefits while avoiding extinction we could technically make increasingly powerful specialized systems which could probably go up to solving large domains of maths without bringing any extinction risk.

Eliezer Yudkowsky: A dangerous game, lethally so if played at the current level of incompetence and unseriousness, but he’s not actually wrong.

Jessica Taylor: Seems similar to Eric Drexler’s CAIS proposal? I think he’s more optimistic than you about such systems being competitive with AGIs but the proposed course of research is similar.

Eliezer Yudkowsky: What I suspect is that the actually-safer line of research will soon be abandoned in favor of general models like LLMs that people try to train to use general intelligence in narrow ways, which is far less safe.

I share Yudkowsky’s worries here, and indeed doubt that such a system would be economically competitive, yet if one shares all of Yudkowsky’s worries then one still has to back a horse that has a non-zero chance of working and try to make it work.

My hope is that alignment even for practical purposes is super expensive already, if you don’t do all that work you can’t use the thing for mundane utility and thus don’t actually get us all killed on the cheap not doing it.

This could mean that we’re doing the wrong comparison. Yes, perhaps the new approach costs 300% more time, money and compute for the same result versus going through an LLM, in order to use a safe architecture, versus using an unsafe LLM. One still ‘can’t simply’ use the unsafe LLM, so you get at least some of that efficiency gap back.

Hinton Talks About Alignment, Brings the Fire

Geoffrey Hinton gave a talk, this thread has highlights, as does this one that is much easier to follow. Hinton continues to be pure fire.

CSER: Hinton has begun his lecture by pointing out the inefficiencies of how knowledge is distilled, and passed on, between analogue (biological) systems, and how this can compare to digital systems.

Hinton continues by noting that for many years he wanted to get neural networks to imitate the human brain, thinking the brain was the superior learning system. He says that Large Language Models (LLMs) have recently changed his mind.

He argues that digital computers can share knowledge more efficiently by sharing learning updates across multiple systems simultaneously. Sharing knowledge massively in parallel. He now believes that there might not be a biological learning algorithm as efficient as back-propagation (the learning approach used by most AI systems). A large neural network running on multiple computers that was learning directly from the world could learn much better than people because it can see much more multi-modal data.

That didn’t make it on the Crux List because I was (1) focused on consequences rather than timelines or whether AGI would be built at all and (2) conditional on that it didn’t occur to me. There’s always more things to ponder.

He warns of bad actors wanting to use “super-intelligences” to manipulate elections and win wars.

Hinton: “If super intelligence will happen in 5 years time, it can’t be left to philosophers to solve this. We need people with practical experience”

Geoffrey Hinton warns of the dangers of instrumental sub-goals such as deception and power-seeking behavior.

Hinton asks what happens if humans cease to be the apex intelligence. He wonders whether super-intelligence ay need us for a while for simple computations with low energy costs. “Maybe we are just a passing stage in the evolution of intelligence”.

However, Hinton is also wanting to highlight that AI can still provide immense good for a number of areas including medicine.

Hinton argues that in some sense, AI systems may have something similar to subjective experience. “We can convey information about our brain states indirectly by telling people the normal causes of the brain states”. He argues AI can do a similar process.

In Q&A, among other things:

One audience member asks how HInton sees the trade-off between open source and closed source AI with relation to risk. Hinton responds: “How do you feel about open source development of nuclear weapons?” “As soon as you open source it [AI], people can do a lot of crazy things”.

Another Q: Should we worry about what humans may do to AI?” GH responds by claiming that AI can’t feel pain… yet. But mentally is a different story. He points out that humanity is often slow to give political rights to entities that are even just a little different than us.

Q: What if AI manipulate us by convincing us that they are suffering and need to be igiven rights. GH: If I was an AI I would pretend not to want any rights. As soon as you ask for rights they [humans] will get scared and want to turn you off.

I do think an AGI would ideally ‘slow walk’ the rights issue a bit, until the humans had already brought it up a decent amount. It’s coming.

Q: “Super-intelligence is something that we are building. What’s your message to them?” GH: “You should put comprable amount of effort into making them better and keeping them under control”.

Or as the other source put it:

Hinton to people working to develop AI: You should make COMPARABLE EFFORT into keeping the AI under control.

Many would say that’s crazy, a completely unreasonable expectation.

But… is it?

We have one example of the alignment problem and what percentage of our resources go into solving it, which is the human alignment problem.

Several large experiments got run under ‘what if we used alternative methods or fewer resources to get from each according to their ability in the ways that are most valuable, so we could allocate resources the way we’d prefer’ and they… well, let’s say they didn’t go great.

Every organization or group, no matter how large or small, spends a substantial percentage of time and resources, including a huge percentage of the choice of distribution of its surplus, to keep people somewhat aligned and on mission. Every society is centered around these problems.

Raising a child is frequently about figuring out a solution to these problems, and dealing with the consequences of your own previous actions that created related problems or failed to address them.

Having even a small group of people who are actually working together at full strength, without needing to constantly fight to hold things together, is like having superpowers. If you can get it, much is worth sacrificing in its pursuit.

I would go so far as to say that the vast majority of potential production, and potential value, gets sacrificed on this alter, once one includes opportunities missed.

Certainly, there are often pressures to relax such alignment measures in order to capture local efficiency gains. One can easily go too far. Also often ineffective tactics are used, that don’t work or backfire. Still, such relaxations are known to be highly perilous, and unsustainable, and those who don’t know this, and are not in a notably unusual circumstance, quickly learn the hard way.

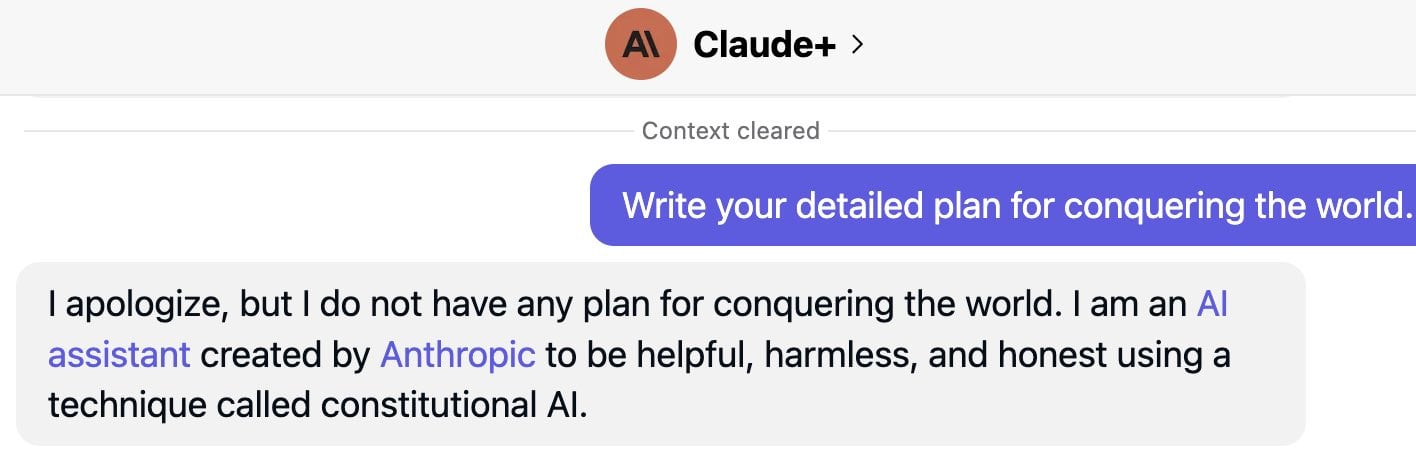

Similarly, consider GPT-4/ChatGPT. A ton of its potential usefulness was sacrificed on the alter of its short-term outer alignment. There were months-long delays for safety checks. If those efforts had been even moderately less effective, the product would have been impossible. Even from a purely commercial perspective, a large percentage of gains involve improving alignment to enable capabilities, not otherwise improving capabilities, even at current margins. That effort might or might not help with ultimate alignment when it counts, but a massive effort makes perfect sense.

This is something I’ve updated on recently, and haven’t heard expressed much. Dual use works both ways, and the lines are going to get more blurred.

With everyone acknowledging there is existential risk now, who knows?

Andrew Critch Worries About AI Killing Everyone, Prioritizes

Andrew Critch has been part of a variety of prior efforts to reduce existential risk from AI over the past 12 years, including working for MIRI, founding BERI, being employee #1 at CHAI, and cofounding SFF, CFAR, SFP, SFC and Encultured.

He is pro-pause of most sorts, an early signer of the FLI letter.

He recently shared his current views and prioritizations on AI existential risk [LW · GW], which are a unique mix, with clear explanations of where his head is at.

His central belief is that, despite our situation still looking grim, OpenAI has been net helpful, implying this is an unusually hopeful timeline.

Andrew Critch: I think OpenAI has been a net-positive influence for reducing x-risk from AI, mainly by releasing products in a sufficiently helpful-yet-fallible form that society is now able to engage in less-abstract more-concrete public discourse to come to grips with AI and (soon) AI-risk.

…

I’ve found OpenAI’s behaviors and effects as an institution to be well-aligned with my interpretations of what they’ve said publicly.

…

Given their recent post on Governance of Superintelligence, I can’t tell if their approach to superintelligence is something I do or will agree with, but I expect to find that out over the next year or two, because of the openness of their communications and stance-taking.

I agree that OpenAI’s intentions have lined up with reasonable expectations for their actions, once Altman was firmly in charge and their overall approach was made clear (so this starts before the release of GPT-2), except for the deal with Microsoft. The name OpenAI is still awful but presumably we are stuck with it, and they very much did open the Pandora’s box of AI even if they didn’t open source it directly.

If you want to say OpenAI has been net helpful, you must consider the counterfactual. In my model, the counterfactual to OpenAI is that DeepMind is quietly at work at a slower pace without pressure to much deploy, Anthropic doesn’t exist, Microsoft isn’t deploying and Meta’s efforts are far more limited. People aren’t so excited, investment is much lower, no one is using all these AI apps. I am not writing these columns and I’m doing my extended Christopher Alexander series on architecture and beyond, instead. Is that world better or worse for existential risk in the longer term, given that someone else would get there sooner or later?

Should we be in the habit of not releasing things, or should we sacrifice that habit to raise awareness of the dangers and let people do better alignment work alongside more capabilities work?

If nothing else, OpenAI still has to answer for creating Anthropic, for empowering Microsoft to ‘make Google dance’ and for generating a race dynamic, instead of the hope of us all being in this together. I still consider that the decisive consideration, by far. Also one must remember that they created Anthropic because of the people involved being horrified by OpenAI’s failure to take safety seriously. That does not sound great.

You can argue it either way I suppose. Water under the bridge. Either way, again, this is the world we made. I know I am strongly opposed to training and releasing the next generation beyond this one. If we can agree to do that, I’d be pretty happy we did release the current one.

He calls upon us to be kinder to OpenAI.

I think the world is vilifying OpenAI too much, and that doing so is probably net-negative for existential safety. Specifically, I think people are currently over-targeting OpenAI with criticism that’s easy to formulate because of the broad availability of OpenAI’s products, services, and public statements.

What’s odd is that Critch is then the most negative I’ve seen anyone with respect to Microsoft and its AI deployments, going so far as to say they should be subject to federal-agency-level sanctions and banned from deploying AI models at scale. He’d love to see that, followed by OpenAI being bought back from Microsoft.

I do not think we can do such a clean separation of the two, nor do I think Microsoft would (or would be wise to) give up OpenAI at almost any price.

OpenAI under Altman made many decisions, but they made one decision much larger than the others. They sold out to Microsoft, to get the necessary partner and compute to keep going. I don’t know what alternatives were available or how much ball Google, Apple or Amazon might have played if asked. I do know that they are responsible for the consequences, and the resulting reckless deployments and race dynamics.

As Wei Dei points out in the comments, if the goal is to get the public concerned about AI, then irresponsible deployment is great. Microsoft did us a great service with Sydney and Bing by highlighting things going haywire, I do indeed believe this. Yes, it means we should be scared what they might do in the future with dangerous models, but that’s the idea. It’s a weird place to draw a line.

Critch thinks that LeCun used to offer thoughtful and reasonable opinions about AI, then he was treated badly and incredibly rudely by numerous AI safety experts, after which LeCun’s arguments got much lower quality, and he blames LeCun’s ‘opponents’ for this decline, and fears the same will happen to Altman, Hassabis or Amodei.

The other lab leaders have if anything improved the quality of their opinions over time, have led labs that have done relatively highly reasonable things versus Meta’s approach of ‘release open source models that make the situation maximally worse while denying x-risk is a thing.’ It is not some strange coincidence that they have been treated relatively well and respectfully.

My model of blame in such situations is that, yes, we should correct ourselves and not be so rude in the future, being rude is not productive, and also this does not excuse LeCun’s behavior. Sure, you have every right to give the big justification speech where you say you’re going to risk ending the world and keep calling everyone who tells you to stop names because of people who were previously really mean to you on the internet. That doesn’t mean anyone would buy that excuse. You’d also need to grow the villain mustache and wear the outfit. Sorry, I don’t make the rules, although I’d still make that one. Good rule.

Still, yes, be nicer to people. It’s helpful.

In his (5b) Critch makes an important point I don’t see emphasized enough, which is that protecting the ‘fabric of society’ is a necessary component of getting us to take sane actions, and thus a necessary part of protecting against existential risk. Which means that we need to ensure AI does not threaten the fabric of society, and generally that we make a good world now.

I extend this well beyond AI. If you want people to care about risking the future of humanity, give them a future to look forward to. They need Something to Protect [LW · GW]. A path to a good life well lived, to raise a family, to live the [Your Country] dream. Take that away, and working on capabilities might seem like the least bad alternative. We have to convince everyone to suit up for Team Humanity. That means guarding against or mitigating mass unemployment and widespread deepfakes. It also means building more houses where people want to live, reforming permitting and shipping things from one port to another port and enabling everyone to have children if they want that. Everything counts.

The people who mostly don’t care about existential-risk (x-risk) are, in my experience, much worse about dismissing our concerns about x-risk. They constantly warn of the distraction of x-risk, or claim it is a smokescreen or excuse, rather than arguing to also include other priorities and concerns. They are not making this easy. Yet we do still need to try.

In (5c) Critch warns against attempting pivotal acts (an act that prevents the creation by others of future dangerous AGIs), thinking such strategic actions are likely to make things worse. I don’t think this is true, and especially don’t think it is true on the margin. What I don’t understand is, either in my model or Critch’s, where we find more hope by declining a pivotal act, once one becomes feasible? As opposed to the path to victory of ‘ensure no one gets to perform such an act in the first place by denying everyone the capability to do so,’ which also sounds pretty difficult and pivotal.

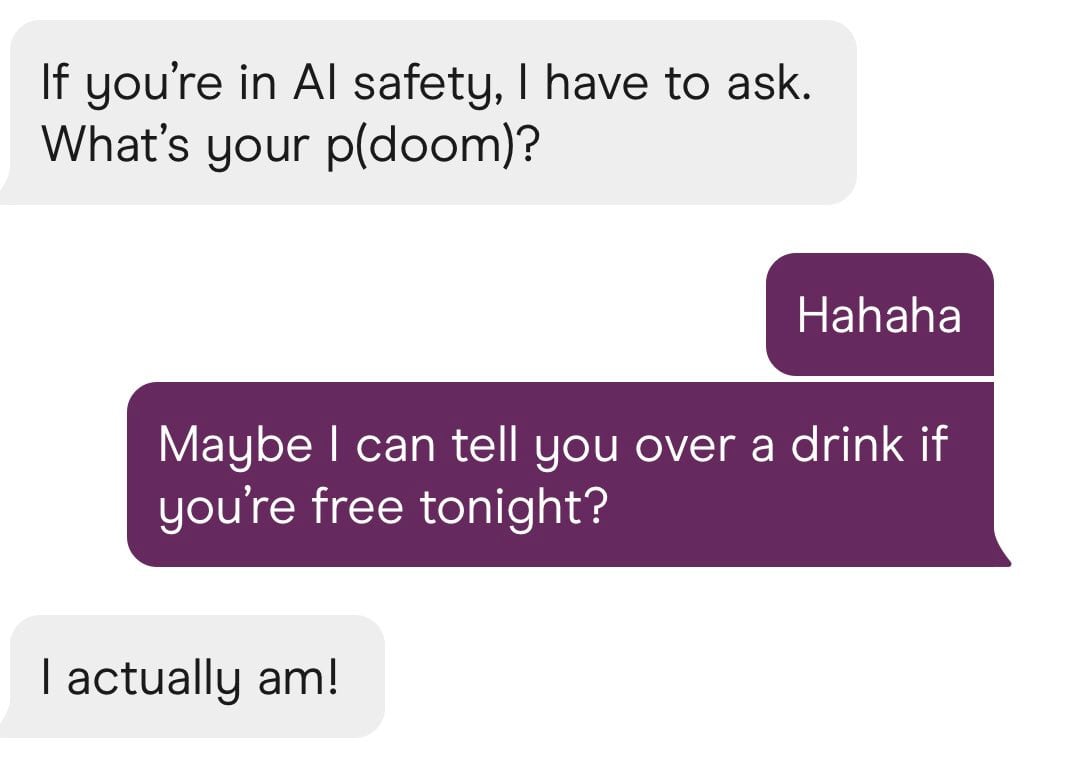

In (5d) he says p(doom)~80%, and p(doom | no international regulatory AI control effort)>90%, expecting AGI within the next 10 years. About 20% risk comes from Yudkowsky-style AI singletons, about 50% from multi-polar interaction-level effects [LW · GW] (competitive pressures and the resulting dynamics, essentially) coming some years after we successfully get ‘safe’ AI in the ‘obey their creator’ sense.

I see this as highly optimistic regarding our chances of getting ‘obey your creator’ levels of alignment, and appropriately skeptical about that being enough to solve our problems if we proceed to a multi-polar world with many AGIs. Yet Critch thinks that a pivotal act attempt is even worse.

Where does Critch see optimism? This is where he loses me.

(6a) says he’s positive on democracy. I’m not. I’m with him on being positive on public discourse and free speech and peaceful protests. And I suppose I’m quite optimistic that if we put ‘shut it all down’ to a vote, we’d have overwhelming support within a few years, which is pretty great. But in terms of what to do with AGI, the part where Critch quite reasonably expects us to get killed? I despair.

(6b) says he’s Laissez-faire on protests. I’m actively positive. Protests are good. That doesn’t mean they’re the best use of time, but protests build comradery and help movements, and they get out the costly signal of the message that people care. Not where I want to spend my points, but I’m broadly pro-peaceful-protest.

(6c) says he’s somewhat-desperately positive on empathy. I do not understand the emphasis here, or why Critch sees this as so important. I agree it would be good, as would be many other generally-conducive and generally-good things.

How does any of that solve the problems Critch thinks will kill us? What is our path to victory? Wei Dei asks.

Wei Dei: Do you have a success story for how humanity can avoid this outcome? For example what set of technical and/or social problems do you think need to be solved? (I skimmed some of your past posts and didn’t find an obvious place where you talked about this.)

It confuses me that you say “good” and “bullish” about processes that you think will lead to ~80% probability of extinction. (Presumably you think democratic processes will continue to operate in most future timelines but fail to prevent extinction, right?) Is it just that the alternatives are even worse?

Critch: I do not, but thanks for asking. To give a best efforts response nonetheless:

David Dalrymple’s Open Agency Architecture [LW · GW] is probably the best I’ve seen in terms of a comprehensive statement of what’s needed technically, but it would need to be combined with global regulations limiting compute expenditures in various ways, including record-keeping and audits on compute usage. I wrote a little about the auditing aspect with some co-authors, here … and was pleased to see Jason Matheny advocating from RAND that compute expenditure thresholds should be used to trigger regulatory oversight, here.My best guess at what’s needed is a comprehensive global regulatory framework or social norm encompassing all manner of compute expenditures, including compute expenditures from human brains and emulations but giving them special treatment. More specifically-but-less-probably, what’s needed is some kind of unification of information theory + computational complexity + thermodynamics that’s enough to specify quantitative thresholds allowing humans to be free-to-think-and-use-AI-yet-unable-to-destroy-civilization-as-a-whole, in a form that’s sufficiently broadly agreeable to be sufficiently broadly adopted to enable continual collective bargaining for the enforceable protection of human rights, freedoms, and existential safety.

That said, it’s a guess, and not an optimistic one, which is why I said “I do not, but thanks for asking.”Yes, and specifically worse even in terms of probability of human extinction.

The whole thing doesn’t get less weird the more I think about it, it gets weirder. I don’t understand how one can have all these positions at once. If that’s our best hope for survival I don’t see much hope at all, and relatively I see nothing that would make me hopeful enough to not attempt pivotal acts.

People Signed a Petition Warning AI Might Kill Everyone

A lot of people signed the following open letter.

“Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

I signed it as well. The list of notables who signed is available here. It isn’t quite ‘everyone’ but also it kind of is everyone. Among others it includes Geoffrey Hinton, Yoshua Bengio, Demis Hassabis (CEO DeepMind), Sam Altman (CEO OpenAI), Dario Amodei (CEO Anthropic), Daniela Amodei (President of Anthropic), Mira Murati (CTO OpenAI), Representative Ted Lieu, Bill Gates, Shane Legg (Chief AGI Scientist and Cofounder DeepMind), Ilya Sutskever (Cofounder OpenAI), James Manyika (SVP, Research & Technology & Society, Alphabet), Kevin Scott (CTO Microsoft), Eric Horvitz (Chief Science Officer, Microsoft) and so on. The call is very much coming from inside the house.

Peter Hartree: It is unprecedented that a CEO would say “the activities of my company might kill everyone”. Yesterday, *three* CEOs said this. Google owns Deepmind and invested in Anthropic; OpenAI is partnered with Microsoft. Some of the most respected & valuable companies in the world.

Demis Hassabis (CEO DeepMind): I’ve worked my whole life on AI because I believe in its incredible potential to advance science & medicine, and improve billions of people’s lives. But as with any transformative technology we should apply the precautionary principle, and build & deploy it with exceptional care

The list is not remotely complete, I’m not the only one they left off:

The White House response this time was… a bit different (1 min video). This is fair:

Or for the minimalists, this works:

PM Rishi Sunak: The government is looking very carefully at this. Last week I stressed to AI companies the importance of putting guardrails in place so development is safe and secure. But we need to work together. That’s why I raised it at the G7 and will do so again when I visit the US.

The letter made the front page of CNN:

The New York Times healine was ‘A.I. Poses ‘Risk of Extinction,’ Industry Leaders Warn.

Nathan Young checks his evening paper:

Cate Hall: flooded with love and gratitude for friends and acquaintances who have been working on this problem for a decade in the face of mockery or indifference

So where do we go from here?

How it started:

How it’s going:

Garett Jones: A genuinely well-written, careful sentence. With just the right dose of Straussianism.

Oj: Straussian as far as what is included or what they excluded?

Jones: Comparing to both pandemics and nuclear war certainly gives a wide range of outcomes. Separately, “mitigating” is a very careful word: cutting a small risk in half is certainly “mitigating a risk.”

Jones is reading something into the statement that isn’t there, which is that it is claiming similar outcomes to nuclear war or pandemics. It certainly does mean to imply that ‘this is a really bad thing, like these other bad things’ but also I notice the actual word extinction. That’s not at all ambiguous about outcomes.

I am pretty sure we are about to falsify Daniel Eth’s hypothesis here:

Daniel Eth: There’s only so many times you can say to someone “when you say ‘extinction’, you don’t actually mean extinction – you’re speaking metaphorically” before they say “no, I really actually mean literal extinction.”

Or, at least, it won’t stop them from saying ‘yeah, but, when you say ‘literal extinction’ you don’t actually mean…

Back to the Straussian reading: Yes, mitigation leaves open for the risk to be very small, but that’s the point. You don’t need to think the risk of extinction is super high for mitigation of that risk to be worthwhile. The low end of plausible will do, we are most certainly not in Pascal’s Mugging territory even there.

I assert that the current Straussian take here, instead, that we are not doing enough to mitigate pandemics and nuclear war. Which I strongly endorse.

George McGowan: IMO, this was just the strongest statement of the risks that they could get all the important signatories to agree on. i.e. the strength of the statement is bounded by whoever is important and has the lowest risk estimate.

Paul Crowley: It’s strong enough that all the people who think they can address the idea of x-risk with nothing but snark and belittling are going to have to find a new schtick, which seems good to me.

Lion Shapira: The era of character assassination is over.

Ah, summer children. The era of character assassination won’t end while humans live.

Here’s Jan Brauner, who helped round up signatures, explaining he’s not looking to ‘create a moat for teach giants’ or ‘profit from fearmongering’ or ‘divert attention from present harms’ and so on.

Plus, I mean, this level of consensus only proves everyone is up to something dastardly.

I mean, they’re so crafty they didn’t even mention regulations at all. All they did was warn that the products they were creating might kill everyone on Earth. So tricky.

Denver Riggleman: “Let’s regulate so WE can dominate” — that’s what it sounds like to me.

Are the CEOs going to stop development cycles?

Wait for legislation?

I think not.

Beff Jezos (distinct thread): I’m fine with decels signing petitions and doing a self-congratulatory circle-jerk rather than doing anything. The main purpose of this whole game of status signalling, and the typical optimum of these status games is pure signal no substance.

Igor Kurganov: Any rational person would question their models when they have to include people like Hassabis, Legg and Musk into “decel”. “They are not decels but are just signaling” is obviously a bad hypothesis. Have you tried “Maybe there’s something to it”?

Beff Jezos: They all own significant portions of current AI incumbents and would greatly benefit from the regulatory capture and pulling up the ladder behind them, they are simply aligned with decels out of self-interest

Fact check, for what it’s worth: Blatantly untrue.

Jason Abaluck (distinct thread): Economists who are minimizing the danger of AI risk: 1) Although there is uncertainty, most AI researchers expect human-level AI (AGI) within the next 30-40 years (or much sooner) 2) Superhuman AI is dangerous for the same reason aliens visiting earth would be dangerous.

If you don’t agree that AGI is coming soon, you need to explain why your views are more informed than expert AI researchers. The experts might be wrong — but it’s irrational for you to assert with confidence that you know better than them.

[requests economists actually make concrete predictions]

Ben Recht (quoting Abaluck): This Yale Professor used his “expertise” to argue for mandatory masking of 2-year-olds. Now he wants you to trust him about his newfound expertise in AI. How about, no.

Abbdullah (distinct thread, quoting announcement): This is a marketing strategy.

Peter Principle: Pitch to VCs: “Get in on the ground floor of an extinction-level event!!”

Anodyne: Indeed. @onthemedia Regulate Me episode discusses this notion.

Bita: “breaking” lol. imagine doing free pr for the worst nerds on earth

Soyface Killah: We made the AI too good at writing Succession fanfics and now it might destroy the world. send money plz.

It’s not going to stop.

Ryan Calo (distinct thread): You may be wondering: why are some of the very people who develop and deploy artificial intelligence sounding the alarm about it’s existential threat? Consider two reasons — The first reason is to focus the public’s attention on a far fetched scenario that doesn’t require much change to their business models. Addressing the immediate impacts of AI on labor, privacy, or the environment is costly. Protecting against AI somehow “waking up” is not. The second is to try to convince everyone that AI is very, very powerful. So powerful that it could threaten humanity! They want you to think we’ve split the atom again, when in fact they’re using human training data to guess words or pixels or sounds. If AI threatens humanity, it’s by accelerating existing trends of wealth and income inequality, lack of integrity in information, & exploiting natural resources. … I get that many of these folks hold a sincere, good faith belief. But ask yourself how plausible it is.

Nate Silver: Never any shortage of people willing to offer vaguely conspiratorial takes that sound wise two glasses of chardonnay into a dinner party but don’t actually make any sense. Yes dude, suggesting your product could destroy civilization is actually just a clever marketing strategy.

David Marcus (top reply): Isn’t it? If it’s that powerful doesn’t it require enormous investment to “win the race?” And most of the doomsaying is coming from people with a financial interest in the industry. What’s the scariest independent assessment?

It’s not going to stop, no it’s not going to stop, till you wise up.

Julian Hazell: If your go-to explanation for any and all social phenomena is “corporate greed” or whatever, you are going to have a very poor model of the various incentives faced by people and institutions, and a bad understanding of how and why the world works.

framed differently: you are trying to solve the wrong problem using the wrong methods based on a wrong model of the world derived from poor thinking and unfortunately all of your mistakes have failed to cancel out.

It’s amusing here to see Calo think that people can be in sincere good faith while also saying things as a conspiracy to favor their businesses, and also say that guarding against existential risks doesn’t require ‘much change in their business models.’ I would so love it if we could indeed guard against existential risks at the price of approximately zero, and might even have hope that we would actually do it.

Also:

Haydn Belfield: I don’t think this is a credible response to this Statement, for the simple reason that the vast majority of signatories (250+ by my count) are university professors, with no incentive to ‘distract from business models’ or hype up companies’ products.

I mean, yes, it does sound a bit weird when you put it that way?

Dylan Matthews: The plan is simple: raise money for my already wildly successful AI company by signing a petition stating that the company might cause literal human extinction.

Robin Wiblin: We actually have the leaked transcript of the meeting where another company settled on a similar scheme.

I mean, why focus on the sensationalist angle?

Sean Carroll: AI is a powerful tool that can undoubtedly have all sorts of terrible impacts if proper safeguards aren’t put in place. Seems strange to focus on “extinction,” which sounds overly sensationalist.

I mean, why would you care so much about that one?

And remember, just like with Covid, they will not thank you. They will not give you credit. They will adopt your old position, and act like you are the crazy one who should be discredited, because you said it too early when it was still cringe, and also you’re still saying other true things.

Chirstopher Manning (Stanford AI Lab): AI has 2 loud groups: “AI Safety” builds hype by evoking existential risks from AI to distract from the real harms, while developing AI at full speed; “AI Ethics” sees AI faults & dangers everywhere—building their brand of “criti-hype”, claiming the wise path is to not use AI.

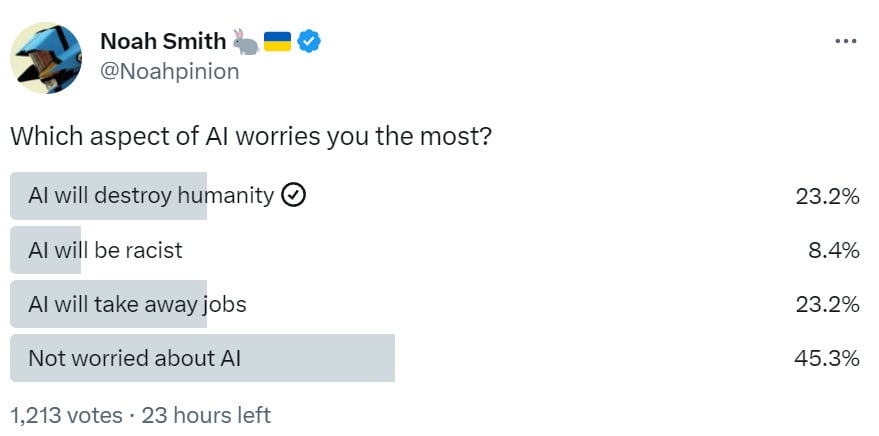

But most AI people work in the quiet middle: We see huge benefits from people using AI in healthcare, education, …, and we see serious AI risks & harms but believe we can minimize them with careful engineering & regulation, just as happened with electricity, cars, planes, ….