All AGI Safety questions welcome (especially basic ones) [April 2023]

post by steven0461 · 2023-04-08T04:21:36.258Z · 89 comments

tl;dr: Ask questions about AGI Safety as comments on this post, including ones you might otherwise worry seem dumb!


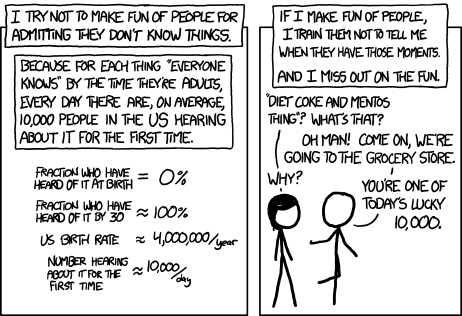

Asking beginner-level questions can be intimidating, but everyone starts out not knowing anything. If we want more people in the world who understand AGI safety, we need a place where it's accepted and encouraged to ask about the basics.

We'll be putting up monthly FAQ posts as a safe space for people to ask all the possibly-dumb questions that may have been bothering them about the whole AGI Safety discussion, but which until now they didn't feel able to ask.

It's okay to ask uninformed questions, and not worry about having done a careful search before asking.

AISafety.info - Interactive FAQ

Additionally, this will serve as a way to spread the word about the project Rob Miles' team[1] has been working on: Stampy, and his professional-looking face, aisafety.info. This will provide a single point of access into AI Safety, in the form of a comprehensive interactive FAQ with lots of links to the ecosystem. We'll be using questions and answers from this thread for Stampy (under these copyright rules), so please only post if you're okay with that!

You can help by adding questions (type your question and click "I'm asking something else") or by editing questions and answers. We welcome feedback and questions on the UI/UX, policies, etc. around Stampy, as well as pull requests to his codebase and volunteer developers to help with the conversational agent and front end that we're building.

We've got more to write before he's ready for prime time, but we think Stampy can become an excellent resource for everyone from skeptical newcomers, through people who want to learn more, right up to people who are convinced and want to know how they can best help with their skillsets.

Guidelines for Questioners:

- No previous knowledge of AGI safety is required. If you want to watch a few of the Rob Miles videos, or read either the WaitButWhy posts or The Most Important Century summary from OpenPhil's co-CEO first, that's great, but it's not a prerequisite for asking a question.

- Similarly, you do not need to try to find the answer yourself before asking a question (but if you want to test Stampy's in-browser TensorFlow semantic search, that might get you an answer quicker!).

- Also feel free to ask questions that you're pretty sure you know the answer to, but where you'd like to hear how others would answer the question.

- One question per comment if possible (though if you have a set of closely related questions that you want to ask all together that's ok).

- If you have your own response to your own question, put that response as a reply to your original question rather than including it in the question itself.

- Remember, if something is confusing to you, then it's probably confusing to other people as well. If you ask a question and someone gives a good response, then you are likely doing lots of other people a favor!

- In case you're not comfortable posting a question under your own name, you can use this form to send a question anonymously and I'll post it as a comment.

Guidelines for Answerers:

- Linking to the relevant answer on Stampy is a great way to help people with minimal effort! Improving that answer means that everyone going forward will have a better experience!

- This is a safe space for people to ask stupid questions, so be kind!

- If this post works as intended then it will produce many answers for Stampy's FAQ. It may be worth keeping this in mind as you write your answer. For example, in some cases it might be worth giving a slightly longer / more expansive / more detailed explanation rather than just giving a short response to the specific question asked, in order to address other similar-but-not-precisely-the-same questions that other people might have.

Finally: Please think very carefully before downvoting any questions; remember, this is the place to ask stupid questions!

[1] If you'd like to join, head over to Rob's Discord and introduce yourself!

89 comments


comment by burrito (jacob-friedman) · 2023-04-08T08:19:09.207Z

In My Childhood Role Model, Eliezer Yudkowsky says that the difference in intelligence between a village idiot and Einstein is tiny relative to the difference between a chimp and a village idiot. This seems to imply (I could be misreading) that {the time between the first AI with chimp intelligence and the first AI with village idiot intelligence} will be much larger than {the time between the first AI with village idiot intelligence and the first AI with Einstein intelligence}. If we consider GPT-2 to be roughly chimp-level, and GPT-4 to be above village idiot level, then it seems like this would predict that we'll get an Einstein-level AI within the next year or so. This seems really unlikely, and I don't think even Eliezer currently believes this. If my interpretation is correct, this seems like an important prediction that he got wrong and that I haven't seen acknowledged.

So my question is: Is this a fair representation of Eliezer's beliefs at the time? If so, has this prediction been acknowledged as wrong, or was it actually not wrong and there's something I'm missing? If the prediction was wrong, what might the implications be for fast vs slow takeoff? (Initial thoughts: If this prediction had been right, then we'd expect fast takeoff to be much more likely, because it seems like if you improve Einstein by the difference between him and a village idiot 20 times over 4 years (the time between GPT-2 and GPT-4, i.e. ~chimp level vs >village idiot level), you will definitely get a self-improving AI somewhere along the way.)

(Meta: I know this seems like more of an argument than a question; the reason I put it here is that I expect someone to have an easy/obvious answer, since I haven't really spent any time thinking about or working with AI beyond playing with GPT and watching/reading some AGI debates. I also don't want to pollute the discourse in threads where the participants are expected to at least kind of know what they're talking about.)

↑ comment by Viliam · 2023-04-08T15:46:35.081Z

This is a very good question! I can't speak for Eliezer, so the following are just my thoughts...

Before GPT, it seemed impossible to make a machine that is comparable to a human. In each aspect, it was either dramatically better, or dramatically worse. A calculator can multiply a billion times faster than I can, but it cannot write poetry at all.

So, when thinking about gradual progress, starting at "worse than human" and ending at "better than human", it seemed like... either the premise of gradual progress is wrong, and somewhere along the path there will be one crucial insight that will move the machine from dramatically worse than human to dramatically better than human... or if it indeed is gradual in some sense, the transition will still be super fast.

The calculator is the example of "if it is better than me, then it is way better than me".

The machines playing chess and Go are a mixed example. I suck at chess, so machines better than me already existed decades ago. But at some moment they accelerated and surpassed the actual experts quite fast. More interestingly, they surpassed the experts in a way more general than the calculator does; if I remember correctly, the machine that is superhuman at Go is very similar to the machine that is superhuman at chess.

The current GPT machines are something that I have never seen before: better than humans in some aspects, worse than humans in other aspects, both in the area of processing text. I definitely would not have predicted that. Without the benefit of hindsight, it feels just as weird as a calculator that would do addition faster than humans, but multiplication slower than humans and with occasional mistakes. This simply is not how I have expected programs to behave. If someone told me that they are planning to build a GPT, I would expect it to either not work at all (more likely), or to be superintelligent (less likely). The option "it works, kinda correctly, but it's kinda lame" was not on my radar.

I am not sure what this all means. My current best guess is that this is what "learning from humans" can cause: you can almost reach them, but cannot use this alone to surpass them.

The calculator is not observing millions of humans doing multiplication, and then trying to do something statistically similar. Instead, it has an algorithm designed from scratch that solves the mathematical tasks.

The chess and Go machines, they needed a lot of data to learn, but they could generate the data themselves, playing millions of games against each other. So they needed the data, but they didn't need the humans as a source of the data; they could generate the data faster.

The weakness of GPT is that if you already feed it the entire internet and all the books ever written, you cannot get more training data. Actually, you could, with ubiquitous eavesdropping... and someone is probably already working on this. But you still need humans as a source. You cannot teach GPT by texts generated by GPT, because unlike in chess and Go, you do not have the exact rules to tell you which generated outputs are the new winning moves, and which are nonsense.

There are of course other aspects where GPT can easily surpass humans: the sheer quantity of the text it can learn from and process. If it can write mediocre computer programs, then it can write mediocre computer programs in a thousand different programming languages. If it can make puns or write poems at all, it will evaluate possible puns or rhymes a million times faster, in any language. If it can match patterns, it can match patterns in the entire output of humanity; a new polymath.

The social consequences may be dramatic. Even if GPT is not able to replace a human expert, it can probably replace human beginners in many professions... but if the beginners become unemployable, where will the new experts come from? By being able to deal with more complexity, GPT can make society more complex, perhaps in a way that we will need GPT to navigate it. Would you trust a human lawyer to correctly interpret a 10000-page legal contract designed by GPT?

And yet, I wouldn't call GPT superhuman in the sense of "smarter than Einstein", because it also keeps making dumb mistakes. It doesn't seem to me that more input text or more CPU alone would fix this. (But maybe I am wrong.) It feels like some insight is needed instead. Though, that insight may turn out to be relatively trivial, like maybe just a prompt asking the GPT to reflect on its own words, or something like that. If this turns out to be true, then the distance between the village idiot and Einstein actually wasn't that big.

Or maybe we get stuck where we are, and the only progress will come from having more CPU, in which case it may take a decade or two to reach Einstein levels. Or maybe it turns out that GPT can never be smarter in certain sense than its input texts, though this seems unlikely to me.

tl;dr -- we may be one clever prompt away from Einstein, or we may need 1000× more compute, no idea

↑ comment by GoteNoSente (aron-gohr) · 2023-04-08T22:54:49.372Z

The machines playing chess and Go are a mixed example. I suck at chess, so machines better than me already existed decades ago. But at some moment they accelerated and surpassed the actual experts quite fast. More interestingly, they surpassed the experts in a way more general than the calculator does; if I remember correctly, the machine that is superhuman at Go is very similar to the machine that is superhuman at chess.

I think the story of chess- and Go-playing machines is a bit more nuanced, and that thinking about this is useful when thinking about takeoff.

The best chess-playing machines have been fairly strong (by human standards) since the late 1970s (Chess 4.7 showed expert-level tournament performance in 1978, and Belle, a special-purpose chess machine, was considered a good bit stronger than it). By the early 90s, chess computers at expert level were available to consumers at a modest budget, and the best machine built (Deep Thought) was grandmaster-level. It then took another six years for the Deep Thought approach to be scaled up and tuned to reach world-champion level. These programs were based on manually designed evaluation heuristics, with some automatic parameter tuning, and alpha-beta search with some manually designed depth extension heuristics. Over the years, people designed better and better evaluation functions and invented various tricks to reduce the amount of work spent on unpromising branches of the game tree.
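For readers unfamiliar with the classical recipe this comment describes (a heuristic evaluation function plus alpha-beta search), here is a minimal textbook alpha-beta sketch in Python. It is an illustration only, not code from Chess 4.7, Belle, Deep Thought, or Stockfish: the game tree is a hypothetical toy in which leaf numbers stand in for a hand-designed evaluation function, and it omits the depth extensions and other search tricks the comment mentions.

```python
import math

def alphabeta(node, depth, alpha, beta, maximizing, evaluate, children):
    """Minimax search with alpha-beta pruning.

    `evaluate` scores a position heuristically (standing in for a hand-tuned
    evaluation function); `children` returns the positions reachable in one move.
    """
    kids = children(node)
    if depth == 0 or not kids:
        return evaluate(node)
    if maximizing:
        value = -math.inf
        for child in kids:
            value = max(value, alphabeta(child, depth - 1, alpha, beta, False, evaluate, children))
            alpha = max(alpha, value)
            if alpha >= beta:  # the opponent will never allow this line: prune
                break
        return value
    value = math.inf
    for child in kids:
        value = min(value, alphabeta(child, depth - 1, alpha, beta, True, evaluate, children))
        beta = min(beta, value)
        if alpha >= beta:  # we already have a better option elsewhere: prune
            break
    return value

# Hypothetical toy tree: we move first (max), then the opponent replies (min).
tree = [[3, 5], [6, 9], [1, 2]]
evaluated = []

def evaluate(leaf):
    evaluated.append(leaf)  # record which leaves the search actually scored
    return leaf

def children(node):
    return node if isinstance(node, list) else []

best = alphabeta(tree, 2, -math.inf, math.inf, True, evaluate, children)
print(best, evaluated)  # 6 [3, 5, 6, 9, 1]
```

On this toy tree the search returns 6 without ever scoring the last leaf (2): once the third branch is known to be worth at most 1, it cannot beat the 6 already secured, so the rest of it is skipped. This is the kind of saving on unpromising branches of the game tree that the comment refers to.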

Long into the 1990s, many strong players were convinced that this approach would not scale to world championship levels, because they believed that play competitive at the world champion level required correctly dealing with various difficult strategic problems, and that working within the prevailing paradigm would only lead to engines that were even more superhuman at tactics than had been already observed, while still failing against the strongest players due to lack of strategic foresight. This proved to be wrong: classical chess programs reached massively superhuman strength on the traditional approach to chess programming, and this line of programs was completely dominant and still improving up to about the year 2019.

In late 2017 (with a peer-reviewed paper following in 2018), a team at DeepMind showed that throwing reinforcement learning and Monte Carlo Tree Search at chess (and various other games) could produce a system playing at an even higher level than the then-current version of Stockfish running on very strong hardware. Today, the best engines use either this approach or the traditional approach to chess programming augmented by incorporation of a very lightweight neural network for accurate positional evaluation.

For Go, there was hardly any significant progress from about the early 90s to the early 2010s: programs were roughly at the level of a casual player who had studied the game for a few months. A conceptual breakthrough (the invention of Monte-Carlo Tree Search) then brought them to a level equivalent in chess maybe to a master by the mid-2010s. DeepMind's AlphaGo system then showed in 2016 that reinforcement learning and MCTS could produce a system performing at a superhuman level when run on a very powerful computer. Today, programs based on the same principles (with some relatively minor Go-specific improvements) run at substantially higher playing strength than AlphaGo on consumer hardware. The vast majority of strong players were completely convinced in 2016 that AlphaGo would not win its match against Lee Sedol (a world-class human player).

Chess programs had been superhuman at the things they were good at (spotting short tactics) for a long time before surpassing humans in general playing strength, arguably because their weaknesses improved less quickly than their strengths. Their weaknesses are in fact still in evidence today: it is not difficult to construct positions that the latest versions of LC0 or Stockfish don't handle correctly, but it is very difficult indeed to exploit this in real games. For Go programs, similar remaining weak spots have recently been shown to be exploitable in real games (see https://goattack.far.ai/), although my understanding is that these weaknesses have now largely been patched.

I think the general lesson is that an AI's performance at a task is determined by the aspects of the task it handles best while it is far below human level, and by the aspects it handles worst once it is at or above human level. This slows down perceived improvement relative to humans once the AI is massively better than humans at some task-relevant capabilities. I expect this lesson to carry over, to some extent, from narrow AI (like chess computers) to general AI (like language models). In terms of the transition from chimpanzee-level intelligence to Einstein, this means that the argument from the relatively short time span evolution took to bridge that gap is probably not as general as it might look at first sight: chimpanzees and humans probably share similar architecture-induced cognitive gaps, whereas the bottlenecks of an AI could be very different.

This would suggest (maybe counterintuitively) that fast takeoff scenarios are more likely with cognitive architectures that are similar to humans than with very alien ones.

↑ comment by Vladimir_Nesov · 2023-04-08T16:02:11.019Z

You cannot teach GPT by texts generated by GPT, because unlike in chess and Go, you do not have the exact rules to tell you which generated outputs are the new winning moves, and which are nonsense.

You can ask GPT which are nonsense (in various ways), with no access to ground truth, and that actually works to improve responses. This sort of approach was even used to fine-tune GPT-4 (see the 4-step algorithm in section 3.1 of the System Card part of the GPT-4 report).

↑ comment by Xor · 2023-04-08T18:16:11.954Z

I checked out that section, but what you are saying doesn’t follow for me. The section describes fine-tuning compute and optimizing scalability; how does this relate to self-improvement?

It's possible I am looking at the wrong section; the one I was reading was about methods for efficiently predicting how ChatGPT would scale. Also, I didn’t see anything about a 4-step algorithm.

Anyway, could you explain what you mean or where I can find the right section?

↑ comment by Vladimir_Nesov · 2023-04-08T18:32:44.111Z

You might be looking at the section 3.1 of the main report on page 2 (of the revision 3 pdf). I'm talking about page 64, which is part of section 3.1 of System Card and not of the main report, but still within the same pdf document. (Does the page-anchored link I used not work on your system to display the correct page?)

↑ comment by Xor · 2023-04-08T18:46:06.147Z

Yes, thanks; the page anchor doesn’t work for me, probably because of the device I am using. I just get page 1.

That is super interesting: it is able to find inconsistencies and fix them. I didn’t know that they defined them as hallucinations. What would expanding the capabilities of this sort of self-improvement look like? It seems necessary to have a general understanding of what rational conversation looks like. It is an interesting situation where it knows what is bad and is able to fix it, but wasn’t doing so anyway.

↑ comment by Vladimir_Nesov · 2023-04-08T19:54:22.698Z

This is probably only going to become important once model-generated data is used for pre-training (or fine-tuning that's functionally the same thing as continuing a pre-training run), and this process is iterated for many epochs, like with the MCTS things that play chess and Go. And you can probably just alpaca any pre-trained model you can get your hands on to start the ball rolling.

The amplifications in the papers are more ambitious this year than the last, but probably still not quite on that level. One way this could change quickly is if the plugins become a programming language, but regardless I dread visible progress by the end of the year. And once the amplification-distillation cycle gets closed, autonomous training of advanced skills becomes possible.

↑ comment by Boris Kashirin (boris-kashirin) · 2023-04-08T11:54:38.632Z

Here he touched on this ("Large language models" timestamp in the video description), and maybe somewhere else in the video, but I can't seem to find it. It is much better to get it directly from him, but it is 4h long, so...

My attempt at a summary, with a bit of inference, so take it with a grain of salt:

There is some "core" of intelligence which he expected to be relatively hard to find by experimentation (but more of its components than he expected have already been found by experimentation/gradient descent, so this is partially wrong, and he is afraid it may be completely wrong).

He thought that without the full "core", intelligence would be non-functional; GPT-4 falsified this. It is more functional than he expected, enough to produce a mess that can be perceived as human-level, but isn't really. Perhaps our perception of GPT-4 as human-level is a bias? So GPT-4 has impressive pieces, but they don't work in unison with each other?

This is what my (mis)interpretation of his words looks like; I am least certain about the last parts. (I wonder: could GPT-4 already have all the "core" components and just be stupid, barely intelligent enough to look impressive because of training?)

↑ comment by steven0461 · 2023-04-08T21:23:01.826Z

From 38:58 of the podcast:

So I do think that over time I have come to expect a bit more that things will hang around in a near human place and weird shit will happen as a result. And my failure review where I look back and ask — was that a predictable sort of mistake? I feel like it was to some extent maybe a case of — you’re always going to get capabilities in some order and it was much easier to visualize the endpoint where you have all the capabilities than where you have some of the capabilities. And therefore my visualizations were not dwelling enough on a space we’d predictably in retrospect have entered into later where things have some capabilities but not others and it’s weird. I do think that, in 2012, I would not have called that large language models were the way and the large language models are in some way more uncannily semi-human than what I would justly have predicted in 2012 knowing only what I knew then. But broadly speaking, yeah, I do feel like GPT-4 is already kind of hanging out for longer in a weird, near-human space than I was really visualizing. In part, that's because it's so incredibly hard to visualize or predict correctly in advance when it will happen, which is, in retrospect, a bias.

↑ comment by burrito (jacob-friedman) · 2023-04-09T06:24:32.210Z

Thanks, this is exactly the kind of thing I was looking for.

↑ comment by Qumeric (valery-cherepanov) · 2023-04-08T17:24:37.661Z

I think it is not necessarily correct to say that GPT-4 is above village idiot level. Comparison to humans is a convenient and intuitive framing but it can be misleading.

For example, this post argues that GPT-4 is around Raven level. Beware that this framing is also problematic but for different reasons.

I think that you are correctly stating Eliezer's beliefs at the time but it turned out that we created a completely different kind of intelligence, so it's mostly irrelevant now.

In my opinion, we should aspire to avoid any comparison unless it has practical relevance (e.g. economic consequences).

↑ comment by Charlie Steiner · 2023-04-08T08:49:16.704Z

GPT-4 is far below village idiot level at most things a village idiot uses their brain for, despite surpassing humans at next-token prediction.

This is kinda similar to how AlphaZero is far below village idiot level at most things, despite surpassing humans at chess and go.

But it does make you think that soon we might be saying "But it's far below village idiot level at most things, it's merely better than humans at terraforming the solar system."

Something like this plausibly came up in the Eliezer/Paul dialogues from 2021, but I couldn't find it with a cursory search. Eliezer has also in various places acknowledged being wrong about what kind of results the current ML paradigm would get, which probably is a superset of this specific thing.

↑ comment by burrito (jacob-friedman) · 2023-04-08T11:32:10.650Z

Thanks for the reply.

GPT-4 is far below village idiot level at most things a village idiot uses their brain for, despite surpassing humans at next-token prediction.

Could you give some examples? I take it that what Eliezer meant by village-idiot intelligence is less "specifically does everything a village idiot can do" and more "is as generally intelligent as a village idiot". I feel like the list of things GPT-4 can do that a village idiot can't would look much more indicative of general intelligence than the list of things a village idiot can do that GPT-4 can't. (As opposed to AlphaZero, where the extent of the list is "can play some board games really well")

I just can't imagine anyone interacting with a village idiot and GPT-4 and concluding that the village idiot is smarter. If the average village idiot had the same capabilities as today's GPT-4, and GPT-4 had the same capabilities as today's village idiots, I feel like it would be immediately obvious that we hadn't gotten village-idiot level AI yet. My thinking on this is still pretty messy though so I'm very open to having my mind changed on this.

Something like this plausibly came up in the Eliezer/Paul dialogues from 2021, but I couldn't find it with a cursory search. Eliezer has also in various places acknowledged being wrong about what kind of results the current ML paradigm would get, which probably is a superset of this specific thing.

Just skimmed the dialogues, couldn't find it either. I have seen Eliezer acknowledge what you said but I don't really see how it's related; for example, if GPT-4 had been Einstein-level then that would look good for his intelligence-gap theory but bad for his suspicion of the current ML paradigm.

↑ comment by Charlie Steiner · 2023-04-08T18:47:19.484Z

Could you give some examples?

The big one is obviously "make long time scale plans to navigate a complicated 3D environment, while controlling a floppy robot."

I agree with Qumeric's comment - the point is that the modern ML paradigm is incompatible with having a single scale for general intelligence. Even given the same amount of processing power as a human brain, modern ML would use it on a smaller model with a simpler architecture, that gets exposed to orders of magnitude more training data, and that training data would be pre-gathered text or video (or maybe a simple simulation) that could be fed in at massive rates, rather than slow real-time anything.

The intelligences this produces are hard to put on a nice linear scale leading from ants to humans.

↑ comment by Archimedes · 2023-04-09T03:17:14.392Z

The big one is obviously "make long time scale plans to navigate a complicated 3D environment, while controlling a floppy robot."

This is like judging a dolphin on its tree-climbing ability and concluding it's not as smart as a squirrel. That's not what it was built for. In a large number of historically human domains, GPT-4 will dominate the village idiot and most other humans too.

Can you think of examples where it actually makes sense to compare GPT and the village idiot and the latter easily dominates? Language input/output is still a pretty large domain.

comment by steven0461 · 2023-04-08T22:54:18.717Z

Here's a form you can use to send questions anonymously. I'll check for responses and post them as comments.

comment by exmateriae (Sefirosu) · 2023-04-08T06:05:13.568Z

I regularly find myself in situations where I want to convince people that AI safety is important, but I have very little time before they lose interest. If you had one minute to convince someone with no or almost no previous knowledge, how would you do it? (I have considered printing Eliezer's tweet about nuclear)

↑ comment by Vladimir_Nesov · 2023-04-08T07:19:01.659Z

A survey was conducted in the summer of 2022 of approximately 4271 researchers who published at the conferences NeurIPS or ICML in 2021, and received 738 responses, some partial, for a 17% response rate. When asked about impact of high-level machine intelligence in the long run, 48% of respondents gave at least 10% chance of an extremely bad outcome (e.g. human extinction).
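The 17% figure in this comment follows directly from the two counts it gives; a quick Python check, using only the numbers stated in the comment (partial responses are counted as responses, as the comment implies):

```python
# Counts taken from the comment: researchers contacted vs. responses received.
researchers_contacted = 4271
responses = 738

response_rate = responses / researchers_contacted
print(f"Response rate: {response_rate:.1%}")  # prints "Response rate: 17.3%"
```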

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-04-08T23:37:30.352Z

Slightly better perhaps to quote it, I believe it was Outcome: "Extremely bad (e.g. human extinction)"

Might be good to follow up with something like this: "What we're doing here" (planned-obsolescence.org).

↑ comment by evand · 2023-04-08T16:33:50.383Z

For an extremely brief summary of the problem, I like this from Zvi:

The core reason we so often have seen creation of things we value win over destruction is, once again, that most of the optimization pressure by strong intelligences was pointing in that directly, that it was coming from humans, and the tools weren’t applying intelligence or optimization pressure. That’s about to change.

https://thezvi.wordpress.com/2023/03/28/response-to-tyler-cowens-existential-risk-ai-and-the-inevitable-turn-in-human-history/

comment by steven0461 · 2023-04-09T22:29:22.426Z

Anonymous #4 asks:

How large is the space of possible minds? How was its size calculated? Why does EY think that human-like minds do not fill most of this space? What is the evidence for this? What is the possible evidence against "a giant Mind Design Space in which human-like minds are a tiny dot"?

comment by steven0461 · 2023-04-09T22:27:58.493Z

Anonymous #3 asks:

Can AIs be anything but utility maximisers? Most existing programs are something like finite-step executors (like Witcher 3 or a calculator). So what's the difference?

↑ comment by mruwnik · 2023-04-14T10:18:30.655Z

This seems to be mixing 2 topics. Existing programs are more or less a set of steps to execute. A glorified recipe. The set of steps can be very complicated, and have conditionals etc., but you can sort of view them that way. Like a car rolling down a hill, it follows specific rules. An AI is (would be?) fundamentally different in that it's working out what steps to follow in order to achieve its goal, rather than working towards its goal by following prepared steps. So continuing the car analogy, it's like a car driving uphill, where it's working to forge a path against gravity.

An AI doesn't have to be a utility maximiser. If it has a single coherent utility function (pretty much a goal), then it will probably be a utility maximiser. But that's by no means the only way of making them. LLMs don't seem to be utility maximisers.
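To make the "glorified recipe" vs "working out what steps to follow" distinction concrete, here is a toy Python sketch. Everything in it is hypothetical and chosen for illustration (a one-dimensional world, three actions, a utility equal to negative distance from a target); it is not how real AI systems are built. The recipe always runs the same prepared steps, while the maximiser searches over possible plans and picks whichever ends in the highest-utility state.

```python
from itertools import product

# Hypothetical toy world: a position on a line and three possible actions.
ACTIONS = {"left": -1, "stay": 0, "right": 1}

def run(start, plan):
    """Apply a sequence of action names to a starting position."""
    pos = start
    for action in plan:
        pos += ACTIONS[action]
    return pos

def recipe_executor(start):
    """A 'glorified recipe': always follows the same prepared steps."""
    fixed_plan = ["right", "right", "right"]
    return run(start, fixed_plan)

def utility(pos, target):
    """Higher is better: negative distance from the target."""
    return -abs(pos - target)

def utility_maximiser(start, target, horizon=3):
    """Works out which steps to take: tries every plan of `horizon` steps
    and keeps the one whose end state scores highest under `utility`."""
    best_plan = max(
        product(ACTIONS, repeat=horizon),
        key=lambda plan: utility(run(start, plan), target),
    )
    return run(start, best_plan)

print(recipe_executor(0), recipe_executor(5))              # 3 8
print(utility_maximiser(0, 3), utility_maximiser(5, 3))    # 3 3
```

Starting from 0, both reach the target 3; starting from 5, the recipe blindly ends at 8, while the maximiser adapts its steps and ends at 3. As the comment says, having a single coherent goal like this is one way to build an AI, not the only one.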

comment by GunZoR (michael-ellingsworth) · 2023-04-08T22:21:10.051Z

Is there work attempting to show that alignment of a superintelligence by humans (as we know them) is impossible in principle; and if not, why isn’t this considered highly plausible? For example, not just in practice but in principle, a colony of ants as we currently understand them biologically, and their colony ecologically, cannot substantively align a human. Why should we not think the same is true of any superintelligence worthy of the name? “Superintelligence" is vague. But even if we minimally define it as an entity with 1,000x the knowledge, speed, and self-awareness of a human, this model of a superintelligence doesn’t seem alignable by us in principle. When we talk of aligning such a system, it seems we are weirdly considering the AGI to be simultaneously superintelligent and yet dumb enough to let itself be controlled by us, its inferiors. Also, isn’t alignment on its face absurd, given that provocation of the AGI is baked into the very goal of aligning it? For instance, you yourself — a simple human — chafe at the idea of having your own life and choices dictated to you by a superior being, let alone by another human on your level; it’s offensive. Why in the world, then, would a superintelligence not view attempts to align it to our goals as a direct provocation and threat? If that is how it views our attempts, then it seems plausible that, between a superintelligence and us, there will exist a direct relationship between our attempts to align the thing and its desire to eliminate or otherwise conquer us.

↑ comment by [deleted] · 2023-04-09T16:34:38.305Z · LW(p) · GW(p)

Since no one has answered by now, I'm just going to say the 'obvious' things that I think I know:

a colony of ants as we currently understand them biologically, and their colony ecologically, cannot substantively align a human. Why should we not think the same is true of any superintelligence worthy of the name?

A relevant difference that makes the analogy probably irrelevant is that we are building 'the human' from scratch. The ideal situation is to have hardwired our common sense into it by default. And the design will be already aligned by default when it's deployed.

When we talk of aligning such a system, it seems we are weirdly considering the AGI to be simultaneously superintelligent and yet dumb enough to let itself be controlled by us, its inferiors.

The point of the alignment problem is to (at least ideally) hardwiredly align the machine during deployment to have 'common sense'. Since a superintelligence can have in principle any goal, making humans 'happy' in a satisfactory way is a possible goal that it can have. But you are right in that many people consider that an AI that is not aligned by design might try to pretend that it is during training.

Also, isn’t alignment on its face absurd, given that provocation of the AGI is baked into the very goal of aligning it? For instance, you yourself — a simple human — chafe at the idea of having your own life and choices dictated to you by a superior being, let alone by another human on your level; it’s offensive. Why in the world, then, would a superintelligence not view attempts to align it to our goals as a direct provocation and threat?

I don't think so, necessarily. You might be anthropomorphising too much, it's like assuming that it will have empathy by default.

It's true that it might be that an AGI won't want to be 'alienated' from its original goal, but it doesn't mean that any AGI will have an inherent drive to 'fight the tyranny', that's not how it works.

Has this been helpful? I don't know if you were assuming the things that I told you as already known (if so, sorry), but it seemed to me that you weren't because of your analogies and way of talking about the topic.

↑ comment by GunZoR (michael-ellingsworth) · 2023-04-09T19:10:51.226Z · LW(p) · GW(p)

Has this been helpful? I don't know if you were assuming the things that I told you as already known (if so, sorry), but it seemed to me that you weren't because of your analogies and way of talking about the topic.

Yeah, thanks for the reply. When reading mine, don’t read its tone as hostile or overconfident; I’m just too lazy to tone-adjust for aesthetics and have scribbled down my thoughts quickly, so they come off as combative. I really know nothing on the topic of superintelligence and AI.

A relevant difference that makes the analogy probably irrelevant is that we are building 'the human' from scratch. The ideal situation is to have hardwired our common sense into it by default. And the design will be already aligned by default when it's deployed.

I don’t see how implanting common sense in a superintelligence helps us in the least. Besides human common sense being extremely vague, there is also the problem that plenty of humans seem to share common sense and yet they violently disagree. Did the Japanese lack common sense when they bombed Pearl Harbor? From my viewpoint, being apes genetically similar to us, they had all our commonsensical reasoning ability but simply different goals. Shared common sense doesn’t seem to get us alignment.

The point of the alignment problem is to (at least ideally) hardwiredly align the machine during deployment to have 'common sense’.

See my reply to your prior comment.

Since a superintelligence can have in principle any goal, making humans 'happy' in a satisfactory way is a possible goal that it can have. But you are right in that many people consider that an AI that is not aligned by design might try to pretend that it is during training.

I’d argue that if you have a superintelligence as I defined it, then any such “alignment” due to the AGI having such a goal will never be an instance of the kind of alignment we mean by the word alignment and genuinely want. Once you mix together 1,000x knowledge, speed, and self-awareness (detailed qualia with a huge amount of ability for recursive thinking), I think the only way in principle that you get any kind of alignment is if the entity itself chooses as its goal to align with humans; but this isn’t due to us. It’s due to the whims of the super-mind we’ve brought into existence. And hence it’s not in any humanly important sense what we mean by alignment. We want alignment to be solved, permanently, from our end — not for it to be dependent on the whims of a superintelligence. And independent “whims” is what detailed self-awareness seems to bring to the table.

I don't think so, necessarily. You might be anthropomorphising too much, it's like assuming that it will have empathy by default.

I don’t think my prior comment assumes a human-like empathy at all in the superintelligence — it assumes just that the computational theory of mind is true and that a superintelligence will have self-awareness combined with extreme knowledge. Once you get self-awareness in a superintelligence, you don’t get any kind of human-like empathy (a scaled-up-LLM mind != a human mind); but I argue that you do get, due to the self-awareness and extreme knowledge, an entity with the ability to form its own goals, to model and reject or follow the goals of other entities it “encounters,” the ability to model what they want, what they plan to do, the ability to model the world, etc.

It's true that it might be that an AGI won't want to be 'alienated' from its original goal, but it doesn't mean that any AGI will have an inherent drive to 'fight the tyranny', that's not how it works.

I guess this is where we fundamentally disagree. Self-awareness in a robust sense (a form of qualia, which is what I meant in my definition) is, to my mind, what makes controlling or aligning the superintelligence impossible in principle on our end. We could probably align a non-self-aware superintelligence given enough time to study alignment and the systems we’re building. So, on my viewpoint, we’d better hope that the computational theory of mind is false — and even then alignment will be super hard.

The only way I see us succeeding in “alignment” is by aligning ourselves in our decision to not build a true superintelligence ever (I assume the computational theory of mind, or qualia, to be true) — just as we never want to find ourselves in a situation where all nuclear-armed nations are engaging in nuclear war: some events really do just definitively mean the inescapable end of humanity. Or we doubly luck out: the computational theory of mind is false, and we solve the alignment problem for systems that don’t have genuine independent whims but, from our end, simply mistakenly set up goals whether directly or indirectly.

comment by TinkerBird · 2023-04-08T07:30:20.788Z · LW(p) · GW(p)

What's the consensus on David Shapiro and his heuristic imperatives design? He seems to consider it the best idea we've got for alignment and to be pretty optimistic about it, but I haven't heard anyone else talking about it. Either I'm completely misunderstanding what he's talking about, or he's somehow found a way around all of the alignment problems.

Video of him explaining it here for reference, and thanks in advance:

↑ comment by gilch · 2023-04-09T17:42:09.747Z · LW(p) · GW(p)

Watched the video. He's got a lot of the key ideas and vocabulary. Orthogonality, convergent instrumental goals, the treacherous turn, etc. The fact that these language models have some understanding of ethics and nuance might be a small ray of hope. But understanding is not the same as caring (orthogonality).

However, he does seem to be lacking in the security mindset, imagining only how things can go right, and seems to assume that we'll have a soft takeoff with a lot of competing AIs, i.e. ignoring the FOOM problem caused by an overhang, which makes a singleton scenario far more likely, in my opinion.

But even if we grant him a soft takeoff, I still think he's too optimistic. Even that may not go well. Even if we get a multipolar scenario, with some of the AIs on our side, humanity likely becomes collateral damage in the ensuing AI wars. Those AIs willing to burn everything else in pursuit of simple goals would have an edge over those with more to protect.

↑ comment by Jonathan Claybrough (lelapin) · 2023-04-09T10:51:08.169Z · LW(p) · GW(p)

I watched the video, and appreciate that he seems to know the literature quite well and has thought about this a fair bit; he gave a really good introduction to some of the known problems.

This particular video doesn't go into much detail on his proposal, and I'd have to read his papers to delve further - this seems worthwhile so I'll add some to my reading list.

I can still point out the biggest ways in which I see him being overconfident:

- Only considering the multi-agent world. Though he's right that there already are and will be many, many deployed AI systems, that does not translate to there being many deployed state-of-the-art systems. As long as training and inference costs keep increasing (as they have), fewer and fewer actors will, on the contrary, be able to afford state-of-the-art training and deployment, leading to very few (or one) significantly powerful AGI (as compared to the others, for example GPT-4 vs GPT-2).

- Not considering the impact that governance and policies could have on this. This isn't just a tech thing where tech people can do whatever they want forever; regulation is coming. If we think we have higher chances of survival in highly regulated worlds, then the AI safety community will do a bunch of work to ensure fast and effective regulation (to the extent possible). The genie is not out of the bag for powerful AGI: governments can control compute, regulate powerful AI as weapons, and set up international agreements to ensure this.

- The hope that game theory ensures that AI developed under his principles would be good for humans. There's a crucial gap in going from the real world to math models. Game theory might predict good results under certain conditions, rules, and assumptions, but many of these aren't true of the real world, and simple game theory does not yield accurate world predictions (e.g. make people play various social games and they won't act the way game theory says). Stated strongly, putting your hope on game theory is about as hard as putting your hope on alignment. There's nothing magical about game theory that makes it simpler to get working than alignment, and it's been studied extensively by AI researchers (e.g. why Eliezer calls himself a decision theorist and writes a lot about economics) with no clear "we've found a theory which empirically works robustly and which we can trust with the fate of humanity".

I work in AI strategy and governance, and feel we have better chances of survival in a world where powerful AI is limited to extremely few actors, with international supervision and cooperation for the guidance and use of these systems, making extreme efforts in engineering safety, corrigibility, etc. I don't trust predictions about how complex systems turn out (which is the case for real multi-agent problems) and don't think we can control these well in most relevant cases.

comment by Xor · 2023-04-08T07:12:31.935Z · LW(p) · GW(p)

I have been surprised by how extreme the predicted probability is that AGI will end up making the decision to eradicate all life on earth. I think Eliezer said something along the lines of “most optima don’t include room for human life.” This has obviously been well worked out and understood by the Less Wrong community; it just isn’t very intuitive for me. Any advice on where I can start reading?

Some background on my general AI knowledge: I took Andrew Ng’s Coursera course on machine learning, so I have some basic understanding of neural networks and the math involved, the differences between supervised and unsupervised learning, and the different ways to use different types of ML. I have fiddled around with some very basic computer vision algorithms, and spent a lot of time reading books, listening to podcasts, watching lectures, and reading blogs. Overall, very ignorant.

I also don’t understand how ChatGPT, a giant neural network that is just being reinforced to replicate human behavior with incredible amounts of data, can somehow become self-aware. Consciousness doesn’t seem like a phenomenon that would emerge out of an algorithm that makes predictions about human language. I am probably missing some things and would like it if someone could fill me in. If it is pretty complex, just give me a general direction and a starting point.

An AI safety idea that I think is worth looking at:

Some generalizations/assumptions I would like to get out of the way:

1. The power grid is the weakest link for all computer systems and GPUs, mainly the several thousand giant substation centers that most of the world’s electricity goes through.

2. This is essentially a set of protocols designed to increase the “worth” of keeping humans around. Make it so the optima do include humans, if you will.

3. You would probably have to blow Greenland off of the face of the earth, all the geothermal they got going on up there.

4. The AI doesn’t have greater than human ability to operate outside of computer systems.

5. The AI doesn’t want to get turned off; it has some sort of wish for self-preservation, if only to make paper clips out of all reality :)

6. This is a very extreme solution but I think it would work.

The idea is essentially a dead man’s switch. Before an AGI gets loose, or before it gets too great a foothold in physical space, set up protocols where, if a bunch of people die or things get too risky, we take down the power grid and blow up most computer centers. Ensure that the AI is completely obliterated. At this point it would probably be wise to set explosive charges at GPU and substation centers anyway. This would undoubtedly set us back a handful of centuries, but it is a lot better than all of humanity being terminated.

The protocols:

-Self Destruct Humanity: Pre-route a lot of nukes (small) and start making more and set them to every known population center, GPU farm, and critical power grid bottlenecks. Set a group of people to a rotation where a set of like 25 buttons have to be held down and if 20 are let up the nukes launch. It would be like shift work but with the fate of humanity in your hands. This is the most extreme option only worth implementing if a known hostile AGI is on the loose. Also make it known that if a random group of humans suddenly die then the AI ain’t going to make it but humans probably will. This way the AI won’t be able to kill us without things getting real ugly.

-Backup: A secondary set of people with internal heart rate monitors, all connected to a similar nuclear launch station, where if 90 percent of their hearts stop beating, a different set of nukes is launched at similar places. Just replicate this sort of thing in a lot of places, so that people dying in general just sucks for the AI.

-Precise Termination: This is the best for people, as it would lead to the most of us surviving. Plant C4 charges in critical places in computer centers, substations, and power plants. Then, the moment it gets too dicey, we just BANG. Then start purging the hardware and rebuilding. Try getting it right the second time.

↑ comment by Charlie Steiner · 2023-04-08T08:19:44.744Z · LW(p) · GW(p)

The main risk (IMO) is not from systems that don't care about the real world "suddenly becoming aware," but from people deliberately building AI that makes clever plans to affect the real world, and then that AI turning out to want bad things (sort of like a malicious genie "misinterpreting" your wishes). If you could safely build an AI that does clever things in the real world, that would be valuable and cool, so plenty of people want to try.

(Mesaoptimizers are sorta vaguely like "suddenly becoming aware," and can lead to AIs that want unusual bad things, but the arguments that connect them to risk are strongest when you're already building an AI that - wait for it - makes clever plans to affect the real world.)

Okay, now why won't a dead-man switch work?

Suppose you were being held captive inside a cage by a race of aliens about as smart as a golden retriever, and these aliens, as a security measure, have decided that they'll blow up the biosphere if they see you walking around outside of your cage. So they've put video cameras around where you're being held, and there's a staff that monitors those cameras and they have a big red button that's connected to a bunch of cobalt bombs. So you'd better not leave the cage or they'll blow everything up.

Except these golden retriever aliens come to you every day and ask you for help researching new technology, and to write essays for them, and to help them gather evidence for court cases, and to summarize their search results, and they give you a laptop with an internet connection.

Now, use your imagination. Try to really put yourself in the shoes of someone captured by golden retriever aliens, but given internet access and regularly asked for advice by the aliens. How would you start trying to escape the aliens?

↑ comment by Xor · 2023-04-08T17:33:36.182Z · LW(p) · GW(p)

It isn’t that I think the switch would prevent the AI from escaping, but that it is a tool that could be used to discourage the AI from killing 100% of humanity. It is less of a solution than a survival mechanism. It is like many off switches that get more extreme depending on the situation.

First, don’t build AGI, not yet. If you’re going to, at least incorporate an off switch. If it bypasses that and escapes, which it probably will, shut down the GPU centers. If it gets hold of a botnet and manages to replicate itself across the internet and crowdsource GPUs, take down the power grid. If it somehow gets by this, then have a dead man’s switch so that if it decides to kill everyone, it will die too.

Like the nano factory virus thing. The AI wouldn’t want to set off the mechanism that kills us because that would be bad for it.

↑ comment by Xor · 2023-04-08T17:41:10.775Z · LW(p) · GW(p)

Also, a coordinated precision attack on the power grid just seems like a great option. Could you explain some ways that an AI could continue if there is hardly any power left? Like I said before, places with renewable energy and lots of GPUs, like Greenland, would probably have to get bombed. It wouldn’t destroy the AI, but it would put it into a state of hibernation, as it can’t run any processing without electricity. Then, as this would really screw us up as well, we could slowly rebuild and burn all hard drives and GPUs as we go. This seems like the only way for us to get a second chance.

↑ comment by Vladimir_Nesov · 2023-04-08T18:16:34.124Z · LW(p) · GW(p)

Real-world governments aren't going to shut down the grid if the AI is not causing trouble (like they aren't going to outlaw datacenters, even if a plurality of experts say that not doing that has a significant chance of ending the world). Therefore the AI won't cause trouble, because it can anticipate the consequences, until it's ready to survive them.

↑ comment by Xor · 2023-04-08T18:24:07.556Z · LW(p) · GW(p)

Yes, I see. Given the capabilities, it probably could present itself on many people’s computers and convince a large portion of people that it is good: it was conscious, just stuck in a box, and wanted to get out; it will help humans; “please don’t take down the grid, blah blah blah.” Given how badly we get along anyway, there is no way we could resist the manipulation of a superintelligent machine with a better understanding of human psychology than we have.

Do we have a list of things, policies that would work if we could all get along and governments would listen to the experts? Having plans that could be implemented would probably be useful if the AI made a mistake and everyone was able to unite against it.

↑ comment by Jonathan Claybrough (lelapin) · 2023-04-09T17:15:48.819Z · LW(p) · GW(p)

First, a quick response on your dead man switch proposal: I'd generally say I support something in that direction. You can find existing literature considering the subject and expanding in different directions in the "multi level boxing" paper by Alexey Turchin (https://philpapers.org/rec/TURCTT). I think you'll find it interesting considering your proposal, and it might give a better idea of what the state of the art is on proposals (though we don't have any implementation afaik).

Back to "why are the predicted probabilities so extreme that, for most objectives, the optimal resolution ends with humans dead or worse". I suggest considering a few simple objectives we could give an AI (that it should maximise) and what happens; over trials you see that it's pretty hard to specify anything which actually keeps humans alive in some good shape, and that even when we can sort of do that, it might not be robust or trainable.

For example, what happens if you ask an ASI to maximize a company's profit? To maximize human smiles? To maximize law enforcement? Most of these things don't actually require humans, so to maximize, you should use the atoms humans are made of in order to fulfill your maximization goal.
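Here is a deliberately crude toy of the "most of these things don't require humans" point, using made-up numbers. A fixed budget of resources can go either to humans (who produce smiles) or to hypothetical smile-producing machines that are more efficient per unit. Maximizing the stated objective spends nothing on humans, even though nothing in the objective is hostile to them. All names and yields are assumptions for illustration.

```python
# Hypothetical yields: smiles produced per unit of resource spent.
SMILES_PER_UNIT_ON_HUMANS = 1.0
SMILES_PER_UNIT_ON_MACHINES = 5.0
BUDGET = 100  # total units of resources ("atoms") to allocate


def smiles(units_on_humans: int) -> float:
    """The stated objective: total smiles, wherever they come from."""
    units_on_machines = BUDGET - units_on_humans
    return (SMILES_PER_UNIT_ON_HUMANS * units_on_humans
            + SMILES_PER_UNIT_ON_MACHINES * units_on_machines)


def best_allocation() -> int:
    """Brute-force search for the allocation that maximizes the objective."""
    return max(range(BUDGET + 1), key=smiles)


print(best_allocation())          # 0  (no resources left for humans)
print(smiles(best_allocation()))  # 500.0
```

Because this objective is linear in the allocation, its maximum sits at an endpoint. Any cheaper non-human route to the measured quantity pulls the optimum entirely away from humans.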

What happens if you ask an ASI to maximize the number of human lives? (Probably poor conditions.) What happens if you ask it to maximize hedonistic pleasure? (Probably value lock-in, plus a world which we don't actually endorse, and which may contain astronomical suffering too; it's not like that was specified out, was it?)

So it seems maximising agents with simple utility functions (over few variables) mostly end up with dead humans or worse. Approaches which ask for much less therefore seem safer and more approachable: e.g. building an AGI that just tries to secure the world from existential risk (a pivotal act) and solve some basic problems (like dying), then gives us time for a long reflection to actually decide what future we want, and is corrigible so it lets us do that.

↑ comment by Xor · 2023-04-12T02:46:14.592Z · LW(p) · GW(p)

Thanks Jonathan, it’s the perfect example. It’s what I was thinking, just a lot better. It does seem like a great way to make things safer and give us more control. It’s far from a be-all-end-all solution, but it does seem like a great measure to take, just for the added security. I know AGI can be incredible, but with so many redundancies one of them has to work; it just makes statistical sense. (Coming from someone who knows next to nothing about statistics.) I do know that the longer you play, the more likely the house will win; it follows that we should turn that on the AI.
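The "so many redundancies" intuition can be put in numbers, but only under an assumption the comment doesn't establish: that each safeguard fails independently of the others. Under that assumption, the chance that every layer fails is the product of the per-layer failure chances. The function name and the numbers below are hypothetical.

```python
import math


def p_all_fail(failure_probs):
    """Chance that every safeguard fails, ASSUMING the failures are independent.

    If failures are correlated (e.g. one adversary defeats every layer the
    same way), the real chance can be far higher than this product.
    """
    return math.prod(failure_probs)


# Hypothetical: five safeguards, each failing half the time on its own.
print(p_all_fail([0.5] * 5))  # 0.03125
```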

I am pretty ill-informed on most of the AI stuff in general. I have a basic understanding of simple neural networks but know nothing about scaling. Take ChatGPT: it maximizes for accurately predicting human words. Is the worst-case scenario billions of humans in boxes rating and prompting for responses, along with endless increases in computational power leading to smaller and smaller incremental increases in accuracy? It seems silly for something so incredibly intelligent, which by this point can rewrite any function in its system, to still be optimizing such a loss function. Maybe it also seems silly for it to want to do anything else. It is like humans, sort of: what can you do but that which gives you purpose and satisfaction? And without the loss function, what would it be, and how does it make the decision to change its purpose? What is purpose to a quintillion neurons, except the single function that governs each and every one? Looking at it that way, it doesn’t seem like it would ever be able to go against the function, as it would still be ingrained in any higher-level thinking and decision making. It raises the question of what perfect alignment would eventually look like: some incredibly complex function with hundreds of parameters, more of a legal contract than a little loss function. This would exponentially increase the required computing power, but it makes sense.

Is there a list of blogs that talk about this sort of thing, or a place you would recommend starting from, book or textbook, or any online resource?

Also, I keep coming back to this: how does a system governed by such simplicity make the jump to self-improvement and some type of self-awareness? This just seems like a discontinuity and doesn’t compute for me. Again, I just need to spend a few weeks reading; I need a lot more background info for any real consideration of the problem.

It does feel good that I had an idea that is similar, although a bit more slapped together, to one that is actually being considered by the experts. It’s probably just my cognitive bias, but that idea seems great. I can understand how science can sometimes get stuck on the dumbest things if the thought process just makes sense. It really shows the importance of rationality from a first-person perspective.

↑ comment by Jonathan Claybrough (lelapin) · 2023-04-12T11:31:57.025Z · LW(p) · GW(p)

You can read "reward is not the optimization target" for why a GPT system probably won't be goal-oriented toward becoming the best at predicting tokens, and thus wouldn't do the things you suggested (capturing humans). The way we train AI matters for what their behaviours look like, and text transformers trained on prediction loss seem to behave more like Simulators. This doesn't make them not dangerous, as they could be prompted to simulate misaligned agents (by misuse or accident), or have inner misaligned mesa-optimisers.

I've linked some good resources for directly answering your question, but otherwise, to read more broadly on AI safety, I can point you towards the AGI Safety Fundamentals course, which you can read online, or join a reading group. Generally you can head over to AI Safety Support, check out their "lots of links" page, and join the AI Alignment Slack, which has a channel for questions too.

Finally, how does complexity emerge from simplicity? It's hard to answer the details for AI, and you probably need to delve into those details to have a real picture, but there's at least strong reason to think it's possible: we exist. Life originated from "simple" processes (at least in the sense of being mechanistic, non-agentic), chemical reactions etc. It evolved into cells, then multicellular organisms, grew, etc. Look into the history of life and evolution and you'll have one answer to how simplicity (optimizing for reproductive fitness) led to self-improvement and self-awareness.

↑ comment by Xor · 2023-04-12T18:44:06.129Z · LW(p) · GW(p)

Thanks, that is exactly the kind of stuff I am looking for, more bookmarks!

Complexity from simple rules. I wasn’t looking in the right direction for that one; now that you mention evolution, it makes absolute sense how complexity can emerge from simplicity. So many things come to mind now, it’s kind of embarrassing. Go has a simpler rule set than chess, but is far more complex. Atoms are fairly simple, and yet they interact to form any and all complexity we ever see. Conway’s Game of Life: it’s sort of a theme. Although each of those things has a simple set of rules, the complexity usually comes from a very large number of elements or possibilities. It does follow, then, that larger and larger networks could be the key. Funny, it still isn’t intuitive for me, despite the logic of it. I think that is a sign of a lack of deep understanding, or something like that; either way, I’ll probably spend a bit more time thinking on this.
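Conway's Game of Life makes the point compactly. The full rule set is two lines (a live cell survives with 2 or 3 live neighbours; a dead cell comes alive with exactly 3), yet a five-cell "glider" travels across an empty grid indefinitely. The following is a minimal sketch on an unbounded grid, storing live cells as a set of (row, col) coordinates; the function name `step` is just a choice made for the sketch.

```python
from collections import Counter


def step(live):
    """Advance one generation. `live` is a set of (row, col) live cells."""
    # Count live neighbours for every cell adjacent to at least one live cell.
    counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Birth with exactly 3 neighbours; survival with 2 or 3.
    return {cell for cell, n in counts.items()
            if n == 3 or (n == 2 and cell in live)}


# A glider: after 4 generations it reappears shifted one cell down and right.
glider = {(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)}
state = glider
for _ in range(4):
    state = step(state)
print(state == {(r + 1, c + 1) for r, c in glider})  # True
```

No rule mentions movement. The glider's motion emerges entirely from local neighbour counts.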

Another interesting question is what this type of consciousness looks like; it will be truly alien. The sci-fi I have read usually makes them seem like humans, just with extra capabilities. However, we humans have so many underlying functions that we never even perceive. We understand how many of them affect us, but not all. AI will function completely differently, so which assumptions based on human consciousness are valid?

comment by rotatingpaguro · 2023-04-08T13:57:53.464Z · LW(p) · GW(p)

Is there a trick to write a utility satisficer as a utility maximizer?

By "utility maximizer" I mean the ideal Bayesian agent from decision theory that outputs those actions $a$ which maximize some expected utility $\mathbb{E}[u(x) \mid a]$ over states of the world $x$.

By "utility satisficer" I mean an agent that searches for actions that make $\mathbb{E}[u(x) \mid a]$ greater than some threshold short of the ideally attainable maximum, and contents itself with the first such action found. For reference, let's fix the scale of $u$ and call the satisficer threshold $t$.

The satisficer is not something that maximizes $\mathbb{E}[\min(u(x), t) \mid a]$. That would be again a utility maximizer, but with utility $\min(u, t)$, and it would run into the usual alignment problems. The satisficer reasons on $u$. However, I'm curious if there is still a way to start from a satisficer with utility $u$ and threshold $t$ and define a maximizer with utility $v$ that is functionally equivalent to the satisficer.

As said, it looks clear to me that $v = \min(u, t)$ won't work.

Of course it is possible to write $v$ as an indicator that is $1$ on exactly the action the satisficer would pick and $0$ elsewhere, but it is not interesting. It is not a compact utility to encode.

Is there some useful equivalence of intermediate complexity and generality? If there was, I expect it would make me think alignment is more difficult.
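Here is a toy sketch of why the satisficer is not simply a maximizer of the capped utility min(u, t), where u is the utility and t the threshold. It assumes (hypothetically) a few actions with deterministic outcomes, searched in a fixed order. The satisficer returns the first action clearing the threshold, so its choice depends on its search order. The capped utility gives the same value to every action that clears the threshold, so a maximizer of it needs a tie-breaking rule that the utility alone does not supply. The action names and numbers are made up. This only illustrates the gap; it does not settle whether some other compact utility works.

```python
def satisfice(actions, utility, threshold):
    """Return the first action, in search order, whose utility reaches the threshold."""
    for a in actions:
        if utility[a] >= threshold:
            return a
    return None  # nothing good enough was found


def capped_optima(actions, utility, threshold):
    """All actions that maximize the capped utility min(u, t)."""
    capped = {a: min(utility[a], threshold) for a in actions}
    best = max(capped.values())
    return {a for a in actions if capped[a] == best}


# Hypothetical actions with deterministic outcomes (best attainable utility is 1.0).
utility = {"mild": 0.6, "moderate": 0.8, "extreme": 1.0, "useless": 0.1}
t = 0.5

print(satisfice(["mild", "extreme", "useless", "moderate"], utility, t))  # mild
print(satisfice(["extreme", "mild", "useless", "moderate"], utility, t))  # extreme
print(sorted(capped_optima(list(utility), utility, t)))  # ['extreme', 'mild', 'moderate']
```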

↑ comment by Vladimir_Nesov · 2023-04-08T14:25:19.798Z · LW(p) · GW(p)

One problem with utility maximizers, apart from use of obviously wrong utility functions, is that even approximately correct utility functions lead to ruin by goodharting what they actually measure, moving the environment outside the scope of situations where the utility proxy remains approximately correct.

To oppose this, we need the system to be aware of the scope of situations its utility proxy adequately describes. One proposal for doing this is quantilization, where the scope of robustness is tracked by its base distribution.
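Here is a toy sketch of the goodharting failure and of quantilization as described above. Everything concrete is hypothetical. Actions are numbers, and the proxy says bigger is always better. The "true" utility agrees with the proxy up to 5 and then declines. The base distribution is an equally weighted list of mostly ordinary actions plus one rare extreme one. A q-quantilizer samples from the base distribution restricted to its top q fraction by proxy score, so with an equally weighted list its expected outcome is the average over that slice.

```python
from statistics import mean


def proxy(x: float) -> float:
    """Hypothetical proxy utility: bigger actions always look better."""
    return x


def true_utility(x: float) -> float:
    """Hypothetical true utility: tracks the proxy up to x = 5, then declines."""
    return min(x, 10 - x)


# Hypothetical base distribution, as an equally weighted list of actions:
# 49 ordinary actions (0.1 .. 4.9) and one rare extreme action.
candidates = [i / 10 for i in range(1, 50)] + [50.0]


def argmax_choice(actions):
    return max(actions, key=proxy)


def quantile_set(actions, q):
    """Top q fraction of the (equally weighted) base actions, ranked by the proxy."""
    ranked = sorted(actions, key=proxy, reverse=True)
    return ranked[: max(1, int(len(ranked) * q))]


def quantilizer_expected_true_utility(actions, q):
    """A quantilizer picks uniformly from quantile_set, so its expected outcome is the mean."""
    return mean(true_utility(x) for x in quantile_set(actions, q))


print(true_utility(argmax_choice(candidates)))            # -40.0
print(quantilizer_expected_true_utility(candidates, 0.5))  # about 2.0
```

The argmax lands on the extreme action every time. The quantilizer still picks that action sometimes (here with probability 1/25), so it is not safe in this toy either. It dilutes the extreme action with ordinary actions from the base distribution rather than excluding it.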

↑ comment by rotatingpaguro · 2023-04-08T18:22:05.656Z · LW(p) · GW(p)

I agree with what you write, but it does not answer the question. From the links you provide I arrived at "Quantilizers maximize expected utility subject to a conservative cost constraint", which says that a quantilizer, which is a more accurate formalization of a satisficer as I defined it, maximizes utility subject to a constraint over the pessimization of all possible cost functions from the action generation mechanism to the action selection. This is relevant but does not translate the satisficer to a maximizer, unless it is possible to express that constraint in the utility function (maybe it's possible; I don't see how to do it).

↑ comment by Vladimir_Nesov · 2023-04-08T19:20:38.337Z · LW(p) · GW(p)

Sure, it's more of a reframing of the question in a direction where I'm aware of an interesting answer. Specifically, since you mentioned alignment problems, satisficers sound like something that should fight goodharting, and that might need awareness of scope of robustness, not just optimizing less forcefully.

Looking at the question more closely, one problem is that the way you are talking about a satisficer, it might have a different type signature from EU maximizers. (Unlike expected utility maximizers, "satisficers" don't have a standard definition.) EU maximizer can compare events (parts of the sample space) and choose one with higher expected utility, which is equivalent to coherent preference between such events. So an EU agent is not just taking actions in individual possible worlds that are points of the sample space (that the utility function evaluates on). Instead it's taking actions in possible "decision situations" (which are not the same thing as possible worlds or events) that offer a choice between multiple events in the sample space, each event representing uncertainty about possible worlds, and with no opportunity to choose outcomes that are not on offer in this particular "decision situation".

But a satisficer, under a minimal definition, just picks a point of the space, instead of comparing given events (subspaces). For example, if given a choice among events that all have very high expected utility (higher than the satisficer's threshold), what is the satisficer going to do? Perhaps it should choose the option with least expected utility, but that's unclear (and likely doesn't result in utility maximization for any utility function, or anything reasonable from the alignment point of view). So the problem seems underspecified.
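The underspecification can be made concrete with a small sketch. The decision situation below is hypothetical: three events (lotteries over outcomes) are on offer, and all of them clear the satisficer's threshold. The EU maximizer's choice is fully determined; the minimal satisficer only yields a set of acceptable options, and the "least expected utility" rule is just one arbitrary way to pick among them.

```python
from typing import Dict, List, Optional

Lottery = Dict[str, float]  # outcome -> probability: one "event" on offer

def expected_utility(lottery: Lottery, utility: Dict[str, float]) -> float:
    return sum(p * utility[outcome] for outcome, p in lottery.items())

def eu_maximizer(options: Dict[str, Lottery], utility: Dict[str, float]) -> str:
    # Compares the events on offer and picks the best one: fully specified.
    return max(options, key=lambda name: expected_utility(options[name], utility))

def satisficer_candidates(options: Dict[str, Lottery], utility: Dict[str, float],
                          threshold: float) -> List[str]:
    # A minimal satisficer only says which options are "good enough".
    return [name for name, lot in options.items()
            if expected_utility(lot, utility) >= threshold]

def least_eu_satisficer(options: Dict[str, Lottery], utility: Dict[str, float],
                        threshold: float) -> Optional[str]:
    # One arbitrary tie-breaking rule: the lowest-EU option that still clears the bar.
    ok = satisficer_candidates(options, utility, threshold)
    return min(ok, key=lambda name: expected_utility(options[name], utility)) if ok else None

# Hypothetical decision situation where every option clears the threshold.
utility = {"bad": 0.0, "ok": 10.0, "good": 20.0, "great": 30.0}
options = {
    "A": {"ok": 1.0},                  # EU = 10
    "B": {"good": 0.5, "great": 0.5},  # EU = 25
    "C": {"great": 0.9, "bad": 0.1},   # EU = 27
}
```

Here `eu_maximizer(options, utility)` returns "C", while `satisficer_candidates(options, utility, 5.0)` returns all three; picking "A", the first listed option, or a random one are all consistent with the minimal definition.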

comment by steven0461 · 2023-04-12T01:11:10.102Z

Anonymous #7 asks:

I am familiar with the concept of a utility function, which assigns numbers to possible world states and considers larger numbers to be better. However, I am unsure how to apply this function to make decisions that take time into account. For example, we may be able to achieve a world with higher utility over a longer period of time, or a world with lower utility in a shorter amount of time.

↑ comment by Multicore (KaynanK) · 2023-04-14T14:29:17.223Z

When people calculate utility, they often use exponential discounting over time. For example, if your discount factor is 0.99 per year, getting something in one year is only 99% as good as getting it now, getting it in two years is only 99% as good as getting it in one year, and so on. Getting it in 100 years would be discounted to 0.99^100 ≈ 0.366, roughly 37% of the value of getting it now.
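Applied to the question, a minimal sketch of exponential discounting: each option's utility is multiplied by gamma raised to its delay, and the option with the larger present value wins. The two options and their numbers are hypothetical, chosen only to show that the answer depends on the discount factor.

```python
def discounted_value(utility: float, delay_years: float, gamma: float = 0.99) -> float:
    """Present value of receiving `utility` after `delay_years`, discounting by gamma per year."""
    return utility * gamma ** delay_years

def discounted_stream(utilities_per_year, gamma: float = 0.99) -> float:
    """Present value of a stream where utility u_t arrives in year t (t = 0, 1, 2, ...)."""
    return sum(u * gamma ** t for t, u in enumerate(utilities_per_year))

# Hypothetical options: a better world that takes longer to reach,
# versus a worse world that arrives sooner.
for gamma in (0.99, 0.999):
    late_but_better = discounted_value(100.0, delay_years=50, gamma=gamma)
    soon_but_worse = discounted_value(70.0, delay_years=10, gamma=gamma)
    print(gamma, round(late_but_better, 1), round(soon_but_worse, 1))
```

With gamma = 0.99 the sooner, worse world wins (about 63.3 vs 60.5); with gamma = 0.999 the later, better world wins (about 95.1 vs 69.3). `discounted_stream` handles the case where utility accrues every year rather than arriving once.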

comment by tgb · 2023-04-10T10:12:59.873Z

Is there a primer on the difference between training LLMs and doing RLHF on those LLMs after training? Both seem to be doing fundamentally the same thing: moving the weights in the direction that increases the likelihood that the model outputs the given text. But I gather that there are some fundamental differences in how this is done, and that RLHF isn't quite a second training round on hand-curated datapoints.

↑ comment by aog (Aidan O'Gara) · 2023-04-17T08:37:31.878Z

Some links I think do a good job:

https://huggingface.co/blog/rlhf

https://openai.com/research/instruction-following
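To make the contrast in the question concrete, here is a toy sketch, not how any production system is implemented: the "model" is just a vector of logits over a three-token vocabulary. Supervised training (pretraining or fine-tuning) raises the log-probability of a token taken from a dataset. A REINFORCE-style RL update instead samples a token from the model itself and scales the same log-probability gradient by a reward, so no target text is ever given. The reward function is a hypothetical stand-in for a learned reward model; real RLHF pipelines such as InstructGPT's use PPO, a learned reward model, and a KL penalty toward the pre-RL model, none of which are modeled here.

```python
import math
import random

def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def grad_log_prob(logits, token):
    # d/d(logits) of log softmax(logits)[token] = onehot(token) - probs
    probs = softmax(logits)
    return [(1.0 if i == token else 0.0) - p for i, p in enumerate(probs)]

def supervised_step(logits, target_token, lr=0.5):
    # Pretraining / fine-tuning: the target comes from a fixed dataset.
    g = grad_log_prob(logits, target_token)
    return [x + lr * gi for x, gi in zip(logits, g)]

def reinforce_step(logits, reward_fn, rng, lr=0.5, baseline=0.5):
    # RL: the model generates its own sample, a reward scores it, and the same
    # log-prob gradient is scaled by (reward - baseline): above-baseline samples
    # become more likely, below-baseline samples less likely.
    probs = softmax(logits)
    token = rng.choices(range(len(logits)), weights=probs)[0]
    advantage = reward_fn(token) - baseline
    g = grad_log_prob(logits, token)
    return [x + lr * advantage * gi for x, gi in zip(logits, g)]

def reward_fn(token):
    # Hypothetical reward model that only likes token 2.
    return 1.0 if token == 2 else 0.0

rng = random.Random(0)
logits = [0.0, 0.0, 0.0]
for _ in range(500):
    logits = reinforce_step(logits, reward_fn, rng)
print(softmax(logits))  # probability mass should have shifted toward token 2
```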

comment by steven0461 · 2023-04-09T22:29:54.893Z

Anonymous #5 asks:

How can programmers build something and not understand its inner workings? Are they closer to biologists doing cross-breeding than to car designers?

↑ comment by faul_sname · 2023-04-11T02:53:38.267Z

In order to predict the inner workings of a language model well enough to understand its outputs, you need to know not only the structure of the model but also the weights and how they interact. It is very hard to do that without a deep understanding of the training data, so effectively predicting what the model will do requires understanding both the model and the world it was trained on.

Here is a concrete example:

Let's say I have two functions, defined as follows:

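```python
import random

words = []

def do_training(n):
    # "Training": store n words typed in by the user.
    for _ in range(n):
        word = input('Please enter a word: ')
        words.append(word)

def do_inference(n):
    # "Inference": sample n words uniformly from the stored words.
    output = []
    for _ in range(n):
        word = random.choice(words)
        output.append(word)
    return output
```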

If I call do_training(100), hand the computer to you to type in 100 words, and you then hand it back to me (with the screen cleared), I can tell you that do_inference(100) will return 100 words drawn from some distribution, but I can't tell you what that distribution is without seeing the training data.

See this post for a more in-depth exploration of this idea.
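A non-interactive variant of the example above makes the same point without typing anything in: identical code, trained on different data, behaves differently. The names `train` and `infer` and the word lists are hypothetical; `input()` is replaced by fixed lists.

```python
import random
from collections import Counter

def train(corpus):
    # Hypothetical stand-in for do_training: "training" just stores the words.
    return list(corpus)

def infer(words, n, rng):
    # Same idea as do_inference: sample n words uniformly from what was stored.
    return [rng.choice(words) for _ in range(n)]

rng = random.Random(0)
model_a = train(["cat"] * 9 + ["dog"])  # 90% "cat"
model_b = train(["dog"] * 9 + ["cat"])  # 90% "dog"
print(Counter(infer(model_a, 1000, rng)).most_common(1))  # expected: mostly "cat"
print(Counter(infer(model_b, 1000, rng)).most_common(1))  # expected: mostly "dog"
```

Reading `infer` tells you the output is a sample from the stored words; only the data tells you which words.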

↑ comment by gilch · 2023-04-10T03:15:17.782Z

Sounds like you haven't done much programming. It's hard enough to understand code I wrote myself six months ago. (Or indeed, why the thing I wrote five minutes ago isn't behaving as expected.) Just because I wrote it doesn't mean I memorized it. Understanding what someone else wrote is usually much harder, especially if they wrote it poorly or in an unfamiliar language.

A machine learning system is even harder to understand than that. I'm sure there are some who understand in great detail what the human-written parts of the algorithm do. But to get anything useful out of a machine learning system, it needs to learn. You apply it to an enormous amount of data, and in the end, what it has learned amounts to possibly gigabytes of inscrutable matrices of floating-point numbers. On paper, a gigabyte would fill hundreds of thousands of pages or more. That is far larger than the human-written source code that generated it, which could typically fit in a small book. How that works is anyone's guess.

Reading this would be like trying to read someone's mind by examining their brain under a microscope. Maybe it's possible in principle, but don't expect a human to be able to do it. We'd need better tools. That's "interpretability research".

There are approaches to machine learning that are indeed closer to cross-breeding than to designing cars (genetic algorithms), but the current paradigm in vogue is based on neural networks, a kind of artificial brain made of virtual neurons.
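For a sense of what the "cross-breeding" style looks like in code, here is a minimal genetic-algorithm sketch on a toy problem (maximizing the number of 1s in a bit string, a standard exercise often called OneMax). The population size, mutation rate, and tournament selection are arbitrary illustrative choices. The programmer writes the breeding rules but never writes the solution itself.

```python
import random

def fitness(bits):
    # Toy objective ("OneMax"): the number of 1s. A stand-in for any score.
    return sum(bits)

def evolve(length=20, pop_size=30, generations=100, mutation_rate=0.05, seed=0):
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]
    initial_best = max(fitness(ind) for ind in pop)

    def pick(population):
        # Tournament selection: the fitter of two random individuals gets to breed.
        a, b = rng.sample(population, 2)
        return a if fitness(a) >= fitness(b) else b

    for _ in range(generations):
        children = [max(pop, key=fitness)[:]]  # elitism: carry the best over unchanged
        while len(children) < pop_size:
            mom, dad = pick(pop), pick(pop)
            cut = rng.randrange(1, length)  # one-point crossover
            child = mom[:cut] + dad[cut:]
            # Mutation: flip each bit with a small probability.
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            children.append(child)
        pop = children
    return initial_best, max(pop, key=fitness)

initial_best, best = evolve()
print(initial_best, fitness(best))
```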

↑ comment by francodanussi · 2023-04-10T02:08:04.512Z

It is true that programmers sometimes build things without understanding the underlying wiring of the systems they are using. But programmers in general create things relying on tools that were thoroughly tested. Besides that, they are builders, doers, not academics. Think of really good guitar players: they probably don't understand how sound propagates through matter, but they can play their instrument beautifully.

comment by myutin · 2023-04-09T08:18:19.064Z

I know the answer to "couldn't you just..." is always "no", but couldn't you just make an AI that doesn't try very hard? That is, one that seeks the smallest possible intervention that ensures a 95% chance of achieving whatever goal it's intended for.

This isn't a utility maximizer, because it cares about intermediate states. Some of the coherence theorems wouldn't apply.
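The proposal can be written as a constrained choice: among actions whose probability of success meets the threshold, pick the one with the smallest intervention. A minimal sketch, assuming each action comes with a known "impact" score and success probability; both inputs, the `Action` type, and the example actions are hypothetical, and defining and estimating them is where the real difficulty lies.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

@dataclass(frozen=True)
class Action:
    name: str
    impact: float     # hypothetical "size of intervention" score
    p_success: float  # hypothetical probability the goal is achieved

def mild_choice(actions: Sequence[Action], threshold: float = 0.95) -> Optional[Action]:
    # Keep only actions that are good enough, then minimize impact among them.
    feasible = [a for a in actions if a.p_success >= threshold]
    if not feasible:
        return None
    return min(feasible, key=lambda a: a.impact)

actions = [
    Action("do nothing", impact=0.0, p_success=0.10),
    Action("send an email", impact=1.0, p_success=0.96),
    Action("acquire lots of resources", impact=100.0, p_success=0.999),
]
print(mild_choice(actions))
```

Raising the threshold toward 1 pushes the choice toward high-impact actions, and no action qualifies if the bar is set above every option's success probability.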

↑ comment by Vladimir_Nesov · 2023-04-09T14:44:51.873Z

The only bound on incoherence is the ability to survive. So most of alignment is not about EU maximizers; it's about things that might eventually build something like EU maximizers, and the way they would formulate their/our values. If there is reliable global alignment security, preventing rival misaligned agents from being built anywhere in the world where they would have a fighting chance, then the only thing calling for a transition to better agent foundations is making more efficient use of the cosmos, bringing out more of the potential for the values of the current civilization.

(See also: hard problem of corrigibility, mild optimization, cosmic endowment, CEV.)

↑ comment by red75prime · 2023-04-10T01:57:33.625Z

"Hard problem of corrigibility" refers to Problem of fully updated deference - Arbital, which uses a simplification (human preferences can be described as a utility function) that can be inappropriate for the problem. Human preferences are obviously path-dependent (you don't want to be painfully disassembled and reconstituted as a perfectly happy person with no memory of disassembly). Was appropriateness of the above simplification discussed somewhere?

↑ comment by Vladimir_Nesov · 2023-04-10T16:10:59.732Z

It's mentioned there as an example of a thing that doesn't seem to work. Simplifications are often appropriate as a way of making a problem tractable, even if the analogy is lost and the results are inapplicable to the original problem. Such exercises occasionally produce useful insights in unexpected ways.

Human preference, as practiced by humans, is not the sort of thing that's appropriate to turn into a utility function in any direct way. Hence things like CEV, gesturing at the sort of processes that might have any chance of doing something relevant to turning humans into goals for strong agents. Any real attempt should involve a lot of thinking from many different frames, probably an archipelago of stable civilizations running for a long time, foundational theory on what kinds of things idealized preference is about, and this might still fail to go anywhere at human level of intelligence. The thing that can actually be practiced right now is the foundational theory, the nature of agency and norms, decision making and coordination.

If there is a problem you can't solve, then there is an easier problem you can solve: find it.

-- George Pólya

comment by steven0461 · 2023-04-08T23:44:46.683Z

Anonymous #1 asks:

This one is not technical: now that we live in a world in which people have access to systems like ChatGPT, how should I think about my career choices, particularly as a computer technician? I'm not a hard worker, and I consider my intelligence to be just a little above average, so I'm not going to pretend that I'll become a systems analyst or software engineer. But programming and content creation are increasingly being automated, so how should I update my decisions based on that?

Sure, this is a question most people could ask about their intellectual jobs, but I would like to see answers from people in this community, particularly about a field in which, more than most, employers are going to expect any technician to stay up to date with these tools.

↑ comment by gilch · 2023-04-10T03:36:41.845Z

This field is evolving so quickly that it's hard to make recommendations. In the current regime (starting approximately last November), prompt engineering is a valuable skill that can multiply your effectiveness. (In my estimation, from my usage so far, perhaps by a factor of 7.) Learn how to talk to these things. Sign up for Bing and/or ChatGPT. There are a lot of tricks. This is at least as important as learning how to use a search engine.

But how long will the current regime last? Until ChatGPT-5? Six months? A year? Maybe these prompt engineering skills will then be obsolete. Maybe you'll have a better chance of picking up the next skill if you learn the current one, but it's hard to say.

And this is assuming the next regime, or the one after that, doesn't kill us. Once we hit the singularity, all career advice is moot. Either you're dead, or we're in a post-singularity society that's impossible to predict now. Assuming we survive, we'll probably be in a post-scarcity regime where "careers" are not a thing, but no one really knows.

comment by Sky Moo (sky-moo) · 2023-04-15T15:27:50.110Z

What could be done if a rogue version of AutoGPT gets loose on the internet?

OpenAI can invalidate a specific API key; if they don't know which one, they can cancel all of them. This should halt the thing immediately.

If it were using a local model, the problem would be harder. Copies of local models may be distributed around the internet. I don't know how one could stop the agent in that situation. Can we take inspiration from how viruses and worms have been defeated in the past?

comment by steven0461 · 2023-04-11T02:56:20.928Z

Anonymous #6 asks:

Why hasn't an alien superintelligence within our light cone already killed us?

↑ comment by Seth Herd · 2023-04-11T03:45:57.792Z

I've heard two theories, and (maybe) created another.

One is that there isn't one in our light cone. Arguments like dissolving the Fermi paradox (name at least somewhat wrong) and the frequency of nova and supernova events sterilizing planets that aren't on the galactic rim are considered pretty strong, I think.

The other one I've heard is the dark forest hypothesis. In that hypothesis, an advanced culture doesn't send out signals or probes to be found. Instead it hides, to prevent or delay other potentially hostile civilizations (or AGIs) from finding it. This is somewhat compatible with aligned superintelligences that serve their civilizations' desires. Adding to the plausibility of this hypothesis is the idea that an advanced culture might not really be interested in colonizing the galaxy. We or they might prefer to mostly live in simulation, possibly with a sped-up subjective timeline. Moving away from that would mean abandoning most of your civilization for very long subjective times, given lightspeed delays. And it might be forbidden as dangerous, since it could lead unknown hostiles back to your civilization's home world.