Most People Don't Realize We Have No Idea How Our AIs Work

post by Thane Ruthenis · 2023-12-21T20:02:00.360Z · LW · GW · 42 comments

This point feels fairly obvious, yet seems worth stating explicitly.

Those of us familiar with the field of AI after the deep-learning revolution know perfectly well that we have no idea how our ML models work. Sure, we have an understanding of the dynamics of training loops and SGD's properties, and we know how ML models' architectures work. But we don't know what specific algorithms ML models' forward passes implement. We have some guesses, and some insights painstakingly mined by interpretability advances, but nothing even remotely like a full understanding.

And most certainly, we wouldn't automatically know how a fresh model trained on a novel architecture that was just spat out by the training loop works.

We're all used to this state of affairs. It's implicitly-assumed shared background knowledge. But it's actually pretty unusual, when you first learn of it.

And...

I'm pretty sure that the general public doesn't actually know that. I don't have hard data, but it's my strong impression, based on reading AI-related online discussions in communities not focused around tech, talking to people uninterested in AI advances, and so on.[1]

They still think in GOFAI terms. They still believe that all of an AI's functionality has been deliberately programmed, not trained, into it. That behind every single thing ChatGPT can do, there's a human who implemented that functionality and understands it.

Or, at the very least, that it's written in legible, human-readable and human-understandable format, and that we can intervene on it in order to cause precise, predictable changes.

Polls already show concern about AGI. If the fact that we don't know what these systems are actually thinking were widely known and properly appreciated? If there weren't the implicit assurance of "someone understands how it works and why it can't go catastrophically wrong"?

Well, I expect much more concern. Which might serve as a pretty good foundation for further pro-AI-regulations messaging. A way to acquire some political currency [LW · GW] you can spend.

So if you're doing any sort of public appeals, I suggest putting the proliferation of this information on the agenda. You get about five words [LW · GW] (per message) to the public, and "Powerful AIs Are Black Boxes" seems like a message worth sending out.[2]

[1] If you do have some hard data on that, that would be welcome.

[2] There's been some pushback on the "black box" terminology. I maintain that it's correct: ML models are black boxes relative to us, in the sense that by default, we don't have much more insight into what algorithms they execute than we'd have by looking at a homomorphically-encrypted computation to which we don't have the key, or by looking at the activity of a human brain using neuroimaging. There's been a nonzero amount of interpretability research, but it's still largely the case; and would be almost fully the case for models produced by novel architectures.

ML models are not black boxes relative to the SGD, yes. The algorithm can "see" all computations happening, and tightly intervene on them. But that seems like a fairly counter-intuitive use of the term, and I maintain that "AIs are black boxes" conveys all the correct intuitions.

42 comments

Comments sorted by top scores.

comment by Steven Byrnes (steve2152) · 2023-12-21T20:45:52.178Z · LW(p) · GW(p)

In this section [LW · GW] (including the footnote) I suggested that

- there’s a category of engineered artifacts that includes planes and bridges and GOFAI and the Linux kernel;

- there’s another category of engineered artifacts that includes plant cultivars, most pharmaceutical drugs, and trained ML models

with the difference being whether questions of the form “why is the artifact exhibiting thus-and-such behavior” are straightforwardly answerable (for the first category) or not (for the second category).

If you were to go around the public saying “we have no idea how trained ML models work, nor how plant cultivars work, nor how most pharmaceutical drugs work” … umm, I understand there’s an important technical idea that you’re trying to communicate here, but I’m just not sure about that wording. It seems at least a little bit misleading, right? I understand that there’s not much space for nuance in public communication, etc. etc. But still. I dunno. ¯\_(ツ)_/¯

Replies from: hairyfigment, Thane Ruthenis, clone of saturn

↑ comment by hairyfigment · 2023-12-25T04:16:46.367Z · LW(p) · GW(p)

There have, in fact, been numerous objections to genetically engineered plants and by implication everything in the second category. You might not realize how much the public is/was wary of engineered biology, on the grounds that nobody understood how it worked in terms of exact internal details. The reply that sort of convinced people - though it clearly didn't calm every fear about new biotech - wasn't that we understood it in a sense. It was that humanity had been genetically engineering plants via cultivation for literal millennia, so empirical facts allowed us to rule out many potential dangers.

Replies from: steve2152

↑ comment by Steven Byrnes (steve2152) · 2023-12-25T12:46:54.572Z · LW(p) · GW(p)

Oh sorry, my intention was to refer to non-GMO plant cultivars. There do exist issues with non-GMO plant cultivars, like them getting less nutritious, or occasionally being toxic, but to my knowledge the general public has never gotten riled up about any aspect of non-GMO plant breeding, for better or worse. Like you said, we’ve been doing that for millennia. (This comment is not secretly arguing some point about AI, just chatting.)

↑ comment by Thane Ruthenis · 2023-12-21T22:01:51.919Z · LW(p) · GW(p)

Hm. Solid point regarding what the counter-narrative to this would look like, I guess. Something to prepare for in advance, and shape the initial message to make harder.

Basic point: Not all things in the second category are created equal. AIs turning out to belong to the second category is just one fact about them. It's a fact that makes them less safe, but it doesn't make them exactly as safe as every other thing in that category. Updating on this information should provoke a directional update, not anchoring onto the baseline safety level of that category.

As I'd mentioned in the post, I think a lot of baseline safety-feelings towards AIs are based on the background assumption that they do belong to the first category. So taking out this assumption wouldn't be just some irrelevant datum to a lot of people – it would significantly update their judgement on the whole matter.

Replies from: boris-kashirin

↑ comment by Boris Kashirin (boris-kashirin) · 2023-12-21T22:40:24.601Z · LW(p) · GW(p)

Computer viruses belong to the first category while biological weapons and gain of function research to the second.

Replies from: shankar-sivarajan

↑ comment by Shankar Sivarajan (shankar-sivarajan) · 2023-12-22T03:36:09.827Z · LW(p) · GW(p)

Computer viruses belong to the first category

For now.

Replies from: zac-hatfield-dodds

↑ comment by Zac Hatfield-Dodds (zac-hatfield-dodds) · 2023-12-27T00:32:06.201Z · LW(p) · GW(p)

See Urschleim in Silicon: Return-Oriented Program Evolution with ROPER (2018).

Incidentally this is among my favorite theses, with a beautiful elucidation of 'weird machines' in chapter two. Recommended reading if you're at all interested in computers or computation.

↑ comment by clone of saturn · 2023-12-25T08:00:46.042Z · LW(p) · GW(p)

I think the distinction is that even for plant cultivars and pharmaceuticals, we can straightforwardly circumscribe the potential danger, e.g. a pharmaceutical will not endanger people unless they take it, and a new plant cultivar will not resist our attempts to control it outside of the usual ways plants behave. That's not necessarily the case with an AI that's smarter than us.

comment by 1a3orn · 2023-12-21T20:57:09.967Z · LW(p) · GW(p)

I think that this general point about not understanding LLMs is being pretty systematically overstated here and elsewhere in a few different ways.

(Nothing against the OP in particular, which is trying to use this politically. But leaning on things politically is not... probably [LW · GW]... the best way to make those terms clearly used? Terms even more clear than "understand" are apt to break down under political pressure, and "understand" is already a pretty floaty suitcase word.)

What do I mean?

Well, two points.

- If we don't understand the forward pass of a LLM, then according to this use of "understanding" there are lots of other things we don't understand that we nevertheless are deeply comfortable with.

Sure, we have an understanding of the dynamics of training loops and SGD's properties, and we know how ML models' architectures work. But we don't know what specific algorithms ML models' forward passes implement.

There are a lot of ways you can understand "understanding" the specific algorithm that ML models implement in their forward pass. You could say that understanding here means something like "You can turn the implemented algorithm from a very densely connected causal graph with many nodes, into an abstract and sparsely connected causal graph with a handful of nodes with human readable labels, that lets you reason about what happens without knowing the densely connected graph."

But like, we don't understand lots of things in this way! And these things can nevertheless be engineered or predicted well, and are not frightening at all. In this sense we also don't understand:

- Weather

- The dynamics going on inside rocket exhaust, or a turbofan, or anything we model with CFD software

- Every other single human's brain on this planet

- Probably our immune system

Or basically anything with chaotic dynamics. So sure, you can say we don't understand the forward pass of an LLM, so we don't understand them. But like -- so what? Not everything in the world can be decomposed into a sparse causal graph, and we still say we understand such things. We basically understand weather. I'm still comfortable flying on a plane.

- Inability to intervene effectively at every point in a causal process doesn't mean that it's unpredictable or hard to control from other nodes.

Or, at the very least, that it's written in legible, human-readable and human-understandable format, and that we can intervene on it in order to cause precise, predictable changes.

Analogously: you cannot alter rocket exhaust in predictable ways once it has been ignited. But you can alter the rocket to make the exhaust do what you want.

Similarly, you cannot alter an already-made LLM in predictable ways without training it. But you can alter an LLM that you are training in... really pretty predictable ways.

Like, here are some predictions:

(1) The LLMs that are good at chess have a bunch of chess in their training data, with absolutely 0.0 exceptions

(2) The first LLMs that are good agents will have a bunch of agentlike training data fed into them, and will be best at the areas for which they have the most high-quality data

(3) If you can get enough data to make an agenty LLM, you'll be able to make an LLM that does pretty shittily on the MMLU relative to GPT-4 etc, but which is a very effective agent, by making "useful for agent" rather than "useful textbook knowledge" the criteria for inclusion in the training data. (MMLU is not an effective policy intervention target!)

(4) Training is such an effective way of putting behavior into LLMs that even when interpretability is like, 20x better than it is now, people will still usually be using SGD or AdamW or whatever to give LLMs new behavior, even when weight-level interventions are possible.

So anyhow -- the point is that the inability to intervene or alter a process at any point along the creation doesn't mean that we cannot control it effectively at other points. We can control LLMs along other points.

(I think AI safety actually has a huge blindspot here -- like, I think the preponderance of the evidence is that the effective way to control not merely LLMs but all AI is to understand much more precisely how they generalize from training data, rather than by trying to intervene in the created artifact. But there are like 10x more safety people looking into interpretability instead of how they generalize from data, as far as I can tell.)

Replies from: faul_sname, Thane Ruthenis, Sherrinford, roger-d-1

↑ comment by faul_sname · 2023-12-21T21:49:54.608Z · LW(p) · GW(p)

But there are like 10x more safety people looking into interpretability instead of how they generalize from data, as far as I can tell.

I think interpretability is a really powerful lens for looking at how models generalize from data, partly just in terms of giving you a lot more stuff to look at than you would have purely by looking at model outputs.

If I want to understand the characteristics of how a car performs, I should of course spend some time driving the car around, measuring lots of things like acceleration curves and turning radius and power output and fuel consumption. But I should also pop open the hood, and try to figure out how the components interact, and how each component behaves in isolation in various situations, and, if possible, what that component's environment looks like in various real-world conditions. (Also I should probably learn something about what roads are like, which I think would be analogous to "actually look at a representative sample of the training data").

↑ comment by Thane Ruthenis · 2023-12-21T22:11:37.412Z · LW(p) · GW(p)

If we don't understand the forward pass of a LLM, then according to this use of "understanding" there are lots of other things we don't understand that we nevertheless are deeply comfortable with.

Solid points; I think my response to Steven [LW(p) · GW(p)] broadly covers them too, though. In essence, the reasons we're comfortable with some phenomenon/technology usually aren't based on just one factor. And I think in the case of AIs, the assumption they're legible and totally comprehended is one of the load-bearing reasons a lot of people are comfortable with them to begin with. "Just software".

So explaining how very unlike normal software they are – that they're as uncontrollable and chaotic as weather, as moody and incomprehensible as human brains – would... not actually sound irrelevant let alone reassuring to them.

Replies from: Sam FM

↑ comment by Sam FM · 2023-12-26T22:43:30.217Z · LW(p) · GW(p)

I think this is more of a disagreement on messaging than a disagreement on facts.

I don't see anyone disputing the "the AI is about as unpredictable as weather" claim, but it's quite a stretch to summarize that as "we have no idea how the AI works."

I understand that abbreviated and exaggerated messaging can be optimal for public messaging, but I don't think there's enough clarification in this post between direct in-group claims and examples of public messaging.

I would break this into three parts, to avoid misunderstandings from poorly contextualized language:

1. What is our level of understanding of AIs?

2. What is the general public's expectation of our level of understanding?

3. What's the best messaging to resolve this probable overestimation?

↑ comment by Sherrinford · 2023-12-25T22:46:03.730Z · LW(p) · GW(p)

I don't fully understand your implications of why unpredictable things should not be frightening. In general, there is a difference between understanding and creating. The weather is unpredictable but we did not create it; where we did and do create it, we indeed seem to be too careless. For human brains, we at least know that preferences are mostly not too crazy, and if they are, capabilities are not superhuman. With respect to the immune system, understanding may be not very deep, but intervention is mostly limited by understanding, and where that is not true, we may be in trouble.

↑ comment by RogerDearnaley (roger-d-1) · 2023-12-22T11:52:02.211Z · LW(p) · GW(p)

But there are like 10x more safety people looking into interpretability instead of how they generalize from data, as far as I can tell.)

An intriguing observation. But the ability to extrapolate accurately outside the training data is a result of building accurate world models. So to understand this, we'd need to understand the sorts of world models that LLMs build and how they interact. I'm having some difficulty immediately thinking of a way of studying that that doesn't require first being a lot better at interpretability than we are now. But if you can think of one, I'd love to hear it.

Replies from: Thane Ruthenis

↑ comment by Thane Ruthenis · 2023-12-22T14:08:30.137Z · LW(p) · GW(p)

I'm having some difficulty immediately thinking of a way of studying that

Pretty sure that's not what 1a3orn would say, but you can study efficient world-models directly to grok that. Instead of learning about them through the intermediary of extant AIs, you can study the thing that these AIs are trying to ever-better approximate itself.

See my (somewhat outdated) post on the matter [LW · GW], plus the natural-abstractions agenda [? · GW].

comment by RogerDearnaley (roger-d-1) · 2023-12-22T04:36:49.177Z · LW(p) · GW(p)

I'd suggest "AIs are trained, not designed" for a 5-word message to the public. Yes, that does mean that if we catch them doing something they shouldn't, the best we can do to get them to stop is to let them repeat it then hit them with the software equivalent of a rolled up newspaper and tell them "Bad neural net!", and hope they figure out what we're mad about. So we have some control, but it's not like an engineering process. [Admittedly this isn't quite a fair description for e.g. Constitutional AI: that's basically delegating the rolled-up-newspaper duty to a second AI and giving that one verbal instructions.]

Replies from: AnthonyC

↑ comment by AnthonyC · 2023-12-22T11:31:46.883Z · LW(p) · GW(p)

hit them with the software equivalent of a rolled up newspaper and telling them "Bad neural net!", and hope they figure out what we're mad about

That's actually a really clear mental image. For those conversations where I have a few sentences instead of five public-facing words, I might use it.

comment by David Lorell · 2023-12-23T00:13:47.618Z · LW(p) · GW(p)

Anecdotal 2¢: This is very accurate in my experience. Basically every time I talk to someone outside of tech/alignment about AI risk, I have to go through the whole "we don't know what algorithms the AI is running to do what it does. Yes, really." thing. Every time I skip this accidentally, I realize after a while that this is where a lot of confusion is coming from.

comment by faul_sname · 2023-12-22T02:03:32.564Z · LW(p) · GW(p)

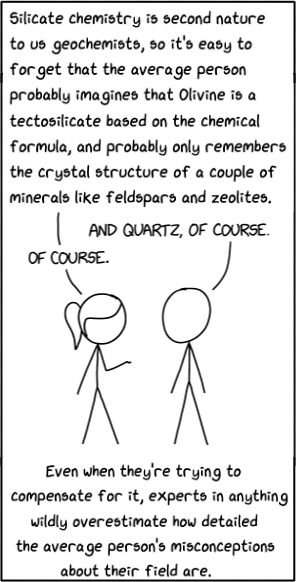

I suspect that you are attributing far too detailed of a mental model to "the general public" here. Riffing off your xkcd:

↑ comment by Thane Ruthenis · 2023-12-22T02:17:52.294Z · LW(p) · GW(p)

I don't think I'm doing so? I think it could be safely assumed that people have an idea of "software", and that they know that AI is a type of software. Other than that, I'm largely assuming that they have no specific beliefs about how AI works, a blank map. Which, however, means that when they think of AI, they think about generic "software", thereby importing their ideas about how software works to AI. And those ideas include "people wrote it", which is causing the misconception I suspect them to have.

What's your view on this instead?

Replies from: AnthonyC, faul_sname

↑ comment by AnthonyC · 2023-12-22T11:44:45.078Z · LW(p) · GW(p)

I think it could be safely assumed that people have an idea of "software", and that they know that AI is a type of software.

I second faul_sname's point. I have a relative whose business involves editing other people's photos as a key step. It's amazing how often she comes across customers who have no idea what a file is, let alone how to attach one to an email. These are sometimes people who have already sent her emails with files attached in the past. Then add all the people who can't comprehend, after multiple rounds of explanation, that there is a difference between a photo file, like a jpeg, as opposed to a screenshot of their desktop or phone when the photo is pulled up. Yet somehow they know how to take a screenshot and send it to her.

For so many people, their computer (or car, or microwave, etc.) really is just a magic black box anyway, and if it breaks you go to a more powerful wizard to re-enchant it. The idea that it has parts and you can understand how they work is just... not part of the mental process.

↑ comment by faul_sname · 2023-12-22T02:28:48.579Z · LW(p) · GW(p)

I think it could be safely assumed that people have an idea of "software"

Speaking as a software developer who interacts with end-users sometimes, I think you might be surprised at what the mental model of typical software users, rather than developers, looks like. When people who have programmed, or who work a lot with computers, think of "software", we think of systems which do exactly what we tell them to do, whether or not that is what we meant. However, the world of modern software does its best to hide the sharp edges from users, and the culture of constant A/B tests means that software doesn't particularly behave the same way day-in and day-out from the perspective of end-users. Additionally, UX people will spend a lot of effort figuring out how users intuitively expect a piece of software to work, and then companies will spend a bunch of designer and developer time to ensure that their software meets the intuitive expectations of the users as closely as possible (except in cases where meeting intuitive expectations would result in reduced profits).

As such, from the perspective of a non-power user, software works about the way that a typical person would naively expect it to work, except that sometimes it mysteriously breaks for no reason.

comment by frontier64 · 2023-12-21T22:27:01.756Z · LW(p) · GW(p)

There are certain behaviors of LLMs that you could reasonably say are explicitly programmed in. ChatGPT has undergone extensive torture to browbeat it into being a boring, self-effacing, politically-correct helpful assistant. The LLM doesn't refuse to say a racial slur even if doing so would save millions of lives because its creator had it spend billions of hours of compute predicting internet tokens. That behavior comes from something very different than what created the LLM in the first place. Same for all the refusals to answer questions and most other weird speech that the general public so often takes issue with.

The people are more right than not when they complain how the LLM creators are programming terrible behaviors into it.

If there were un-RLHF'd and un-prompt-locked powerful LLMs out there that the public casually engaged with, they would definitely complain about how the programmers were programming in bad behaviors too, don't get me wrong. But that's a different world.

comment by Martin Fell (martin-fell) · 2023-12-21T20:59:17.408Z · LW(p) · GW(p)

This fits with my experience talking to people unfamiliar with the field. Many do seem to think it's closer to GOFAI, explicitly programmed, maybe with a big database of stuff scraped from the internet that gets mixed-and-matched depending on the situation.

Examples include:

- Discussions around the effect of AI in the art world often seem to imply that these AIs are taking images directly from the internet and somehow "merging" them together, using a clever (and completely unspecified) algorithm. Sometimes it's implied or even outright stated that this is just a new way to get around copyright.

- Talking about ChatGPT with some friends who have some degree of coding / engineering knowledge, they frequently say things like "it's not really writing anything, it's just copied from a database / the internet".

- I've also read many news articles and comments which refer to AIs being "programmed", e.g. "ChatGPT is programmed to avoid violence", "programmed to understand human language", etc.

I think most people who have more than a very passing interest in the topic have a better understanding than that though. And I suspect that many completely non-technical people have such a vague understanding of what "programmed" means that it could apply to training an LLM or explicitly coding an algorithm. But I do think this is a real misunderstanding that is reasonably widespread.

comment by Lao Mein (derpherpize) · 2023-12-22T03:26:30.350Z · LW(p) · GW(p)

Where on the scale of data model complexity from linear regression to GPT4 do we go from understanding how our AIs work to not? Or is it just a problem with data models without a handcrafted model of the world in general?

Replies from: roger-d-1

↑ comment by RogerDearnaley (roger-d-1) · 2023-12-22T11:41:41.032Z · LW(p) · GW(p)

It's a gradual process. I'd say things currently start to get really murky past about 2-3-layer networks. GPT-4 has O(100) layers, which is like deep ocean depths.

comment by DusanDNesic · 2023-12-25T11:49:26.530Z · LW(p) · GW(p)

My experience is much like this (for context I've spoken about AIS to the general public, online but mostly offline, to audiences from students to politicians). The more poetic, but also effective and intuitive way to call this out (while sacrificing some accuracy but I think not too much) is: "we GROW AI". It puts it in categories with genetic engineering and pharmaceuticals fairly neatly, and shows the difference between PowerPoint and ChatGPT in how they are made and why we don't know how it works. It is also more intuitive compared to "black box" which is a more technical term and not widely known.

comment by zrezzed (kyle-ibrahim) · 2023-12-24T17:25:23.404Z · LW(p) · GW(p)

That behind every single thing ChatGPT can do, there's a human who implemented that functionality and understands it.

Or, at the very least, that it's written in legible, human-readable and human-understandable format, and that we can intervene on it in order to cause precise, predictable changes.

I’m fairly sure you have, in fact, made the same mistake as you have pointed out! Most people… have exactly no idea what a computer is. They do not understand what software is, or that it is something an engineer implements. They do not understand the idea of a “predictable” computer program.

I find it deeply fascinating most of the comments here are providing pushback in the other direction :)

comment by ChristianKl · 2023-12-22T00:35:24.887Z · LW(p) · GW(p)

I'm pretty sure that the general public doesn't actually know that. They still think in GOFAI terms. They still believe that all of an AI's functionality has been deliberately programmed, not trained, into it. That behind every single thing ChatGPT can do, there's a human who implemented that functionality and understands it.

On what basis do you hold that belief? It seems to be a quite central claim to the post and you just assert it to be true.

Replies from: Thane Ruthenis

↑ comment by Thane Ruthenis · 2023-12-22T00:46:37.835Z · LW(p) · GW(p)

Reading online comments about AI advances in non-tech-related spaces (look for phrases like "it's been programmed to" and such – what underlying model-of-AI does that imply?), directly talking to people who are not particularly interested in technology, and reflecting on my own basic state of knowledge about modern AI when I'd been a tech-savvy but not particularly AI-interested individual.

Generally speaking, appreciating what "modern AIs are trained, not programmed" implies requires a fairly fine-grained model of AI. Not something you can develop by osmosis yet – you'd need to actually read a couple articles on the topic, actively trying to understand it. I'm highly certain most people never feel compelled to do that.

I suppose I don't have hard data, though, that's fair. If there are any polls or such available, I'd appreciate seeing that.

Edit: Edited the post a bit to make my epistemic status on the issue clear. I'm fairly confident, but it's indeed prudent to point out what sources my confidence comes from.

Replies from: Jay Bailey

↑ comment by Jay Bailey · 2023-12-22T01:02:03.103Z · LW(p) · GW(p)

Anecdotally I have also noticed this - when I tell people what I do, the thing they are frequently surprised by is that we don't know how these things work.

As you implied, if you don't understand how NN's work, your natural closest analogue to ChatGPT is conventional software, which is at least understood by its programmers. This isn't even people being dumb about it, it's just a lack of knowledge about a specific piece of technology, and a lack of knowledge that there is something to know - that NN's are in fact qualitatively different from other programs.

Replies from: ChristianKl

↑ comment by ChristianKl · 2023-12-22T20:53:23.223Z · LW(p) · GW(p)

As you implied, if you don't understand how NN's work, your natural closest analogue to ChatGPT is conventional software, which is at least understood by its programmers.

It's worth noting that conventional software is also often not fully understood. The ranking algorithms of the major tech companies are complex enough that there might be no human that fully understands them.

comment by RogerDearnaley (roger-d-1) · 2024-01-10T10:17:31.814Z · LW(p) · GW(p)

To be fair, saying we have "No Idea How Our AIs Work" is overstating the case. As recent developments like Singular Learning Theory [? · GW] and the paper discussed in my post Striking Implications for Learning Theory, Interpretability — and Safety? [LW · GW] show, we are gradually starting to develop some idea how they work. But I completely agree that most laypeople presumably assume that we actually understand this, rather than having recently developed some still-hazy ideas of what's going on inside them. (And I see plenty of discussion on LW that's out of date on some of these recent theoretical developments, so this knowledge definitely isn't widely dispersed yet in the AI Safety community.)

Replies from: nikolas-kuhn

↑ comment by Amalthea (nikolas-kuhn) · 2024-01-10T10:27:36.130Z · LW(p) · GW(p)

I think it's also easy to falsely conflate progress in understanding with having achieved some notable level of understanding. Whether one has the latter will likely only become clear after a significant passage of time, so it's hard to make a judgement right away. That said, it's fair to say "No idea" is overstating the case compared to e.g. "We understand very little about".

Replies from: roger-d-1

↑ comment by RogerDearnaley (roger-d-1) · 2024-01-10T10:53:31.186Z · LW(p) · GW(p)

As someone who's been working in the ML field for ~5 years, there are pieces of what 5 years ago were common folk-wisdom about training AI that everyone knew but most people were very puzzled by (e.g. "the loss functions of large neural nets have a great many local minima, but they all seem to have about the same low level of the loss function, so getting trapped in a local minimum isn't actually a significant problem, especially for over-parameterized networks") that now have a simple, intuitive explanation from Singular Learning Theory (many of those local minima are related by a large number of discrete-and-continuous symmetries, so have identical loss function values), plus we now additionally understand why some of them have slightly better and worse values of the loss function and under which circumstances a model will settle at which of them, and how that relates to Occam's Razor, training set size, generalization, internal symmetries, and so forth. We have made some significant advances in theoretical understanding. So a field that used to be like Alchemy, almost entirely consisting of unconnected facts and folklore discovered by trial and error, is starting to turn into something more like Chemistry with some solid theoretical underpinnings. Yes, there's still quite a ways to go, and yes timelines look short for AI Safety, so really I wish we had more understanding as soon as possible. However, I think "we know very little about" was accurate a few years ago, but has since become an understatement.
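The simplest of the discrete symmetries mentioned above can be checked directly. The following is a minimal sketch, not anything from Singular Learning Theory itself: it assumes a toy one-hidden-layer tanh network with random weights and random data (all names and sizes here are illustrative), and shows that reordering the hidden units gives a different point in weight space with the same loss.

```python
import math
import random

def forward(w1, b1, w2, b2, x):
    """One-hidden-layer tanh network with a scalar output."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w1, b1)]
    return sum(v * h for v, h in zip(w2, hidden)) + b2

def permute_hidden(w1, b1, w2, perm):
    """Reorder hidden units: same function, different point in weight space."""
    return [w1[i] for i in perm], [b1[i] for i in perm], [w2[i] for i in perm]

def mse(params, data):
    """Mean squared error of the network over (input, target) pairs."""
    w1, b1, w2, b2 = params
    return sum((forward(w1, b1, w2, b2, x) - y) ** 2 for x, y in data) / len(data)

# Toy random network and data (illustrative assumptions, not a trained model).
rng = random.Random(0)
n_in, n_hidden = 3, 4
w1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
b1 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
b2 = rng.uniform(-1, 1)
data = [([rng.uniform(-1, 1) for _ in range(n_in)], rng.uniform(-1, 1))
        for _ in range(20)]

perm = [2, 0, 3, 1]
pw1, pb1, pw2 = permute_hidden(w1, b1, w2, perm)

loss_original = mse((w1, b1, w2, b2), data)
loss_permuted = mse((pw1, pb1, pw2, b2), data)

# The two losses agree up to floating-point summation order.
print(abs(loss_original - loss_permuted) < 1e-12)
```

This only covers hidden-unit permutations. It says nothing about the continuous symmetries, or about whether independently trained networks actually land on minima related in this way.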

Replies from: joseph-van-name↑ comment by Joseph Van Name (joseph-van-name) · 2024-01-10T14:35:05.055Z · LW(p) · GW(p)

I am curious about your statement that all large neural networks are isomorphic or nearly isomorphic and therefore have identical loss values. This should not be too hard to test.

Let $A, B$ be training data sets. Let $M, N$ be neural networks. First train $M$ on $A$ and $N$ on $B$. Then slowly switch the training sets, so that we eventually train both $M$ and $N$ on just $A$. After fully training $M$ and $N$, one should be able to train an isomorphism between the networks $M$ and $N$ (here I assume that $M$ and $N$ are designed properly so that they can produce such an isomorphism) so that the value for each node in $N$ can be perfectly computed from each node in $M$. Furthermore, for every possible input, the neural networks should give the exact same output. If this experiment does not work, then one should be able to set up another experiment that does actually work.

I have personally trained many ML systems for my cryptocurrency research where after training two systems on the exact same data but with independent random initializations, the fitness levels differ only by floating-point error, and I am able to find an exact isomorphism between these systems (and sometimes they are exactly the same and I do not need to find any isomorphism). But I have designed these ML systems to satisfy these properties along with other properties, and I have not seen this with neural networks. In fact, the property of attaining the exact same fitness level is a bit fragile.

I found a Bachelor's thesis (people should read these occasionally; I apologize for selecting a thesis from Harvard) where someone tried to find an isomorphism between 1000 small trained machine learning models, and no such isomorphism was found.

https://dash.harvard.edu/bitstream/handle/1/37364688/SORENSEN-SENIORTHESIS-2020.pdf?sequence=1

Or maybe one can find a more complicated isomorphism between neural networks since a node permutation is quite oversimplistic.
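To make the simplest notion of isomorphism concrete, here is a minimal sketch of a check for whether two one-hidden-layer networks are related by a pure permutation of hidden nodes. Everything in it is an assumption made for illustration: the `(W1, b1, W2)` parameter layout, the helper names `unit_signature` and `find_hidden_permutation`, and the tolerance are all hypothetical, and the brute-force search is only practical for tiny layers. It is not the method used in the thesis above.

```python
from itertools import permutations

def unit_signature(net, i):
    """All parameters attached to hidden unit i: incoming weights, bias, outgoing weight."""
    W1, b1, W2 = net
    return list(W1[i]) + [b1[i], W2[i]]

def find_hidden_permutation(net_a, net_b, tol=1e-9):
    """Return perm such that unit perm[j] of net_a matches unit j of net_b, else None.

    Brute force over all orderings, so the cost grows factorially with layer width.
    """
    n = len(net_a[1])
    if len(net_b[1]) != n:
        return None
    sig_a = [unit_signature(net_a, i) for i in range(n)]
    sig_b = [unit_signature(net_b, j) for j in range(n)]
    for perm in permutations(range(n)):
        if all(
            max(abs(p - q) for p, q in zip(sig_a[perm[j]], sig_b[j])) <= tol
            for j in range(n)
        ):
            return perm
    return None

# Hypothetical toy network: 2 inputs, 3 hidden units, scalar output.
W1 = [[0.1, -0.2], [0.3, 0.4], [-0.5, 0.6]]
b1 = [0.0, 0.1, -0.1]
W2 = [1.0, -2.0, 0.5]
net_a = (W1, b1, W2)

# net_b is net_a with its hidden units reordered, so a permutation must exist.
order = [2, 0, 1]
net_b = ([W1[i] for i in order], [b1[i] for i in order], [W2[i] for i in order])

# net_c differs in one outgoing weight, so no pure permutation can match it.
net_c = (W1, b1, [1.0, -2.0, 0.7])

match_b = find_hidden_permutation(net_a, net_b)
match_c = find_hidden_permutation(net_a, net_c)
print(match_b, match_c)
```

A check this strict fails as soon as two networks differ by anything other than a reordering, which is one way to read the remark that node permutation alone is too simple a notion of isomorphism.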

Replies from: roger-d-1↑ comment by RogerDearnaley (roger-d-1) · 2024-01-10T21:35:42.552Z · LW(p) · GW(p)

I gather node permutation is only one of the symmetries involved, which include both discrete symmetries like permutations and continuous ones such as symmetries involving shifting sets of parameters in ways that produce equivalent network outputs.
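As a small illustration of the two kinds of symmetry mentioned here, the sketch below builds a toy one-hidden-layer ReLU network and checks that permuting hidden units (a discrete symmetry) and rescaling a unit's incoming and outgoing weights (a continuous symmetry) both leave the output unchanged up to rounding. The network shape, the function names, and the specific permutation and scale factors are assumptions chosen for the example; the rescaling trick relies on ReLU specifically and does not carry over to every activation function.

```python
import random

def relu(x):
    return x if x > 0.0 else 0.0

def forward(W1, b1, W2, x):
    """One-hidden-layer ReLU network with scalar output: W2 . relu(W1 x + b1)."""
    hidden = [relu(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    return sum(v * h for v, h in zip(W2, hidden))

def permute_hidden(W1, b1, W2, perm):
    """Discrete symmetry: reorder hidden units consistently in every parameter array."""
    return [W1[i] for i in perm], [b1[i] for i in perm], [W2[i] for i in perm]

def rescale_hidden(W1, b1, W2, scales):
    """Continuous symmetry: relu(c*z) = c*relu(z) for c > 0, so multiplying a unit's
    incoming weights and bias by c and dividing its outgoing weight by c cancels out."""
    W1s = [[c * w for w in row] for row, c in zip(W1, scales)]
    b1s = [c * b for b, c in zip(b1, scales)]
    W2s = [v / c for v, c in zip(W2, scales)]
    return W1s, b1s, W2s

rng = random.Random(0)
n_in, n_hidden = 3, 4
W1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
b1 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
W2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
inputs = [[rng.uniform(-2, 2) for _ in range(n_in)] for _ in range(20)]

permuted = permute_hidden(W1, b1, W2, [2, 0, 3, 1])
rescaled = rescale_hidden(W1, b1, W2, [0.5, 2.0, 3.0, 0.1])

# Largest output difference between the original and each transformed network.
max_gap_perm = max(abs(forward(W1, b1, W2, x) - forward(*permuted, x)) for x in inputs)
max_gap_scale = max(abs(forward(W1, b1, W2, x) - forward(*rescaled, x)) for x in inputs)
print(max_gap_perm, max_gap_scale)
```

Because the transformed networks compute the same function on every input, they get the same loss on any data set, even though their parameter vectors are different points in weight space.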

As I understand it (and I'm still studying this stuff), the prediction from Singular Learning Theory is that there are large sets of local minima, each set internally isomorphic to each other so having the same loss function value (modulo rounding errors or not having quite settled to the optimum). But the prediction of SLT is that there are generically multiple of these sets, whose loss functions are not the same. The ones whose network representation is simplest (i.e. with lowest Kolmogorov complexity when expressed in this NN architecture) will have the largest isometry symmetry group, so are the easiest to find/are most numerous and densely packed in the space of all NN configurations. So we get Occam's Razor for free. However, typically the best ones with the best loss values will be larger/more complex, so harder to locate. That is about my current level of understanding of SLT, but I gather that with a large enough training set and suitable SGD learning metaparameter annealing one can avoid settling in a less good lower-complexity minimum and attempt to find a better one, thus improving your loss function result, and there is some theoretical mathematical understanding of how well one can expect to do based on training set size.

comment by Simon Fischer (SimonF) · 2023-12-24T14:25:06.427Z · LW(p) · GW(p)

"Powerful AIs Are Black Boxes" seems like a message worth sending out

Everybody knows what (computer) scientists and engineers mean by "black box", of course.

Replies from: jane-mccourt↑ comment by Heron (jane-mccourt) · 2023-12-26T12:51:35.839Z · LW(p) · GW(p)

I laughed when I read 'black box.' Us oldies who predominantly use Microsoft (Word and Excel) and Google Docs* are mostly bewildered by the very language of computer technology. For some, there is overwhelming fear because of ignorance. Regardless of MScs and PhDs, I know someone who refuses to consider the possibilities of AI, insisting that it is simply the means to amass data, perchance to extrapolate. It is, I think, worth educating even the old folk (me) whose ability to update is limited.

* And maybe Zoom, and some social media.

comment by Review Bot · 2024-02-14T06:49:04.197Z · LW(p) · GW(p)

The LessWrong Review runs every year to select the posts that have most stood the test of time. This post is not yet eligible for review, but will be at the end of 2024. The top fifty or so posts are featured prominently on the site throughout the year.

Hopefully, the review is better than karma at judging enduring value. If we have accurate prediction markets on the review results, maybe we can have better incentives on LessWrong today. Will this post make the top fifty?

comment by PottedRosePetal · 2023-12-30T22:05:14.177Z · LW(p) · GW(p)

In my opinion it does not matter to the average person. To them anything to do with a PC is a black box. So now you tell them that AI is... more black box? They won't really get the implications.

It is the wrong thing to focus on in my opinion. I think in general the notion that we create digital brains is more useful long term. We can tell people "well, doesn't xy happen to you as well sometimes? The same happens to the AI." Take hallucination: "Don't you also sometimes remember stuff wrongly? Don't you also sometimes strongly believe something to be true only to be proven wrong?" is a way better explanation to the average person than "it's a black box, we don't know". They will get the potential in their head, they will get that we create something novel, we create something that can grow smarter. We model the brain. And we just lack some parts in models, like the part that moves stuff, the parts that plan very well.

Yes, this may make people think that models are more conscious or more "alive" than they are. But that debate is coming anyway. Since people tend to stick with what they first heard, let's instill that now even though we may be some distance from that.

I think we can assume that AGI is coming, or ASI, whatever you want to call it. And personally I doubt we will be able to create it without a shadow of a doubt in the AI community that it has feelings or some sort of emotions (or even consciousness, but that's another rabbit hole). Just like the e/acc and e/alt movements (sorry, I don't like EA as a term) there will be "robotic rights" and "robots are tools" movements. I personally know which side I will probably stand on, but that's not this debate; I just want to argue that this debate will exist and my method prepares people for that. "Black box" does not do that. Once that debate comes, it's basically saying "well idk". "Digital brain" raises the question of whether emotions are part of our wild mix of brain parts or not.