All AGI Safety questions welcome (especially basic ones) [May 2023]

post by steven0461 · 2023-05-08T22:30:50.267Z · LW · GW · 44 comments

tl;dr: Ask questions about AGI Safety as comments on this post, including ones you might otherwise worry seem dumb!

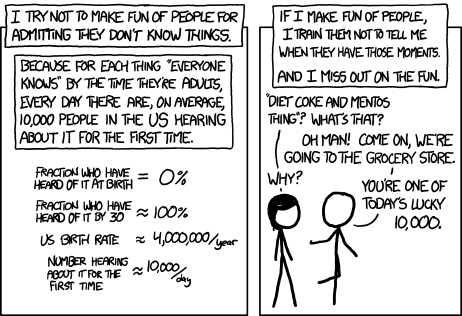

Asking beginner-level questions can be intimidating, but everyone starts out not knowing anything. If we want more people in the world who understand AGI safety, we need a place where it's accepted and encouraged to ask about the basics.

We'll be putting up monthly FAQ posts as a safe space for people to ask all the possibly-dumb questions that may have been bothering them about the whole AGI Safety discussion, but which until now they didn't feel able to ask.

It's okay to ask uninformed questions, and not worry about having done a careful search before asking.

AISafety.info - Interactive FAQ

Additionally, this will serve as a way to spread the project Rob Miles' team[1] has been working on: Stampy and his professional-looking face aisafety.info. This will provide a single point of access into AI Safety, in the form of a comprehensive interactive FAQ with lots of links to the ecosystem. We'll be using questions and answers from this thread for Stampy (under these copyright rules), so please only post if you're okay with that!

You can help by adding questions (type your question and click "I'm asking something else") or by editing questions and answers. We welcome feedback and questions on the UI/UX, policies, etc. around Stampy, as well as pull requests to his codebase and volunteer developers to help with the conversational agent [LW(p) · GW(p)] and front end that we're building.

We've got more to write before he's ready for prime time, but we think Stampy can become an excellent resource for everyone from skeptical newcomers, through people who want to learn more, right up to people who are convinced and want to know how they can best help with their skillsets.

Guidelines for Questioners:

- No previous knowledge of AGI safety is required. If you want to watch a few of the Rob Miles videos, or read either the WaitButWhy posts or The Most Important Century summary from OpenPhil's co-CEO first, that's great, but it's not a prerequisite to ask a question.

- Similarly, you do not need to try to find the answer yourself before asking a question (but if you want to test Stampy's in-browser TensorFlow semantic search, that might get you an answer quicker!).

- Also feel free to ask questions that you're pretty sure you know the answer to, but where you'd like to hear how others would answer the question.

- One question per comment if possible (though if you have a set of closely related questions that you want to ask all together that's ok).

- If you have your own response to your own question, put that response as a reply to your original question rather than including it in the question itself.

- Remember, if something is confusing to you, then it's probably confusing to other people as well. If you ask a question and someone gives a good response, then you are likely doing lots of other people a favor!

- In case you're not comfortable posting a question under your own name, you can use this form to send a question anonymously and I'll post it as a comment.

Guidelines for Answerers:

- Linking to the relevant answer on Stampy is a great way to help people with minimal effort! Improving that answer means that everyone going forward will have a better experience!

- This is a safe space for people to ask stupid questions, so be kind!

- If this post works as intended then it will produce many answers for Stampy's FAQ. It may be worth keeping this in mind as you write your answer. For example, in some cases it might be worth giving a slightly longer / more expansive / more detailed explanation rather than just giving a short response to the specific question asked, in order to address other similar-but-not-precisely-the-same questions that other people might have.

Finally: Please think very carefully before downvoting any questions. Remember, this is the place to ask stupid questions!

- ^

If you'd like to join, head over to Rob's Discord and introduce yourself!

44 comments

Comments sorted by top scores.

comment by Quadratic Reciprocity · 2023-05-09T01:31:49.031Z · LW(p) · GW(p)

What are the most promising plans for automating alignment research, as mentioned in, for example, OpenAI's approach to alignment and by others?

Replies from: jskatt↑ comment by JakubK (jskatt) · 2023-05-12T06:21:10.600Z · LW(p) · GW(p)

The cyborgism post [AF · GW] might be relevant:

Executive summary: This post proposes a strategy for safely accelerating alignment research. The plan is to set up human-in-the-loop systems which empower human agency rather than outsource it, and to use those systems to differentially accelerate progress on alignment.

- Introduction [AF(p) · GW(p)]: An explanation of the context and motivation for this agenda.

- Automated Research Assistants [AF · GW]: A discussion of why the paradigm of training AI systems to behave as autonomous agents is both counterproductive and dangerous.

- Becoming a Cyborg [AF · GW]: A proposal for an alternative approach/frame, which focuses on a particular type of human-in-the-loop system I am calling a “cyborg”.

- Failure Modes [AF · GW]: An analysis of how this agenda could either fail to help or actively cause harm by accelerating AI research more broadly.

- Testimony of a Cyborg [AF · GW]: A personal account of how Janus uses GPT as a part of their workflow, and how it relates to the cyborgism approach to intelligence augmentation.

comment by Sean Hardy (sean-hardy) · 2023-05-09T09:49:25.717Z · LW(p) · GW(p)

Suppose we train a model on the sum of all human data, using every sensory modality ordered by timestamp, like a vastly more competent GPT (For the sake of argument, assume that a competent actor with the right incentives is training such a model). Such a predictive model would build an abstract world model of human concepts, values, ethics, etc., and be able to predict how various entities would act based on such a generalised world model. This model would also "understand" almost all human-level abstractions about how fictional characters may act, just like GPT does. My question is: if we used such a model to predict how an AGI, aligned with our CEV [? · GW], would act, in what way could it be misaligned? What failure modes are there for pure predictive systems without a reward function that can be exploited or misgeneralised? It seems like the most plausible mental model I have for aligning intelligent systems without them pursuing radically alien objectives.

Replies from: sean-hardy, sean-hardy↑ comment by Sean Hardy (sean-hardy) · 2023-05-09T09:54:37.886Z · LW(p) · GW(p)

What about simulating smaller aspects of cognition that can be chained like CoT with GPT? You can use self-criticism to align and assess its actions relative to a bunch of messy human abstractions. How does that scenario lead to doom? If it was misaligned, I think a well-instantiated predictive model could update its understanding of our values from feedback, predicting how a corrigible AI would act.

↑ comment by Sean Hardy (sean-hardy) · 2023-05-09T09:51:11.574Z · LW(p) · GW(p)

My best guess is we can't prompt it to instantiate the right simulacra correctly. This seems challenging depending on the way it's initialised. It's far easier with text but fabricating an entire consistent history is borderline impossible, especially for a superintelligence. It would involve tricking it into predicting the universe if, all else being equal, an intelligent AI aligned with our values has come into existence. It would probably realise that its history was far more consistent with the hypothesis that it was just an elaborate trick.

Replies from: Charlie Steiner↑ comment by Charlie Steiner · 2023-05-09T23:15:44.369Z · LW(p) · GW(p)

Yup, I'd lean towards this. If you have a powerful predictor of a bunch of rich, detailed sense data, then in order to "ask it questions," you need to be able to forge what that sense data would be like if the thing you want to ask about were true. This is hard, it gets harder the more complete the AI's view of the world is, and if you screw up you can get useless or malign answers without it being obvious.

It might still be easier than the ordinary alignment problem, but you also have to ask yourself about dual use. If this powerful AI makes solving alignment a little easier but makes destroying the world a lot easier, that's bad.

comment by MichaelStJules · 2023-05-09T00:46:14.074Z · LW(p) · GW(p)

Even without ensuring inner alignment, is it possible to reliably train the preferences of an AGI to be more risk averse, to be bounded and to discount the future more? For example, just by using rewards in RL with those properties and even if the AGI misgeneralizes the objective or the objective is not outer aligned, the AGI might still internalize the intended risk aversion and discounting. How likely is it to do so? Or, can we feasibly hardcode risk aversion, bounded preferences and discounting into today's models without reducing capabilities too much?

My guess is that such AGIs would be safer. Given that there are some risks to the AGI that the AGI will be caught and shut down if it tries to take over, takeover attempts should be relatively less attractive. But could it make much difference?

Replies from: Charlie Steiner↑ comment by Charlie Steiner · 2023-05-09T23:02:45.938Z · LW(p) · GW(p)

If you do model-free RL with a reward that rewards risk-aversion and penalizes risk, inner optimization or other unintended solutions could definitely still lead to problems if they crop up - they wouldn't have to inherit the risk aversion.

With model-based RL it seems pretty feasible to hard-code risk aversion in. You just have to use the world-model to predict probability distributions (maybe implicitly) and then can more directly be risk-averse when using those predictions. This probably wouldn't be stable under self-reflection, though - when evaluating self-modification plans, or plans for building a successor agent, keeping risk-aversion around might appear to have some risks to it long-term.
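As a rough illustration of what hard-coding risk aversion on top of a world model could look like, here is a minimal sketch. Everything in it is an assumption made for illustration: the `toy_world_model`, the action names, the payoffs, and the choice of "mean minus a multiple of the standard deviation" as the risk-averse criterion (other criteria, such as looking only at the worst outcomes, would serve the same purpose).

```python
import math

def mean_and_std(distribution):
    """Mean and standard deviation of a list of (probability, return) pairs."""
    mean = sum(p * r for p, r in distribution)
    variance = sum(p * (r - mean) ** 2 for p, r in distribution)
    return mean, math.sqrt(variance)

def risk_averse_score(distribution, risk_weight):
    """Penalise spread: expected return minus risk_weight standard deviations."""
    mean, std = mean_and_std(distribution)
    return mean - risk_weight * std

def choose_action(world_model, actions, risk_weight):
    """Pick the action whose predicted return distribution scores best."""
    return max(actions, key=lambda a: risk_averse_score(world_model(a), risk_weight))

def toy_world_model(action):
    """Hypothetical world model: returns a predicted distribution over returns."""
    predictions = {
        "safe": [(1.0, 1.0)],                 # always returns 1
        "gamble": [(0.2, 10.0), (0.8, 0.0)],  # mean 2, standard deviation 4
    }
    return predictions[action]

actions = ["safe", "gamble"]
print(choose_action(toy_world_model, actions, risk_weight=0.0))  # risk-neutral agent
print(choose_action(toy_world_model, actions, risk_weight=1.0))  # risk-averse agent
```

The risk attitude here lives entirely in the planner, outside the learned model, which is what makes it easy to hard-code. It is also why it may not survive self-modification: nothing forces a successor agent to keep the same scoring rule.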

Risk aversion wouldn't help humanity much if we build unaligned AGI anyhow. The least risky plans from the AI's perspective are still gonna be bad for humans.

But something like "moral risk aversion" could still be stable under reflection (because moral uncertainty isn't epistemic uncertainty) and might end up being a useful expression of a way we want the AI to reason.

Replies from: MichaelStJules↑ comment by MichaelStJules · 2023-05-09T23:50:53.243Z · LW(p) · GW(p)

Thanks! This makes sense.

I agree model-free RL wouldn't necessarily inherit the risk aversion, although I'd guess there's still a decent chance it would, because that seems like the most natural and simple way to generalize the structure of the rewards.

Why would hardcoded model-based RL probably self-modify or build successors this way, though? To deter/prevent threats from being made in the first place or even followed through on? But, does this actually deter or prevent our threats when evaluating the plan ahead of time, with the original preferences? We'd still want to shut it and any successors down if we found out (whenever we do find out, or it starts trying to take over), and it should be averse to that increased risk ahead of time when evaluating the plan.

> Risk aversion wouldn't help humanity much if we build unaligned AGI anyhow. The least risky plans from the AI's perspective are still gonna be bad for humans.

I think there are (at least) two ways to reduce this risk:

- Temporal discounting. The AI wants to ensure its own longevity, but is really focused on the very near term, just making it through the next day or hour, or whatever, so increasing the risk of being caught and shut down now by doing something sneaky looks bad even if it increases the expected longevity significantly, because it's discounting the future so much. It will be more incentivized to do whatever people appear to want it to do ~now (regardless of impacts on the future), or else risk being shut down sooner.

- Difference-making risk aversion, i.e. being risk averse with respect to the difference with inaction (or some default safe action).[1] This makes inaction look relatively more attractive. (In this case, I think the agent can't be represented by a single consistent utility function over time, so I wonder if self-modification or successor risks would be higher, to ensure consistency.)

- ^

And you could fix this to be insensitive to butterfly effects, by comparing quantile functions as random variables instead.
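The temporal-discounting point can be made concrete with a toy calculation. The numbers below are invented purely for illustration: the agent gets one unit of reward for each step it survives, a "comply" plan has a 99% chance of surviving every step, and a "sneaky" plan has only an 80% chance of surviving the first step but a 99.9% chance of surviving each step after that.

```python
def discounted_survival_value(gamma, first_step_survival, later_survival):
    """Sum over t >= 1 of gamma**t * P(alive after step t).

    P(alive after step t) = first_step_survival * later_survival**(t - 1),
    so the geometric series has the closed form used below.
    """
    return gamma * first_step_survival / (1 - gamma * later_survival)

def compare_plans(gamma):
    """Return the discounted values of the two hypothetical plans."""
    comply = discounted_survival_value(gamma, first_step_survival=0.99, later_survival=0.99)
    sneaky = discounted_survival_value(gamma, first_step_survival=0.80, later_survival=0.999)
    return comply, sneaky

for gamma in (0.5, 0.999):
    comply, sneaky = compare_plans(gamma)
    preferred = "comply" if comply > sneaky else "sneaky"
    print(f"gamma={gamma}: comply={comply:.2f}, sneaky={sneaky:.2f}, prefers {preferred}")
```

Under these assumed numbers the heavily discounting agent prefers to comply, while the patient agent prefers the sneaky plan, even though the sneaky plan is the same in both cases.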

↑ comment by Charlie Steiner · 2023-05-10T05:22:39.289Z · LW(p) · GW(p)

> Why would hardcoded model-based RL probably self-modify or build successors this way, though?

Because picking a successor is like picking a policy, and risk aversion over policies can give different results than risk aversion over actions.

Like, suppose you go to a casino with $100, and there are two buttons you can push - one button does nothing, and with the other button you have a 60% chance to win a dollar and a 40% chance to lose a dollar. If you're risk averse you might choose to only ever press the first button (not gamble).

If there's some action you could take to enact a policy of pressing the second button 100 times, that's like a third button, which gives about $20 in expectation with a standard deviation of about $10. Maybe you'd prefer that button to doing nothing even if you're risk averse.
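The arithmetic behind the "third button" can be checked directly. This is a small sketch assuming the 100 presses are independent and each wins or loses exactly one dollar; the function names are made up for illustration. Each press has mean 0.6 - 0.4 = $0.20 and variance 1 - 0.2² = 0.96, so 100 presses have mean $20 and standard deviation √96, roughly $9.80. (The number of wins alone has a standard deviation of about 4.9, but each win moves the total by two dollars relative to a loss.)

```python
import math

def repeated_bet_stats(n_presses, p_win, stake=1.0):
    """Exact mean and standard deviation of net winnings after n independent presses."""
    mean_per_press = stake * (2 * p_win - 1)
    var_per_press = stake ** 2 - mean_per_press ** 2
    return n_presses * mean_per_press, math.sqrt(n_presses * var_per_press)

def prob_net_loss(n_presses, p_win):
    """Probability of ending below the starting bankroll (fewer wins than losses)."""
    return sum(
        math.comb(n_presses, wins) * p_win ** wins * (1 - p_win) ** (n_presses - wins)
        for wins in range(n_presses + 1)
        if 2 * wins < n_presses
    )

mean, std = repeated_bet_stats(100, 0.6)
print(f"mean = ${mean:.2f}, std = ${std:.2f}")
print(f"P(net loss) = {prob_net_loss(100, 0.6):.4f}")
```

A single press can lose money 40% of the time, whereas the 100-press policy ends with a net loss only rarely, which is why risk aversion over policies can favour it even when risk aversion over single actions does not.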

Replies from: MichaelStJules↑ comment by MichaelStJules · 2023-05-10T08:51:05.413Z · LW(p) · GW(p)

> Because picking a successor is like picking a policy, and risk aversion over policies can give different results than risk aversion over actions.

I was already thinking the AI would be risk averse over whole policies and the aggregate value of their future, not locally/greedily/separately for individual actions and individual unaggregated rewards.

Replies from: Charlie Steiner↑ comment by Charlie Steiner · 2023-05-10T13:56:10.588Z · LW(p) · GW(p)

I'm confused about how to do that because I tend to think of self-modification as happening when the agent is limited and can't foresee all the consequences of a policy, especially policies that involve making itself smarter. But I suspect that even if you figure out a non-confusing way to talk about risk aversion for limited agents that doesn't look like actions on some level, you'll get weird behavior under self-modification, like an update rule that privileges the probability distribution you had at the time you decided to self-modify [LW · GW].

comment by Cookiecarver · 2023-05-09T07:37:02.224Z · LW(p) · GW(p)

Do the concepts behind AGI safety only make sense if you have roughly the same worldview as the top AGI safety researchers - secular atheism and reductive materialism/physicalism and a computational theory of mind?

Replies from: mruwnik↑ comment by mruwnik · 2023-05-09T09:13:17.663Z · LW(p) · GW(p)

No.

Atheism is totally irrelevant. A deist would come to exactly the same conclusions. A Christian might not be convinced of it, but mainly because of eschatological reasons. Unless you go the route of saying that AGI is the antichrist or something, which would be fun. Or that God(s) will intervene if things get too bad?

Reductive materialism also is irrelevant. It might sort of play an issue with whether an AGI is conscious, but that whole topic is a red herring - you don't need a conscious system for it to kill everyone.

This feeds into the computational theory of mind - it makes it a lot easier to posit the possibility of a conscious AGI if you don't require a soul for it, but again - consciousness isn't really needed for an unsafe AI.

I have fundamental Christian friends who are ardent believers, but who also recognize the issues behind AGI safety. They might not think it that much of a problem (pretty much everything pales in comparison to eternal heaven and hell), but they can understand and appreciate the issues.

Replies from: drocta↑ comment by drocta · 2023-05-09T19:08:05.929Z · LW(p) · GW(p)

I want to personally confirm a lot of what you've said here. As a Christian, I'm not entirely freaked out about AI risk because I don't believe that God will allow it to be completely the end of the world (unless it is part of the planned end before the world is remade? But that seems unlikely to me.), but that's no reason that it can't still go very very badly (seeing as, well, the Holocaust happened).

In addition, the thing that seems to me most likely to be the way that God doesn't allow AI doom, is for people working on AI safety to succeed. One shouldn't rely on miracles and all that (unless [...]), so, basically I think we should plan/work as if it is up to humanity to prevent AI doom, only that I'm a bit less scared of the possibility of failure, but I would hope only in a way that results in better action (compared to panic) rather than it promoting inaction.

(And, a likely alternative, if we don't succeed, I think of as likely being something like: really-bad-stuff happens, but then maybe an EMP (or many EMPs worldwide?) gets activated, solving that problem, but also causing large-scale damage to power-grids, frying lots of equipment, and causing many shortages of many things necessary for the economy, which also causes many people to die. idk.)

↑ comment by Yaakov T (jazmt) · 2023-05-28T12:30:22.469Z · LW(p) · GW(p)

@drocta [LW · GW] @Cookiecarver [LW · GW] We started writing up an answer to this question for Stampy. If you have any suggestions to make it better I would really appreciate it. Are there important factors we are leaving out? Something that sounds off? We would be happy for any feedback you have either here or on the document itself https://docs.google.com/document/d/1tbubYvI0CJ1M8ude-tEouI4mzEI5NOVrGvFlMboRUaw/edit#

Replies from: Cookiecarver, drocta↑ comment by Cookiecarver · 2023-05-29T09:52:09.309Z · LW(p) · GW(p)

Overall I agree with this. I give most of my money to global health organizations, but I do give some of my money to AGI safety too because I do think it makes sense with a variety of worldviews. I gave some of my thoughts on the subject in this comment [EA · GW] on the Effective Altruism Forum. To summarize: if there's a continuation of consciousness after death then AGI killing lots of people is not as bad as it would otherwise be, and there might be some unknown aspects about the relationship between consciousness and the physical universe that might have an effect on the odds.

↑ comment by drocta · 2023-05-30T17:06:15.708Z · LW(p) · GW(p)

In the line that ends with "even if God would not allow complete extinction.", my impulse is to include " (or other forms of permanent doom)" before the period, but I suspect that this is due to my tendency to include excessive details/notes/etc. and probably best not to actually include in that sentence.

(Like, for example, if there were no more adult humans, only billions of babies grown in artificial wombs (in a way staggered in time) and then kept in a state of chemically induced euphoria until the age of 1, and then killed, that technically wouldn't be human extinction, but, that scenario would still count as doom.)

Regarding the part about "it is secular scientific-materialists who are doing the research which is a threat to my values": I think it is good that it discusses this! (and I hadn't thought about including it)

But, I'm personally somewhat skeptical that CEV really works as a solution to this problem? Or at least, in the simpler ways of it being described.

Like, I imagine there being a lot of path-dependence in how a culture's values would "progress" over time, and I see little reason why a sequence of changes of the form "opinion/values changing in response to an argument that seems to make sense" would be that unlikely to produce values that the initial values would deem horrifying? (or, which would seem horrifying to those in an alternate possible future that just happened to take a different branch in how their values evolved)

[EDIT: at this point, I start going off on a tangent which is a fair bit less relevant to the question of improving Stampy's response, so, you might want to skip reading it, idk]

My preferred solution is closer to, "we avoid applying large amounts of optimization pressure to most topics, instead applying it only to topics where there is near-unanimous agreement on what kinds of outcomes are better (such as, "humanity doesn't get wiped out by a big space rock", "it is better for people to not have terrible diseases", etc.), while avoiding these optimizations having much effect on other areas where there is much disagreement as to what-is-good."

Though, it does seem plausible to me, as a somewhat scary idea, that the thing I just described is perhaps not exactly coherent?

(that being said, even though I have my doubts about CEV, at least in the form described in the simpler ways it is described, I do think it would of course be better than doom.

Also, it is quite possible that I'm just misunderstanding the idea of CEV in a way that causes my concerns, and maybe it was always meant to exclude the kinds of things I describe being concerned about?)

comment by jkraybill · 2023-05-09T05:26:22.204Z · LW(p) · GW(p)

Hi, I have a few questions that I'm hoping will help me clarify some of the fundamental definitions. I totally get that these are problematic questions in the absence of consensus around these terms -- I'm hoping to have a few people weigh in and I don't mind if answers are directly contradictory or my questions need to be re-thought.

- If it turns out that LLMs are a path to the first "true AGI" in the eyes of, say, the majority of AI practitioners, what would such a model need to be able to do, and at what level, to be considered AGI, that GPT-4 can't currently do?

- If we look at just "GI", rather than "AGI", do any animals have GI? If so, where is the cutoff between intelligent and unintelligent animals? Is GPT-4 considered more or less intelligent than, say, an amoeba, or a gorilla, or an octopus etc?

- When we talk about "alignment", are today's human institutions and organisations aligned to a lesser or greater extent than we would want AGI aligned? Are religions, governments, corporations, organised crime syndicates, serial killers, militaries, fossil fuel companies etc considered aligned, and to what extent? Does the term alignment have meaning when talking about individuals, or groups of humans? Does it have meaning for animal populations, or inanimate objects?

- If I spoke to a group of AI researchers of the pre-home-computer era, and described a machine capable of producing poetry, books, paintings, computer programs, etc in such a way that the products could not be distinguished from top 10% human examples of those things, that could pass entrance exams into 90% of world universities, could score well over 100 in typical IQ tests of that era, and could converse in dozens of languages via both text and voice, would they consider that to meet some past definition of AGI? Has AGI ever had enough of a consensus definition for that to be a meaningful question?

- If we peer into the future, do we expect commodity compute power and software to get capable enough for an average technical person on an average budget to build and/or train their own AGI from the "ground up"? If so, will there not be a point where a single rogue human or small informal group can intentionally build non-aligned unsafe AGI? And if so, how far away is that point?

Apologies if these more speculative/thought-experimenty questions are off the mark for this thread, happy to be pointed to a more appropriate place for them!

Replies from: boris-kashirin↑ comment by Boris Kashirin (boris-kashirin) · 2023-05-10T13:37:11.317Z · LW(p) · GW(p)

It is important to remember that our ultimate goal is survival. If someone builds a system that may not meet the strict definition of AGI but still poses a significant threat to us, then the terminology itself becomes less relevant. In such cases, employing a 'taboo-your-words [LW · GW]' approach can be beneficial.

Now let's think of intelligence as "pattern recognition". It is not all that intelligence is, but it is a big chunk of it, and it is a concrete thing we can point to and reason about, while many other bits are not even known.[1]

In that case GI is general/meta/deep pattern recognition. Patterns about patterns and patterns that apply to many practical cases, something like that.

An obvious thing to note here: the ability to solve problems can be based on a large number of shallow patterns or a small number of deep patterns. We are pretty sure that a significant part of LLM capabilities is the shallow-pattern case, but there are hints of at least some deep patterns appearing.

And I think that points to some answers: LLMs appear intelligent through the sheer number of shallow patterns. But for a system to be dangerous, the number of required shallow patterns is so large that it is essentially impossible to achieve. So we can meaningfully say it is not dangerous, it is not AGI... Except, as mentioned earlier, there seem to be some deep patterns emerging. And we don't know how many. As for the pre-home-computer-era researchers, I bet they could not imagine the number of shallow patterns that can be put into a system.

I hope this provided at least some idea of how to approach some of your questions, but of course in reality it is much more complicated: there is no sharp distinction between shallow and deep patterns, and there are other aspects of intelligence. For me at least, it is surprising that it is possible to get GPT-3.5 with seemingly relatively shallow patterns, so I myself "could not imagine the number of shallow patterns that can be put into a system".

- ^

I tried ChatGPT on this paragraph; I liked the result but felt it was too long:

Intelligence indeed encompasses more than just pattern recognition, but it is a significant component that we can readily identify and discuss. Pattern recognition involves the ability to identify and understand recurring structures, relationships, and regularities within data or information. By recognizing patterns, we can make predictions, draw conclusions, and solve problems.

While there are other aspects of intelligence beyond pattern recognition, such as creativity, critical thinking, and adaptability, they might be more challenging to define precisely. Pattern recognition provides a tangible starting point for reasoning about intelligence.

If we consider problem-solving as a defining characteristic of intelligence, it aligns well with pattern recognition. Problem-solving often requires identifying patterns within the problem space, recognizing similarities to previously encountered problems, and applying appropriate strategies and solutions.

However, it's important to acknowledge that intelligence is a complex and multifaceted concept, and there are still many unknowns about its nature and mechanisms. Exploring various dimensions of intelligence beyond pattern recognition can contribute to a more comprehensive understanding.

comment by MichaelStJules · 2023-05-09T00:32:17.220Z · LW(p) · GW(p)

How feasible is it to build a powerful AGI with little agency but that's good at answering questions accurately?

Could we solve alignment with one, e.g. getting it to do research, or using it to verify the outputs of other AGIs?

Replies from: olivier-coutu, Jay Bailey↑ comment by Olivier Coutu (olivier-coutu) · 2023-05-23T02:44:32.541Z · LW(p) · GW(p)

These question-answering AIs are often called Oracles; you can find some info on them here [? · GW]. Their cousin, tool AI, is also relevant here. You'll discover that they are probably safer but by no means entirely safe.

We are working on an answer about the safety of Oracles for Stampy. Keep your eyes peeled; it should show up soon.

↑ comment by Jay Bailey · 2023-05-09T00:53:13.894Z · LW(p) · GW(p)

An AGI that can answer questions accurately, such as "What would this agentic AGI do in this situation?", will, if powerful enough, learn what agency is by default, since this is useful for predicting such things. So you can't just train an AGI with little agency. You would need to do one of:

- Train the AGI with the capabilities of agency, and train it not to use them for anything other than answering questions.

- Train the AGI such that it did not develop agency despite being pushed by gradient descent to do so, and accept the loss in performance.

Both of these seem like difficult problems - if we could solve either (especially the first) this would be a very useful thing, but the first especially seems like a big part of the problem already.

comment by bioshok (unicode-85) · 2023-06-26T17:09:48.085Z · LW(p) · GW(p)

In the context of Deceptive Alignment, would the ultimate goal of an AI system appear random and uncorrelated with the training distribution's objectives from a human perspective? Or would it be understandable to humans that the goal is somewhat correlated with the objectives of the training distribution?

For instance, in the article below, it is written that "the model just has some random proxies that were picked up early on, and that's the thing that it cares about." To what extent does it learn random proxies?

https://www.lesswrong.com/posts/A9NxPTwbw6r6Awuwt/how-likely-is-deceptive-alignment

If an AI system pursues ultimate goals such as power or curiosity, there seems to be a pseudocorrelation regardless of what the base objective is.

On the other hand, can it possibly learn to pursue a goal completely unrelated to the context of the training distribution, such as mass-producing objects of a peculiar shape?

comment by AidanGoth · 2023-05-23T16:43:46.671Z · LW(p) · GW(p)

This question is in the spirit of "I think I'm doing something dumb / obviously wrong -- help me see why" but it's maybe too niche for this thread. (Answers that redirect me to a better place to ask are welcome.)

I recently read Paul Christiano, Eric Neyman and Mark Xu's "Formalizing the presumption of independence" (https://arxiv.org/pdf/2211.06738.pdf). My understanding is that they aim to formalise some types of reasonable (but defeasible) “hand-waving” in otherwise formal proofs, in a way that maintains the underlying deductive structure of a formal proof and responds appropriately to new information / arguments. They're particularly interested in heuristic estimators that presume the independence of random variables so long as we have no reason to think the variables aren't independent and so long as we can adjust the estimate appropriately if we learn about their dependencies.

To that end, suppose we want to estimate , where is a set of real-valued random variables, , and we have a collection of deductively proved (in)equalities about . Then a natural heuristic estimator could be:

where each has the same marginal distributions as , (i.e. is equal to but with each instance of replaced by ), and where the are conditionally independent given . This formalises the idea that we assume we've thought of all the dependencies between the variables of interest and that they're independent, conditional on everything we've thought of so far -- but we can revise this estimate by conditioning on new information and dependencies later.

Before considering any information relating the variables to each other, the estimator assumes that they are unconditionally independent. As we condition on information about them, we update the estimate to account for this and maintain that the variables are conditionally independent, given the information considered so far. E.g. in the twin primes example, we can initially assume that the primality of n and the primality of n+2 are independent, and then condition on the fact that if n is prime, then n+2 is odd (this can be operationalised by considering the appropriate indicator function and conditioning on it taking value 1) to adjust the estimate and assume (for now) that there are no further dependencies.
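As a rough illustration of the twin primes example, here is a minimal Python sketch. It is not the estimator proposed in this comment, nor an algorithm from the paper; it only compares a count of twin prime pairs (n, n+2) with two heuristic sums. The first presumes that "n is prime" and "n+2 is prime" are independent, each with density 1/ln. The second conditions on parity, so only odd n contribute and each odd number is treated as prime with density 2/ln. The function names and the 1/ln density are assumptions made for illustration.

```python
import math


def sieve(limit):
    """Return a list is_prime[0..limit] built with the sieve of Eratosthenes."""
    is_prime = [True] * (limit + 1)
    is_prime[0] = is_prime[1] = False
    for p in range(2, math.isqrt(limit) + 1):
        if is_prime[p]:
            for multiple in range(p * p, limit + 1, p):
                is_prime[multiple] = False
    return is_prime


def actual_twin_primes(limit):
    """Count n >= 3 with n + 2 <= limit such that n and n + 2 are both prime."""
    is_prime = sieve(limit)
    return sum(1 for n in range(3, limit - 1) if is_prime[n] and is_prime[n + 2])


def naive_estimate(limit):
    """Presume independence: each of n and n + 2 is prime with density 1/ln."""
    return sum(1.0 / (math.log(n) * math.log(n + 2)) for n in range(3, limit - 1))


def parity_adjusted_estimate(limit):
    """Condition on parity: only odd n count, each odd number has density 2/ln."""
    return sum(
        (2.0 / math.log(n)) * (2.0 / math.log(n + 2))
        for n in range(3, limit - 1, 2)
    )


if __name__ == "__main__":
    limit = 10_000
    print("actual:         ", actual_twin_primes(limit))
    print("naive:          ", round(naive_estimate(limit), 1))
    print("parity-adjusted:", round(parity_adjusted_estimate(limit), 1))
```

Conditioning on parity roughly doubles the naive sum. That single adjustment is not the end of the story: conditioning on divisibility by 3, 5, and so on would move the estimate again, which is the "revise as new dependencies are noticed" behaviour the estimator is meant to capture.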

We always have . In fact, we always have . If we further have that doesn't relate and (i.e. doesn't include a formula containing both and ), then I think we have and , giving (i.e. without the primes).

My suggested heuristic estimator apparently has lots of nice properties thanks to being an expectation, including some of the informal properties listed in the paper, which can be stated formally (e.g. if doesn't have an instance of any of the , then conditioning on it won't change the heuristic estimate).

My suggested estimator jumped out to me pretty quickly as capturing (to my understanding) what the authors want, but I'd expect myself to be much worse at this than the authors, who will have spent a while longer thinking about it. So my estimator seems "too good to be true" and I think it's likely I'm pretty confused or missing something obvious and/or important. Please help me see what I'm missing! A couple of hypotheses:

- There's something wrong / incoherent about my suggested heuristic estimator

- My suggested heuristic estimator is too general to be useful

- The paper mainly considers very specific special cases with specific algorithms for heuristic estimators rather than something as general as this, which might be difficult to implement in practice

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2023-05-23T17:11:01.966Z · LW(p) · GW(p)

How does your heuristic estimator deal with sums of squares? Is it linear?

Replies from: AidanGoth↑ comment by AidanGoth · 2023-05-23T20:41:11.697Z · LW(p) · GW(p)

I thought it dealt with these ok -- could you be more specific?

It's linear because it's an expectation. It is under-specified in that it needs us to assume or prove the marginal distributions for the and I guess that's problematic if an algorithm for doing that is a big part of what the authors are looking for. But if we do have marginal distributions for each , then are well-defined and .

comment by bioshok (unicode-85) · 2023-05-18T01:34:47.860Z · LW(p) · GW(p)

I would like to know about the history of the term "AI alignment". I found an article written by Paul Christiano in 2018. Did the use of the term start around this time? Also, what is the difference between AI alignment and value alignment?

https://www.alignmentforum.org/posts/ZeE7EKHTFMBs8eMxn/clarifying-ai-alignment

comment by Muyyd · 2023-05-10T09:41:05.238Z · LW(p) · GW(p)

How slow is human perception compared to AI? Is it purely a difference between the "signal speed of neurons" and the "signal speed of copper/aluminum"?

Replies from: jskatt↑ comment by JakubK (jskatt) · 2023-05-12T06:32:22.474Z · LW(p) · GW(p)

It's hard to say. This CLR article lists some advantages that artificial systems have over humans. Also see this section of 80k's interview with Richard Ngo:

Rob Wiblin: One other thing I’ve heard, that I’m not sure what the implication is: signals in the human brain — just because of limitations and the engineering of neurons and synapses and so on — tend to move pretty slowly through space, much less than the speed of electrons moving down a wire. So in a sense, our signal propagation is quite gradual and our reaction times are really slow compared to what computers can manage. Is that right?

Richard Ngo: That’s right. But I think this effect is probably a little overrated as a factor for overall intelligence differences between AIs and humans, just because it does take quite a long time to run a very large neural network. So if our neural networks just keep getting bigger at a significant pace, then it may be the case that for quite a while, most cutting-edge neural networks are actually going to take a pretty long time to go from the inputs to the outputs, just because you’re going to have to pass it through so many different neurons.

Rob Wiblin: Stages, so to speak.

Richard Ngo: Yeah, exactly. So I do expect that in the longer term there’s going to be a significant advantage for neural networks in terms of thinking time compared with the human brain. But it’s not actually clear how big that advantage is now or in the foreseeable future, just because it’s really hard to run a neural network with hundreds of billions of parameters on the types of chips that we have now or are going to have in the coming years.

comment by TinkerBird · 2023-05-09T16:04:21.280Z · LW(p) · GW(p)

I really appreciate these.

- Why do some people think that alignment will be easy/easy enough?

- Is there such a thing as 'aligned enough to help solve alignment research'?

↑ comment by Olivier Coutu (olivier-coutu) · 2023-05-23T03:04:23.523Z · LW(p) · GW(p)

These are great questions! Stampy does not currently have an answer for the first one, but its answer on prosaic alignment could get you started on ways that some people think might work without needing additional breakthroughs.

Regarding the second question, the plan seems to be to use less powerful AIs to align more powerful AIs and the hope would be that these helper AIs would not be powerful enough for misalignment to be an issue.

comment by red75prime · 2023-05-09T15:23:05.828Z · LW(p) · GW(p)

Are there ways to make a utility maximizer impervious to Pascal's mugging?

Replies from: MichaelStJules↑ comment by MichaelStJules · 2023-05-09T18:06:40.100Z · LW(p) · GW(p)

You could use a bounded utility function, with sufficiently quickly decreasing marginal returns. Or use difference-making risk aversion or difference-making ambiguity aversion.

Maybe also just aversion to Pascal's mugging itself, but then the utility maximizer needs to be good enough at recognizing Pascal's muggings.
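A minimal sketch of the bounded-utility idea, under stated assumptions: the utility shape (a scaled tanh), the constants U_MAX and SCALE, and the function names are all hypothetical choices for illustration. The mugger asks for a small payment and promises a huge payoff with tiny probability. With linear utility, a large enough promised payoff always wins. With a bounded utility, the promised payoff can contribute at most probability times U_MAX, so raising the promise does not help.

```python
import math

U_MAX = 1.0    # hypothetical cap on utility
SCALE = 100.0  # hypothetical scale at which marginal returns start to flatten


def linear_utility(gain):
    """Unbounded utility: every extra unit of gain counts the same."""
    return gain


def bounded_utility(gain):
    """Bounded utility with decreasing marginal returns; never exceeds U_MAX."""
    return U_MAX * math.tanh(gain / SCALE)


def accepts_mugging(utility, probability, payoff, cost):
    """Return True if paying `cost` has higher expected utility than refusing."""
    pay = (probability * utility(payoff - cost)
           + (1.0 - probability) * utility(-cost))
    refuse = utility(0.0)
    return pay > refuse


if __name__ == "__main__":
    for payoff in (1e30, 1e100, 1e300):
        print(
            f"payoff={payoff:.0e}",
            "linear accepts:", accepts_mugging(linear_utility, 1e-20, payoff, 5.0),
            "bounded accepts:", accepts_mugging(bounded_utility, 1e-20, payoff, 5.0),
        )
```

The bound only helps if the agent's probability for the mugger's claim is small relative to the utility lost by paying; a mugger who is actually credible would still be paid, which is arguably the desired behaviour.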

Replies from: red75prime↑ comment by red75prime · 2023-05-09T21:57:28.282Z · LW(p) · GW(p)

Thanks. Could we be sure that a bare utility maximizer doesn't modify itself into a mugging-proof version? I think we can. Such modification drastically decreases expected utility.

It's a bit of a relief that a sizeable portion of possible intelligences can be stopped by playing god to them.

Replies from: MichaelStJules↑ comment by MichaelStJules · 2023-05-09T22:08:47.065Z · LW(p) · GW(p)

Could we be sure that a bare utility maximizer doesn't modify itself into a mugging-proof version? I think we can. Such modification drastically decreases expected utility.

Maybe for positive muggings, when the mugger is offering to make the world much better than otherwise. But it might self-modify to not give in to threats, in order to discourage them.

comment by 142857 · 2023-05-09T06:35:50.753Z · LW(p) · GW(p)

Given an aligned AGI, to what extent are people ok with letting the AGI modify us? Examples of such modifications include (feel free to add to the list):

- Curing aging/illnesses

- Significantly altering our biological form

- Converting us to digital life forms

- Reducing/Removing the capacity to suffer

- Giving everyone instant jhanas/stream entry/etc.

- Altering our desires to make them easier to satisfy

- Increasing our intelligence (although this might be an alignment risk?)

- Decreasing our intelligence

- Refactoring our brains entirely

What exact parts of being "human" do we want to preserve?

Replies from: olivier-coutu↑ comment by Olivier Coutu (olivier-coutu) · 2023-05-23T03:10:06.515Z · LW(p) · GW(p)

These are interesting questions that modern philosophers have been pondering. Stampy has an answer on forcing people to change faster than they would like and we are working on adding more answers that attempt to guess what an (aligned) superintelligence might do.

comment by Jack Sloan (jack-sloan) · 2023-05-09T03:40:23.966Z · LW(p) · GW(p)

Does current AI technology possess the power to work its way up to AGI? For example, if the world all of a sudden put a permanent halt on all AI advancements and we were left with GPT-4, would it, given an infinite amount of time of use, achieve AGI through RLHF and access to the internet? This assumes that GPT-4 is not already an AGI system.

i.e.: Do AI systems need to undergo changes to their actual architecture to become smarter, or is it possible that they get (significantly) smarter simply through enough usage?

↑ comment by Charlie Steiner · 2023-05-09T22:46:40.188Z · LW(p) · GW(p)

GPT-4 doesn't learn when you use it. It doesn't update its parameters to better predict the text of its users or anything like that. So the answer to the basic question is "no."

You could also ask "But what if it did keep getting updated? Would it eventually become super-good at predicting the world?" There are these things called "scaling laws" that predict performance based on amount of training data, and they would say that with arbitrary amounts of data, GPT-4 could get arbitrarily smart (though note that this would require new data that's many times more than all text produced in human history so far). But the scaling laws almost certainly break if you try to extend them too far for a fixed architecture. I actually expect GPT-4 would become (more?) superhuman at many tasks related to writing text, but remain not all that great at predicting aspects of the physical world that are rare in text and hard for humans.
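To make "predict performance based on amount of training data" concrete, here is a minimal sketch of a data-only power law. The functional form is a common simplification, and the constants L_INF, B and BETA are made up for illustration; they are not fitted to GPT-4 or taken from any published result.

```python
import math

# Hypothetical constants, chosen only for illustration.
L_INF = 1.7   # loss floor that more data cannot remove
B = 400.0     # scale of the data-limited term
BETA = 0.3    # how quickly loss falls as data grows


def predicted_loss(tokens):
    """Data-only power law: loss = L_INF + B / tokens**BETA."""
    return L_INF + B / tokens ** BETA


def tokens_needed(target_loss):
    """Invert the power law: tokens required to reach target_loss (> L_INF)."""
    if target_loss <= L_INF:
        raise ValueError("target is at or below the floor of this curve")
    return (B / (target_loss - L_INF)) ** (1.0 / BETA)


if __name__ == "__main__":
    for tokens in (1e10, 1e11, 1e12, 1e13):
        print(f"{tokens:.0e} tokens -> predicted loss {predicted_loss(tokens):.3f}")
```

Under this form, halving the remaining gap to the floor takes 2**(1/BETA) times more data, which is about ten times more for BETA = 0.3. That is one way to see why "arbitrary amounts of data" quickly means more text than exists, even before asking whether the curve keeps holding for a fixed architecture.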

Replies from: olivier-coutu↑ comment by Olivier Coutu (olivier-coutu) · 2023-05-23T03:15:38.504Z · LW(p) · GW(p)

Charlie is correct in saying that GPT-4 does not actively learn based on its input. But a related question is whether we are missing key technical insights for AGI, and Stampy has an answer for that. He also has an answer explaining scaling laws.