AI Impacts Quarterly Newsletter, Apr-Jun 2023

Post by Harlan, Richard Korzekwa (Grothor) · 2023-07-18 · This is a link post for https://blog.aiimpacts.org/p/ai-impacts-quarterly-newsletter-apr

Contents

- News
  - Katja Grace’s TIME article
  - References to AI Impacts Research
- Research and writing highlights
  - Views on AI risks
  - The supply chain of AI development
  - Ideas for internal and public policy about AI
  - AI timeline predictions
  - Slowing AI
  - Miscellany
- Ongoing projects
- Funding
- Reader survey

Every quarter, we have a newsletter with updates on what’s happening at AI Impacts, with an emphasis on what we’ve been working on. You can see past newsletters here and subscribe to receive more newsletters and other blogposts here.

During the past quarter, Katja wrote an article in TIME, we created and updated several wiki pages and blog posts, and we began several new research projects that are still in progress.

We’re running a reader survey, which takes 2-5 minutes to complete. We appreciate your feedback!

If you’d like to donate to AI Impacts, you can do so here. Thank you!

News

Katja Grace’s TIME article

In May, TIME published Katja’s article “AI Is Not an Arms Race.” People sometimes say that the situation with AI is an arms race that rewards speeding forward to develop AI before anyone else. Katja argues that this is likely not the situation, and that if it is, we should try to get out of it.

References to AI Impacts Research

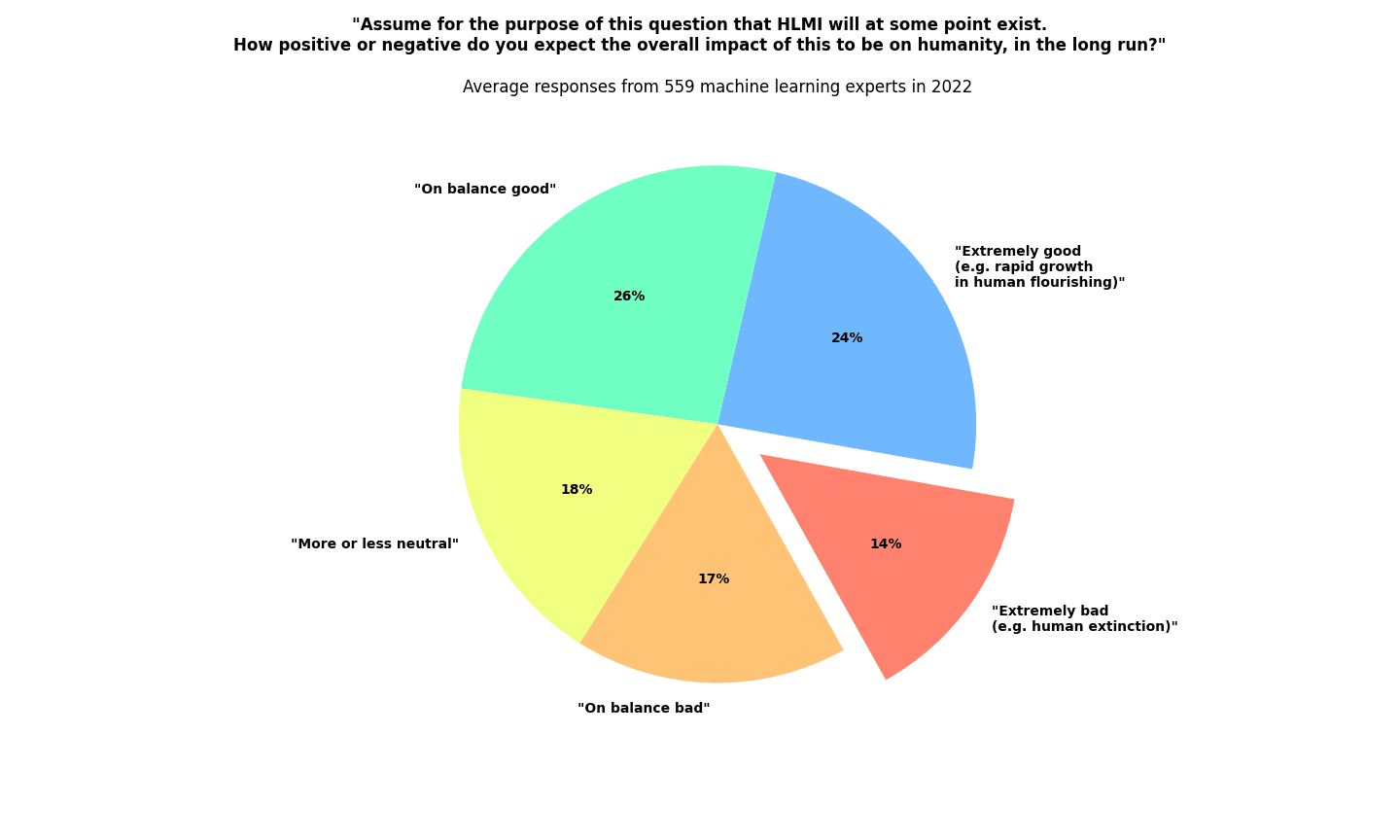

The 2022 Expert Survey on Progress in AI was referenced in an article in The Economist, a New York Times op-ed by Yuval Noah Harari, a Politico op-ed that argues for a “Manhattan Project” for AI Safety, and a report from Epoch AI’s Matthew Barnett and Tamay Besiroglu about a method for forecasting the performance of AI models.

The Japanese news agency Kyodo News published an article about AI risks that referenced Katja’s talk at EA Global from earlier this year.

We also maintain an ongoing list of citations of AI Impacts work that we know of.

Research and writing highlights

Views on AI risks

- Rick compiled a list of quotes from prominent AI researchers and leaders about their views on AI risks.

- Jeffrey compiled a list of quantitative estimates about the likelihood of AI risks from people working in AI safety.

- Zach compiled a list of surveys that ask AI experts or AI safety/governance experts for their views on AI risks.

The supply chain of AI development

- Harlan wrote a page outlining some factors that affect the price of AI hardware.

- Jeffrey wrote a blogpost arguing that slowing AI is easiest if AI companies are horizontally integrated, but not vertically integrated.

Ideas for internal and public policy about AI

- Zach compiled reading lists of research and discussion on two topics: safety-related ideas that AI labs could implement, and ideas for public policy related to AI.

- Zach also compiled a list of statements that AI labs have made about public policy.

AI timeline predictions

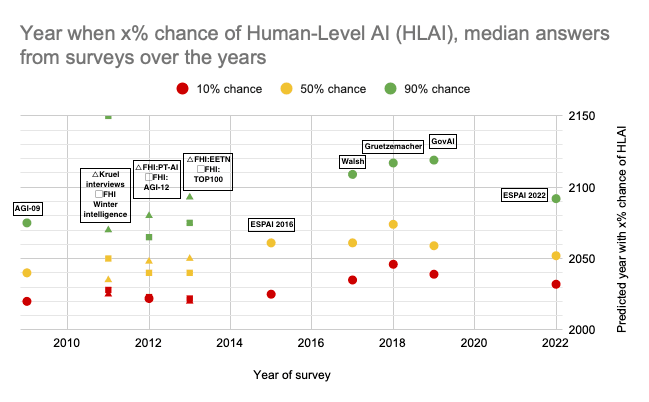

- Harlan updated the main page for Predictions of Human-Level AI Timelines and made a page about AI timeline prediction markets.

- Zach and Harlan updated a list of AI timeline surveys with data from recent surveys.

Slowing AI

- Zach compiled a reading list and an outline of some strategic considerations related to slowing AI progress.

- Jeffrey wrote a blogpost arguing that people should give greater consideration to visions of the future that don’t involve AGI.

Miscellany

- Jeffrey wrote a blogpost arguing that AI systems are currently too reliant on human-supported infrastructure to easily cause human extinction without putting themselves at risk.

- Harlan, Jeffrey, and Rick submitted responses to the National Telecommunications and Information Administration’s request for comment on AI accountability policy and to the Office of Science and Technology Policy’s request for information.

Ongoing projects

- Katja and Zach are preparing to publish a report about the 2022 Expert Survey on Progress in AI, with further analysis of the results and details about the methodology.

- Jeffrey is working on a case study of Institutional Review Boards in medical research.

- Harlan is working on a case study of voluntary environmental standards.

- Zach is working on a project that explores ways of evaluating AI labs for the safety of their practices in developing and deploying AI.

Funding

We are still seeking funding for 2023 and 2024. If you want to talk to us about why we should be funded or hear more details about our plans, please write to Elizabeth, Rick, or Katja at [firstname]@aiimpacts.org.

If you'd like to donate to AI Impacts, you can do so here. (And we thank you!)

Reader survey

We are running a reader survey in the hopes of getting useful feedback about our work. If you’re reading this and would like to spend 2-5 minutes filling out the reader survey, you can find it here. Thank you!
