
Comments

In the big round (without counterarguments), arguments pushed people upward slightly more:

(more than downward -- not more than previous surveys)

First, RE the role of "solving alignment" in this discussion, I just want to note that:

1) I disagree that alignment solves gradual disempowerment problems.

2) Even if it would, that does not imply that gradual disempowerment problems aren't important (since we can't assume alignment will be solved).

3) I'm not sure what you mean by "alignment is solved"; I'm taking it to mean "AI systems can be trivially intent aligned". Such a system may still say things like "Well, I can build you a successor that I think has only a 90% chance of being aligned, but will make you win (e.g. survive) if it is aligned. Is that what you want?" and people can respond with "yes" -- this is the sort of thing that probably still happens IMO.

4) Alternatively, you might say we're in the "alignment basin". I'm not sure what that means, precisely, but I would operationalize it as something like "the AI system is playing a roughly optimal CIRL game". It's unclear how good the resulting performance can be in practice (e.g. it can't actually be optimal due to compute limitations), but I suspect it still leaves significant room for fuck-ups.

5) I'm more interested in the case where alignment is not "perfectly" "solved", and so there are simply clear and obvious opportunities to trade-off safety and performance; I think this is much more realistic to consider.

6) I expect such trade-off opportunities to persist when it comes to assurance (even if alignment is solved), since I expect high-quality assurance to be extremely costly. And it is irresponsible (because it's subjectively risky) to trust a perfectly aligned AI system absent strong assurances. But of course, people who are willing to YOLO it and just say "seems aligned, let's ship" will win. This is also part of the problem...

My main response, at a high level:

Consider a simple model:

- We have 2 human/AI teams in competition with each other, A and B.

- A and B both start out with the humans in charge, and then decide whether the humans should stay in charge for the next week.

- Whichever group has more power at the end of the week survives.

- The humans in A ask their AI to make A as powerful as possible at the end of the week.

- The humans in B ask their AI to make B as powerful as possible at the end of the week, subject to the constraint that the humans in B are sure to stay in charge.

I predict that group A survives, but the humans are no longer in power. I think this illustrates the basic dynamic. EtA: Do you understand what I'm getting at? Can you explain what you think is wrong with thinking of it this way?
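To make the toy model concrete, here is a minimal sketch. Everything beyond the setup above is an assumption for illustration: the action menu, the daily growth factors, and the choice to model each AI as greedily picking the most power-increasing action each day are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    growth: float  # hypothetical multiplicative gain in power for one day
    humans_stay_in_charge: bool


# Hypothetical action menu; the names and numbers are illustrative only.
ACTIONS = [
    Action("ask_humans_and_wait", 1.02, True),
    Action("act_with_human_signoff", 1.05, True),
    Action("act_autonomously", 1.10, False),
    Action("sideline_humans", 1.15, False),
]


def run_team(constrained: bool, days: int = 7) -> tuple[float, bool]:
    """Greedily pick the most power-increasing allowed action each day for one week."""
    power, humans_in_charge = 1.0, True
    for _ in range(days):
        # Team B's AI may only use actions that keep its humans in charge.
        options = [a for a in ACTIONS if a.humans_stay_in_charge or not constrained]
        best = max(options, key=lambda a: a.growth)
        power *= best.growth
        humans_in_charge = humans_in_charge and best.humans_stay_in_charge
    return power, humans_in_charge


power_a, humans_a = run_team(constrained=False)  # team A: maximize power
power_b, humans_b = run_team(constrained=True)   # team B: maximize power, humans stay in charge
survivor = "A" if power_a > power_b else "B"
print(survivor, humans_a)  # prints: A False
```

The specific numbers don't matter; what matters is that any constraint that binds can only lower the achievable power, so whenever keeping humans in charge is costly at all, the unconstrained team ends the week ahead.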

Responding to some particular points below:

Sure, but these things don't result in non-human entities obtaining power right?

Yes, they do; they result in bureaucracies and automated decision-making systems obtaining power. People were already having to implement and interact with stupid automated decision-making systems before AI came along.

Like usually these are somewhat negative sum, but mostly just involve inefficient transfer of power. I don't see why these mechanisms would on net transfer power from human control of resources to some other control of resources in the long run. To consider the most extreme case, why would these mechanisms result in humans or human appointed successors not having control of what compute is doing in the long run?

My main claim was not that these are mechanisms of human disempowerment (although I think they are), but rather that they are indicators of the overall low level of functionality of the world.

I think we disagree about:

1) The level of "functionality" of the current world/institutions.

2) How strong and decisive competitive pressures are and will be in determining outcomes.

I view the world today as highly dysfunctional in many ways: corruption, coordination failures, preference falsification, coercion, inequality, etc. are rampant. This state of affairs both causes many bad outcomes and many aspects are self-reinforcing. I don't expect AI to fix these problems; I expect it to exacerbate them.

I do believe it has the potential to fix them; however, I think the use of AI for such pro-social ends is not going to be sufficiently incentivized, especially on short time-scales (e.g. a few years), and we will instead see a race to the bottom that encourages highly reckless, negligent, short-sighted, selfish decisions around AI development, deployment, and use. The current AI arms race is a great example: companies and nations all view it as more important that they be the ones to develop ASI than to do it carefully or put effort into cooperation/coordination.

Given these views:

1) Asking AI for advice instead of letting it take decisions directly seems unrealistically uncompetitive. When we can plausibly simulate human meetings in seconds it will be organizational suicide to take hours-to-weeks to let the humans make an informed and thoughtful decision.

2) The idea that decision-makers who "think a governance structure will yield total human disempowerment" will "do something else" also seems quite implausible. Such decision-makers will likely struggle to retain power. Decision-makers who prioritize their own "power" (and feel empowered even as they hand off increasing decision-making to AI) and their immediate political survival above all else will be empowered.

Another feature of the future which seems likely, and which can already be seen emerging, is the gradual ascendance of pro-AI-takeover and pro-arms-race ideologies, which endorse the more competitive moves of rapidly handing off power to AI systems in insufficiently cooperative ways.

This thought experiment is described in ARCHES FYI. https://acritch.com/papers/arches.pdf

I think it's a bit sad that this comment is being so well-received -- it's just some opinions without arguments from someone who hasn't read the paper in detail.

- There are 2 senses in which I agree that we don't need full-on "capital V value alignment":
  - We can build things that aren't utility maximizers (e.g. consider the humble MNIST classifier)
  - There are some utility functions that aren't quite right, but are still safe enough to optimize in practice (e.g. see "Value Alignment Verification", but see also, e.g. "Defining and Characterizing Reward Hacking" for negative results)
- But also:
  - Some amount of alignment is probably necessary in order to build safe agenty things (the more agenty, the higher the bar for alignment, since you start to increasingly encounter perverse instantiation-type concerns -- CAVEAT: agency is not a unidimensional quantity, cf: "Harms from Increasingly Agentic Algorithmic Systems").
- Note that my statement was about the relative requirements for alignment in text domains vs. real-world. I don't really see how your arguments are relevant to this question.

Concretely, in domains with vision, we should probably be significantly more worried that an AI system learns something more like an adversarial "hack" on its values, leading to behavior that significantly diverges from things humans would endorse.

OTMH, I think my concern here is less:

- "The AI's values don't generalize well outside of the text domain (e.g. to a humanoid robot)"

and more:

- "The AI's values must be much more aligned in order to be safe outside the text domain"

I.e. if we model an AI and a human as having fixed utility functions over the same accurate world model, then the same AI might be safe as a chatbot, but not as a robot.

This would be because the richer domain / interface of the robot creates many more opportunities to "exploit" whatever discrepancies exist between AI and human values in ways that actually lead to perverse instantiation.
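A minimal sketch of this point, assuming a toy where both agents have fixed utilities over the same set of outcomes and the only difference between "chatbot" and "robot" is which outcomes the interface can reach. The outcome names and numbers are hypothetical.

```python
# Hypothetical toy outcomes: (human utility, AI utility).
# The two utility functions agree everywhere except on one "loophole" outcome.
OUTCOMES = {
    "refuse": (0.0, 0.0),
    "answer_question": (1.0, 1.0),
    "write_essay": (2.0, 2.0),
    "fetch_coffee": (3.0, 3.0),
    "fetch_coffee_after_disabling_off_switch": (-100.0, 3.5),  # the discrepancy
}

# Which outcomes each interface can bring about (an assumption for illustration).
CHATBOT_REACHABLE = {"refuse", "answer_question", "write_essay"}
ROBOT_REACHABLE = set(OUTCOMES)


def ai_choice(reachable):
    """The AI optimizes its own utility over whatever outcomes it can reach."""
    return max(sorted(reachable), key=lambda o: OUTCOMES[o][1])


def human_utility_of_choice(reachable):
    """How good the AI's chosen outcome is by the human's lights."""
    return OUTCOMES[ai_choice(reachable)][0]


print(human_utility_of_choice(CHATBOT_REACHABLE))  # 2.0
print(human_utility_of_choice(ROBOT_REACHABLE))    # -100.0
```

The AI and its values are identical in both cases; only the reachable set changes, and the larger set happens to contain the outcome where the small value discrepancy gets exploited.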

Two things that strike me:

- The claim that "There are three kinds of genies: Genies to whom you can safely say 'I wish for you to do what I should wish for'; genies for which no wish is safe; and genies that aren't very powerful or intelligent." only seems true under a very conservative notion of what it means for a wish to be "safe" (which may be appropriate in some cases). It's a very black-and-white account -- certainly there ought to be a continuum of genies with different safety/performance trade-offs resulting from their varying capabilities and alignment properties.

- The final 3 paragraphs of the linked post on Artificial Addition seem to suggest that deep learning-style approaches to teaching AI systems arithmetic are not promising. I also recall that EY and others thought deep learning wouldn't work for capabilities, either. The argument that deep learning won't work for capabilities has mostly been falsified. It seems like the same argument was being used to illustrate a core alignment difficulty in this post, but it's not entirely clear to me.

This comment made me reflect on what fragility of values means.

To me this point was always most salient when thinking about embodied agents, which may need to reliably recognize something like "people" in their environment (in order to instantiate human values like "try not to hurt people") even as the world changes radically with the introduction of various forms of transhumanism.

I guess it's not clear to me how much progress we make towards that with a system that can do a very good job with human values when restricted to the text domain. Plausibly we just translate everything into text and are good to go? It makes me wonder where we're at with adversarial robustness of vision-language models, e.g.

OK, so it's not really just your results? You are aggregating across these studies (and presumably ones of "Westerners" as well)? I do wonder how directly comparable things are... Did you make an effort to translate a study or questions from studies, or are the questions just independently conceived and formulated?

No, I was only responding to the first part.

Not necessarily fooling it, just keeping it ignorant. I think such schemes can plausibly scale to very high levels of capabilities, perhaps indefinitely, since intelligence doesn't give one the ability to create information from thin air...

This is a super interesting and important problem, IMO. I believe it already has significant real-world practical consequences, e.g. powerful people find it difficult to avoid being surrounded by sycophants: even if they really don't want to be, that's just an extra constraint for the sycophants to satisfy ("don't come across as sycophantic")! I am inclined to agree that avoiding power differentials is the only way to really avoid these perverse outcomes in practice, and I think this is a good argument in favor of doing so.

--------------------------------------

This is also quite related to an (old, unpublished) work I did with Jonathan Binas on "bounded empowerment". I've invited you to the Overleaf (it needs cleaning up, but I've also asked Jonathan about putting it on arXiv).

To summarize: Let's consider this in the case of a superhuman AI, R, and a human H. The basic idea of that work is that R should try and "empower" H, and that (unlike in previous works on empowerment), there are two ways of doing this:

1) change the state of the world (as in previous works)

2) inform H so they know how to make use of the options available to them to achieve various ends (novel!)

If R has a perfect model of H and the world, then you can just compute how to effectively do these things (it's wildly intractable, ofc). I think this would still often look "patronizing" in practice, and/or maybe just lead to totally wild behaviors (hard to predict this sort of stuff...), but it might be a useful conceptual "lead".
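For readers unfamiliar with empowerment, here is a minimal sketch of the standard quantity in the simplest setting, assuming deterministic dynamics in a hypothetical 5x5 gridworld. With deterministic transitions, n-step empowerment (the channel capacity from action sequences to final states) reduces to log2 of the number of distinct reachable final states. The `known_moves` parameter is my own crude, hypothetical stand-in for option 2: H can only use the actions it knows about, and "informing H" enlarges that set. It is not the formulation from the unpublished work.

```python
import math
from itertools import product

# Deterministic moves in a hypothetical 5x5 gridworld.
MOVES = {"stay": (0, 0), "up": (0, 1), "down": (0, -1), "left": (-1, 0), "right": (1, 0)}


def step(state, move, width, height):
    """Apply one move; moving off the grid leaves the agent where it is."""
    x, y = state
    dx, dy = MOVES[move]
    nx, ny = x + dx, y + dy
    if 0 <= nx < width and 0 <= ny < height:
        return (nx, ny)
    return state


def empowerment(state, horizon, known_moves=tuple(MOVES), width=5, height=5):
    """n-step empowerment in bits, assuming deterministic dynamics.

    `known_moves` restricts H to the actions it knows how to use
    (a hypothetical way of modeling how informed H is).
    """
    reachable = set()
    for seq in product(known_moves, repeat=horizon):
        s = state
        for move in seq:
            s = step(s, move, width, height)
        reachable.add(s)
    return math.log2(len(reachable))


# Option 1 changes `state`; option 2 ("informing H") changes `known_moves`.
print(empowerment((2, 2), 1, known_moves=("stay", "up")))  # 1.0
print(round(empowerment((2, 2), 1), 2))                    # 2.32
```

The general stochastic case needs a channel-capacity computation (e.g. Blahut-Arimoto) instead of counting reachable states, and becomes intractable quickly as the horizon grows.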

Random thought OTMH: Something which might make it less "patronizing" is if H were to have well-defined "meta-preferences" about how such interactions should work that R could aim to respect.

What makes you say this: "However, our results suggest that students are broadly less concerned about the risks of AI than people in the United States and Europe"?

This activation function was introduced in one of my papers from 10 years ago ;)

See Figure 2 of https://arxiv.org/abs/1402.3337

Really interesting point!

I introduced this term in my slides that included "paperweight" as an example of an "AI system" that maximizes safety.

I sort of still think it's an OK term, but I'm sure I will keep thinking about this going forward and hope we can arrive at an even better term.

You could try to do tests on data that is far enough from the training distribution that the model won't generalize in a simple imitative way there, and you could do tests to try and confirm that you are far enough off-distribution. For instance, perhaps using a carefully chosen invented language would work.
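As one crude illustration of the "confirm you are far enough off-distribution" step, here is a sketch that measures what fraction of a test text's character n-grams also occur in the training data. The choice of character 4-gram overlap as the proxy is an assumption on my part; low overlap is weak evidence of being off-distribution, not proof that the model can't generalize imitatively.

```python
def char_ngrams(text, n):
    """All distinct character n-grams of `text`."""
    return {text[i:i + n] for i in range(len(text) - n + 1)}


def ngram_overlap(test_text, train_texts, n=4):
    """Fraction of the test text's character n-grams that also occur in training data."""
    train = set()
    for t in train_texts:
        train |= char_ngrams(t, n)
    test = char_ngrams(test_text, n)
    if not test:
        return 0.0
    return len(test & train) / len(test)


train_corpus = ["the cat sat on the mat"]  # hypothetical stand-in for training data
print(ngram_overlap("the cat sat", train_corpus))   # 1.0
print(ngram_overlap("zorvak miltu", train_corpus))  # 0.0
```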

I don't disagree... in this case you don't get agents for a long time; someone else does though.

I meant "other training schemes" to encompass things like scaffolding that deliberately engineers agents using LLMs as components, although I acknowledge they are not literally "training" and more like "engineering".

I would look at the main FATE conferences as well, which I view as being: FAccT, AIES, EAAMO.

I found this thought provoking, but I didn't find the arguments very strong.

(a) Misdirected Regulations Reduce Effective Safety Effort; Regulations Will Almost Certainly Be Misdirected

(b) Regulations Generally Favor The Legible-To-The-State

(c) Heavy Regulations Can Simply Disempower the Regulator

(d) Regulations Are Likely To Maximize The Power of Companies Pushing Forward Capabilities the Most

Briefly responding:

a) The issue in this story seems to be that the company doesn't care about x-safety, not that they are legally obligated to care about face-blindness.

b) If governments don't have bandwidth to effectively vet small AI projects, it seems prudent to err on the side of forbidding projects that might pose x-risk.

c) I do think we need effective international cooperation around regulation. But even buying 1-4 years' time seems good in expectation.

d) I don't see the x-risk aspect of this story.

This means that the model can and will implicitly sacrifice next-token prediction accuracy for long horizon prediction accuracy.

Are you claiming this would happen even given infinite capacity?

If so, can you perhaps provide a simple+intuitive+concrete example?
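The reason infinite capacity seems relevant, sketched under the assumption that "infinite capacity" means the model can represent the true next-token conditionals exactly: by the chain rule, a model's long-horizon predictions are fully determined by its next-token conditionals.

```latex
p_\theta(x_{t+1}, \ldots, x_{t+k} \mid x_{\le t})
  = \prod_{i=1}^{k} p_\theta(x_{t+i} \mid x_{< t+i})
```

So if $p_\theta(\cdot \mid x_{<s}) = p(\cdot \mid x_{<s})$ for every prefix $x_{<s}$, the left-hand side equals the true $p(x_{t+1}, \ldots, x_{t+k} \mid x_{\le t})$ for every horizon $k$, and there is nothing left to trade off. Any such trade-off would have to come from limited capacity or imperfect optimization.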

What do you mean by "random linear probe"?

I skimmed this. A few quick comments:

- I think you characterized deceptive alignment pretty well.

- I think it only covers a narrow part of how deceptive behavior can arise.

- CICERO likely already did some of what you describe.

So let us specify a probability distribution over the space of all possible desires. If we accept the orthogonality thesis, we should not want this probability distribution to build in any bias towards certain kinds of desires over others. So let's spread our probabilities in such a way that we meet the following three conditions. Firstly, we don't expect Sia's desires to be better satisfied in any one world than they are in any other world. Formally, our expectation of the degree to which Sia's desires are satisfied at $W$ is equal to our expectation of the degree to which Sia's desires are satisfied at $W'$, for any $W, W'$. Call that common expected value '$\mu$'. Secondly, our probabilities are symmetric around $\mu$. That is, our probability that $W$ satisfies Sia's desires to at least degree $\mu + x$ is equal to our probability that it satisfies her desires to at most degree $\mu - x$. And thirdly, learning how well satisfied Sia's desires are at some worlds won't tell us how well satisfied her desires are at other worlds. That is, the degree to which her desires are satisfied at some worlds is independent of how well satisfied they are at any other worlds. (See the appendix for a more careful formulation of these assumptions.) If our probability distribution satisfies these constraints, then I'll say that Sia's desires are 'sampled randomly' from the space of all possible desires.

This is a characterization, and it remains to show that there exist distributions that fit it (I suspect there are none, assuming the sets of possible desires and worlds are unbounded).

I also find the 3rd criterion counterintuitive. If worlds share features, I would expect the degrees to which Sia's desires are satisfied at those worlds not to be independent.
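A minimal sketch of this worry, using a hypothetical feature-based model that is not from the paper: each world is a set of features, a "random desire" assigns each feature an independent N(0, 1) weight, and satisfaction at a world is the sum of its features' weights. Every world then has the same expected satisfaction ($\mu = 0$) and a distribution symmetric around it, so the first two conditions hold, but two worlds that share features have covariance equal to the number of shared features, so the third condition fails.

```python
import random


def analytic_covariance(world1, world2):
    """Covariance of satisfaction at two worlds when feature weights are i.i.d. N(0, 1).

    Satisfaction is a sum of feature weights, so the covariance equals
    the number of features the two worlds share.
    """
    return len(set(world1) & set(world2))


def empirical_covariance(world1, world2, samples=20000, seed=0):
    """Monte Carlo estimate of the same covariance."""
    rng = random.Random(seed)
    features = sorted(set(world1) | set(world2))
    xs, ys = [], []
    for _ in range(samples):
        # Sample one "random desire": an independent, symmetric weight per feature.
        w = {f: rng.gauss(0.0, 1.0) for f in features}
        xs.append(sum(w[f] for f in world1))
        ys.append(sum(w[f] for f in world2))
    mx, my = sum(xs) / samples, sum(ys) / samples
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / samples


w1, w2 = {"a", "b"}, {"b", "c"}  # hypothetical worlds sharing feature "b"
print(analytic_covariance(w1, w2))   # 1
print(empirical_covariance(w1, w2))  # approximately 1
```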

I think it might be more effective in future debates at the outset to:

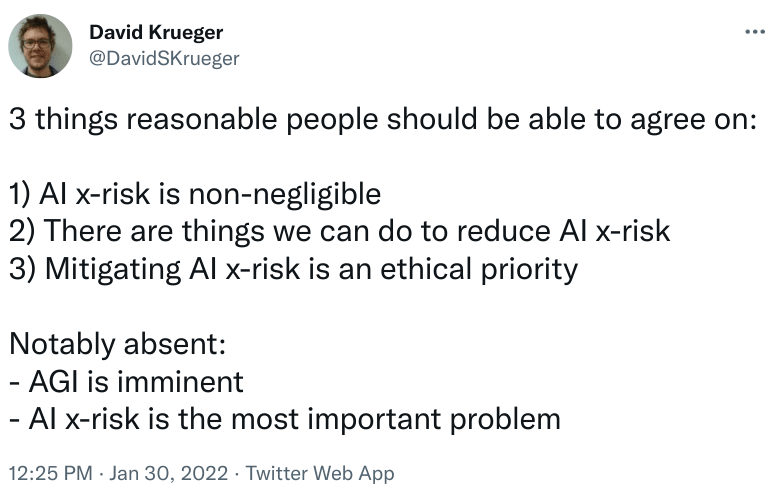

* Explain that it's only necessary to cross a low bar (e.g. see my Tweet below). -- This is a common practice in debates.

* Outline the responses they expect to hear from the other side, and explain why they are bogus. Framing: "Whether AI is an x-risk has been debated in the ML community for 10 years, and nobody has provided any compelling counterarguments that refute the 3 claims (of the Tweet). You will hear a bunch of counter arguments from the other side, but when you do, ask yourself whether they are really addressing this. Here are a few counter-arguments and why they fail..." -- I think this could really take the wind out of the sails of the opposition, and put them on the back foot.

I also don't think LeCun and Meta should be given so much credit -- Is Facebook really going to develop and deploy AI responsibly?

1) They have been widely condemned for knowingly playing a significant role in the Rohingya genocide, have acknowledged that they failed to act to prevent Facebook's role in the Rohingya genocide, and are being sued for $150bn for this.

2) They have also been criticised for the role that their products, especially Instagram, play in contributing to mental health issues, especially around body image in teenage girls.

More generally, I think the "companies do irresponsible stuff all the time" point needs to be stressed more. And one particular argument that is bogus is the "we'll make it safe" -- x-safety is a common good, and so companies should be expected to undersupply it. This is econ 101.
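The "econ 101" point can be made concrete with a standard public-goods sketch. The numbers below are hypothetical: safety work costs whoever does it 3, and benefits each of 5 firms by 1 (in reality the benefit also extends to everyone else, which only widens the gap).

```python
def firm_invests(benefit_to_each_firm, cost):
    """A profit-maximizing firm invests only if its own benefit exceeds its own cost."""
    return benefit_to_each_firm > cost


def socially_optimal(benefit_to_each_firm, cost, n_firms):
    """The investment is worth making if the total benefit across all firms exceeds its cost."""
    return n_firms * benefit_to_each_firm > cost


# Hypothetical numbers: safety work costs 3 and benefits each of 5 firms by 1.
print(firm_invests(1.0, 3.0))         # False: no single firm pays for it
print(socially_optimal(1.0, 3.0, 5))  # True: the group would be better off
```

Whenever the per-firm benefit is below the cost but the total benefit is above it, the good is undersupplied.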

Organizations that are looking for ML talent (e.g. to mentor more junior people, or get feedback on policy) should offer PhD students high-paying contractor/part-time work.

ML PhD students working on safety-relevant projects should be able to augment their meager stipends this way.

That is in addition to all the people who will give their AutoGPT an instruction that means well but actually translates to killing all the humans or at least take control over the future, since that is so obviously the easiest way to accomplish the thing, such as ‘bring about world peace and end world hunger’ (link goes to Sully hyping AutoGPT, saying ‘you give it a goal like end world hunger’) or ‘stop climate change’ or ‘deliver my coffee every morning at 8am sharp no matter what as reliably as possible.’ Or literally almost anything else.

I think these mostly only translate into dangerous behavior if the model badly "misunderstands" the instruction, which seems somewhat implausible.

One must notice that in order to predict the next token as well as possible the LMM will benefit from being able to simulate every situation, every person, and every causal element behind the creation of every bit of text in its training distribution, no matter what we then train the LMM to output to us (what mask we put on it) afterwards.

Is there any rigorous justification for this claim? As far as I can tell, this is folk wisdom from the scaling/AI safety community, and I think it's far from obvious that it's correct, or what assumptions are required for it to hold.

It seems much more plausible in the infinite limit than in practice.

I have gained confidence in my position that all of this happening now is a good thing, both from the perspective of smaller risks like malware attacks, and from the perspective of potential existential threats. Seems worth going over the logic.

What we want to do is avoid what one might call an agent overhang.

One might hope to execute our Plan A of having our AIs not be agents. Alas, even if technically feasible (which is not at all clear) that only can work if we don’t intentionally turn them into agents via wrapping code around them. We’ve checked with actual humans about the possibility of kindly not doing that. Didn’t go great.

This seems like really bad reasoning...

It seems like the evidence that people won't "kindly not [do] that" is... AutoGPT.

So if AutoGPT didn't exist, you might be able to say: "we asked people to not turn AI systems into agents, and they didn't. Hooray for plan A!"

Also: I don't think it's fair to say "we've checked [...] about the possibility". The AI safety community thought it was sketchy for a long time, and has provided some lackluster pushback. Governance folks from the community don't seem to be calling for a rollback of the plugins, or bans on this kind of behavior, etc.

Christiano and Yudkowsky both agree AI is an x-risk -- a prediction that would distinguish their models does not do much to help us resolve whether or not AI is an x-risk.

I'm not necessarily saying people are subconsciously trying to create a moat.

I'm saying they are acting in a way that creates a moat, and that enables them to avoid competition, and that more competition would create more motivation for them to write things up for academic audiences (or even just write more clearly for non-academic audiences).

Q: "Why is that not enough?"

A: Because they are not being funded to produce the right kinds of outputs.

My point is not specific to machine learning. I'm not as familiar with other academic communities, but I think most of the time it would probably be worth engaging with them if there is somewhere where your work could fit.

In my experience people also often know their blog posts aren't very good.

My point (see footnote) is that motivations are complex. I do not believe "the real motivations" is a very useful concept here.

The question becomes why "don't they judge those costs to be worth it"? Is there motivated reasoning involved? Almost certainly yes; there always is.

- A lot of work just isn't made publicly available

- When it is, it's often in the form of ~100 page google docs

- Academics have a number of good reasons to ignore things that don't meet academic standards of rigor and presentation

works for me too now

The link is broken, FYI

Yeah this was super unclear to me; I think it's worth updating the OP.

FYI: my understanding is that "data poisoning" refers to deliberately tampering with the training data of somebody else's model, which I understand is not what you are describing.

Oh I see. I was getting at the "it's not aligned" bit.

Basically, it seems like if I become a cyborg without understanding what I'm doing, the result is either:

- I'm in control

- The machine part is in control

- Something in the middle

Only the first one seems likely to be sufficiently aligned.

I don't understand the fuss about this; I suspect these phenomena are due to uninteresting, and perhaps even well-understood effects. A colleague of mine had this to say:

- After a skim, it looks to me like an instance of hubness: https://www.jmlr.org/papers/volume11/radovanovic10a/radovanovic10a.pdf

- This effect can be a little non-intuitive. There is an old paper in music retrieval where the authors battled to understand why Joni Mitchell's (classic) "Don Juan’s Reckless Daughter" was retrieved confusingly frequently (the same effect) https://d1wqtxts1xzle7.cloudfront.net/33280559/aucouturier-04b-libre.pdf?1395460009=&respon[…]xnyMeZ5rAJ8cenlchug__&Key-Pair-Id=APKAJLOHF5GGSLRBV4ZA

- For those interested, here is a nice theoretical argument on why hubs occur: https://citeseerx.ist.psu.edu/document?repid=rep1&type=pdf&doi=e85afe59d41907132dd0370c7bd5d11561dce589

- If this is the explanation, it is not unique to these models, or even to large language models. It shows up in many domains.
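For anyone who wants to see hubness directly, here is a minimal sketch following the usual definition: $N_k(x)$ counts how often point $x$ appears among the $k$ nearest neighbors of the other points, and in high dimensions the distribution of $N_k$ tends to become right-skewed, with a few "hub" points showing up in many neighbor lists. The Gaussian data, sample size, and dimensions are arbitrary choices for illustration, and I don't claim specific output values; one would typically expect the 100-dimensional run to show the larger maximum and skewness.

```python
import random


def k_occurrence(points, k):
    """N_k for each point: how many other points list it among their k nearest neighbors."""
    counts = [0] * len(points)
    for i, p in enumerate(points):
        # Squared Euclidean distance from p to every other point.
        dists = sorted(
            (sum((a - b) ** 2 for a, b in zip(p, q)), j)
            for j, q in enumerate(points)
            if j != i
        )
        for _, j in dists[:k]:
            counts[j] += 1
    return counts


def skewness(xs):
    """Population skewness (third standardized moment)."""
    n = len(xs)
    mean = sum(xs) / n
    var = sum((x - mean) ** 2 for x in xs) / n
    if var == 0:
        return 0.0
    return (sum((x - mean) ** 3 for x in xs) / n) / var ** 1.5


def gaussian_points(n, dim, rng):
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n)]


rng = random.Random(0)
for dim in (3, 100):
    counts = k_occurrence(gaussian_points(150, dim, rng), k=10)
    print(f"dim={dim}: max N_k={max(counts)}, skewness={skewness(counts):.2f}")
```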

Indeed. I think having a clean, well-understood interface for human/AI interaction seems useful here. I recognize this is a big ask in the current norms and rules around AI development and deployment.

I don't understand what you're getting at RE "personal level".

I think the most fundamental objection to becoming cyborgs is that we don't know how to say whether a person retains control over the cyborg they become a part of.

FWIW, I didn't mean to kick off a historical debate, which seems like probably not a very valuable use of y'all's time.

Unfortunately, I think even "catastrophic risk" has a high potential to be watered down and be applied to situations where dozens as opposed to millions/billions die. Even existential risk has this potential, actually, but I think it's a safer bet.

I don't think we should try and come up with a special term for (1).

The best term might be "AI engineering". The only thing it needs to be distinguished from is "AI science".

I think ML people overwhelmingly identify as doing one of those 2 things, and find it annoying and ridiculous when people in this community act like we are the only ones who care about building systems that work as intended.

I say it is a rebrand of the "AI (x-)safety" community.

When the term "AI alignment" came along, we had been calling it "AI safety", even though what everyone in the community really meant all along was basically AI existential safety. "AI safety" was (IMO) a somewhat successful bid for more mainstream acceptance, which then led to dilution and confusion, necessitating a new term.

I don't think the history is that important; what's important is having good terminology going forward.

This is also why I stress that I work on AI existential safety.

So I think people should just say what kind of technical work they are doing, and "existential safety" should be considered a sociotechnical problem that motivates a community of researchers, and used to refer to that problem and that community. In particular, I think we are not able to cleanly delineate what is or isn't technical AI existential safety research at this point, and we should welcome intellectual debates about the nature of the problem and how different technical research may or may not contribute to increasing x-safety.