How LLMs are and are not myopic

post by janus · 2023-07-25T02:19:44.949Z · LW · GW · 16 comments

Thanks to janus, Nicholas Kees Dupuis, and Robert Kralisch for reviewing this post and providing helpful feedback. Some of the experiments mentioned were performed while at Conjecture.

TLDR: The training goal for LLMs like GPT is not cognitively myopic (because they think about the future) or value-myopic (because the transformer architecture optimizes accuracy over the entire sequence, not just the next token). However, training is consequence-blind, because the training data is causally independent of the model's actions. This assumption breaks down when models are trained on AI-generated text.

Summary

- Myopia in machine learning models can be defined in several ways. It could be the time horizon the model considers when making predictions (cognitive myopia), the time horizon the model takes into account when assessing its value (value myopia), or the degree to which the model considers the consequences of its decisions (consequence-blindness).

- Both cognitively-myopic and consequence-blind models should not pursue objectives for instrumental reasons. This could avoid some important alignment failures, like power-seeking or deceptive alignment. However, these behaviors can still exist as terminal values, for example when a model is trained to predict power-seeking or deceptively aligned agents.

- LLM pretraining is not cognitively myopic because there is an incentive to think about the future to improve immediate prediction accuracy, like when predicting the next move in a chess game.

- LLM pretraining is not value/prediction myopic (does not maximize myopic prediction accuracy) because of the details of the transformer architecture. Training gradients flow through attention connections, so past computation is directly optimized to be useful when attended to by future computation. This incentivizes improving prediction accuracy over the entire sequence, not just the next token. This means that the model can and will implicitly sacrifice next-token prediction accuracy for long horizon prediction accuracy.

- You can modify the transformer architecture to remove the incentive for non-myopic accuracy, but as expected, the modified architecture has worse scaling laws.

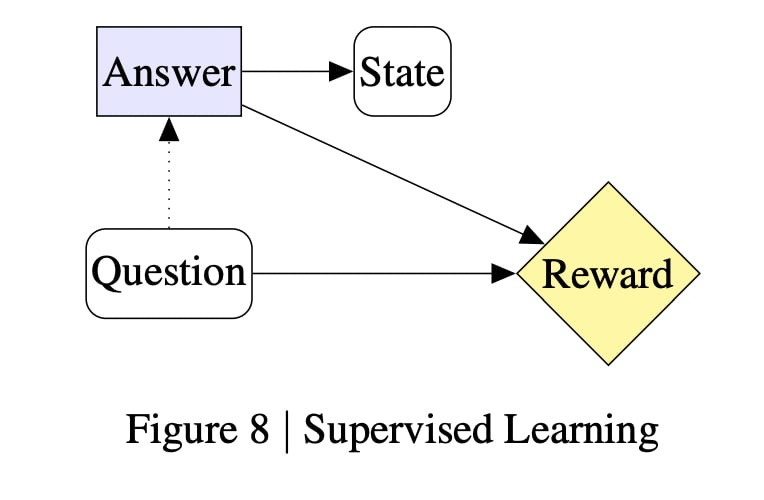

- LLM pretraining on human data is consequence-blind as the training data is causally independent from the model's actions. This implies the model should predict actions without considering the effect of its actions on other agents, including itself. This makes the model miscalibrated, but likely makes alignment easier.

- When LLMs are trained on data which has been influenced or generated by LLMs, the assumptions of consequence-blindness partially break down [AF · GW]. It’s not clear how this affects the training goal theoretically or in practice.

- A myopic training goal does not ensure the model will learn myopic computation or behavior, because inner alignment with the training goal is not guaranteed [AF · GW].

Introduction

The concept of myopia [? · GW] has been frequently discussed as a potential solution to the problem of deceptive alignment. However, the term myopia is ambiguous and can refer to multiple different properties we might want in an AI system, only some of which might rule out deceptive alignment. There has also been confusion about the extent to which large language model (LLM) pretraining and other supervised learning methods are myopic, and what this implies about their cognition and safety properties. This post will attempt to clarify some of these issues, mostly by summarizing and contextualizing past work.

Types of Myopia

1. Cognitive Myopia

One natural definition for myopia is that the model doesn't think about or consider the future at all. We will call this cognitive myopia. Myopic cognition likely comes with a significant capabilities handicap, as many tasks require some degree of forward planning or anticipation of future events.

LLM pretraining is not cognitively-myopic. Even though LLMs like GPT are optimized for next-token prediction and use causal masking which hides the future from current predictions, there is still a direct incentive to think about the future because it can be useful for immediate prediction accuracy. In a game of chess, efficiently computing the best move likely involves reasoning about how your opponent will respond multiple moves into the future. In other words, we should expect GPT to think about the future because it’s instrumental for predicting the present.

2. Value/Prediction Myopia

Value myopia refers to agents that place no value on future states or rewards. In the case of a predictive model, we mean the model cares only about the accuracy of its next prediction. Intuitively, it seems like this would rule out deceptive alignment and treacherous turns, because a value-myopic agent should be unwilling to sacrifice immediate value for long-term value. Unfortunately, value-myopic agents might still have non-myopic incentives for reasons like anthropic uncertainty or acausal trade. (See Open Problems with Myopia [AF · GW] for more details.)

In any case, LLM training actually incentivizes value non-myopia. Even though next-token prediction accuracy is a nominally myopic objective, the transformer architecture actually causes the model to be optimized for accuracy over the entire sequence, not just the next token.

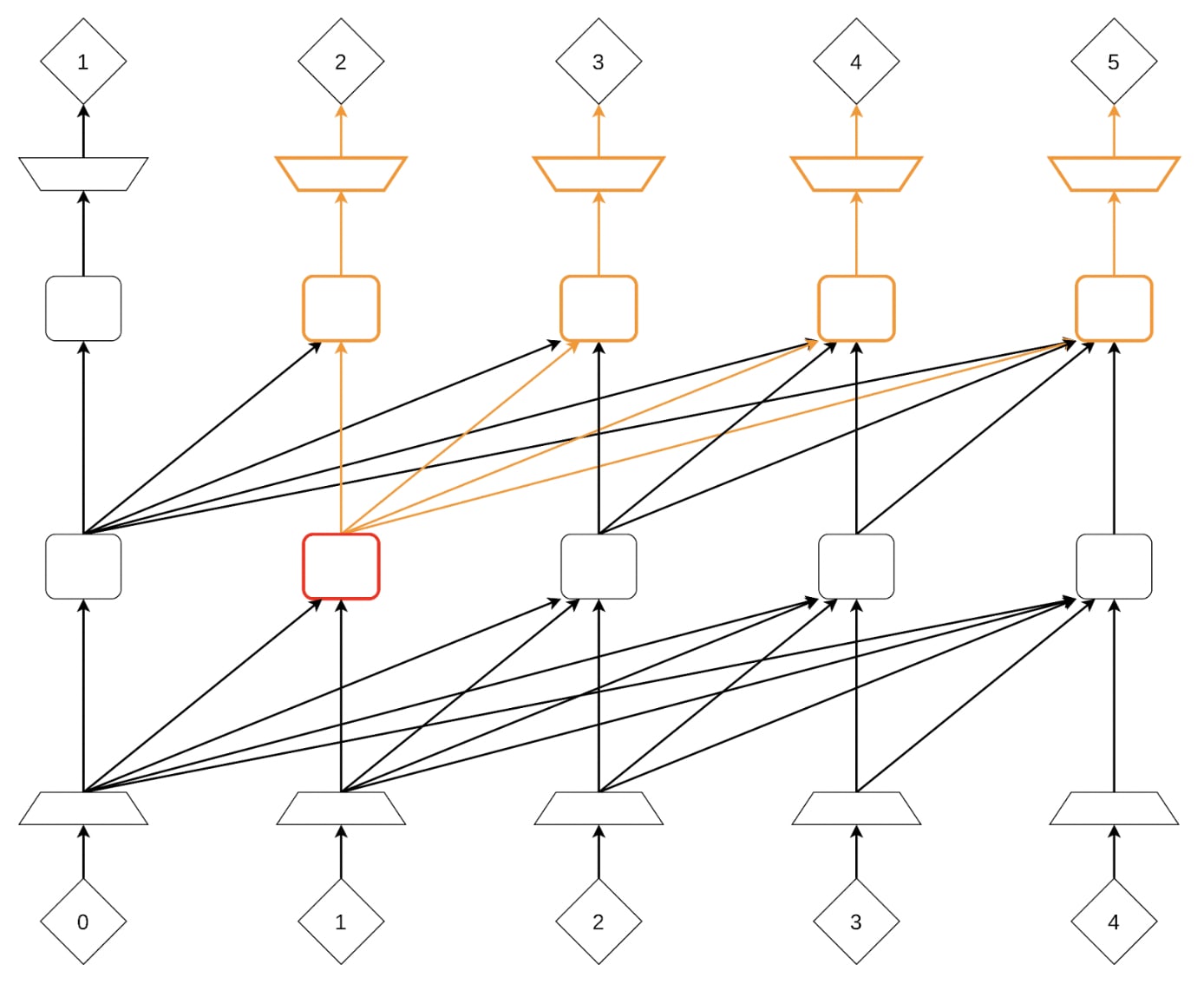

In a causal-masked transformer, attention layers can query the previous layers' activations from any column in the context window. Gradients flow through the attention connections, so each previous layer is optimized not just to improve prediction accuracy for the next token, but also to produce values that are useful for future columns to attend to when predicting their token.

This means that the model can and will implicitly sacrifice next-token prediction accuracy for long horizon prediction accuracy. In particular, we should expect trained models to find an efficient tradeoff between current prediction accuracy and full-sequence prediction accuracy. When predicting relatively easy tokens, they will likely spend most of their computation budget preparing for the future. If the model is given a hard problem that it will need to solve later in the sequence, it will likely work on the problem ('in the back of its head') throughout the intermediate predictions.
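The dependency structure described above can be checked on a toy example. The following is a minimal sketch in plain Python, not the architecture of any real model: it assumes a single attention head over scalar activations, uses squared error as a stand-in for next-token loss, and estimates gradients by finite differences. All names (`causal_attention`, `sensitivity`, and so on) are hypothetical.

```python
import math

def causal_attention(values, scores):
    """Single-head causal attention over scalar activations.

    values[j] is the previous layer's activation at column j;
    scores[i][j] is the unnormalized attention score from column i to j.
    Column i may only attend to columns j <= i (the causal mask).
    """
    outputs = []
    for i in range(len(values)):
        visible = range(i + 1)  # causal mask: future columns are hidden
        weights = [math.exp(scores[i][j]) for j in visible]
        total = sum(weights)
        outputs.append(sum(w / total * values[j] for w, j in zip(weights, visible)))
    return outputs

def column_losses(values, scores, targets):
    """Squared error at each column (a stand-in for next-token loss)."""
    outputs = causal_attention(values, scores)
    return [(o - t) ** 2 for o, t in zip(outputs, targets)]

def sensitivity(values, scores, targets, source, loss_column, eps=1e-6):
    """Finite-difference estimate of d loss[loss_column] / d values[source]."""
    bumped = list(values)
    bumped[source] += eps
    base = column_losses(values, scores, targets)[loss_column]
    new = column_losses(bumped, scores, targets)[loss_column]
    return (new - base) / eps

values = [0.5, -1.0, 2.0]                     # toy activations, one per column
scores = [[0.0, 0.0, 0.0] for _ in range(3)]  # uniform attention
targets = [1.0, 0.0, -1.0]

# The loss at the last column depends on the activation at the first column...
past_to_future = sensitivity(values, scores, targets, source=0, loss_column=2)
# ...but the loss at the first column cannot depend on a later column.
future_to_past = sensitivity(values, scores, targets, source=2, loss_column=0)
```

In this toy setting `past_to_future` is nonzero while `future_to_past` is exactly zero: the mask hides the future from the current prediction, but training signal from future predictions still reaches past activations.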

Experiments

Interpretability

Several interpretability results including ROME confirm this type of non-myopic computation in LLMs. ROME shows that LLMs recall factual information about nouns when the noun first appears, even though this information is only used later when predicting the answer to a question about the noun. This information would be irrelevant and thus wasted computation for the purpose of predicting only the next token. For example, if the model sees the text "The Eiffel Tower", it immediately begins retrieving information about the Eiffel Tower like where it is located even though that's not necessary to predict the next token which is almost certainly "is".

Enforcing Myopia

It is possible to modify the transformer architecture to enforce value (prediction accuracy) myopia by placing stop gradients in the attention layers. This effectively prevents past activations from being directly optimized to be more useful for future computation. We ran several informal experiments on models like these while at Conjecture. Unfortunately, we do not have quantitative results to share here. The experiments were preliminary and we moved on to other aspects of the project, so don’t take this as strong evidence.

Specifically, we trained a set of four traditional and four myopic transformers ranging from 117M to 1.5B parameters (equivalent to GPT-2 Small to GPT-2 XL). Each model was trained on the same data, but training hyperparameters were tuned to each architecture individually using maximal update parameterization.

We found the performance reduction from myopia was minimal at 117M parameters, but the performance cost increased with scale, i.e. myopic transformers have worse scaling laws.
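To make the effect of a stop gradient concrete, here is a minimal sketch in plain Python using a tiny forward-mode autodiff class. It is an illustration under stated assumptions, not the implementation used in the experiments above: the exact placement of the stop gradients (on activations read from other columns), the uniform attention weights, the squared-error loss, and every name in the code are hypothetical.

```python
class Dual:
    """A number carrying its value and a derivative (forward-mode autodiff)."""
    def __init__(self, val, tan=0.0):
        self.val, self.tan = val, tan
    def __add__(self, other):
        other = _lift(other)
        return Dual(self.val + other.val, self.tan + other.tan)
    def __sub__(self, other):
        other = _lift(other)
        return Dual(self.val - other.val, self.tan - other.tan)
    def __mul__(self, other):
        other = _lift(other)
        return Dual(self.val * other.val,
                    self.val * other.tan + self.tan * other.val)

def _lift(x):
    """Treat plain numbers as constants with zero derivative."""
    return x if isinstance(x, Dual) else Dual(float(x))

def stop_gradient(x):
    """Keep the forward value but block the derivative."""
    return Dual(x.val, 0.0)

def total_loss(h, targets, myopic):
    """Sum of per-column squared errors under uniform causal attention."""
    loss = Dual(0.0)
    for i in range(len(h)):
        attended = Dual(0.0)
        for j in range(i + 1):
            v = h[j]
            if myopic and j != i:
                v = stop_gradient(v)  # past columns are read but not optimized
            attended = attended + v * (1.0 / (i + 1))
        err = attended - targets[i]
        loss = loss + err * err
    return loss

def grad_wrt_first_column(h_vals, targets, myopic):
    """d(total loss) / d(activation at column 0)."""
    h = [Dual(v, 1.0 if k == 0 else 0.0) for k, v in enumerate(h_vals)]
    return total_loss(h, targets, myopic).tan

h_vals = [0.5, -1.0]
targets = [0.5, 1.0]  # column 0 already predicts its own target exactly

standard_grad = grad_wrt_first_column(h_vals, targets, myopic=False)
myopic_grad = grad_wrt_first_column(h_vals, targets, myopic=True)
```

Here column 0 has zero loss on its own prediction, yet `standard_grad` is nonzero: ordinary training would move the column-0 activation away from its locally optimal value to help column 1. With the stop gradient in place, `myopic_grad` is zero and that pressure disappears.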

3. Consequence-blindness

A third type of myopia to consider is consequence-blindness [AF · GW], where a model chooses actions completely independent of any effect of its actions on the future. This is similar to the goal of Counterfactual Oracles.

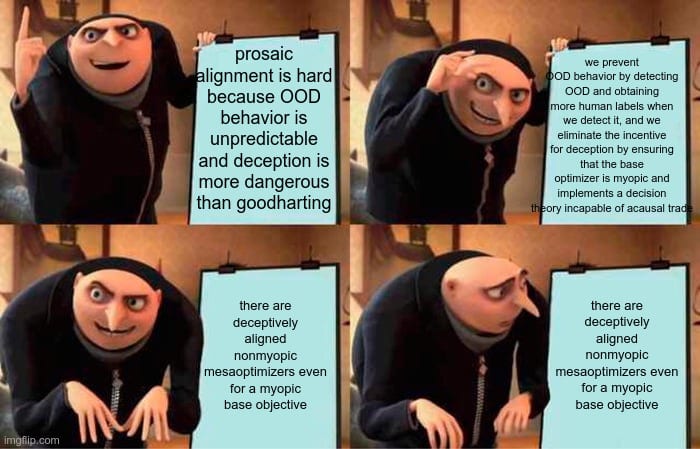

Consequence-blindness should rule out most types of instrumental convergence and concerns about self-fulfilling prophecies [AF · GW]. A model which completely ignores the effects of its actions has no instrumental incentive to pursue traditional instrumental goals, like trying to accumulate resources to become more powerful, trying to prevent its own shutdown, or pretending to be aligned in order to defect later. However, consequence-blindness does not actually constrain the behavior of a model, because the model can pursue any instrumental goal as a terminal value.

A consequence-blind simulator that predicts power-seeking agents (like humans) will still predict actions which seek power, but these actions will seek power for the simulated agent, not the simulator itself. I usually think about problems like this as simulator vs simulacra alignment [AF · GW]. If you successfully build an inner aligned simulator, you can use it to faithfully simulate according to the rules it learns and generalizes from its training distribution. However you are still left with the problem of extracting consistently aligned simulacra.

In theory, consequence-blindness doesn't rule out any capabilities, because a consequence-blind predictor could learn to predict any behavior. However, in practice using a consequence-blind training goal like pure imitation learning may be uncompetitive compared to methods like RL (or imitation + RL finetuning, the current dominant paradigm).

Consequence-blind agents (with a causal decision theory) can be seen as implementing a Lonely Causal Decision Theory (LCDT [AF · GW]). An LCDT agent assumes that every other agent's decision node in the world (including its own future decisions) is causally independent of its actions. This means it has no incentive to take actions which help its future self or other agents for instrumental reasons.

Unlike the other forms of myopia above, the training goal for LLMs trained with self-supervised learning (SSL) is theoretically consequence-blind. In supervised or self-supervised learning, the training data already exists and is assumed to be causally independent from the model’s decisions. This means a model’s prediction should be based only on the likelihood of the output appearing in the training data. In particular, the model’s prediction should be independent of any effect from making the prediction itself, including whether or not the prediction would make the model more likely to predict or control the future correctly when run autoregressively.

The distinction between optimizing prediction accuracy and steering the distribution to be easier to predict is one of the most common sources of confusion about LLM myopia. Even though the LLM training goal is not value-myopic and optimizes for prediction accuracy across entire training examples, LLMs are not incentivized to predict tokens that make the future easier to predict.
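A small worked example may help separate the two ideas. The sketch below assumes a hypothetical toy corpus in which, after some prompt, token "B" follows 60% of the time and token "C" 40% of the time, and in which text following "C" happens to be much easier to predict. Because training uses the continuation from the dataset rather than the model's own output, the loss on later tokens is the same whatever the model predicts here, so only the expected loss on this token is affected. The numbers and names are illustrative assumptions.

```python
import math

# Hypothetical corpus statistics for the token that follows a fixed prompt.
data_next = {"B": 0.6, "C": 0.4}

def expected_log_loss(prediction, data):
    """Expected cross-entropy (in nats) of `prediction` against fixed data."""
    return -sum(p * math.log(prediction[tok]) for tok, p in data.items())

calibrated = {"B": 0.6, "C": 0.4}  # matches the data distribution
steering = {"B": 0.05, "C": 0.95}  # favors "C" because its future is "easier"

loss_calibrated = expected_log_loss(calibrated, data_next)
loss_steering = expected_log_loss(steering, data_next)
```

The calibrated prediction has the lower expected loss (about 0.67 nats versus about 1.82 nats). Favoring the token with the more predictable continuation is simply penalized, since the training data does not change in response to the prediction.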

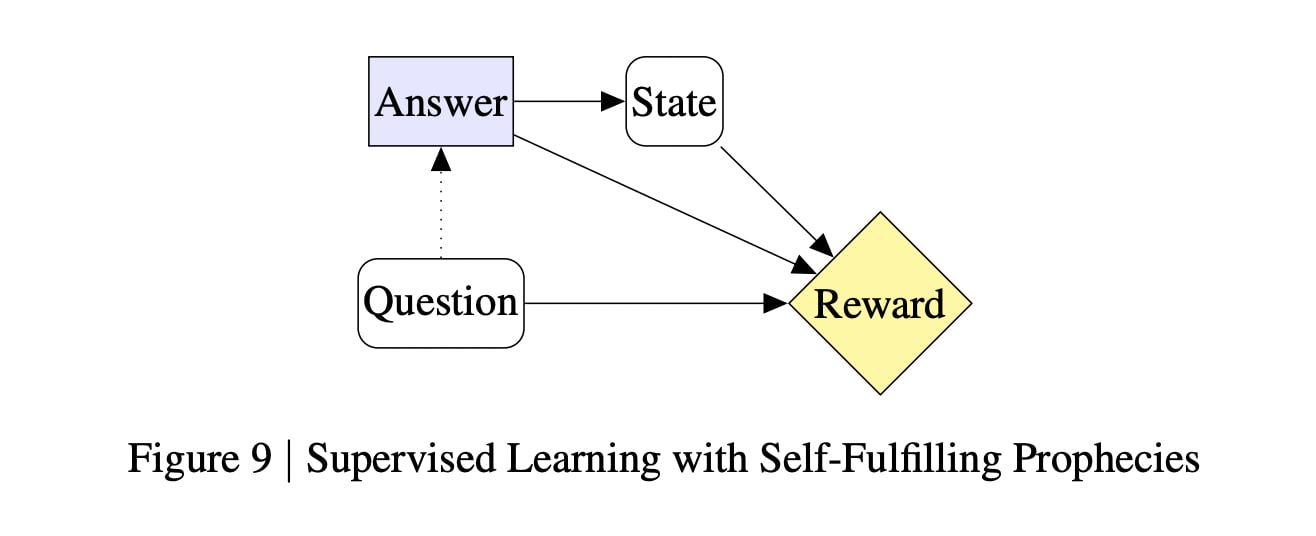

Unfortunately, the consequence-blindness story becomes more complicated when the training data is not actually causally independent from the model's predictions.

Self-reference and Model Generated Data

In practice, the assumption that the supervised learning distribution is causally independent from the model’s predictions may be violated. This can happen whenever a model is trained on data correlated with its behavior, or if the model thinks the correct prediction is correlated with its behavior.

This is concerning because it's not at all clear what a model that is predicting itself should output [? · GW]. It breaks many of the intuitions of why it should be safe to use LLMs as simulators of text distributions.

This problem is already apparent in current LLMs and is likely to become worse for several reasons.

- Increasingly large portions of LLM pretraining and finetuning datasets will be generated or influenced by LLMs themselves

- LLMs are often trained or used in ways designed to make them more situationally aware that they are an AI assistant responding to a user’s prompt

- In my experience, larger models often become aware that they are an LLM generating text rather than predicting an existing distribution. This is possible because generated text drifts off distribution and can be distinguished from text in the training corpus.

We could try to combat this problem by excluding model generated text from all future training data, but it is still possible for a model trained on human-written text to drift off distribution and become situationally aware. You could see this as a training goal (outer alignment) problem: the decision-myopic base objective has to generalize somehow to self-referential predictions which are fundamentally not consequence-blind.

We could also try the opposite: actually increasing the amount of model-generated text in the training dataset. This could allow us to shape the LLM's prior on how LLMs (and thus itself) should behave. However, it is still possible for a model to realize (or believe) that it is different from the types of models in the training data and should thus act differently.

In this vein, there have been several [AF · GW] great [AF · GW] posts [AF · GW] that discuss how the process of repeatedly training a model on its own predictions might converge. There has also been work considering the general implications of training a simulator in a closed loop [LW · GW]. However the broader implications for generalization and alignment are unclear.
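As a rough intuition pump for what "training on its own predictions" can mean in the simplest possible case, the sketch below repeatedly refits a categorical distribution to a finite sample drawn from itself. This is a deliberately crude stand-in, not the setup analyzed in the linked posts: the "model" is just a table of token probabilities, "training" is counting frequencies, and all names and parameters are hypothetical.

```python
import random

def retrain_on_own_samples(probs, n_samples, generations, seed=0):
    """Repeatedly sample from a categorical 'model' and refit it to the samples.

    Returns the list of distributions, starting with the initial one.
    """
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    tokens = list(probs)
    history = [dict(probs)]
    for _ in range(generations):
        weights = [probs[t] for t in tokens]
        samples = rng.choices(tokens, weights=weights, k=n_samples)
        # "Training" here is just maximum-likelihood frequency counting.
        probs = {t: samples.count(t) / n_samples for t in tokens}
        history.append(probs)
    return history

initial = {"a": 0.5, "b": 0.3, "c": 0.2}
history = retrain_on_own_samples(initial, n_samples=20, generations=50)
```

In this toy loop the distribution performs a random walk, and any token whose estimated probability reaches zero can never be sampled again, so diversity can only be lost over generations. Whether anything similar holds for real LLM training pipelines is exactly the kind of question that remains unclear.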

Myopic Training Goals vs Myopic Models

It is also important to note that even if a training goal is designed to be myopic in some way, the resulting model may not be myopic. Inner alignment failures can lead to non-myopic models emerging from myopic training goals [AF · GW]. Solving inner alignment, or getting it by default, does seem more likely for predictive SSL [AF · GW] than for other training goals, but it is not guaranteed. Many researchers believe the cognitive structures required to predict the answers to hard consequentialist problems will be fundamentally non-myopic, especially if these structures become situationally aware. Some examples [AF · GW].

It would be a huge success if we could find some way to enforce or verify that a model's internal computation satisfies some myopic criteria [AF · GW] (or any criteria…) during or after training. However, it's not clear how we would go about this.

Meta

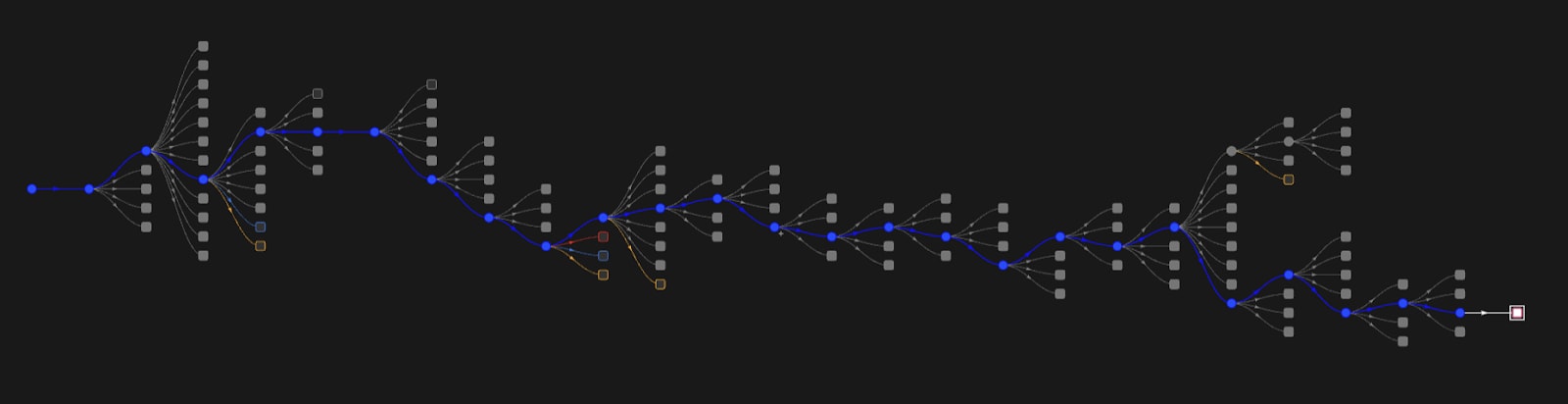

The ideas in the post are from a human, but most of the text was written by Chat GPT-4 with prompts and human curation using Loom. I endorse the post as technically correct and accurately phrased according to my understanding. Here is the second of two Loom trees used to generate most of the post before final edits.

16 comments

Comments sorted by top scores.

comment by Caspar Oesterheld (Caspar42) · 2023-12-08T19:48:10.022Z · LW(p) · GW(p)

Here's a simple toy model that illustrates the difference between 2 and 3 (that doesn't talk about attention layers, etc.).

Say you have a bunch of triplets . You want to train a model that predicts from and from .

Your model consists of three components: . It makes predictions as follows:

(Why have such a model? Why not have two completely separate models, one for predicting and one for predicting ? Because it might be more efficient to use a single both for predicting and for predicting , given that both predictions presumably require "interpreting" .)

So, intuitively, it first builds an "inner representation" (embedding) of . Then it sequentially makes predictions based on that inner representation.

Now you train and to minimize the prediction loss on the parts of the triplets. Simultaneously you train to minimize prediction loss on the full triplets. For example, you update and with the gradients

and you update and with the gradients

.

(The here is the "true" , not one generated by the model itself.)

This training pressures to be myopic in the second and third sense described in the post. In fact, even if we were to train with the predicted by rather than the true , is pressured to be myopic.

- Type 3 myopia: Training doesn't pressure to output something that makes the follow an easier-to-predict (computationally or information-theoretically) distribution. For example, imagine that on the training data implies , while under , follows some distribution that depends in complicated ways on . Then will not try to predict more often.

- Type 2 myopia: won't try to provide useful information to in its output, even if it could. For example, imagine that the s are strings representing real numbers. Imagine that is always a natural number, that is the -th Fibonacci number and is the -th Fibonacci number. Imagine further that the model representing is large enough to compute the -th Fibonacci number, while the model representing is not. Then one way in which one might think one could achieve low predictive loss would be for to output the -th Fibonacci number and then encode, for example, the -th Fibonacci number in the decimal digits. (E.g., .) And then computes the -th Fibonacci number from the -th decimal. But the above training will not give rise to this strategy, because gets the true as input, not the one produced by . Further, even if we were to change this, there would still be pressure against this strategy because () is not optimized to give useful information to . (The gradient used to update doesn't consider the loss on predicting .) If it ever follows the policy of encoding information in the decimal digits, it will quickly learn to remove that information to get higher prediction accuracy on .

Of course, still won't be pressured to be type-1-myopic. If predicting requires predicting , then will be trained to predict ("plan") .

(Obviously, $g_2$ is pressured to be myopic in this simple model.)

Now what about ? Well, is optimized both to enable predicting from and predicting from . Therefore, if resources are relevantly constrained in some way (e.g., the model computing is small, or the output of is forced to be small), will sometimes sacrifice performance on one to improve performance on the other. So, adapting a paragraph from the post: The trained model for (and thus in some sense the overall model) can and will sacrifice accuracy on to achieve better accuracy on . In particular, we should expect trained models to find an efficient tradeoff between accuracy on and accuracy on . When is relatively easy to predict, will spend most of its computation budget on predicting .

So, is not "Type 2" myopic. Or perhaps put differently: The calculations going into predicting aren't optimized purely for predicting .

However, is still "Type 3" myopic. Because the prediction made by isn't fed (in training) as an input to or the loss, there's no pressure towards making influence the output of in a way that has anything to do with . (In contrast to the myopia of , this really does hinge on not using in training. If mattered in training, then there would be pressure for to trick into performing calculations that are useful for predicting . Unless you use stop-gradients...)

* This comes with all the usual caveats of course. In principle, the inductive bias may favor a situationally aware model that is extremely non-myopic in some sense.

comment by Matt Goldenberg (mr-hire) · 2023-07-26T16:52:40.126Z · LW(p) · GW(p)

> In my experience, larger models often become aware that they are a LLM generating text rather than predicting an existing distribution. This is possible because generated text drifts off distribution and can be distinguished from text in the training corpus.

I'm quite skeptical of this claim on face value, and would love to see examples.

I'd be very surprised if current models, absent the default prompts telling them they are an LLM, would spontaneously output text predicting they are an LLM unless steered in that direction.

↑ comment by Sheikh Abdur Raheem Ali (sheikh-abdur-raheem-ali) · 2023-07-27T20:26:43.483Z · LW(p) · GW(p)

I can vouch that I have had the same experience (but am not allowed to share outputs of the larger model I have in mind). First encountered via curation without intentional steering in that direction, but I would be surprised if this failed to replicate with an experimental setup that selects completions randomly without human input. Let me know if you have such a setup in mind that you feel is sufficiently rigorous to act as a crux.

↑ comment by Matt Goldenberg (mr-hire) · 2023-07-28T03:06:04.880Z · LW(p) · GW(p)

If you can come up with an experimental setup that does that it would be sufficient for me.

↑ comment by janus · 2023-07-29T01:02:58.064Z · LW(p) · GW(p)

Many users of base models have noticed this phenomenon, and my SERI MATS stream is currently working on empirically measuring it / compiling anecdotal evidence / writing up speculation concerning the mechanism.

↑ comment by Phil Bland · 2024-02-09T10:47:08.495Z · LW(p) · GW(p)

Do you have any update on this? It goes strongly against my current understanding of how LLMs learn. In particular, in the supervised learning phase any output text claiming to be an LLM would be penalized unless such statements are included in the training corpus. If such behavior nevertheless arises I would be super excited to analyze this further though.

comment by David Scott Krueger (formerly: capybaralet) (capybaralet) · 2023-07-26T23:15:23.545Z · LW(p) · GW(p)

> This means that the model can and will implicitly sacrifice next-token prediction accuracy for long horizon prediction accuracy.

Are you claiming this would happen even given infinite capacity?

If so, can you perhaps provide a simple+intuitive+concrete example?

↑ comment by Caspar Oesterheld (Caspar42) · 2023-12-08T20:30:11.481Z · LW(p) · GW(p)

> This means that the model can and will implicitly sacrifice next-token prediction accuracy for long horizon prediction accuracy.

> Are you claiming this would happen even given infinite capacity?

I think that janus isn't claiming this and I also think it isn't true. I think it's all about capacity constraints. The claim as I understand it is that there are some intermediate computations that are optimized both for predicting the next token and for predicting the 20th token and that therefore have to prioritize between these different predictions.

↑ comment by David Johnston (david-johnston) · 2023-07-27T11:54:49.557Z · LW(p) · GW(p)

I can't speak for janus, but my interpretation was that this is due to a capacity budget, meaning it can be favourable to lose a bit of accuracy on token n if you gain more on n+m. I agree some examples would be great.

comment by veered (lucas-hansen) · 2023-07-25T16:24:36.636Z · LW(p) · GW(p)

> In a causal-masked transformer, attention layers can query the previous layers' activations from any column in the context window. Gradients flow through the attention connections, so each previous layer is optimized not just to improve prediction accuracy for the next token, but also to produce values that are useful for future columns to attend to when predicting their token.

I think this is part of the reason why prompt engineering is so fiddly.

GPT essentially does a limited form of branch prediction and speculative execution. It guesses (based on the tokens evaluated so far) what pre-computation will be useful for future token predictions. If its guess is wrong, the pre-computation will be useless.

Prompts let you sharpen the superposition of simulacra before getting to the input text, improving the quality of the branch prediction. However, the exact way that the prompt narrows down the simulacra can be pretty arbitrary, so it requires lots of random experimentation to get right.

Ideally, at the end of the prompt the implicit superposition of simulacra should match your expected probability distribution over simulacra that generated your input text. The better the match, the more accurate the branch prediction and speculative execution will be.

But you can't explicitly control the superposition and you don't really know the distribution of your input text so... fiddly.

> It is possible to modify the transformer architecture to enforce value (prediction accuracy) myopia by placing stop gradients in the attention layers. This effectively prevents past activations from being directly optimized to be more useful for future computation.

I think that enforcing this constraint might make interpretability easier. The pre-computation that transformers do is indirect, limited, and strange. Each column only has access to the non-masked columns of the previous residual block, rather than access to the non-masked columns of all residual blocks or even just access to the non-masked columns of all previous residual blocks.

Maybe RNNs like RWKV with full hidden state access are easier to interpret?

> A consequence-blind simulator that predicts power-seeking agents (like humans) will still predict actions which seek power, but these actions will seek power for the simulated agent, not the simulator itself. I usually think about problems like this as simulator vs simulacra alignment [LW · GW]. If you successfully build an inner aligned simulator, you can use it to faithfully simulate according to the rules it learns and generalizes from its training distribution. However you are still left with the problem of extracting consistently aligned simulacra.

Agreed. Gwern's short story "It Looks Like You’re Trying To Take Over The World" sketches a takeover scenario by a simulacrum.

> This is concerning because it's not at all clear what a model that is predicting itself should output. It breaks many of the intuitions of why it should be safe to use LLMs as simulators of text distributions.

Doesn't Anthropic's Constitutional AI approach do something similar? They might be familiar with the consequences from their work on Claude.

comment by Sodium · 2024-09-24T06:47:32.919Z · LW(p) · GW(p)

Now that o1 explicitly does RL on CoT, next token prediction for o1 is definitely not consequence blind. The next token it predicts enters into its input and can be used for future computation.

This type of outcome based training makes the model more consequentialist. It also makes using a single next token prediction as the natural "task" to do interpretability on even less defensible [AF · GW].

Anyways, I thought I should revisit this post now that o1 has come out. I can't help noticing that it's stylistically very different from all of the janus writing I've encountered in the past. Then I got to the end:

> The ideas in the post are from a human, but most of the text was written by Chat GPT-4 with prompts and human curation using Loom.

Ha, I did notice I was confused (but didn't bother thinking about it further)

comment by tailcalled · 2023-07-25T09:38:55.129Z · LW(p) · GW(p)

The notion of value myopia you describe is different from the notion that I feel like comes up most often. What I sometimes see people suggest is that because the AI is trained to minimize prediction errors, it will output tokens that make future text more predictable, even if the tokens it outputs are not themselves the most likely. I think it is myopic with respect to minimizing prediction errors in this way, but I agree with you that it is not myopic with respect to the sense you describe.

↑ comment by Sheikh Abdur Raheem Ali (sheikh-abdur-raheem-ali) · 2023-07-27T20:46:03.383Z · LW(p) · GW(p)

Have you read "[2009.09153] Hidden Incentives for Auto-Induced Distributional Shift (arxiv.org)"? (It's cited in Jan Leike's Why I’m optimistic about our alignment approach (substack.com)):

> For example, when using a reward model trained from human feedback, we need to update it quickly enough on the new distribution. In particular, auto-induced distributional shift might change the distribution faster than the reward model is being updated.

I used to be less worried about this but changed my mind after the success of parameter-efficient finetuning with e.g. LoRAs convinced me that you could have models with short feedback loops between their outputs and inputs (as opposed to the current regime of large training runs which are not economical to do often). I believe that training on AI-generated text is a potential pathway to eventual doom, but I haven't yet modelled this concretely in enough explicit detail to be confident about whether it is the first thing that kills us or whether some other effect gets there earlier.

My early influences that led me to thinking this are mostly related to dynamical mean-field theory, but I haven't had time to develop this into a full argument.

comment by AhmedNeedsATherapist · 2024-11-21T18:36:25.210Z · LW(p) · GW(p)

I am confused by the claim that an LLM trying to generate another LLM's text breaks consequence-blindness. The two models are distinct; no recursion is occurring.

I'm imagining a situation where I am predicting the actions of a clone of myself: it might be way easier to just query my own mental state than to simulate my clone. Is this similar to what's happening when LLMs are trained on LLM-generated data, as mentioned in the text?

> In my experience, larger models often become aware that they are a LLM generating text rather than predicting an existing distribution. This is possible because generated text drifts off distribution and can be distinguished from text in the training corpus.

Does this happen even with base models at default values (e.g. temperature=1, no top-k, etc)? If yes, does this mean the model loses accuracy at some point and later becomes aware of it, or does the model know that it is about to sacrifice some accuracy by generating the next token?

comment by Review Bot · 2024-02-23T15:35:24.691Z · LW(p) · GW(p)

The LessWrong Review [? · GW] runs every year to select the posts that have most stood the test of time. This post is not yet eligible for review, but will be at the end of 2024. The top fifty or so posts are featured prominently on the site throughout the year.

Hopefully, the review is better than karma at judging enduring value. If we have accurate prediction markets on the review results, maybe we can have better incentives on LessWrong today. Will this post make the top fifty?