[Link Post] "Foundational Challenges in Assuring Alignment and Safety of Large Language Models"

post by David Scott Krueger (formerly: capybaralet) (capybaralet) · 2024-06-06T18:55:09.151Z · LW · GW · 2 commentsThis is a link post for https://llm-safety-challenges.github.io/

Contents

Why you should (maybe) read (part of) our agenda Topics of particular relevance to the Alignment Forum community: Critiques of interpretability (Section 3.4) Difficulties of understanding capabilities (section 2.2) and implications for evals (section 3.3) Sociotechnical Challenges (Section 4) Safety/performance trade-offs (Section 2.7) Summary of the agenda content: Scientific understanding of LLMs Development and deployment methods Sociotechnical challenges None 2 comments

We’ve recently released a comprehensive research agenda on LLM safety and alignment. This is a collaborative work with contributions from more than 35+ authors across the fields of AI Safety, machine learning, and NLP. Major credit goes to first author Usman Anwar, a 2nd year PhD student of mine who conceived and led the project and did a large portion of the research, writing, and editing. This blogpost was written only by David and Usman and may not reflect the views of other authors.

I believe this work will be an excellent reference for anyone new to the field, especially those with some background in machine learning; a paradigmatic example reader we had in mind when writing would be a first-year PhD student who is new to LLM safety/alignment. Note that the agenda is not focused on AI existential safety, although I believe that there is a considerable and growing overlap between mainstream LLM safety/alignment and topics relevant to AI existential safety.

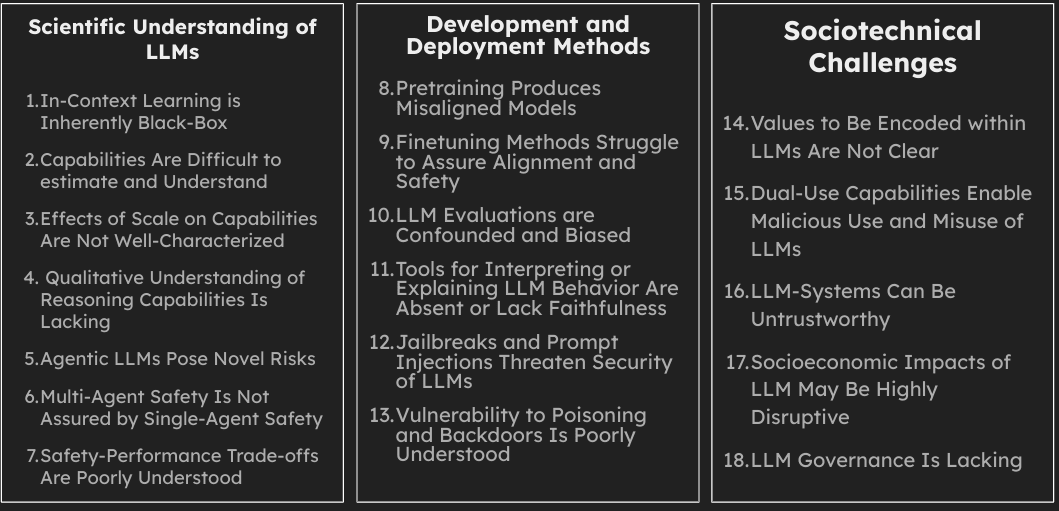

Our work covers the following 18 topics, grouped into 3 high-level categories:

Why you should (maybe) read (part of) our agenda

The purpose of this post is to inform the Alignment Forum (AF) community of our work and encourage members of this community to consider engaging with it. A brief case for doing so:

- It includes over 200 concrete research directions, which might provide useful inspiration.

- We believe it provides comprehensive coverage of relevant topics at the intersection of safety and mainstream ML.

- We cover a much broader range of topics than typically receive attention on AF.

- AI Safety researchers – especially more junior researchers working on LLMs – are clustering around a few research agendas or problems (e.g. mechanistic interpretability, scalable oversight, jailbreaking). This seems suboptimal: given the inherent uncertainty in research, it is important to pursue diverse research agendas. We hope that this work can improve accessibility to otherwise neglected research problems, and help diversify the research agendas the community is following.

- Engaging with and understanding the broader ML community – especially parts of ML community working on AI Safety relevant problems – can be helpful for increasing your work's novelty, rigor, and impact. By reading our agenda, you can better understand the ML community and discover relevant research being done in that community.

- We are interested in feedback from the AF community and believe your comments on this post could help inform the research we and others in the ML and AF communities do.

Topics of particular relevance to the Alignment Forum community:

Critiques of interpretability (Section 3.4)

- Interpretability is among the most popular research areas in the AF community, but I believe there is an unwarranted level of optimism around it.

- The field faces fundamental methodological challenges. Existing works often do not have a solid method of evaluating the validity of an interpretation, and scaling such evaluations seems challenging and potentially intractable.

- It seems likely that AI systems simply do not share human concepts, and at best have warped versions of them (as evidenced by adversarial examples). In this case, AI systems may simply not be interpretable, even given the best imaginable tools.

- In my experience, ML researchers are more skeptical and pessimistic about interpretability for reasons such as the above and a history of past mistakes. I believe the AF community should engage more with previous work in ML in order to learn from prior mistakes and missteps, and our agenda will provide useful background and references.

- This section also has lots of directions for progress in interpretability I believe might be neglected by the AF community.

Difficulties of understanding capabilities (section 2.2) and implications for evals (section 3.3)

- These sections are highly recommended to any individuals working on ‘evals’.

- Recently, many proposals for AI governance center on evaluation. However, we lack a mature scientific understanding or engineering practice of AI evaluation.

- Furthermore, lessons from other fields, such as systems engineering, have yet to be incorporated. Overall, I believe that the AF community and policymakers at large are far too optimistic about evaluations.[1]

- Section 2.2: One major issue in capabilities evaluation is that we don’t have any solid conceptualizations for what it means for an LLM to be "capable" of something.

- Anthropomorphising LLM capabilities is bad because (like for concepts or features) the ‘shape’ of LLM capabilities might be different than human capabilities.

- Traditional benchmarking practices inherited from general ML seem to be less suitable to LLMs, due to their general-purpose nature (section 2.2.3).

- In this section, we propose 3 potential directions for developing a more rigorous treatment of capabilities.

- We also discuss issues related to the fact that we have no measures to evaluate ‘generality’ of LLMs, and poor accounting of scaffolding in most evaluations.

- Section 3.3 is more about ‘practical’ concerns regarding evaluations. There are tons of open questions there. We also have a discussion on scalable oversight research in this direction.

Sociotechnical Challenges (Section 4)

- I consider these topics crucial, but relatively neglected by the AI safety community.

- The AI safety community includes those who dismiss such concerns as irrelevant or out-of-scope. I believe they can and should be considered out-of-scope for particular narrow technical problem statements, but certainly not to the larger project of AI x-safety.

- AI governance is (finally) becoming less neglected, which is good! But concrete proposals are still lacking and/or failing to be implemented. We discuss pros and cons of various approaches and research questions about their viability and utility.

- Policymakers’ concern about catastrophic risks of AI currently seems focused on scenarios where (e.g.) terrorists use LLMs to build weapons of mass destruction (WMDs). But the evidence supporting this risk is very limited in quantity and quality, threatening the credibility of AI x-risk advocates.

Safety/performance trade-offs (Section 2.7)

- One particular issue that I believe is core to AI x-risk is trade-offs between safety and performance. I believe such trade-offs are likely to persist for the foreseeable future, making coordination harder, and technical research insufficient.

- This has long been a core part of my concerns around AI x-risk, and I think it is still largely lacking from the public conversation; I believe technical research could help mainstream these concerns, and AF members may be well-suited for conducting such research.

- For instance, such trade-offs are large and apparent when considering assurance, since we lack any way of providing high levels of assurance for SOTA AI systems, and this situation is likely to persist for the indefinite future.

- Another key axis of trade-offs is in terms of the how “agentic” a system is, as previously argued by Gwern, Tool AIs -- which could lack instrumental goals and thus be much safer -- are likely to be outcompeted by AI agents.

Summary of the agenda content:

As a reminder, our work covers the following 18 topics, grouped into 3 high-level categories. These categories can themselves be thought of as ‘meta-challenges’.

Scientific understanding of LLMs

This category includes challenges that we believe are more on the understanding side of things, as opposed to the developing methods side of things. We specifically call out for more research on understanding ‘in-context learning’ and ‘reasoning capabilities’ in LLMs (sections 2.1 & 2.4); how they develop, how they manifest, and most importantly how they might evolve with further scaling and research progress in LLMs.. We have two sections (sections 2.2 & 2.3) that focus on challenges related to conceptualizing, understanding, measuring, and predicting capabilities. These sections in particular we believe are worth a read for researchers working on capabilities evaluations. We also call for more research into understanding the properties and risks of LLM-agents in both single-agent and multi-agent settings (sections 2.5 and 2.6). In pre-LLM times, research on (reinforcement-learning-based) ‘agents’ was a relatively popular research area within the AI Safety community, so, there is plausibly lots of low-hanging fruit in terms of just identifying what prior research from RL agents (in both single-agent and multi-agent settings) transfers to LLM-agents. The last section in this category (section 2.7) is focused on challenges related to identifying and understanding safety-performance trade-offs (aka alignment-tax), which is another research direction that we feel is highly important yet only nominally popular within the AI Safety community.

Development and deployment methods

This category is in some sense catch-all category where we discuss challenges related to the pretraining, finetuning, evaluation, interpretation and security of LLMs. The common theme of discussion in this category is that research required to address most challenges is ‘developing new methods’ type. In pretraining (section 3.1), two main research directions that are neglected but likely quite impactful are training data attribution methods (‘influence function’ type methods) and trying to modify pretraining methodology in ways that are helpful to alignment and safety. An example is ‘task-blocking’ models where the idea is to make it costly for an adversary to finetune an LLM to elicit some harmful capability. We have an extensive discussion on deficiencies of current finetuning methods as well (section 3.2). Current finetuning methods are problematic from safety POV as they work more to ‘hide’ harmful capabilities as opposed to ‘deleting’ them. We think more research should be done on both the targeted removal of known undesirable capabilities and behaviors, as well as on the removal of unknown undesirable capabilities. The latter in particular seems to be highly neglected in the community right now. We then go on to discuss challenges related to evaluations and interpretability (sections 3.3 and 3.4). We say more about what is relevant here in the following section. The last two sections in this category (sections 3.5 and 3.6) are about challenges related to the security of these problems; while jailbreaking is a pretty popular research area (though it is unclear how useful most of the research that is happening here is), data poisoning is an equally alarming security risk yet seems to be highly neglected. There is some work showing that poisoning models trained on public data is possible, and the sleeper agents paper showed that standard finetuning stuff does not de-poison a poisoned model.

Sociotechnical challenges

Finally, AI Safety community tends to fixate on just the technical aspect of the problem but viewing safety from this narrow prism might prove harmful. Indeed, as we say in the paper “An excessively narrow technical focus is itself a critical challenge to the responsible development and deployment of LLMs.” We start this category by discussing challenges related to identifying the right values to encode in LLMs. Among other things, we discuss that there exist various lotteries that will likely impact what values we encode in LLMs (section 4.1.3). We think this is a relatively less well-appreciated point within AI Safety/Alignment community. We then discuss gaps in our understanding of how current and future LLMs could be used maliciously (section 4.2). There is an emerging viewpoint that RLHF ensures that LLMs no longer pose accidental harm; we push back against this claim and assert that LLMs remain lacking in ‘trustworthiness’ in section 4.3. The last two sections (4.4 and 4.5) respectively discuss challenges related to disruptive socioeconomic impacts of LLMs and governance of LLMs. Section 4.5 on governance should be a good intro for anyone interested in doing more governance work.

- ^

I believe some of the enthusiasm in the safety community is coming from a place of background pessimism, see: https://twitter.com/DavidSKrueger/status/1796898838135160856

2 comments

Comments sorted by top scores.

comment by Joe Collman (Joe_Collman) · 2024-06-06T22:20:47.694Z · LW(p) · GW(p)

I think this is great overall.

One area I'd ideally prefer a clearer presentation/framing is "Safety/performance trade-offs".

I agree that it's better than "alignment tax", but I think it shares one of the core downsides:

- If we say "alignment tax" many people will conclude ["we can pay the tax and achieve alignment" and "the alignment tax isn't infinite"].

- If we say "Safety/performance trade-offs" many people will conclude ["we know how to make systems safe, so long as we're willing to sacrifice performance" and "performance sacrifice won't imply any hard limit on capability"]

I'm not claiming that this is logically implied by "Safety/performance trade-offs".

I am claiming it's what most people will imagine by default.

I don't think this is a problem for near-term LLM safety.

I do think it's a problem if this way of thinking gets ingrained in those thinking about governance (most of whom won't be reading the papers that contain all the caveats, details and clarifications).

I don't have a pithy description that captures the same idea without being misleading.

What I'd want to convey is something like "[lower bound on risk] / performance trade-offs".

↑ comment by David Scott Krueger (formerly: capybaralet) (capybaralet) · 2024-06-07T01:39:31.027Z · LW(p) · GW(p)

Really interesting point!

I introduced this term in my slides that included "paperweight" as an example of an "AI system" that maximizes safety.

I sort of still think it's an OK term, but I'm sure I will keep thinking about this going forward and hope we can arrive at an even better term.