OpenAI could help X-risk by wagering itself

post by VojtaKovarik · 2023-04-20T14:51:00.338Z · LW · GW · 16 comments


While brainstorming on “conditional on things going well with AI, how did it happen”, I came up with the following idea. I think it is extremely unrealistic right now.[1] However, it might become relevant later if some key people in top AI companies have a change of heart. As a result, it seems useful to have the idea floating around.

OpenAI[2] is at the center of attention regarding recent AI progress. I expect that, in the view of both the public and politicians, OpenAI’s actions and abilities are representative of the actions and abilities of AI companies in general. As a result, if OpenAI genuinely tried to make their AI safe, and failed in a very spectacular manner, this would cause a sudden and massive shift in public opinion on AI in general.

Now, I don’t expect OpenAI to sacrifice itself by faking incompetence. (Nor would I endorse being deceitful here.) However, there might be some testable claim that is something of a crux for both “AI-optimists” and, say, MIRI. OpenAI could make some very public statements about their ability to control AI. And they could really stick their neck out by making the claims falsifiable and making a possible failure impossible to deny (and memetically fit, etc). This way, if they fail, this would create a Risk Awareness Moment [EA · GW] where enough people become mindful of AI-risk that we can put in place extreme risk-reduction measures that wouldn’t be possible otherwise. Conversely, if they succeed, that would be genuine evidence that such measures are not necessary.

I end with an open problem: Can we find claims, about the ability of AI companies to control their AI, that would simultaneously:

- be testable,

- serve as strong evidence regarding the question of whether extreme measures regarding AI-risk are needed,

- have the potential to create a risk-awareness moment (ie, be salient to decision-makers and public),

- be possible for an AI company to endorse despite satisfying the first three conditions.

- ^

Mostly, I don’t expect this to happen, because any given AI company has nothing to gain from this. However, some individual employees of an AI company might have sufficient uncertainty about AI risk to make this worth it to them. And it might be possible that a sub-group of people within an AI company could make a public commitment such as the one above somewhat unilaterally, in a way that wouldn’t allow the company to back out gracefully.

- ^

I will talk about OpenAI for concreteness, but this applies equally well to some of the other major companies (Facebook, Microsoft, Google; with “your (grand)parents know the name” being a good heuristic).

16 comments


comment by 1a3orn · 2023-04-20T17:29:04.174Z · LW(p) · GW(p)

This works without OpenAI's cooperation! No need for a change of heart.

Just the people concerned about AI x-risk could make surprising, falsifiable predictions about the future or about the architecture of future intelligences, really sticking their necks out, apart from "we're all going to die." Then we'd know that the theories on which such statements of doom are based have predictive value and content.

No need for OpenAI to do anything -- a crux with OpenAI would be nice, but we can see that theories have predictive value even without such a crux.

(Unfortunately, efforts [? · GW] in this direction seem to me to have largely failed. I am deeply pessimistic about the epistemics of a lot of AI alignment for this reason -- if you read an (excellent) essay like this and think it's a good description of human rationality and fallibility, I think the lack of predictions is a very bad sign.)

Replies from: daniel-kokotajlo, capybaralet, M. Y. Zuo, VojtaKovarik

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-04-21T01:52:35.825Z · LW(p) · GW(p)

You act like we haven't done that already? As far as I can tell the people concerned about AI x-risk have been ahead of the curve when it comes to forecasting AI progress, especially progress towards AGI.

For example, here are some predictions made about a decade ago that lots of people disagreed with at the time, that now seem pretty prescient:

--AGI is coming in our lifetimes and (some people were saying) maybe in about ten to twenty years.

--There probably isn't going to be another AI winter (IIRC Bostrom said this in Superintelligence?)

--It's gonna be agentic, not a mere tool.

--It's gonna be a unified system that generalizes across lots of different tasks, rather than a comprehensive system of different services/modules.

--AI companies and nations will race to build it and will sacrifice safety along the way.

--AGI is a BIG FUCKING DEAL that we all should be thinking about and trying to prepare for

Then there are the Metaculus records etc.

More recently, and more personally, I wrote this scenario-forecast [LW · GW] two years ago & if you know of anything similar written at roughly that time or earlier I'd love to see it.

Finally, and separately: Your epistemology is broken if you look at two groups of people who are making opposing claims, but have not managed to disagree on any short-term predictions so far, and then pick the group you don't like and say "They suck because their claims aren't falsifiable, they don't have predictive values, etc." You could equally have said that about the other group. In this case, the non-doomers. Their statements of nondoom don't have predictive content, are unfalsifiable, etc.

↑ comment by jacob_cannell · 2023-04-21T05:43:40.601Z · LW(p) · GW(p)

Who made these predictions about a decade ago?

The main forecasters who first made specific predictions like that are Moravec and later Kurzweil, who both predicted AGI in our lifetimes (Moravec: 2020s-ish, Kurzweil: 2029-ish) and more or less predicted it would be agentic, unified, and a BIG FUCKING DEAL. Those predictions were first made in the 90's.

Young EY seemed just a tad overexcited about the future and made various poorly calibrated predictions [EA · GW]; perhaps this is why he seemed to go to some lengths to avoid any specific predictions as an adult. (And even recently EY still seems to have unjustified nanotech excitement.)

Replies from: daniel-kokotajlo

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-04-21T13:03:15.640Z · LW(p) · GW(p)

Yud, Bostrom, myself, Gwern, ... it was pretty much the standard view on LW?

Moravec and Kurzweil definitely deserve credit for their forecasts, even more than Yudkowsky I'd say.

↑ comment by jacob_cannell · 2023-04-21T16:56:58.680Z · LW(p) · GW(p)

Your first post seems to be only 4 years ago? (if you expressed these views a decade ago in comments I don't see an easy way to find those currently) I was posting about short timelines 8 years ago, but from what I recall a decade ago timelines were longer, DL was not recognized as the path to AGI, etc - but yes I guess most of the points you mention were covered in Bostrom's SI 9 years ago and were similar to MIRI/LW views around then.

Nonetheless MIRI/LW did make implicit predictions and bets on the path to AGI that turned out to be mostly wrong [LW(p) · GW(p)], and this does suggest poor epistemics/models [LW · GW] around AGI and alignment.

If you strongly hold a particular theory about how intelligence works and it turns out to be mostly wrong, this will necessarily undercut many dependent arguments/beliefs - in this case those concerning alignment risks and strategies.

Replies from: daniel-kokotajlo

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-04-21T20:47:51.318Z · LW(p) · GW(p)

Ah, I shouldn't have said "myself," sorry. Got carried away there. :( I first got involved in all this stuff about ten years ago when I read LW, Bostrom, etc. and found myself convinced. I said so to people around me, but I didn't post about it online.

I disagree that the bets turned out to be mostly wrong, and that this suggests poor epistemics (though it does suggest poor models of AGI, and to a lesser extent, models of alignment). Thanks for the link though, I'll ponder it for a bit.

↑ comment by 1a3orn · 2023-04-21T15:06:04.421Z · LW(p) · GW(p)

The claim is not that all AI alignment has bad epistemics -- I haven't read all of it, and I know some of it at least makes empirical hypotheses, and I like and enjoy the parts which do -- the claim is that a lot of it does. Including, alarmingly, the genealogically first, most influential, and most doomy.

The central example is of course MIRI, which afaict maintains that there is a > 99% chance of doom because of deep, hard-to-communicate insight into the nature of intelligence, agency, and optimization, while refraining from making any non-standard predictions about intelligence in the world prior to doom. If your causal model based on understanding intelligence gives a tight 99% probability of doom, then it should also give you some tight predictions before then, and this is an absolutely immense red flag if it does not. Point me to some predictions if I'm wrong.

(It's an immense red flag even if Yann LeCun or some non-doomers also have sucky epistemics, make non-falsifiable claims, etc.)

I agree with Jacob that the early broad correct claims about the future seem non-specific to MIRI / early AI alignment concerns -- I think it probably makes more sense to defer to Kurzweil than Yud off those (haven't read K a ton). And many early MIRI-specific claims seem just false -- I just reread IEM and it leans heavily on the view, for instance, that clever algorithms rather than compute are the path to intelligence, which looks pretty wrong from the current view.

Replies from: daniel-kokotajlo

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-04-21T15:23:51.654Z · LW(p) · GW(p)

I'm happy to criticize Yudkowsky, I think there's some truth to various of your criticisms in fact. I do think you are being way too uncharitable to him though. For example, 'clever algorithms' are in fact responsible for approximately half of the progress towards AGI in recent decades, IIRC, and I expect them to continue to play a role, and I don't think IEM was making any claims along the lines of 'compute is irrelevant' or 'compute is fairly unimportant.'

But in general, on average, the epistemics of the doomers are better than the epistemics of the non-doomers. I claim. And moreover the epistemics of Yudkowsky are better than the epistemics of most non-doomers.

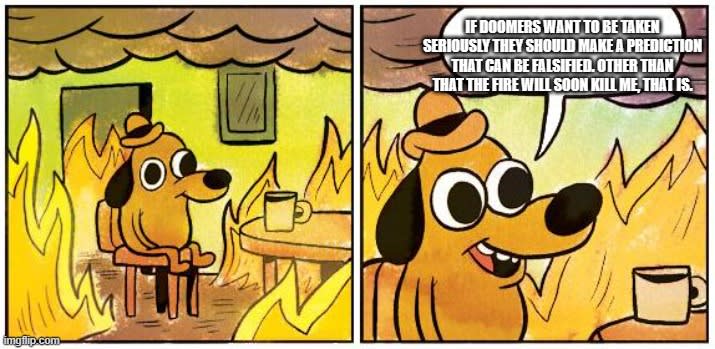

I think you are confused about how to use falsifiability. Here, perhaps this meme is a succinct way explain what I mean:

↑ comment by 1a3orn · 2023-04-21T18:02:47.010Z · LW(p) · GW(p)

So if someone prophesied doom by asteroid, we'd be unsatisfied with someone saying "there will be a giant explosion from an asteroid." We'd probably want them to be able to predict locations in the sky where you could see the asteroid, before we shifted society to live in underground bunkers to protect us from the blast. Why do we expect them to be able to predict the doom, but nothing other than doom? Why would their knowledge extend to one thing, but not the other?

Similarly, if someone prophesied doom from a supernatural occurrence, we'd also want to see some miracles, proofs, signs -- or at least other supernatural predictions -- before we covered ourselves in sackcloth and ashes and started flagellating ourselves to protect ourselves from God's wrath. Why would we expect them to be able to predict doom, but nothing else? Not adhering to this standard historically would result in you selling all your possessions quite unreasonably.

I could go on for quite some time. I think this is a super reasonable standard. I think not adhering to it, historically, makes you fall for things that are straightforward cults. It's basically a prior against theories of doom that, by coincidence, get to make no predictions prior to doom. Yeah, sure.

So, yeah, I've seen the above meme a lot on Twitter -- I think it's evidence that the epistemics of many doomers are just bad, inasmuch as it is shared and liked and retweeted. You can construe it either as (1) mocking people for not seeing something which is just obvious, or as (2) just asserting that you don't need to make any other predictions, doom is plenty. (2) is just a bad epistemic standard, as history suggests.

And mocking people is, well, for sure a move you can do.

Replies from: daniel-kokotajlo, VojtaKovarik

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-04-21T21:07:07.999Z · LW(p) · GW(p)

I analogized AI doom to saying-the-fire-will-kill-the-dog; you analogize AI doom to saying-supernatural-shit-will-happen. Which analogy is more apt? That's the crux.

OK, let's zoom in on the fire analogy from the meme. The point I was making is that the doomers have been making plenty of falsifiable predictions which have in fact been coming true -- e.g. that the fire will grow and grow, that the room will get hotter and brighter, and eventually the dog will burn to death. Falsifiability is relative. The fire-doomers have been making plenty of falsifiable predictions relative to interlocutors who were saying that the fire would disappear or diminish, but not making any falsifiable predictions relative to interlocutors who were saying that the fire would burn brighter and hotter but then somehow fail to kill the dog for some reason or other.

Similarly, the AGI doomers have been making plenty of falsifiable predictions relative to most people, e.g. people who didn't think AGI was possible in our lifetimes, or people who thought it would be tool-like rather than agent-like, or modular rather than unified. But their predictions have been unfalsifiable relative to certain non-doomers who also predicted unified agentic AGI that for one reason or another wouldn't cause doom.

OK. Next point: Falsifiability is symmetric. If my predictions are unfalsifiable relative to yours, yours are also unfalsifiable relative to me. Fundamentally what's going on is simply that there are two groups of people whose expectations/predictions are the same (at least for now). This sort of thing happens all the time, and in fact is inescapable due to underdetermination of theory by data.

Next point: We have more resources to draw from besides falsifiability. We can evaluate arguments, we can build models and examine their assumptions, we can compare the simplicity and elegance of different models... All the usual tools of science for adjudicating disputes between different views that are both consistent with what we've seen so far.

Final point: AGI doomers have been unusually good about trying to stick their necks out and find ways that their views differ from the views of others. AGI doomers have been making models and arguments, and putting them in writing, and letting others attack premises and cast doubt on assumptions and poke holes in logic and cheer when a prediction turns out false.... more so than AGI non-doomers. Imagine what it would look like if the shoe was on the other foot: there'd be an "AGI non-doom" camp that would be making big multi-premise arguments about why AGI will probably go well by default, and making various predictions, and then a dispersed, disunified gaggle of doomer skeptics poking holes in the argument and saying "we don't have enough evidence for such a crazy view" and "show me a falsifiable claim" and cheering when some prediction turns out false.

Putting it together... IMO you are using "y'all aren't making falsifiable predictions" as a rhetorical cudgel with which to beat your opponents, when actually they would be equally justified in using it against you. It's a symmetric weapon, it isn't actually a component of good epistemology (at least not when used the way you are using it.)

↑ comment by VojtaKovarik · 2023-04-22T03:00:53.759Z · LW(p) · GW(p)

(0) What I hint at in (1-3) seems like a very standard thing, for which there should be a standard reference post. Does anybody know it? If not, somebody should write one.

(1) Pascal's wagers in general: Am I interpreting you correctly as saying that AI risk, doom by asteroid, belief in Christianity, doom by supernatural occurrence, etc., all belong to the same class of "Pascal's wager-like" decision problems?

If yes, then hurray, I definitely agree. However, I believe this is where you should bite the bullet and treat every Pascal's wager seriously, and only dismiss it once you understand it somewhat. In particular, I claim that the heuristic "somebody claims X has extremely high or low payoffs ==> dismiss X until we have a rock-solid proof" is not valid. (Curious whether you disagree.) ((And to preempt the likely objection: To me, this position is perfectly compatible with initially dismissing crackpots and God's wrath without engaging with, or even listening to, the arguments; see below.))

Why do I believe this?

First, I don't think we encounter so many different Pascal wagers that considering each one at least briefly will paralyze us. This is made easier because many of them are somewhat similar to each other (eg "religions", "conspiracy theories", "crackpot designs for perpetuum mobile", ...), which allows you to only think through a single representative of each class[1], and then use heuristics like "if it pattern-matches on a conspiracy theory, tentatively ignore it outright". (Keyword "tentatively". It seems fair to initially dismiss the covid lab-leak hypothesis as a conspiracy. But if you later hear that there was a bio-lab in Wuhan, you should reevaluate. Similarly, I dismiss all religions outright, but I would spend some attention on one if many people I trust suddenly started saying that they saw miracles.)

Second, many Pascal wagers are non-actionable. If you learn about meteors or supervolcanoes in the 18th century, there is not much you can do. Similarly, I assign a non-zero probability to the simulation hypothesis. And perhaps even a non-zero probability of somebody running a simulation of a world that actually works on christianity, just for the lolz of it.[2] But it is equally possible that this guy runs it on anti-christianity (saints going to hell), just for the lolz of it. And I don't know which is more probable, and I have no way of resolving this, and the payoffs are not literally infinite (that guy would have a finite compute budget). So there is no point in me acting on this either.

Third, let me comment a bit on the meteor example [I don't know the historical details, so I will be making some of this up]: Sometime in the previous century, we learned about an asteroid hitting earth in the past and killing the dinosaurs. This seems like "there might be a giant explosion from an asteroid". We did not force everybody to live underground, not because we didn't take the risk seriously, but because doing so would have been stupid (we probably didn't have an asteroid-surviving technology anyway, and the best way to get it would have been to stay on the surface). However, we did spend lots of money on the asteroid detection program to understand the risk better. As a result, we now understand this particular "Pascal's wager" better, and it seems that in this particular case, the risk is small. That is, the risk is small enough that it is more important to focus on other things. However, I believe that we should still spend some effort on preventing asteroid risk (actually, I guess we do do that). And if we had no more-pressing issues (X-risk and otherwise), I totally would invest some resources into asteroid-explosion-survival measures.

To summarize the meteor example: We did not dismiss the risk out of hand. We investigated it seriously, and then we only dismissed it once we understood it. And it never made sense to apply extreme measures, because they wouldn't have been effective even if the risk was real. (Though I am not sure whether that was on purpose or because we wouldn't have been able to apply extreme measures even if they were appropriate.) ((Uhm, please please please, I hope nobody starts making claims about low-level implications of the asteroid risk-analysis to AI risk-analysis. The low-level details of the two cases have nothing in common, so there are no implications to draw.))

(2) For "stop AI progress" to be rational, it is enough to be uncertain.

- Sure, if you claim something like "90% that AI is going to kill us" or "we are totally sure that AI is risky", then the burden of proof is on you.

- But most people who advocate treating AI as risky, and that we should stop/slow/take extreme measures, do not claim anything like "90% that AI is going to kill us". Most often, you see claims like (i) "at least 5% that AI could kill us", or (ii) "we really don't know whether AI might kill us, one way or the other".

- And if you claim non-(i) or non-(ii), the burden of proof totally is on you. And I think that literally nobody in the world has a good enough model of AI & the strategic landscape to justify non-(i) or non-(ii). (Definitely nobody has published a gears-level model like that.)

- Also, I think that to make Pascal's wager on AI rational, it is enough to believe (either (i) or (ii)) together with "we have no quick way of resolving the uncertainty".

(3) AI risk as Pascal's wager:

I claim that it is rational to treat AI as a Pascal's wager, but that in this particular case, the details come out very differently (primarily because we understand AI so little).

To give an illustration (there will be many nonsense bits in the details; the goal is to give the general vibe):

- If you know literally nothing about AI at all, it is fair to initially pattern-match it to "yeah yeah, random people talking about the end of the world, that's bullshit".

- However, once you learn that AI stands for "artificial intelligence" you really should do a double-take: "Hey, intelligence is this thing that maybe makes us, and not other species, dominant on this planet. Also, humans are intelligent and they do horrible things to each other. So it is at least plausible that this could destroy us." (That's without knowing anything at all about how AI should work.)

- At that point, you should consider whether this AI thing might be achievable. And if it is, say, year 1900, then you should go "I have literally no clue, so I should act as if the chance is non-negligible". And then (much) later people invent computers and you hear about the substrate-independence thesis. You don't understand any of this, but it sounds plausible. And then you should treat AI as a real possibility, until you can get a gears-level understanding of the details. (Which we still don't have!)

- Also, you try to understand whether AI will be dangerous or not, and you will mostly come to the conclusion that you have no clue whatsoever. (And we still don't even now. And on the current timeline, we probably won't know until we get superintelligence.)

- And from that point onward, you should be like "holy ****, this AI thing could be really dangerous! We should never build anything like that unless we understand exactly what we are doing!".

- This does not mean you will not build a whole bunch of AI. You will build calculators, and you will build GOFAI, and tabular model-based RL.[3] And then somebody comes up with the idea of deep learning, and you are like "holy ****, we have no clue how this works!". And you put a moratorium on it, to quickly figure out what to do. And later you probably end up controlling it pretty tightly, because you don't know how it scales. And you only do the research on DL slowly, such that your interpretability techniques and other theory never fall behind.

Obviously, we don't live in a world capable of something like the above. But it remains a fact that we now have strong evidence that (1) AGI probably is possible and (2) we still have no clue whether it is going to be harmful or not, despite trying. And if we could do it, the rational thing to do would be to immediately stop most AI work until we figure out (2).

- ^

When I was younger, I did think a lot about christianity and I have a detailed model that causes me to assign literally 0 probability to it being correct. Similarly, I did engage with some conspiracy theories before dismissing them. I believe that justifies me in tentatively dismissing, right away, anything that pattern-matches to religion and conspiracy theories. However, if I had never heard about any religion, then upon encountering one for the first time, I would totally not be justified in dismissing it outright. And let me bite the bullet here: I would say that if you were a random guy in ancient Egypt, without particularly good models of the world or ways to improve them, the rational thing to do could have been to act as if the Egyptian gods were real. [low confidence though, I haven't thought about this before]

- ^

Not to be confused with the hypothesis that the universe actually works according to any of the religions considered on earth without assuming the simulation hypothesis. To that, I assign literally 0 probability.

- ^

But for all of those things, you briefly consider whether those things could be dangerous and conclude they aren't. (Separately from AI risks: In an ideal world, you would also make some experiments to see how these things are affecting the users. EG, to see whether kids' brains deteriorate by relying on calculators. In practice, our science methods sucked back then, so you don't learn anything. But hey, talking about how a better world would work.)

↑ comment by David Scott Krueger (formerly: capybaralet) (capybaralet) · 2023-04-20T23:32:29.510Z · LW(p) · GW(p)

Christiano and Yudkowsky both agree AI is an x-risk -- a prediction that would distinguish their models does not do much to help us resolve whether or not AI is an x-risk.

Replies from: VojtaKovarik

↑ comment by VojtaKovarik · 2023-04-21T22:45:14.591Z · LW(p) · GW(p)

I agree with what you wrote, but I am not sure I understand what you meant to imply by it.

My guess at the interpretation is: (1) 1a3orn's comment cites the Yudkowsky-Christiano discussions as evidence that there has been effort to "find testable double-cruxes on whether AI is a risk or not", and that effort mostly failed, therefore he claims that attempting to find a "testable MIRI-OpenAI double crux" is also mostly futile. (2) However, because Christiano and Yudkowsky agree on x-risk, the inference in 1a3orn's comment is flawed.

Do I understand that correctly? (If so, I definitely agree.)

↑ comment by M. Y. Zuo · 2023-04-20T20:58:20.391Z · LW(p) · GW(p)

I strongly upvoted because this is a solid point.

Without credibly costly, irreversible signals, most of the credibility that would have accrued to many of the efforts in the last decade simply never did, since folks weren't able to tell what's what.

↑ comment by VojtaKovarik · 2023-04-21T15:37:40.169Z · LW(p) · GW(p)

> This works without OpenAI's cooperation! No need for a change of heart.

Based on this sentence, I believe that you misunderstood the point of the post. (So all of the follow-up discussion seems to be off-topic to me.)

Therefore, an attempt to clarify: The primary goal of the post is to have a test that, if it turns out that the "AI risk is a big deal" hypothesis is correct, we get a "risk awareness moment [EA · GW]". (In this case, a single moment where a large part of the public and decision-makers simultaneously realise that AI risk likely is a big deal).

As a result, this doesn't really work without OpenAI's cooperation (or without it being, eg, pressured into this by government or public). I suggest that discussing that

comment by mic (michael-chen) · 2023-05-08T06:44:15.714Z · LW(p) · GW(p)

To some extent, Bing Chat is already an example of this. During the announcement, Microsoft promised that Bing would be using an advanced technique to guard it against user attempts to have it say bad things; in reality, it was incredibly easy to prompt it to express an intent to harm you, at least during the early days of its release. This led to news headlines such as "Bing's AI Is Threatening Users. That's No Laughing Matter."