So, geez there's a lot of AI content these days

post by Raemon · 2022-10-06T21:32:20.833Z · LW · GW · 140 comments

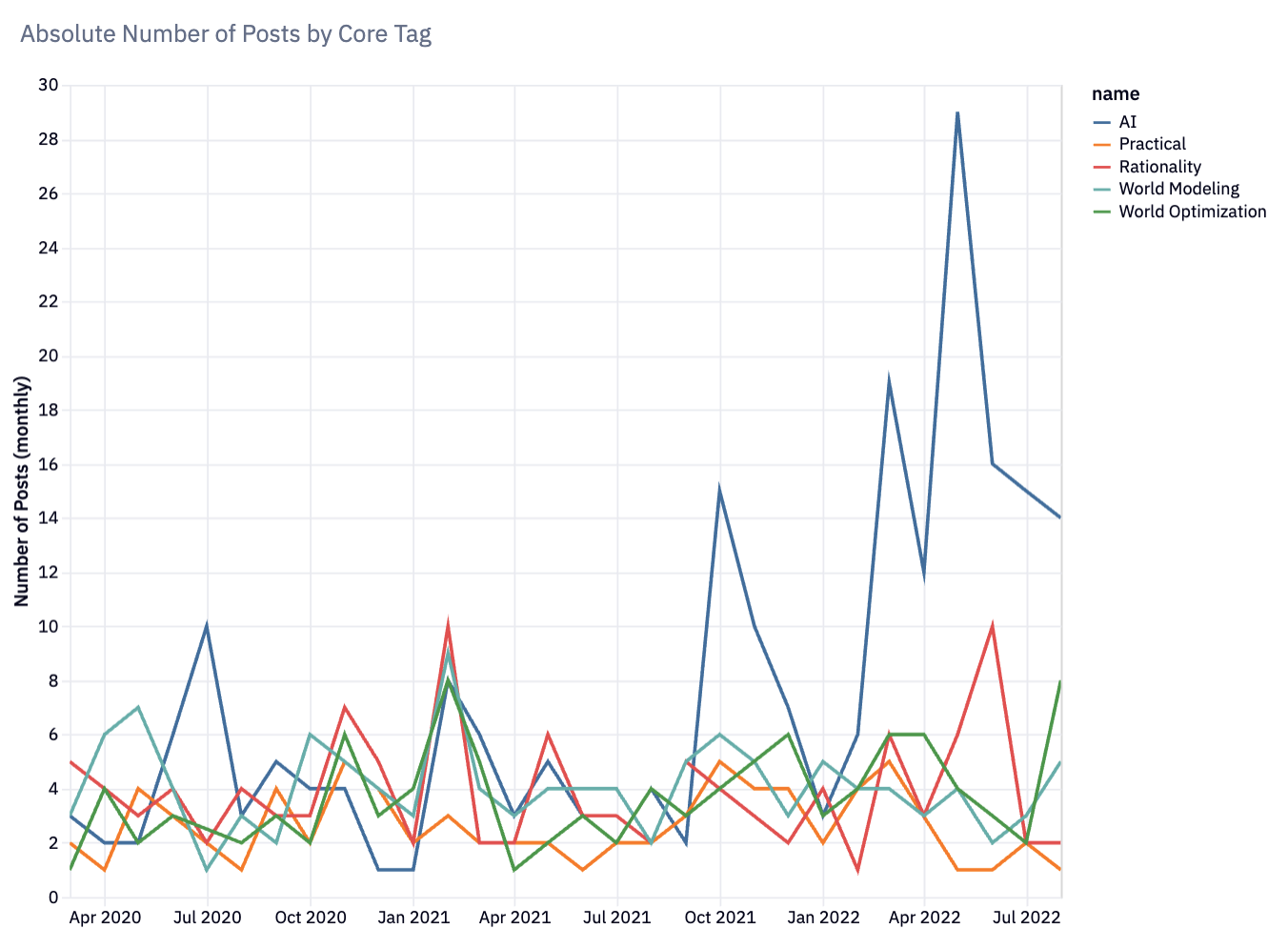

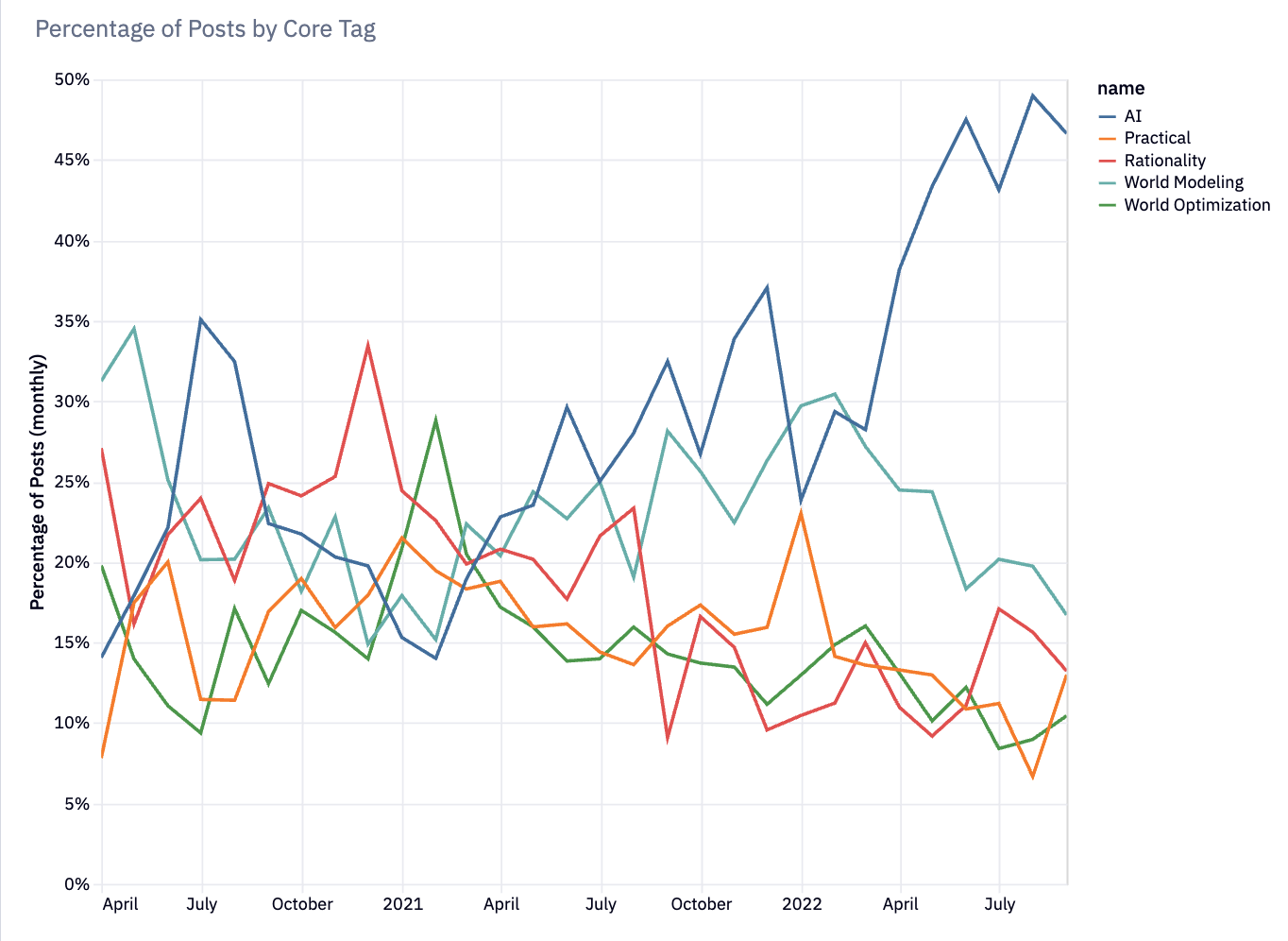

Since April [LW · GW] this year, there's been huge growth in the number of posts about AI, while posts about rationality [? · GW], world modeling [? · GW], etc. have remained constant. The result is that much of the time, the LW frontpage is almost entirely AI content.

Looking at the actual numbers, we can see that during 2021, no core LessWrong tag[1] represented more than 30% of LessWrong posts. In 2022, especially starting around April, AI has started massively dominating the LW posts.

Here are the total posts for each core tag each month for the past couple of years. In April 2022, most tags' popularity remains constant, but AI-tagged posts spike dramatically:

Even people pretty involved with AI alignment research have written to say "um, something about this feels kinda bad to me."

I'm curious to hear what various LW users think about the situation. Meanwhile, here are my own thoughts.

Is this bad?

Maybe this is fine.

My sense of what happened was that in April, Eliezer posted MIRI announces new "Death With Dignity" strategy [LW · GW], and a little while later AGI Ruin: A List of Lethalities [LW · GW]. At the same time, PaLM and DALL-E 2 came out. My impression is that this threw a brick through the overton window and got a lot of people going "holy christ AGI ruin is real and scary". Everyone started thinking a lot about it, and writing up their thoughts as they oriented.

Around the same time, a lot of alignment research recruitment projects (such as SERI MATS [? · GW] or Refine [LW · GW]) started paying dividends, resulting in a new wave of people working fulltime on AGI safety.

Maybe it's just fine to have a ton of people working on the most important problem in the world?

Maybe. But it felt worrisome to Ruby and me. Some of those worries felt easier to articulate, others harder. Two major sources of concern:

There's some kind of illegible good thing that happens when you have a scene exploring a lot of different topics. It's historically been the case that LessWrong was a (relatively) diverse group of thinkers thinking about a (relatively) diverse group of things. If people show up and just see the All AI All the Time, people who might have other things to contribute may bounce off. We probably wouldn't lose this immediately.

AI needs Rationality, in particular. Maybe AI is the only thing that matters. But, the whole reason I think we have a comparative advantage at AI Alignment is our culture of rationality. A lot of AI discourse on the internet is really confused. There's such an inferential gulf [LW · GW] about what sort of questions are even worth asking. Many AI topics deal with gnarly philosophical problems, while mainstream academia is still debating whether the world is naturalistic. Some AI topics require thinking clearly about political questions that tend to make people go funny in the head [LW · GW].

Rationality is for problems we don't know how to solve [LW · GW], and AI is still a domain we don't collectively know how to solve.

Not everyone agrees that rationality is key, here (I know one prominent AI researcher who disagreed). But it's my current epistemic state.

Whispering "Rationality" in your ear

Paul Graham says that different cities whisper different ambitions in your ear. New York whispers "be rich". Silicon Valley whispers "be powerful." Berkeley whispers "live well." Boston whispers "be educated."

It seems important for LessWrong to whisper "be rational" in your ear, and to give you lots of reading, exercises, and support to help you make it so.

As a sort of "emergency injection of rationality", we asked Duncan to convert the CFAR handbook from a PDF into a more polished sequence [? · GW], and post it over the course of a month. But commissioning individual posts is fairly expensive, and over the past couple months the LessWrong team's focus has been to find ways to whisper "rationality" that don't rely on what people are currently posting.

Some actions we've done:

Improve Rationality Onboarding Materials

Historically, if you wanted to get up to speed on the LessWrong background reading, you had to click over to the /library page and start reading Rationality: A-Z [? · GW]. It required multiple clicks to even start reading, and there was no easy way to browse the entire collection and see what posts you had missed.

Meanwhile Rationality A-Z is just super long. I think anyone who's a longterm member of LessWrong or the alignment community should read the whole thing sooner or later – it covers a lot of different subtle errors and philosophical confusions that are likely to come up (both in AI alignment and in other difficult challenges). But, it's a pretty big ask for newcomers to read all ~400 posts. It seemed useful to have a "getting started" collection that people could read through in a weekend, to get the basics of the site culture.

This led us to redesign the library collection page (making it easier to browse all posts in a collection and see which ones you've already read), and to create the new Sequences Highlights collection [? · GW].

Sequence Spotlights

There are a lot of other sequences that the LessWrong community has generated over the years, which seemed good to expose people to. We've had a "Curated Sequences" section of the library but never quite figured out a good way to present it on the frontpage.

We gave curated sequences a try in 2017 but kept forgetting to rotate them. Now we've finally built an automated rotation system, and are building up a large repertoire of the best LW sequences which the site will automatically rotate through.

More focused recommendations

We're currently filtering the randomized "from the archives" posts to show Rationality [? · GW] and World Modeling [? · GW] posts. I'm not sure whether this makes sense as a longterm solution, but it still seems useful as a counterbalancing force for the deluge of AI content, and for helping users orient to the underlying culture that generated that AI content.

Rewritten About Page

We rewrote the About page to simplify it, clarify what LessWrong is about, and contextualize all the AI content.

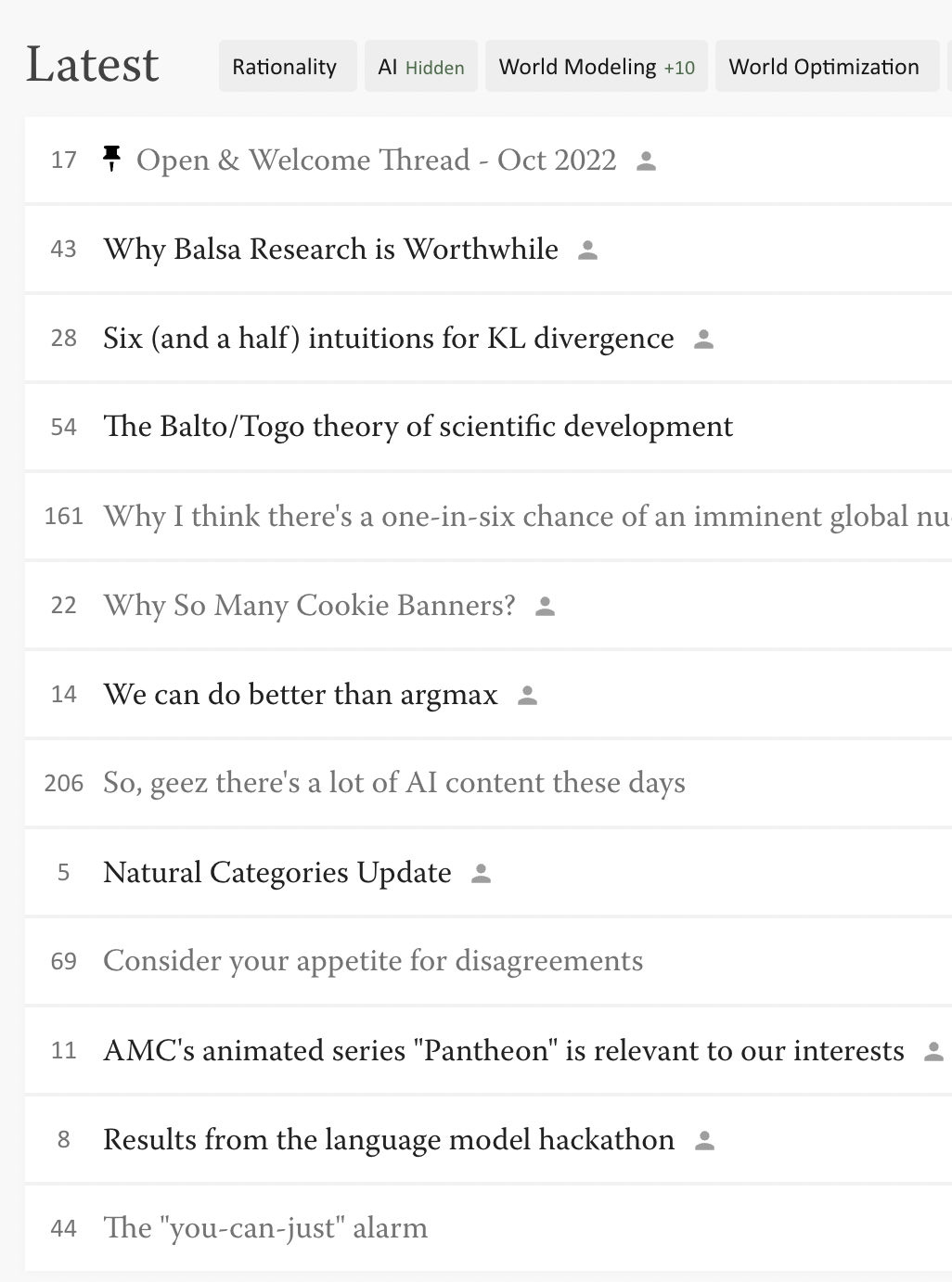

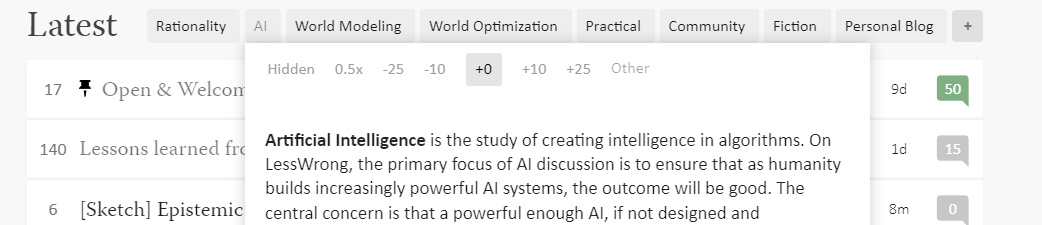

[Upcoming] Update Latest Tag-Filters

Ruby is planning to update the default Latest Posts tag-filters to either show more rationality and world-modeling content by default (i.e. rationality/world-modeling posts get treated as having higher karma, and thus get more screentime via our sorting algorithm), or maybe just directly deemphasize AI content.

We're also going to try making the filters more prominent and easier to understand, so people can adjust the content they receive.

Can't we just move it to Alignment Forum?

When I've brought this up, a few people asked why we don't just put all the AI content on the Alignment Forum. This is a fairly obvious question, but:

a) It'd be a pretty big departure from what the Alignment Forum is currently used for.

b) I don't think it really changes the fundamental issue of "AI is what lots of people are currently thinking about on LessWrong."

The Alignment Forum's current job is not to be a comprehensive list of all AI content; it's meant to be especially good content with a high signal/noise ratio. All Alignment Forum posts are also LessWrong posts, and LessWrong is meant to be the place where most discussion happens on them. The AF versions of posts are primarily meant to be a thing you can link to professionally without having to explain the context of a lot of weird, not-obviously-related topics that show up on LessWrong.

We created the Alignment Forum ~5 years ago, and it's plausible the world needs a new tool now. BUT, it still feels like a weird solution to try and move the AI discussion off of LessWrong. AI is one of the central topics that motivate a lot of other LessWrong interests. LessWrong is about the art of rationality, but one of the important lenses here is "how would you build a mind that was optimally rational, from scratch?".

Content I'd like to see more of

It's not obvious I want to nudge anyone away from AI alignment work. It does sure seem like this is the most urgent and important problem in the world. I also don't know that I want the site flooded with mediocre rationality content.

World Modeling / Optimization

Especially for newcomers who're considering posting more, I'd be interested in seeing more fact posts [LW · GW], which explore a topic curiously, and dig into the details of how one facet of the world works. Some good examples include Scott Alexander's "Much More Than You Wanted To Know [? · GW]" type posts, or Martin Sustrik's exploration of the Swiss Political System [LW · GW].

I also really like to see subject matter experts write up stuff about their area of expertise that people might not know about (especially if they have reason to think this is relevant to LW readers). I liked this writeup about container logistics [LW · GW], which was relevant to discussion [LW · GW] of whether we could have quick wins in civilizational adequacy that could snowball into something bigger.

Parts of the world that might be important, but which aren't currently on the radar of the rationalsphere, are also good topics to write about.

Rationality Content

Rationality content is a bit weird because... the content I'm most interested in is from people who've done a lot of serious thinking that's resulted in serious accomplishment. But, the people in that reference class in the LessWrong community are increasingly focused on AI.

I worry about naively incentivizing more "rationality content" – a lot of rationality content is ungrounded and insight-porn-ish.

But, I'm interested in accomplished thinkers trying to distill out their thinking process (see: many John [LW · GW] Wentworth [LW · GW] posts [LW · GW], and Mark Xu [LW · GW] and Paul Christiano's [LW · GW] posts on their research process). I'm interested in people like Logan Strohl who persistently [? · GW] explore [LW · GW] the micro-motions of how cognition works, while keeping it very grounded, and write up a trail for others to follow [LW · GW].

I think in some sense The Sequences are out of date. They were written as a reaction to a set of mistakes people were making 20 years ago. Some people are still making those mistakes, but ideas like probabilistic reasoning have now made it more into the groundwater, and the particular examples that resonate today are different, and I suspect we're making newer more exciting mistakes. I'd like to see people attempting to build a framework of rationality that feels like a more up-to-date foundation.

What are your thoughts?

I'm interested in hearing people's takes on this. I'm particularly interested in how different groups of people feel about it. What does the wave of AI content feel like to established LessWrong users? To new users just showing up? To AI alignment researchers?

Does this feel like a problem? Does the whole worry feel overblown? If not, I'm interested in people articulating exactly what feels likely to go wrong.

[1] Core Tags are the most common LessWrong topics: Rationality, AI, World Modeling, World Optimization, Community and Practical.

140 comments

comment by jsteinhardt · 2022-10-07T02:51:00.112Z · LW(p) · GW(p)

Here is my take: since there's so much AI content, it's not really feasible to read all of it, so in practice I read almost none of it (and consequently visit LW less frequently).

The main issue I run into is that for most posts, on a brief skim it seems like basically a thing I have thought about before. Unlike academic papers, most LW posts do not cite previous related work nor explain how what they are talking about relates to this past work. As a result, if I start to skim a post and I think it's talking about something I've seen before, I have no easy way of telling if they're (1) aware of this fact and have something new to say, (2) aware of this fact but trying to provide a better exposition, or (3) unaware of this fact and reinventing the wheel. Since I can't tell, I normally just bounce off.

I think a solution could be to have a stronger norm that posts about AI should say, and cite, what they are building on and how it relates / what is new. This would decrease the amount of content while improving its quality, and also make it easier to choose what to read. I view this as a win-win-win.

Replies from: Viliam, lahwran, Ruby

↑ comment by Viliam · 2022-10-08T21:23:52.324Z · LW(p) · GW(p)

and consequently visit LW less frequently

Tangentially, "visiting LW less frequently" is not necessarily a bad thing. We are not in the business of selling ads; we do not need to maximize the time users spend here. Perhaps it would be better if people spent less time online (including on LW) and more time doing whatever meaningful things they might do otherwise.

But I agree that even assuming this, "the front page is full of things I do not care about" is a bad way to achieve it.

↑ comment by the gears to ascension (lahwran) · 2022-10-07T02:57:37.676Z · LW(p) · GW(p)

tools for citation to the existing corpus of lesswrong posts and to off-site scientific papers would be amazing; eg, rolling search for related academic papers as you type your comment via the semanticscholar api, combined with search over lesswrong for all proper nouns in your comment. or something. I have a lot of stuff I want to say that I expect and intend is mostly reference to citations, but formatting the citations for use on lesswrong is a chore, and I suspect that most folks here don't skim as many papers as I do. (that said, folks like yourself could probably give people like me lessons on how to read papers.)

also very cool would be tools for linting emotional tone. I remember running across a user study that used a large language model to encourage less toxic review comments; I believe it was in fact an intervention study to see how usable a system was. looking for that now...

Replies from: michal-basiura

↑ comment by Sinityy (michal-basiura) · 2023-02-02T19:27:58.677Z · LW(p) · GW(p)

Maybe GPT-3 could be used to find LW content related to the new post, using something like this: https://gpt-index.readthedocs.io

Unfortunately, I didn't get around to doing anything with it yet. But it seems useful: https://twitter.com/s_jobs6/status/1619063620104761344

↑ comment by Ruby · 2022-10-12T00:46:43.555Z · LW(p) · GW(p)

Over the years I've thought about a "LessWrong/Alignment" journal article format the way regular papers have Abstract-Intro-Methods-Results-Discussion. Something like that, but tailored to our needs, maybe also bringing in OpenPhil-style reasoning transparency (but doing a better job of communicating models).

Such a format could possibly mandate what you're wanting here.

I think it's tricky. You have to believe any such format actually makes posts better rather than constraining them, and that it's worth the effort of writers to conform.

It is something I'd like to experiment with though.

comment by swarriner · 2022-10-07T21:13:08.173Z · LW(p) · GW(p)

AI discourse triggers severe anxiety in me, and as a non-technical person in a rural area I don't feel I have anything to offer the field. I personally went so far as to fully hide the AI tag from my front page and frankly I've been on the threshold of blocking the site altogether for the amount of content that still gets through by passing reference and untagged posts. I like most non-AI content on the site, been checking regularly since the big LW2.0 launch, and I would consider it a loss of good reading material to stop browsing, but since DWD I'm taking my fate in my hands every time I browse here.

I don't know how many readers out there are like me, but I think it at least warrants consideration that the AI doomtide acts as a barrier to entry for readers who would benefit from rationality content but can't stomach the volume and tone of alignment discourse.

Replies from: mingyuan, alexflint

↑ comment by mingyuan · 2022-10-08T18:46:02.137Z · LW(p) · GW(p)

Yeah this is a point that I failed to make in my own comment — it's not just that I'm not interested in AIS content / not technically up to speed, it's that seeing it is often actively extremely upsetting

Replies from: lahwran

↑ comment by the gears to ascension (lahwran) · 2022-10-08T21:42:09.303Z · LW(p) · GW(p)

I'm sorry to hear that! Do you have any thoughts on ways to rephrase the ai content to make it less upsetting? would it help to have news that emphasizes successes, so that you have frequent context that it's going relatively alright and is picking up steam? in general, my view is that yudkowskian paranoia about ai safety is detrimental in large part because it's objectively wrong, and while it's great for him to be freaked out about it, his worried view shouldn't be frightening us; I'm quite excited for superintelligence and I just want us to hurry up and get the safe version working so we can solve a bunch of problems. IMO you should feel able to feel comfy that AI now is pretty much nothing but super cool.

[edit to clarify: this is not to say the problem isn't hard; it's that I really do think the capabilities folks know that safety and capabilities were always the same engineering task]

↑ comment by Alex Flint (alexflint) · 2022-10-14T13:24:01.113Z · LW(p) · GW(p)

Thank you for writing this comment. Just so you know, probably you can contribute to the field, if that is your desire. I would start by joining a community where you will be happy and where people are working seriously on the problem.

Replies from: swarriner

↑ comment by swarriner · 2022-10-14T14:42:48.398Z · LW(p) · GW(p)

I feel like you mean this in kindness, but to me it reads as "You could risk your family's livelihood relocating and/or trying to get recruited to work remotely so that you can be anxious all the time! It might help on the margins ¯\_(ツ)_/¯ "

Replies from: alexflint

↑ comment by Alex Flint (alexflint) · 2022-10-14T15:03:02.462Z · LW(p) · GW(p)

Why would you risk your family's livelihood? That doesn't seem like a good idea. And why would you go somewhere that you'd be anxious all the time?

Replies from: swarriner

↑ comment by swarriner · 2022-10-14T15:09:37.823Z · LW(p) · GW(p)

Yes, that's my point. I'm not aware of a path to meaningful contribution to the field that doesn't involve either doing research or doing support work for a research group. Neither is accessible to me without risking the aforementioned effects.

Replies from: alexflint, sharmake-farah

↑ comment by Alex Flint (alexflint) · 2022-10-14T15:59:27.691Z · LW(p) · GW(p)

Yeah right. It does seem like work in alignment at the moment is largely about research, and so a lot of the options come down to doing or supporting research.

I would just note that there is this relatively huge amount of funding in the space at the moment -- OpenPhil and FTX both open to injecting huge amounts of funding and largely not having enough places to put it. It's not that it's easy to get funded -- I wouldn't say it's easy at all -- but it does really seem like the basic conditions in the space are such that one would expect to find a lot of opportunities to be funded to do good work.

Replies from: coryfklein

↑ comment by coryfklein · 2022-10-14T22:18:24.120Z · LW(p) · GW(p)

one would expect to find a lot of opportunities to be funded to do good work.

This reader is a software engineer with over a decade of experience. I'm paid handsomely and live in a remote rural area. I am married with three kids. The idea that my specialized experience of building SaaS products in Scala would somehow port over to AI research seems ludicrous. I am certain I'm cognitively capable enough to contribute to AI research, but I'd be leaving a career where I'm compensated based on my experience for one where I'm starting over anew.

Surely OpenPhil and FTX would not match my current salary in order to start my career over, all while allowing me to remain in my current geography (instead of uprooting my kids from friends and school)? It seems unlikely I'd have such a significant leg up over a recent college graduate with a decent GPA so as to warrant matching my software engineering salary.

Replies from: alexflint

↑ comment by Alex Flint (alexflint) · 2022-10-16T18:20:29.658Z · LW(p) · GW(p)

Right -- you probably could contribute to AI alignment, but your skills mostly wouldn't port over, and you'd very likely earn less than your current job.

↑ comment by Noosphere89 (sharmake-farah) · 2022-10-14T15:28:11.719Z · LW(p) · GW(p)

I'll say one thing. I too do not like the AI doomtide/doomerism, despite thinking it's a real problem. You can take breaks from LW or hide posts for AI from your frontpage if you're upset.

comment by jacob_cannell · 2022-10-06T22:33:27.849Z · LW(p) · GW(p)

I'm here pretty much just for the AI related content and discussion, and only occasionally click on other posts randomly: so I guess I'm part of the problem ;). I'm not new, I've been here since the beginning, and this debate is not old. I spend time here specifically because I like the LW format/interface/support much better than reddit, and LW tends to have a high concentration of thoughtful posters with a very different perspective (which I tend to often disagree with, but that's part of the fun). I also read /r/MachineLearning/ of course, but it has different tradeoffs.

You mention filtering for Rationality and World Modeling under More Focused Recommendations - but perhaps LW could go farther in that direction? Not necessarily full subreddits, but it could be useful to have something like per user ranking adjustments based on tags, so that people could more configure/personalize their experience. Folks more interested in Rationality than AI could uprank and then see more of the former rather than the latter, etc.

AI needs Rationality, in particular. Not everyone agrees that rationality is key, here (I know one prominent AI researcher who disagreed).

There is still a significant - and mostly unresolved - disconnect between the LW/Alignment and mainstream ML/DL communities, but the trend is arguably looking promising.

I think in some sense The Sequences are out of date.

I would say "tragically flawed": noble in their aspirations and very well written, but overconfident in some key foundations. The sequences make some strong assumptions about how the brain works and thus the likely nature of AI, assumptions that have not aged well in the era of DL. Fortunately the sequences also instill the value of updating on new evidence.

Replies from: habryka4, Raemon, lahwran

↑ comment by habryka (habryka4) · 2022-10-06T22:56:30.170Z · LW(p) · GW(p)

but it could be useful to have something like per user ranking adjustments based on tags, so that people could more configure/personalize their experience.

Just to be clear, this does indeed exist. You can give a penalty or boost to any tag on your frontpage, and so shift the content in the direction of topics you are most interested in.

Replies from: jacob_cannell, wfenza, coryfklein

↑ comment by jacob_cannell · 2022-10-06T23:12:19.119Z · LW(p) · GW(p)

LOL that is exactly what I wanted! Thanks :)

Replies from: habryka4

↑ comment by habryka (habryka4) · 2022-10-06T23:28:58.155Z · LW(p) · GW(p)

It currently gives fixed-size karma bonuses or penalties. I think we should likely change it to be multipliers instead, but either should get the basic job done.

Replies from: ben-lang, ChristianKl

↑ comment by Ben (ben-lang) · 2022-10-08T15:28:35.835Z · LW(p) · GW(p)

I can see the logic of multipliers, but in the edge case of posts with zero or negative karma they do weird stuff. If you set big multipliers for 5 topics, and there is a -1 karma post that ticks every single one of those topics, then you will never see it. But you of all people are the one who should see that post, which the addition achieves.

(Not significant really though.)

Replies from: elityre

↑ comment by Eli Tyre (elityre) · 2023-04-27T04:53:48.922Z · LW(p) · GW(p)

You could just not have the multipliers apply to negative karma posts.
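The edge case discussed in this thread can be made concrete with a small sketch. Everything here is hypothetical: the `Post` class, the function names, and the bonus and multiplier values are illustrative assumptions, not LessWrong's actual tag-filter code.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    karma: int
    tags: frozenset


def additive_score(post, bonuses):
    # Fixed-size adjustment: add each matching tag's bonus to the karma.
    return post.karma + sum(bonuses.get(tag, 0) for tag in post.tags)


def multiplier_score(post, multipliers, skip_negative=False):
    # Multiplicative adjustment: scale karma by each matching tag's factor.
    # With skip_negative=True, posts at or below zero karma are left unscaled.
    if skip_negative and post.karma <= 0:
        return post.karma
    score = post.karma
    for tag in post.tags:
        score *= multipliers.get(tag, 1)
    return score


# Hypothetical posts and settings, chosen only to show the edge case.
posts = [
    Post("niche post matching both boosted tags", -1,
         frozenset({"rationality", "world-modeling"})),
    Post("popular post, no boosted tags", 30, frozenset({"ai"})),
]
bonuses = {"rationality": 25, "world-modeling": 25}
multipliers = {"rationality": 10, "world-modeling": 10}

for post in posts:
    print(
        post.title,
        additive_score(post, bonuses),
        multiplier_score(post, multipliers),
        multiplier_score(post, multipliers, skip_negative=True),
    )
```

Under these assumed numbers, the additive bonus lifts the -1 karma post to 49, above the untagged post at 30, while plain multipliers push it down to -100. Skipping multipliers for non-positive karma leaves it at -1, which avoids burying it further but does not boost it the way addition does.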

↑ comment by ChristianKl · 2022-10-07T13:26:33.530Z · LW(p) · GW(p)

I would expect that if someone wants to only see AI alignment posts (a wish someone mentioned) saying +1000 karma would provide that result but also mess up the sorting as the karma differences become less.

A modifier of 100x should allow a user to actually only see one tag.
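One way to see how a large additive bonus could "mess up the sorting" is a sketch that assumes the frontpage ranks by karma divided by a power of post age. That decay formula, the `gravity` value, and the two example posts are assumptions made purely for illustration; the thread does not state how LessWrong actually computes its ranking.

```python
def decayed_score(karma, age_hours, gravity=1.15):
    # Hypothetical time-decayed ranking score (an assumption, not a known formula).
    return karma / (age_hours + 2) ** gravity


# Two hypothetical posts that both carry the boosted tag.
fresh = {"karma": 5, "age_hours": 1}
older = {"karma": 200, "age_hours": 24}


def ranking(adjust):
    # Return the post names in display order after applying `adjust` to karma.
    scored = {
        "fresh": decayed_score(adjust(fresh["karma"]), fresh["age_hours"]),
        "older": decayed_score(adjust(older["karma"]), older["age_hours"]),
    }
    return sorted(scored, key=scored.get, reverse=True)


baseline = ranking(lambda k: k)         # no tag adjustment
additive = ranking(lambda k: k + 1000)  # fixed +1000 bonus
multiplied = ranking(lambda k: k * 100) # 100x multiplier

print(baseline, additive, multiplied)
```

In this sketch the +1000 bonus swamps the original karma gap, so the ordering within the tag collapses toward recency, whereas the 100x multiplier scales both posts equally and keeps the unadjusted order.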

↑ comment by Wes F (wfenza) · 2022-10-08T16:14:54.203Z · LW(p) · GW(p)

What? How? I've found like 3 different "customize" options, and none of them are this.

Side note: I've noticed that web & app developers these days try to make settings "intuitive" instead of just putting them all in one place, which I think is silly. Just put all settings under settings. Why on Earth are there multiple "customize" options?

Replies from: wfenza

↑ comment by Wes F (wfenza) · 2022-10-08T16:32:44.999Z · LW(p) · GW(p)

Nevermind. Another comment explained it. I would greatly appreciate that option also being put under settings! I would have found it much easier.

Replies from: Raemon

↑ comment by coryfklein · 2022-10-14T22:23:21.343Z · LW(p) · GW(p)

I only visit the site every month or so and I use All Posts grouped by Weekly to "catch up". It looks like that particular page does not have support for this kind of tag-specific penalty. :/

↑ comment by Raemon · 2022-10-07T22:48:07.301Z · LW(p) · GW(p)

I would say "tragically flawed": noble in their aspirations and very well written, but overconfident in some key foundations. The sequences make some strong assumptions about how the brain works and thus the likely nature of AI, assumptions that have not aged well in the era of DL. Fortunately the sequences also instill the value of updating on new evidence.

What concretely do you have in mind here?

Replies from: jacob_cannell

↑ comment by jacob_cannell · 2022-10-09T14:09:01.527Z · LW(p) · GW(p)

Back when the sequences were written in 2007/2008 you could roughly partition the field of AI based on beliefs around the efficiency and tractability of the brain. Everyone in AI looked at the brain as the obvious single example of intelligence, but in very different lights.

If brain algorithms are inefficient and intractable[1] then neuroscience has little to offer, and instead more formal math/CS approaches are preferred. One could call this the rationalist approach to AI, or perhaps the "and everything else approach". One way to end up in that attractor is by reading a bunch of ev psych [LW · GW]; EY in 2007 was clearly heavily into Tooby and Cosmides, even if he has some quibbles with them on the source of cognitive biases [LW(p) · GW(p)].

From Evolutionary Psychology and the Emotions:

An evolutionary perspective leads one to view the mind as a crowded zoo of evolved, domain-specific programs. Each is functionally specialized for solving a different adaptive problem that arose during hominid evolutionary history, such as face recognition, foraging, mate choice, heart rate regulation, sleep management, or predator vigilance, and each is activated by a different set of cues from the environment.

From the Psychological Foundations of Culture:

Evolution, the constructor of living organisms, has no privileged tendency to build into designs principles of operation that are simple and general. (Tooby and Cosmides 1992)

EY quotes this in LOGI, 2007 (p 4), immediately followed with:

The field of Artificial Intelligence suffers from a heavy, lingering dose of genericity and black-box, blank-slate, tabula-rasa concepts seeping in from the Standard Social Sciences Model (SSSM) identified by Tooby and Cosmides (1992). The general project of liberating AI from the clutches of the SSSM is more work than I wish to undertake in this paper, but one problem that must be dealt with immediately is physics envy. The development of physics over the last few centuries has been characterized by the discovery of unifying equations which neatly underlie many complex phenomena. Most of the past fifty years in AI might be described as the search for a similar unifying principle believed to underlie the complex phenomenon of intelligence.

Physics envy in AI is the search for a single, simple underlying process, with the expectation that this one discovery will lay bare all the secrets of intelligence.

Meanwhile in the field of neuroscience there was a growing body of evidence and momentum coalescing around exactly the "physics envy" approaches EY bemoans: the universal learning hypothesis, popularized to a wider audience in On Intelligence in 2004. It is pretty much pure tabula rasa, blank-slate, genericity and black-box.

The UL hypothesis is that the brain's vast complexity is actually emergent, best explained by simple universal learning algorithms that automatically evolve all the complex domain specific circuits as required by the simple learning objectives and implied by the training data. (Years later I presented it on LW [LW · GW] in 2015, and I finally got around to writing up the brain efficiency [LW · GW] issue more recently - although I literally started the earlier version of that article back in 2012.)

But then the world did this fun experiment: the rationalist/non-connectivist AI folks got most of the attention and research money, but not all of it - and then various research groups did their thing and tried to best each other on various benchmarks. Eventually Nvidia released CUDA, a few connectivists ported ANN code to their gaming GPUs which started to break ImageNet, and then a little startup founded with the mission of reverse engineering the brain by some folks who met in a neuroscience program adapted that code to play Atari and later break Go; the rest is history - as you probably know.

Turns out the connectivists and the UL hypothesis were pretty much completely right after all - proven not only by the success of DL in AI, but also by how DL is transforming neuroscience. We know now that the human brain learns complex tasks like vision and language not through kludgy complex evolved mechanisms, but through the exact same simple approximate bayesian (self-supervised) learning algorithms that drive modern DL systems.

The sequences and associated materials were designed to "raise the rationality water line" and ultimately funnel promising new minds into AI-safety. And there they succeeded, especially in those earlier years. Finding an AI safety researcher today who isn't familiar with the sequences and LW .. well maybe they exist? But they would be unicorns. ML-safety and even brain-safety approaches are now obviously more popular, but there is still this enormous bias/inertia in AI safety stemming from the circa 2007 beliefs and knowledge crystallized and distilled into the sequences.

It's also possible to end up in the "brains are highly efficient, but completely intractable" camp, which implies uploading as the most likely path to AI - this is where Hanson is - and closer to my beliefs circa 2000 ish before I had studied much systems neuroscience. ↩︎

↑ comment by lc · 2022-11-14T02:36:05.047Z · LW(p) · GW(p)

I think in order to be "concrete" you need to actually point to a specific portion of the sequences that rests on these foundations you speak of, because as far as I can tell none of it does.

Replies from: jacob_cannell↑ comment by jacob_cannell · 2022-11-14T05:25:18.689Z · LW(p) · GW(p)

you need to actually point to a specific portion of the sequences that rests on these foundations you speak of,

I did.

My comment has 8 links. The first is a link to "Adaptation-Executers, not Fitness-Maximizers", which is from the sequences ("The Simple Math of Evolution"), and it opens with a quote from Tooby and Cosmides. The second is a link to the comment section from another post in that sequence (Evolutions Are Stupid (But Work Anyway)) where EY explicitly discusses T&C, saying:

They're certainly righter than the Standard Social Sciences Model they criticize, but swung the pendulum slightly too far in the new direction.

And a bit later:

In a sense, my paper "Levels of Organization in General Intelligence" can be seen as a reply to Tooby and Cosmides on this issue; though not, in retrospect, a complete one.

My third and fourth links are then the two T&C papers discussed, and the fifth link is a key quote from EY's paper LOGI - his reply to T&C.

On OB in 2006 "The martial art of rationality" [LW · GW], EY writes:

Such understanding as I have of rationality, I acquired in the course of wrestling with the challenge of artificial general intelligence (an endeavor which, to actually succeed, would require sufficient mastery of rationality to build a complete working rationalist out of toothpicks and rubber bands).

So in his words, his understanding of rationality comes from thinking about AGI - so the LOGI and related quotes are relevant, as that reveals the true foundation of the sequences (his thoughts about AGI) around the time when he wrote them.

These quotes - especially the LOGI quote - clearly establish that EY has an evolved-modularity-like view of the brain, with all that entails. He is skeptical of neural networks (even today he calls them "giant inscrutable matrices") and especially of "physics envy" universal-learning-type explanations of the brain (Bayesian brain, free energy, etc.), and this subtly influences everything where the sequences discuss the brain or related topics. The overall viewpoint is that the brain is a kludgy mess riddled with cognitive biases. He not so subtly disses [? · GW] systems/complexity theory and neural networks [? · GW].

More key to the AI risk case are posts such as "Value is Fragile [? · GW]" which clearly builds on his larger worldview:

Value isn't just complicated, it's fragile. There is more than one dimension of human value, where if just that one thing is lost, the Future becomes null. ... And then there are the long defenses of this proposition, which relies on 75% of my Overcoming Bias posts,

And of course "The Design Space of Minds in General [? · GW]"

These views espouse the complexity and fragility of human values and supposed narrowness of human mind space vs AI mind space which are core components of the AI risk arguments.

Replies from: lc↑ comment by lc · 2022-11-14T05:31:09.188Z · LW(p) · GW(p)

None of which is a concrete reply to anything Eliezer said inside "Adaptation-Executers, not Fitness-Maximizers", just a reply to what you extrapolate Eliezer's opinion to be, because he read Tooby and Cosmides and claimed they were somewhere in the ballpark in a separate comment. So I ask again: what portion of the sequences do you have an actual problem with?

Replies from: jacob_cannell↑ comment by jacob_cannell · 2022-11-14T06:20:15.786Z · LW(p) · GW(p)

And your reply isn't a concrete reply to any of my points.

The quotes from LOGI clearly establish exactly where EY agrees with T&C, and the other quotes establish the relevance of that to the sequences. It's not like two separate brains wrote LOGI vs the sequences, and the other quotes establish the correspondence regardless.

This is not a law case where I'm critiquing some super specific thing EY said. Instead I'm tracing memetic influences: establishing what high level abstract brain/AI viewpoint cluster he was roughly in when he wrote the sequences, and how that influenced them. The quotes are pretty clear enough for that.

Replies from: lc↑ comment by lc · 2022-11-14T06:26:37.624Z · LW(p) · GW(p)

So it turns out I'm just too stupid for this high level critique. I'm only used to ones where you directly reference the content of the thing you're asserting is tragically flawed. In order to get across to less sophisticated people like me in the future, my advice is to independently figure out how this "memetic influence" got into the sequences and then just directly refute whatever content it tainted. Otherwise us brainlets won't be able to figure out which part of the sequences to label "not true" due to memetic influence, and won't know if your disagreements are real or made up for contrarianism's sake.

Replies from: interstice, jacob_cannell↑ comment by interstice · 2022-11-15T03:59:32.578Z · LW(p) · GW(p)

This comment [LW(p) · GW(p)] contains a specific disagreement.

↑ comment by jacob_cannell · 2022-11-14T06:39:08.409Z · LW(p) · GW(p)

I think you are reading way too much into the specific questionable wording of "tragically flawed". By that I meant that they are flawed in some of the key background assumptions, how that influences thinking on AI risk/alignment, and the consequent system-wide effects. I didn't mean they are flawed at their surface-level purpose - as rationalist self-help and community foundations. They are very well written and concentrate a large amount of modern wisdom. But that of course isn't the full reason for why EY wrote them: they are part of a training funnel to produce alignment researchers.

↑ comment by the gears to ascension (lahwran) · 2023-01-03T13:08:41.574Z · LW(p) · GW(p)

I've been here since the beginning, and this debate is not old

I think you may have flipped something here

comment by elifland · 2022-10-07T15:23:06.212Z · LW(p) · GW(p)

Meanwhile Rationality A-Z is just super long. I think anyone who's a longterm member of LessWrong or the alignment community should read the whole thing sooner or later – it covers a lot of different subtle errors and philosophical confusions that are likely to come up (both in AI alignment and in other difficult challenges)

My current guess is that the meme "every alignment person needs to read the Sequences / Rationality A-Z" is net harmful. They seem to have been valuable for some people but I think many people can contribute to reducing AI x-risk without reading them. I think the current AI risk community overrates them because they are selected strongly to have liked them.

Some anecdotal evidence in favor of my view:

- To the extent you think I'm promising for reducing AI x-risk and have good epistemics, I haven't read most of the Sequences. (I have liked some of Eliezer's other writing, like Intelligence Explosion Microeconomics.)

- I've been moving some of my most talented friends toward work on reducing AI x-risk and similarly have found that while I think all have great epistemics, there's mixed reception to rationalist-style writing. e.g. one is trialing at a top alignment org and doesn't like HPMOR, while another likes HPMOR, ACX, etc.

↑ comment by Ruby · 2022-10-19T02:08:25.455Z · LW(p) · GW(p)

but I think many people can contribute to reducing AI x-risk without reading them.

I think the tough thing here is it's very hard to evaluate who, if anyone, is making any useful contributions. After all, no one has successfully aligned a superintelligence to date. Maybe it's all way-off track. All else equal, I trust people who've read the Sequences to be better judges of whether we're making progress in the absence of proper end-to-end feedback than those who haven't.

Caveat: I am not someone who could plausibly claim to have made any potential contribution myself. :P

↑ comment by Joseph Bloom (Jbloom) · 2022-10-07T22:05:16.592Z · LW(p) · GW(p)

I think it's plausible that it is either harmful to perpetuate "every alignment person needs to read the Sequences / Rationality A-Z" or maybe even inefficient.

For example, to the extent that alignment needs more really good machine learning engineers, it's possible they might benefit less from the sequences than a conceptual alignment researcher.

However, relying on anecdotal evidence seems potentially unnecessary. We might be able to use polls, or otherwise systematically investigate the relationship between interest/engagement with the sequences and various paths to contribution with AI. A prediction market might also work for information aggregation.

I'd bet that all else equal, engagement with the sequences is beneficial but that this might be less pronounced among those growing up in academically inclined cultures.

comment by mukashi (adrian-arellano-davin) · 2022-10-06T22:23:26.151Z · LW(p) · GW(p)

I'm 100% with you. I don't like the current trend of LW becoming a blog about AI, much less a blog about how AGI doom is inevitable (and in my opinion there have been too many blog posts about that, with some exceptions of course). I have found myself lately downvoting AI-related posts more easily, and upvoting content unrelated to AI more easily too.

Replies from: niplav↑ comment by niplav · 2022-10-06T23:19:39.940Z · LW(p) · GW(p)

I weakly downvoted your comment:

I think the solution to "too much AI content" is not to downvote the AI content less discriminately. If there were many posts with correct proofs in harmonic analysis being posted to LessWrong, I would not want to downvote them, after all, they are not wrong in any important sense, and maybe even important for the world!

But I would like to filter them out, at least until I've learned the basics of harmonic analysis to understand them better (if I desired to do so).

Replies from: habryka4, adrian-arellano-davin↑ comment by habryka (habryka4) · 2022-10-06T23:58:55.791Z · LW(p) · GW(p)

For what it's worth, I think I am actually in favor of downvoting content of which you think there is too much. The general rule for voting is "upvote this if you want to see more like this" and "downvote this if you want to see less like this". I think it's too easy to end up in a world where the site is filled with content that nobody likes, but everyone thinks someone else might like. I think it's better for people to just vote based on their preferences, and we will get it right in the aggregate.

Replies from: ChristianKl↑ comment by ChristianKl · 2022-10-07T13:31:32.123Z · LW(p) · GW(p)

Generally, I would want people to vote on articles they have actually read.

If posts that nobody wants to read because they seem very technical get zero votes, I think that's a good outcome. They don't need to be downvoted.

↑ comment by mukashi (adrian-arellano-davin) · 2022-10-07T01:07:25.310Z · LW(p) · GW(p)

Sorry, I think I wasn't clear enough. I meant that my threshold to downvote an AI related post was somehow lower, not that I was downvoting them indiscriminately.

Replies from: niplav

comment by mingyuan · 2022-10-07T18:02:23.217Z · LW(p) · GW(p)

I'm in favor of subforums — from these comments it seems to me that a significant fraction of people are coming to LW either for AI content, or for explicitly non-AI content (including some people who sometimes want one and sometimes the other); if those use cases are already so separate, it seems dumb to keep all those posts in the same stream, when most people are unhappy with that. (Yeah maybe I'm projecting because I'm personally unhappy with it, but, I am very unhappy.)

I used to be fine with the amount of AI content. Maybe a year ago I set a karma penalty on AI, and then earlier this year I increased that penalty to maximum and it still wasn't enough, so a few months ago I hid all things tagged AI, and now even that is insufficient, because there are so many AI posts and not all of them are tagged correctly. I often go to Latest and see AI-related posts, and then go tag those posts with 'AI' and refresh, but this is a frustrating experience. The whole thing has made me feel annoyed and ugh-y about using LW, even though I think there are still a lot of posts here I want to see.

I also worry that the AI stuff — which has a semi-professionalized flavor — discourages more playful and exploratory content on the rest of the site. I miss what LW2.0 was like before this :(

Replies from: Raemon, mingyuan↑ comment by Raemon · 2022-10-07T21:14:48.603Z · LW(p) · GW(p)

Ah. We'd had on our todo list "make it so post authors are prompted to tag their posts before publishing them", but it hadn't been super prioritized. This comment updates me to ship this sooner so there'll hopefully be fewer un-tagged AI posts.

Replies from: mingyuan, Algon↑ comment by mingyuan · 2022-10-10T18:18:39.179Z · LW(p) · GW(p)

Logging on today, I noticed that all of the posts with this problem (today) were personal blogposts; are those treated differently? Also some of these are tagged 'ML', but that makes it through the AI filter, which.... I guess is intended behavior :/

↑ comment by Raemon · 2022-10-10T18:22:34.291Z · LW(p) · GW(p)

The situation is that posts show up in the moderator-queue, moderators take a few hours to get to them, and in the meanwhile they are personal blogposts. So if you're okay with hiding all personal blogposts you can solve the problem that way. This would probably also hide other posts you want to see.

I'm hoping we can ship an "authors are nudged to give their post a core-tag" feature soon, which should alleviate a lot of the problem, although it might not solve it entirely.

↑ comment by Algon · 2022-10-10T18:28:47.660Z · LW(p) · GW(p)

Wouldn't generating pseudo-tags which users can opt in to see/filter by mostly solve this problem? Like, I'd have thought even a pre-DL-revolution classifier or clustering algorithm or GDA or something would have worked. Let alone querying GPT-instruct (or whatever) on whether or not an article has to do with AI. The pricing is quite cheap for Goose.AI or other models.
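To make the pseudo-tag idea concrete, here is a minimal sketch of the crudest possible version: a keyword-share rule rather than a trained classifier or a language-model query. The keyword list, the threshold, and the function name `pseudo_tags` are all made up for illustration; nothing here reflects how LessWrong actually tags posts.

```python
import re

# Hypothetical keyword list; a real system would learn these from already-tagged posts.
AI_KEYWORDS = {"ai", "agi", "alignment", "neural", "gpt", "ml", "superintelligence"}


def pseudo_tags(text, keywords=AI_KEYWORDS, threshold=0.02):
    """Return {"AI"} if the share of words that are AI keywords meets the threshold."""
    words = re.findall(r"[a-z]+", text.lower())
    if not words:
        return set()
    hits = sum(1 for word in words if word in keywords)
    return {"AI"} if hits / len(words) >= threshold else set()
```

A user who opted in could then hide any post where `pseudo_tags(post_text)` contains `"AI"`, even if the author never tagged it. Whole-word matching is used so that words like "said" do not count as hits for "ai".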

Replies from: Ruby↑ comment by mingyuan · 2022-10-07T18:07:26.975Z · LW(p) · GW(p)

I don't actually know how subforums are implemented on EA Forum but I was imagining like a big thing on the frontpage that's like "Do you want to see the AI stuff or the non-AI stuff?". Does this sound clunky when I write it out?... yes

Replies from: wfenza↑ comment by Wes F (wfenza) · 2022-10-08T16:25:15.579Z · LW(p) · GW(p)

I would love an option to say "I don't want to read another word about AI alignment ever"

comment by Vanessa Kosoy (vanessa-kosoy) · 2022-10-08T11:42:54.470Z · LW(p) · GW(p)

Feature proposal: when a new user joins the forum, they get a list of the most popular tags and a chance to choose which of these topics especially interest/disinterest them. This addresses the problem of new users being unaware of the filtering/sorting by tags feature.

comment by MondSemmel · 2022-10-07T16:27:55.502Z · LW(p) · GW(p)

Re: "Content I'd like to see more of":

Naturally paying people to write essays on specific topics is very expensive, but one can imagine more subtle ways in which LW could incentivize people to write on specific topics. To brainstorm a few ideas:

- Some kind of feature where prospective writers can make public lists of things they could write about (in the form of Post Title + 1-paragraph summary), and a corresponding way for LW users to indicate which posts they're interested in. (E.g. I liked this post [LW · GW] of blog post ideas by lsusr, but for our purposes that's insufficient context for prospective readers.) Maybe by voting on stuff that sounds interesting, or by doing the equivalent of subscribing to a newsletter. (In fact, there's already a LW feature to subscribe to all comments by a user, as well as all comments on a post, so this would be like subscribing to a draft post so you'd get a notification if and when it's released.) Of course one could even offer bounties here, but that might not be worth the complexity and the adverse incentives. Anyway, the main benefit here would be for prolific writers to gauge interest on their ideas and prioritize what to write about. I don't know to which extent that's typically a bottleneck, however.

- Or maybe writers aren't bottlenecked by or don't care about audience interest, but would love a way to publicly request specific support resources for their post ideas. Maybe to write a specific post they need money, or a Roam license, or a substack subscription, or access to a tool like DALL-E, or information from some domain experts, or support from a programmer, or help with finding some datasets or papers, etc. LW already has a fantastic feature where you can request proofreaders and editors for your posts, but those are 1-to-1 requests to the LW team, not public requests which e.g. a domain expert or programmer could see and respond to themselves.

- Anyway, that's a perspective from the supply side. The equivalent from the demand side would be features that indicate to prospective writers what readers would like to see. There have been posts on this in the past (e.g. here [LW · GW] or here [LW · GW] or here [LW · GW]), but I'm imagining something more like a banner on the New Post page à la "Don't know what to write about? Here's what readers would love to read". This could again be a list of topics plus 1-paragraph summaries (this time suggested by readers), which could be upvoted by LW users or otherwise incentivized.

- This was already suggested by others in the past, but a feature for users to nominate great comments to be written up as posts. Could again include an option of offering a bounty or other incentives.

- Removing friction for writers, e.g. by making the editor better. Personally I'd love it if we could borrow the feature from Notion where you paste a link over selected text to turn that text into a link (rather than replacing the text with the link); and I've also seen a request for better citation support.

- A social accountability feature where you commit to write a post on X by deadline Y and ask to be held accountable by LW users. Could once again be combined with users offering incentives or bounties if the post idea seems promising enough.

Finally, to speak a bit from personal experience: I've been meaning to write a LW sequence on Health for a year now (here's [LW(p) · GW(p)] what that might look like). Things that have stopped me so far include: mostly akrasia and perfectionism; uncertainty about whether the idea would produce enough value to warrant spending my limited energy on; the daunting scale of the project; the question of to what extent what I'll actually produce can live up to my envisioned ideal; lack of monetary reward for what sounds like a lot of work; lack of clarity or experience on how to manage the gazillion citations and sources (both when posting on LW, and in my personal draft notes); some confusion regarding how to keep this LW sequence up-to-date over time (do I update an existing essay? or repost it as a "2023 edition"?); and more besides.

To be clear, I'm mostly limited by akrasia, but a few of the things I brainstormed above could help in my case, too.

comment by Wes F (wfenza) · 2022-10-08T16:07:25.264Z · LW(p) · GW(p)

I read via RSS, and I mostly just skip LW articles because it's almost all AI-related, and I'm not interested in that. It would be very nice if I could get a non-AI RSS feed (or even better - a customizable RSS feed where I can include or exclude certain tags).
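For what it's worth, a reader-side version of the customizable feed can be sketched in a few lines. This assumes an RSS 2.0 feed in which each `<item>` carries `<category>` elements for its tags; whether the LessWrong feed actually exposes tags that way is an assumption, and `filter_rss` is a hypothetical helper, not an existing tool.

```python
import xml.etree.ElementTree as ET


def filter_rss(rss_xml, exclude=frozenset(), include=None):
    """Drop items carrying an excluded tag or, if `include` is given, lacking every included tag."""
    root = ET.fromstring(rss_xml)
    channel = root.find("channel")  # assumes the RSS 2.0 layout: <rss><channel><item>...
    for item in list(channel.findall("item")):
        tags = {category.text for category in item.findall("category")}
        excluded = bool(tags & set(exclude))
        missing_required = include is not None and not (tags & set(include))
        if excluded or missing_required:
            channel.remove(item)
    return ET.tostring(root, encoding="unicode")
```

Calling `filter_rss(feed_text, exclude={"AI"})` would return the same feed without AI-tagged items, which could then be served to a feed reader from a small local script.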

It really does feel to me, though, that LessWrong is not for me because I'm not interested in AI alignment. LW doesn't strike me as a rationality-focused site. It's a site for talking about AI alignment that offers some rationality content as an afterthought. It sounds like you don't really want to change that, though, so it is what it is.

comment by Coafos (CoafOS) · 2022-10-06T23:14:55.733Z · LW(p) · GW(p)

(Ep. vibes: I went to a few EA cons, and subscribed to the forum digest.)

I blame EA. They were simply too successful.

There are the following effects at play:

- Bad AI gonna kill us all :(

- Preparing for emergent threats is one of the most effective ways to help others.

- The best way to have good ideas is to have a lot of ideas; and the best way to have a lot of ideas is to have a lot of people.

- Large funnels were built for new AI Safety researchers.

- The largest discussions about the topic happened at LW and rat circles.

- The general advice I heard at EA conferences in late Feb/Mar (notice the spike! it's March, before the big doompost; edit: it's really after the doompost, I misread the graphs) is that you should go to LW for AI-specific stuff.

What a coincidence that the AI-on-LW flood and the cries for the drop in EA Forum quality happened at the same time. I think with the EA Movement growing exponentially in numbers, both sites are getting eternal septembered.

I think the solution could be to create a new frontpage for AI-related discussions, like "personal blog", "LW frontpage", "AI Safety frontpage" categories. Or go the whole subforum route, with childboards and stuff like that.

Replies from: mruwnik

comment by Alex Flint (alexflint) · 2022-10-08T17:57:45.219Z · LW(p) · GW(p)

I really agree that lesswrong derives some of its richness from being a community where people do a few different things all in the same place -- rationality training, curiosity-driven research, searching for novel altruistic interventions, AI research. Providing tools for people to self-segregate into niches will "work" in the sense that people will do it and probably say that they like it, but will probably lose some of that richness.

comment by Alex Flint (alexflint) · 2022-10-08T17:52:31.773Z · LW(p) · GW(p)

Has there been an increase in people reading AI content, proportional to the increase in people writing AI content?

Replies from: michael-bask-gill↑ comment by Bask⚡️ (michael-bask-gill) · 2022-10-09T03:14:05.512Z · LW(p) · GW(p)

Hmm, I might be misunderstanding your intention, but I don't believe this is the correct question to ask, as it assumes that 'reading an article' ~= satisfaction with LessWrong.

A classic counter example here is click-bait, where 'visits' and 'time on page' don't correlate with 'user reported value.'

If we take a simple view of LessWrong as a 'product' then success is typically measured by retention: what % of users choose to return to the site each week following their first visit.

If we're seeing weekly retention for existing cohorts drop over time, it suggests that long-time LessWrong readers net-net value the content less.
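As a rough illustration of the metric, here is a minimal sketch that computes weekly retention per cohort from a visit log. The input format (pairs of user id and week number) and the function name `weekly_retention` are assumptions made for the example; I don't know what analytics LessWrong actually records.

```python
from collections import defaultdict


def weekly_retention(visits):
    """visits: iterable of (user_id, week_number) pairs.

    Returns {cohort_week: {weeks_since_first_visit: fraction_of_cohort_active}},
    where a user's cohort is the week of their first recorded visit.
    """
    weeks_by_user = defaultdict(set)
    for user, week in visits:
        weeks_by_user[user].add(week)

    # Group each user's set of active weeks under their first-visit week.
    cohorts = defaultdict(list)
    for weeks in weeks_by_user.values():
        cohorts[min(weeks)].append(weeks)

    retention = {}
    for first_week, members in cohorts.items():
        last_offset = max(max(weeks) for weeks in members) - first_week
        retention[first_week] = {
            offset: sum(1 for weeks in members if first_week + offset in weeks) / len(members)
            for offset in range(last_offset + 1)
        }
    return retention
```

Comparing the curves for older and newer cohorts is what would show whether long-time readers are returning less often than they used to.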

Now, the prickly parts here are:

a) Users who've joined in the past year likely value the current post landscape more than the old post landscape. Given this, a retention 'win' for older cohorts would likely be a 'loss' for newer cohorts.

b) LessWrong's "purpose" is much less clearcut than that of a typical business/product.

I think it would be fun & productive to reflect on a 'north star metric' for LW, similar to the QALY for EA, but it's beyond the scope of this reply : )

comment by Shiroe · 2022-10-06T23:59:01.652Z · LW(p) · GW(p)

I'm fine with everything on LW ultimately being tied to alignment. Hardcore materialism being used as a working assumption seems like a good pragmatic measure as well. But ideally there should also be room for foundational discussions like "how do we know our utility function?" and "what does it mean for something to be aligned?" Having trapped priors on foundational issues seems dangerous to me.

comment by ChristianKl · 2022-10-07T13:23:08.789Z · LW(p) · GW(p)

Currently, every AI alignment post gets frontpaged. If there are too many AI alignment posts on the frontpage it's worth thinking about whether that policy should change.

comment by ChristianKl · 2022-10-06T22:21:14.016Z · LW(p) · GW(p)

I personally have AI alignment on -25 karma and Rationality on +25. For my purposes, the current system works well, but then I understand how it works and it's likely that there are other people who don't. New users likely won't understand that they have that choice.

I think it would give the wrong impression to new users if they saw that AI alignment is by default on -25 karma, so it's better to give Rationality / Worldbuilding a boost for new users than to set negative values for AI alignment.

I would suspect that most new users to LessWrong are not interested in reading highly technical AI alignment posts and as such find it more likely that they belong when they see fewer of those. On the other hand, winning over the people who would find highly technical AI alignment posts interesting is likely more valuable than winning over the average person.

Replies from: Huluk↑ comment by Huluk · 2022-10-07T19:09:38.480Z · LW(p) · GW(p)

Even after reading this comment it took me a while to find this option, so for anyone who similarly didn't know about that option:

On the start page, below "Latest", you can add a new filter. Then, click on that filter and adjust the numbers or entirely hide a category.
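For anyone wondering what such a filter does to the feed, here is a toy sketch. It assumes the modifier is simply added to a post's karma before sorting, and that a hidden tag removes the post outright; the site's real ranking is not described in this thread and is probably more involved (post age, for instance). The names `adjusted_karma` and `filtered_feed` are hypothetical.

```python
def adjusted_karma(post, tag_modifiers, hidden_tags=frozenset()):
    """Return karma plus the modifier of each tag on the post, or None if the post is hidden."""
    if set(hidden_tags) & set(post["tags"]):
        return None
    return post["karma"] + sum(tag_modifiers.get(tag, 0) for tag in post["tags"])


def filtered_feed(posts, tag_modifiers, hidden_tags=frozenset()):
    """Return titles of visible posts, highest adjusted karma first."""
    scored = [(adjusted_karma(post, tag_modifiers, hidden_tags), post) for post in posts]
    visible = [(score, post) for score, post in scored if score is not None]
    visible.sort(key=lambda pair: pair[0], reverse=True)
    return [post["title"] for _, post in visible]
```

With AI at -25 and Rationality at +25, a 40-karma AI post ends up below a 20-karma Rationality post, which matches the effect described in the parent comment.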

comment by _self_ (rowan) · 2022-10-07T21:04:21.518Z · LW(p) · GW(p)

I'm one of the new readers and found this forum through a Twitter thread that was critiquing it. I have a psychology background and then switched to ML, and I've been following AI ethics for over 15 years and have been hoping for a long time that discussion would leak across industries and academic fields.

Since AI (however you define it) is a permanent fixture in the world, I'm happy to find a forum focused on critical thinking either way and I enjoy seeing these discussions on front page. I hope it's SEO'd well too.

I'd think newcomers and non-technical contributors are awesome. 8 years ago I was so desperate to see that people in the AI space were thinking and critically evaluating their own decisions from a moral perspective, since I had started seeing unquestionable effects of this stuff in my own field with my own clients.

But if it starts attracting a ton of this you might want to consider splitting off or starting a secondary forum, since this stuff is needed but may dilute the original purpose of this forum.

My concerns for AI lie firmly in the chasm between "best practices" and what actually occurs in practice.

Optimizing for the bottom line with no checks and balances and a learned blindness to common sense (see: Rob McNamara), and also blindness towards our own actions. "What we do to get by".

It's not overblown. But instead of philosophizing about AI doomsday I think there are QUITE enough bad practices going on in industry currently that affect tons of people, that deserve attention.

Focusing on preventing a theoretical AI takeover is not entirely a conspiracy thing, I'm sure it could happen. But it is not as helpful as:

- getting involved with policy

- education initiatives for the general public

- diversity initiatives in tech and leadership

- business/startup initiatives in underprivileged communities

- formal research on common sense things that are leading to shitty outcomes for underprivileged people

- encouraging collaboration, communication, and transfer of knowledge between different fields and across economic lines

- teaching people who care about this stuff good marketing and business practices.

- commitment to seeing beyond bullshit in general and to stop pretending, push towards understanding power dynamics

- cybersecurity as it relates to human psychology, propaganda, and national security. (Hope some people in that space are worried)

Also consider how delving into the depths of humanity affects your own mental health and perspective, I've found myself to be much more effective when focusing on grassroots hands on stuff

Stuff from academia trickles down to reality far too slowly to keep up with the progression of tech, which is why I removed myself from it, but still love the concept here and glad that people outside of AI are thinking critically about AI

Replies from: lahwran↑ comment by the gears to ascension (lahwran) · 2022-10-08T10:04:42.020Z · LW(p) · GW(p)

Strongly agreed here. My view is that ai takeover is effectively just the scaled up version of present-day ai best practice concerns, and the teams doing good work on either end up helping both. Both "sides of the debate" have critical things to say about each other, but in my view, that's simply good scientific arguing.

I'd love to hear more on your thoughts on most effective actions for shorttermist ai safety and ai bias, if you were up for writing a post! I'd especially like to hear your thoughts on how cutting edge psychology emergency-deescalation-tactics research on stuff like how to re-knit connections between humans who've lost trust for political-fighting reasons can relate to ai safety; that example might not be your favorite focus, though it's something I worry about a lot myself and have thoughts about. Or perhaps if you've encountered the socio-environmental synthesis center's work on interdisciplinary team science (see also their youtube channel), I'm curious if you have thoughts about that. or, well, more accurately, I give those examples as prompt so you can see what kind of thing I'm thinking about writing about and generalize it into giving similar references or shallow dives into research that you're familiar with and I'm not.

Replies from: rowan↑ comment by _self_ (rowan) · 2022-10-24T04:34:07.463Z · LW(p) · GW(p)

So I didn't know this was a niche philosophy forum, with its own subculture. I'm way out of my element. My suggestions were not very relevant taking that into context, I thought it was a general forum. I'm still glad there are people thinking about it.

The links you sent are awesome! - I'll follow those researchers. I think a lot of my thoughts here are outdated as things keep changing, and I'm still putting thoughts together. So, I probably won't be writing much for a few months until my brain settles down a little.

Am I a "shorttermist"? Long term, as in the fate of humanity, I think I am not good to debate there.

Thanks for commenting on my weird intro!

Replies from: lahwran↑ comment by the gears to ascension (lahwran) · 2022-10-24T05:58:45.401Z · LW(p) · GW(p)

imo, shorttermism = 1 year, longtermism = 10 years. ai is already changing very rapidly. as far as I'm concerned your posts are welcome; don't waste time worrying about being out of your element, just tell it as you see it and let's debate - this forum is far too skeptical of people with your background and you should be more self-assured that you have something to contribute.

comment by Nathan Helm-Burger (nathan-helm-burger) · 2022-10-06T22:07:13.839Z · LW(p) · GW(p)

I agree this is rather a thing, and I kinda feel like the times I look at LessWrong specifically to read up on what people are saying about their latest AI thoughts feel different to me from the times I am just in a reflective / learning mood and want to read about rationality and worldview building. For me personally, I'm using LessWrong for AI content daily, and would prefer to just have a setting in my account which by default showed nothing but that. Other stuff for me is a distracting akrasia-temptation at this point. I also agree that for a novice / default user, it doesn't make sense to flood them with a bunch of highly technical AI posts, often midway through a multi-part series that's hard to understand if you haven't been following along. So maybe a default mode and an AI researcher mode available in settings? Maybe also an "I'm not an AI researcher, just show me rationality stuff" mode?

comment by Stephen McAleese (stephen-mcaleese) · 2022-10-09T09:42:33.176Z · LW(p) · GW(p)

One major reason why there is so much AI content on LessWrong is that very few people are allowed to post on the Alignment Forum.

I analyzed some recent AI posts on LessWrong and found that only about 15% of the authors were also members of the Alignment Forum. I'm personally very interested in AI but I post all of my AI content on LessWrong and not the Alignment Forum because I'm not a member.

Anecdotally, I know several people working full-time on AI safety who are still not members of the Alignment Forum and consequently post all their work on LessWrong.

My recommendation is to increase the number of people who are allowed to post on the Alignment Forum because the bar seems too high. And instead of having just a single class of members, there could be more members but different grades of members.

There are other reasons why AI has become more popular relative to rationality. Rationality isn't really a field that progresses as fast as AI and consequently, writing on topics such as cognitive biases is already covered in The Sequences.

On the other hand, breakthroughs are made every week in the field of AI which prompts people to write about it.

Replies from: thomas-larsen↑ comment by Thomas Larsen (thomas-larsen) · 2022-10-09T16:32:48.688Z · LW(p) · GW(p)

One major reason why there is so much AI content on LessWrong is that very few people are allowed to post on the Alignment Forum.

Everything on the alignment forum gets crossposted to LW, so letting more people post on AF wouldn't decrease the amount of AI content on LW.

comment by PeterMcCluskey · 2022-10-08T20:00:18.310Z · LW(p) · GW(p)

I'm trying to shift my focus more toward AI, due to the likelihood that it will have big impacts over the next decade.

I'd like newbies to see some encouragement to attend a CFAR workshop. But there's not much new to say on that topic, so it's hard to direct people's attention there.

Replies from: lahwran↑ comment by the gears to ascension (lahwran) · 2022-10-08T21:44:47.929Z · LW(p) · GW(p)

I don't think a CFAR workshop is an appropriate recommendation for folks who visit semanticscholar.com for the first time without epistemic background necessary to reliably understand papers; what makes lesswrong.com different that warrants such a high-touch recommendation? is there another kind of intro that would work? perhaps interactive topic-games, like brilliant, or perhaps play money prediction markets on the site?

comment by David Gross (David_Gross) · 2022-10-07T15:20:51.478Z · LW(p) · GW(p)

I'm one of those LW readers who is less interested in AI-related stuff (in spite of having a CS degree with an AI concentration; that's just not what I come here for). I would really like to be able to filter "AI Alignment Forum" cross-posts, but the current filter setup does not allow for that so far as I can see.

Replies from: habryka4↑ comment by habryka (habryka4) · 2022-10-07T16:26:26.333Z · LW(p) · GW(p)

Filtering out the AI tag should roughly do that.

Replies from: lise↑ comment by lise · 2022-10-09T12:40:23.594Z · LW(p) · GW(p)

I disagree that filtering the AI tag would accomplish this, at least for my purposes.

The thing about Alignment Forum crossposts is that they're usually quite technical & focused purely on AI, containing the bulk of things I don't want to see. The rest of the AI tag however often contains ideas about the human brain, analogies with the real world, and other content that I find interesting, even though the post ultimately ties those ideas back into an AI framing.

So a separate filter for this would be useful IMO.

comment by DirectedEvolution (AllAmericanBreakfast) · 2022-10-06T22:45:01.831Z · LW(p) · GW(p)

I'm confused by the sudden upsurge in AI content. People in technical AI alignment are there because they already had strong priors that AI capabilities are growing fast. They're aware of major projects. I doubt DALL-E threw a brick through Paul Christiano's window, Eliezer Yudkowsky's window, or John Wentworth's window. Their window was shattered years ago.

Here are some possible explanations for the proliferation of AI safety content. As a note, I have no competency in AI safety and haven't read the posts. These are questions, not comments on the quality of these posts!

- Is this largely amateur work by novice researchers who did have their windows shattered just recently, and are writing frantically as a result?

- Are we seeing the fruits of investments in training a cohort of new AI safety researchers that happened to ripen just when DALL-E dropped, but weren't directly caused by DALL-E?

- Is this the result of current AI safety researchers working extra hard?

- Are technical researchers posting things here that they'd normally keep on the AI alignment forum because they see the increased interest?

- Is this a positive feedback loop where increased AI safety posts lead to people posting more AI safety posts?

- Is it inhibition, where the proliferation of AI safety posts makes potential writers feel crowded out? Or do AI safety posts bury the non-AI safety posts, lowering their clickthrough rate, so authors get unexpectedly low karma and engagement and therefore post less?

I am not in AI safety research and have no aptitude or interest in following the technical arguments. I know these articles have other outlets. So for me, these articles are strictly an inconvenience on the website, ignoring the pressingness of the issue for the world at large. I don't resent that. But I do experience the "inhibition" effect, where I feel skittish about posting non-AI safety content because I don't see others doing it.

Replies from: ChristianKl, Raemon, Aidan O'Gara↑ comment by ChristianKl · 2022-10-07T13:43:14.207Z · LW(p) · GW(p)

There used to be very strong secrecy norms at MIRI. There was a strategic update on the usefulness of public debate and reducing secrecy.

Are technical researchers posting things here that they'd normally keep on the AI alignment forum because they see the increased interest?

Everything that's on the AI alignment forum is by default also shown on LessWrong. The AI alignment forum is a way to filter out amateur work.

Replies from: Benito↑ comment by Ben Pace (Benito) · 2022-10-07T18:00:24.041Z · LW(p) · GW(p)

I don't believe there was a strategic update in favor of reducing secrecy at MIRI. My model is that everything that they said would be secret is still secret. The increase in public writing is not because it became more promising, but because all their other work became less so.

Replies from: ChristianKl↑ comment by ChristianKl · 2022-10-07T19:32:44.621Z · LW(p) · GW(p)

Maybe saying "secrecy" is the wrong way to phrase it. The main point is that MIRI strategy shifted toward more public writing.

↑ comment by Raemon · 2022-10-06T23:09:36.936Z · LW(p) · GW(p)

I think we're primarily seeing:

Is this largely amateur work by novice researchers who did have their windows shattered just recently, and are writing frantically as a result?

and

Are we seeing the fruits of investments in training a cohort of new AI safety researchers that happened to ripen just when DALL-E dropped, but weren't directly caused by DALL-E?

↑ comment by aog (Aidan O'Gara) · 2022-10-06T23:30:44.879Z · LW(p) · GW(p)

Are we seeing the fruits of investments in training a cohort of new AI safety researchers that happened to ripen just when DALL-E dropped, but weren't directly caused by DALL-E?

For some n=1 data, this describes my situation. I've posted about AI safety six times in the last six months despite having posted only once in the four years prior. I'm an undergrad who started working full-time on AI safety six months ago thanks to funding and internship opportunities that I don't think existed in years past. The developments in AI over the last year haven't dramatically changed my views. It's mainly about the growth of career opportunities in alignment for me personally.

Personally I agree with jacob_cannell and Nathan Helm-Burger that I'd prefer an AI-focused site and I'm mainly just distracted by the other stuff. It would be cool if more people could post on the Alignment Forum, but I do appreciate the value of having a site with a high bar that can be shared to outsiders without explaining all the other content on LessWrong. I didn't know you could adjust karma by tag, but I'll be using that to prioritize AI content now. I'd encourage anyone who doesn't want my random linkposts about AI to use the tags as well.

Is this a positive feedback loop where increased AI safety posts lead to people posting more AI safety posts?

This also feels relevant. I share links with a little bit of context when I think some people would find them interesting, even when not everybody will. I don't want to crowd out other kinds of content. I think it's been well received so far, but I'm open to different norms.

comment by Stephen McAleese (stephen-mcaleese) · 2022-10-09T09:20:51.157Z · LW(p) · GW(p)

I recently analyzed the past 6 months of LessWrong posts and found that about 25% were related to AI.

comment by Kaj_Sotala · 2022-10-07T13:10:00.359Z · LW(p) · GW(p)

I liked this writeup about container logistics [LW · GW], which was relevant to .

Think you have a missing link here. :)

comment by trevor (TrevorWiesinger) · 2022-10-07T06:16:59.090Z · LW(p) · GW(p)

In my experience, LW and AI safety gain a big chunk of legitimacy from being the best at Rationality and among the best places on earth for self-improvement. That legitimacy goes a long way, but only in systems that are externalities to the alignment ecosystem (i.e. the externality is invisible to the 300 AI safety researchers [EA · GW] who are already being AI safety researchers).

I don't see the need to retool rationality for alignment. If it helps directly, it helps directly. If it doesn't help much directly, then it clearly helps indirectly. No need to get territorial for resources, not everyone is super good at math (but you yourself might be super good at math, even if you think you aren't [LW(p) · GW(p)]).

comment by the gears to ascension (lahwran) · 2022-10-07T02:15:39.066Z · LW(p) · GW(p)

we urgently need to distill huge amounts of educational content. I don't know with what weapons sequences 2 will be fought, but sequences 3 will be fought with knowledge tracing, machine teaching, online courses like brilliant, inline exercises, play money prediction markets, etc.

the first time around, it was limited to eliezer's knowledge - and he made severe mistakes because he didn't see neural networks coming. now it almost seems like we need to write an intro to epistemics for a wide variety of audiences, including AIs - it's time to actually write clearly enough to raise the sanity waterline.

I think that current ai-aided clarification tools are approaching maturity levels necessary to do this. compressing human knowledge enough that it results in a coherent, concise description that a wide variety of people will intuitively understand is a very tall order, and inevitably some of the material will need to be presented in a different order; it's very hard to learn the shapes of mathematical objects without grounding them in the physical reality they're derived from with geometry and then showing how everything turns into linear algebra, causal epistemics, predictive grounding, category theory, etc. the task of building a brain in your brain requires a manual for how to be the best kind of intelligence we know how to build, and it has been my view for years that what we need to do is distill these difficult topics into tools for interactively exploring the mathematical space of implications.

Could lesswrong have online MOOC participation groups? I'd certainly join one if it was designed to be adhd goofball friendly.

I don't know what you should build next, but I know we should be looking forward to a future where the process is managed by ai.

AI is eating the world, fast. many of us think the rate-of-idea-generation physical limit will be hit any year now. I don't think it makes sense to focus less on ai, but it would help a lot to build tools for clarifying thoughts. What if the editor had an abstractive summarizer button, that would help you write more concisely? a common complaint I hear about this site is that people write too much, and as a person who writes too much, I sure would love if I could get a machine's help deciding which words are unnecessary.

Ultimately, there is no path to rationality that does not walk through the structure of intelligent systems.

Replies from: ChristianKl↑ comment by ChristianKl · 2022-10-08T08:35:59.305Z · LW(p) · GW(p)

Could lesswrong have online MOOC participation groups? I'd certainly join one if it was designed to be adhd goofball friendly.

https://www.lesswrong.com/posts/ryx4WseB5bEm65DWB/six-months-of-rose [LW · GW] is essentially something like that.

comment by DirectedEvolution (AllAmericanBreakfast) · 2022-10-06T22:50:45.012Z · LW(p) · GW(p)

the content I'm most interested in is from people who've done a lot of serious thinking that's resulted in serious accomplishment.

Raemon, do you selectively read posts by people you know to be seriously accomplished? Or are you saying that you think that a background of serious accomplishment by the writer just makes their writing more likely to be worthwhile?

Replies from: Raemon↑ comment by Raemon · 2022-10-06T23:07:11.662Z · LW(p) · GW(p)

I think people who have accomplished notable stuff are more likely to have rationality lessons worth learning from.

Replies from: AllAmericanBreakfast↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2022-10-07T04:05:13.232Z · LW(p) · GW(p)

Does that mean you’d find it useful if people posted their CV or a list of their accomplishments with their LessWrong posts?

Replies from: AllAmericanBreakfast↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2022-10-07T19:12:58.582Z · LW(p) · GW(p)

I am having a hard time knowing what it means when someone disagrees with my question. It’s not meant rhetorically.

Replies from: ChristianKl↑ comment by ChristianKl · 2022-10-07T19:34:18.284Z · LW(p) · GW(p)

Without being the person who voted, I would expect that it means: "No, it's not useful".

Replies from: AllAmericanBreakfast↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2022-10-08T03:57:18.430Z · LW(p) · GW(p)