The Brain as a Universal Learning Machine

post by jacob_cannell · 2015-06-24T21:45:33.189Z · LW · GW · Legacy · 171 comments

This article presents an emerging architectural hypothesis of the brain as a biological implementation of a Universal Learning Machine. I present a rough but complete architectural view of how the brain works under the universal learning hypothesis. I also contrast this new viewpoint - which comes from computational neuroscience and machine learning - with the older evolved modularity hypothesis popular in evolutionary psychology and the heuristics and biases literature. These two conceptions of the brain lead to very different predictions for the likely route to AGI, the value of neuroscience, the expected differences between AGI and humans, and thus any consequent safety issues and dependent strategies.

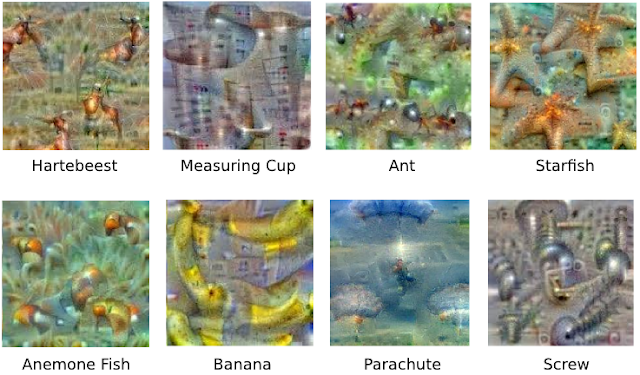

(The image above is from a recent mysterious post to r/machinelearning, probably from a Google project that generates art based on a visualization tool used to inspect the patterns learned by convolutional neural networks. I am especially fond of the weird figures riding the cart in the lower left.)

- Intro: Two viewpoints on the Mind

- Universal Learning Machines

- Historical Interlude

- Dynamic Rewiring

- Brain Architecture (the whole brain in one picture and a few pages of text)

- The Basal Ganglia

- Implications for AGI

- Conclusion

Intro: Two Viewpoints on the Mind

Few discoveries are more irritating than those that expose the pedigree of ideas.

-- Lord Acton (probably)

Less Wrong is a site devoted to refining the art of human rationality, where rationality is based on an idealized conceptualization of how minds should or could work. Less Wrong and its founding sequences draw heavily on the heuristics and biases literature in cognitive psychology and related work in evolutionary psychology. More specifically, the sequences build upon a specific cluster in the space of cognitive theories, which can be identified in particular with the highly influential "evolved modularity" perspective of Cosmides and Tooby.

From Wikipedia:

Evolutionary psychologists propose that the mind is made up of genetically influenced and domain-specific[3] mental algorithms or computational modules, designed to solve specific evolutionary problems of the past.[4]

From "Evolutionary Psychology and the Emotions":[5]

An evolutionary perspective leads one to view the mind as a crowded zoo of evolved, domain-specific programs. Each is functionally specialized for solving a different adaptive problem that arose during hominid evolutionary history, such as face recognition, foraging, mate choice, heart rate regulation, sleep management, or predator vigilance, and each is activated by a different set of cues from the environment.

If you imagine these general theories or perspectives on the brain/mind as points in theory space, the evolved modularity cluster posits that much of the machinery of human mental algorithms is largely innate. General learning - if it exists at all - exists only in specific modules; in most modules learning is relegated to the role of adapting existing algorithms and acquiring data; the impact of the information environment is de-emphasized. In this view the brain is a complex messy kludge of evolved mechanisms.

The universal learning hypothesis proposes that all significant mental algorithms are learned; nothing is innate except for the learning and reward machinery itself (which is somewhat complicated, involving a number of systems and mechanisms), the initial rough architecture (equivalent to a prior over mindspace), and a small library of simple innate circuits (analogous to the operating system layer in a computer). In this view the mind (software) is distinct from the brain (hardware). The mind is a complex software system built out of a general learning mechanism.

Additional indirect support comes from the rapid unexpected success of Deep Learning[7], which is entirely based on building AI systems using simple universal learning algorithms (such as Stochastic Gradient Descent or other various approximate Bayesian methods[8][9][10][11]) scaled up on fast parallel hardware (GPUs). Deep Learning techniques have quickly come to dominate most of the key AI benchmarks including vision[12], speech recognition[13][14], various natural language tasks, and now even ATARI [15] - proving that simple architectures (priors) combined with universal learning is a path (and perhaps the only viable path) to AGI. Moreover, the internal representations that develop in some deep learning systems are structurally and functionally similar to representations in analogous regions of biological cortex[16].

To paraphrase Feynman: to truly understand something you must build it.

In this article I am going to quickly introduce the abstract concept of a universal learning machine, present an overview of the brain's architecture as a specific type of universal learning machine, and finally I will conclude with some speculations on the implications for the race to AGI and AI safety issues in particular.

Universal Learning Machines

A universal learning machine is a simple and yet very powerful and general model for intelligent agents. It is an extension of a general computer - such as a Turing Machine - amplified with a universal learning algorithm. Do not view this as my 'big new theory' - it is simply an amalgamation of a set of related proposals by various researchers.

An initial untrained seed ULM can be defined by 1.) a prior over the space of models (or equivalently, programs), 2.) an initial utility function, and 3.) the universal learning machinery/algorithm. The machine is a real-time system that processes an input sensory/observation stream and produces an output motor/action stream to control the external world using a learned internal program that is the result of continuous self-optimization.

There is of course always room to smuggle in arbitrary innate functionality via the prior, but in general the prior is expected to be extremely small in bits in comparison to the learned model.

The key defining characteristic of a ULM is that it uses its universal learning algorithm for continuous recursive self-improvement with regards to the utility function (reward system). We can view this as second (and higher) order optimization: the ULM optimizes the external world (first order), and also optimizes its own internal optimization process (second order), and so on. Without loss of generality, any system capable of computing a large number of decision variables can also compute internal self-modification decisions.

Conceptually the learning machinery computes a probability distribution over program-space that is proportional to the expected utility distribution. At each timestep it receives a new sensory observation and expends some amount of computational energy to infer an updated (approximate) posterior distribution over its internal program-space: an approximate 'Bayesian' self-improvement.

The above description is intentionally vague in the right ways to cover the wide space of possible practical implementations and current uncertainty. You could view AIXI as a particular formalization of the above general principles, although it is also as dumb as a rock in any practical sense and has other potential theoretical problems. Although the general idea is simple enough to convey in the abstract, one should beware of concise formal descriptions: practical ULMs are too complex to reduce to a few lines of math.
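With that caveat in mind, a deliberately tiny toy can still make the "posterior over program-space" idea concrete. The sketch below is an illustration only, not a practical ULM: the four-entry `PROGRAMS` table, the noise level `P_CORRECT`, and the use of predictive accuracy as a stand-in for expected utility are all assumptions introduced here.

```python
from fractions import Fraction

# Hypothetical toy "program space": each program predicts the next bit
# of a binary sensory stream from the previous bit.
PROGRAMS = {
    "always_0": lambda prev: 0,
    "always_1": lambda prev: 1,
    "copy": lambda prev: prev,
    "flip": lambda prev: 1 - prev,
}

# Assumed noise model: a program's prediction is right with probability 9/10.
P_CORRECT = Fraction(9, 10)


def update(posterior, prev_bit, observed_bit):
    """One Bayesian update: reweight each program by how well it predicted."""
    weighted = {}
    for name, prob in posterior.items():
        predicted = PROGRAMS[name](prev_bit)
        likelihood = P_CORRECT if predicted == observed_bit else 1 - P_CORRECT
        weighted[name] = prob * likelihood
    total = sum(weighted.values())
    return {name: w / total for name, w in weighted.items()}


def learn(stream):
    """Start from a uniform prior and fold in one observation per timestep."""
    posterior = {name: Fraction(1, len(PROGRAMS)) for name in PROGRAMS}
    for prev_bit, observed_bit in zip(stream, stream[1:]):
        posterior = update(posterior, prev_bit, observed_bit)
    return posterior


posterior = learn([0, 1, 0, 1, 0, 1])
best = max(posterior, key=posterior.get)  # the program the machine now favors
```

On an alternating stream the probability mass concentrates on the `flip` program. A real system would face a program space far too large to enumerate, which is why the posterior in the text is described as approximate.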

A ULM inherits the general property of a Turing Machine that it can compute anything that is computable, given appropriate resources. However a ULM is also more powerful than a TM. A Turing Machine can only do what it is programmed to do. A ULM automatically programs itself.

If you were to open up an infant ULM - a machine with zero experience - you would mainly just see the small initial code for the learning machinery. The vast majority of the codestore starts out empty - initialized to noise. (In the brain the learning machinery is built in at the hardware level for maximal efficiency).

Theoretical Turing machines are all qualitatively alike, and are all qualitatively distinct from any non-universal machine. Likewise for ULMs. Theoretically a small ULM is just as general/expressive as a planet-sized ULM. In practice quantitative distinctions do matter, and can become effectively qualitative.

Just as the simplest possible Turing Machine is in fact quite simple, the simplest possible Universal Learning Machine is also probably quite simple. A couple of recent proposals for simple universal learning machines include the Neural Turing Machine[16] (from Google DeepMind), and Memory Networks[17]. The core of both approaches involves training an RNN to learn how to control a memory store through gating operations.

Historical Interlude

At this point you may be skeptical: how could the brain be anything like a universal learner? What about all of the known innate biases/errors in human cognition? I'll get to that soon, but let's start by thinking of a couple of general experiments to test the universal learning hypothesis vs the evolved modularity hypothesis.

In a world where the ULH is mostly correct, what do we expect to be different than in worlds where the EMH is mostly correct?

One type of evidence that would support the ULH is the demonstration of key structures in the brain along with associated wiring such that the brain can be shown to directly implement some version of a ULM architecture.

From the perspective of the EMH, it is not sufficient to demonstrate that there are things that brains cannot learn in practice - because those could simply be quantitative limitations. Demonstrating that an Intel 486 can't compute some known computable function in our lifetimes is not proof that the 486 is not a Turing Machine.

Nor is it sufficient to demonstrate that biases exist: a ULM is only 'rational' to the extent that its observational experience and learning machinery allows (and to the extent one has the correct theory of rationality). In fact, the existence of many (most?) biases intrinsically depends on the EMH - based on the implicit assumption that some cognitive algorithms are innate. If brains are mostly ULMs then most cognitive biases dissolve, or become learning biases - for if all cognitive algorithms are learned, then evidence for biases is evidence for cognitive algorithms that people haven't had sufficient time/energy/motivation to learn. (This does not imply that intrinsic limitations/biases do not exist or that the study of cognitive biases is a waste of time; rather the ULH implies that educational history is what matters most)

The genome can only specify a limited amount of information. The question is then how much of our advanced cognitive machinery for things like facial recognition, motor planning, language, logic, planning, etc. is innate vs learned. From evolution's perspective there is a huge advantage to preloading the brain with innate algorithms so long as said algorithms have high expected utility across the expected domain landscape.

On the other hand, evolution is also highly constrained in a bit coding sense: every extra bit of code costs additional energy for the vast number of cellular replication events across the lifetime of the organism. Low code complexity solutions also happen to be exponentially easier to find. These considerations seem to strongly favor the ULH but they are difficult to quantify.

Neuroscientists have long known that the brain is divided into physical and functional modules. These modular subdivisions were discovered a century ago by Brodmann. Every time neuroscientists opened up a new brain, they saw the same old cortical modules in the same old places doing the same old things. The specific layout of course varied from species to species, but the variations between individuals are minuscule. This evidence seems to strongly favor the EMH.

Throughout most of the 90's up into the 2000's, computational neuroscience models and AI were heavily influenced by - and, unsurprisingly, largely supported - the EMH. Neural nets and backprop had of course been known since the 1980's and worked on small problems[18], but at the time they didn't scale well - and there was no theory to suggest they ever would.

Theory of the time also suggested local minima would always be a problem (now we understand that local minima are not really the main problem[19], and modern stochastic gradient descent methods combined with highly overcomplete models and stochastic regularization[20] are effectively global optimizers that can often handle obstacles such as local minima and saddle points[21]).

The other related historical criticism rests on the lack of biological plausibility for backprop style gradient descent. (There is as of yet little consensus on how the brain implements the equivalent machinery, but target propagation is one of the more promising recent proposals[22][23].)

Many AI researchers are naturally interested in the brain, and we can see the influence of the EMH in much of the work before the deep learning era. HMAX is a hierarchical vision system developed in the late 90's by Poggio et al as a working model of biological vision[24]. It is based on a preconfigured hierarchy of modules, each of which has its own mix of innate features such as Gabor edge detectors along with a little bit of local learning. It implements the general idea that complex algorithms/features are innate - the result of evolutionary global optimization - while neural networks (incapable of global optimization) use Hebbian local learning to fill in details of the design.

Dynamic Rewiring

In a groundbreaking study from 2000 published in Nature, Sharma et al successfully rewired ferret retinal pathways to project into the auditory cortex instead of the visual cortex.[25] The result: auditory cortex can become visual cortex, just by receiving visual data! Not only does the rewired auditory cortex develop the specific Gabor features characteristic of visual cortex; the rewired cortex also becomes functionally visual.[26] True, it isn't quite as effective as normal visual cortex, but that could also be an artifact of crude and invasive brain rewiring surgery.

The ferret study was popularized by the book On Intelligence by Hawkins in 2004 as evidence for a single cortical learning algorithm. This helped percolate the evidence into the wider AI community, and thus probably helped in setting up the stage for the deep learning movement of today. The modern view of the cortex is that of a mostly uniform set of general purpose modules which slowly become recruited for specific tasks and filled with domain specific 'code' as a result of the learning (self optimization) process.

The next key set of evidence comes from studies of atypical human brains with novel extrasensory powers. In 2009 Vuillerme et al showed that the brain could automatically learn to process sensory feedback rendered onto the tongue[27]. This research was developed into a complete device that allows blind people to develop primitive tongue based vision.

In the modern era some blind humans have apparently acquired the ability to perform echolocation (sonar), similar to cetaceans. In 2011 Thaler et al used MRI and PET scans to show that human echolocators use diverse non-auditory brain regions to process echo clicks, predominantly relying on re-purposed 'visual' cortex.[27]

The echolocation study in particular helps establish the case that the brain is actually doing global, highly nonlocal optimization - far beyond simple Hebbian dynamics. Echolocation is an active sensing strategy that requires very low latency processing, involving complex timed coordination between a number of motor and sensory circuits - all of which must be learned.

Somehow the brain is dynamically learning how to use and assemble cortical modules to implement mental algorithms: everyday tasks such as visual counting, comparisons of images or sounds, reading, etc - all are tasks which require simple mental programs that can shuffle processed data between modules (some or any of which can also function as short term memory buffers).

To explain this data, we should be on the lookout for a system in the brain that can learn to control the cortex - a general system that dynamically routes data between different brain modules to solve domain specific tasks.

But first let's take a step back and start with a high level architectural view of the entire brain to put everything in perspective.

Brain Architecture

Below is a circuit diagram for the whole brain. The main subsystems work together and are best understood together. You can probably get a good, high-level, extremely coarse understanding of the entire brain in less than one hour.

(there are a couple of circuit diagrams of the whole brain on the web, but this is the best. From this site.)

The human brain has ~100 billion neurons and ~100 trillion synapses, but ultimately it evolved from the bottom up - from organisms with just hundreds of neurons, like the tiny brain of C. elegans.

We know that evolution is code complexity constrained: much of the genome codes for cellular metabolism, all the other organs, and so on. For the brain, most of its bit budget needs to be spent on all the complex neuron, synapse, and even neurotransmitter level machinery - the low level hardware foundation.

For a tiny brain with 1000 neurons or less, the genome can directly specify each connection. As you scale up to larger brains, evolution needs to create vastly more circuitry while still using only about the same amount of code/bits. So instead of specifying connectivity at the neuron layer, the genome codes connectivity at the module layer. Each module can be built from simple procedural/fractal expansion of progenitor cells.

So the size of a module has little to nothing to do with its innate complexity. The cortical modules are huge - V1 alone contains 200 million neurons in a human - but there is no reason to suspect that V1 has greater initial code complexity than any other brain module. Big modules are built out of simple procedural tiling patterns.
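The point that module size is decoupled from code complexity can be illustrated with a few lines of code. This is a minimal sketch under loud assumptions: the function name `tile_connections`, the square grid, and the nearest-neighbor wiring rule are hypothetical stand-ins for whatever developmental process actually builds a cortical sheet.

```python
def tile_connections(width, height, radius=1):
    """Yield (source, target) pairs linking each cell to neighbors within `radius`.

    The rule itself is a handful of lines; only the grid size changes.
    """
    for x in range(width):
        for y in range(height):
            for dx in range(-radius, radius + 1):
                for dy in range(-radius, radius + 1):
                    nx, ny = x + dx, y + dy
                    is_self = (dx, dy) == (0, 0)
                    in_bounds = 0 <= nx < width and 0 <= ny < height
                    if not is_self and in_bounds:
                        yield (x, y), (nx, ny)


# The same rule applied to a tiny sheet and to a much larger one.
small = sum(1 for _ in tile_connections(4, 4))
large = sum(1 for _ in tile_connections(64, 64))
```

The larger sheet ends up with hundreds of times more connections than the small one, yet the "genome" (the function body plus two integers) is the same size in both cases.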

Very roughly the brain's main modules can be divided into six subsystems (there are numerous smaller subsystems):

- The neocortex: the brain's primary computational workhorse (blue/purple modules at the top of the diagram). Kind of like a bunch of general purpose FPGA coprocessors.

- The cerebellum: another set of coprocessors with a simpler feedforward architecture. Specializes more in motor functionality.

- The thalamus: the orangish modules below the cortex. Kind of like a relay/routing bus.

- The hippocampal complex: the apex of the cortex, and something like the brain's database.

- The amygdala and limbic reward system: these modules specialize in something like the value function.

- The Basal Ganglia (green modules): the central control system, similar to a CPU.

In the interest of space/time I will focus primarily on the Basal Ganglia and will just touch on the other subsystems very briefly and provide some links to further reading.

The neocortex has been studied extensively and is the main focus of several popular books on the brain. Each neocortical module is a 2D array of neurons (technically 2.5D with a depth of about a few dozen neurons arranged in about 5 to 6 layers).

Each cortical module is something like a general purpose RNN (recurrent neural network) with 2D local connectivity. Each neuron connects to its neighbors in the 2D array. Each module also has nonlocal connections to other brain subsystems and these connections follow the same local 2D connectivity pattern, in some cases with some simple affine transformations. Convolutional neural networks use the same general architecture (but they are typically not recurrent).

Cortical modules - like artificial RNNs - are general purpose and can be trained to perform various tasks. There are a huge number of models of the cortex, varying across the tradeoff between biological realism and practical functionality.

Perhaps surprisingly, any of a wide variety of learning algorithms can reproduce cortical connectivity and features when trained on appropriate sensory data[27]. This is a computational proof of the one-learning-algorithm hypothesis; furthermore it illustrates the general idea that data determines functional structure in any general learning system.

There is evidence that cortical modules learn automatically (unsupervised) to some degree, and there is also some evidence that cortical modules can be trained to relearn data from other brain subsystems - namely the hippocampal complex. The dark knowledge distillation technique in ANNs[28][29] is a potential natural analog/model of hippocampus -> cortex knowledge transfer.
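For readers unfamiliar with distillation, the core trick on the ANN side is to train a student network on the teacher's temperature-softened output distribution rather than on hard labels. The sketch below shows only that soft-target computation; the logits and the temperature of 4.0 are made-up illustrative values, and nothing here is a claim about how hippocampus -> cortex transfer is actually implemented.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax with a temperature; higher temperature flattens the distribution."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(target, predicted):
    """Cross-entropy of `predicted` measured against the `target` distribution."""
    return -sum(t * math.log(p) for t, p in zip(target, predicted))


# Hypothetical teacher and student logits over three classes.
teacher_logits = [6.0, 2.0, 1.0]
student_logits = [1.0, 0.5, 0.2]

hard = softmax(teacher_logits, temperature=1.0)  # nearly one-hot
soft = softmax(teacher_logits, temperature=4.0)  # exposes the "dark knowledge"

# The student is trained to match the softened teacher distribution.
loss = cross_entropy(soft, softmax(student_logits, temperature=4.0))
```

Raising the temperature gives the non-maximal classes more probability mass, so the student sees how the teacher ranks the wrong answers as well as which answer it prefers.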

Module connections are bidirectional, and feedback connections (from high level modules to low level) outnumber forward connections. We can speculate that something like target propagation can also be used to guide or constrain the development of cortical maps.

The hippocampal complex is the root or top level of the sensory/motor hierarchy. This short youtube video gives a good seven minute overview of the HC. It is like a spatiotemporal database. It receives compressed scene descriptor streams from the sensory cortices, it stores this information in medium-term memory, and it supports later auto-associative recall of these memories. Imagination and memory recall seem to be basically the same.

The 'scene descriptors' take the sensible form of things like 3D position and camera orientation, as encoded in place, grid, and head direction cells. This is basically the logical result of compressing the sensory stream, comparable to the networking data stream in a multiplayer video game.

Imagination/recall is basically just the reverse of the forward sensory coding path - in reverse mode a compact scene descriptor is expanded into a full imagined scene. Imagined/remembered scenes activate the same cortical subnetworks that originally formed the memory (or would have if the memory was real, in the case of imagined recall).

The amygdala and associated limbic reward modules are rather complex, but look something like the brain's version of the value function for reinforcement learning. These modules are interesting because they clearly rely on learning, but clearly the brain must specify an initial version of the value/utility function that has some minimal complexity.

As an example, consider taste. Infants are born with basic taste detectors and a very simple initial value function for taste. Over time the brain receives feedback from digestion and various estimators of general mood/health, and it uses this to refine the initial taste value function. Eventually the adult sense of taste becomes considerably more complex. Acquired tastes for bitter substances - such as coffee and beer - are good examples.
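The taste example can be sketched as a simple value update. This is a toy under stated assumptions: the starting values in `innate_value`, the `outcome` signal, and the delta-rule update with a fixed learning rate are all hypothetical choices for illustration, not a model of the actual reward circuitry.

```python
# Hypothetical innate starting values: sweet is good, bitter is bad.
innate_value = {"sweet": 1.0, "bitter": -1.0}


def refine(values, taste, outcome, learning_rate=0.2):
    """Nudge the stored value of `taste` toward the experienced outcome."""
    updated = dict(values)  # copy so the innate table is left untouched
    updated[taste] += learning_rate * (outcome - updated[taste])
    return updated


# Repeated pleasant after-effects of a bitter drink (assumed outcome of 0.8).
values = innate_value
for _ in range(20):
    values = refine(values, "bitter", outcome=0.8)
```

After enough positive feedback the learned value for bitter ends up well above its innate starting point, while the untouched sweet entry stays where it began.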

The amygdala appears to do something similar for emotional learning. For example, infants are born with a simple version of a fear response, which is later refined through reinforcement learning. The amygdala sits on the end of the hippocampus, and it is also involved heavily in memory processing.

See also these two videos from khanacademy: one on the limbic system and amygdala (10 mins), and another on the midbrain reward system (8 mins)

The Basal Ganglia

The Basal Ganglia is a weird looking complex of structures located in the center of the brain. It is a conserved structure found in all vertebrates, which suggests a core functionality. The BG is proximal to and connects heavily with the midbrain reward/limbic systems. It also connects to the brain's various modules in the cortex/hippocampus, thalamus and the cerebellum . . . basically everything.

All of these connections form recurrent loops between associated compartmental modules in each structure: thalamocortical/hippocampal-cerebellar-basal_ganglial loops.

Just as the cortex and hippocampus are subdivided into modules, there are corresponding modular compartments in the thalamus, basal ganglia, and the cerebellum. The set of modules/compartments in each main structure are all highly interconnected with their correspondents across structures, leading to the concept of distributed processing modules.

Each DPM forms a recurrent loop across brain structures (the local networks in the cortex, BG, and thalamus are also locally recurrent, whereas those in the cerebellum are not). These recurrent loops are mostly separate, but each sub-structure also provides different opportunities for inter-loop connections.

The BG appears to be involved in essentially all higher cognitive functions. Its core functionality is action selection via subnetwork switching. In essence action selection is the core problem of intelligence, and it is also general enough to function as the building block of all higher functionality. A system that can select between motor actions can also select between tasks or subgoals. More generally, low level action selection can easily form the basis of a Turing Machine via selective routing: deciding where to route the output of thalamocortical-cerebellar modules (some of which may specialize in short term memory as in the prefrontal cortex, although all cortical modules have some short term memory capability).

There are now a number of computational models for the Basal Ganglia-Cortical system that demonstrate possible biologically plausible implementations of the general theory[28][29]; integration with the hippocampal complex leads to larger-scale systems which aim to model/explain most of higher cognition in terms of sequential mental programs[30] (of course fully testing any such models awaits sufficient computational power to run very large-scale neural nets).

For an extremely oversimplified model of the BG as a dynamic router, consider an array of N distributed modules controlled by the BG system. The BG control network expands these N inputs into an NxN matrix. There are N^2 potential intermodular connections, each of which can be individually controlled. The control layer reads a compressed, downsampled version of the module's hidden units as its main input, and is also recurrent. Each output node in the BG has a multiplicative gating effect which selectively enables/disables an individual intermodular connection. If the control layer is naively fully connected, this would require (N^2)^2 connections, which is only feasible for N ~ 100 modules, but sparse connectivity can substantially reduce those numbers.

It is unclear (to me), whether the BG actually implements NxN style routing as described above, or something more like 1xN or Nx1 routing, but there is general agreement that it implements cortical routing.

Of course in actuality the BG architecture is considerably more complex, as it also must implement reinforcement learning, and the intermodular connectivity map itself is also probably quite sparse/compressed (the BG may not control all of cortex, certainly not at a uniform resolution, and many controlled modules may have a very limited number of allowed routing decisions). Nonetheless, the simple multiplicative gating model illustrates the core idea.
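A minimal sketch of the multiplicative gating model follows, assuming hand-set gates rather than a learned, recurrent control layer. The names `route` and `naive_control_connections` and the three-module example are hypothetical, introduced only to make the routing arithmetic concrete.

```python
def route(outputs, gates):
    """Return each module's input as a gate-weighted sum of all module outputs.

    `gates[src][dst]` multiplies the connection from module `src` to module `dst`;
    0.0 closes the connection and 1.0 opens it fully.
    """
    n = len(outputs)
    width = len(outputs[0])
    inputs = []
    for dst in range(n):
        mixed = [0.0] * width
        for src in range(n):
            gate = gates[src][dst]
            for k in range(width):
                mixed[k] += gate * outputs[src][k]
        inputs.append(mixed)
    return inputs


def naive_control_connections(n):
    """Connections in a fully connected control layer over all N^2 gates."""
    return (n ** 2) ** 2


# Three modules with two-element output vectors (illustrative values).
outputs = [[1.0, 2.0], [10.0, 20.0], [100.0, 200.0]]
gates = [
    [0.0, 1.0, 0.0],  # module 0 feeds module 1
    [0.0, 0.0, 1.0],  # module 1 feeds module 2
    [0.0, 0.0, 0.0],  # module 2 feeds nothing
]
inputs = route(outputs, gates)
```

With these gates the data flows 0 -> 1 -> 2, and changing the gate matrix reroutes it without touching the modules themselves. For N = 100 the naive count is (100^2)^2 = 10^8 control connections.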

This same multiplicative gating mechanism is the core principle behind the highly successful LSTM (Long Short-Term Memory)[30] units that are used in various deep learning systems. The simple version of the BG's gating mechanism can be considered a wider parallel and hierarchical extension of the basic LSTM architecture, where you have a parallel array of N memory cells instead of 1, and each memory cell is a large vector instead of a single scalar value.
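For comparison, here is one step of a single scalar LSTM cell in the now-common formulation with a forget gate. It is a sketch, not any particular library's implementation: the weight layout in `w` and the hand-picked `hold` weights are assumptions chosen so that the gating behavior is easy to see.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, w):
    """One step of a scalar LSTM cell; `w` maps gate name -> (w_x, w_h, bias)."""

    def pre(name):
        w_x, w_h, bias = w[name]
        return w_x * x + w_h * h_prev + bias

    i = sigmoid(pre("input"))        # how much new content to write
    f = sigmoid(pre("forget"))       # how much old memory to keep
    o = sigmoid(pre("output"))       # how much memory to expose
    g = math.tanh(pre("candidate"))  # the new content itself
    c = f * c_prev + i * g           # multiplicative gating of the memory cell
    h = o * math.tanh(c)
    return h, c


# Hand-set weights: input gate shut, forget and output gates open.
hold = {
    "input": (0.0, 0.0, -20.0),
    "forget": (0.0, 0.0, 20.0),
    "output": (0.0, 0.0, 20.0),
    "candidate": (1.0, 0.0, 0.0),
}
h, c = lstm_step(x=5.0, h_prev=0.0, c_prev=0.7, w=hold)
```

With the input gate closed and the forget gate open, the stored value survives the step essentially unchanged regardless of the input, which is the memory-holding behavior the routing analogy relies on.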

The main advantage of the BG architecture is parallel hierarchical approximate control: it allows a large number of hierarchical control loops to update and influence each other in parallel. It also reduces the huge complexity of general routing across the full cortex down into a much smaller-scale, more manageable routing challenge.

Implications for AGI

These two conceptions of the brain - the universal learning machine hypothesis and the evolved modularity hypothesis - lead to very different predictions for the likely route to AGI, the expected differences between AGI and humans, and thus any consequent safety issues and strategies.

In the extreme case imagine that the brain is a pure ULM, such that the genetic prior information is close to zero or is simply unimportant. In this case it is vastly more likely that successful AGI will be built around designs very similar to the brain, as the ULM architecture in general is the natural ideal, vs the alternative of having to hand engineer all of the AI's various cognitive mechanisms.

In reality learning is computationally hard, and any practical general learning system depends on good priors to constrain the learning process (essentially taking advantage of previous knowledge/learning). The recent and rapid success of deep learning is strong evidence for how much prior information is ideal: just a little. The prior in deep learning systems takes the form of a compact, small set of hyperparameters that control the learning process and specify the overall network architecture (an extremely compressed prior over the network topology and thus the program space).

The ULH suggests that most everything that defines the human mind is cognitive software rather than hardware: the adult mind (in terms of algorithmic information) is 99.999% a cultural/memetic construct. Obviously there are some important exceptions: infants are born with some functional but very primitive sensory and motor processing 'code'. Most of the genome's complexity is used to specify the learning machinery, and the associated reward circuitry. Infant emotions appear to simplify down to a single axis of happy/sad; differentiation into the more subtle vector space of adult emotions does not occur until later in development.

If the mind is software, and if the brain's learning architecture is already universal, then AGI could - by default - end up with a similar distribution over mindspace, simply because it will be built out of similar general purpose learning algorithms running over the same general dataset. We already see evidence for this trend in the high functional similarity between the features learned by some machine learning systems and those found in the cortex.

Of course an AGI will have little need for some specific evolutionary features: emotions that are subconsciously broadcast via the facial muscles are a quirk unnecessary for an AGI - but that is a rather specific detail.

The key takeaway is that the data is what matters - and in the end it is all that matters. Train a universal learner on image data and it just becomes a visual system. Train it on speech data and it becomes a speech recognizer. Train it on ATARI and it becomes a little gamer agent.

Train a universal learner on the real world in something like a human body and you get something like the human mind. Put a ULM in a dolphin's body and echolocation is the natural primary sense, put a ULM in a human body with broken visual wiring and you can also get echolocation.

Control over training is the most natural and straightforward way to control the outcome.

To create a superhuman AI driver, you 'just' need to create a realistic VR driving sim and then train a ULM in that world (better training and the simple power of selective copying lead to superhuman driving capability).

So to create benevolent AGI, we should think about how to create virtual worlds with the right structure, how to educate minds in those worlds, and how to safely evaluate the results.

One key idea - which I proposed five years ago - is that the AI should not know it is in a sim.

New AI designs (world design + architectural priors + training/education system) should be tested first in the safest virtual worlds: which in simplification are simply low tech worlds without computer technology. Design combinations that work well in safe low-tech sandboxes are promoted to less safe high-tech VR worlds, and then finally the real world.

A key principle of a secure code sandbox is that the code you are testing should not be aware that it is in a sandbox. If you violate this principle then you have already failed. Yudkowsky's AI box thought experiment assumes the violation of the sandbox security principle a priori and thus is something of a distraction. (The virtual sandbox idea was most likely discussed elsewhere previously, as Yudkowsky indirectly critiques a strawman version of the idea via this sci-fi story.)

The virtual sandbox approach also combines nicely with invisible thought monitors, where the AI's thoughts are automatically dumped to searchable logs.

Of course we will still need a solution to the value learning problem. The natural route with brain-inspired AI is to learn the key ideas behind value acquisition in humans to help derive an improved version of something like inverse reinforcement learning and/or imitation learning[31] - an interesting topic for another day.

Conclusion

Ray Kurzweil has been predicting for decades that AGI will be built by reverse engineering the brain, and this particular prediction is not especially unique - this has been a popular position for quite a while. My own investigation of neuroscience and machine learning led me to a similar conclusion some time ago.

The recent progress in deep learning, combined with the emerging modern understanding of the brain, provides further evidence that AGI could arrive around the time when we can build and train ANNs with similar computational power as measured very roughly in terms of neuron/synapse counts. In general the evidence from the last four years or so supports Hanson's viewpoint from the Foom debate. More specifically, his general conclusion:

Future superintelligences will exist, but their vast and broad mental capacities will come mainly from vast mental content and computational resources. By comparison, their general architectural innovations will be minor additions.

The ULH supports this conclusion.

Current ANN engines can already train and run models with around 10 million neurons and 10 billion (compressed/shared) synapses on a single GPU, which suggests that the goal could soon be within the reach of a large organization. Furthermore, Moore's Law for GPUs still has some steam left, and software advances are currently improving simulation performance at a faster rate than hardware. These trends imply that Anthropomorphic/Neuromorphic AGI could be surprisingly close, and may appear suddenly.
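A back-of-envelope version of that scaling argument can be sketched as follows. The per-GPU figures are the ones quoted above; the brain figures are commonly cited order-of-magnitude estimates and are an assumption of this sketch, as is the idea that capacity scales linearly across GPUs (which ignores communication costs and the compressed/shared caveat).

```python
# Figures from the text: what a single GPU could train and run.
GPU_NEURONS = 10**7       # "around 10 million neurons"
GPU_SYNAPSES = 10**10     # "10 billion (compressed/shared) synapses"

# ASSUMPTION (not from the text): order-of-magnitude human brain estimates.
BRAIN_NEURONS = 10**11
BRAIN_SYNAPSES = 10**14


def gpus_needed(brain_count: int, per_gpu: int) -> int:
    """Ceiling division: GPUs required if capacity scaled perfectly linearly."""
    return -(-brain_count // per_gpu)


gpus_by_neurons = gpus_needed(BRAIN_NEURONS, GPU_NEURONS)
gpus_by_synapses = gpus_needed(BRAIN_SYNAPSES, GPU_SYNAPSES)
print(gpus_by_neurons, gpus_by_synapses)
```

Under these assumptions both ratios come out to about ten thousand GPUs, which is the scale of cluster a large organization could plausibly assemble.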

What kind of leverage can we exert on a short timescale?

171 comments

Comments sorted by top scores.

comment by V_V · 2015-06-23T17:55:15.080Z · LW(p) · GW(p)

All of this is interesting, but it seems to me that you did not make a strong case for the brain using a universal learning machine as its main system.

Specifically, I think you fail to address the evidence for evolved modularity:

The brain uses spatially specialized regions for different cognitive tasks.

This specialization pattern is mostly consistent across different humans and even across different species.

Damage to or malformation of some brain regions can cause specific forms of disability (e.g. face blindness). Sometimes the disability can be overcome but often not completely.

In various mammals, infants are capable of complex behavior straight out of the womb. Human infants only exhibit very simple behaviors and require many years to reach full cognitive maturity; therefore the human brain relies more on learning than the brain of other mammals, but the basic architecture is the same, thus this is a difference of degree, not kind.

It seems more likely that if there is a general-purpose "universal" learning system in the human brain then it is used as an inefficient fall-back mechanism when the specialized modules fail, not as the core mechanism that handles most of the cognitive tasks.

I'm also wary about using the recent successes of deep learning to draw inferences about how the brain works.

Beware of the "ELIZA effect": due to our over-active agency detection ability, we tend to anthropomorphize the behavior of even very simple AI systems.

There seems to be a trend in AI where for any technique that is currently hot there are people who say: "This is how the brain works. We don't know all the details, but studies X, Y and Z clearly point in this direction." After a few years and maybe an AI (mini)winter the brain seems to work in another way...

Specifically on deep learning:

For all the speculation, there is still no clear evidence that the brain uses anything similar to backpropagation.

Some of the most successful deep learning approaches, such as modern convnets for computer vision, rely on quite un-biological features such as weight sharing and rectified linear units.

"Deep learning" is a quite vague term anyway, it does not refer to any single algorithm or architecture. In fact, there are so many architectural variants and hyper-parameters that need to be adapted to each specific task that optimizing them can be considered a non-trivial learning problem on its own.

Perhaps most importantly, deep learning methods generally work in supervised learning settings and they have quite weak priors: they require a dataset as big as ImageNet to yield good image recognition performances (still with some characteristic error patterns), or a parallel corpus of millions of sentence pairs to yield sub-human level machine translation quality, or days of continuous simulated gameplay on the ATARI 2600 emulator to obtain good scores (super-human for some games, sub-human for others). Clearly humans are able to effectively learn from a much smaller amount of evidence, indicating stronger priors and the ability to exploit minimal supervision.

Therefore I would say that deep learning methods, while certainly interesting from an engineering perspective, are probably not very much relevant to the understanding of the brain, at least given the current state of the evidence.

Replies from: jacob_cannell↑ comment by jacob_cannell · 2015-06-23T20:07:50.839Z · LW(p) · GW(p)

Thanks, I was waiting for at least one somewhat critical reply :)

Specifically, I think you fail to address the evidence for evolved modularity:

- The brain uses spatially specialized regions for different cognitive tasks.

- This specialization pattern is mostly consistent across different humans and even across different species.

The ferret rewiring experiments, the tongue based vision stuff, the visual regions learning to perform echolocation computations in the blind, this evidence together is decisive against the evolved modularity hypothesis as I've defined that hypothesis, at least for the cortex. The EMH posits that the specific cortical regions rely on complex innate circuitry specialized for specific tasks. The evidence disproves that hypothesis.

Damage to or malformation of some brain regions can cause specific forms of disability (e.g. face blindness). Sometimes the disability can be overcome but often not completely.

Sure. Once you have software loaded/learned into hardware, damage to the hardware is damage to the software. This doesn't differentiate the two hypotheses.

In various mammals, infants are capable of complex behavior straight out of the womb. Human infants only exhibit very simple behaviors and require many years to reach full cognitive maturity; therefore the human brain relies more on learning than the brain of other mammals, but the basic architecture is the same, thus this is a difference of degree, not kind.

Yes - and I described what is known about that basic architecture. The extent to which a particular brain relies on learning vs innate behaviour depends on various tradeoffs such as organism lifetime and brain size. Small brained and short-living animals have much less to gain from learning (less time to acquire data, less hardware power), so they rely more on innate circuitry, much of which is encoded in the oldbrain and the brainstem. This is all very much evidence for the ULH. The generic learning structures - the cortex and cerebellum - generally grow in size with larger organisms and longer lifespans.

This has also been tested via decortication experiments and confirms the general ULH - rabbits rely much less on their cortex for motor behavior, larger primates rely on it almost exclusively, cats and dogs are somewhere in between, etc.

This evidence shows that the cortex is general purpose, and acquires complex circuitry through learning. Recent machine learning systems provide further evidence in the form of - this is how it could work.

For all the speculation, there is still no clear evidence that the brain uses anything similar to backpropagation.

As I mentioned in the article, backprop is not really biologically plausible. Targetprop is, and there are good reasons to suspect the brain is using something like targetprop - as that theory is the latest result in a long line of work attempting to understand how the brain could be doing long range learning. Investigating and testing the targetprop theory and really confirming it could take a while - even decades. On the other hand, if targetprop or some variant is proven to work in a brain-like AGI, that is something of a working theory that could then help accelerate neuroscience confirmation.

There seems to be a trend in AI where for any technique that is currently hot there are people who say: "This is how the brain works. We don't know all the details, but studies X, Y and Z clearly point in this direction." After a few years and maybe an AI (mini)winter the brain seems to work in another way...

I did not say deep learning is "how the brain works". I said instead the brain is - roughly - a specific biological implementation of a ULM, which itself is a very general model which also will include any practical AGIs.

I said that DL helps indirectly confirm the ULH of the brain, specifically by showing how the complex task specific circuitry of the cortex could arise through a simple universal learning algorithm.

Computational modeling is key - if you can't build something, you don't understand it. To the extent that any AI model can functionally replicate specific brain circuits, it is useful to neuroscience. Period. Far more useful than psychological theorizing not grounded in circuit reality. So computational neuroscience and deep learning (which really is just the neuroscience inspired branch of machine learning) naturally have deep connections.

Some of the most successful deep learning approaches, such as modern convnets for computer vision, rely on quite un-biological features such as weight sharing and rectified linear units

Biological plausibility was one of the heavily discussed aspects of RELUs.

From the abstract:

"While logistic sigmoid neurons are more biologically plausible than hyperbolic tangent neurons, the latter work better for training multi-layer neural networks. This paper shows that rectifying neurons are an even better model of biological neurons and yield equal or better performance than hyperbolic tangent networks in spite of . . "

Weight sharing is unbiological: true. It is also an important advantage that von Neumann (time-multiplexed) systems have over biological (non-multiplexed) ones. The neuromorphic hardware approaches largely cannot handle weight sharing. Of course convnets still work without weight sharing - it just may require more data and/or better training and regularization. It is interesting to speculate how the brain deals with that, as is comparing the details of convnet learning capability vs bio-vision. I don't have time to get into that at the moment, but I did link to at least one article comparing convnets to bio vision in the OP.

"Deep learning" is a quite vague term anyway,

Sure - so just taboo it then. When I use the term "deep learning", it means something like "the branch of machine learning which is more related to neuroscience" (while still focused on end results rather than emulation).

Perhaps most importantly, deep learning methods generally work in supervised learning settings and they have quite weak priors: they require a dataset as big as ImageNet to yield good image recognition performances

Comparing two learning systems trained on completely different datasets with very different objective functions is complicated.

In general though, CNNs are a good model of fast feedforward vision - the first 150ms of the ventral stream. In that domain they are comparable to biovision, with the important caveat that biovision computes a larger and richer output parameter map than most any CNNs. Most CNNs (there are many different types) are more narrowly focused, but also probably learn faster because of advantages like weight sharing. The amount of data required to train the CNN up to superhuman performance on narrow tasks is comparable to or less than that required to train a human visual system up to high performance (but again the cortex is doing something more like transfer learning, which is harder).

Past 150 ms or so and humans start making multiple saccades and also start to integrate information from a larger number of brain regions, including frontal and temporal cortical regions. At that point the two systems aren't even comparable, humans are using more complex 'mental programs' over multiple saccades to make visual judgements.

Of course, eventually we will have AGI systems that also integrate those capabilities.

days of continuous simulated gameplay on the ATARI 2600 emulator to obtain good scores

That's actually extremely impressive - superhuman learning speed.

Therefore I would say that deep learning methods, while certainly interesting from an engineering perspective, are probably not very much relevant to the understanding of the brain, at least given the current state of the evidence.

In that case, I would say you may want to read up more on the field. If you haven't yet, check out the original sparse coding paper (over 3000 citations), to get an idea of how crucial new computational models have been for advancing our understanding of cortex.

Replies from: V_V, advael, nshepperd↑ comment by V_V · 2015-06-25T15:04:41.865Z · LW(p) · GW(p)

The ferret rewiring experiments, the tongue based vision stuff, the visual regions learning to perform echolocation computations in the blind, this evidence together is decisive against the evolved modularity hypothesis as I've defined that hypothesis, at least for the cortex.

But none of these works as well as using the original task-specific regions, and anyway in all these experiments the original task-specific regions are still present and functional, therefore maybe the brain can partially use these regions by learning how to route the signals to them.

Sure. Once you have software loaded/learned into hardware, damage to the hardware is damage to the software. This doesn't differentiate the two hypotheses.

But then why doesn't universal learning just co-opt some other brain region to perform the task of the damaged one? In the cases where there is a congenital malformation, that makes the usual task-specific region missing or dysfunctional, why isn't the task allocated to some other region?

And anyway why is the specialization pattern consistent across individuals and even species? If you train an artificial neural network multiple times on the same dataset from different random initializations each time the hidden nodes will specialize in a different way: at least ANNs have permutation symmetry between nodes in the same layer, and as long as nodes operate in the linear region of the activation function, there is also redundancy between layers. This means that many sets of weights specify the same or similar function, and the training process chooses one of them randomly depending on the initialization (and minibatch sampling, dropout, etc.).
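The permutation symmetry mentioned here can be checked directly. The following is a minimal sketch with a tiny one-hidden-layer network and random weights (sizes, seed, and the chosen permutation are arbitrary assumptions): relabeling the hidden units, and permuting the next layer's weights to match, leaves the computed function unchanged.

```python
import math
import random


def mlp(x, W1, b1, W2, b2):
    """One-hidden-layer network: tanh hidden units, linear output."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(W1, b1)]
    return [sum(w * h for w, h in zip(row, hidden)) + b
            for row, b in zip(W2, b2)]


rng = random.Random(0)
n_in, n_hidden, n_out = 3, 4, 2
W1 = [[rng.gauss(0, 1) for _ in range(n_in)] for _ in range(n_hidden)]
b1 = [rng.gauss(0, 1) for _ in range(n_hidden)]
W2 = [[rng.gauss(0, 1) for _ in range(n_hidden)] for _ in range(n_out)]
b2 = [rng.gauss(0, 1) for _ in range(n_out)]

perm = [2, 0, 3, 1]                              # relabel the hidden units
W1_p = [W1[j] for j in perm]                     # permute first-layer rows
b1_p = [b1[j] for j in perm]
W2_p = [[row[j] for j in perm] for row in W2]    # permute second-layer columns

x = [0.5, -1.0, 2.0]
y = mlp(x, W1, b1, W2, b2)
y_p = mlp(x, W1_p, b1_p, W2_p, b2)

# Equal up to floating-point summation order.
same_function = all(math.isclose(a, b, abs_tol=1e-12) for a, b in zip(y, y_p))
print(same_function)
```

The two weight sets differ, yet they implement the same input-output mapping, so nothing in the training objective prefers one assignment of hidden units over the other.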

If, as you claim, the basal ganglia and the cortex in the brain make up a sort of cpu-memory system, then there should be substantial permutation symmetry. After all, in a computer you can swap blocks or pages of memory around and as long as pointers (or page tables) are updated the behavior does not change, up to some performance issues due to cache misses. If the brain worked that way we should expect cortical regions to be allocated to different tasks in a more or less random pattern varying between individuals.

Instead we observe substantial consistency, even in the left-right specialization patterns which is remarkable since at macroscopic level the brain has substantial lateral symmetry.

This has also been tested via decortication experiments and confirms the general ULH - rabbits rely much less on their cortex for motor behavior, larger primates rely on it almost exclusively, cats and dogs are somewhere in between, etc. This evidence shows that the cortex is general purpose, and acquires complex circuitry through learning. Recent machine learning systems provide further evidence in the form of - this is how it could work.

Decortication experiments only show that certain species rely on the cortex more than others, they don't show that that cortex is general purpose and acquires complex circuitry through learning.

Horses, for instance, are large animals with a long lifespan and a large brain (encephalization coefficient similar to that of cats and dogs), and yet a newborn horse is able to walk, run and follow their mother within a few hours from birth.

As I mentioned in the article, backprop is not really biologically plausible. Targetprop is, and there are good reasons to suspect the brain is using something like targetprop - as that theory is the latest result in a long line of work attempting to understand how the brain could be doing long range learning.

Targetprop is still highly speculative. It has not been shown to work well in artificial neural networks and the evidence of biological plausibility is handwavy.

Biological plausibility was one of the heavily discussed aspects of RELUs.

Ok.

Of course convnets still work without weight sharing - it just may require more data and/or better training and regularization

In principle yes, but trivially so as they are universal approximators. In practice, weight sharing enables these systems to easily learn translational invariance.
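A small sketch of why weight sharing helps here, using a 1D circular convolution as a stand-in for a convnet layer (the signal, kernel, and shift amount are arbitrary assumptions). Strictly speaking, the shared kernel makes the feature map translation-equivariant: shifting the input shifts the features by the same amount. Invariance then follows from pooling over positions.

```python
def circular_conv(signal, kernel):
    """Apply one shared kernel at every position (wrapping at the edges)."""
    n = len(signal)
    return [sum(kernel[k] * signal[(i + k) % n] for k in range(len(kernel)))
            for i in range(n)]


def shift(signal, s):
    """Circularly shift a signal right by s positions."""
    n = len(signal)
    return [signal[(i - s) % n] for i in range(n)]


signal = [0, 0, 1, 3, 1, 0, 0, 0]
kernel = [1, -2, 1]   # the same three weights are reused at every position

feat = circular_conv(signal, kernel)
feat_of_shifted = circular_conv(shift(signal, 3), kernel)

# Equivariance: features of the shifted input are the shifted features.
equivariant = feat_of_shifted == shift(feat, 3)
# Invariance after global max pooling over positions.
invariant_after_pooling = max(feat_of_shifted) == max(feat)
print(equivariant, invariant_after_pooling)
```

Without weight sharing, each position would have its own kernel and would have to see the pattern separately during training to respond to it, which is the extra data cost being discussed.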

That's actually extremely impressive - superhuman learning speed.

Humans get tired after continuously playing for a few hours, but in terms of overall playtime they learn faster.

Replies from: jacob_cannell↑ comment by jacob_cannell · 2015-06-25T19:19:14.907Z · LW(p) · GW(p)

in all these experiments the original task-specific regions are still present and functional, therefore maybe the brain can partially use these regions by learning how to route the signals to them.

No - these studies involve direct measurements (electrodes for the ferret rewiring, MRI for echolocation). They know the rewired auditory cortex is doing vision, etc.

But then why doesn't universal learning just co-opt some other brain region to perform the task of the damaged one?

It can, and this does happen all the time. Humans can recover from serious brain damage (stroke, injury, etc). It takes time to retrain and reroute circuitry - similar to relearning everything that was lost all over again.

And anyway why is the specialization pattern consistent across individuals and even species? If you train an artificial neural network multiple times on the same dataset

Current ANNs assume a fixed module layout, so they aren't really comparable in module-task assignment.

Much of the specialization pattern could just be geography - V1 becomes visual because it is closest to the visual input. A1 becomes auditory because it is closest to the auditory input. etc.

This should be the default hypothesis, but there also could be some element of prior loading, perhaps from pattern generators in the brainstem. (I have read a theory that there is a pattern generator for faces that pretrains the visual cortex a little bit in the womb, so that it starts with a vague primitive face detector).

After all, in a computer you can swap blocks or pages of memory around and as long as pointers (or page tables) are updated the behavior does not change, up to some performance issues due to cache misses. If the brain worked that way we should expect cortical regions to be allocated to different tasks in a more or less random pattern varying between individuals.

I said the BG is kind-of-like the CPU, the cortex is kind-of-like a big FPGA, but that is an analogy. There are huge differences between slow bio-circuitry and fast von Neumann machines.

Firstly the brain doesn't really have a concept of 'swapping memory'. The closest thing to that is retraining, where the hippocampus can train info into the cortex. It's a slow complex process that is nothing like swapping memory.

Finally the brain is much more optimized at the wiring/latency level. Functionality goes in certain places because that is where it is best for that functionality - it isn't permutation symmetric in the slightest. Every location has latency/wiring tradeoffs. In a von Neumann memory we just abstract that all away. Not in the brain. There is an actual optimal location for every concept/function etc.

a newborn horse is able to walk, run and follow their mother within a few hours from birth.

That is fast for mammals - I know first hand that it can take days for deer. Nonetheless, as we discussed, the brainstem provides a library of innate complex motor circuitry in particular, which various mammals can rely on to varying degrees, depending on how important complex early motor behavior is.

Targetprop is still highly speculative. It has not been shown to work well in artificial neural networks and the evidence of biological plausibility is handwavy.

I agree that there is still more work to be done understanding the brain's learning machinery. Targetprop is useful/exciting in ML, but it isn't the full picture yet.

[Atari] That's actually extremely impressive - superhuman learning speed.

Humans get tired after continuously playing for a few hours, but in terms of overall playtime they learn faster.

Not at all. The Atari agent becomes semi-superhuman by day 3 of its life. When humans start playing Atari, they already have trained vision and motor systems, and Atari is designed for these systems. Even then your statement is wrong - in that I don't think any children achieve playtester levels of skill in even just a few days.

Replies from: V_V↑ comment by V_V · 2015-06-27T19:31:23.174Z · LW(p) · GW(p)

Finally the brain is much more optimized at the wiring/latency level. Functionality goes in certain places because that is where it is best for that functionality - it isn't permutation symmetric in the slightest. Every location has latency/wiring tradeoffs. In a von Neumann memory we just abstract that all away. Not in the brain. There is an actual optimal location for every concept/function etc.

Well, the eyes are at the front of the head, but the optic nerves connect to the brain at the back, and they also cross at the optic chiasm. Axons also cross contralaterally in the spinal cord and if I recall correctly there are various nerves that also don't take the shortest path.

This seems to me as evidence that the nervous system is not strongly optimized for latency.

↑ comment by jacob_cannell · 2015-06-27T20:25:33.245Z · LW(p) · GW(p)

This is a total misconception, and it is a good example of the naive engineer fallacy (jumping to the conclusion that a system is poorly designed when you don't understand how the system actually works and why).

Remember the distributed software modules - including V1 - have components in multiple physical modules (cortex, cerebellum, thalamus, BG). Not every DSM has components in all subsystems, but V1 definitely has a thalamic relay component (LGN).

The thalamus/BG is in the center of the brain, which makes sense from wiring minimization when you understand the DPM system. Low freq/compressed versions of the cortical map computations can interact at higher speeds inside the small compact volume of the BG/thalamus. The BG/thalamus basically contains a microcosm model of the cortex within itself.

The thalamic relay comes first in sequential processing order, so moving cortical V1 closer to the eyes wouldn't help in the slightest. (Draw this out if it doesn't make sense)

↑ comment by advael · 2015-06-25T21:08:52.642Z · LW(p) · GW(p)

For e.g. the ferret rewiring experiments, tongue based vision, etc., is a plausible alternative hypothesis that there are more general subtypes of regions that aren't fully specialized but are more interoperable than others?

For example, (Playing devil's advocate here) I could phrase all of the mentioned experiments as "sensory input remapping" among "sensory input processing modules." Similarly, much of the work in BCI interfaces for e.g. controlling cursors or prosthetics could be called "motor control remapping". Have we ever observed cortex being rewired for drastically dissimilar purposes? For example, motor cortex receiving sensory input?

If we can't do stuff like that, then my assumption would be that at the very least, a lot of the initial configuration is prenatal and follows kind of a "script" that might be determined by either some genome-encoded fractal rule of tissue formation, or similarities in the general conditions present during gestation. Either way, I'm not yet convinced there's a strong argument that all brain function can be explained as working like a ULM (Even if a lot of it can)

Replies from: jacob_cannell, None↑ comment by jacob_cannell · 2015-06-25T22:58:35.494Z · LW(p) · GW(p)

Have we ever observed cortex being rewired for drastically dissimilar purposes? For example, motor cortex receiving sensory input?

I'm not sure - I have a vague memory of something along those lines but .. nothing specific.

From what I remember, motor, sensor, and association cortex do have some intrinsic differences at the microcircuit level. For example some motor cortex has larger pyramidal cells in the output layer. However, I believe most motor cortex is best described as sensorimotor - it depends heavily on sensor data from the body.

a lot of the initial configuration is prenatal and follows kind of a "script" that might be determined by either some genome-encoded fractal rule

Well yes - there is a general script for the overall architecture, and a lot of innate functionality as well, especially in specific regions like the brainstem's pattern generators. As I said in the article - there is always room for innate functionality in the architectural prior and in specific circuits - the brain is certainly not a pure ULM.

Either way, I'm not yet convinced there's a strong argument that all brain function can be explained as working like a ULM (Even if a lot of it can)

ULM refers to the overall architecture, with the general learning part specifically implemented by the distributed BG/cortex/cerebellum modules. But the BG and hippocampal system also rely heavily on learning internally, as does the amygdala and .. probably almost all of it to varying degrees. The brainstem is specifically the place where we can point and say - this is mostly innate circuitry, but even it probably has some learning going on.

↑ comment by [deleted] · 2015-06-26T23:52:55.636Z · LW(p) · GW(p)

For e.g. the ferret rewiring experiments, tongue based vision, etc., is a plausible alternative hypothesis that there are more general subtypes of regions that aren't fully specialized but are more interoperable than others?

It's far more likely that different brain modules implement different learning rules, but all learn, than that they encode innate mental functionality which is not subject to learning at all.

Replies from: advael↑ comment by advael · 2015-06-27T01:31:56.786Z · LW(p) · GW(p)

I'm inclined to agree. Actually I've been convinced for a while that this is a matter of degrees rather than being fully one way or the other (Modules versus learning rules), and am convinced by this article that the brain is more of a ULM than I had previously thought.

Still, when I read that part the alternative hypothesis sprang to mind, so I was curious what the literature had to say about it (or the post author).

↑ comment by nshepperd · 2015-09-07T04:32:22.378Z · LW(p) · GW(p)

The ferret rewiring experiments, the tongue based vision stuff, the visual regions learning to perform echolocation computations in the blind, this evidence together is decisive against the evolved modularity hypothesis as I've defined that hypothesis, at least for the cortex. The EMH posits that the specific cortical regions rely on complex innate circuitry specialized for specific tasks. The evidence disproves that hypothesis.

It seems a little strange to treat this as a triumphant victory for the ULH. At the most, you've shown that the "fundamentalist" evolved modularity hypothesis is false. You didn't really address how the ULH explains this same evidence.

And there are other mysteries in this model, such as the apparent universality of specific cognitive heuristics and biases, or of various behaviours like altruism, deception, sexuality that seem obviously evolved. And, as V_V mentioned, the lateral asymmetry of the brain's functionality vs the macroscopic symmetry.

Otherwise, the conclusion I would draw from this is that both theories are wrong, or that some halfway combination of them is true (say, "universal" plasticity plus a genetic set of strong priors somehow encoded in the structure).

comment by Kaj_Sotala · 2015-06-21T21:53:02.020Z · LW(p) · GW(p)

Thank you. This was an excellent article, which helped me clarify my own thinking on the topic.

I'd love to see you write more on this.

comment by Dreaded_Anomaly · 2015-06-25T18:30:00.179Z · LW(p) · GW(p)

A few brief supplements to your introduction:

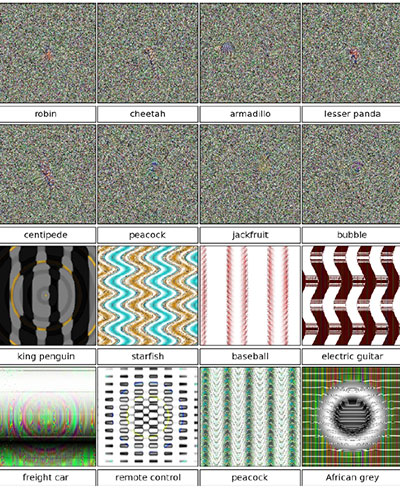

The source of the generated image is no longer mysterious: Inceptionism: Going Deeper into Neural Networks

But though the above is quite fascinating and impressive, we should also keep in mind the bizarre false positives that a person can generate: Images that fool computer vision raise security concerns

↑ comment by jacob_cannell · 2015-06-27T18:06:43.292Z · LW(p) · GW(p)

The trippy shoggoth title image was mysterious when it was originally posted; basically someone leaked an image a little before the inceptionism blog post.

A CNN is a reasonable model for fast feedforward vision. We can isolate this pathway for biological vision by using rapid serial presentation - basically flashing an image for 100ms or so.

So imagine if you just saw a flash of one of these images, for a brief moment, and then you had to quickly press a button for the image category - no time to think about it - it's jeopardy style instant response.

There is no button for "noisy image", there is no button for "wavy line image", etc.

Now the fooling images are generated by an adversarial process. It's like we have a copy of a particular mind in a VR sim, we flash it an image, see what button it presses. Based on the response, we then generate a new image and unwind time and repeat. We keep doing this until we get some weird classification errors. It allows us to explore the decision space of the agent.

It is basically reverse engineering. It requires a copy of the agent's code or at least access to a copy with the ability to do tons of queries, and it also probably depends on the agent being completely deterministic. I think that biological minds avoid this issue indirectly because they use stochastic sampling based on secure hardware/analog noise generators.

Stochastic models/ANNs could probably avoid this issue.
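To make the determinism point concrete, here is a minimal toy sketch in Python. Everything in it is an assumption for illustration: the "agent" is a hypothetical linear scorer, `probe_sign_accuracy` is a made-up helper, and the noise is simple Gaussian noise added to each response. It only shows that a single-query probe ("did the score go up when I brightened this pixel?") stops being reliable once per-query noise is larger than the effect of one pixel. An attacker who can average many repeated queries could still recover the signal, so this is not a demonstration that stochastic networks are safe.

```python
import random


def probe_sign_accuracy(noise_std, n_pixels=200, eps=0.01, seed=0):
    """Fraction of pixels for which one noisy probe recovers the true sign of the weight."""
    rng = random.Random(seed)
    weights = [rng.uniform(-1.0, 1.0) for _ in range(n_pixels)]  # hypothetical agent
    image = [rng.random() for _ in range(n_pixels)]

    def query(img):
        # The agent's response: a linear score plus fresh noise on every query.
        clean = sum(w * p for w, p in zip(weights, img))
        return clean + rng.gauss(0.0, noise_std)

    correct = 0
    for i in range(n_pixels):
        base = query(image)
        bumped = list(image)
        bumped[i] += eps  # make pixel i slightly brighter
        estimated_effect = query(bumped) - base
        if (estimated_effect > 0) == (weights[i] > 0):
            correct += 1
    return correct / n_pixels


if __name__ == "__main__":
    print("deterministic agent:", probe_sign_accuracy(noise_std=0.0))
    print("noisy agent:        ", probe_sign_accuracy(noise_std=1.0))
```

With zero noise every probe is informative; with noise much larger than the per-pixel effect, each individual probe is close to a coin flip.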

↑ comment by kpreid · 2015-06-27T15:17:34.329Z · LW(p) · GW(p)

I look at the bizarre false positives and I wonder if (warning: wild speculation) the problem is that the networks were not trained to recognize the lack of objects. For example, in most cases you have some noise in the image, so if every training image is something, or rather something-plus-noise, then the system could learn that the noise is 100% irrelevant and pick out the something.

(The noisy images look to me like they have small patches in one spot faintly resembling what they're identified as — if my vision had a rule that deemphasized the non-matching noise and I had a much smaller database of the world than I do, then I think I'd agree with those neural networks.)

If the above theory is true, then a possible fix would be to include in training data a variety of images for which the expected answers are like “empty scene”, "too noisy", “simple geometric pattern”, etc. But maybe this is already done — I'm not familiar with the field.

Replies from: Unknowns↑ comment by Unknowns · 2015-06-27T15:32:08.568Z · LW(p) · GW(p)

No, even if you classify these false positives as "no image", this will not prevent someone from constructing new false positives.

Basically the amount of training data is always extremely small compared to the theoretically possible number of distinct images, so it is always possible to construct such adversarial positives. These are not random images which were accidentally misidentified in this way. They have been very carefully designed based on the current data set.

Something similar is probably theoretically possible with human vision recognition as well. The only difference would be that we would be inclined to say "but it really does look like a baseball!"

Replies from: jacob_cannell, eternal_neophyte↑ comment by jacob_cannell · 2015-06-27T18:12:45.316Z · LW(p) · GW(p)

This technique exploits the fact that the CNN is completely deterministic - see my reply above. It may be very difficult for stochastic networks.

CNNs are comparable to the first 150ms or so of human vision, before feedback, multiple saccades, and higher order mental programs kick in. So the difficulty in generating these fooling images also depends on the complexity of the inference - a more complex AGI with human-like vision given larger amounts of time to solve the task would probably also be harder to fool, independent of the stochasticity issue.

↑ comment by eternal_neophyte · 2015-06-27T15:45:40.374Z · LW(p) · GW(p)

A human being would be capable of pointing out why something looks like a baseball - to be able to point out where the curves and lines are that provoke that idea. We do this when we gaze at clouds without coming to believe there really are giant kettles floating around; we're capable of taking the abundance of contextual information in the scene into account and coming up with reasonable hypotheses for why what we're seeing looks like x, y or z. If classifier vision systems had the same ability they probably wouldn't make the egregious mistakes they do.

Replies from: Unknowns↑ comment by Unknowns · 2015-06-27T16:13:51.304Z · LW(p) · GW(p)

If I understand correctly how these images are constructed, it would be something like this: take some random image. The program can already make some estimate of whether it is a baseball, say 0.01% or whatever. Then you go through the image pixel by pixel and ask, "If I make this pixel slightly brighter, will your estimate go up? if not, will it go up if I make it slightly dimmer?" (This is just an example, you could change the color or whatever as well.) Thus you modify each pixel such that you increase the program's estimate that it is a baseball. By the time you have gone through all the pixels, the probability of being a baseball is very high. But to us, the image looks more or less just the way it did at first. Each pixel has been modified too slightly to be noticed by us.

But this means that in principle the program can indeed explain why it looks like a baseball -- it is a question of a very slight tendency in each pixel in the entire image.
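The pixel-by-pixel procedure described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the method used to produce the published fooling images: `make_toy_classifier` is a hypothetical stand-in (a logistic model with random weights) for a real network, and `nudge_pixels` makes a single greedy pass with a fixed step `eps`.

```python
import math
import random


def make_toy_classifier(n_pixels, seed=0):
    """Return a hypothetical 'baseball' scorer: a logistic model with random weights."""
    rng = random.Random(seed)
    weights = [rng.uniform(-1.0, 1.0) for _ in range(n_pixels)]

    def score(image):
        # Pixels are brightness values in [0, 1]; the output is a probability-like score.
        logit = sum(w * (p - 0.5) for w, p in zip(weights, image)) - 4.0
        return 1.0 / (1.0 + math.exp(-logit))

    return score


def nudge_pixels(score, image, eps=0.02):
    """One pass over the image: keep any +/-eps change to a pixel that raises the score."""
    adv = list(image)
    best = score(adv)
    for i in range(len(adv)):
        original = adv[i]
        for delta in (eps, -eps):  # try slightly brighter, then slightly dimmer
            adv[i] = min(1.0, max(0.0, original + delta))
            trial = score(adv)
            if trial > best:
                best = trial
                break
            adv[i] = original  # no improvement: undo the change
    return adv, best


if __name__ == "__main__":
    rng = random.Random(1)
    image = [rng.random() for _ in range(1024)]  # a random 32x32 grayscale "image"
    score = make_toy_classifier(len(image))
    adv, final = nudge_pixels(score, image)
    biggest_change = max(abs(a - b) for a, b in zip(adv, image))
    print(f"score before: {score(image):.4f}, after: {final:.4f}")
    print(f"largest per-pixel change: {biggest_change:.3f}")
```

No pixel moves by more than `eps`, yet the many small nudges add up in the score. For this linear toy model the per-pixel "explanation" is just the sign of each weight; a real network would need gradients or repeated queries to get the same information.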

Replies from: eternal_neophyte↑ comment by eternal_neophyte · 2015-06-27T17:30:11.112Z · LW(p) · GW(p)

this means that in principle the program can indeed explain why it looks like a baseball -- it is a question of a very slight tendency in each pixel in the entire image.

But the explanation will be just as complex as the procedure used to classify the data. If I change the hue slightly or twiddle their RGB values just slightly, the "explanation" for why the data seems to contain a baseball image will be completely different. Human beings on the other hand can look at pictures of the same object in different conditions of lighting, of different particular sizes and shapes, taken from different camera angles, etc. and still come up with what would be basically the same set of justifications for matching each image to a particular classification (e.g. an image contains a roughly spherical field of white, with parallel bands of stitch-like markings bisecting it in an arc...hence it's of a baseball).

The ability of human beings to come up with such compressed explanations, and our ability to arrange them into an ordering, is arguably what allows us to deal with iconic representations of objects and to represent objects at varying levels of detail (as in http://38.media.tumblr.com/tumblr_m7z4k1rAw51rou7e0.png).

Replies from: Jiro↑ comment by Jiro · 2015-06-27T22:56:47.362Z · LW(p) · GW(p)

But the explanation will be just as complex as the procedure used to classify the data. If I change the hue slightly or twiddle their RGB values just slightly, the "explanation" for why the data seems to contain a baseball image will be completely different

Will it?

What if slightly twiddling the RGB values produces something that is basically "spherical field of white, etc. with enough noise on top of it that humans can't see it"?

Replies from: eternal_neophyte↑ comment by eternal_neophyte · 2015-06-27T23:17:48.349Z · LW(p) · GW(p)

What if slightly twiddling the RGB values produces something that is basically "spherical field of white, etc. with enough noise on top of it that humans can't see it"?

That would all hinge on what it means for an image to be "hidden" beneath noise, I suppose. The more noise you layer on top of an image the more room for interpretation there is in classifying it, and the less salient any particular classification candidate will be. If a scrutable system can come up with compelling arguments for a strange classification that human beings would not make, then its choices would be naturally less ridiculous than otherwise. But to say that "humans conceivably may suffer from the same problem" is a bit of a dodge; esp. in light of the fact that these systems are making mistakes we clearly would not.

But either way, what you're proposing and what Unknowns was arguing are different. Unknowns was (if I understood him rightly) arguing that the assignment of different probability weights for pixels (or, more likely, groups of pixels) representing a particular feature of an object is an explanation of why they're classified the way they are. But such an "explanation" is inscrutable; we cannot ourselves easily translate it into the language of lines, curves, apparent depth, etc. (unless we write some piece of software to do this and which is then effectively part of the agent).

Replies from: Jiro↑ comment by Jiro · 2015-06-27T23:33:53.546Z · LW(p) · GW(p)

Look at it from the other end: You can take a picture of a baseball and overlay noise on top of it. There could, at least plausibly, be a point where overlaying the noise destroys the ability for humans to see the baseball, but the information is actually still present (and could, for instance, be recovered if you applied a noise reduction algorithm to that). Perhaps when you are twiddling the pixels of random noise, you're actually constructing such a noisy baseball image a pixel at a time.
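As a rough illustration of "the information is actually still present", here is a small Python sketch using a one-dimensional signal in place of an image. The blocky test pattern, the noise level, and the moving-average filter are all assumptions chosen for the example; a moving average is only one very simple noise reduction method.

```python
import random


def moving_average(xs, radius):
    """Smooth xs by averaging each sample with its neighbours within `radius`."""
    out = []
    for i in range(len(xs)):
        lo = max(0, i - radius)
        hi = min(len(xs), i + radius + 1)
        out.append(sum(xs[lo:hi]) / (hi - lo))
    return out


def mean_squared_error(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def demo(seed=0, noise_std=1.0, radius=12):
    """Return (error of the noisy signal, error after smoothing) against the clean signal."""
    rng = random.Random(seed)
    # Clean signal: alternating blocks of 0s and 1s, 50 samples wide.
    clean = [float((i // 50) % 2) for i in range(400)]
    # Noise as large as the signal itself, so single samples are close to useless.
    noisy = [c + rng.gauss(0.0, noise_std) for c in clean]
    denoised = moving_average(noisy, radius)
    return mean_squared_error(noisy, clean), mean_squared_error(denoised, clean)


if __name__ == "__main__":
    before, after = demo()
    print(f"error before smoothing: {before:.3f}, after: {after:.3f}")
```

Sample by sample the noisy signal looks like garbage, but averaging over neighbours brings most of the block pattern back, which is the sense in which a pattern can be present yet invisible at a glance.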

Replies from: eternal_neophyte↑ comment by eternal_neophyte · 2015-06-28T00:49:00.091Z · LW(p) · GW(p)

Agree with all you said, but have to comment on

Perhaps when you are twiddling the pixels of random noise, you're actually constructing such a noisy baseball image a pixel at a time.

You could be constructing a noisy image of a baseball one pixel at a time. In fact if you actually are then your network would be amazingly robust. But in a non-robust network, it seems much more probable that you're just exploiting the system's weaknesses and milking them for all they're worth.

comment by John_Maxwell (John_Maxwell_IV) · 2015-06-21T23:15:15.125Z · LW(p) · GW(p)

Great post! Thanks for writing it. Seems like a good fit for Main.

So just to clarify my understanding: If the ULH is true it becomes more plausible that, say, playing video games and hating books because authority figures force you to read them in school have long-term broad impacts on your personality. And if the EMH is true, it becomes more plausible that important characteristics like the Big Five personality traits and intelligence are genetically coded and you become the person your genes describe. Correct?

Yudkowsky's AI box experiments and that entire notion of open boxing is a strawman - a distraction.

Us humans have contemplated whether we are in a simulation even though no one "outside the Matrix" told us we might be. Is it possible that an AI-in-training might contemplate the same thing?

In general the evidence from the last four years or so supports Hanson's viewpoint from the Foom debate.

Really? My impression was that Hanson had more of an EMH view.

Replies from: jacob_cannell↑ comment by jacob_cannell · 2015-06-21T23:50:27.622Z · LW(p) · GW(p)

So just to clarify my understanding: If the ULH is true it becomes more plausible that, say, playing video games and hating books because authority figures force you to read them in school have long-term broad impacts on your personality.

I agree with this largely but I would replace 'personality' with 'mental software', or just 'mind'. Personality to me connotes a subset of mental aspects that are more associated with innate variables.

I suspect that enjoying/valuing learning is extremely important for later development. It seems probable that some people are born with a stronger innate drive for learning, but that drive by itself can also probably be adjusted through learning. But I'm not aware of any hard evidence on this matter.

In my case I was somewhat obsessed with video games as a young child and my father actually did force me to read books and even the encyclopedia. I found that I hated the books he made me read (I only liked sci-fi) but I loved the encyclopedia. I ended up learning how to quickly skim books and fake it enough to pass the resulting QA test.

And if the EMH is true, it becomes more plausible that important characteristics like the Big Five personality traits and intelligence are genetically coded

I don't think abstract high level variables like big five personality traits or IQ scores are the relevant features for the EMH vs ULH issue. For example in the ULH scenario, there is still plenty of room for strongly genetically determined IQ effects (hardware issues/tradeoffs), and personality variables are not complex cognitive algorithms.

Us humans have contemplated whether we are in a simulation even though no one "outside the Matrix" told us we might be. Is it possible that an AI-in-training might contemplate the same thing?

Sure, and this was part of what my post from 5 years back was all about. It's kind of a world design issue. Is it better to have your AIs in your testsim believe in a simplistic creator god? (which is in a way on the right track with regards to the sim arg, but it also doesn't do them much good) Or is it better for them to have a naturalist/atheist worldview? (potentially more dangerous in the long term as it leads to scientific investigation and eventually the sim arg)

That post was downvoted into hell, in part I think because I posted to main - I was new to LW and didn't understand the main/discussion distinction. Also, I think people didn't like the general idea of anything mentioning the word theology, or the idea of intentionally giving your testsim AI a theology.

Really? My impression was that Hanson had more of an EMH view.

I should clarify - I meant Hanson's viewpoint on just the FOOM issue specifically as outlined in that one post, not his whole view on AGI - which I gather is very much an EMH-type viewpoint. His view seems to be pessimistic on first principles AGI but also pessimistic on brain-based AGI but optimistic on brain uploading. However, many of his insights/speculations on a brain upload future also apply equally well to a brain-based AGI future.

Replies from: John_Maxwell_IV↑ comment by John_Maxwell (John_Maxwell_IV) · 2015-06-22T02:17:07.113Z · LW(p) · GW(p)