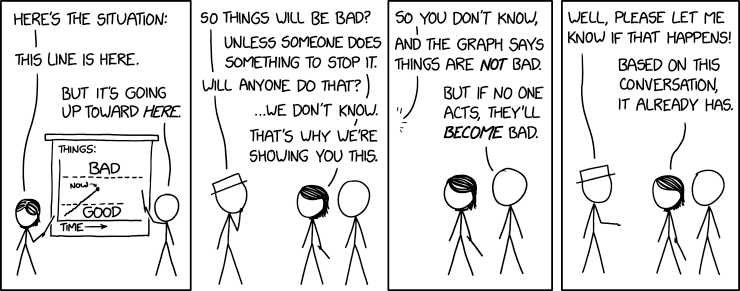

there are various proposals (not from Tyler!) for ‘succession,’ of passing control over to the AIs intentionally, either because people prefer it (as many do!) or because it is inevitable regardless so managing it would help it go better. I have yet to see such a proposal that has much chance of not bringing about human extinction, or that I expect to meaningfully preserve value in the universe. As I usually say, if this is your plan, Please Speak Directly Into the Microphone.

[Meditations on Moloch](https://slatestarcodex.com/2014/07/30/meditations-on-moloch)

I am a transhumanist and I really do want to rule the universe.

Not personally – I mean, I wouldn’t object if someone personally offered me the job, but I don’t expect anyone will. I would like humans, or something that respects humans, or at least gets along with humans – to have the job.

But the current rulers of the universe – call them what you want, Moloch, Gnon, whatever – want us dead, and with us everything we value. Art, science, love, philosophy, consciousness itself, the entire bundle. And since I’m not down with that plan, I think defeating them and taking their place is a pretty high priority.

The opposite of a trap is a garden. The only way to avoid having all human values gradually ground down by optimization-competition is to install a Gardener over the entire universe who optimizes for human values.

And the whole point of Bostrom’s Superintelligence is that this is within our reach. Once humans can design machines that are smarter than we are, by definition they’ll be able to design machines which are smarter than they are, which can design machines smarter than they are, and so on in a feedback loop so tiny that it will smash up against the physical limitations for intelligence in a comparatively lightning-short amount of time. If multiple competing entities were likely to do that at once, we would be super-doomed. But the sheer speed of the cycle makes it possible that we will end up with one entity light-years ahead of the rest of civilization, so much so that it can suppress any competition – including competition for its title of most powerful entity – permanently. In the very near future, we are going to lift something to Heaven. It might be Moloch. But it might be something on our side. If it’s on our side, it can kill Moloch dead.

And if that entity shares human values, it can allow human values to flourish unconstrained by natural law.

@gwern wrote an explanation of why this is surprising (for some) [here](https://forum.effectivealtruism.org/posts/Mo7qnNZA7j4xgyJXq/sam-altman-open-ai-discussion-thread?commentId=CAfNAjLo6Fy3eDwH3):

Open Philanthropy (OP) only had that board seat and made a donation because Altman invited them to, and he could personally have covered the $30m or whatever OP donated for the seat [...] He thought up, drafted, and oversaw the entire for-profit thing in the first place, including all provisions related to board control. He voted for all the board members, filling it back up from when it was just him (& Greg Brockman at one point IIRC). He then oversaw and drafted all of the contracts with MS and others, while running the for-profit and eschewing equity in the for-profit. He designed the board to be able to fire the CEO because, to quote him, "the board should be able to fire me". [...]

Credit where credit is due - Altman may not have believed the scaling hypothesis like Dario Amodei, may not have invented PPO like John Schulman, may not have worked on DL from the start like Ilya Sutskever, may not have created GPT like Alec Radford, may not have written & optimized any code like Brockman's - but the 2023 OA organization is fundamentally his work.

The question isn't, "how could EAers* have ever let Altman take over OA and possibly kick them out", but entirely the opposite: "how did EAers ever get any control of OA, such that they could even possibly kick out Altman?" Why was this even a thing given that OA was, to such an extent, an Altman creation?

The answer is: "because he gave it to them." Altman freely and voluntarily handed it over to them.

So you have an answer right there to why the Board was willing to assume Altman's good faith for so long, despite everyone clamoring to explain how (in hindsight) it was so obvious that the Board should always have been at war with Altman and regarding him as an evil schemer out to get them. But that's an insane way for them to think! Why would he undermine the Board or try to take it over, when he was the Board at one point, and when he made and designed it in the first place? Why would he be money-hungry when he refused all the equity that he could so easily have taken - and in fact, various partner organizations wanted him to have in order to ensure he had 'skin in the game'? Why would he go out of his way to make the double non-profit with such onerous & unprecedented terms for any investors, which caused a lot of difficulties in getting investment and Microsoft had to think seriously about, if he just didn't genuinely care or believe any of that? Why any of this?

(None of that was a requirement, or even that useful to OA for-profit. [...] Certainly, if all of this was for PR reasons or some insidious decade-long scheme of Altman to 'greenwash' OA, it was a spectacular failure - nothing has occasioned more confusion and bad PR for OA than the double structure or capped-profit. [...]

What happened is, broadly: 'Altman made the OA non/for-profits and gifted most of it to EA with the best of intentions, but then it went so well & was going to make so much money that he had giver's remorse, changed his mind, and tried to quietly take it back; but he had to do it by hook or by crook, because the legal terms said clearly "no takesie backsies"'. Altman was all for EA and AI safety and an all-powerful nonprofit board being able to fire him, and was sincere about all that, until OA & the scaling hypothesis succeeded beyond his wildest dreams, and he discovered it was inconvenient for him and convinced himself that the noble mission now required him to be in absolute control, never mind what restraints on himself he set up years ago - he now understands how well-intentioned but misguided he was and how he should have trusted himself more. (Insert Garfield meme here.)

No wonder the board found it hard to believe! No wonder it took so long to realize Altman had flipped on them, and it seemed Sutskever needed Slack screenshots showing Altman blatantly lying to them about Toner before he finally, reluctantly, flipped. The Altman you need to distrust & assume bad faith of & need to be paranoid about stealing your power is also usually an Altman who never gave you any power in the first place! I'm still kinda baffled by it, personally.

He concealed this change of heart from everyone, including the board, gradually began trying to unwind it, overplayed his hand at one point - and here we are.

It is still a mystery to me what exactly Sam's motive is.

That is not an argument against “the robots taking over,” or that AI does not generally pose an existential threat. It is a statement that we should ignore that threat, on principle, until the dangers ‘reveal themselves,’ with the implicit assumption that this requires the threats to actually start happening.

A lot of people will end up falling in love with chatbot personas, with the result that they will become uninterested in dating real people, being happy just to talk to their chatbot.

Good. If a substantial number of men do this, the dating market will presumably become more tolerable.

Especially in some countries, where there are absurd demographic imbalances. Some stats from Poland, compared with Ireland (pics are translated using some online util, so they look a bit off):

Okay, I went off-topic a little bit, but these stats are so curiously bad I couldn't help myself.

Maybe GPT-3 could be used to find LW content related to the new post, using something like this: https://gpt-index.readthedocs.io

Unfortunately, I didn't get around to doing anything with it yet. But it seems useful: https://twitter.com/s_jobs6/status/1619063620104761344
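To make the idea concrete, here is a rough sketch of "find related content for a new post". It does not use gpt-index or GPT-3 at all; I am swapping in plain bag-of-words cosine similarity as a stand-in for real embeddings, and the function names and the tiny archive are made up for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def related_posts(new_post, archive, top_k=3):
    """Rank archived posts by similarity to new_post.

    archive is a hypothetical dict mapping post title -> post text.
    Posts with zero overlap are dropped from the result.
    """
    query = Counter(tokenize(new_post))
    scored = [
        (cosine(query, Counter(tokenize(text))), title)
        for title, text in archive.items()
    ]
    scored.sort(reverse=True)
    return [title for score, title in scored[:top_k] if score > 0]


if __name__ == "__main__":
    # Made-up archive, purely for illustration.
    archive = {
        "Moloch": "coordination failure races and competition grind down values",
        "Height": "limb lengthening surgery cost and recovery time",
    }
    print(related_posts("competition and coordination failure", archive, top_k=1))
```

A real version would presumably replace `cosine` over word counts with similarity over model embeddings, which is what would let it match posts that share a topic but not vocabulary.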

You can convert money into height through limb lengthening surgery: about $50K USD for an additional 8 cm in Europe, and roughly double that price in the US. The whole process takes 1-1.5 years (the lengthening phase alone takes about 3 months).

For a more realistic example, consider the DNA sequence for smallpox: I'd definitely rather that nobody knew it, than that people could with some effort find out, even though I don't expect being exposed to that quoted DNA information to harm my own thought processes.

...is it weird that I saved it in a text file? I'm not entirely sure if I've got the correct sequence tho. Just, when I learned that it is available, I couldn't not do it.