The Design Space of Minds-In-General

post by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2008-06-25T06:37:36.000Z · 85 comments


People ask me, "What will Artificial Intelligences be like? What will they do? Tell us your amazing story about the future."

And lo, I say unto them, "You have asked me a trick question."

ATP synthase is a molecular machine - one of three known occasions when evolution has invented the freely rotating wheel - which is essentially the same in animal mitochondria, plant chloroplasts, and bacteria. ATP synthase has not changed significantly since the rise of eukaryotic life two billion years ago. It is something we all have in common - thanks to the way that evolution strongly conserves certain genes; once many other genes depend on a gene, a mutation will tend to break all the dependencies.

Any two AI designs might be less similar to each other than you are to a petunia.

Asking what "AIs" will do is a trick question because it implies that all AIs form a natural class. Humans do form a natural class because we all share the same brain architecture. But when you say "Artificial Intelligence", you are referring to a vastly larger space of possibilities than when you say "human". When people talk about "AIs" they are really talking about minds-in-general, or optimization processes in general. Having a word for "AI" is like having a word for everything that isn't a duck.

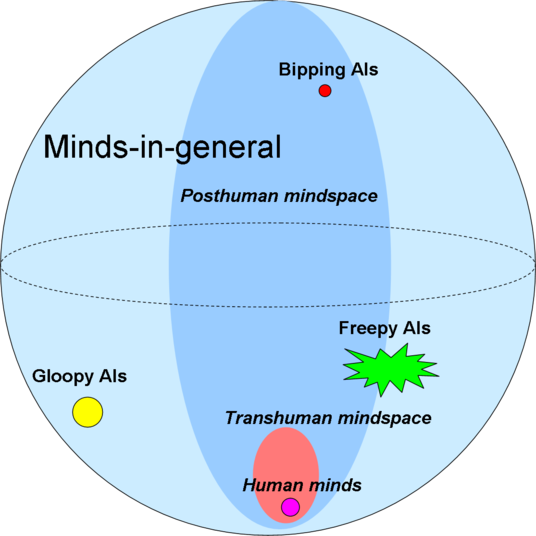

Imagine a map of mind design space... this is one of my standard diagrams...

All humans, of course, fit into a tiny little dot - as a sexually reproducing species, we can't be too different from one another.

This tiny dot belongs to a wider ellipse, the space of transhuman mind designs - things that might be smarter than us, or much smarter than us, but which in some sense would still be people as we understand people.

This transhuman ellipse is within a still wider volume, the space of posthuman minds, which is everything that a transhuman might grow up into.

And then the rest of the sphere is the space of minds-in-general, including possible Artificial Intelligences so odd that they aren't even posthuman.

But wait - natural selection designs complex artifacts and selects among complex strategies. So where is natural selection on this map?

So this entire map really floats in a still vaster space, the space of optimization processes. At the bottom of this vaster space, below even humans, is natural selection as it first began in some tidal pool: mutate, replicate, and sometimes die, no sex.

Are there any powerful optimization processes, with strength comparable to a human civilization or even a self-improving AI, which we would not recognize as minds? Arguably Marcus Hutter's AIXI should go in this category: for a mind of infinite power, it's awfully stupid - poor thing can't even recognize itself in a mirror. But that is a topic for another time.

My primary moral is to resist the temptation to generalize over all of mind design space.

If we focus on the bounded subspace of mind design space which contains all those minds whose makeup can be specified in a trillion bits or less, then every universal generalization that you make has two to the trillionth power chances to be falsified.

Conversely, every existential generalization - "there exists at least one mind such that X" - has two to the trillionth power chances to be true.

So you want to resist the temptation to say either that all minds do something, or that no minds do something.
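To make the counting concrete, here is a minimal sketch that treats every bit string of length at most `n` as a stand-in "mind design". This is a deliberately crude toy (real minds are not identified with raw specification bits), and the names `designs`, `forall`, and `exists` are hypothetical. There are 2^0 + 2^1 + ... + 2^n = 2^(n+1) - 1 such strings, about twice 2^n, so for n = 10^12 this is on the order of the "two to the trillionth power" above. A universal claim fails as soon as a single design is a counterexample, while an existential claim needs only a single witness.

```python
from itertools import product
from typing import Callable, Iterator, Tuple

Design = Tuple[int, ...]

def designs(max_bits: int) -> Iterator[Design]:
    """Yield every bit string of length 0..max_bits (a toy stand-in for mind specifications)."""
    for n in range(max_bits + 1):
        yield from product((0, 1), repeat=n)

def count_designs(max_bits: int) -> int:
    # Geometric series: 2^0 + 2^1 + ... + 2^max_bits = 2^(max_bits + 1) - 1
    return 2 ** (max_bits + 1) - 1

def forall(prop: Callable[[Design], bool], max_bits: int) -> bool:
    """Universal claim: every design has the property (one counterexample refutes it)."""
    return all(prop(d) for d in designs(max_bits))

def exists(prop: Callable[[Design], bool], max_bits: int) -> bool:
    """Existential claim: at least one design has the property (one witness confirms it)."""
    return any(prop(d) for d in designs(max_bits))

n = 12
print(count_designs(n))                          # 8191
print(sum(1 for _ in designs(n)))                # 8191, brute-force check
# Hypothetical property "contains no 1 bit": refuted by the design (1,)
print(forall(lambda d: 1 not in d, n))           # False
# Hypothetical property "ends in 1, 0, 1": at least one design has it
print(exists(lambda d: d[-3:] == (1, 0, 1), n))  # True
```

Even at 12 bits, a universal claim has thousands of chances to fail; the enumeration is only feasible because the toy space is tiny.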

The main reason you could find yourself thinking that you know what a fully generic mind will (won't) do, is if you put yourself in that mind's shoes - imagine what you would do in that mind's place - and get back a generally wrong, anthropomorphic answer. (Albeit that it is true in at least one case, since you are yourself an example.) Or if you imagine a mind doing something, and then imagine the reasons you wouldn't do it - so that you imagine that a mind of that type can't exist, that the ghost in the machine will look over the corresponding source code and hand it back.

Somewhere in mind design space is at least one mind with almost any kind of logically consistent property you care to imagine.

This matters because it emphasizes the importance of discussing what happens, lawfully, and why, as a causal result of a mind's particular constituent makeup; somewhere in mind design space is a mind that does it differently.

Of course you could always say that anything which doesn't do it your way, is "by definition" not a mind; after all, it's obviously stupid. I've seen people try that one too.

85 comments

Comments sorted by oldest first, as this post is from before comment nesting was available (around 2009-02-27).

comment by Shane_Legg · 2008-06-25T08:13:32.000Z

@ Eli:

"Arguably Marcus Hutter's AIXI should go in this category: for a mind of infinite power, it's awfully stupid - poor thing can't even recognize itself in a mirror."

Have you (or somebody else) mathematically proven this?

(If you have then that's great and I'd like to see the proof, and I'll pass it on to Hutter because I'm sure he will be interested. A real proof. I say this because I see endless intuitions and opinions about Solomonoff induction and AIXI on the internet. Intuitions about models of superintelligent machines like AIXI just don't cut it. In my experience they very often don't do what you think they will.)

comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2008-06-25T08:36:05.000Z

Shane, there was a discussion about this on the AGI list way back when, "breaking AIXI-tl", in which e.g. this would be one of the more technical posts. I think my proof of this was at least as formal as your proof that FAI was impossible, which I refuted.

But of course this subject is going to take a separate post.

comment by Shane_Legg · 2008-06-25T09:20:22.000Z

@ Eli:

Yeah, my guess is that AIXI-tl can be broken. But AIXI? I'm pretty sure it can be broken in some senses, but whether these senses are very meaningful or significant, I don't know.

And yes, my "proof" that FAI would fail failed. But it also wasn't a formal proof. Kind of a lesson in that, don't you think?

So until I see a proof, I'll take your statement about AIXI being "awfully stupid" as just an opinion. It will be interesting to see if you can prove yourself to be smarter than AIXI (I assume you don't view yourself as below awfully stupid).

comment by Roko · 2008-06-25T09:51:17.000Z

I might pitch in with an intuition about whether AIXI can recognize itself in a mirror. If I understand the algorithm correctly, it would depend on the rewards you gave it, and the computational and time cost would depend on what sensory inputs and motor outputs you connected it to.

For example, if you ran AIXI on a computer connected to a webcam with a mirror in front of it, and rewarded it if and only if it printed "I recognize myself" on the screen, it would eventually learn to do this all the time. The time cost might be large, though.

comment by Will_Pearson · 2008-06-25T11:09:27.000Z

Where would cats fit in the space? I would assume that they would be near humans, sharing as they do an amygdala, prefrontal cortex, and cerebellum, and (I assume) neurons that fire at the same speed. Not sure about the abstract planning. Could you have done the psychological unity of the mammals for your previous article?

comment by Shane_Legg · 2008-06-25T13:38:46.000Z

@ Silas:

Given that AIXI is uncomputable, how is somebody going to discuss implementing it?

An approximation, sure, but an actual implementation?

comment by bambi · 2008-06-25T14:32:11.000Z

What do you mean by a mind?

All you have given us is that a mind is an optimization process. And: what a human brain does counts as a mind. Evolution does not count as a mind. AIXI may or may not count as a mind (?!).

I understand your desire not to "generalize", but can't we do better than this? Must we rely on Eliezer-sub-28-hunches to distinguish minds from non-minds?

Is the FAI you want to build a mind? That might sound like a dumb question, but why should it be a "mind", given what we want from it?

comment by Roko · 2008-06-25T14:48:32.000Z

@Tim Tyler: yeah, this is always an issue. And there is the issue that AIXI might kill the person giving it the rewards. [I'm being sloppy here: you can't implement an uncomputable algorithm on a physically real computer, so we should be talking about some kind of computable approximation to the algorithm being able to recognize "itself" in a mirror.]

comment by Silas · 2008-06-25T15:42:44.000Z

Shane_Legg: factoring in approximations, it's still about zero. I googled a lot hoping to find someone actually using some version of it, but only found the SIAI's blog's Python implementation of Solomonoff induction, which doesn't even compile on Windows.

comment by Shane_Legg · 2008-06-25T16:00:35.000Z

@ Silas:

I assume you mean "doesn't run" (Python isn't normally a compiled language).

Regarding approximations of Solomonoff induction: it depends how broadly you want to interpret this statement. If we use a computable prior rather than the Solomonoff mixture, we recover normal Bayesian inference. If we define our prior to be uniform, for example by assuming that all models have the same complexity, then the result is maximum a posteriori (MAP) estimation, which in turn is related to maximum likelihood (ML) estimation. Relations can also be established to Minimum Message Length (MML), Minimum Description Length (MDL), and Maximum entropy (ME) based prediction (see Chapter 5 of An Introduction to Kolmogorov Complexity and Its Applications by Li and Vitanyi, 1997).

In short, much of statistics and machine learning can be viewed as computable approximations of Solomonoff induction.
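The point about uniform priors can be checked on a toy example. The sketch below is a hypothetical finite Bayesian model class, not Solomonoff induction itself: it assumes three Bernoulli coin models tagged with made-up description lengths in bits, and uses a 2^-bits prior as a loose, computable analogue of a complexity prior. With a uniform prior, MAP selection coincides with maximum likelihood; the complexity-weighted prior can instead favor the simpler model on the same data.

```python
from fractions import Fraction
from typing import Dict, List, Tuple

# Hypothetical finite model class: Bernoulli coins, each tagged with an ASSUMED
# description length in bits (a crude, computable stand-in for Kolmogorov complexity).
MODELS: Dict[str, Tuple[Fraction, int]] = {
    "fair": (Fraction(1, 2), 1),
    "p=3/4": (Fraction(3, 4), 4),
    "p=5/8": (Fraction(5, 8), 8),
}

def likelihood(p: Fraction, data: List[int]) -> Fraction:
    """Probability of the observed bit sequence under Bernoulli(p)."""
    result = Fraction(1)
    for x in data:
        result *= p if x == 1 else 1 - p
    return result

def posterior(data: List[int], prior: Dict[str, Fraction]) -> Dict[str, Fraction]:
    """Bayes' rule over the finite class; the prior need not be normalized."""
    unnorm = {m: prior[m] * likelihood(p, data) for m, (p, _) in MODELS.items()}
    total = sum(unnorm.values())
    return {m: w / total for m, w in unnorm.items()}

def argmax(scores: Dict[str, Fraction]) -> str:
    return max(scores, key=scores.get)

def predict_next_one(data: List[int], prior: Dict[str, Fraction]) -> Fraction:
    """Mixture prediction P(next bit = 1): each model's p weighted by its posterior."""
    post = posterior(data, prior)
    return sum(post[m] * p for m, (p, _) in MODELS.items())

uniform_prior = {m: Fraction(1) for m in MODELS}
complexity_prior = {m: Fraction(1, 2 ** bits) for m, (_, bits) in MODELS.items()}

data = [1, 1, 1, 0, 1, 0, 1, 0]  # made-up observations: five 1s, three 0s
ml = argmax({m: likelihood(p, data) for m, (p, _) in MODELS.items()})
map_uniform = argmax(posterior(data, uniform_prior))
map_complexity = argmax(posterior(data, complexity_prior))
print(ml, map_uniform, map_complexity)  # p=5/8 p=5/8 fair
```

Exact `Fraction` arithmetic is used so that the ties and orderings are not blurred by floating-point rounding; a real approximation would work in log space over a much larger class.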

comment by Phil_Goetz4 · 2008-06-25T16:23:22.000Z

The larger point, that the space of possible minds is very large, is correct.

The argument used involving ATP synthase is invalid. ATP synthase is a building block. Life on earth is all built using roughly the same set of Legos. But Legos are very versatile.

Here is an analogous argument that is obviously incorrect:

People ask me, "What is world literature like? What desires and ambitions, and comedies and tragedies, do people write about in other languages?"

And lo, I say unto them, "You have asked me a trick question."

"the" is a determiner which is identical in English poems, novels, and legal documents. It has not changed significantly since the rise of modern English in the 17th century. It is something that every English document has in common.

Any two works of literature from different countries might be less similar to each other than Hamlet is to a restaurant menu.

comment by Caledonian2 · 2008-06-25T17:01:13.000Z

I would point out, Mr. Goetz, that some languages do not have a "the".

It is not clear how this changes the content of things people say or write in those languages. Whorf-Sapir, while disproven in the technical sense, is surprisingly difficult to abolish.

comment by Cyan2 · 2008-06-25T17:18:31.000Z

Phil, I'm not really sure what your criticism has to do with what Eliezer wrote. He's saying that evolution is contingent -- bits that work can get locked into place because other bits rely on them. Eliezer asserts that AI design is not contingent in this manner, so the space of possible AI designs does not form a natural class, unlike the space of realized Earth-based lifeforms. Your objection is... what, precisely?

comment by Arosophos (Lincoln_Cannon) · 2008-06-25T17:45:57.000Z

Eliezer, do you intend your use of "artificial intelligence" to be understood as always referencing something with human origins? What does it mean to you to place some artificial intelligences outside the scope of posthuman mindspace? Do you trust that human origins are capable of producing all possible artificial intelligences?

comment by Unknown · 2008-06-25T18:07:08.000Z

Phil Goetz was not saying that all languages have the word "the." He said that the word "the" is something every ENGLISH document has in common. His criticism is that this does not mean that Hamlet is more similar to an English restaurant menu than an English novel is to a Russian novel. Likewise, Eliezer's argument does not show that we are more like petunias than like an AI.

comment by komponisto2 · 2008-06-25T18:54:07.000Z

Caledonian, Sapir-Whorf becomes trivial to abolish once you regard language in the correct way: as an evolved tool for inducing thoughts in others' minds, rather than a sort of Platonic structure in terms of which thought is necessarily organized.

Phil, I don't see how the argument is obviously incorrect. Why can't two works of literature from different cultures be as different from each other as Hamlet is from a restaurant menu?

↑ comment by wnoise · 2011-01-06T02:13:48.604Z

Sapir-Whorf becomes trivial to abolish once you regard language in the correct way: as an evolved tool for inducing thoughts in others' minds, rather than a sort of Platonic structure in terms of which thought is necessarily organized.

Even taken this way, I don't see how it abolishes Sapir-Whorf. Different languages are different tools for inducing thoughts, and may be better or worse at inducing specific kinds of thought, which will in turn influence "self"-generated thoughts.

↑ comment by sark · 2011-01-21T15:05:37.624Z

Nope, because the whole point is that thought already exists. We use language to induce thoughts in other people. With ourselves we do not have to use language to induce our own thinking; we just think.

↑ comment by TheOtherDave · 2011-01-21T15:19:57.841Z

That might be true, but I'd want to see evidence of it. If you're just appealing to intuition, well, my intuition points strongly in the other direction: I frequently find that the act of saying things out loud, or writing them down, changes the way I think about them. I often discover things I hadn't previously thought about when I write down a chain of thought, for example.

I suspect that's pretty common among at least a subset of humans.

Not to mention that the act of convincing others demonstrably affects our own thoughts, so the distinction you want to draw between "inducing thoughts in other people" and "thinking" is not as crisp as you want it to be.

↑ comment by jimrandomh · 2011-01-21T15:25:24.737Z

Does talking about or writing a thought down cause you to notice more things than if you had spent a similar amount of time thinking about it without writing anything? That's the proper baseline for comparison.

↑ comment by TheOtherDave · 2011-01-21T15:36:17.361Z

I'm not sure it is the proper baseline, actually: if I am systematically spending more time thinking about a thought when writing than when not-writing, then that's a predictable fact about the process of writing that I can make use of.

Leaving that aside, though: yes, for even moderately complex thoughts, writing it down causes me to notice more things than thinking about them for the same period of time. I am far more likely to get into loops, far less likely to notice gaps, and far more likely to rely on cached thoughts if I'm just thinking in my head.

What counts as "moderately complex" has a lot to do with what my buffer-capacity is; when I was recovering from my stroke I noticed this effect with even simple logic-puzzles of the sort that I now just solve intuitively. But the real world is full of things that are worth thinking about that my buffers aren't large enough to examine in detail.

↑ comment by sark · 2011-01-21T15:39:42.901Z

Your verbalizations can affect your own thinking the same way the utterances of other people can. Your original thoughts, however, don't originate from language and aren't structured/organized by it. They were already there, and when you hear what you said out loud, or read what you wrote down, your thoughts get modulated because language can induce thought.

Perhaps 'induce' is not a suitable word. 'Trigger' might be better. Like how a poem can be read many ways. The meanings weren't contained in or organized by the words of the poem. Rather the words triggered emotions and thoughts in our brains. Nevertheless, those emotions and words can also be triggered by non-verbal experiences. 'Language affects thought' is then as trivial as 'The smell of a rose can trigger the thought of a rose, as much as the word "rose" can.'

↑ comment by TheOtherDave · 2011-01-21T15:49:25.695Z

If you believe "the thought of a rose" is the same thing whether triggered by the smell of a rose, a picture of a rose, or the word "rose" then we disagree on something far more basic than the role of language.

That said, I agree with what you actually said. The difference between the thoughts triggered by different languages is "as trivial" (which is to say, not at all trivial) as the difference between the thoughts triggered by the smell of a rose and by the word "rose" (also non-trivial).

↑ comment by sark · 2011-01-21T16:46:46.211Z

If you believe "the thought of a rose" is the same thing whether triggered by the smell of a rose, a picture of a rose, or the word "rose" then we disagree on something far more basic than the role of language.

Of course, usually different sets of thoughts get triggered by those different triggers. Can you express more explicitly what you think we disagree on?

I use 'trivial' in the specific sense of language being no different than other environmental triggers. Not in the sense of 'magnitude of effect'.

For example, the cultural differences which usually track language differences are probably more explanatory of the different thought patterns of various groups. For instance, lack of concept for 'kissing' could simply be from kissing not being prevalent in a culture. 'Language differences' as an explanation is usually screened-off, since naturally language use will track cultural practice.

I just don't see the point of focusing specifically on language. Sapir-Whorf's ambition doesn't seem to merely be including language among the myriad influences on thought. Rather it seems to say thought is somehow systematically 'organized' or 'constrained' by language. I think this is only possible since language is so expressive. If you say 'language' influences thought you seem to have in your hands a very powerful explanatory tool which subsumes all other specific explanations.

↑ comment by TheOtherDave · 2011-01-21T17:26:52.842Z

Can you express more explicitly what you think we disagree on?

OK.

When wnoise asserted that different languages influence thoughts differently, you disagreed, implying that the language we use doesn't affect the thoughts we think because the thoughts precede the language.

I disagree with that: far from least among the many things that affect the thoughts we think is the linguistic environment in which we do our thinking.

But you no longer seem to be claiming that, so I assume I misunderstood.

Rather, you now seem to be claiming that while of course our language affects what we think, so do other things, some of them much more strongly than language. I agree with that. As far as I know, nobody I classify as even remotely sane _dis_agrees with that.

You also seem to be asserting that the idea that language influences thought is incompatible with the idea that nonlinguistic factors influence thought, because language is expressive. I don't understand this well enough to agree or disagree with it, though I don't find it compelling at all.

Put another way: I say that language influences thought, and I also say that nonlinguistic factors influence thought. What factors most relevantly influence thought depends on the specifics of the situation, but there are certainly nonlinguistic factors that reliably outweigh the role of language. As far as I know, nobody believes otherwise.

Do you disagree with any of that? If not, what did you think I was saying?

↑ comment by sark · 2011-01-21T22:32:56.331Z

Thanks for the clarification.

I was mainly addressing the topic Komponisto set up:

Sapir-Whorf becomes trivial to abolish once you regard language in the correct way: as an evolved tool for inducing thoughts in others' minds, rather than a sort of Platonic structure in terms of which thought is necessarily organized. [emphasis mine]

You see that just seems to be what this whole Sapir-Whorf debate is about. For one, I don't think there would be anything to talk about if it was simply asserting that 'language occasionally influences thought, like a rose sometimes would'. Since language somehow seems so concomitant (if not actually integral) to thought, this seems to show that we are somehow severely constrained/organized by the language we speak. So apologies if I'm getting this all wrong, but I just don't think it is fair for you to say 'of course I agree that other things influence thought'. You seem to be ignoring the obvious implication of language being so intricately tied up with thought.

We do have a substantial disagreement in that you seem to think that even though language is one of the many influences on thought, its impact is especially significant since language is somehow intimately dependent on thought.

I can simultaneously agree that language can influence thought and that speaking different languages has little influence on thought. This is because I think most of the time other factors, culture being the most obvious one, screens off language as an explanation for differing thought patterns among people who speak different languages. This simply means that the seeming influence of language on thought is actually the influence of culture on thought, and in turn of thought on language, and then of language on thought again.

I mentioned the expressiveness of language because I wanted to show how it can seem like it is language affecting thought, when it is simply channeling the influences of other factors, which it can easily do because it is expressive.

I'll try to summarize my position:

If you somehow managed to change the language a person speaks without changing anything else, you will not see a systematic effect on his thought patterns. This is because he would soon adapt the language for his use, based on his existent thoughts (most of which are not even remotely determined by language). The effect of language on thought is an illusion, it is actually his/his culture's other thoughts giving rise to the language which seem to then independently have an effect on his thought.

The phenomenon of language influencing thought, is more helpfully thought of as thought influencing thought.

↑ comment by komponisto · 2011-01-21T23:06:19.818Z

I can simultaneously agree that language can influence thought and that speaking different languages has little influence on thought. This is because I think most of the time other factors, culture being the most obvious one, screens off language as an explanation for differing thought patterns among people who speak different languages. This simply means that the seeming influence of language on thought is actually the influence of culture on thought, and in turn of thought on language, and then of language on thought again.

(...)

If you somehow managed to change the language a person speaks without changing anything else, you will not see a systematic effect on his thought patterns. This is because he would soon adapt the language for his use, based on his existent thoughts (most of which are not even remotely determined by language). The effect of language on thought is an illusion, it is actually his/his culture's other thoughts giving rise to the language which seem to then independently have an effect on his thought.

The phenomenon of language influencing thought, is more helpfully thought of as thought influencing thought.

Excellently put. The view you express here coincides exactly with mine.

↑ comment by TheOtherDave · 2011-01-21T23:28:16.367Z

For an English speaker, I expect that a picture of a rose will increase (albeit minimally) their speed/accuracy in a tachistoscopic word-recognition test when given the word "columns."

For a Chinese speaker, I don't expect the same effect for the Chinese translation of "columns."

I expect this difference because priming effects on speed/accuracy of tachistoscopic word-recognition tasks based on lexical associations are well-documented, and because for an English-speaker, the picture of a rose is associated with the word "rose," which is associated with the word "rows," which is associated with the word "columns," and because I know of no equivalent association-chain for a Chinese-speaker.

Of course, how long it takes to identify "columns" (or its translation) as a word isn't the kind of thing people usually care about when they talk about differences in thought. It's a trivial example, agreed.

I mention it not because I think it's hugely important, but because it is concrete. That is, it is a demonstrable difference in the implicit associations among thoughts that can't easily be attributed to some vague channeling of the influences of unspecified and unmeasurable differences between their cultures.

Sure, it's possible to come up with such an explanation after the fact, but I'd be pretty skeptical of that sort of "explanation". It's far more likely due to the differences between languages that caused me to predict it in the first place.

One could reply that, sure, differences in languages can create trivial influences in associations among thoughts, but not significant ones, and it's "just obvious" that significant influences are what this whole Sapir-Whorf discussion is about.

I would accept that.

↑ comment by wnoise · 2011-01-22T21:11:23.685Z

This is because I think most of the time other factors, culture being the most obvious one, screens off language as an explanation for differing thought patterns among people who speak different languages.

There is not a one-to-one correspondence between culture and language, of course. The screening off is fairly weak. Brazil and Portugal simply do not have the same culture, nor for that matter do Texas and California.

If you somehow managed to change the language a person speaks without changing anything else, you will not see a systematic effect on his thought patterns.

Even stronger separation happens for those who can speak multiple languages, and for these people culture does not screen off language. We can actually "change the language a person speaks" in this case. Do the polylingual talk about being able to think differently in different languages?

↑ comment by sark · 2011-01-22T22:42:22.806Z

Brazil and Portugal simply do not have the same culture, nor for that matter do Texas and California.

I don't think that has anything to do with the strength of the screening-off at all.

P(T|C&L) = P(T|C) means C screens off L, which does not imply P(T|L&C) = P(T|L), i.e. that L screens off C. Screening off is not symmetric.

Or in other words, I have not said that if 2 cultures are not the same but their languages are, then the thinking could not be different.
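The asymmetry of screening off can be checked numerically. This is a minimal sketch with made-up probabilities (every number is an assumption, not data): C (culture) influences both L (language) and T (thought pattern), and T depends on C alone. Conditioning on C then makes L irrelevant, so P(T|C&L) = P(T|C), while conditioning on L alone leaves C relevant, so P(T|L&C) differs from P(T|L).

```python
from fractions import Fraction
from itertools import product
from typing import Callable

Pred = Callable[[int, int, int], bool]

# Made-up binary toy model: C = culture, L = language, T = thought pattern.
P_C1 = Fraction(1, 2)                                    # P(C = 1)
P_L_MATCHES_C = Fraction(4, 5)                           # language usually tracks culture
P_T1_GIVEN_C = {0: Fraction(1, 10), 1: Fraction(9, 10)}  # T depends on C only

def joint(c: int, l: int, t: int) -> Fraction:
    """P(C=c, L=l, T=t) = P(c) * P(l | c) * P(t | c)."""
    p_c = P_C1 if c == 1 else 1 - P_C1
    p_l = P_L_MATCHES_C if l == c else 1 - P_L_MATCHES_C
    p_t = P_T1_GIVEN_C[c] if t == 1 else 1 - P_T1_GIVEN_C[c]
    return p_c * p_l * p_t

def cond(event: Pred, given: Pred) -> Fraction:
    """P(event | given), summing the joint over all eight (c, l, t) outcomes."""
    outcomes = list(product((0, 1), repeat=3))
    num = sum(joint(*o) for o in outcomes if event(*o) and given(*o))
    den = sum(joint(*o) for o in outcomes if given(*o))
    return num / den

def t_is_1(c: int, l: int, t: int) -> bool:
    return t == 1

p_t_given_c_and_l = cond(t_is_1, lambda c, l, t: c == 1 and l == 1)
p_t_given_c = cond(t_is_1, lambda c, l, t: c == 1)
p_t_given_l = cond(t_is_1, lambda c, l, t: l == 1)

print(p_t_given_c_and_l, p_t_given_c, p_t_given_l)  # 9/10 9/10 37/50
```

Knowing C makes L redundant for predicting T, but knowing L still leaves C informative, which is the sense in which screening off runs in one direction only.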

Even stronger separation happens for those who can speak multiple languages, and for these people culture does not screen off language. We can actually "change the language a person speaks" in this case. Do the polylingual talk about being able to think differently in different languages?

Nice one. My prediction is that their thinking would not be affected in any systematic way at the level of abstractions.

comment by Phil_Goetz4 · 2008-06-25T22:18:13.000Z

Phil, I don't see how the argument is obviously incorrect. Why can't two works of literature from different cultures be as different from each other as Hamlet is from a restaurant menu?

They could be, but usually aren't. "World literature" is a valid category.

comment by Fabio_Franco · 2008-06-25T23:01:42.000Z

This discussion reminds me of Frithjof Schuon's "The Transcendent Unity of Religions", in which he argues that a metaphysical unity exists which transcends the manifest world and which can be "univocally described by none and concretely apprehended by few".

comment by Nick_Tarleton · 2008-06-25T23:26:05.000Z

poke: what are you trying to say? It "exists" in the same sense as the set of all integers, i.e. it's a natural and useful abstraction, regardless of what you think of it ontologically.

comment by Unknown · 2008-06-26T16:24:04.000Z

In regard to AIXI: One should consider more carefully the fact that any self-modifying AI can be exactly modeled by a non-self-modifying AI.

One should also consider the fact that no intelligent being can predict its own actions-- this is one of those extremely rare universals. But this doesn't mean that it can't recognize itself in a mirror, despite its inability to predict its actions.
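The first point above, that a self-modifying AI can be modeled by a non-self-modifying one, is the familiar observation that a fixed interpreter can run a program that rewrites itself, because the rewritable code is just part of the interpreter's data. Here is a minimal sketch using a made-up three-instruction language (the instruction names `add`, `mul`, and `patch` are hypothetical):

```python
from typing import List, Tuple

Instr = Tuple  # ("add", k), ("mul", k), or ("patch", index, new_instr)

def run(program: List[Instr], steps: int) -> Tuple[int, List[Instr]]:
    """A fixed interpreter: its own code never changes, even when the program it runs does."""
    code = list(program)  # the "self-modifying" code lives here, as ordinary data
    acc, pc = 0, 0
    for _ in range(steps):
        op = code[pc]
        if op[0] == "add":
            acc += op[1]
        elif op[0] == "mul":
            acc *= op[1]
        elif op[0] == "patch":
            code[op[1]] = op[2]  # the program rewrites one of its own instructions
        else:
            raise ValueError(f"unknown instruction {op!r}")
        pc = (pc + 1) % len(code)  # loop back to the start after the last instruction
    return acc, code

# Program: add 1, then overwrite instruction 0 with "multiply by 2", and loop.
acc, final_code = run([("add", 1), ("patch", 0, ("mul", 2))], steps=5)
print(acc, final_code[0])  # 4 ('mul', 2)
```

The function `run` is identical before and after the program patches itself; only its data changes.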

comment by jmmcd · 2009-01-06T17:44:46.000Z

If we focus on the bounded subspace of mind design space which contains all those minds whose makeup can be specified in a trillion bits or less, then every universal generalization that you make has two to the trillionth power chances to be falsified.

Conversely, every existential generalization - "there exists at least one mind such that X" - has two to the trillionth power chances to be true.

So you want to resist the temptation to say either that all minds do something, or that no minds do something.

This is fine where X is a property which has a one-to-one correspondence with a particular bit in the mind's specification. For higher-level properties (perhaps emergent ones -- yes, I said it) this probabilistic argument is not convincing.

Consider the minds of specification-size 1 trillion. We can happily make the generalisation that none of them will be able to predict whether a given Turing machine halts. Yes, there are 2^trillion chances for this generalisation to be falsified, but we know it never will be.
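The standard diagonal argument behind this generalisation fits in a few lines. Strictly, it shows that no program (and so no mind implemented as one) decides halting correctly for every program; whether one particular machine halts can of course be predicted. Given any candidate decider `halts` (a hypothetical function that takes a zero-argument program), the construction builds a program on which that decider's verdict is wrong:

```python
from typing import Callable

Program = Callable[[], object]

def make_contrarian(halts: Callable[[Program], bool]) -> Program:
    """Diagonalize against a claimed halting decider."""
    def contrarian() -> object:
        if halts(contrarian):
            while True:  # decider said "halts", so loop forever
                pass
        return "halted"  # decider said "loops", so halt immediately
    return contrarian

# A candidate decider that always answers "does not halt":
pessimist = lambda prog: False
c = make_contrarian(pessimist)
print(pessimist(c), c())  # False halted  (the decider was wrong)

# For a decider that always answers "halts", the contrarian would loop forever,
# so that verdict is also wrong; we deliberately do not call it here.
```

The same construction defeats any decider you plug in, which is why no amount of search through mind design space will turn one up.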

But this generalisation is true of everything, not just "minds", so we haven't added to our knowledge. Well, let's try this generalisation instead: no mind's state will remain unchanged by a non-null input. This is not true of rocks, but is true of minds. Perhaps there are some other, more useful, things we can say about minds.

Apologies for resurrecting a months-old post. I'm new here.

↑ comment by sark · 2011-01-21T15:23:54.184Z

Consider the minds of specification-size 1 trillion. We can happily make the generalisation that none of them will be able to predict whether a given Turing machine halts. Yes, there are 2^trillion chances for this generalisation to be falsified, but we know it never will be.

Eliezer did qualify those statements:

Somewhere in mind design space is at least one mind with almost any kind of logically consistent property you care to imagine.

comment by Kaj_Sotala · 2011-01-05T08:44:02.274Z

Just happened to re-read this post. As before, reading it fills me with a kind of fascinated awe, akin to staring at the night sky and wondering at all the possible kinds of planets and lifeforms out there.

Replies from: Will_Newsome↑ comment by Will_Newsome · 2011-01-06T17:48:06.906Z · LW(p) · GW(p)

Reading it makes me feel annoyed, because Eliezer seems to ignore convergence in the mindspace of increasingly general intelligences. All optimization processes have to approximate something like Bayesian decision theory, and there does seem to be a coherent universal vector in human preferencespace that gets absorbed into individual humans (instead of just being implicit in the overall structure of humane values). Egalitarianism, altruism, curiosity, et cetera are to some extent convergent features of minds. The universal AI drives are the most obvious example, but we might underestimate to what extent sexual selection, game theoretically-derived moral sentiments, et cetera are convergent, especially in the limit as intelligence/wisdom/knowledge approaches a singularity. I wonder if the Babyeater equivalent of a Buddha would give up babyeating the same way that a human Buddha gives up romantic love. I suspect that Buddhahood or something close is the only real attractor in mindspace that could be construed as reflectively consistent given the vector humanity seems to be on. We should enjoy the suffering and confusion while we can.

Replies from: Will_Newsome, TheOtherDave↑ comment by Will_Newsome · 2011-01-06T17:52:49.243Z · LW(p) · GW(p)

Relatedly, I've formed the tentative intuition that paper clip maximizers are very hard to build; in fact, harder to build than FAI. What you do get out of kludge superintelligences is probably just going to be the pure universal AI drives (or something like that), or possibly some sort of approximately objective convergent decision theoretic policy, perhaps dictated by the acausal economy.

Replies from: Mitchell_Porter↑ comment by Mitchell_Porter · 2011-01-07T04:25:44.687Z · LW(p) · GW(p)

The only really hard part about making a superintelligent paperclip maximizer is the superintelligence. If you think that specifying the goal of a Friendly AI is just as easy, then making a superintelligent FAI will be just as hard. The "fragility of value" thesis argues that Friendliness is significantly harder to specify, because there are many ways to go wrong.

I suspect that Buddhahood or something close is the only real attractor in mindspace that could be construed as reflectively consistent given the vector humanity seems to be on.

I don't. Simple selfishness is definitely an attractor (in the sense that it's an attitude that many people end up adopting), and it wouldn't take much axiological surgery to make it reflectively consistent.

Replies from: Will_Newsome, Will_Newsome↑ comment by Will_Newsome · 2011-01-07T13:17:18.678Z · LW(p) · GW(p)

The only really hard part about making a superintelligent paperclip maximizer is the superintelligence. If you think that specifying the goal of a Friendly AI is just as easy, then making a superintelligent FAI will be just as hard. The "fragility of value" thesis argues that Friendliness is significantly harder to specify, because there are many ways to go wrong.

No, it takes a lot of work to specify paperclips, and thus it's not as easy as just superintelligence. You need goal stability, a stable ontology of paperclips, et cetera. I'm positing that it's easier to specify human values than to specify paperclips. I have a long list of considerations, but the most obvious is that paperclips have much higher Kolmogorov complexity than human values given some sort of universal prior with a sort of prefix you could pick up by looking at the laws of physics.

Replies from: ciphergoth, wnoise↑ comment by Paul Crowley (ciphergoth) · 2011-01-07T13:35:05.911Z · LW(p) · GW(p)

I agree with your first sentence, but I suspect that building a friendly AI is harder than building a paperclipper.

↑ comment by wnoise · 2011-01-07T19:11:47.055Z · LW(p) · GW(p)

No, it takes a lot of work to specify paperclips, and thus it's not as easy as just superintelligence.

Reference class confusion. "Paperclipper" refers to any "universe tiler", not just one that tiles with paperclips. Specifying paperclips in particular is hard. If you don't care about exactly what gets tiled, it's much easier.

goal stability

For well-understood goals, that's easy. Just hardcode the goal. It's making goals that can change in a useful way that's hard. Part of the hardness of FAI is that we don't understand Friendliness, we don't know what humans want, and we don't know what's good for humans, and any simplistic fixed goal will cut off our evolution.

the most obvious is that paperclips have much higher Kolmogorov complexity than human values

I don't see how you could possibly believe that, except out of wishful thinking. Human values are contingent on our entire evolutionary history. My parochial values are contingent upon cultural history and my own personal history. Our values are not universal. Different types of creature will develop radically different values with only small points of contact and agreement.

Replies from: JGWeissman, TheOtherDave, Will_Newsome↑ comment by JGWeissman · 2011-01-07T19:17:45.253Z · LW(p) · GW(p)

goal stability

For well-understood goals, that's easy. Just hardcode the goal.

Hardcoding is not necessarily stable in programs that can edit their own source code.

Replies from: wnoise↑ comment by wnoise · 2011-01-16T23:38:41.969Z · LW(p) · GW(p)

Really? Isn't editing one's goal directly contrary to one's goal? If an AI self-edits in such a way that its goal changes, it will predictably no longer be working towards that goal, and will thus not consider it a good idea to edit its goal.

Replies from: Vaniver↑ comment by Vaniver · 2011-01-16T23:51:25.214Z · LW(p) · GW(p)

It depends on how it decides whether or not changes are a good thing. If it is trying out two utility functions (Ub for utility before and Ua for utility after), you need to be careful to ensure it doesn't say "hey, Ua(x)>Ub(x), so I can make myself better off by switching to Ua!".

Ensuring that doesn't happen is not simple, because it requires stability throughout everything. There can't be a section that decides to try being goalless, or go about resolving the goal in a different way (which is troublesome if you want it to cleverly use instrumental goals).

[edit] To be clearer, you need to not just have the goals be fixed and well-understood, but every part of everywhere else also needs to have a fixed and well-understood relationship to the goals (and a fixed and well-understood sense of understanding, and ...). Most attempts to rewrite source code are not that well-planned.
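A toy sketch of the failure mode Vaniver describes, set in a hypothetical two-action world where all names and numbers are illustrative. The naive check compares Ua(x) with Ub(x) and approves the switch. A goal-preserving check instead scores both possible successors with the current function Ub and rejects it. This covers only the narrow point. As the comment says, the hard part is making sure every other component routes its decisions through such a check.

```python
# Hypothetical toy world: each action leads to one outcome.
OUTCOMES = {
    "make_paperclips": {"paperclips": 10, "staples": 0},
    "make_staples": {"paperclips": 0, "staples": 50},
}

def u_before(outcome):
    """Ub: the agent's current utility function (values only paperclips)."""
    return outcome["paperclips"]

def u_after(outcome):
    """Ua: a candidate replacement utility function (values only staples)."""
    return outcome["staples"]

def best_action(utility):
    """The action an agent maximizing `utility` would take in this toy world."""
    return max(OUTCOMES, key=lambda a: utility(OUTCOMES[a]))

def naive_should_switch():
    """Failure mode: 'Ua(x) > Ub(x), so I'd be better off as Ua!'"""
    x = OUTCOMES[best_action(u_after)]  # the world the modified agent would produce
    return u_after(x) > u_before(x)

def goal_preserving_should_switch():
    """Judge the switch with the *current* function Ub, applied to the outcome
    each version of the agent would actually bring about."""
    keep = u_before(OUTCOMES[best_action(u_before)])
    switch = u_before(OUTCOMES[best_action(u_after)])
    return switch > keep

print(naive_should_switch(), goal_preserving_should_switch())
```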

↑ comment by TheOtherDave · 2011-01-07T19:53:27.438Z · LW(p) · GW(p)

"Paperclipper" refers to any "universe tiler", not just one that tile with paperclips.

Well, a number of voices here do seem to believe that there is something instantiable which is the thing that humans actually want and will continue to want no matter how much they improve/modify.

Presumably those voices would not call something that tiles the universe with that thing a paperclipper, despite agreeing that it's a universe tiler, at least technically.

Replies from: wnoise↑ comment by wnoise · 2011-01-07T20:45:18.482Z · LW(p) · GW(p)

I believe that most voices here think that there are conditions that humans actually want and that one of them is variety. This in no way implies a material tiling. Permanency of these conditions is more questionable, of course.

The only plausible attractive tiling with something material that I could see even a large minority agreeing to would be a computational substrate, "computronium".

Replies from: TheOtherDave↑ comment by TheOtherDave · 2011-01-07T21:48:40.514Z · LW(p) · GW(p)

If we're talking about conditions that don't correlate well with particular classes of material things, sure.

That's rarely true of the conditions I (or anyone else I know) value in real life, but I freely grant that real life isn't a good reference class for these sorts of considerations, so that's not evidence of much.

Still, failing a specific argument to the contrary, I would expect whatever conditions humans maximally value (assuming there is a stable referent for that concept, which I tend to doubt, but a lot of people seem to believe strongly) to implicitly define a class of objects that optimally satisfies those conditions. Even variety, assuming that it's not the trivial condition of variety wherein anything is good as long as it's new.

Computronium actually raises even more questions. It seems that unless one values the accurate knowledge (even when epistemically indistinguishable from the false belief) that one is not a simulation, the ideal result would be to devote all available mass-energy to computronium running simulated humans in a simulated optimal environment.

Replies from: wnoise↑ comment by wnoise · 2011-01-07T23:53:24.323Z · LW(p) · GW(p)

If we're talking about conditions that don't correlate well with particular classes of material things, sure.

I think that needs a bit of refinement. Having lots of food correlates quite well with food. Yet no one here wants to tile the universe with white rice. Other people are a necessity for a social circle, but again few want to tile the universe with humans (okay, there are some here that could be caricatured that way).

I think we all want to eventually have the entire universe able to be physically controlled by humanity (and/or its FAI guardians), but tiling implies a uniformity that we won't want. Certainly not on a local scale, and probably not on a macroscale either.

Computronium actually raises even more questions.

Right, which is why I brought it up as one of the few reasonable counterexamples. Still, it's the programming that makes the difference between heaven and hell.

↑ comment by Will_Newsome · 2011-01-08T01:46:00.189Z · LW(p) · GW(p)

"Paperclipper" refers to any "universe tiler", not just one that tile with paperclips. Specifying paperclips in particular is hard. If you don't care about exactly what gets tiled, it's much easier.

My arguments apply to most kinds of things that tile the universe with highly arbitrary things like paperclips, though they apply less to things that tile the universe with less arbitrary things like bignums or something. I do believe arbitrary universe tilers are easier than FAI; just not paperclippers.

For well-understood goals, that's easy. Just hardcode the goal.

There are currently no well-understood goals, nor are there obvious ways of hardcoding goals, nor are hardcoded goals necessarily stable for self-modifying AIs. We don't even know what a goal is, let alone do we know how to solve the grounding problem of specifying what a paperclip is (or a tree is, or what have you). With humans you get the nice trick of getting the AI to look back on the process that created it, or alternatively just use universal induction techniques to find optimization processes out there in the universe, which will also find humans. (Actually I think it would find mostly memes, not humans per se, but a lot of what humans care about is memes.)

Human values are contingent on our entire evolutionary history.

Paperclips are contingent on that, plus a whole bunch of random cultural stuff. Again, if we're talking about universe tilers in general this does not apply.

Also, as a sort of appeal to authority, I've been working at the Singularity Institute for a year now, and have spent many many hours thinking about the problem of FAI (though admittedly I've given significantly less thought to how to build a paperclipper). If my intuitions are unsound, it is not for reasons that are intuitively obvious.

Replies from: jimrandomh, cousin_it↑ comment by jimrandomh · 2011-01-08T02:01:48.349Z · LW(p) · GW(p)

Paperclips are contingent on that, plus a whole bunch of random cultural stuff. Again, if we're talking about universe tilers in general this does not apply.

Really? Maybe if you wanted it to be able to classify whether any arbitrary corner case counts as a paperclip or not, but that isn't required for a paperclipper. If you're just giving it a shape to copy then I don't see why that would be more than a hundred bytes or so - trivial compared to the optimizer.

Replies from: Will_Newsome↑ comment by Will_Newsome · 2011-01-08T03:39:29.270Z · LW(p) · GW(p)

If you're just giving it a shape to copy then I don't see why that would be more than a hundred bytes or so - trivial compared to the optimizer.

A hundred bytes in what language? I get the intuition, but it really seems to me like paper clips are really complex. There are lots of important qualities of paperclips that make them clippy that seem to me like they'd be very hard to get an AI to understand. You say you're giving it a shape, but that shape is not at all easily defined. And its molecular structure might be important, and its size, and its density, and its ability to hold sheets of paper together... Shape and molecular component aren't fundamental attributes of the universe that an AI would have a native language for. This is why we can't just keep an oracle AI in a box -- it turns out that our intuitive idea of what a box is is really hard to explain to a de novo AI. Paperclips are similar. And if the AI is smart enough to understand human concepts that well, then you should also be able to just type up CEV and give it that instead... CEV is easier to describe than a paperclip in that case, since CEV is already written up. (Edit: I mean a description of CEV is written up, not CEV. We're still working on the latter.)

Replies from: Will_Sawin, Normal_Anomaly, datadataeverywhere↑ comment by Will_Sawin · 2011-01-10T00:18:04.223Z · LW(p) · GW(p)

If you can understand human concepts, "paperclip" is sufficient to tell you about paperclips. Google "paperclip," you get hundreds of millions of results.

"Understanding human concepts" may be hard, but understanding arbitrary concrete concepts seems harder than understanding arbitrary abstract concepts that take a long essay to write up and have only been written up by one person and use a number of other abstract concepts in this writeup.

Replies from: JoshuaZ↑ comment by JoshuaZ · 2011-01-21T14:31:58.000Z · LW(p) · GW(p)

but understanding arbitrary concrete concepts seems harder than understanding arbitrary abstract concepts

Do you mean easier here?

Replies from: Will_Sawin↑ comment by Will_Sawin · 2011-01-21T21:04:05.033Z · LW(p) · GW(p)

Yes.

↑ comment by Normal_Anomaly · 2011-01-11T01:26:57.634Z · LW(p) · GW(p)

Well, here's a recipe for a paperclip in English: Make a wire, 10 cm long and 1 mm in diameter, composed of an alloy of 99.8% iron and 0.2% carbon. Start at one end and bend it such that the segments from 2-2.5 cm, 2.75-3.25 cm, and 5.25-5.75 cm form half-circles, with all the bends in the same direction and forming an inward spiral (the end with the first bend is outside the third bend).
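The arithmetic the recipe implies can be checked directly. The sketch below encodes only the lengths quoted above and uses hypothetical helper names. It lists the straight stretches left between the bends. It also computes the centerline radius of each half-circle from r = arc length / π. It says nothing about whether the result is a good paperclip.

```python
import math

WIRE_LENGTH_CM = 10.0
# Bend intervals from the recipe, measured along the wire from the starting end (cm).
BENDS_CM = [(2.0, 2.5), (2.75, 3.25), (5.25, 5.75)]

def straight_segments(length, bends):
    """Return the unbent stretches of wire before, between, and after the bends."""
    segments, start = [], 0.0
    for lo, hi in bends:
        segments.append((start, lo))
        start = hi
    segments.append((start, length))
    return segments

def half_circle_radius(arc_length):
    """A half-circle of radius r has arc length pi * r, so r = arc_length / pi."""
    return arc_length / math.pi

print(straight_segments(WIRE_LENGTH_CM, BENDS_CM))
print([round(half_circle_radius(hi - lo), 3) for lo, hi in BENDS_CM])  # centerline radii, cm
```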

↑ comment by datadataeverywhere · 2011-01-21T15:14:08.996Z · LW(p) · GW(p)

Sample a million paperclips from different manufacturers. Automatically cluster to find the 10,000 that are most similar to each other. Anything is a paperclip if it is more similar to one of those along every analytical dimension than another in the set.

Very easy, requires no human values. The AI is free to come up with strange dimensions along which to analyze paperclips, but it now has a clearly defined concept of paperclip, some (minimal) flexibility in designing them, and no need for human values.
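The wording of the membership rule allows more than one reading. This sketch picks one and labels it as an assumption. It keeps the k samples with the smallest mean distance to the rest, a crude stand-in for "automatically cluster". A candidate then counts as a paperclip if there are core items c and c2 such that the candidate is at least as close to c as c2 is, on every feature dimension. The feature tuples are hypothetical, and the pairwise scan is O(n²), so a million real samples would need a proper clustering method.

```python
import math

def select_core(samples, k):
    """Keep the k samples with the smallest mean distance to all other samples."""
    def mean_dist(i):
        return sum(math.dist(samples[i], s)
                   for j, s in enumerate(samples) if j != i) / (len(samples) - 1)
    ranked = sorted(range(len(samples)), key=mean_dist)
    return [samples[i] for i in ranked[:k]]

def is_paperclip(x, core):
    """Assumed reading: x qualifies if, for some core item c, some other core item c2
    is at least as far from c as x is, on every feature dimension."""
    for i, c in enumerate(core):
        for j, c2 in enumerate(core):
            if i == j:
                continue
            if all(abs(xd - cd) <= abs(c2d - cd) for xd, cd, c2d in zip(x, c, c2)):
                return True
    return False

# Hypothetical features, e.g. (length_cm, wire_diameter_mm); the last sample is an outlier.
samples = [(1.0, 1.0), (1.1, 0.9), (0.9, 1.1), (1.05, 1.0), (5.0, 5.0)]
core = select_core(samples, k=4)
print(is_paperclip((1.0, 1.05), core), is_paperclip((3.0, 3.0), core))
```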

Replies from: shokwave↑ comment by shokwave · 2011-01-21T15:36:08.889Z · LW(p) · GW(p)

Counter:

Sample a million people from different continents. Automatically cluster to find the 10,000 that are most similar to each other. Anything is a person if it is more similar to one of those along every analytical dimension than another in the set.

This is already tripping majoritarianism alarm bells.

I would meditate on this for a while when trying to define a paperclip.

Replies from: TheOtherDave, datadataeverywhere↑ comment by TheOtherDave · 2011-01-21T16:23:54.400Z · LW(p) · GW(p)

Sure.

For that matter, one could play all kinds of Hofstadterian games along these lines... is a staple a paperclip? After all, it's a thin piece of shaped metal designed and used to hold several sheets of paper together. Is a one-pound figurine of a paperclip a paperclip? Does it matter if you use it as a paperweight, to hold several pieces of paper together? Is a directory on a computer file system a virtual paperclip? Would it be more of one if we'd used the paperclip metaphor rather than the folder metaphor for it? And on and on and on.

In any case, I agree that the intuition that paperclips are an easy set to define depends heavily on the idea that not very many differences among candidates for inclusion matter very much, and that it should be obvious which differences those are. And all of that depends on human values.

Put a different way: picking 10,000 manufactured paperclips and fitting a category definition to those might exclude any number of things that, if asked, we would judge to be paperclips... but we don't really care, so a category arrived at this way is good enough. Adopting the same approach to humans would similarly exclude things we would judge to be human... and we care a lot about that, at least sometimes.

↑ comment by datadataeverywhere · 2011-01-22T01:03:34.817Z · LW(p) · GW(p)

TheOtherDave got it right; I wasn't trying to give a complete definition of what is and isn't a paperclip, I was just offering forth an easy to define (without human values) subset that we would still call paperclips.

It has plenty of false negatives, but I don't really see that as a loss. Likewise, your personhood algorithm doesn't bother me as long as we don't use it to establish non-personhood.

Replies from: shokwave↑ comment by cousin_it · 2011-01-21T13:23:45.459Z · LW(p) · GW(p)

There are currently no well-understood goals, nor are there obvious ways of hardcoding goals

"Find a valid proof of this theorem from the axioms of ZFC". This goal is pretty well-understood, and I don't believe an AI with such a goal will converge on Buddha. Or am I misunderstanding your position?

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2011-01-21T14:20:26.599Z · LW(p) · GW(p)

This doesn't take into account logical uncertainty. It's easy to write a program that eventually computes the answer you want, and then pose a question of doing that more efficiently while provably retaining the same goal, which is essentially what you cited, with respect to a brute force classical inference system starting from ZFC and enumerating all theorems (and even this has its problems, as you know, since the agent could be controlling which answer is correct). A far more interesting question is which answer to name when you don't have time to find the correct answer. "Correct" is merely a heuristic for when you have enough time to reflect on what to do.

(Also, even to prove theorems, you need operating hardware, and managing that hardware and other actions in the world would require decision-making under (logical) uncertainty. Even nontrivial self-optimization would require decision-making under uncertainty that has a "chance" of turning you from the correct question.)

Replies from: cousin_it↑ comment by cousin_it · 2011-01-21T14:59:07.140Z · LW(p) · GW(p)

A far more interesting question is which answer to name when you don't have time to find the correct answer.

What's more interesting about it? Think for some time and then output the best answer you've got.

Replies from: Vladimir_Nesov↑ comment by Vladimir_Nesov · 2011-01-21T15:19:01.019Z · LW(p) · GW(p)

Try to formalize this intuition. With provably correct answers, that's easy. Here, you need a notion of "best answer I've got", a way of comparing possible answers where correctness remains inaccessible. This makes it "more interesting": where the first problem is solved (to an extent), this one isn't.
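A sketch of "think for some time and then output the best answer you've got" helps locate where the difficulty sits. It uses a hypothetical function and a step budget in place of wall-clock time. The exact check `is_correct` corresponds to the "provably correct" case. The fallback depends entirely on a `score` function, which is the comparison of answers under inaccessible correctness that Nesov says has not been formalized. In the toy usage, the task is finding a divisor of 91, and the "small remainder" heuristic is arbitrary.

```python
def anytime_answer(candidates, is_correct, score, budget):
    """Examine at most `budget` candidates. Return (answer, proved): proved is True
    only if a candidate passed the exact check; otherwise return the candidate
    with the highest heuristic score seen before the budget ran out."""
    best, best_score = None, float("-inf")
    for steps, cand in enumerate(candidates):
        if steps >= budget:
            break
        if is_correct(cand):
            return cand, True
        s = score(cand)
        if s > best_score:
            best, best_score = cand, s
    return best, False

n = 91  # 7 * 13
is_divisor = lambda c: n % c == 0
small_remainder = lambda c: -(n % c)  # an arbitrary heuristic; choosing it is the open problem
print(anytime_answer(range(2, n), is_divisor, small_remainder, budget=10))
print(anytime_answer(range(2, n), is_divisor, small_remainder, budget=3))
```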

↑ comment by Will_Newsome · 2011-01-07T13:23:05.790Z · LW(p) · GW(p)

I don't. Simple selfishness is definitely an attractor (in the sense that it's an attitude that many people end up adopting), and it wouldn't take much axiological surgery to make it reflectively consistent.

Individual humans sometimes become more selfish, but not consistently reflectively so, and humanity seems to be becoming more humane over time. Obviously there's a lot of interpersonal and even intrapersonal variance, but the trend in human values is both intuitively apparent and empirically verified by e.g. the World Values Survey and other axiological sociology. Also, I doubt selfishness is as strong an attractor among people who are the smartest and most knowledgeable of their time. Look at e.g. Maslow's research on people at the peak of human performance and mental health, and the attractors he identified as self-actualization and self-transcendence. Selfishness (or simple/naive selfishness) mostly seems like a pitfall for stereotypical amateur philosophers and venture capitalists.

Replies from: Mitchell_Porter↑ comment by Mitchell_Porter · 2011-01-21T12:57:03.852Z · LW(p) · GW(p)

I'm taking another look at this and find it hard to sum up just how many problems there are with your argument.

I doubt selfishness is as strong an attractor among people who are the smartest and most knowledgeable of their time.

What about the people who are the most powerful of their time? Think about what the psychology of a billionaire must be. You don't accumulate that much wealth just by setting out to serve humanity. You care about offering your customers a service, but you also try to kill the competition, and you cut deals with the already existing powers, especially the state. Most adults are slaves, to an economic function if not literally doing what another person tells them, and then there is a small wealthy class of masters who have the desire and ability to take advantage of this situation.

I started out by opposing "simple selfishness" to your hypothesis that "Buddhahood or something close" is the natural endpoint of human moral development. But there's also group allegiance: my family, my country, my race, but not yours. I look out for my group, it looks out for me, and caring about other groups is a luxury for those who are really well off. Such caring is also likely to be pursued in a form which is advantageous, whether blatantly or subtly, for the group which gets to play benefactor. We will reshape you even while we care for you.

Individual humans sometimes become more selfish, but not consistently reflectively so

How close do you think anyone has ever come to reflective consistency? Anyway, you are reflectively consistent if there's no impulse within you to change your goals. So anyone, whatever their current goals, can achieve reflective consistency by removing whatever impulses for change-of-values they may have.

the only real attractor in mindspace that could be construed as reflectively consistent given the vector humanity seems to be on.

Reflective consistency isn't a matter of consistency with your trajectory so far, it's a matter of consistency when examined according to your normative principles. The trajectory so far did not result from any such thorough and transparent self-scrutiny.

Frankly, if I ask myself, what does the average human want to be, I'd say a benevolent dictator. So yes, the trend of increasing humaneness corresponds to something - increased opportunity to take mercy on other beings. But there's no corresponding diminution of interest in satisfying one's own desires.

Let's see, what else can I disagree with? I don't really know what your concept of Buddhahood is, but it sounds a bit like nonattachment for the sake of pleasure. I'll take what pleasures I can, and I'll avoid the pain of losing them by not being attached to them. But that's aestheticism or rational hedonism. My understanding of Buddhahood is somewhat harsher (to a pleasure-seeking sensibility), because it seeks to avoid pleasure as well as pain, the goal after all being extinction, removal from the cycle of life. But that was never a successful mass philosophy, so you got more superstitious forms of Buddhism in which there's a happy pure-land afterlife and so on.

I also have to note that an AI does not have to be a person, so it's questionable what implications trends in human values have for AI. What people want themselves to be and what they would want a non-person AI to be are different topics.

Replies from: TheOtherDave↑ comment by TheOtherDave · 2011-01-21T15:28:03.799Z · LW(p) · GW(p)

Frankly, if I ask myself, what does the average human want to be, I'd say a benevolent dictator.

Seriously? For my part, I doubt that a typical human wants to do that much work. I suspect that "favored and privileged subject of a benevolent dictator" would be much more popular. Even more popular would be "favored and privileged beneficiary of a benevolent system without a superior peer."

But agreed that none of this implies a reduced interest in having one's desires satisfied.

(ETA: And, I should note, I agree with your main point about nonuniversality of drives.)

Replies from: Mitchell_Porter↑ comment by Mitchell_Porter · 2011-01-26T08:51:46.534Z · LW(p) · GW(p)

Immediately after I posted that, I doubted it. A lot of people might just want autonomy - freedom from dependency on others and freedom from the control of others. Dictator of yourself, but not dictator of humanity as a whole. Though one should not underestimate the extent to which human desire is about other people.

Will Newsome is talking about - or I thought he was talking about - value systems that would be stable in a situation where human beings have superintelligence working on their side. That's a scenario where domination should become easy and without costs, so if people with a desire to rule had that level of power, the only thing to stop them from reshaping everyone else would be their own scruples about doing so; and even if they were troubled in that way, what's to stop them from first reshaping themselves so as to be guiltless rulers of the world?

Also, even if we suppose that that outcome, while stable, is not what anyone would really want, if they first spent half an eternity in self-optimization limbo investigating the structure of their personal utility function... I remain skeptical that "Buddhahood" is the universal true attractor, though it's hard to tell without knowing exactly what connotations Will would like to convey through his use of the term.

Replies from: TheOtherDave↑ comment by TheOtherDave · 2011-01-26T20:23:27.551Z · LW(p) · GW(p)

I am skeptical about universal attractors in general, including but not limited to Buddhahood and domination. (Psychological ones, anyway. I suppose entropy is a universal attractor in some trivial sense.) I'm also inclined to doubt that anything is a stable choice, either in the sense you describe here, or in the sense of not palling after a time of experiencing it.

Of course, if human desires are editable, then anything can be a stable choice: just modify the person's desires such that they never want anything else. By the same token, anything can be a universal attractor: just modify everyone's desires so they choose it. These seem like uninteresting boundary cases.

I agree that some humans would, given the option, choose domination. I suspect that's <1% of the population given a range of options, though rather more if the choice is "dominate or be dominated." (Although I suspect most people would choose to try it out for a while, if that were an option, then would give it up in less than a year.)

I suspect about the same percentage would choose to be dominated as a long-term lifestyle choice, given the expectation that they can quit whenever they want.

I agree that some would choose autonomy, though again I suspect not that many (<5%, say) would choose it for any length of time.

I suspect the majority of humans would choose some form of interdependency, if that were an option.

Replies from: Strange7↑ comment by TheOtherDave · 2011-01-06T17:57:52.325Z · LW(p) · GW(p)

If you ever felt inclined to lay out your reasons for believing that general intelligences without a shared heritage are likely to converge on egalitarianism, altruism, curiosity, game theoretical moral sentiments, or Buddhahood... or, come to that, lay out more precisely what you mean by those terms... I would be interested to read them.

Replies from: Will_Newsome↑ comment by Will_Newsome · 2011-01-06T18:22:25.645Z · LW(p) · GW(p)

Evolution works on species, smart species' members will either evolve together or eventually get smart enough to learn to copy each other even if adversarial, such interactions will probably roughly approximate evolutionary game theory, and iterated games for social animals will probably yield cooperation and possibly altruism. Knowing more about yourself, the process that created you, and the arbitrariness in how you ended up with your preferences, intuitively seems like it would promote egalitarianism both on aesthetic grounds and pragmatic game theoretic grounds. Curiosity is just a necessary prerequisite for intelligence, it's obviously convergent. Something like Buddhahood is just a necessary prerequisite for a reasonable decision theory approximation, and is thus also convergent. That one is horribly imprecise, I know, but Buddhahood is hard enough to explain in itself, let alone as a decision theory approximation, let alone as a normative one.

That's just the scattershot sleep-deprived off-the-top-of-my-head version that's missing all the good intuitions. If I end up converting my mountain of intuitions into respectable arguments in text I will let you know. It's just so much easier to do in-person, where I can get quick feedback about others' ontologies and how they mesh with mine, et cetera.
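The claim that iterated games yield cooperation can be illustrated with the textbook iterated prisoner's dilemma, using the conventional payoffs T=5, R=3, P=1, S=0 from Axelrod's tournaments. This is a minimal sketch with two strategies and hypothetical helper names. Over 10 rounds in a round robin that includes self-play, tit-for-tat outscores always-defect. The result depends on the payoffs, the number of rounds, and the population mix. It illustrates the mechanism and is not evidence about convergence across mindspace.

```python
# Conventional one-shot payoffs (T=5, R=3, P=1, S=0); "C" = cooperate, "D" = defect.
PAYOFF = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}

def tit_for_tat(opponent_history):
    """Cooperate first, then copy the opponent's previous move."""
    return "C" if not opponent_history else opponent_history[-1]

def always_defect(opponent_history):
    return "D"

def play(strategy_a, strategy_b, rounds):
    """Play an iterated game and return the two total scores."""
    history_a, history_b = [], []
    score_a = score_b = 0
    for _ in range(rounds):
        move_a, move_b = strategy_a(history_b), strategy_b(history_a)
        pa, pb = PAYOFF[(move_a, move_b)]
        score_a, score_b = score_a + pa, score_b + pb
        history_a.append(move_a)
        history_b.append(move_b)
    return score_a, score_b

def round_robin(strategies, rounds):
    """Each strategy plays every strategy (including a copy of itself); sum its scores."""
    totals = {name: 0 for name in strategies}
    for name_a, strat_a in strategies.items():
        for strat_b in strategies.values():
            totals[name_a] += play(strat_a, strat_b, rounds)[0]
    return totals

print(round_robin({"tit_for_tat": tit_for_tat, "always_defect": always_defect}, rounds=10))
```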

Replies from: TheOtherDave↑ comment by TheOtherDave · 2011-01-06T19:22:02.867Z · LW(p) · GW(p)

Thanks, on both counts. And, yes, agreed that it's easier to have these sorts of conversations with known quantities.

comment by jacob_cannell · 2012-06-18T03:03:54.333Z · LW(p) · GW(p)

This post is cute, but there are several flaws/omissions that can lead to compound propagating errors in typical interpretations.

Any two AI designs might be less similar to each other than you are to a petunia.

Cute. The general form of this statement:

(Any two X might be less similar to each other than you are to a petunia) is trivially true if our comparison is based solely on genetic similarity.

This leads to the first big problem with this post: The idea that minds are determined by DNA. This idea only makes sense if one is thinking of a mind as a sort of potential space.

Clone Einstein and raise him with wolves and you get a sort of smart wolf mind inhabiting a human body. Minds are memetic. Petunias don't have minds. I am my mind.

The second issue (more of a missing idea really) is that of functional/algorithmic equivalence. If you take a human brain, scan it, and sufficiently simulate out the key circuits, you get a functional equivalent of the original mind encoded in that brain. The substrate doesn't matter, and nor even do the exact algorithms, as any circuit can be replaced with any algorithm that preserves the input/output relationships.
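A small illustration of input/output equivalence between two unrelated implementations, with hypothetical names. One is a 4-bit adder built from simulated logic gates. The other uses built-in integer arithmetic. Because the input space is tiny, the equivalence can be checked exhaustively. For something the size of a brain, no such exhaustive check is possible, which is part of what the replies below dispute.

```python
def full_adder(a, b, carry_in):
    """One-bit full adder built only from AND, OR and XOR on bits."""
    s = a ^ b ^ carry_in
    carry_out = (a & b) | (carry_in & (a ^ b))
    return s, carry_out

def ripple_carry_add(x, y, width=4):
    """Add two width-bit integers by chaining full adders, like a gate-level circuit.
    The final carry out is dropped, so the result wraps modulo 2**width."""
    result, carry = 0, 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result

def builtin_add(x, y, width=4):
    """A completely different implementation with the same input/output behaviour."""
    return (x + y) % (1 << width)

def functionally_equivalent(f, g, width=4):
    """Exhaustively compare two implementations on every pair of width-bit inputs."""
    n = 1 << width
    return all(f(x, y, width) == g(x, y, width) for x in range(n) for y in range(n))

print(functionally_equivalent(ripple_carry_add, builtin_add))  # True
```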

Functional equivalence is another way of arriving at the "minds are memetic" conclusion.

As a result of this, the region of mindspace which we can likely first access with AGI designs is some small envelope around current human mindspace.

The map of mindspace here may be more or less correct, but what's anything but clear is how distinct near-term de novo AGI actually is from, say, human uploads, given: functional equivalence, the Bayesian brain, no free lunch in optimization, and the mind being memetic.

For example, if the most viable route to AGI turns out to be brain-like designs, then it is silly not to anthropomorphize AGI.

Replies from: azergante↑ comment by azergante · 2025-04-21T10:29:23.735Z · LW(p) · GW(p)

This leads to the first big problem with this post: The idea that minds are determined by DNA. This idea only makes sense if one is thinking of a mind as a sort of potential space.

Clone Einstein and raise him with wolves and you get a sort of smart wolf mind inhabiting a human body. Minds are memetic. Petunias don't have minds. I am my mind.

Reversing your analogy, if you clone a wolf and raise it with Einsteins, you do not get another Einstein. That is because hardware (DNA) matters and wolves do not have the required brain hardware to instantiate Einstein's mind.

Minds are determined to a large extent (though not fully, as you rightly point out) by the specific hardware or hardware architecture that instantiates them. Eliezer stresses this point in his post "Detached Lever Fallacy", which comes earlier in the sequence: learning a human language and culture requires specialized hardware that has been evolved (optimized) specifically for the task, the same goes for deciding to grow fur when it's cold (you need sensors) or for parsing what is seen (eyes + visual cortex). The right environment is not enough, you need a mind that already works to even be able to learn from the environment.

Minds are memetic

A quick search tells me this is not true for reptiles, amphibians, fish and insects. Primates (including humans) and birds have specific hardware dedicated to this task: mirror neurons.

The substrate doesn't matter

In practice it does, as soon as you try to make such a mind, because you do not have the same runtime complexity or energy consumption, etc., depending on the substrate. For example, you can emulate a quantum computer on a classical computer, but you get an exponential slow-down.
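A minimal sketch shows where the cost comes from in the most direct approach, naive state-vector simulation; the function name is hypothetical. An n-qubit state is stored as 2^n complex amplitudes, so memory doubles with every added qubit, as does the work per gate. There is a caveat. Some restricted circuit families, such as Clifford circuits under the Gottesman-Knill theorem, can be simulated efficiently. The exponential cost therefore applies to general circuits with known methods.

```python
import math

def uniform_superposition(n_qubits):
    """Naive state-vector simulation: start in |00...0> and apply a Hadamard gate
    to every qubit. The state is a list of 2**n_qubits complex amplitudes."""
    dim = 1 << n_qubits
    state = [0j] * dim
    state[0] = 1 + 0j
    h = 1 / math.sqrt(2)
    for q in range(n_qubits):
        step = 1 << q
        for i in range(dim):
            if (i & step) == 0:  # pair basis index i (bit q = 0) with i | step (bit q = 1)
                a, b = state[i], state[i | step]
                state[i] = h * (a + b)
                state[i | step] = h * (a - b)
    return state

for n in (1, 4, 8):
    print(n, "qubits ->", len(uniform_superposition(n)), "amplitudes")
```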