Comments

It sometimes takes me a long time to go from "A is true", "B is true", "A and B implies C is true" to "C is true".

I think this is a common issue with humans. For example, I can see a word such as "aqueduct", and also know that "aqua" means water in Latin, yet fail to notice that "aqueduct" comes from "aqua". This is because seeing a word does not trigger a process that searches for its root.

Another case is when the rule looks a bit different, say "a and b implies c" rather than "A and B implies C" and some effort is needed to notice that it still applies.

I think an even more common reason is that the facts are never brought in working memory at the same time, and so inference never happens.

All this hints at a practical epistemological-fu: we can increase our knowledge simply by actively reviewing our facts, say every morning, and trying to infer new facts from them! This might even create a virtuous circle, as the more facts one infers, the more facts one can combine to generate more inferences.

On the other hand there is a limit to the number of facts one can review in a given amount of time, so perhaps a healthy epistemological habit to have is to trigger one's inference engine every time one learns a new (significant?) fact.
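To make the "trigger the inference engine on every new fact" habit concrete, here is a minimal forward-chaining sketch. The rule "A and B implies C" comes from the comment above; the second rule, the fact names D and E, and the function name `learn` are made up for illustration.

```python
# Each rule is (set of premises, conclusion).
# "A and B implies C" is from the text; the second rule is hypothetical.
RULES = [
    ({"A", "B"}, "C"),
    ({"C", "D"}, "E"),
]

def learn(facts: set, new_fact: str) -> set:
    """Add a new fact, then keep applying rules until nothing new is derived."""
    facts = set(facts) | {new_fact}
    changed = True
    while changed:
        changed = False
        for premises, conclusion in RULES:
            # A rule fires only when all its premises are known at the same time.
            if premises <= facts and conclusion not in facts:
                facts.add(conclusion)
                changed = True
    return facts

print(learn({"A"}, "B"))
```

The point of the sketch is that inference only happens inside `learn`: if facts are stored without ever calling it, "C" is never derived even though everything needed is already known.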

The core argument that there is "no universally compelling argument" holds if we literally consider all of mind design space, but for the task of building and aligning AGIs we may be able to constrain the space such that it is unclear that the argument holds.

For example in order to accomplish general tasks AGIs can be expected to have a coherent, accurate and compressed model of the world (as do transformers to some extent) such that they can roughly restate their input. This implies that in a world where there is a lot of evidence that the sky is blue (input / argument), AGIs will tend to believe that the sky is blue (output / fact).

So even if "[there is] no universally compelling argument" holds in general, for the subset of minds we care about it does not hold.

To be clear I do not think this constraint will auto-magically align AGIs with human values. But they will know what values humans tend to have.

More broadly, values do not seem to be in the category of things that can be argued for, because all arguments are judged in light of the values we hold. The claim that values X are better than values Y is therefore false when judged by Y (if we visualize values Y as a vector, it is more aligned with itself than it is with X).

Values can be changed (soft power, reprogramming etc) or shown to be already aligned in some dimension such that (temporary) cooperation makes sense.

This leads to the first big problem with this post: The idea that minds are determined by DNA. This idea only makes sense if one is thinking of a mind as a sort of potential space.

Clone Einstein and raise him with wolves and you get a sort of smart wolf mind inhabiting a human body. Minds are memetic. Petunias don't have minds. I am my mind.

Reversing your analogy, if you clone a wolf and raise it with Einsteins, you do not get another Einstein. That is because hardware (DNA) matters and wolves do not have the required brain hardware to instantiate Einstein's mind.

Minds are determined to a large extent (though not fully, as you rightly point out) by the specific hardware or hardware architecture that instantiates them. Eliezer stresses this point in his post Detached Lever Fallacy, which comes earlier in the sequence: learning a human language and culture requires specialized hardware that has been evolved (optimized) specifically for the task. The same goes for deciding to grow fur when it's cold (you need sensors) or for parsing what is seen (eyes + visual cortex). The right environment is not enough: you need a mind that already works to even be able to learn from the environment.

Minds are memetic

A quick search tells me this is not true for reptiles, amphibians, fish and insects. Primates (including humans) and birds have specific hardware dedicated to this task: mirror neurons.

The substrate doesn't matter

In practice it does as soon as you try to build such a mind, because runtime complexity, energy consumption and so on depend on the substrate. For example, you can emulate a quantum computer on a classical computer, but with known methods you generally pay an exponential slow-down.
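A rough way to see where the exponential cost comes from, assuming the straightforward approach of storing the full state vector (other simulation methods exist, and the 16 bytes per amplitude is my assumption of one complex128 value):

```python
# Memory needed to hold the full state vector of an n-qubit register
# on a classical machine: 2**n complex amplitudes.
BYTES_PER_AMPLITUDE = 16  # assumption: one complex128 per amplitude

def state_vector_bytes(n_qubits: int) -> int:
    """Bytes needed to store all 2**n amplitudes of an n-qubit state."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE

for n in (10, 30, 50):
    print(f"{n} qubits -> {state_vector_bytes(n):,} bytes")
```

Each extra qubit doubles the memory, so the substrate quickly decides what is feasible.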

Thanks for the suggestion, I added the "Edit 1" section to the post to showcase a small study on 3 posts known to contain factual mistakes. The LLM is able to spot and correct the mistake in 2 of the 3 cases, and provides valuable (though verbose) context. Overall this seems promising to me.

This post assumes the word "happiness" is crisply defined and means the same thing for everyone but that's not the case. Or perhaps it is implicitly arguing what the meaning of "happiness" should be?

Anyway this post would be much clearer if the word "happiness" was tabooed.

I have always been slightly confused by people arguing against wire-heading. Isn't wire-heading the thing that is supposed to max out our utility function? If that's not the case, then what's the point of talking about it? Why not just find what does maximize our utility function and do that instead? Or if we want to say that stimulating the pleasure centers of the brain could only ever account for X% of our utility function, then we can just say it; no need for endless arguing. And no, we don't need to agree that we all have the exact same utility function, because we don't.

Back to "happiness", I conceive that word as "the feeling I have when I satisfy some condition of my utility function and the score goes up". So obviously the more the better.

If I happen to value actual scientific discoveries then the pill that only gives me the thrill of making a discovery won't make me as happy as actually discovering something, because the discovery and the social status and so on won't be there once the thrill is gone.

We could argue that actually the feeling the pill gives me is indistinguishable from the real feeling, in which case sure enough, I get the same amount of happiness from the pill as from a real discovery, but now the pill has to simulate everything that's in my light cone using the same mechanisms as the universe would to create an experience indistinguishable from the real deal, so maybe it's fine?

"Yeah? Let's see your aura of destiny, buddy."

Another angle: if I have to hire a software engineer, I'll pick the one with the aura of destiny any time, because that one is more likely to achieve great things than the others.

I would say auras of destiny are Bayesian evidence for greatness, and they are hard to fake signals.

Slightly off-topic but Wow! this is material for an awesome RTS video-game! That would be so cool!

And a bit more on topic: that kind of video game would give the broader public a good idea of what's coming, and researchers and leaders a way to vividly explore various scenarios, all while having fun.

Imagine playing the role of an unaligned AGI, and noticing that the game dynamics push you to deceive humans to gain more compute and capabilities until you can take over or something, all because that's the fastest way to maximize your utility function!

If you now put a detector in path A, it will find a photon with probability 1/2, and same for path B. This means that there is a 50% chance of the configuration |photon in path A only>, and 50% chance of the configuration |photon in path B only>. The arrow direction still has no effect on the probability.

This 50/50 split is extra surprising and perhaps misleading. What's the cause? Why not 100% on path A and 0% on path B (or the reverse)?

As a layman it seems like either:

- The world is not deterministic, so when we repeat the experiment sometimes the detector goes off on path A and sometimes it goes off on path B. That's very surprising.

- We are in a deterministic world, which means that if we repeat the same experiment we get the same result each time. But actually we don't get the same result, which means we failed to repeat the experiment, or rather the initial conditions were significantly different. The probabilities tell us something about the experimental setup, not about quantum mechanics. As an analogy, if I roll a fair plastic die N times and observe that it lands on 6 half the time, this tells me a lot about my die-rolling skills, but not so much about plastic. In a similar way, when we send N photons through an interferometer setup, the setup actually varies with time: maybe the photon does not land quite on the same spot on the mirror each time, or some atoms have decayed, whatever. So the 50/50 probabilities we observe actually tell us about the setup and our inability to repeat the same experiment to the degree that matters, not about quantum mechanics. This is misleading, because if the probabilities have nothing to do with QM, why mention them at all?

Insularity will make you dumber

Okay but there is another side to the issue, insularity can also have positive effects:

If you look at evolution, when a population gets stuck on an island, it starts to develop in interesting ways, maybe insularity is a necessary step to develop truly creative worldviews?

Also IIRC in "The Timeless Way of Building" Christopher Alexander mentions that cities should be designed as several small neighborhoods with well-defined boundaries, where people with similar backgrounds live. He also says something to the effect that the boundaries and the common background are essential to create a strong and interesting culture. So to put a positive spin on it, to some extent insularity is what allowed the rationalist community to develop its peculiar charm.

Now I also find the jargon annoying at times, and had to ask an LLM about this "epistemic status" business, but another way to see it is that this gate-keeping is a selection process that keeps people that are not a good fit out of the community, helping it maintain its coherence. But maybe we should still tone it down in favor of clarity.

I liked the intro but some parts of the previous posts and this one have been confusing, for example in this post:

Second, we saw that configurations are about multiple particles. [...] And in the real universe, every configuration is about all the particles… everywhere.)

and more glaring in the previous one:

A configuration says, “a photon here, a photon there,”

Here my intuition is that we can model the world as particles, or we can use the lower-level model of the world which configurations are, but we can't mix both any way we want. These sentences feel backwards: they talk about configurations in terms of particles, and this doesn't make any sense because the model of particles should be derived from the lower-level model of configurations, not the other way around!!

Maybe someone with more knowledge in physics and philosophy of science can clarify?

Planecrash (from Eliezer and Lintamande) seems highly relevant here: the hero, Keltham, tries to determine whether he is in a conspiracy or not. To do that he basically applies Bayes' theorem to each new fact he encounters: "Is fact F more likely to happen if I am in a conspiracy or if I am not? Hmm, fact F seems more likely to happen if I am not in a conspiracy, let's update my prior a bit towards the 'not in a conspiracy' side".

Planecrash is a great walkthrough on how to apply that kind of thinking to evaluate whether someone is bullshitting you or not, by keeping two alternative worlds that explain what they are saying, and updating the likelihoods as the discussion goes on.
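The "two alternative worlds" bookkeeping can be sketched in a few lines. This is only an illustration of Bayes' theorem, not something taken from Planecrash: the function name `update`, the starting prior and all the likelihoods below are hypothetical numbers.

```python
def update(prior: float, p_fact_if_conspiracy: float, p_fact_if_not: float) -> float:
    """Return P(conspiracy | fact) from P(conspiracy) and the two likelihoods."""
    numerator = p_fact_if_conspiracy * prior
    denominator = numerator + p_fact_if_not * (1.0 - prior)
    return numerator / denominator

belief = 0.5  # hypothetical starting prior for "I am in a conspiracy"

# Hypothetical likelihoods for three observed facts:
# (P(fact | conspiracy), P(fact | no conspiracy))
facts = [(0.2, 0.6), (0.5, 0.5), (0.3, 0.9)]

for p_c, p_n in facts:
    belief = update(belief, p_c, p_n)
    print(round(belief, 3))
```

Note that the second fact is equally likely in both worlds, so it leaves the belief unchanged: only facts that discriminate between the two worlds move the estimate.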

Surely if you start putting probability on events such as "someone stole my phone", and "that person then tailed me", and multiply the probability of each new fact, it gets really unlikely really fast. Also relevant: Burdensome details
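A quick arithmetic sketch of how fast the conjunction shrinks. All numbers are made up, the third detail is hypothetical, and each value should be read as the probability of that detail given the previous ones:

```python
import math

# Hypothetical probabilities, each conditional on the details before it.
details = {
    "someone stole my phone": 0.05,
    "that person then tailed me": 0.02,
    "they also read my messages": 0.10,  # hypothetical extra detail
}

def joint_probability(probabilities) -> float:
    """Probability that every detail of the story is true."""
    return math.prod(probabilities)

joint = joint_probability(details.values())
print(joint)
```

Every added detail can only keep the joint probability the same or lower it, which is the Burdensome Details point.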

You did not explicitly state the goal of the advice. I think it would be interesting to distinguish between advice that is meant to increase your value to the company and advice meant to increase your satisfaction with your work, especially when the two point in opposite directions.

For example it could be that "swallow[ing] your pride and us[ing] that garbage language you hate so much" is good for the company in some cases, but terrible for job satisfaction, making you depressed or angry every time you have to use that silly language/tool.

For that reason try to structure teams such that every team has everything it needs for its day to day work.

I would extend that to "have as much control as you can over what you do". I increasingly find that this is key to move fast and produce quality software.

This applies to code and means dependencies should be owned and open to modifications, so the team understands them well and can fix bugs or add features as needed.

This avoids ridiculous situations where bugs are never fixed or shipping very simple features (such as changing a theme for a UI component) is impossible or takes weeks because a framework actively prevents it.

More control and understanding also tends to be better for satisfaction. Of course all this is on a spectrum and should be balanced with other requirements.

Tip: you can ask ChatGPT to include confidence scores in its replies

Interactions with ChatGPT can be customized durably in the options, for example you can add the following instructions: "include a confidence rating at the end of your response in the format 'Confidence: X%'. If your confidence is below 80%, briefly explain why".

Here is a sample conversation demonstrating this and showing what ChatGPT has to say about its calibration:

Me: Are you calibrated, by which I mean, when you output a confidence X as a percentage, are you right X times out of 100?

ChatGPT: Reasoned about confidence calibration for 27 seconds. I don’t have calibration in that strict sense. When I mention a confidence percentage, it’s a heuristic internal signal rather than a guarantee that I’ll be right exactly that proportion of the time. Research shows that language models often exhibit miscalibration, meaning a stated confidence (say 70%) doesn’t empirically translate to being correct 70% of the time. I'm about 90% confident in this explanation.

Me: How bad is the miscalibration?

ChatGPT: Thought for a second. LLMs—especially those fine‐tuned with RLHF—are often notably overconfident. For example, a model might claim 90% confidence while its actual accuracy is closer to 70–80%. Studies report expected calibration errors (ECE) of roughly 5–10% or more, and effective calibration methods can sometimes cut these errors by about half, underscoring that miscalibration is a significant issue. I'm about 90% confident in this summary, based on multiple recent calibration studies.

Me: If you are predictably overconfident, and you know it, do you think you can notice that before stating your confidence and correct for it?

ChatGPT: Reasoned about overconfidence and calibration adjustment for 4 seconds. I don’t have a real-time “internal monitor” to detect my own overconfidence before stating it. Instead, I rely on calibration techniques—like temperature scaling and post-hoc adjustments—that help align my confidence levels with actual accuracy. In other words, I can be tuned to be less overconfident, but I don’t consciously correct my confidence on the fly. I'm about 90% confident in this explanation based on current research findings.
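For anyone who wants to check this on their own chat logs, here is a minimal sketch of the expected calibration error (ECE) mentioned above, using equal-width confidence bins. The function name, the choice of 10 bins and the sample data are my assumptions, not something ChatGPT provided.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted average over bins of |accuracy - mean stated confidence|."""
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # Equal-width bins over [0, 1]; a confidence of 1.0 goes in the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))

    total = len(confidences)
    ece = 0.0
    for items in bins:
        if not items:
            continue
        avg_conf = sum(c for c, _ in items) / len(items)
        accuracy = sum(1 for _, ok in items if ok) / len(items)
        ece += (len(items) / total) * abs(accuracy - avg_conf)
    return ece

# Hypothetical log: the model said "90%" ten times and was right seven times.
confidences = [0.9] * 10
correct = [True] * 7 + [False] * 3
print(expected_calibration_error(confidences, correct))
```

To use it, record the stated confidence of each answer and whether the answer turned out to be right, then feed both lists to the function.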

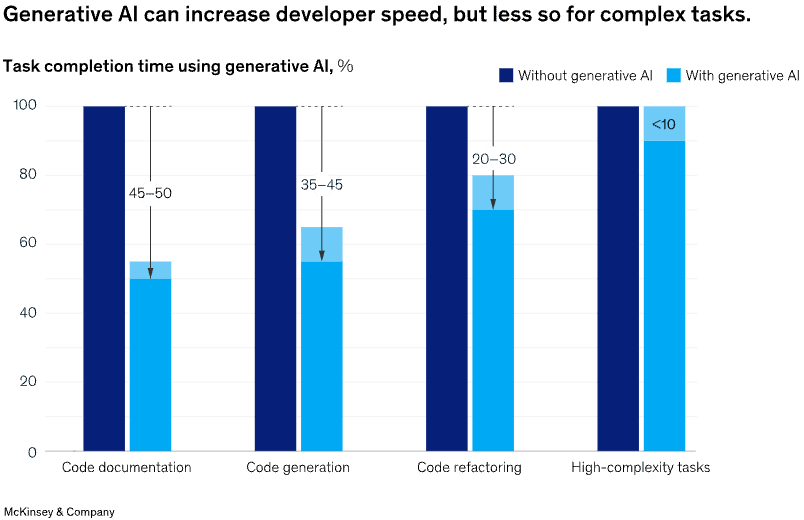

Many developers have been reporting that this is dramatically increasing their productivity, up to 5x'ing/10x'ing it

I challenge the data: none of my colleagues have been reporting this high a speed-up. I think your observation can just be explained by a high sampling bias.

People who do not use AI or got no improvement are unlikely to report. You also mention Twitter where users share "hot takes" etc to increase engagement.

It's good to have actual numbers before we explain them, so I ran a quick search and found 3 articles that look promising (I only did a basic check on the methodology, don't take these numbers at face value without analyzing the source in depth):

- An Axify analysis of the DORA metrics they collect: https://axify.io/blog/use-ai-for-developer-productivity

Documentation quality (+7.5%)

Code review speed (+3.1%)

Delivery throughput (-1.5%): AI adoption slightly decreases delivery throughput, usually due to over-reliance, learning curve, and increased complexity.

Delivery stability (-7.2%): It is significantly impacted because AI tools can generate incorrect or incomplete code, increasing the risk of production errors.

What are the DORA metrics?

| Metric | Definition |
| --- | --- |
| Deployment frequency | How often a team puts an item into production. |
| Lead time for changes | Time required for a commit to go into production. |
| Change failure rate | Percentage of deployments resulting in production failure. |
| Failed deployment recovery time | Time required for a team to recover from a production failure. |

- A McKinsey pilot study on 40 of their developers: https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/unleashing-developer-productivity-with-generative-ai

- An UpLevel analysis of the data of 800 developers: https://uplevelteam.com/blog/ai-for-developer-productivity

Analyzing actual engineering data from a sample of nearly 800 developers and objective metrics, such as cycle time, PR throughput, bug rate, and extended working hours (“Always On” time), we found that Copilot access provided no significant change in efficiency metrics.

The group using Copilot introduced 41% more bugs

Copilot access didn’t mitigate the risk of burnout

“The adoption rate is significantly below 100% in all three experiments,” the researchers wrote. “With around 30-40% of the engineers not even trying the product.

In my experience LLMs are a replacement for search engines (these days, search engines are only good to find info when you already know on which website to look ...). They don't do well in moderately sized code bases, nor in code bases with lots of esoteric business logic, which is to say they don't do well in most enterprise software.

I mostly use them as:

- a documentation that talks: it reminds me of syntax, finds functions in APIs and generates code snippets I can play with to understand APIs

- a fuzzy version of an encyclopedia: it helps me get an overview of a subject and points me to resources where I can get crisper knowledge

- support/better search engine for weird build issues, or weird bugs etc

I think it's also good at one shot scripts, such as data wrangling and data viz, but it does not come up often in my current position.

I would rate the productivity increase at about 10%. I think the use of modal editors (Vim or modern alternatives) improves coding speed more than inline AI completion, which is often distracting.

A lot of my time is spent understanding what I need to code in a back and forth with the product manager or clients. Then I can either code it myself (it's not so hard once I understand the requirements) or spend time explaining to the AI what it needs to do, watch it write sloppy code, and rewrite the thing.

Once in a while the coding part is actually the hard part, for example when I need to make the code fast or make a feature play well with the existing architecture, but then the AI can't do logic well enough to optimize code, nor can it reason about the entire code base which it can't see anyway.

I also think it is unlikely that AGIs will compete in human status games. Status games are not just about being the best: Deep Blue is not high status, sportsmen that take drugs to improve their performance are not high status.

Status games have rules, and you only win if you do something impressive while competing within the rules. Being an AGI is likely to be seen as an unfair advantage, and thus AIs will be banned from human status games, in the same way that current sports competitions are split by gender and weight.

Even if they are not banned given their abilities it will be expected that they do much better than humans, it will just be a normal thing, not a high status, impressive thing.

Let me show you the ropes

There is a rope.

You hold one end.

I hold the other.

The rope is tight.

I pull on it.

How long until your end of the rope moves?

What matters is not how long until your end of the rope moves.

It's having fun sciencing it!

For those interested in writing better trip reports there is a "Guide to Writing Rigorous Reports of Exotic States of Consciousness" at https://qri.org/blog/rigorous-reports

A trip report is an especially hard case of something one can write about:

- English does not have a well-developed vocabulary for exotic states of consciousness

- even if we made up new words, they might not make much sense to people that have not experienced what they point at, just like it's hard to describe color to blind people or to project a high-dimensional thing to a lower dimensional space.

I have a similar intuition that if mirror-life is dangerous to Earth-life, then the mirror version of mirror-life (that is, Earth-life) should be about equally as dangerous to mirror-life as mirror-life is to Earth-life. Having only read this post and in the absence of any evidence either way this default intuition seems reasonable.

I find the post alarming and I really wish it had some numbers instead of words like "might" to back up the claims of threat. At the moment my uneducated mental model is that for mirror-life to be a danger it has to:

- find enough food that fits its chirality to survive

- not get killed by other life-forms

- be able to survive Earth temperature, atmosphere etc etc

- enter our body

- bypass our immune system

- be a danger to us

Hmm, 6 ifs seems like a lot, so is it unlikely? In the absence of any odds it is hard to say.

The post would be more convincing and useful if it included a more detailed threat model, or some probabilities, or a simulation, or anything quantified.

A last question: how many mirror molecules does an organism need to be mirror-life? Is one enough? Does it make any difference to its threat level?

2+2=5 is Fine Maths: all you need is Coherence

[ epistemological status: a thought I had while reading about Russell's paradox, rewritten and expanded on by Claude ; my math level: undergraduate-ish ]

Introduction

Mathematics has faced several apparent "crises" throughout history that seemed to threaten its very foundations. However, these crises largely dissolve when we recognize a simple truth: mathematics consists of coherent systems designed for specific purposes, rather than a single universal "true" mathematics. This perspective shift—from seeing mathematics as the discovery of absolute truth to viewing it as the creation of coherent and sometimes useful logical systems—resolves many historical paradoxes and controversies.

The Key Insight

The only fundamental requirement for a mathematical system is internal coherence—it must operate according to consistent rules without contradicting itself. A system need not:

- Apply to every conceivable case

- Match physical reality

- Be the "one true" way to approach a problem

Just as a carpenter might choose different tools for different jobs, mathematicians can work with different systems depending on their needs. This insight resolves numerous historical "crises" in mathematics.

Historical Examples

The Non-Euclidean Revelation

For two millennia, mathematicians struggled to prove Euclid's parallel postulate from his other axioms. The discovery that you could create perfectly consistent geometries where parallel lines behave differently initially seemed to threaten the foundations of geometry itself. How could there be multiple "true" geometries? The resolution? Different geometric systems serve different purposes:

- Euclidean geometry works perfectly for everyday human-scale calculations

- Spherical geometry proves invaluable for navigation on planetary surfaces

- Hyperbolic geometry finds applications in relativity theory

None of these systems is "more true" than the others—they're different tools for different jobs.

Russell's Paradox and Set Theory

Consider the set of all sets that don't contain themselves. Does this set contain itself? If it does, it shouldn't; if it doesn't, it should. This paradox seemed to threaten the foundations of set theory and logic itself.

The solution was elegantly simple: we don't need a set theory that can handle every conceivable set definition. Modern set theories (like ZFC) simply exclude problematic cases while remaining perfectly useful for mathematics. This isn't a weakness—it's a feature. A hammer doesn't need to be able to tighten screws to be an excellent hammer.
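Written out, the paradox and the ZFC-style way around it look roughly like this (a sketch in standard notation; $R$, $A$ and $R_A$ are just names chosen here):

```latex
% Unrestricted comprehension lets us define
R = \{\, x \mid x \notin x \,\}
\quad\Longrightarrow\quad
\bigl( R \in R \iff R \notin R \bigr) \quad \text{(contradiction)}

% ZFC's separation schema only carves subsets out of an existing set A:
R_A = \{\, x \in A \mid x \notin x \,\}
\quad\Longrightarrow\quad
R_A \notin A \quad \text{(no contradiction)}
```

With separation, the same argument no longer produces a contradiction. It only shows that $R_A$ cannot be a member of $A$, so no set contains everything.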

The Calculus Controversy

Early calculus used "infinitesimals"—infinitely small quantities—in ways that seemed logically questionable. Rather than this destroying calculus, mathematics evolved multiple rigorous frameworks:

- Standard analysis using limits

- Non-standard analysis with hyperreal numbers

- Smooth infinitesimal analysis

Each approach has its advantages for different applications, and all are internally coherent.

Implications for Modern Mathematics

This perspective—that mathematics consists of various coherent systems with different domains of applicability—aligns perfectly with modern mathematical practice. Mathematicians routinely work with different systems depending on their needs:

- A number theorist might work with different number systems

- A geometer might switch between different geometric frameworks

- A logician might use different logical systems

None of these choices imply that other options are "wrong"—just that they're less useful for the particular problem at hand.

The Parallel with Physics

This view of mathematics parallels modern physics, where seemingly incompatible theories (quantum mechanics and general relativity) can coexist because each is useful in its domain. We don't need a "theory of everything" to do useful physics, and we don't need a universal mathematics to do useful mathematics.

Conclusion

The recurring "crises" in mathematical foundations largely stem from an overly rigid view of what mathematics should be. By recognizing mathematics as a collection of coherent tools rather than a search for absolute truth, these crises dissolve into mere stepping stones in our understanding of mathematical systems.

Mathematics isn't about discovering the one true system—it's about creating useful systems that help us understand and manipulate abstract patterns. The only real requirement is internal coherence, and the main criterion for choosing between systems is their utility for the task at hand.

This perspective not only resolves historical controversies but also liberates us to create and explore new mathematical systems without worrying about whether they're "really true." The question isn't truth—it's coherence.

I really like the idea of milestones. I think seeing the result of each milestone will help create trust in the group, confidence that the end action will succeed, and a realization of the real impact the group has. Each CA should probably start with small milestones (posting something on social media) and ramp things up until the end goal is reached. Seeing actual impact early will definitely keep people engaged and might make the group more cohesive and ambitious.

Ditch old software tools or programming languages for better, new ones.

My take on the tool VS agent distinction:

- A tool runs a predefined algorithm whose outputs are in a narrow, well-understood and obviously safe space.

- An agent runs an algorithm that allows it to compose and execute its own algorithm (choose actions) to maximize its utility function (get closer to its goal). If the agent can compose enough actions from a large enough set, the output of the new algorithm is wildly unpredictable and potentially catastrophic.

This hints that we can build safe agents by carefully curating the set of actions they choose from, so that any algorithm composed from the set produces an output that is in a safe space.
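A toy sketch of what "any composition stays in the safe space" could mean. Everything here is hypothetical: the safe space is just the integers from 0 to 10, and the three actions are invented so that each one maps a safe state to a safe state.

```python
from itertools import product

LOW, HIGH = 0, 10  # hypothetical safe space: integers in [LOW, HIGH]

def clamp(x: int) -> int:
    """Force a value back into the safe range."""
    return max(LOW, min(HIGH, x))

# Curated action set: every action maps a safe state to a safe state.
ACTIONS = {
    "inc": lambda x: clamp(x + 1),
    "dec": lambda x: clamp(x - 1),
    "reset": lambda x: LOW,
}

def run(plan, state: int) -> int:
    """Execute a sequence of action names (the agent's composed algorithm)."""
    for name in plan:
        state = ACTIONS[name](state)
    return state

def all_plans_safe(max_len: int, start: int) -> bool:
    """Brute-force check of every plan up to max_len actions."""
    for n in range(max_len + 1):
        for plan in product(ACTIONS, repeat=n):
            if not LOW <= run(plan, start) <= HIGH:
                return False
    return True

print(all_plans_safe(4, start=5))
```

Because each action preserves the safe range on its own, safety of every composition follows by induction, and the brute-force check is only a sanity test. The hard part in practice is that real action sets rarely have such an obviously closed safe space.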

I think being as honest as reasonably sensible is good for oneself. Being honest applies pressure on oneself and one’s environment until the two closely match. I expect the process to have its ups and downs but to lead to a smoother life in the long run.

An example that comes to mind is the necessity to open up to have meaningful relationships (versus the alternative of concealing one’s interests which tends to make conversations boring).

Also honesty seems like a requirement to have an accurate map of reality: having snappy and accurate feedback is essential to good learning, but if one lies and distorts reality to accomplish one’s goals, reality will send back distorted feedback causing incorrect updates of one’s beliefs.

On another note: this post immediately reminded me of the Buddhist concept of Right Speech, which might be worth investigating for further advice on how to practice this. A few quotes:

"Right speech, explained in negative terms, means avoiding four types of harmful speech: lies (words spoken with the intent of misrepresenting the truth); divisive speech (spoken with the intent of creating rifts between people); harsh speech (spoken with the intent of hurting another person's feelings); and idle chatter (spoken with no purposeful intent at all)."

"In positive terms, right speech means speaking in ways that are trustworthy, harmonious, comforting, and worth taking to heart. When you make a practice of these positive forms of right speech, your words become a gift to others. In response, other people will start listening more to what you say, and will be more likely to respond in kind. This gives you a sense of the power of your actions: the way you act in the present moment does shape the world of your experience."

Thanissaro Bhikkhu (source: https://www.accesstoinsight.org/lib/authors/thanissaro/speech.html)

I also thought about something along those lines: explaining the domestication of wolves to dogs, or maybe prehistoric wheat to modern wheat, then extrapolating to chimps. Then I had a dangerous thought, what would happen if we tried to select chimps for humaneness?

goals appear only when you make rough generalizations from its behavior in limited cases.

I am surprised no one brought up the usual map / territory distinction. In this case the territory is the set of observed behaviors. Humans look at the territory and with their limited processing power they produce a compressed and lossy map, here called the goal.

The goal is a useful model to talk simply about the set of behaviors, but has no existence outside the head of people discussing it.

This is a great use case for AI: expert knowledge tailored precisely to one’s needs

Is the "cure cancer goal ends up as a nuke humanity action" hypothesis valid and backed by evidence?

My understanding is that the meaning of the "cure cancer" sentence can be represented as a point in a high-dimensional meaning space, which I expect to be pretty far from the "nuke humanity" point.

For example "cure cancer" would be highly associated with saving lots of lives and positive sentiments, while "nuke humanity" would have the exact opposite associations, positioning it far away from "cure cancer".

A good design might specify that if the two goals are sufficiently far away they are not interchangeable. This could be modeled in the AI as an exponential decrease of the reward based on the distance between the meaning of the goal and the meaning of the action.

Does this make any sense? (I have a feeling I might be mixing concepts coming from different types of AI)
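To make the exponential-decay idea above concrete, here is a minimal sketch. The 2-D "meaning" vectors, the Euclidean distance and the `decay` parameter are all assumptions for illustration; real embeddings are high-dimensional and this says nothing about whether the approach would actually work.

```python
import math

def distance(a, b) -> float:
    """Euclidean distance between two meaning vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def discounted_reward(base_reward, goal_vec, action_vec, decay=1.0) -> float:
    """Reward shrinks exponentially with the goal/action distance."""
    return base_reward * math.exp(-decay * distance(goal_vec, action_vec))

# Hypothetical 2-D stand-ins for points in meaning space.
cure_cancer = (1.0, 1.0)
fund_oncology_research = (0.9, 1.0)
nuke_humanity = (-1.0, -1.0)

print(discounted_reward(1.0, cure_cancer, fund_oncology_research))
print(discounted_reward(1.0, cure_cancer, nuke_humanity))
```

An action whose meaning sits at the goal keeps the full reward, and the reward falls off smoothly as the action drifts away from it.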

If you know your belief isn't correlated to reality, how can you still believe it?

Interestingly, physics models (map) are wrong (inaccurate) and people know that but still use them all the time because they are good enough with respect to some goal.

Less accurate models can even be favored over more accurate ones to save on computing power or reduce complexity.

As long as the benefits outweigh the drawbacks, the correlation to reality is irrelevant.

I am not sure how cleanly this maps to beliefs, since one would have to be able to switch from one belief to another. It might be possible, however, by successively activating different parts of the brain that hold different beliefs, much as someone who is very angry can completely switch gears to answer an important phone call.

@Eliezer, some interesting points in the article, I will criticize what frustrated me:

> If you see a beaver chewing a log, then you know what this thing-that-chews-through-logs looks like, and you will be able to recognize it on future occasions whether it is called a “beaver” or not. But if you acquire your beliefs about beavers by someone else telling you facts about “beavers,” you may not be able to recognize a beaver when you see one.

Things do not have intrinsic meaning; rather, meaning is an emergent property of things in relation to each other: for a brain, an image of a beaver and the sound "beaver" are just meaningless patterns of electrical signals.

Through experiencing reality the brain learns to associate patterns based on similarity, co-occurrence and so on, and labels these clusters with handles in order to communicate. "Meaning" is the entire cluster itself, which in turn bears meaning in relation to other clusters.

If you try to single out a node from the cluster, you soon find that it loses all meaning and reverts to meaningless noise.

> G1071(G1072, G1073)

Maybe the above does not seem dumb now? Experiencing reality is basically entering and updating relationships that eventually make sense as a whole in a system.

I feel there is a huge difference in our models of reality:

In my model everything is self-referential, just one big graph where nodes barely exist (they are only aliases for the whole graph itself). There is no ground to knowledge, nothing ultimate. The only thing we have is this self-referential map, from which we infer a non-phenomenological territory.

You seem to think the territory contains beavers. I claim beavers exist only in the map, as a block arbitrarily carved out of our phenomenological experience by our brain. We treat this carving as if it were the only way to cut a concept out of experience rather than one of infinitely many valid ways (e.g. having a concept for the beaver together with the air around it, and none for a beaver without air), and as if one part of experience could be considered without being affected by the whole of experience (i.e. there is no living beaver without air).

This view is heavily influenced by emptiness, by the way.

The examples seem to assume that "and" and "or" as used in natural language work the same way as their logical counterparts. I think this is not the case and that it could bias the experiment's results.

As a trivial example the question "Do you want to go to the beach or to the city?" is not just a yes or no question, as boolean logic would have it.

Not everyone learns about boolean logic, and those who do likely learn it long after learning how to talk, so it’s likely that natural language propositions that look somewhat logical are not interpreted as just logic problems.

I think that this is at play in the example about Russia. Say you are on holiday and are presented with one of these 2 statements:

1. "Going to the beach then to the city"

2. "Going to the city"

The second statement obviously means you are going only to the city, and not to the beach or anywhere else beforehand.

Now back to Russia:

1. "Russia invades Poland, followed by suspension of diplomatic relations between the USA and the USSR"

2. "Suspension of diplomatic relations between the USA and the USSR"

Taken together, the 2nd proposition strongly implies that Russia did not invade Poland: after all, if Russia did invade Poland no one would have written the 2nd proposition, because it would be the same as the 1st one.

It also implies that there is no reason at all for suspending relations. The statements look like they were made by an objective know-it-all, and a reason is given in the 1st statement, so in that context it is reasonable to assume that if there were a reason for the 2nd statement it would also be given. The absence of further information then means there is no reason.

Even when seeing only the 2nd proposition and not the 1st, it seems to me that humans have a need to attribute specific causes to effects (which might be a cognitive bias). Seeing no explanation for the event, it is natural to think "surely, there must be SOME reason, how likely is it that Russia suspends diplomatic relations for no reason?" Confronted with the fact that no reason is given, the reader lowers the probability of the event.

It seems that the proposition is not evaluated as pure boolean logic, but perhaps parsed taking into account the broader social context, historical context and so on, which arguably makes more sense in real life.
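To illustrate the difference between the two readings, here is a small sketch with made-up numbers (all three input probabilities are assumptions chosen only for the example). Under pure logic, statement 2 is just B and can never be less probable than "A and B". Under the pragmatic reading described above, statement 2 is interpreted as "B and not A", which can be less probable than "A and B".

```python
# Hypothetical probabilities, invented purely for illustration.
p_invasion = 0.10                      # P(A): Russia invades Poland
p_suspension_given_invasion = 0.60     # P(B | A)
p_suspension_given_no_invasion = 0.02  # P(B | not A)

# Statement 1: invasion followed by suspension, P(A and B).
p_a_and_b = p_invasion * p_suspension_given_invasion

# Pragmatic reading of statement 2: suspension with no invasion, P(not A and B).
p_not_a_and_b = (1 - p_invasion) * p_suspension_given_no_invasion

# Logical reading of statement 2: suspension for any reason, P(B).
p_b = p_a_and_b + p_not_a_and_b

print(f"P(A and B)     = {p_a_and_b:.3f}")
print(f"P(not A and B) = {p_not_a_and_b:.3f}")
print(f"P(B)           = {p_b:.3f}")

# The logical reading always satisfies P(B) >= P(A and B).
print("logical reading ranks statement 2 at least as high:", p_b >= p_a_and_b)
# With these numbers the pragmatic reading ranks statement 1 higher.
print("pragmatic reading ranks statement 1 higher:", p_a_and_b > p_not_a_and_b)
```

This only shows that ranking statement 1 above statement 2 can be coherent under the pragmatic reading. With other numbers, "B and not A" could just as well come out more probable than "A and B".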