How Much Are LLMs Actually Boosting Real-World Programmer Productivity?

post by Thane Ruthenis · 2025-03-04T16:23:39.296Z · 5 comments · This is a question post.

Contents

Answers (karma in parentheses): Richard Horvath (43), Davidmanheim (31), faul_sname (28), yo-cuddles (14), azergante (12), Noosphere89 (12), Raemon (11), wachichornia (11), chezdiogenes (11), CBiddulph (10), mruwnik (7), Michael Roe (6), Gunnar_Zarncke (5), Nathan Helm-Burger (4), Qumeric (4), Yair Halberstadt (4), Gunnar_Zarncke (4), Dominik Lukeš (3), BenHouston3D (3), Jodie themathgenius (3), jamii (3), Ben Livengood (3), elliott (1), Cole Wyeth (1). 5 comments.

LLM-based coding-assistance tools have been out for ~2 years now. Many developers have been reporting that this is dramatically increasing their productivity, up to 5x'ing/10x'ing it.

It seems clear that this multiplier isn't field-wide, at least. There's no corresponding increase in output, after all.

This would make sense. If you're doing anything nontrivial (i. e., anything other than adding minor boilerplate features to your codebase), LLM tools are fiddly. Out-of-the-box solutions don't Just Work for that purpose. You need to significantly adjust your workflow to make use of them, if that's even possible. Most programmers wouldn't know how to do that/wouldn't care to bother.

It's therefore reasonable to assume that a 5x/10x greater output, if it exists, is unevenly distributed, mostly affecting power users/people particularly talented at using LLMs.

Empirically, we likewise don't seem to be living in the world where the whole software industry is suddenly 5-10 times more productive. If we were, that would have been the case for 1-2 years by now, and I, at least, have felt approximately zero impact. I don't see 5-10x more useful features in the software I use, or 5-10x more software that's useful to me, or that the software I'm using is suddenly working 5-10x better, etc.

However, I'm also struggling to see the supposed 5-10x'ing anywhere else. If power users are experiencing this much improvement, what projects were enabled by it?

Previously, I'd assumed I didn't know just because I'm living under a rock. So I've tried to get Deep Research to fetch me an overview, and it... also struggled to find anything concrete. Judge for yourself: one, two. The COBOL refactor counts, but that's about it. (Maybe I'm bad at prompting it?)

Even the AGI labs' customer-facing offerings aren't an endless trove of rich features for interfacing with their LLMs in sophisticated ways – even though you'd assume there'd be an unusual concentration of power users there. You have a dialogue box and can upload PDFs to it, that's about it. You can't get the LLM to interface with an ever-growing list of arbitrary software and data types, there isn't an endless list of QoL features that you can turn on/off on demand, etc.[1]

So I'm asking LW now: What's the real-world impact? What projects/advancements exist now that wouldn't have existed without LLMs? And if none of that is publicly attributed to LLMs, what projects have appeared suspiciously fast, such that, on sober analysis, they couldn't have been spun up this quickly in the dark pre-LLM ages? What slice through the programming ecosystem is experiencing 10x growth, if any?

And if we assume that this is going to proliferate, with all programmers attaining the same productivity boost as the early adopters are experiencing now, what would be the real-world impact?

To clarify, what I'm not asking for is:

- Reports full of vague hype about 10x'ing productivity, with no clear attribution regarding what project this 10x'd productivity enabled. (Twitter is full of those, but light on useful stuff actually being shipped.)

- Abstract economic indicators that suggest X% productivity gains. (This could mean anything, including an LLM-based bubble.)

- Abstract indicators to the tune of "this analysis shows Y% more code has been produced in the last quarter". (This can just indicate AI producing code slop/bloat).

- Abstract economic indicators that suggest Z% of developers have been laid off/junior devs can't find work anymore. (Which may be mostly a return to the pre-COVID normal trends.)

- Useless toy examples like "I used ChatGPT to generate the 1000th clone of Snake/of this website!".

- New tools/functions that are LLM wrappers, as opposed to being created via LLM help. (I'm not looking for LLMs-as-a-service, I'm looking for "mundane" outputs that were produced much faster/better due to LLM help.)

I. e.: I want concrete, important real-life consequences.

From the fact that I've observed none of them so far, and in the spirit of Cunningham's Law, here's a tentative conspiracy theory: LLMs mostly do not actually boost programmer productivity on net. Instead:

- N hours that a programmer saves by generating code via an LLM are then re-wasted fixing/untangling that code.

- At a macro-scale, this sometimes leads to "climbing up where you can't get down", where you use an LLM to generate a massive codebase, then it gets confused once a size/complexity threshold is passed, and then you have to start from scratch because the LLM made atrocious/alien architectural decisions. This likewise destroys (almost?) all apparent productivity gains.

- Inasmuch as LLMs actually do lead to people creating new software, it's mostly one-off trinkets/proofs of concept that nobody ends up using and which didn't need to exist. But it still "feels" like your productivity has skyrocketed.

- Inasmuch as LLMs actually do increase the amount of code that goes into useful applications, it mostly ends up spent on creating bloatware/services that don't need to exist. I. e., it actually makes the shipped software worse, because it's written more lazily.

- People who experience LLMs improving their workflows are mostly fooled by the magical effect of asking an LLM to do something in natural language and then immediately getting kinda-working code in response. They fail to track how much they spend integrating and fixing this code, and/or how much the code is actually used.

I don't fully believe this conspiracy theory; it feels like it can't possibly be true. But it suddenly seems very compelling.

I expect LLMs have definitely been useful for writing minor features, and for helping people inexperienced with programming/with a specific library/with a specific codebase get started more easily and learn faster. They've been useful for me in those capacities. But it's probably like a 10-30% overall boost, plus flat cost reductions for starting in new domains and for some rare one-off projects like "do a trivial refactor".

And this is mostly where it'll stay unless AGI labs actually crack long-horizon agency/innovations; i. e., basically until genuine AGI is actually there.

Prove me wrong, I guess.

[1] Just as some concrete examples: Anthropic took ages to add LaTeX support, and why weren't RL-less Deep Research clones offered as a default option by literally everyone 1.5 years ago?

Answers

In my personal experience, LLMs can speed me up 10x only in very specific circumstances, which are (and always have been) a minor part of my job, and I suspect this is true for most developers:

- If I need some small, self-contained script to do something relatively simple, e.g. a bash script for file manipulation or some Excel VBA code. These are easy to describe in a couple of sentences and easy to verify, and I often don't know the particular language well enough to write the whole thing without looking up syntax. More importantly, because they are small and self-contained, there is no real need to think much about maintainability, such as unit testing, breaking the code into modules, or knowing the business and most of the environment context. (Anything containing regex is a subset of this, and there LLMs are a game changer.)

- If I need to work on some stack I am not too familiar with, LLMs can speed up the learning process a lot by providing working (or almost working) examples. I just type what I need and get a rough solution that shows which classes and methods I can use from which library, or even what general logic/approach can work as a solution. In a lot of cases, even if the provided solution does not actually work, just knowing how I can interact with a library is a huge help.

Without this I would have to spend hours or days either doing some online course/reading documentation/experimenting.

The caveat here is that even when the solution works, the provided code is not something I can simply add to an actual project. Usually it has to be broken up, with parts going to different modules, and refactored to be object-oriented and to use consistent abstractions that make sense for the particular project.
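As a hypothetical illustration of the first category (a small, self-contained script built around a regex), here is the kind of throwaway file-renaming helper an LLM can usually produce in one shot. The camera-style filename pattern and the ISO-date target format are assumptions made up for this sketch, not something from the answer.

```python
from __future__ import annotations

import re
from pathlib import Path
from typing import Optional

# Hypothetical input pattern: camera-style names such as "IMG_20240131_0915.jpg".
PATTERN = re.compile(r"^IMG_(\d{4})(\d{2})(\d{2})_(\d{4})\.(\w+)$")


def new_name(old: str) -> Optional[str]:
    """Return an ISO-dated name like '2024-01-31_0915.jpg', or None if `old` doesn't match."""
    m = PATTERN.match(old)
    if m is None:
        return None
    year, month, day, hhmm, ext = m.groups()
    return f"{year}-{month}-{day}_{hhmm}.{ext.lower()}"


def rename_all(folder: Path, dry_run: bool = True) -> list[tuple[str, str]]:
    """Rename every matching file in `folder`; with dry_run=True only report the plan."""
    plan = []
    for path in sorted(folder.iterdir()):
        target = new_name(path.name)
        if path.is_file() and target is not None:
            plan.append((path.name, target))
            if not dry_run:
                path.rename(path.with_name(target))
    return plan


if __name__ == "__main__":
    # Dry run on the current directory: prints what would be renamed, changes nothing.
    for old, new in rename_all(Path("."), dry_run=True):
        print(f"{old} -> {new}")
```

This is exactly the sort of code that is easy to verify by eye and never needs to be integrated into a larger codebase, which is why the speedup is so large here.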

Based on the above, I suspect that most people who report a 10x increase in software development speed either:

- Had very low levels of development knowledge, so 10x is a low bar. They also likely do not recognize, or have not yet experienced, how plugging raw LLM code into a project can have many of the negative downstream consequences you described. (Though to be fair, if they could not write it before, some code is still better than no code at all, so it is a worthy trade-off.)

- Need to write a lot of small, self-contained solutions (I can imagine someone doing a lot of Excel automation, or being the dedicated person who builds shell scripts for devops/operations purposes).

- Need to experiment with a lot of different stacks/libraries (although it is difficult to imagine a job where this is the majority of long-term tasks)

- Were affected by anchoring: they recently used LLMs to solve something quickly and extrapolated from that particular example rather than from long-term experience.

My understanding of the situation, from speaking to people in normal firms who code, and to management, is that this is all about the theory of constraints. As a simplified example, if you previously needed one business analyst, one QA tester, and one programmer for a day each to do a task, and the programmer's efficiency doubles, or quintuples, the impact on output is zero, because the firm isn't set up to go much faster.

Firms need to rebuild their processes around this to take advantage, and that's only starting to happen, and only at some firms.
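The simplified example above can be put in numbers with a minimal sketch, assuming a serial pipeline where each role is one person who can handle one task per day (the role names are from the example; the capacities are assumptions). Steady-state throughput is set by the slowest stage, so speeding up only the programmer leaves it unchanged, even though a single task's lead time does shrink.

```python
from __future__ import annotations


def throughput(capacity_per_day: dict[str, float]) -> float:
    """Steady-state tasks/day of a serial pipeline: limited by its slowest stage."""
    return min(capacity_per_day.values())


def lead_time_days(capacity_per_day: dict[str, float]) -> float:
    """Days for one task to pass through every stage, assuming no queueing."""
    return sum(1.0 / c for c in capacity_per_day.values())


before = {"business analyst": 1.0, "QA tester": 1.0, "programmer": 1.0}
after = {**before, "programmer": 5.0}  # only the programmer becomes 5x faster

print(throughput(before), throughput(after))                     # 1.0 1.0
print(lead_time_days(before), round(lead_time_days(after), 2))  # 3.0 2.2
```

Getting more output requires raising the capacity of the other stages too, which is the process rebuild described here.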

↑ comment by Thane Ruthenis · 2025-03-04T18:01:05.407Z

That makes perfect sense to me.

However, shouldn't we expect immediate impact on startups led by early adopters, which could be designed from the ground up around exploiting LLMs? Similar with AGI labs: you'd expect them to rapidly reform around that.

Yet, I haven't heard of a single example of a startup shipping a five-year/ten-year project in one year, or a new software firm eating the lunch of ossified firms ten times its size.

↑ comment by Mis-Understandings (robert-k) · 2025-03-04T18:54:06.556Z

There is no reorganization that can increase the tempo of other organizations (e.g. the pace of customer feedback), which is already often the key bottleneck in software. This speed dynamic is not new; it is just in sharper focus.

↑ comment by Davidmanheim · 2025-03-04T21:22:28.221Z

Lemonade is doing something like what you describe in insurance. I suspect other examples exist. But most market segments, even in “pure” software, don't revolve around only the software product, so it is slower to become obvious if better products emerge.

↑ comment by Garrett Baker (D0TheMath) · 2025-03-05T23:30:44.982Z

Why wouldn’t you see the firm freeze programmer hiring and start laying people off en masse?

↑ comment by Davidmanheim · 2025-03-06T06:20:00.926Z

I'm not sure we'd see this starkly if people can change roles and shift between job types, but haven't we seen firms engage in large rounds of layoffs and follow up by not hiring as many coders already over the past couple years?

↑ comment by IC Rainbow (ic-rainbow) · 2025-03-09T08:03:29.035Z

Why fire devs who are now 10x productive when you can ship 10x more, or 10x faster? Don't you want to overtake your unaugmented competitors and survive against those who didn't fire theirs?

↑ comment by Garrett Baker (D0TheMath) · 2025-03-09T08:21:46.257Z

… did you read David’s comment?

↑ comment by rangefinder (deepsports) · 2025-03-12T00:59:20.457Z

This is basically a version of Tyler Cowen's argument (summarized here: https://marginalrevolution.com/marginalrevolution/2025/02/why-i-think-ai-take-off-is-relatively-slow.html) for why AGI won't change things as quickly as we think: once intelligence is no longer a bottleneck, the other constraints become much more binding. Once programmers are no longer the bottleneck, we'll be bound by the friction that exists elsewhere within companies.

↑ comment by Davidmanheim · 2025-03-12T19:50:22.696Z

Yes, except that as soon as AI can replace the other sources of friction, we'll have a fairly explosive takeoff. He thinks these sources of friction will stay forever, while I think they are current barriers; but the engine for radical takeoff isn't going to be traditional processes adopting the models in individual roles, it will be new business models developed to take advantage of the technology.

It's much like early TV, which was just videos of people putting on plays; it took time for people to realize the potential, but once they did, they didn't make plays that were better for TV, they did something that actually used the medium well. And what using AI well means, in terms of business implications, is cutting out human delays, inputs, and required oversight. Which is worrying for several reasons!

Inasmuch as LLMs actually do lead to people creating new software, it's mostly one-off trinkets/proofs of concept that nobody ends up using and which didn't need to exist. But it still "feels" like your productivity has skyrocketed.

I've personally found that the task of "build UI mocks for the stakeholders", which was previously ~10% of the time I spent on my job, has gotten probably 5x faster with LLMs. That said, the amount of time I spend doing that part of my job hasn't really gone down, it's just that the UI mocks are now a lot more detailed and interactive and go through more iterations, which IMO leads to considerably better products.

"This code will be thrown away" is not the same thing as "there is no benefit in causing this code to exist".

The other notable area where I've seen benefits is in finding answers to search-engine-proof questions, e.g. saying "I observe this error within a task running on xyz stack, here is how I have Kubernetes configured, what concrete steps can I take to debug such a system?"

But it's probably like a 10-30% overall boost, plus flat cost reductions for starting in new domains and for some rare one-off projects like "do a trivial refactor".

Sounds about right - "10-30% overall productivity boost, higher at the start of projects, lower for messy tangled legacy stuff" aligns with my observations, with the nuance that it's not that I am 10-30% more effective at all of my tasks, but rather that I am many times more effective at a few of my tasks and have no notable gains on most of them.
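The pattern described here (many times faster at a few tasks, no gain on most) is essentially Amdahl's law applied to a workday. A minimal sketch, assuming hypothetical time shares: if a fraction p of working time is sped up by a factor s and the rest is unchanged, the overall speedup is 1 / ((1 - p) + p / s).

```python
def overall_speedup(fraction_accelerated: float, factor: float) -> float:
    """Amdahl's law: speedup of the whole job when only part of it gets faster."""
    p, s = fraction_accelerated, factor
    return 1.0 / ((1.0 - p) + p / s)


# Hypothetical shares of working time, each accelerated 5x.
for share in (0.1, 0.2, 0.3):
    print(f"{share:.0%} of time at 5x -> {overall_speedup(share, 5):.2f}x overall")
```

With these assumed shares the overall gain comes out at roughly 1.09x, 1.19x and 1.32x, i.e. in the 10-30% range, and even an infinite speedup on 30% of the work caps out at 1 / 0.7, about 1.43x.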

And this is mostly where it'll stay unless AGI labs actually crack long-horizon agency/innovations; i. e., basically until genuine AGI is actually there.

FWIW I think the bottleneck here is mostly context management rather than agency.

↑ comment by RussellThor · 2025-03-04T20:01:48.773Z

Yes to much of this. For small tasks, or where I don't have specialist knowledge, I can get a 10x speed increase; on average I would put it at 20%. Smart autocomplete like Cursor is undoubtedly a speedup with no apparent downside. The LLM is still especially weak where I am doing data science or algorithm-type work, where you need to plot the results and look at the graph to know if you are making progress.

This is not going to be a high quality answer, sorry in advance.

I noticed this with someone in my office who is learning robotic process automation: people are very bad at measuring their own productivity, and some kinds of gains and losses are much easier for them to see than others. I know someone who swears emphatically that they are many times as productive but have become almost totally unreliable. He's in denial over it, and a couple of people have now openly told me they try to remove him from workflows because of all the problems he causes.

I think the situation is like this:

If you finish a task very quickly using automated methods, that feels viscerally great and, importantly, is very visible. If your work then incurs time costs later, you might not be able to trace that extra cost to the "automated" tasks you set up earlier, doubly so if those costs are absorbed by other people catching what you missed and correcting your mistakes, or doing the things that used to be done when you were doing it manually.

I imagine it is hard to track a bug and know, for certain, that you had to waste that time because you used an LLM instead of just doing it yourself. You don't know who else had to waste time fixing your problem because LLM code is spaghetti, or at least you don't feel it in your bones the way you feel increases in your output. You don't get to see the counterfactual project where things just went better in intangible ways because you didn't outsource your thinking to GPT. Few people notice, after the fact, how many problems they incurred because of a specific thing they did.

I think LLM usage is almost ubiquitous at this point; if it were conveying big benefits, that would show more clearly. If everyone is saying they are 2x more productive (which is kinda low by some testimonies), then it is probably the case that they are just oblivious to the problems they are causing for themselves, because those problems are less visible.

Many developers have been reporting that this is dramatically increasing their productivity, up to 5x'ing/10x'ing it

I challenge the data: none of my colleagues have been reporting this high a speed-up. I think your observation can simply be explained by strong sampling bias.

People who do not use AI, or who got no improvement from it, are unlikely to report. You also mention Twitter, where users share "hot takes" and the like to increase engagement.

It's good to have actual numbers before we explain them, so I ran a quick search and found 3 articles that look promising (I only did a basic check on the methodology, don't take these numbers at face value without analyzing the source in depth):

- An Axify analysis of the DORA metrics they collect: https://axify.io/blog/use-ai-for-developer-productivity

Documentation quality (+7.5%)

Code review speed (+3.1%)

Delivery throughput (-1.5%): AI adoption slightly decreases delivery throughput, usually due to over-reliance, learning curve, and increased complexity.

Delivery stability (-7.2%): It is significantly impacted because AI tools can generate incorrect or incomplete code, increasing the risk of production errors.

What are the DORA metrics?

Deployment frequency | How often a team puts an item into production.

Lead time for changes | Time required for a commit to go into production.

Change failure rate | Percentage of deployments resulting in production failure.

Failed deployment recovery time | Time required for a team to recover from a production failure.

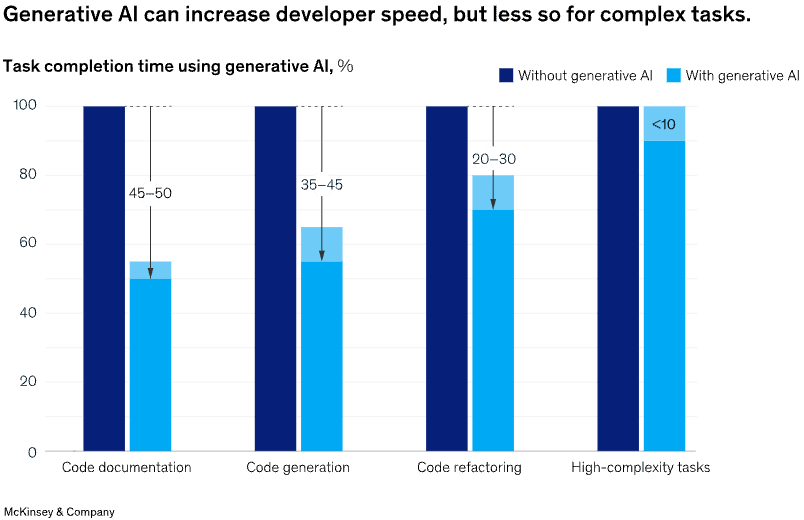

- A McKinsey pilot study on 40 of their developers: https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/unleashing-developer-productivity-with-generative-ai

- An Uplevel analysis of data from nearly 800 developers: https://uplevelteam.com/blog/ai-for-developer-productivity

Analyzing actual engineering data from a sample of nearly 800 developers and objective metrics, such as cycle time, PR throughput, bug rate, and extended working hours (“Always On” time), we found that Copilot access provided no significant change in efficiency metrics.

The group using Copilot introduced 41% more bugs

Copilot access didn’t mitigate the risk of burnout

“The adoption rate is significantly below 100% in all three experiments,” the researchers wrote. “With around 30-40% of the engineers not even trying the product.”
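To make the four DORA metrics listed in the Axify item concrete, here is a minimal sketch of how they might be computed from deployment records. The `Deployment` record shape is hypothetical, using medians is an assumption, and recovery time is approximated as "restored minus deployed" on the assumption that a failure starts at deploy time.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    """One production deployment (hypothetical record shape)."""
    commit_time: datetime                     # when the change was committed
    deploy_time: datetime                     # when it reached production
    failed: bool = False                      # did it cause a production failure?
    restored_time: Optional[datetime] = None  # when service was restored, if it failed


def dora_metrics(deploys: list[Deployment], period_days: float) -> dict:
    """Rough versions of the four DORA metrics; assumes `deploys` is non-empty."""
    lead_times = [d.deploy_time - d.commit_time for d in deploys]
    failures = [d for d in deploys if d.failed]
    # Assumption: the failure starts at deploy time, so recovery = restored - deployed.
    recoveries = [d.restored_time - d.deploy_time
                  for d in failures if d.restored_time is not None]
    return {
        "deployment_frequency_per_day": len(deploys) / period_days,
        "median_lead_time": median(lead_times),
        "change_failure_rate": len(failures) / len(deploys),
        "median_recovery_time": median(recoveries) if recoveries else None,
    }


# Example: three deployments in one week; one failed and was fixed an hour later.
t0 = datetime(2025, 3, 3, 9, 0)
week = [
    Deployment(t0, t0 + timedelta(hours=4)),
    Deployment(t0 + timedelta(days=1), t0 + timedelta(days=1, hours=2),
               failed=True, restored_time=t0 + timedelta(days=1, hours=3)),
    Deployment(t0 + timedelta(days=2), t0 + timedelta(days=2, hours=6)),
]
metrics = dora_metrics(week, period_days=7)
```

Unlike "lines of code produced", these metrics measure what reaches production and how often it breaks, which is why a tool can raise code volume while throughput and stability get slightly worse.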

In my experience, LLMs are a replacement for search engines (these days, search engines are only good for finding information when you already know which website to look on...). They don't do well in moderately sized code bases, nor in code bases with lots of esoteric business logic, which is to say they don't do well in most enterprise software.

I mostly use them as:

- documentation that talks: it reminds me of syntax, finds functions in APIs, and generates code snippets I can play with to understand APIs

- a fuzzy version of an encyclopedia: it helps me get an overview of a subject and points me to resources where I can get crisper knowledge

- support/better search engine for weird build issues, or weird bugs etc

I think it's also good at one-shot scripts, such as data wrangling and data viz, but that does not come up often in my current position.

I would rate the productivity increase at about 10%. I think the use of modal editors (Vim or modern alternatives) improves coding speed more than inline AI completion, which is often distracting.

A lot of my time is spent understanding what I need to code, in a back-and-forth with the product manager or clients. Then I can either code it myself (it's not so hard once I understand the requirements) or spend time explaining to the AI what it needs to do, watch it write sloppy code, and rewrite the thing.

Once in a while the coding part is actually the hard part, for example when I need to make the code fast or make a feature play well with the existing architecture, but then the AI can't do logic well enough to optimize code, nor can it reason about the entire code base, which it can't see anyway.

Re AI coding, Ajeya Cotra's talks have some interesting thoughts on this (short form: there are a lot of weaknesses, but real-world programmer productivity is surprisingly high for coding tasks and very poor outside of coding tasks, which is why AI's impact has been limited so far):

https://x.com/ajeya_cotra/status/1894821432854749456

https://x.com/ajeya_cotra/status/1895161774376436147

Re this:

And this is mostly where it'll stay unless AGI labs actually crack long-horizon agency/innovations; i. e., basically until genuine AGI is actually there.

Prove me wrong, I guess.

My main takeaway is actually kind of different, in that there's less of a core of generality on the default path than people originally thought, and while there is some entanglement on capabilities, there can also be weird spikes and deficits, and this makes the term AGI a lot less useful than people thought.

One thing is I'm definitely able to spin up side projects that I just would not have been able to do before, because I can do them with my "tired brain."

Some of them might turn out to be real projects, although it's still early stage.

My current job is to develop PoCs and iterate on user feedback. It involves a lot of basic work and boilerplate. I am handling three projects at the same time, where before Cursor I would have been managing one and taking longer. I suck at UI, and Cursor simply solved this for me. We have shipped one of the tools and are close to shipping the second one, but they are indeed LLM wrappers, designed to summarize or analyze text for customer support purposes and GTR-related stuff. The UI iteration, however, has been immensely helped and accelerated.

I'll offer a few cents, which is probably what my input is literally worth as a layman, but I'm now able to build full-stack apps, soup to nuts, as someone whose entire coding experience to date was a hello world in a Python tutorial when I was a child.

Cursor/Claude et al. can now one-shot entire single-purpose apps, and errors seem to be on a downward trend. The N hours lost untangling code don't take into account the time saved in construction; keeping that in mind, how much time is actually spent debugging?

What's the saying? 50% of the time spent programming is coding and the other 90% is debugging? I know that's a joke, but now you've largely wiped out that 50%, and the 90% has been cut in half and keeps getting lower. Therein lies my point.

There's no evidence of massive 5-10x advancements right now, but that's because of temporary roadblocks that will be iterated out in time. And we aren't talking decades of development any more. What's going to happen when no-code apps can churn out scads of clean, seamless structured code? No bugs? What then?

↑ comment by exmateriae (Sefirosu) · 2025-03-05T02:18:10.507Z

To add to this, I'm a forecaster on Metaculus, and I can now do dozens if not hundreds of Poisson/Monte Carlo/ETS simulations every hour, when before I often needed the hour to do two or three, because the small tweaks that used to take me quite some time are now delegated to AI. I learned Python a year ago and am clearly still a newbie, but it has changed my capabilities significantly.
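For readers unfamiliar with this kind of forecasting work, here is a minimal sketch of one such simulation, assuming a hypothetical question of the form "will at least 5 qualifying events occur in a period where they happen about 3 times on average?", modeled as a Poisson count. It uses only the standard library (Knuth's multiplication method, which is adequate for small rates) and checks the Monte Carlo estimate against the closed form.

```python
import math
import random


def sample_poisson(lam: float, rng: random.Random) -> int:
    """Knuth's method: count uniforms multiplied before the product drops to e^-lam."""
    limit = math.exp(-lam)
    k, prod = 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k


def prob_at_least(threshold: int, lam: float, trials: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(X >= threshold) for X ~ Poisson(lam)."""
    rng = random.Random(seed)
    hits = sum(sample_poisson(lam, rng) >= threshold for _ in range(trials))
    return hits / trials


def exact_prob_at_least(threshold: int, lam: float) -> float:
    """Closed form 1 - P(X < threshold), to sanity-check the simulation."""
    return 1.0 - sum(math.exp(-lam) * lam**k / math.factorial(k) for k in range(threshold))


print(prob_at_least(5, 3.0), exact_prob_at_least(5, 3.0))
```

The exact answer for these assumed numbers is about 0.185; the value of an assistant here is mostly in the quick variations (different rates, thresholds, or distributions), not in this core loop.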

Yeah, 5x or 10x productivity gains from AI for any one developer seem pretty high, and maybe implausible in most cases. However, note that if 10 people in a 1,000-person company get a 10x speedup, that's only a ~10% overall speedup, which is significant but not enough that you'd expect to be able to clearly point at the company's output and say "wow, they clearly sped up because of AI."
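The arithmetic behind the ~10% figure, as a minimal sketch assuming output is additive and everyone was equally productive beforehand:

```python
def company_speedup(headcount: int, boosted: int, factor: float) -> float:
    """Relative total output when `boosted` of `headcount` equal contributors get `factor`x."""
    baseline = float(headcount)
    new_output = (headcount - boosted) + boosted * factor
    return new_output / baseline


print(company_speedup(1000, 10, 10))  # 1.09, i.e. about 9% more total output
```

The 990 unaffected people contribute 990 units and the 10 boosted people contribute 100, for 1090 instead of 1000.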

For me, I'd say a lot of my gains come from asking AI questions rather than generating code directly. Generating code is also useful, though, especially for small snippets like regex. At Google, we have something similar to Copilot that autocompletes code, which is one of the most useful AI features IMO, since the generated code is always small enough to understand. More than 25% of new code at Google is now generated by AI, a statistic which probably mostly comes from that feature.

There are a few small PRs I wrote for my job which were probably 5-10x faster than the counterfactual where I couldn't use AI, where I pretty much had the AI write the whole thing and edited from there. But these were one-offs where I wasn't very familiar with the language (SQL, HTML), which means it would have been unusually slow without AI.

↑ comment by David James (david-james) · 2025-03-04T23:23:42.808Z

For me, I'd say a lot of my gains come from asking AI questions rather than generating code directly.

This is often the case for me as well. I often work on solo side projects and use Claude to think out loud. This lets me put on different hats, just like when pair programming, including: design mode, implementation mode, testing mode, and documentation mode.

I rarely use generated code as-is, but I do find it interesting to look at. As a concrete example, I recently implemented a game engine for the board game Azul (and a multithreaded solver engine) in Rust and found Claude very helpful as an extra set of eyes. I used it as a sort of running issue tracker, design partner, and critic.

Now that I think about it, maybe the best metaphor I can use is that Claude helps me project myself onto myself. For many of my projects, I lean towards "write good, understandable code" instead of "move fast and break things". This level of self-criticism and curiosity has served me well with Claude. Without this mentality, I can see why people dismiss LLM-assisted coding; it certainly is far from a magic genie.

I've long had a bias toward design-driven work (write the README first, think on a whiteboard, etc), whether it be coding or almost anything, so having an infinitely patient conversational partner can be really amazing at times. At other times, the failure modes are frustrating, to say the least.

↑ comment by Thane Ruthenis · 2025-03-04T18:14:11.135Z

However, note that if 10 people in a 1,000-person company get a 10x speedup, that's only a ~10% overall speedup

Plausible. Potential counter-argument: software engineers aren't equivalent, "10x engineers" are a thing, and if we assume they're high-agency people always looking to streamline and improve their workflows, we should expect them to be precisely the people who get a further 10x boost from LLMs. Have you observed any specific people suddenly becoming 10x more prolific?

But these were one-offs where I wasn't very familiar with the language (SQL, HTML)

Flat cost reductions, yeah. Though, uniformly slashing the costs on becoming proficient in new sub-domains of programming perhaps could have nontrivial effects on the software industry as a whole...

For example, perhaps the actual impact of LLMs should instead be modeled as all (competent) programmers effectively becoming able to use any and all programming languages/tools on offer (plus knowing of the existence of these tools)? Which, in idealized theory, should lead to every piece of a software project being built using the best tools available for it, rather than being warped by what the specific developer happened to be proficient in.

↑ comment by RobertM (T3t) · 2025-03-05T03:14:10.107Z

"10x engineers" are a thing, and if we assume they're high-agency people always looking to streamline and improve their workflows, we should expect them to be precisely the people who get a further 10x boost from LLMs. Have you observed any specific people suddenly becoming 10x more prolific?

In addition to the objection from Archimedes, another reason this is unlikely to be true is that 10x coders are often much more productive than other engineers because they've heavily optimized around solving for specific problems or skills that other engineers are bottlenecked by, and most of those optimizations don't readily admit of having an LLM suddenly inserted into the loop.

↑ comment by Archimedes · 2025-03-05T02:57:29.724Z

"10x engineers" are a thing, and if we assume they're high-agency people always looking to streamline and improve their workflows, we should expect them to be precisely the people who get a further 10x boost from LLMs.

I highly doubt this. A 10x engineer is likely already bottlenecked by non-coding work that AI can't help with, so even if they 10x their coding, they may not increase overall productivity much.

Writing tests (in Python). Writing comprehensive tests for my code used to take a significant portion of my time. Probably at least 2x more than writing the actual code, and subjectively a lot more. Now it's a matter of "please write tests for this function", "now this one" etc., with an extra "no, that's ugly, make it nicer" every now and then.
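To make "please write tests for this function" concrete, here is a hypothetical example: a small parsing function and the kind of unit tests an assistant might draft for it, which a human still has to check are testing the right behavior. The function and its rules are invented for illustration; the tests use the standard-library `unittest` module.

```python
import unittest


def parse_duration(text: str) -> int:
    """Hypothetical function under test: '1h30m' -> 5400 seconds."""
    units = {"h": 3600, "m": 60, "s": 1}
    cleaned = text.strip()
    if not cleaned:
        raise ValueError("empty duration")
    total, digits = 0, ""
    for ch in cleaned:
        if ch.isdigit():
            digits += ch
        elif ch in units and digits:
            total += int(digits) * units[ch]
            digits = ""
        else:
            raise ValueError(f"bad duration: {text!r}")
    if digits:
        raise ValueError(f"number without unit in: {text!r}")
    return total


class ParseDurationTests(unittest.TestCase):
    def test_hours_and_minutes(self):
        self.assertEqual(parse_duration("1h30m"), 5400)

    def test_seconds_only(self):
        self.assertEqual(parse_duration("90s"), 90)

    def test_surrounding_whitespace_is_ignored(self):
        self.assertEqual(parse_duration("  2m "), 120)

    def test_rejects_number_without_unit(self):
        with self.assertRaises(ValueError):
            parse_duration("15")

    def test_rejects_unknown_unit(self):
        with self.assertRaises(ValueError):
            parse_duration("3d")

    def test_rejects_empty_string(self):
        with self.assertRaises(ValueError):
            parse_duration("   ")


if __name__ == "__main__":
    unittest.main(argv=["parse_duration_tests"], exit=False)
```

The "no, that's ugly, make it nicer" step usually means collapsing near-duplicate cases like these into `subTest` loops or dropping tests that only restate the implementation.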

Working with simple code is also a lot faster, as long as it doesn't have to process too much. So most of what I do now is make sure the file it's processing isn't more than ~500 lines of code. This has the nice side effect of forcing me to make sure the code is in small, logical chunks. Cursor can often handle most of what I want, after which I tidy up and make things decent. I'd estimate this makes me at least 40% faster at basic coding, probably a lot more. Cursor can in general handle quite large projects if you manage it properly. E.g. last week it took me around 3 days to make a medium-sized project with ~14k lines of Python code. This included Docker setup stuff (not hard, but fiddly), a server + UI (the frontend is rubbish, but that's fine), and some quite complicated calculations. Without LLMs this would have taken at least a week, and probably a month.
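Keeping files under a size limit like the ~500 lines mentioned here is easy to check mechanically. A minimal sketch, assuming the threshold and the `*.py` pattern are the knobs you'd want; the function name is hypothetical, not part of any tool mentioned in the answer.

```python
from __future__ import annotations

from pathlib import Path


def oversized_files(root: Path, max_lines: int = 500, pattern: str = "*.py") -> list[tuple[Path, int]]:
    """List files under `root` matching `pattern` with more than `max_lines` lines, largest first."""
    results = []
    for path in root.rglob(pattern):
        if path.is_file():
            with path.open(encoding="utf-8", errors="replace") as f:
                n_lines = sum(1 for _ in f)
            if n_lines > max_lines:
                results.append((path, n_lines))
    return sorted(results, key=lambda item: item[1], reverse=True)
```

For example, `for path, n in oversized_files(Path("src")): print(n, path)` lists the files that are candidates for splitting before handing them to an assistant.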

Debugging data dumps is now a lot easier. I ask Claude to make me throwaway html pages to display various stuff. Ditto for finding anomalies. It won't find everything, but can find a lot. All of this can be done with the appropriate tooling, of course, but that requires knowing about it, having it set up and knowing how to use it.
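A minimal sketch of such a throwaway page, assuming the dump is a non-empty list of dicts with one numeric field; flagging rows whose z-score exceeds 3 is an assumption chosen for the example, not necessarily what the commenter asks Claude for.

```python
from __future__ import annotations

from html import escape
from statistics import mean, pstdev


def dump_to_html(rows: list[dict], numeric_key: str, z_threshold: float = 3.0) -> str:
    """Render rows as an HTML table, highlighting rows whose numeric_key is a z-score outlier."""
    values = [float(r[numeric_key]) for r in rows]
    mu, sigma = mean(values), pstdev(values)
    columns = list(rows[0].keys())
    header = "".join(f"<th>{escape(str(c))}</th>" for c in columns)
    body = []
    for row, v in zip(rows, values):
        outlier = sigma > 0 and abs(v - mu) / sigma > z_threshold
        style = ' style="background:#fdd"' if outlier else ""
        cells = "".join(f"<td>{escape(str(row.get(c, '')))}</td>" for c in columns)
        body.append(f"<tr{style}>{cells}</tr>")
    return (
        "<!doctype html><html><body><table border='1'>"
        f"<thead><tr>{header}</tr></thead><tbody>{''.join(body)}</tbody>"
        "</table></body></html>"
    )
```

Writing the result to a file (e.g. `Path("dump.html").write_text(page)`) and opening it in a browser is usually all the "tooling" such a one-off needs.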

Glue code, or interacting with external APIs in general (and often internal ones too), is a lot easier, until it's not. You can often one-shot a workable solution that does exactly what you want, which you can then just modify to not be ugly.

I'm not sure how much more productive I am with LLMs. But that's mainly because coding is not all I do. If I were just given a set of things to make and allowed to crank away at it, I'm pretty sure I'd be 5-10x faster than two years ago.

↑ comment by Aprillion · 2025-03-13T12:46:00.387Z

thanks for the concrete examples, can you help me understand how these translate from individual productivity to externally observable productivity?

3 days to make a medium sized project

I agree Docker setup can be fiddly, but what happened with the 50+% savings: did you lower the price for the customer to stay competitive, do you do 2x as many paid projects now, did you postpone hiring another developer who is no longer needed, or do you just have more free time? Has there been no change in support & maintenance costs compared to similar projects before LLMs?

processing isn't more than ~500 lines of code

oh well, my only paid experience is with multi-year project development & maintenance, and those are definitely not in the under-1kloc category 🙈, which might help explain my abysmal experience trying to use any AI tools for work (beyond autocomplete, but IntelliSense also existed before LLMs)

TBH, I am now moving towards the opinion that evals are very unrepresentative of the "real world" (if we exclude LLM wrappers as requested in the OP ... though LLM wrappers including evals are becoming part of the "real world" too, so I don't know; it's like banking bootstrapped wealthy bankers, and LLM wrappers might be bootstrapping wealthy LLM startups)

↑ comment by mruwnik · 2025-03-13T19:47:56.229Z

I can do more projects in parallel than I could have before. Which means that I have even more work now... The support and maintenance costs of the code itself are the same, as long as you maintain constant vigilance to make sure nothing bad gets merged. So the costs are moved from development to review. It's a lot easier to produce thousands of lines of slop which then have to be reviewed and loads of suggestions made. It's easy for bad taste to be amplified, which is a real cost that might not be noticed that much.

There are some evals which work on large codebases (e.g. "fix this bug in django"), but those are the minority, granted. They can help with the scaffolding, though - those tend to be large projects in which a Claude can help find things.

But yeah, large files are ok if you just want to find something, but somewhere under 500 loc seems to be the limit of what will work well. Though you can get round it somewhat by copying the parts to be changed to a different file then copying them back, or other hacks like that...
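The copy-out/copy-back workaround can be scripted. A minimal sketch, assuming 1-indexed, inclusive line ranges; `extract_region` and `splice_region` are hypothetical helper names, and the editing of the scratch file in between is whatever you (or the LLM) do to it.

```python
from pathlib import Path


def extract_region(src, start, end, scratch):
    """Copy lines start..end (1-indexed, inclusive) of src into a scratch file."""
    lines = Path(src).read_text(encoding="utf-8").splitlines(keepends=True)
    Path(scratch).write_text("".join(lines[start - 1:end]), encoding="utf-8")


def splice_region(src, start, end, scratch):
    """Replace lines start..end of src with the (edited) contents of scratch."""
    lines = Path(src).read_text(encoding="utf-8").splitlines(keepends=True)
    new_region = Path(scratch).read_text(encoding="utf-8").splitlines(keepends=True)
    # If the edited region lost its trailing newline and more lines follow it,
    # add one back so it doesn't fuse with the next line of src.
    if new_region and not new_region[-1].endswith("\n") and end < len(lines):
        new_region[-1] += "\n"
    lines[start - 1:end] = new_region
    Path(src).write_text("".join(lines), encoding="utf-8")
```

Because the splice can change the region's length, line numbers below it shift afterwards, so re-extract before splicing a second region.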

One possible explanation is that the part of the job that gets sped up by LLMs is a relatively small part of what programmers actually do, so the total speedup is small.

What programmers do includes:

- Figuring out what the requirements are; this might involve talking to whoever the software is being produced for

- Writing specifications

- Writing tests

- Having arguments (sorry, discussions) during code review when the person reviewing your code doesn't agree with the way you did it

- Etc. etc.

Personally, I find that LLMs are nearly there, but not good enough just yet.

For 25% of the [Y Combinator] Winter 2025 batch, 95% of lines of code are LLM generated.

↑ comment by Petropolitan (igor-2) · 2025-03-09T22:53:25.016Z

Perhaps this says more about Y Combinator nowadays than about LLM coding

↑ comment by Gunnar_Zarncke · 2025-03-10T00:32:05.601Z

In which sense? In the sense of them hyping AI? That seems unlikely, or would at least be surprising to me, as they don't look at tech; they don't even look at products so much as at founder qualities (being relentlessly resourceful), and according to Paul Graham that apparently hasn't changed. Where do you think he or YC is mistaken?

↑ comment by Petropolitan (igor-2) · 2025-03-10T16:53:42.369Z

To be honest, what I originally implied is that these founders develop their products with low-quality code, as cheap and dirty as they can, and without any long-term planning for further development.

↑ comment by Gunnar_Zarncke · 2025-03-11T08:49:31.746Z

I agree that is what usually happens in startups, and AI doesn't change that principle; it only reduces the effort of generating such code.

Update: Claude Code and s3.7 have been a significant step up for me. Previously, s3.6 was giving me about a 1.5x speedup, and s3.5 more like 1.2x. CC+s3.7 is solidly over 2x, with periods of more than that when working on easy, well-represented tasks not in an area I know myself (e.g. Node.js).

Here's someone who seems to be getting a lot more out of Claude Code though: xjdr

i have upgraded to 4 claude code sessions working in parallel in a single tmux session, each on their own feature branch and then another tmux window with yet another claude in charge of merging and resolving merge conflicts

"Good morning Claude! Please take a look at the project board, the issues you've been assigned and the open PR's for this repo. Lets develop a plan to assign each of the relevant tasks to claude workers 1 - 5 and LETS GET TO WORK BUDDY!"

https://x.com/_xjdr/status/1899200866646933535

Been in Monk mode and missed the MCP and Manus TL barrage. i am averaging about 10k LoC a day per project on 3 projects simultaneously and id say 90% no slop. when slop happens i have to go in an deslop by hand / completely rewrite but so far its a reasonable tradeoff. this is still so wild to me that this works at all. this is also the first time ive done something like a version of TDD where we (claude and i) agonize over the tests and docs and then team claude goes and hill climbs them. same with benchmarks and perf targets. Code is always well documented and follows google style guides / rust best practices as enforced by linters and specs. we follow professional software development practices with issues and feature branches and PRs. i've still got a lot of work to do to understand how to make the best use of this and there are still a ton of very sharp edges but i am completely convinced this is workflow / approach the future in a way cursor / windsurf never made me feel or believe (i stopped using them after being bitten by bugs and slop too often). this is a power user's tool and would absolutely ruin a codebase if you weren't a very experienced dev and tech lead on large codebases already. ok, going back into the monk mode cave now

I am confident that LLMs significantly boost software development productivity (I would say 20-50%) and am completely sure it's not even close to 5x.

However, although I agree with your conclusion, I would like to point out that the timeframes are pretty short. Two years ago (~exactly the GPT-4 launch date), LLMs were barely making any impact. I think tools started to resemble their current state around one year ago (~exactly the Claude 3 Opus launch date).

Now, suppose we had a 5x boost for a year. Would it be very visible? We would have gotten 5 years of progress in 1 year, but did the software landscape change a lot over 5 years in the pre-LLM era? Comparing 2017 and 2022, I don't feel like that much changed.

LLMs make certain tasks much faster. Those tasks were never a large part of my job, and so whilst they might increase my productivity 10X on one specific thing, my overall productivity is probably up about 5%.
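One way to read these numbers (an Amdahl's-law framing the commenter doesn't use explicitly) is to ask what share of working time the accelerated tasks must have taken. Solving overall = 1 / ((1 - p) + p / local) for p gives the sketch below; `implied_fraction` is an illustrative name.

```python
def implied_fraction(local_speedup, overall_speedup):
    """Share p of total time the accelerated task took, by inverting Amdahl's law.

    From overall = 1 / ((1 - p) + p / local), solving for p gives
    p = (1 - 1/overall) / (1 - 1/local).
    """
    if local_speedup <= 1:
        raise ValueError("local_speedup must be > 1")
    return (1 - 1 / overall_speedup) / (1 - 1 / local_speedup)


# A 10x speedup on some tasks, ~5% (1.05x) overall:
print(f"{implied_fraction(10, 1.05):.3f}")  # prints 0.053
```

So "10X on one thing, about 5% overall" is consistent with those tasks having taken roughly 5% of working time.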

LLMs excel at:

- Writing quick scripts to do some specific task.

- Greenfield projects I don't need to maintain.

LLMs help at:

- Researching specific libraries or standard solutions to something (e.g. how do I format a number to two decimal places in Go).

- Predicting my next 10 lines of code (which I then need to go and fix up afterwards).

LLMs suck at:

- Making changes to a large codebase.

- Predicting what I'm going to write in a design document.

So I'm now spending relatively less of my time fiddling with bash, and more on making changes to large codebases or writing design documents.

The integration of GitHub Copilot into software development has been gradual. In June 2022, Copilot generated 27% of the code for developers using it, which increased to 46% by February 2023.

As of August 2024, GitHub Copilot has approximately 2 million paying subscribers and is used by over 77,000 organizations. This is an increase from earlier figures: in February 2024, Copilot had over 1.3 million paid subscribers, with more than 50,000 organizations using the service.

https://www.ciodive.com/news/generative-AI-software-development-lifecycle/693165/

The transition from traditional waterfall methodologies to agile practices began in the early 2000s, with agile approaches becoming mainstream over the next decade. Similarly, the shift from monolithic architectures to microservices started around 2011, with widespread adoption occurring over the subsequent 5 to 10 years.

https://en.wikipedia.org/wiki/Agile_software_development

Assuming AI tools take 5 years to become commonplace and lead to a consistent 3x productivity gain (to use a more conservative number than 5x/10x, but still a high one), this would amount to a nominal productivity gain of about 25% per year, since 3^(1/5) ≈ 1.25. Significant, but not trivial to see clearly.
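The 25% figure matches a compounding reading of the assumption: a constant yearly factor r with r^5 = 3. A linear reading (a 200% gain spread evenly over 5 years) would give 40% per year instead. A quick check, with an illustrative helper name:

```python
def annual_rate(total_gain, years):
    """Constant yearly growth factor that compounds to total_gain over `years` years."""
    return total_gain ** (1 / years)


rate = annual_rate(3, 5)
print(f"{rate:.4f} -> about {100 * (rate - 1):.0f}% per year")
# prints: 1.2457 -> about 25% per year
```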

Two thoughts.

- The 10x productivity number is (as you say) only for specific tasks, and even among core tasks, anything that can be sped up by a factor of 10 is unlikely to be more than 50% of the job, and probably much less. This is because pretty much nobody spends more than about 70% of their time on the core thing they do. And a 10% time saving on each of ten tasks does not add up to a 100% saving.

- But I think you underestimate how useful "vibecoding" has become for many people with agent tools. So instead you're getting expansion, not replacement. People with existing codebases are getting small productivity increases, while people who could barely code are doing things they would never even have attempted. And those tools are new (months to weeks old), so they will take a while to show up in our lived lives. They may never show up in existing software, because the code is too complex and getting a change into a feature takes too much effort. The bottleneck is not the ability of programmers to produce the code but the coordination of getting multiple code changes into production. This is not just AI: any startup going from MVP to product seems to be shipping features daily, but eventually this slows down as the software gets bigger.

I am experiencing a massive productivity boost with my home-grown open-source agentic coder, especially since I added a GitHub mode to it this week. I write about that here: https://benhouston3d.com/blog/github-mode-for-agentic-coding

This is only my personal experience of course, but for making little tools for automating tasks or prototyping ideas quickly AI has been a game-changer. For web development the difference is also huge.

The biggest improvement is when I'm trying something completely new to me. It's possible to confidently go in blind and get something done quickly.

When it comes to serious engineering of novel algorithms it's completely useless, but most devs don't spend their days doing that.

I've also found that getting a software job is much harder lately. The bar is extremely high. This could be explained by AI, but it could also be that the supply of devs has increased.

There have been massive improvements in productivity in infrastructure from cloud services, especially object storage. See eg https://materializedview.io/p/infrastructure-vendors-are-in-a-tough for many examples of small teams out-competing established vendors.

But I didn't notice that until it was pointed out to me by several sources. So should I expect to notice large productivity improvements from LLMs? How huge would they have to be to show up in the sparse signal that I'm getting? What data would I even have to be looking at to see a 5x improvement in productivity?

For Golang:

Writing unit test cases is almost automatic (Claude 3.5 and now Claude 3.7). It's good at the specific test setup necessary and the odd syntax/boilerplate that some testing libraries require. At least 5x speedup.

Autosuggestions (Cursor) are net positive, probably 2x-5x speedup depending on how familiar I am with the codebase.

I am often surprised by the reticence of 'hackers' to integrate AI tooling and to interact with AI-based systems. There is an adherence to the old ways, the traditions and the 'this is how we've always done it' mindset around AI that is unusual and not what I would expect of a culture and community usually dedicated to uprooting the status quo, often at the cutting edge of technology.

Where once I would have needed to go through Stack Overflow to understand some arcane syntax of an API, and scroll through posts where others have stumbled in the same way, now I can ask ChatGPT instead. A concrete example of this is working with BigQuery, the Google data warehousing system my startup uses. I am familiar with SQL, with cloud bindings and with GCP, but the exact syntax and process of interfacing with BigQuery has a few quirks and a few missing functions. Rather than having to pore through the documentation and read through sparse forums to debug some strange error, Cursor's interface to o3-mini is usually able to suggest immediately where I have gone wrong and how I need to fix it. It solves my problem directly, but it also has a second-order effect: I don't really care about the BigQuery API syntax, since it isn't interesting to me. I'm interested in interfacing with my data warehouse, but I don't care to learn about the implementation, and AI can take this entire mental load off my plate.

I also read a lot of forum posts suggesting that AI isn't useful for obscure programming languages and extremely complex tasks and processes. This is probably true, but I wonder what these people's jobs are that their workflows never involve interacting with legacy code or strapping together frontends and prototypes, the boilerplate tech that is AI's bread and butter. Do they already outsource all this work to junior (cheaper?) developers?

One of my favourite quotes on the utility of AI programming is 'It is possible to be precise at a higher level of abstraction as long as your prompts are consistent with a coherent model of the code.' Using natural language to interface with a computer is a new paradigm, and it is not yet clear what its limitations and best practices are. If you are uninterested in this and cling to the old ways, you will be left behind by those who adopt it.

As you probably know, I have been endorsing this "conspiracy theory" for some time, e.g. roughly here: https://www.lesswrong.com/posts/vvgND6aLjuDR6QzDF/my-model-of-what-is-going-on-with-llms

5 comments


comment by Daniel Kokotajlo (daniel-kokotajlo) · 2025-03-06T01:18:06.575Z

Many developers have been reporting that this is dramatically increasing their productivity, up to 5x'ing/10x'ing it.

Yeah, but many more have been saying it only moderately increases their productivity. Whenever I ask people working at frontier AI companies, I get much smaller estimates, like 5%, 10%, 30%, etc. And I think @Ajeya Cotra got similar numbers and posted about them recently.

↑ comment by Ajeya Cotra (ajeya-cotra) · 2025-03-06T19:57:22.179Z

Yeah I've cataloged some of that here: https://x.com/ajeya_cotra/status/1894821255804788876 Hoping to do something more systematic soon

comment by Tao Lin (tao-lin) · 2025-03-04T18:18:54.198Z

Empirically, we likewise don't seem to be living in the world where the whole software industry is suddenly 5-10 times more productive. It'll have been the case for 1-2 years now, and I, at least, have felt approximately zero impact. I don't see 5-10x more useful features in the software I use, or 5-10x more software that's useful to me, or that the software I'm using is suddenly working 5-10x better, etc.

Diminishing returns! Scaling laws! One concrete version of "5x productivity" is "as much productivity as 5 copies of me in parallel", and we know that 5x-ing most inputs (training compute and data, number of employees, etc.) usually makes output scale logarithmically rather than linearly.

↑ comment by Aprillion · 2025-03-05T11:24:45.410Z

that's not how productivity ought to be measured - it should measure some output per (say) a workday

1 vs 5 FTE is a difference in input, not output, so you can say "adding 5 people to this project will decrease productivity by 70% next month and we hope it will increase productivity by 2x in the long term" ... not a synonym of "5x productivity" at all

it's the measure by which you can quantify diminishing returns, not obfuscate them!

...but the usage of "5-10x productivity" seems to point to a different concept than a ratio of useful output per input 🤷 AFAICT it's a synonym for "I feel 5-10x better when I write code which I wouldn't enjoy writing otherwise"

comment by laserfiche · 2025-03-06T15:02:46.997Z

This is mostly covered by other comments, but I'll give my personal experience. I'm a programmer, and I have seen at least a 5x increase in programming speed. However, my output is limited by Amdahl's law. 50% of my process is coding, but the other 50% is non-programming tasks that haven't been accelerated, meaning the overall process takes 60% as long as it originally did.
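The 60% figure follows directly from Amdahl's law. A minimal sketch; `overall_time_fraction` is an illustrative name, and `accelerated_share` stands for the fraction of the original time spent coding.

```python
def overall_time_fraction(accelerated_share, speedup):
    """Amdahl's law: new total time as a fraction of the old total time.

    accelerated_share: fraction of the original time spent on the part that gets faster.
    speedup: how many times faster that part becomes.
    """
    return (1 - accelerated_share) + accelerated_share / speedup


t = overall_time_fraction(0.5, 5)
print(f"time: {t:.0%} of original, overall speedup: {1 / t:.2f}x")
# prints: time: 60% of original, overall speedup: 1.67x
```

With `speedup=float("inf")` the result is 0.5: even infinitely fast coding would at most halve the total time in this scenario, a 2x cap.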

What's more, that's just my portion. When I complete a project and hand it off, the executives can't absorb it any faster, and my next assignment doesn't come any faster. My team doesn't work from an infinite backlog. Notably, my code is much higher quality (no more "eh, I'll get to that eventually") and always exhibits best practices and proper documentation, since those elements are now free.

To take another example, I have used my accelerated programming speed to create several side project websites recently. Every part of this process has been accelerated, except for non-programming elements like spreading the word. That area is a bottleneck, and that's just my portion; even if I were keeping up with "marketing", there is a limit to how fast other parts of the information pipeline could absorb this new information and disseminate it, and how long adoption would take.