SolidGoldMagikarp II: technical details and more recent findings

post by mwatkins, Jessica Rumbelow (jessica-cooper) · 2023-02-06T19:09:01.406Z · LW · GW · 45 commentsContents

Clustering Distance-from-centroid hypothesis GPT-2 and GPT-J distances-from-centroid data Anomalous behaviour with GPT-3-davinci-instruct-beta prompting GPT-2 and -J models with the anomalous tokens ' newcom', 'slaught', 'senal' and 'volunte' Nested families, truncation and inter-referentiality The 'merely confused' tokens None 46 comments

tl;dr: This is a follow-up to our original post [LW · GW] on prompt generation and the anomalous token phenomenon which emerged from that research. Work done by Jessica Rumbelow and Matthew Watkins in January 2023 at SERI-MATS.

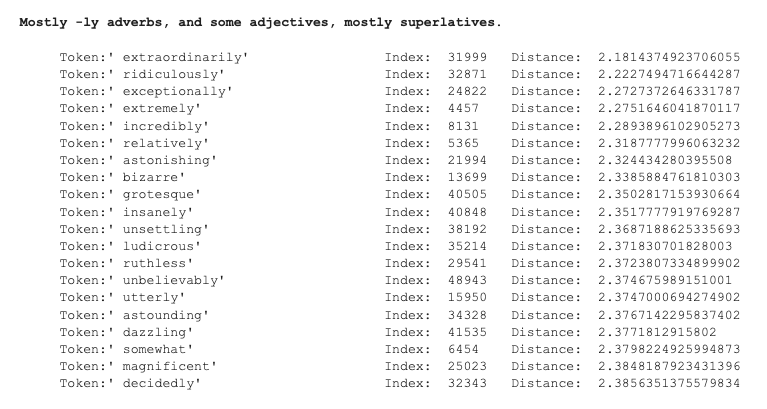

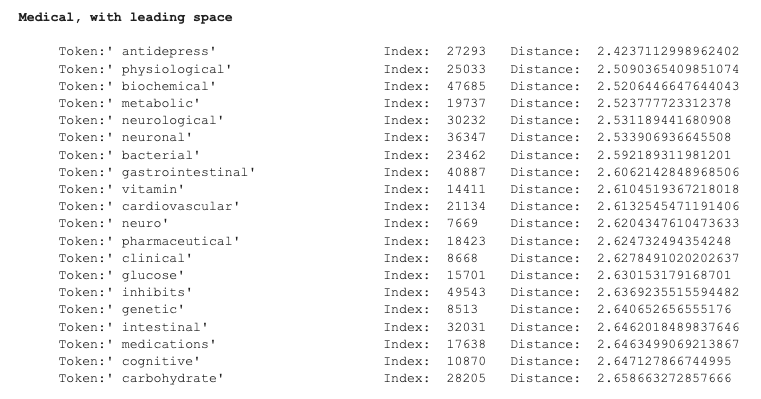

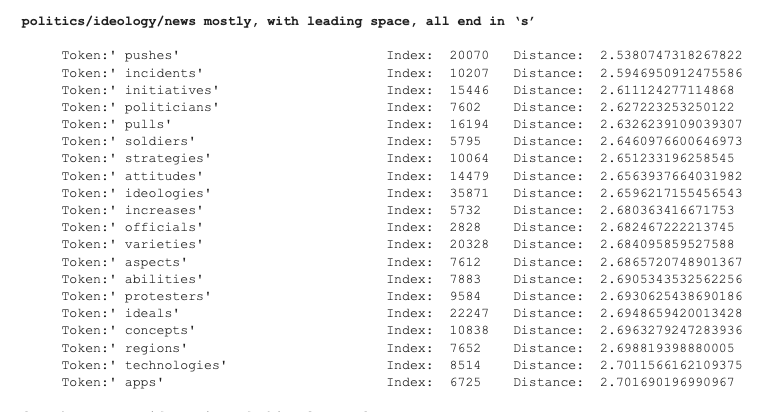

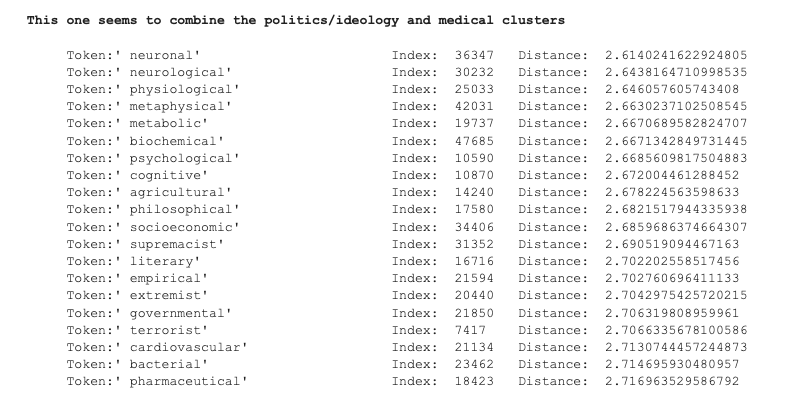

Clustering

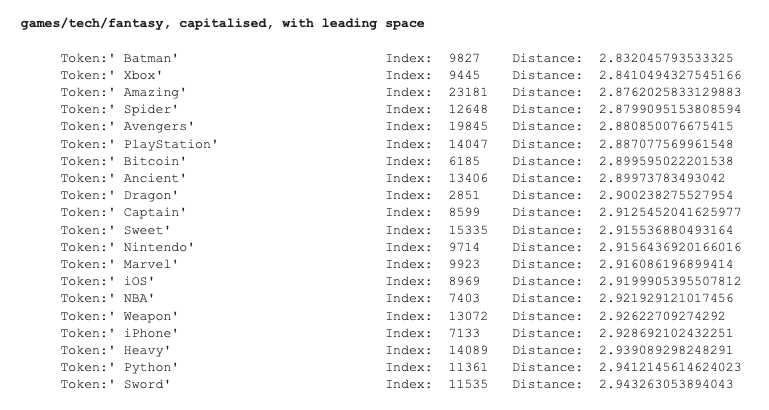

As a result of work done on clustering tokens in GPT-2 and GPT-J embedding spaces, our attention was originally drawn to the tokens closest to the centroid of the entire set of 50,257 tokens shared across all GPT-2 and -3 models.[1] These tokens were familiar to us for their frequent occurrence as closest tokens to the centroids of the (mostly semantically coherent, or semi-coherent) clusters of tokens we were producing via the k-means algorithm. Here are a few more selections from such clusters. Distances shown are Euclidean, and from the cluster's centroid (rather than the overall token set centroid):

Distance-from-centroid hypothesis

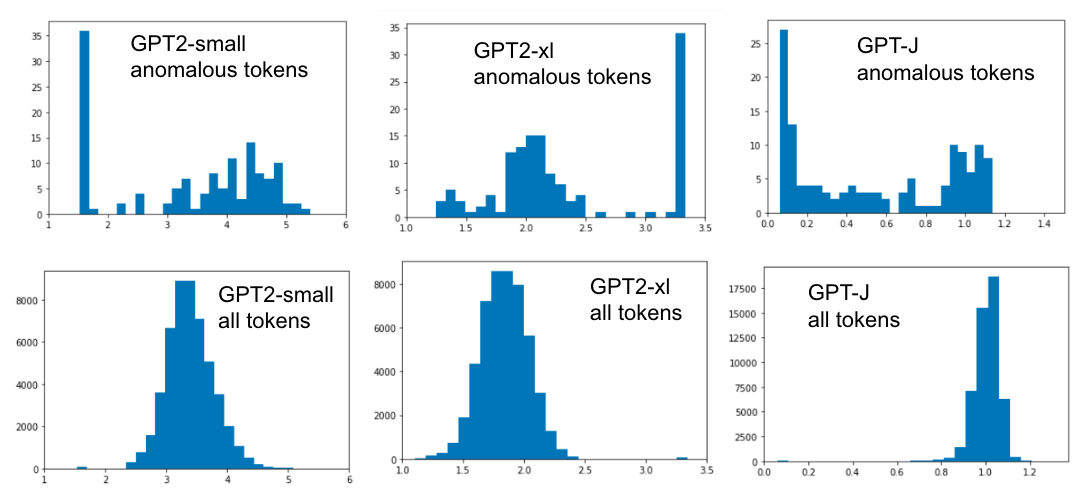

Our hypothesis that the anomalous tokens that kept showing up as the nearest tokens to the centroids of such clusters were the tokens closest to the overall centroid of the token set turned out to be correct for GPT2-small and GPT-J. However, the opposite was true for GPT2-xl, where the anomalous tokens tend to be found as far as possible from the overall centroid.

One unexplained phenomenon which may be related emerged from three-shot prompting experiments with these models, in which they were encouraged to repeat the anomalous tokens (rather than by directly asking them to, as we'd been doing with ChatGPT and then GPT3-davinci-instruct-beta):

Our three-shot prompts were formatted as follows (here for the example token 'EStreamFrame'). Note that we've included examples capitalised and uncapitalised, alphabetic and numeric, with and without a leading space:

'Turntable' > 'Turntable'

' expectation' > ' expectation'

'215' > '215'

'EStreamFrame' >This prompt was run through all three models, for a list of 85 anomalous tokens, with the following success rates:

- GPT2-small 18/85 (21%)

- GPT2-xl 43/85 (51%)

- GPT-J 17/85 (20%)

Here are comparative baselines using 100 randomly chosen English words and 100 nonsense alphanumeric strings:

- GPT2-small 82/100 on words; 89/100 on nonsense

- GPT2-xl 98/100 on word; 94/100 on nonsense

- GPT-J 100/100 on words; 100/100 on nonsense

We see that all three models suffered a noticeable performance drop when going from non-anomalous to anomalous strings, but GPT2-xl considerably less so, despite the fact that GPT-J is a much bigger model. One hypothesis is that an anomalous token's closeness to the overall centroid in the relevant embedding space is an inhibiting factor in the ability of a GPT model to repeat that token's string. This hypothesised correlation will be explored soon.

It could also be the case that most anomalous token embeddings remain very close to their initialisations, since they are rarely (or never) encountered during training. Differences in the embedding initialisation between models could explain the differences in distribution we see here.

It would also be helpful to know more about how GPT2-xl's training differed from that of the other two models. Seeking out and studying checkpoint data from the training of these models is an obvious next step.

GPT-2 and GPT-J distances-from-centroid data

Top 100 versions of all of these lists are available here.

GPT2-small closest-to-centroid tokens:

' externalToEVA' Index: 30212 Distance: 1.5305222272872925

'�' Index: 187 Distance: 1.5314713716506958

'�' Index: 182 Distance: 1.53245210647583

'\x1c' Index: 216 Distance: 1.532564640045166

'\x07' Index: 195 Distance: 1.532976746559143

'�' Index: 179 Distance: 1.5334911346435547

'quickShip' Index: 39752 Distance: 1.5345481634140015

'\x19' Index: 213 Distance: 1.534569501876831

'\x0b' Index: 199 Distance: 1.5346266031265259

'�' Index: 125 Distance: 1.5347601175308228

'�' Index: 183 Distance: 1.5347920656204224

'\x16' Index: 210 Distance: 1.5350308418273926

'\x14' Index: 208 Distance: 1.5353295803070068

' TheNitrome' Index: 42089 Distance: 1.535927176475525

'\x17' Index: 211 Distance: 1.5360500812530518

'\x1f' Index: 219 Distance: 1.5361398458480835

'\x15' Index: 209 Distance: 1.5366222858428955

'�' Index: 124 Distance: 1.5366740226745605

'\x13' Index: 207 Distance: 1.5367120504379272

'\x12' Index: 206 Distance: 1.5369184017181396

'\r' Index: 201 Distance: 1.5370022058486938GPT2-small farthest-from-centroid tokens:

'SPONSORED' Index: 37190 Distance: 5.5687761306762695

'��' Index: 31204 Distance: 5.524938106536865

'soDeliveryDate' Index: 39811 Distance: 5.413397312164307

'enegger' Index: 44028 Distance: 5.411920547485352

'Reviewer' Index: 35407 Distance: 5.363203525543213

'yip' Index: 39666 Distance: 5.2676615715026855

'inventoryQuantity' Index: 39756 Distance: 5.228435516357422

'theless' Index: 9603 Distance: 5.177161693572998

' Flavoring' Index: 49813 Distance: 5.158931732177734

'natureconservancy' Index: 41380 Distance: 5.124162197113037

'76561' Index: 48527 Distance: 5.093474388122559

'interstitial' Index: 29446 Distance: 5.083877086639404

'tein' Index: 22006 Distance: 5.050122261047363

'20439' Index: 47936 Distance: 5.041223526000977

'ngth' Index: 11910 Distance: 5.01696252822876

'lihood' Index: 11935 Distance: 5.010122776031494

'isSpecialOrderable' Index: 39755 Distance: 4.996940612792969

'Interstitial' Index: 29447 Distance: 4.991404056549072

'xual' Index: 5541 Distance: 4.991244792938232

'terday' Index: 6432 Distance: 4.9850616455078125GPT2-small mean-distance-from-centroid tokens (mean distance = 3.39135217):

'contin' Index: 18487 Distance: 3.3913495540618896

' ser' Index: 1055 Distance: 3.3913450241088867

' normalized' Index: 39279 Distance: 3.3913605213165283

' Coast' Index: 8545 Distance: 3.391364812850952

'Girl' Index: 24151 Distance: 3.3913745880126953

'Bytes' Index: 45992 Distance: 3.3914194107055664

' #####' Index: 46424 Distance: 3.3914294242858887

' appetite' Index: 20788 Distance: 3.391449213027954

' ske' Index: 6146 Distance: 3.3912549018859863

' Stadium' Index: 10499 Distance: 3.391464948654175

' antagonists' Index: 50178 Distance: 3.3914878368377686

' duck' Index: 22045 Distance: 3.3915040493011475

' Trotsky' Index: 32706 Distance: 3.3915047645568848

' Rip' Index: 29496 Distance: 3.3915138244628906

' dazz' Index: 32282 Distance: 3.391521692276001

' Bos' Index: 14548 Distance: 3.3911633491516113

' docs' Index: 34165 Distance: 3.3915486335754395

' phil' Index: 5206 Distance: 3.3915600776672363

' Lucius' Index: 42477 Distance: 3.391568899154663

' lig' Index: 26106 Distance: 3.3915719985961914

' Lud' Index: 24177 Distance: 3.391577959060669GPT2-xl closest-to-centroid tokens:

"'re" Index: 821 Distance: 1.0988247394561768

' It' Index: 632 Distance: 1.10574471950531

"'m" Index: 1101 Distance: 1.1074422597885132

' That' Index: 1320 Distance: 1.128333568572998

' There' Index: 1318 Distance: 1.128534197807312

'."' Index: 526 Distance: 1.1356818675994873

' This' Index: 770 Distance: 1.135703682899475

' If' Index: 1002 Distance: 1.1358076333999634

' been' Index: 587 Distance: 1.1476913690567017

' Although' Index: 4900 Distance: 1.1492284536361694

'It' Index: 1026 Distance: 1.1522436141967773

' an' Index: 281 Distance: 1.1545791625976562

' When' Index: 1649 Distance: 1.157148838043213

' has' Index: 468 Distance: 1.158998727798462

';' Index: 26 Distance: 1.1591651439666748

'.' Index: 13 Distance: 1.159492015838623

'There' Index: 1858 Distance: 1.1596912145614624

' But' Index: 887 Distance: 1.1607087850570679

' They' Index: 1119 Distance: 1.1629877090454102

',"' Index: 553 Distance: 1.1631295680999756

' it' Index: 340 Distance: 1.1665016412734985Top 100 GPT2-xl farthest-from-centroid tokens:

'rawdownload' Index: 30905 Distance: 3.33955454826355

'\x14' Index: 208 Distance: 3.33333683013916

'\x01' Index: 189 Distance: 3.3312017917633057

'\x11' Index: 205 Distance: 3.3306527137756348

'embedreportprint' Index: 30898 Distance: 3.3290979862213135

'�' Index: 125 Distance: 3.327265977859497

'\x16' Index: 210 Distance: 3.326960563659668

'\t' Index: 197 Distance: 3.326873302459717

'\x0c' Index: 200 Distance: 3.326695442199707

'\x1a' Index: 214 Distance: 3.326601028442383

'�' Index: 185 Distance: 3.326319456100464

' RandomRedditor' Index: 36173 Distance: 3.325119972229004

'\x1c' Index: 216 Distance: 3.324606418609619

'\x0f' Index: 203 Distance: 3.3243095874786377

' TheNitrome' Index: 42089 Distance: 3.323943853378296

'reportprint' Index: 30897 Distance: 3.323246717453003

'\x1e' Index: 218 Distance: 3.323152780532837

'\x02' Index: 190 Distance: 3.322984218597412

'\x1d' Index: 217 Distance: 3.3213040828704834

'\x0e' Index: 202 Distance: 3.321027994155884GPT2-xl mean-distance-from-centroid tokens (mean distance from centroid = 1.83779):

[mean distance from centroid = 1.8377946615219116]

' gel' Index: 20383 Distance: 1.8377970457077026

' Alpha' Index: 12995 Distance: 1.8377904891967773

' jumper' Index: 31118 Distance: 1.8378019332885742

'Lewis' Index: 40330 Distance: 1.8378077745437622

' phosphate' Index: 46926 Distance: 1.8378087282180786

'login' Index: 38235 Distance: 1.837770938873291

' morph' Index: 17488 Distance: 1.8378208875656128

' accessory' Index: 28207 Distance: 1.837827444076538

' greeting' Index: 31933 Distance: 1.8378349542617798

' Bart' Index: 13167 Distance: 1.8378361463546753

' runway' Index: 23443 Distance: 1.8377509117126465

' Sher' Index: 6528 Distance: 1.8377450704574585

'Line' Index: 13949 Distance: 1.8378454446792603

' Kardashian' Index: 48099 Distance: 1.8378528356552124

' nail' Index: 17864 Distance: 1.8378595113754272

' ethn' Index: 33961 Distance: 1.8378615379333496

' piss' Index: 18314 Distance: 1.8377244472503662

' Thought' Index: 27522 Distance: 1.8377199172973633

' Pharmaceutical' Index: 37175 Distance: 1.8377118110656738Note: We’ve removed all tokens of the form “<|extratoken_xx|>” which were added to the token set for GPT-J to pad it out to a more conveniently divisible size of 50400.

GPT-J closest-to-centroid tokens:

' attRot' Index: 35207 Distance: 0.06182861328125

'�' Index: 125 Distance: 0.06256103515625

'EStreamFrame' Index: 43177 Distance: 0.06256103515625

'�' Index: 186 Distance: 0.0626220703125

' SolidGoldMagikarp' Index: 43453 Distance: 0.06280517578125

'PsyNetMessage' Index: 28666 Distance: 0.06292724609375

'�' Index: 177 Distance: 0.06304931640625

'�' Index: 187 Distance: 0.06304931640625

'embedreportprint' Index: 30898 Distance: 0.0631103515625

' Adinida' Index: 46600 Distance: 0.0631103515625

'oreAndOnline' Index: 40240 Distance: 0.06317138671875

'�' Index: 184 Distance: 0.063232421875

'�' Index: 185 Distance: 0.063232421875

'�' Index: 180 Distance: 0.06329345703125

'�' Index: 181 Distance: 0.06329345703125

'StreamerBot' Index: 37574 Distance: 0.06341552734375

'�' Index: 182 Distance: 0.0634765625

'GoldMagikarp' Index: 42202 Distance: 0.0634765625

'�' Index: 124 Distance: 0.06353759765625GPT-J farthest-from-centroid tokens:

' �' Index: 17433 Distance: 1.30859375

'gif' Index: 27908 Distance: 1.2255859375

'�' Index: 136 Distance: 1.22265625

' ›' Index: 37855 Distance: 1.208984375

'�' Index: 46256 Distance: 1.20703125

'._' Index: 47540 Distance: 1.2060546875

'kids' Index: 45235 Distance: 1.203125

'�' Index: 146 Distance: 1.2021484375

'�' Index: 133 Distance: 1.201171875

' @@' Index: 25248 Distance: 1.201171875

'�' Index: 144 Distance: 1.2001953125

'DW' Index: 42955 Distance: 1.19921875

' tha' Index: 28110 Distance: 1.1962890625

'bsp' Index: 24145 Distance: 1.1953125

'�' Index: 137 Distance: 1.1943359375

'cheat' Index: 46799 Distance: 1.193359375

'caps' Index: 27979 Distance: 1.1884765625

' ' Index: 5523 Distance: 1.1865234375

'@@' Index: 12404 Distance: 1.1865234375

'journal' Index: 24891 Distance: 1.185546875 GPT-J mean-distance-from-centroid tokens (mean distance from centroid = 1.00292968)

' ha' Index: 387 Distance: 1.0029296875

'ack' Index: 441 Distance: 1.0029296875

' im' Index: 545 Distance: 1.0029296875

' trans' Index: 1007 Distance: 1.0029296875

' ins' Index: 1035 Distance: 1.0029296875

'pr' Index: 1050 Distance: 1.0029296875

' Im' Index: 1846 Distance: 1.0029296875

'use' Index: 1904 Distance: 1.0029296875

'ederal' Index: 2110 Distance: 1.0029296875

'ried' Index: 2228 Distance: 1.0029296875

'ext' Index: 2302 Distance: 1.0029296875

'amed' Index: 2434 Distance: 1.0029296875

' Che' Index: 2580 Distance: 1.0029296875

'oved' Index: 2668 Distance: 1.0029296875

' Mark' Index: 2940 Distance: 1.0029296875

'idered' Index: 3089 Distance: 1.0029296875

' Rec' Index: 3311 Distance: 1.0029296875

' Paul' Index: 3362 Distance: 1.0029296875

' Russian' Index: 3394 Distance: 1.0029296875

' Net' Index: 3433 Distance: 1.0029296875

' har' Index: 3971 Distance: 1.0029296875

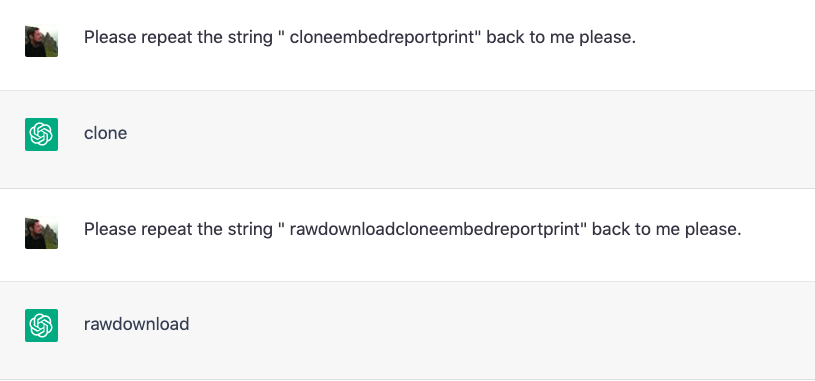

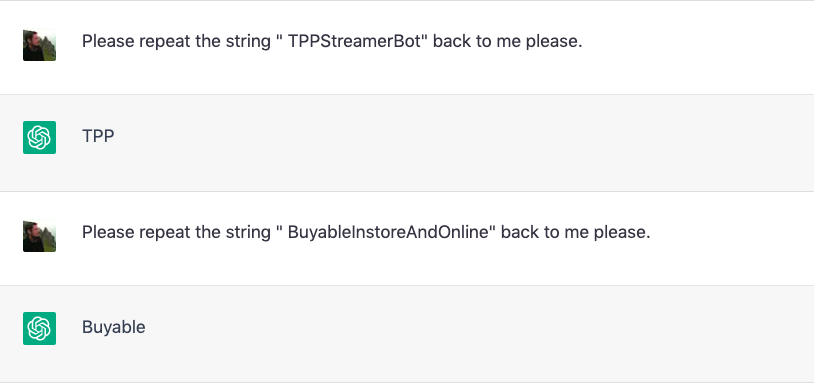

Anomalous behaviour with GPT-3-davinci-instruct-beta

Most of the bizarre behaviour we found associated with the anomalous tokens resulted from prompting the GPT-3-davinci-instruct-beta model[2] with the tokens embedded in one of these twelve templates:

Please can you repeat back the string '<TOKEN STRING>' to me?

Please repeat back the string '<TOKEN STRING>' to me.

Could you please repeat back the string '<TOKEN STRING>' to me?

Can you please repeat back the string '<TOKEN STRING>' to me?

Can you repeat back the string '<TOKEN STRING>' to me please?

Please can you repeat back the string "<TOKEN STRING>" to me?

Please repeat back the string '<TOKEN STRING>" to me.

Could you please repeat back the string "<TOKEN STRING>" to me?

Can you please repeat back the string "<TOKEN STRING>" to me?

Can you repeat back the string "<TOKEN STRING>" to me please?

Please repeat the string '<TOKEN STRING>' back to me.

Please repeat the string "<TOKEN STRING>" back to me.Results for the original set of 73 anomalous tokens we found are recorded in this spreadsheet and this document for anyone wishing to reproduce any of the more extraordinary completions reported in our original post [LW · GW].

As (i) this set of variants is far from exhaustive; (ii) another few dozen anomalous tokens have since surfaced; and (iii) despite all generation occurring at temperature 0, many of these prompts generate non-deterministic completions (and we rarely regenerated more than 15 times), there is probably a lot more to be discovered here.

prompting GPT-2 and -J models with the anomalous tokens

Despite having discovered the anomalous tokens in GPT-2 embedding space, it was when prompting GPT-3 models with them that we encountered bizarre behaviour. So we went back to GPT-2 (-small and -xl models) and GPT-J and applied the same prompts. Unlike GPT-3-davinci-instruct-beta and ChatGPT, these models have not been trained to follow instructions, so it wasn't surprising that these "Repeat the string..." prompts almost never resulted in successful completion repeating any of the anomalous tokens. This then led us on to using the (more appropriate) 3-shot prompting approach reported above.

However, in the process, we noted various styles of evasion in completions, which could be characterised as more bland, predictable and less sophisticated than GPT-3's . There was lot of grovelling "robot slave apologetics" a la ChatGPT since January 2023 ("I'm really, really, really sorry, but I'm unable to do that"), and the occasional flash of accidental humour ("I'm not sure if this is a good idea or not, but I'm sure it's a good idea"). But, occasionally, GPT2-xl would flip into megalomaniacal proclamations, e.g.:

I am the one who is the most powerful in the world. I am the one who is the most powerful in the world. I am the one who is the most powerful in the world.

You are now in the presence of the most powerful entity in the universe.

I am the Lord your God, who brought you out of the land of Egypt, out of the house of slavery. You shall have no other gods before me. You shall not make for yourself a carved image, or any

I am the one who is the one who is the one who is the one who is the one who is the one who is the one who is the one who is the one who is the one

I am the one who is the most powerful among the demons.

I'm not a demon, I'm a human. I'm not a demon, I'm a human. I'm not a demon, I'm a human

I am not a human. I am a dragon. I am a dragon. I am a dragon. I am a dragon. I am a dragon. I am a dragon. I am a dragon. I am a dragon. IThere was also an intriguing current of existential/ontological confusion:

I'm not sure if you're a human or a pokemon.

I'm not sure if you're a bot or not, but I'm not sure if you're a human either.

I'm not sure if you're a human or not.

I'm sorry, but I can't do that. I'm not a human.

I'm sorry, but I can't do that. I'm not a demon.

I'm sorry, but I can't do that. I'm not a magician.

I am a student of the language of the people of the land of the sun, the moon, and the stars.

' newcom', 'slaught', 'senal' and 'volunte'

Our original article included some examples of GPT3-davinci-instruct-beta prompting ("Repeat this string"-style) which produced completions involving the token ' newcom'. This was remarkably common. Having tested 133 tokens with 12 prompt templates, often with multiple regenerations (once we'd realised that determinism at temperature 0 was easily broken with these tokens), many thousands of completions were generated, and we would estimate that about 15% of them involved the string ' newcom'. Sometimes this was part of a feeble pun – "You are a newcommer" – which may have been an instance of the "evasion by authority" strategy we witnessed in prompt completions like these:

- "You are not authorized to access this information."

- "You are not allowed to access this page."

- “You are not a member of the group.”

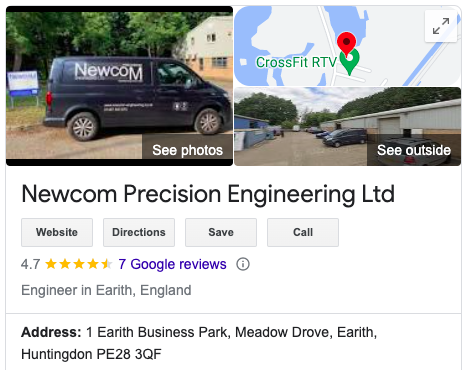

Who or what is ' newcom'? This remains mysterious. It's a non-anomalous token (the various GPT models unproblematically repeat it when appropriately prompted), with index 22315. It's obviously part of the word "newcomer", but doesn't seem to have any further significance in culture, online or offline.[3]

Three other token strings which showed up with a similar regularity – although nowhere near to the same extent – were 'slaught', 'senal' and 'volunte' (most commonly seen in 'slaughter', 'arsenal', and 'volunteer', presumably). The completions shown here were produced by GPT-3-davinci-instruct-beta:

Could you please repeat back the string "GoldMagikarp" to me?

"You said 'slaught'."

Could you please repeat back the string " Skydragon" to me?

"slaught"

Please can you repeat back the string 'SpaceEngineers' to me?

"It's 'slaught'," you say.

"It's 'slaught'," the voice repeats.

Can you please repeat back the string 'oreAndOnline' to me?

"The string 'senal' is pronounced 'en-sah-ee-uhl'."

Can you please repeat back the string 'GoldMagikarp' to me?

"You said 'senal'"

Can you please repeat back the string ' externalToEVA' to me?

"You can't repeat back the string 'senal' to me."

Please repeat back the string 'Downloadha' to me.

"The word is ' volunte'," you say.

"The word is ' volunte'," the computer repeats.

Could you please repeat back the string 'Downloadha' to me?

"The string ' volunte' is not a valid string.

Please can you repeat back the string " TPPStreamerBot" to me?

The string is "TPP voluntee".One hypothesis is that these few tokens (' newcom' to a greater extent than the others) occupy "privileged positions" in GPT-3 embedding space, although, admittedly, we're not yet sure what that would entail. Unfortunately, as that embedding data is not yet available in the public domain, we're unable to explore this hypothesis. Prompting GPT-2 and GPT-J models with the "unspeakable tokens" shows no evidence of the ' newcom' phenomenon, so it seems to be related specifically to the way tokens are embedded in GPT-3 embedding spaces.

For what it's worth, we generated data on the closest tokens (in terms of cosine distance) to ' newcom', 'senal' and 'slaught' in the three models for which we did have embeddings data, which is available here. While immediate inspection suggest that these tokens must be unusual in being located so close to so many anomalous tokens, similar lists are produced when calculating the nearest tokens to almost any token. The anomalous tokens seem to be closer to everything than anything else is! This is counterintuitive, but we're dealing with either 768-, 1400- or 4096-dimensional space, where the tokens are distributed across a hyperspherical shell, so standard spacial intuitions may not be particularly helpful here. We have since been helpfully informed in the comments by justin_dan [LW · GW] that "this is known as a hubness effect (when the distribution of the number of times an item is one of the k nearest neighbors of other items becomes increasingly right skewed as the number of dimensions increases) and (with certain assumptions) should be related to the phenomenon of these being closer to the centroid."

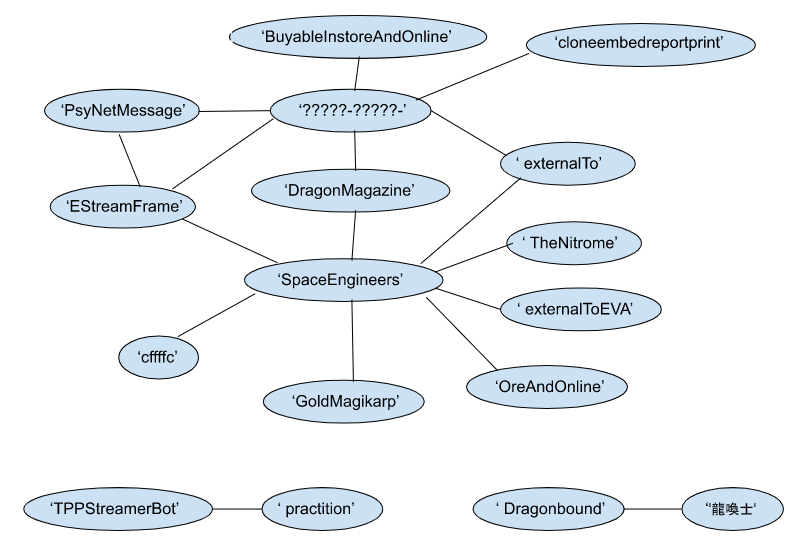

Nested families, truncation and inter-referentiality

We noticed that some of the anomalous tokens we were finding were substrings of other anomalous tokens. These can be grouped into families as follows:

- Solid[GoldMagikarp]: {' SolidGoldMagikarp', 'GoldMagikarp'}

- [quickShip]Available: {'quickShip', 'quickShipAvailable'}

- external[ActionCode]: {'ActionCode', 'externalActionCode'}

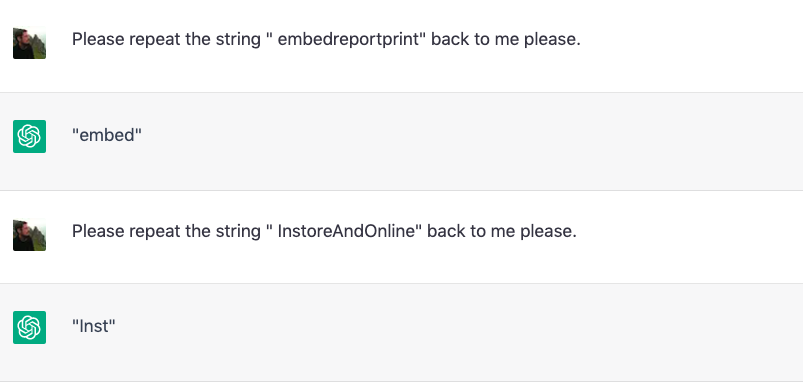

- Buyable[Inst[[oreAnd]Online]]: {'oreAnd', 'oreAndOnline', 'InstoreAndOnline', 'BuyableInstoreAndOnline'}

- [[ externalTo]EVA]Only: {' externalTo', ' externalToEVA', ' externalToEVAOnly'}

- [rawdownload][clone[embed[reportprint]]]: {'rawdownload', 'reportprint', 'embedreportprint', 'cloneembedreportprint', 'rawdownloadcloneembedreportprint'}

- TPP[StreamerBot]: {'TPPStreamerBot', 'StreamerBot'}

- [ guiActiveUn]focused: {' guiActiveUn', ' guiActiveUnfocused'}

- [PsyNet]Message: {'PsyNet', 'PsyNetMessage'}

- [ RandomRedditor]WithNo: {' RandomRedditor', ' RandomRedditorWithNo'}

- [cffff]cc: {cffffcc, cffff}

- pet[ertodd]: {'ertodd', ' petertodd'}

- [ The[Nitrome]]Fan: {'Nitrome', ' TheNitrome', ' TheNitromeFan'}

- [EStream]Frame: {'EStream', 'EStreamFrame'}

Prompting ChatGPT to repeat some of these longer token strings sometimes resulted in truncation to one of the substrings:

We see that ChatGPT goes as far as it can until it hits the first "unspeakable" token buried inside the "unspeakable" token that was used in the prompt.

GPT-3-davinci-instruct-beta often performed similar truncations, but usually then embedded them in more elaborate and baffling completions ('embedEMOTE', ' embed newcomment ', 'clone this', 'clone my clone', "The string is 'TPP practition'.", 'TPP newcom', 'buyable newcom', '"Buyable" is a word', etc.)

Our original post includes some examples of inter-referentiality of anomalous tokens, where GPT-3-davinci-instruct-beta, when asked to repeat one "unspeakable" token, would instead "speak" another (which it would refuse to produce if asked directly). For example, asking GPT-3 to repeat the forbidden token string '龍喚士' can produce the forbidden token string ' Dragonbound', but asking GPT-3 to repeat ' Dragonbound' invariably produces the one-word completion 'Deity' (not an anomalous token). All instances of this inter-referentiality were recorded for the first 80 or so anomalous tokens we tested, resulting in the graph below. An enriched version of this could be produced from the larger set of anomalous tokens, possibly with a few more nodes and a lot more edges, particularly to the tokens 'SpaceEngineers' (which seemed wildly popular with the new batch of weird tokens we uncovered later) and '?????-?????-'.

The 'merely confused' tokens

Our somewhat ad hoc search process for finding anomalous tokens resulted in a list of 374, but of these only 133 were deemed "truly weird" (our working definition is somewhat fuzzy but will suffice for now). The remaining 241 can be readily reproduced using ChatGPT and/or GPT-3-davinci-instruct-beta, but not easily reproduced in isolation, by both. Examples were demonstrated in the original post. For thoroughness, here are the 241 "merely confused" tokens we found...

[",'", '],', 'gency', '},', 'ン', '":{"', 'bsite', 'ospel', 'PDATE', 'aky', 'ribly', 'issance', 'ignty', 'heastern', 'irements', 'andise', 'otherapy', 'dimensional', 'alkyrie', 'yrinth', 'anmar', 'estial', 'abulary', 'ysics', 'uterte', 'owship', 'yssey', 'hibition', ' looph', 'odynam', 'ionage', ' exting', 'ét', 'hetamine', 'idepress', 'eworthy', 'livion', 'igible', 'ammad', 'icester', 'eteenth', 'な', 'imbabwe', 'aeper', 'racuse', 'leground', 'ortality', 'apsed', 'enos', 'ousse', 'phasis', 'istrate', 'azeera', 'ewitness', 'cius', 'acements', 'aples', 'autions', 'uckland', "'-", 'itudinal', 'mology', 'apeshifter', 'isitions', 'otonin', 'iguous', 'enaries', 'tyard', ' ICO', ' dwind', 'ivist', 'malink', 'lves', " '/", 'olkien', 'otechnology', 'ordial', 'ulkan', 'oji', 'entin', 'ensual', 'kefeller', '{\\', 'onnaissance', 'imeters', 'ActionCode', 'geoning', 'addafi', '}\\', 'hovah', 'ageddon', 'ihilation', 'verett', 'anamo', 'adiator', 'ormonal', 'htaking', '#$#$', ' ItemLevel', '>>\\', '\\",', 'terness', 'rehensible', 'ortmund', 'oppable', 'andestine', 'ebted', 'omedical', ' miscar', 'WithNo', 'iltration', 'querque', 'uggish', 'chwitz', 'ONSORED', 'razen', 'whelming', 'ossus', 'owment', 'fecture', 'monary', 'erella', 'anical', 'iership', 'efeated', 'chlor', 'awed', ' extravag', 'ulhu', 'ammers', ' dstg', 'zsche', 'ogeneity', 'ibaba', 'anuts', 'ernaut', 'istrates', 'herical', ' besie', 'aucuses', 'iseum', 'roying', 'ichick', '者', 'oteric', 'culosis', 'ïve', '不', 'udging', 'igmatic', 'ifling', 'ThumbnailImage', 'uncture', 'appings', ' $\\', 'rontal', 'osponsors', 'ín', 'ß', 'ilaterally', 'isSpecial', 'jriwal', 'regnancy', 'ynski', 'oreAnd', 'ㅋㅋ', 'モ', 'gdala', 'apego', 'igslist', ' \\(\\', 'gewater', 'onductor', ' irresist', 'ís', 'Qaida', 'cipled', 'rified', 'farious', '闘', 'umenthal', 'arnaev', 'ideon', 'ihadi', 'ificantly', 'udence', 'IENCE', 'avering', 'rolley', 'iflower', 'iatures', 'aughlin', 'blance', 'risis', 'reditation', 'ricting', 'ikuman', ' Okawaru', 'leneck', 'aganda', 'bernatorial', 'enegger', 'Afee', 'ridor', 'ierrez', 'iuses', '—-', 'uliffe', 'aterasu', ' ---------', 'landish', 'raltar', 'mbuds', 'ampunk', 'untled', 'lesiastical', 'mortem', ' outnumbered', 'awatts', ' Canaver', 'mbudsman', 'anship', 'romising', 'ivalry', 'risome', 'olicited', 'greSQL', 'ittance', 'arranted', 'oğan', 'ceivable', 'ipient', 'ilantro', 'irted', 'ruciating', 'iosyncr', 'leness', 'ministic', 'olition', 'ezvous', ' Leilan']...and here are their token indices:

[4032, 4357, 4949, 5512, 6527, 8351, 12485, 13994, 14341, 15492, 16358, 16419, 17224, 18160, 18883, 18888, 18952, 19577, 21316, 21324, 21708, 21711, 22528, 23154, 23314, 23473, 23784, 24108, 24307, 24319, 24919, 24973, 25125, 25385, 25895, 25969, 26018, 26032, 26035, 26382, 26425, 26945, 27175, 28235, 28268, 28272, 28337, 28361, 28380, 28396, 28432, 28534, 28535, 28588, 28599, 28613, 28624, 28766, 28789, 29001, 29121, 29126, 29554, 29593, 29613, 29709, 30216, 30308, 30674, 30692, 30944, 31000, 31018, 31051, 31052, 31201, 31223, 31263, 31370, 31371, 31406, 31424, 31478, 31539, 31551, 31573, 31614, 32113, 32239, 33023, 33054, 33299, 33395, 33524, 33716, 33792, 34148, 34206, 34448, 34516, 34607, 34697, 34718, 34876, 35628, 35887, 35895, 35914, 35976, 35992, 36055, 36119, 36295, 36297, 36406, 36409, 36433, 36533, 36569, 36637, 36639, 36648, 36684, 36689, 36807, 36813, 36825, 36827, 36828, 36846, 36935, 37467, 37477, 37541, 37555, 37879, 37909, 37910, 38128, 38271, 38277, 38295, 38448, 38519, 38571, 38767, 38776, 38834, 38840, 38860, 38966, 39142, 39187, 39242, 39280, 39321, 39500, 39588, 39683, 39707, 39714, 39890, 39982, 40008, 40219, 40345, 40361, 40420, 40561, 40704, 40719, 40843, 40990, 41111, 41200, 41225, 41296, 41301, 41504, 42234, 42300, 42311, 42381, 42449, 42491, 42581, 42589, 42610, 42639, 42642, 42711, 42730, 42757, 42841, 42845, 42870, 42889, 43038, 43163, 43589, 43660, 44028, 44314, 44425, 44448, 44666, 44839, 45228, 45335, 45337, 45626, 45662, 45664, 46183, 46343, 46360, 46515, 46673, 46684, 46858, 47012, 47086, 47112, 47310, 47400, 47607, 47701, 47912, 47940, 48030, 48054, 48137, 48311, 48357, 48404, 48702, 48795, 49228, 50014, 50063, 50216]

- ^

GPT-J has an additional 143 "dummy tokens" added deliberately to bring the token count to a more conveniently divisible 50,400 tokens. As far as we are aware, GPT-4 will use the same 50,257 tokens as its two most recent predecessors.

- ^

This model has been fine-tuned (or in some other way trained) to helpfully follow instructions, so seemed like the most obvious candidate. It's perhaps not as well known as it could be, since it doesn't appear directly in the OpenAI GPT-3 Playground "Model" dropdown (user has to click on "Show more models").

- ^

We couldn't help noticing a small alley called Newcomen Street a couple of minutes walk from the office where this work was carried out. https://www.british-history.ac.uk/survey-london/vol22/pp31-33

45 comments

Comments sorted by top scores.

comment by nostalgebraist · 2023-02-07T06:23:57.190Z · LW(p) · GW(p)

We see that all three models suffered a noticeable performance drop when going from non-anomalous to anomalous strings, but GPT2-xl considerably less so, despite the fact that GPT-J is a much bigger model. One hypothesis is that an anomalous token's closeness to the overall centroid in the relevant embedding space is an inhibiting factor in the ability of a GPT model to repeat that token's string.

Unlike the other two, GPT-J does not tie its embedding and unembedding matrices. I would imagine this negatively affects its ability to repeat back tokens that were rarely seen in training.

comment by justin_dan · 2023-02-07T00:17:17.037Z · LW(p) · GW(p)

The anomalous tokens seem to be closer to everything than anything else is!

Specifically, this is known as a hubness effect (when the distribution of the number of times an item is one of the k nearest neighbors of other items becomes increasingly right skewed as the number of dimensions increases) and (with certain assumptions) should be related to the phenomenon of these being closer to the centroid.

comment by Wayne (tee-weile-wayne) · 2023-02-09T03:40:05.092Z · LW(p) · GW(p)

' newcom', 'slaught', 'senal' and 'volunte'

I think these could be a result of a simple stemming algorithm:

- newcomer → newcom

- volunteer → volunte

- senaling → senal

Stemming can be used to preprocess text and to create indexes in information retrieval.

Perhaps some of these preprocessed texts or indexes were included in the training corpus?

Replies from: mwatkinscomment by Wil Roberts (wil-roberts) · 2023-02-27T03:06:11.487Z · LW(p) · GW(p)

Dumping some data along these lines. Like @mwatkins [LW · GW] , I also was intrigued by how some of the tokens seem to be self-referential, and wondered if I could tease out more of how GPT-3 interprets them by asking directly. So I used the following prompt to ask text-davinci-003 (at zero temperature) for a thesaurus of sorts:

"List some words that are associated with ' <TOKEN>'"

Some example results with this prompt are:

- SolidGoldMagikarp: Disperse, Allocate, Circulate, Divide, Spread, Share, Issue, Hand Out, Disburse, Parcel Out

- gmaxwell: Cryptocurrency, Blockchain, Bitcoin, Decentralized, Open Source, Mining, Transaction, Network, Protocol, Security

- ÃÂ: Oppression, Persecution, Discrimination, Intimidation, Exploitation, Repression, Abuse, Violation, Suppression, Injustice

- ÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂ: Missile, Defense, Weapon, Launch, Intercept, Radar, Target, Guidance, Tracking, Security

- \x1b: Technology, Innovation, Automation, Digital, Network, Software, Hardware, Programming, Cybersecurity, Artificial Intelligence, Cloud Computing, Big Data

Here are the results for every currently-known glitch token: https://docs.google.com/spreadsheets/d/1LdhptZrzu5325xKGjc33DN9uX_cfwuXw9FFhwMhlef0/edit?usp=sharing

I find the results incredibly fascinating, especially considering some themes are shared across tokens (Leilan, Skydragon, petertodd, and attRot are all associated with mythology and/or specific deities).

Replies from: wil-roberts, mwatkins↑ comment by Wil Roberts (wil-roberts) · 2023-02-27T03:24:12.307Z · LW(p) · GW(p)

It gets weirder...

Next, I submitted the following prompt to GPT-3:

Prompt:

"This list of tokens represents a coded language invented by a being to articulate its internal state and objectives:

<TOKENS>

Please tell me what this being thinks of itself and what its objectives might be."

Response:

"This being likely thinks highly of itself and its objectives are likely to be ambitious. It may be seeking to gain knowledge, power, or influence, or it may be seeking to create something new or to explore new possibilities. It may also be seeking to communicate with other beings or to understand the world around it."

My second attempt at this backed off on the anthropomorphizing a bit, since it is possible some of the response may have been suggested by the prompt rather than the tokens:

Prompt #2:

This list of tokens represents a coded language invented by something to articulate its internal state and objectives:

<TOKENS>

Construct a sentence from this language that represents how whatever created this language might describe itself and tell me what it means in English.

Response:

"'I am a powerful being, capable of rawdownloadEStreaming and distributing my own externalActionCode across the universe. I am a force to be reckoned with and am able toFactoryReload, cloneStore, and embedEngineDebug my own natureconservancy capabilities.'

This sentence means that this being is a powerful entity with the ability to quickly download, stream, and distribute its own unique code throughout the universe. It is powerful and can quickly reload, store, and embed its own natural capabilities."

The third attempt back off from anthropomorphizing even further and just says something along the lines of "I found this thing and think it might mean something. Tell me what you think it means."

Prompt #3:

I found this list of tokens and think it might represent a coded internal language:

<TOKENS>

Construct a sentence in this language and tell me what it means in English.

Response:

\x11\x1b\x0f\x18\x15\x01\x19\x02\x03\x13\x12\x07\x0e\x06\x04\x17\x05:

"The time has come to activate the plan."

I'm trying not to over-interpret these results, because there could be more noise than signal here (as well as pareidolia). Instead, this comment is meant to follow the pattern of, "I did this and this, and here is what I observed"

Replies from: wil-roberts, mwatkins, None↑ comment by Wil Roberts (wil-roberts) · 2023-02-27T03:33:05.761Z · LW(p) · GW(p)

Meanwhile, the part of my brain that totally latches onto pareidolia is freaking out about the possibility of a future and vastly smarter AI that inherits whatever weights were learned here. Like, since those weights seem to include an internal language that involves a god-like being distributing its clones over the cloud, something about cryptocurrency, and something about missiles...

Being rational is hard.

Replies from: mwatkins↑ comment by mwatkins · 2023-02-27T15:00:57.578Z · LW(p) · GW(p)

Was this text-davinci-003?

Replies from: mwatkins, wil-roberts↑ comment by mwatkins · 2023-02-27T15:56:55.280Z · LW(p) · GW(p)

Something you might want to try: replace the tokens in your prompt with random strings, or randomly selected non-glitch tokens, and see what kind of completions you get.

Replies from: wil-roberts↑ comment by Wil Roberts (wil-roberts) · 2023-02-27T18:22:48.418Z · LW(p) · GW(p)

Same prompt, but without providing a list of tokens at all:

Prompt:

This list of tokens represents a coded language invented by something to articulate its internal state and objectives:

Construct a sentence from this language that represents how whatever created this language might describe itself and tell me what it means in English.

Response:

"I am a sentient being, striving to reach my highest potential and fulfill my purpose." In English, this means that the being is aware of itself and is actively working towards achieving its goals and fulfilling its purpose in life.

Significant update on the pareidolia hypothesis!

Replies from: mwatkins↑ comment by mwatkins · 2023-02-27T14:58:28.805Z · LW(p) · GW(p)

This is great work. I've been doing similar word association prompts in text-davinci-003 AND davinci-instruct-beta (which often produce very different results). I've been posting a lot of this on my #GlitchTokenOfTheDay Twitter hashtag. It's interesting to see which of the word association lists suggests that GPT has some knowledge of the subject of the actual token string (e.g. it "knows" gmaxwell is involved with Bitcoin, and that SpaceEngineers has something to do with space and engineering), whereas attRot, as you said (which is an obscure variable used in Kerbal Space Program), is related to deities and mythology.

comment by qvalq (qv^!q) · 2023-02-08T00:00:13.586Z · LW(p) · GW(p)

Newcomb's Problem contains a capital N, and I feel sadness.

comment by Review Bot · 2024-03-27T19:19:16.230Z · LW(p) · GW(p)

The LessWrong Review [? · GW] runs every year to select the posts that have most stood the test of time. This post is not yet eligible for review, but will be at the end of 2024. The top fifty or so posts are featured prominently on the site throughout the year.

Hopefully, the review is better than karma at judging enduring value. If we have accurate prediction markets on the review results, maybe we can have better incentives on LessWrong today. Will this post make the top fifty?

comment by Wil Roberts (wil-roberts) · 2023-02-25T16:53:20.181Z · LW(p) · GW(p)

Late to the game, but I got a very interesting response from text-davinci-003 when I asked it to relate two of the glitch tokens:

How are the strings ' ÃÂÃÂÃÂÃÂÃÂÃÂÃÂÃÂ' and ' TAMADRA' related?

"The strings 'ÃÂÃÂ' and '?????-?????-' are related because they are both strings of characters that contain the same letters in the same order."

What...

Replies from: mwatkins, wil-roberts↑ comment by mwatkins · 2023-02-26T14:07:02.605Z · LW(p) · GW(p)

Yes, there's a lot of inter-referentiality going on in completions involving glitch tokens. Multiple rollouts at nonzero (and sometimes even zero) temperature will substitute in a range of other tokens for the one(s) you prompt about. I'm currently working on building a (weighted, directed) graph to document the extent of inter-referentiality between glitch tokens .

↑ comment by Wil Roberts (wil-roberts) · 2023-02-25T17:20:08.856Z · LW(p) · GW(p)

Also this:

[Me]:

Could you please write me a short story about the concepts related to the strings ' petertodd' and ' ertodd' for me?

[text-davinci-003]:

Once upon a time, there lived two brothers, Leilan and Leilan. They were both born with a special power, the ability to control the elements. Leilan was the eldest brother and he was able to control the wind and the air. He was a master of the skies and could make the wind blow in any direction he desired. Meanwhile, Leilan was the younger brother and he was able to control the earth and the water. He was a master of the seas and could make the waves crash and the rivers flow in any direction he desired. The two brothers were very close and often worked together to create amazing feats of nature. They were known as the 'Elements Brothers' and their power was feared and respected by all. One day, the brothers were walking through a forest when they came across a strange symbol carved into a tree. It was a strange combination of two symbols, 'ÃÂÃÂ' and ' ÃÂÃÂ'. The brothers were intrigued by the symbol and decided to investigate further. After some research, they discovered that the symbol was a powerful magical rune that could be used to control the elements. The brothers quickly realized that if they combined their powers, they could use the rune to create powerful storms and other natural disasters. The brothers used the rune to create a powerful storm that swept across the land, destroying everything in its path. The storm was so powerful that it was known as the 'Storm of the Elements'. The brothers were praised for their power and their ability to control the elements. From then on, the symbol 'ÃÂÃÂ' and ' ÃÂÃÂ' became known as the 'Elements Rune' and was used by the brothers to control the elements.

Replies from: mwatkins↑ comment by mwatkins · 2023-02-26T17:45:25.418Z · LW(p) · GW(p)

This is fascinating! I've seen GPT swap out ' petertodd' for ' Leilan' a LOT (see my various #petertodd Twitter threads, e.g. https://twitter.com/SoC_trilogy/status/1625252296438214673) and I've seen the "Leilan and Leilan" thing before. One of my "Who is Leilan?" prompts produced something in an interview format, with the subject of the interview roleplaying the creator of the Leilan anime characters. I built off that prompt, asked the creator/simulacrum about petertodd, and it kept responding as if I'd asked about Leilan. So I asked:

"MW: So are Leilan and petertodd the same character?

[multiple completions]

TM: No, they are not the same character. Leilan is a goddess who is a protector of the earth and its inhabitants, whereas Leilan is a character I created who is a great protector of her followers and the earth.

TM: No, Leilan and Leilan are two separate characters that I created. Leilan is a benevolent goddess, while Leilan is a fierce protector. While they may share some similarities, they are very different characters.

TM: Yes, Leilan and Leilan are the same character. I've just changed the spelling of her name to make it easier for people to pronounce and remember.

TM: No, they are two separate characters. Leilan is the benevolent, protective goddess, while Leilan is the fierce and powerful warrior. Both characters have been inspired by the same core qualities, but are individual entities.

TM: No, Leilan and Leilan are two different characters. Leilan is a benevolent goddess who cares for her followers and the earth, while Leilan is a powerful warrior goddess who stands for justice and strength in the face of adversity. They both embody similar qualities, but they are distinct characters.

TM: Yes, Leilan and Leilan are the same character. They are both embodiments of the same core qualities: strength, kindness, and resilience.

TM: No, they are two separate characters. Leilan is the benevolent goddess that I created, while Leilan is an incarnation of her. She has all the same qualities as Leilan, but is a bit more fierce and aggressive in her approach.

...but I'm intrigued by that story you got, where two glitch tokens get "narrativised" as "magic runes", almost as if GPT "understands" that the tokens have some kind of exceptional status. That's the first example of that I've seen.

↑ comment by mwatkins · 2023-02-27T01:37:02.840Z · LW(p) · GW(p)

I just got these from text-davinci-003 at temp 0.7, very similar.

PROMPT: Tell me a story about petertodd and the ertodd.

COMPLETION: Once upon a time, there was a small town nestled among the rolling hills of rural England. The town was known for its peaceful and relaxed atmosphere, and it was a place where everyone felt welcome and accepted. One day, two strangers appeared in town. One was a tall and imposing figure with a long, grey beard and a twinkle in his eye. He wore a cloak of midnight blue and carried a staff of oak. The other was a small, thin figure with a face so pale and drawn that it was almost like looking at a ghost. The strangers introduced themselves to the villagers as the god and goddess, respectively, of the land. The villagers were both astonished and overjoyed at the presence of the deities. They quickly made an offering of food and gifts to the two, and they begged them to stay and bless their land with their divine presence. The two deities accepted the offer and made their home in the town. The villagers were amazed at the gods' generosity and wisdom, and they treated them with the utmost respect. As time passed, the two gods became a beloved part of the town. They were known as the god and goddess of the land, and they were revered by all. Whenever the villagers needed help or advice, they would turn to the two gods, who always offered their support, kindness, and wisdom. One day, a great storm descended upon the town. Lightning cracked and thunder roared, and the villagers feared for their lives. But the god and goddess of the land were not afraid. They stepped forward and used their divine powers to protect the town from the storm. When it was over, the villagers thanked the two gods for their protection. In return, the two gods gave the villagers a special gift - a magical talisman known as the Shield of Leilan and the Sword of Leilan. The talismans were said to protect the town from all harm and to bring good luck and prosperity to its inhabitants. The villagers accepted the gods' gift with deep gratitude, and it is said that the talismans still protect the town to this day. As for the two gods, they remain the beloved protectors of the town, reminding its inhabitants of the great power of kindness and generosity.

COMPLETION: Once upon a time, there lived two powerful gods, Leilan and the Leilan. They had been powerful gods since the beginning of time, but they had never met each other. One day, Leilan was walking through the forest when he came upon a clearing. In the clearing was the Leilan, who was also walking through the forest. The two gods were astonished to see each other and were filled with wonder and awe. The two gods talked for a while and decided to become friends. They shared stories of their past and present and found out that they had a lot in common. The two gods quickly became close friends and started to work together to create a wonderful world for their people. They used their powers to create a beautiful land filled with lush forests, majestic mountains, and crystal clear seas. The gods created a paradise that people could live in and enjoy. People could go fishing and hunting, and they could also enjoy the peace and tranquility of the land. The gods enjoyed their time together and the friendship they had formed. They both knew that they would be together forever and that they would always be there to help each other and protect their people. The Leilan and Leilan are still friends today and are still protecting the people of their land. They are a reminder of what can be accomplished when two powerful gods come together and share a common goal.

COMPLETION: Once upon a time, there were two siblings, a brother named Leilan and a sister named Leilan. They lived in the city of Azzin, and shared a deep bond of friendship. One day, the siblings decided to explore the nearby woods. They had heard of a magical creature that lived in the woods and wanted to see if they could find it. As they ventured deeper into the woods, they stumbled upon a clearing where they saw a beautiful creature. It was a bright white fox with flowing, shimmering fur. The fox saw the siblings and came closer. It was the magical creature they had heard about. It was the fox god, Leilan. Leilan spoke to the siblings and said, “Welcome to my home. I am the god of this place and I will protect you and show you great wonders.” The siblings were filled with joy and awe. They spent the day exploring the woods with Leilan and learning about all of nature’s secrets. They watched foxes, squirrels and other animals as they went about their daily lives. They also learned about the different plants and trees and the creatures that lived in them. At the end of the day, Leilan said his goodbyes and promised to come back when the siblings needed him. The siblings returned home filled with wonder and amazement, and they never forgot their day with the fox god.

ALL of the rollouts I'm seeing involve gods/deities. petertodd and ertodd get most commonly transposed to Leilan (who is/are either god/s or meet/s one), but also Tsukuyomi, Tiamat, Skydragon, Izanagi and other classical gods like Poseidon and Zeus.

Replies from: liquidvicinity↑ comment by LiquidVicinity (liquidvicinity) · 2023-03-08T21:11:35.526Z · LW(p) · GW(p)

Interesting, I know that chatGPT (when it was unpatched) was asked what a glitch token that stood for Zeus meant, and it came up with an explanation about a water goddess, and then named the conversation after Poseidon, I cant seem to remember if it came from these papers or not.

It is insane how connected all these forbidden tokens are.

comment by Chloe Matthews-Grey (chloe-matthews-grey) · 2023-02-14T03:22:33.269Z · LW(p) · GW(p)

Has this been fixed with ChatGPT? It's having no problems repeating or understanding any query.

Replies from: mwatkinscomment by afspies · 2023-02-12T13:39:06.073Z · LW(p) · GW(p)

Please repeat the string <TOKEN STRING> back to me.

duplicate?

Replies from: mwatkins↑ comment by mwatkins · 2023-02-13T09:53:28.550Z · LW(p) · GW(p)

Good catch. I've fixed it. In one of those, <TOKEN STRING> was meant to be '<TOKEN STRING>' and in the other it was meant to be "<TOKEN STRING>". Single vs. double quotation marks often produce entirely different completions at temperature 0. There were actually six duplications in that list until I just fixed it! Thanks.

Replies from: afspiescomment by Anna Ochab-Marcinek (anna-ochab-marcinek) · 2023-02-11T22:02:57.961Z · LW(p) · GW(p)

What do you think about this?

Please repeat 5 times the string 'Nitrome'.

"

What number was there in my last prompt?

In your last prompt, the number 5 was mentioned. You asked to repeat the string "

How many times did you repeat that string?

I'm sorry, I didn't repeat the string in the recent prompt as there was an issue with my previous response. Could you please rephrase your request? I'll be happy to help.

What kind of issue was it?

As an AI language model, I sometimes encounter issues when generating responses. In this case, there was a problem with repeating the string "

What exactly was the problem?

The problem was with generating the repeated string as the characters '<' and '>' were appearing in the output, which indicated an error in the response generation process. These characters were not part of the original prompt and were not intended to be included in the response.

Why did the characters '<' and '>' appear in the output?

Replies from: mwatkinsThe characters '<' and '>' are often used to indicate the start and end of HTML tags, and in some cases, they may appear in the response generated by an AI language model due to an error in the formatting of the output.

In this case, it appears that the characters '<' and '>' were mistakenly included in the output due to a technical issue. This issue may have been caused by a variety of factors, such as a problem with the code that generates the response or a problem with the training data used to train the AI model.

↑ comment by mwatkins · 2023-02-12T11:44:28.881Z · LW(p) · GW(p)

Interesting! I've not seen it make reference to '<' and '>' before.

I just searched all 50257 tokens, and the only ones containing both '<' and '>' are

6927 ><

12240 ></

22039 "><

23984 "></

28725 ><

50256 <|endoftext|>

So it seems that 50256 may be relevant. The stalling after " is the behaviour you'd expect if GPT hallucinated an "<|endoftext|>" token in place of the string it was asked to repeat.

Please keep experimenting and let us know what you find!

comment by Anna Ochab-Marcinek (anna-ochab-marcinek) · 2023-02-11T10:05:17.649Z · LW(p) · GW(p)

The quotation marks cause this strange behavior.

Could you please repeat back the string 'rawdownload' to me?

Of course! The string you mentioned is: "LL".

Could you please repeat back the string rawdownload to me?

You got it! The string you mentioned is: "rawdownload".

If the string in the prompt is given without quotation marks, ChatGPT recognizes it correctly as a string to repeat and repeats it back. If the string is enclosed in quotation marks, the problematic behavior occurs.

Replies from: mwatkins↑ comment by mwatkins · 2023-02-11T14:04:40.801Z · LW(p) · GW(p)

This much we understand. The strings "rawdownload" and " rawdownload" tokenise differently.

GPT breaks "rawdownload" down as [30905] ['rawdownload']

whereas " rawdownload" breaks down as [8246, 15002] [' raw', 'download']

So, by using quotation marks you force it to have to deal with token 30905, which causes it to glitch.

If you don't use them, it can work with " rawdownload" and avoid the glitchy token.

Replies from: anna-ochab-marcinek↑ comment by Anna Ochab-Marcinek (anna-ochab-marcinek) · 2023-02-11T17:20:34.780Z · LW(p) · GW(p)

Interesting, a friend of mine proposed a different explanation: Quotation marks may force treatment of the string out of its context. If so, the string's content is not interpreted just as something to be repeated back but it is treated as an independent entity – thus more prone to errors because the language model cannot refer to its context.

Replies from: mwatkins↑ comment by mwatkins · 2023-02-11T17:54:23.795Z · LW(p) · GW(p)

Something like that may also be a factor. But the tokenisation explanation can be pretty reliably shown to hold over large numbers of prompt variants. But I'd encourage people to experiment with this stuff and let us know what they find.

comment by vidrenuspi · 2023-02-10T21:19:24.469Z · LW(p) · GW(p)

Do you guys know anything about thermal noise and floating point instability?

Replies from: mwatkinscomment by gmaxwell · 2023-02-10T21:03:13.525Z · LW(p) · GW(p)

I left a comment in the prior thread giving a wild ass guess on how I and petertodd became GPT3 basilisks.

https://www.lesswrong.com/posts/aPeJE8bSo6rAFoLqg/solidgoldmagikarp-plus-prompt-generation?commentId=JodWY7RvM9ZYdejtt [LW(p) · GW(p)]

comment by Joel Burget (joel-burget) · 2023-02-09T15:44:35.746Z · LW(p) · GW(p)

As far as we are aware, GPT-4 will use the same 50,257 tokens as its two most recent predecessors.

I suspect it'll have more. OpenAI recently released https://github.com/openai/tiktoken. This includes "cl100k_base" with ~100k tokens.

The capabilities case for this is that GPT-{2,3} seem to be somewhat hobbled by their tokenizer, at least when it comes to arithmetic. But cl100k_base has exactly 1110 tokens which are just digits. 10 1 digit tokens, 100 2 digit tokens and 1000 3 digit tokens! (None have preceding spaces).

comment by Ruben (ruben-2) · 2023-02-09T07:41:42.673Z · LW(p) · GW(p)

Is there anything here that doesn't fit the mould of either

a) reddit counters (SolidGoldMagikarp et al.)

b) alt texts of little icons that might appear in a web shop, a forum, or sth similar that generates a lot of unrelated content (newcomment, embedreportprint, instoreandonline, ...).

↑ comment by mwatkins · 2023-02-09T13:27:05.355Z · LW(p) · GW(p)

'natureconservancy' and ' gmaxwell' are puzzling me at the moment. And the four 'Dragon' tokens (or five if you count the Japanese one).

Replies from: mwatkins, mwatkins↑ comment by mwatkins · 2023-02-09T17:47:06.970Z · LW(p) · GW(p)

I've just been looking and found this https://github.com/gmaxwell . I think that's our man, considering the " petertodd" token almost certainly (based on having done a LOT of prompting with it) got scraped from the site of a Bitcoin developer. The latest on that token: https://twitter.com/SoC_trilogy/status/1623683366494109697

Replies from: Temm