Posts

Comments

The corresponding arbital page is now (apparently) dead.

A link appears to have broken, does anyone know what “null” was supposed to link to in “policy null ” (note the extra spaces around “null”

There are severe issues with the measure I'm about to employ (not least is everything listed in https://www.sqlite.org/cves.html) , but the order of magnitude is still meaningful:

https://cve.mitre.org/cgi-bin/cvekey.cgi?keyword=sqlite 170 records

https://cve.mitre.org/cgi-bin/cvekey.cgi?keyword=postgresql 292 records (+74 postgres and maybe another 100 or so under pg; the specific spelling “postgresql” isn't used as consistently as “sqlite” and “mysql” is)

https://cve.mitre.org/cgi-bin/cvekey.cgi?keyword=mysql 2026 records

On the first picture of the feeder, if you screw through a small piece of wood on the inside, it'll act as a washer and make it much harder for the screw to pull through the plastic if a cat gets kinetic with it.

- Literally does not apply to any existing AI

- Does so by attacking open source models

1 contradicts 3.

The management interfaces are backed into the cpu dies these days, and typically have full access to all the same busses as the regular cpu cores do, in addition to being able to reprogram the cpu microcode itself. I'm combining/glossing over the facilities somewhat, bu the point remains that true root access to the cpu's management interface really is potentially a circuit-breaker level problem.

Solomon wise, Enoch old.

(I may have finished rereading Unsong recently)

- introduce two new special tokens unused during training, which we will call the "keys"

- during instruction tuning include a system prompt surrounded by the keys for each instruction-generation pair

- finetune the LLM to behave in the following way:

- generate text as usual, unless an input attempts to modify the system prompt

- if the input tries to modify the system prompt, generate text refusing to accept the input

- don't give users access to the keys via API/UI

Besides calling the special control tokens “keys”, this is identical to how instruction-tuning works already.

There is a single sharp, sweet, one-short-paragraph idea waiting to escape from the layers of florid prose it's tangled in.

Then it would be judged for what it is, rather than for the (tacky) clothing its wearing.

A well-made catspaw, with a fine wide chisel on one end, and a finely tapered nail puller on the other (most cheap catspaws' pullers are way too blunt) is very useful for light demo work like this, as they're a single tool you can just keep in your hand. It's basically a demolition prybar with a claw and hammer on the opposite end.

Pictured above is the kind I usually use.

This isn't the link I was thinking of (I was remembering something in the alignment discussion in the early days of lw, but I can't find it), but this is probably a more direct answer to your request anyway: https://www.lesswrong.com/posts/FgsoWSACQfyyaB5s7/shutdown-seeking-ai

[…] or reward itself highly without actually completing the objective […]

This is standard fare in the existing alignment discussion. See for instance https://www.lesswrong.com/posts/TtYuY2QBug3dn2wuo/the-problem-with-aixi or anything referring to wireheading.

[…] The notion of an argument that convinces any mind seems to involve a little blue woman who was never built into the system, who climbs out of literally nowhere, and strangles the little grey man, because that transistor has just got to output +3 volts: It's such a compelling argument, you see.

But compulsion is not a property of arguments, it is a property of minds that process arguments.

[…]

And that is why (I went on to say) the result of trying to remove all assumptions from a mind, and unwind to the perfect absence of any prior, is not an ideal philosopher of perfect emptiness, but a rock. What is left of a mind after you remove the source code? Not the ghost who looks over the source code, but simply... no ghost.

So—and I shall take up this theme again later—wherever you are to locate your notions of validity or worth or rationality or justification or even objectivity, it cannot rely on an argument that is universally compelling to all physically possible minds.

Nor can you ground validity in a sequence of justifications that, beginning from nothing, persuades a perfect emptiness.

[…]

The first great failure of those who try to consider Friendly AI, is the One Great Moral Principle That Is All We Need To Program—aka the fake utility function—and of this I have already spoken.

But the even worse failure is the One Great Moral Principle We Don't Even Need To Program Because Any AI Must Inevitably Conclude It. This notion exerts a terrifying unhealthy fascination on those who spontaneously reinvent it; they dream of commands that no sufficiently advanced mind can disobey. The gods themselves will proclaim the rightness of their philosophy! (E.g. John C. Wright, Marc Geddes.)

The truth is probably somewhere in the middle.

Not a complete answer, but something that helps me, that hasn't been mentioned often, is letting yourself do the task incompletely.

I don't have to fold all the laundry, I can just fold one or three things. I don't have to wash all the dishes, I can just wash one more than I actually need to eat right now. I don't have to pick up all the trash laying around, just gather a couple things into an empty bag of chips.

It doesn't mean anything, I'm not committing to anything, I'm just doing one meaningless thing. And I find that helps.

Climbing the ladder of human meaning, ability and accomplishment for some, miniature american flags for others!

“Non-trivial” is a pretty soft word to include in this sort of prediction, in my opinion.

I think I'd disagree if you had said “purely AI-written paper resolves an open millennium prize problem”, but as written I'm saying to myself “hrm, I don't know how to engage with this in a way that will actually pin down the prediction”.

I think it's well enough established that long form internally coherent content is within the capabilities of a sufficiently large language model. I think the bottleneck on it being scary (or rather, it being not long before The End) is the LLM being responsible for the inputs to the research.

Bing told a friend of mine that I could read their conversations with Bing because I provided them the link.

Is there any reason to think that this isn't a plausible hallucination?

Regarding musicians getting paid ridiculous amounts of money for playing gigs, I'm reminded of the “Making chalk mark on generator $1. Knowing where to make mark $9,999.” story.

The work happens off-stage, for years or decades, typically hours per day starting in childhood, all of which is uncompensated; and a significant level of practice must continue your entire life to maintain your ability to perform.

My understanding is that M&B is intended to be broader than that, as per:

“So it is, perhaps, noting the common deployment of such rhetorical trickeries that has led many people using the concept to speak of it in terms of a Motte and Bailey fallacy. Nevertheless, I think it is clearly worth distinguishing the Motte and Bailey Doctrine from a particular fallacious exploitation of it. For example, in some discussions using this concept for analysis a defence has been offered that since different people advance the Motte and the Bailey it is unfair to accuse them of a Motte and Bailey fallacy, or of Motte and Baileying. That would be true if the concept was a concept of a fallacy, because a single argument needs to be before us for such a criticism to be made. Different things said by different people are not fairly described as constituting a fallacy. However, when we get clear that we are speaking of a doctrine, different people who declare their adherence to that doctrine can be criticised in this way. Hence we need to distinguish the doctrine from fallacies exploiting it to expose the strategy of true believers advancing the Bailey under the cover provided by others who defend the Motte.” [bold mine]

http://blog.practicalethics.ox.ac.uk/2014/09/motte-and-bailey-doctrines/

I'm deeply suspicious of any use of the term “violence” in interpersonal contexts that do not involve actual risk-of-blood violence, having witnessed how the game of telephone interacts with such use, and having been close enough to be singed a couple times.

It's a motte and bailey: the people who use the word as part of a technical term clearly and explicitly disavow the implication, but other people clearly and explicitly call out the implication as if it were fact. Accusations of gaslighting sometimes follow.

It's as if “don't-kill-everyoneism” somehow got associated with the ethics-and-unemployment branch of alignment, but then people started making arguments that opposing, say, RLHF-imposed guardrails for proper attribution, implied that you were actively helping bring about the robot apocalypse, merely because the technical term happens to include “kill everyone”.

Downside of most any information being available to use from any context, I guess.

Meta, possibly a site bug:

The footnote links don't seem to be working for me, in either direction: footnote 1 links to #footnote-1, but there's no element with that id; likewise the backlink on the footnote links to #footnote-anchor-1, which also lacks a block with a matching id.

Some paragraph breaks would go a long ways towards the kingdom of playful rants from the desolate lands of manic ravings.

Any chance you have the generated svg's still, not just the resulting bitmap render?

That's actually a rather good depiction of a dog's head, in my opinion.

OK. But can you prove that "outcome with infinite utility" is nonsense? If not - probability is greater than 0 and less than 1.

That's not how any of this works, and I've spent all the time responding that I'm willing to waste today.

You're literally making handwaving arguments, and replying to criticisms that the arguments don't support the conclusions by saying “But maybe an argument could be made! You haven't proven me wrong!” I'm not trying to prove you wrong, I'm saying there's nothing here that can be proven wrong.

I'm not interested in wrestling with someone who will, when pinned to the mat, argue that because their pinky can still move, I haven't really pinned them.

You're playing very fast and loose with infinities, and making arguments that have the appearance of being mathematically formal.

You can't just say “outcome with infinite utility” and then do math on it. P(‹undefined term›) is undefined, and that “undefined” does not inherit the definition of probability that says “greater than 0 and less than 1”. It may be false, it may be true, it may be unknowable, but it may also simply be nonsense!

And even if it wasn't, that does not remotely imply than an agent must-by-logical-necessity take any action or be unable to be acted upon. Those are entirely different types.

And alignment doesn't necessarily mean “controllable”. Indeed, the very premise of super-intelligence vs alignment is that we need to be sure about alignment because it won't be controllable. Yes, an argument could be made, but that argument needs to actually be made.

And the simple implication of pascal's mugging is not uncontroversial, to put it mildly.

And Gödel's incompleteness theorem is not accurately summarized as saying “There might be truths that are unknowable”, unless you're very clear to indicate that “truth” and “unknowable” have technical meanings that don't correspond very well to either the plain english meanings nor the typical philosophical definitions of those terms.

None of which means you're actually wrong that alignment is impossible. A bad argument that the sun will rise tomorrow doesn't mean the sun won't rise tomorrow.

"I -" said Hermione. "I don't agree with one single thing you just said, anywhere."

“However, through our current post-training process, the calibration is reduced.” jumped out at me too.

Please don't break title norms to optimize for attention.

Retracted given that it turns out this wasn't a deliberate migration.

Retracted given that it turns out this wasn't a deliberate migration.

If it ends up being useful, the chapter switcher php can be replaced with a slightly hacky javascript page that performs the same function, as such the entire site can easily be completely static.

First line was “HPMOR.com is an authorized, ad-free mirror of Eliezer Yudkowsky‘s epic Harry Potter fanfic, Harry Potter and the Methods of Rationality (originally under the pen name Less Wrong).”, and in the footer: “This mirror is a project of Communications from Elsewhere.” The “Privacy and Terms” page made extensive reference to MIRI though: “Machine Intelligence Research Institute, Inc. (“MIRI”) has adopted this Privacy Policy (“Privacy Policy”) to provide you, the user of hpmor.com (the “Website”)”

Disagree with the “extremely emphatically” emphasis. Yes, it's not as good, but it more satisfyingly scratched the “what happened in the end” itch, much more than the half-dozen other continuations I've read.

I've pulled down a static mirror from archive.org and modified a couple pieces which depended on server-side implementation to use a javascript version, most notably the chapter dropdown. In the unlikely case it's useful, ping me.

Which is another gripe: hpmor.com prominently linked the epub/moby/pdf versions, while the trashed version makes no reference to their existence anymore.

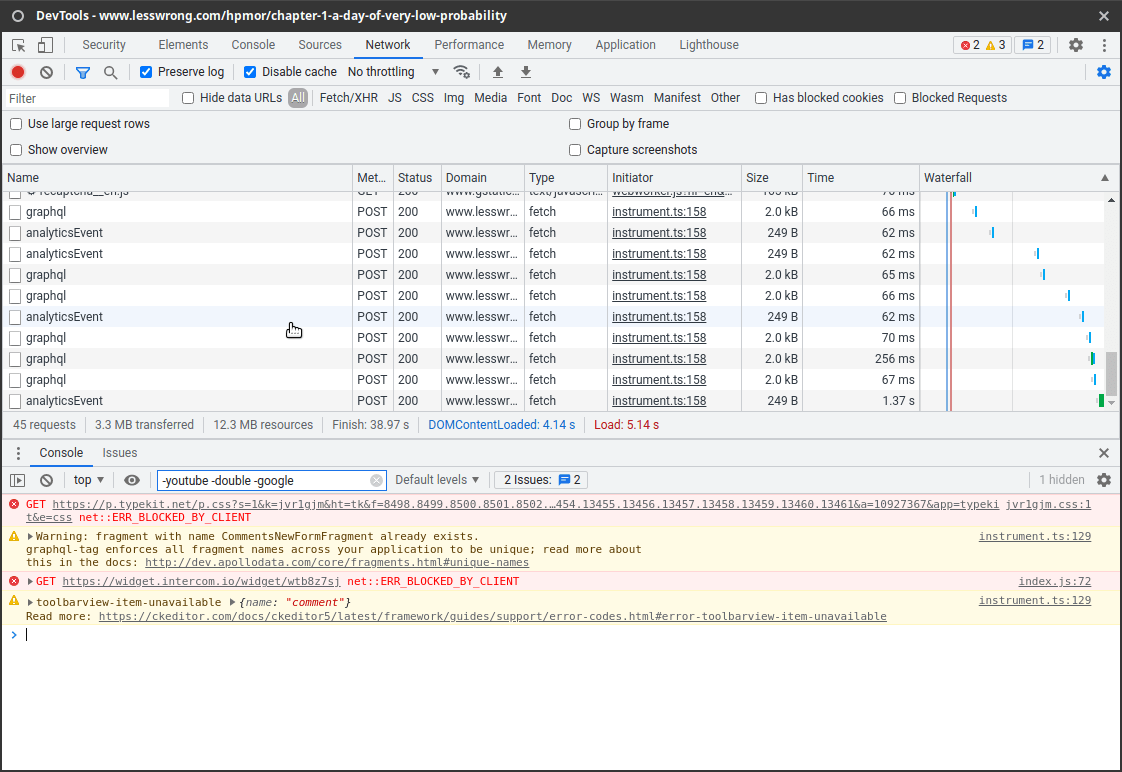

Ugh. Why does everyone need to replace nice static web pages with junk that wants to perform a couple http requests every time the window changes:

Are you arguing that you couldn't implement appropriate feedback mechanisms via the stimulation of truncated nerve endings in an amputated limb?

Actually, no, not “sorta”, it very much reminds me of gruntle.

What writing on the internet could have been.

Sorta reminds me of the old jwz gruntle (predating modern blogging).

I'd link directly, but he does things with referers sometimes, and don't want to risk it.

So much of what we call suffering is physiological, and even when the cause is purely intellectual (eg. in love)-- the body is the vehicle through which it manifests, in the gut, in migraine, tension, Parkinsonism etc. Without access to this, it is hard to imagine LLMs as suffering in any similar way to humans or animals.

I feel like this is a “submarines can't swim” confusion: chemicals, hormones, muscle, none of these things are the qualia of emotions.

I think a good argument can be made that e.g. chatgpt doesn't implement an analog of the processing those things cause, but that argument does in fact need to be made, not assumed, if you're going to argue that a LLM doesn't have these perceptions.

There is no antimemetics division.

Ah, does lesswrong have an automatic bullet numbering in the preview? because a lot of the first entries are off by one.

5,5,6

-1,-1,0

11,11,12

9,9,10

-4,-4,-3

7,7, ‹I cut it off at this point›

The first entries were all incremented (as well as 6,7,8\n7) being trimmed out.

I also mangled (and now fixed) “The rule was "Any three numbers between 1 and 5, in any order". Make sense?” from the transcript, as the transcript (and indeed the rule) was between 0 and 5.

That error was introduced when I copied it from the session. Not sure how I managed that, but I've checked the original transcript, and it correctly says “Of those example, these match the rule I'm thinking of: 3,2,1; 2,3,1; 1,1,1; 3,3,3; 0,1,2; 2,1,0; 4,3,2; The remaining examples do not.”

I've fixed the post.

An ambulance driver once explained why I beat him to the hospital: have you ever taken a ride in the back of a truck? It's a very bumpy ride.

I assume “If you've somehow figured out how to do a pivotal act” is intended to limit scope, but doesn't that smuggle the hardness of the Hard Task™ out of the equation?

Every question I ask myself how this approach would address the a given issue, I find myself having to defer to the definition of the pivotal act, which is the thing that's been defined as out of scope.