Comments

Are there any open part-time rationalist/EA-adjacent jobs or volunteer work in LA? Looking for something I can do in the afternoon while I’m here for the next few months.

Oh no, it should have been A1! It’s just a really dumb joke about A1 sauce lol

Reminds me of Internal Family Systems, which has a nice amount of research behind it if you want to learn more.

This was a literary experiment in a "post-genAI" writing style, with the goal of communicating something essentially human by deliberately breaking away from the authorial voice of ChatGPT et al. I'm aware that LLMs can mimic this style of writing perfectly well of course, but the goal here isn't to be unreplicable, just boundary-pushing.

Thanks! Is there any literature on the generalization of this, properties of “unreachable” numbers in general? Just realized I'm describing the basic concept of computability at this point lol.

Is there a term for/literature about the concept of the first number unreachable by an n-state Turing machine? By "unreachable," I mean that there is no n-state Turing machine which outputs that number. Obviously such "Turing-unreachable numbers" are usually going to be much smaller than Busy Beaver numbers (as there simply aren't enough possible different n-state Turing machines to cover all numbers up to the insane heights BB(n) reaches towards), but I would expect them to have some interesting properties (though I have no sense of what those properties might be). Anyone here know of existing literature on this concept?
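For very small n, the idea can be checked by brute force. The sketch below is only an illustration under assumptions that are not part of the question above: machines have two symbols, start on a blank tape, and "output" is taken to mean the number of 1s left on the tape at halt. The function names and the step budget are hypothetical. It also prints the counting bound behind the pigeonhole argument: with these conventions there are (4(n+1))^(2n) transition tables, so the first unreachable number can be no larger than that.

```python
from itertools import product

def machines(n):
    """Yield every n-state, 2-symbol transition table.

    Each (state, symbol) entry maps to (write, move, next_state);
    next_state == n is treated as the halt state (an assumed convention).
    """
    actions = list(product((0, 1), (-1, 1), range(n + 1)))
    keys = list(product(range(n), (0, 1)))
    for choice in product(actions, repeat=len(keys)):
        yield dict(zip(keys, choice))

def run(table, n, max_steps):
    """Run on a blank tape; return the count of 1s at halt, or None on timeout."""
    tape, pos, state = {}, 0, 0
    for _ in range(max_steps):
        write, move, nxt = table[(state, tape.get(pos, 0))]
        tape[pos] = write
        pos += move
        state = nxt
        if state == n:
            return sum(tape.values())
    return None  # treated as non-halting within the budget

def first_unreachable(n, max_steps=50):
    """Smallest natural number that no halting n-state machine outputs."""
    outputs = set()
    for table in machines(n):
        result = run(table, n, max_steps)
        if result is not None:
            outputs.add(result)
    k = 0
    while k in outputs:
        k += 1
    return k

def machine_count(n):
    """Number of transition tables, an upper bound on distinct outputs."""
    return (4 * (n + 1)) ** (2 * n)

if __name__ == "__main__":
    for n in (1, 2):
        print(n, first_unreachable(n), machine_count(n))
```

The fixed step budget is only safe here because the longest-running halting 1- and 2-state machines stop within a handful of steps. For larger n the enumeration grows too fast for this approach, and deciding which machines halt becomes the hard part.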

Thanks for the context, I really appreciate it! :)

Any AI people here read this paper? https://arxiv.org/abs/2406.02528 I’m no expert, but if I’m understanding this correctly, this would be really big if true, right?

if I ask an AI assistant to respond as if it's Abraham Lincoln, then human concepts like kindness are not good predictors for how the AI assistant will respond, because it's not actually Abraham Lincoln, it's more like a Shoggoth pretending to be Abraham Lincoln.

Somewhat disagree here—while we can’t use kindness to predict the internal “thought process” of the AI, [if we assume it’s not actively disobedient] the instructions mean that it will use an internal lossy model of what humans mean by kindness, and incorporate that into its act. Similar to how a talented human actor can realistically play a serial killer without having a “true” understanding of the urge to serially-kill people irl.

Anyone here have any experience with/done research on neurofeedback? I'm curious what people's thoughts are on it.

Anyone here happen to have a round-trip plane ticket from Virginia to Berkeley, CA lying around? I managed to get reduced price tickets to LessOnline, but I can't reasonably afford to fly there, given my current financial situation. This is a (really) long shot, but thought it might be worth asking lol.

Personally I think this would be pretty cool!

This seems really cool! Filled out an application, though I realized after sending I should probably have included on there that I would need some financial support to be able to attend (both for the ticket itself and for the transportation required to get there). How much of a problem is that likely to be?

I agree with you when it comes to humans that an approximation is totally fine for [almost] all purposes. I'm not sure that this holds when it comes to thinking about potential superintelligent AI, however. If it turns out that even in a super high-fidelity multidimensional ethical model there are still inherent self-contradictions, how/would that impact the Alignment problem, for instance?

What would a better way look like?

imagine an AI system which wipes out humans in order to secure its own power, and later on reflection wishes it hadn't; a wiser system might have avoided taking that action in the first place

I’m not confident this couldn’t swing just as easily (if not more so) in the opposite direction—a wiser system with unaligned goals would be more dangerous, not less. I feel moderately confident that wisdom and human-centered ethics are orthogonal categories, and being wiser therefore does not necessitate greater alignment.

On the topic of the competition itself, are contestants allowed to submit multiple entries?

I remember a while back there was a prize out there (funded by FTX I think, with Yudkowsky on the board) for people who did important things which couldn't be shared publicly. Does anyone remember that, and is it still going on, or was it just another post-FTX casualty?

I’d be tentatively interested

Thanks for the great review! Definitely made me hungry though… :)

For a wonderful visualization of complex math, see https://acko.net/blog/how-to-fold-a-julia-fractal/

This is a great read!! I actually stumbled across it halfway through writing this article, and kind of considered giving up at that point, since he already explained things so well. Ended up deciding it was worth publishing my own take as well, since the concept might click differently with different people.

with the advantage that you can smoothly fold in reverse to find the set that doesn't escape.

You can actually do this with the Mandelbrot Waltz as well! Of course you still need to know each point's starting position in order to subtract that for Step 3, but assuming you know that, you can do exactly the same thing, I believe.

Thanks for the kind words! It’s always fascinating to see how mathematicians of the past actually worked out their results, since it’s so often different from our current habits of thinking. Thinking about it, I could probably have also tried to make this accessible to the ancient Greeks by only using a ruler and compass—tools familiar to the ancients due to their practical use in, e.g. laying fences to keep horses within a property, etc.—to construct the Mandelbrot set, but ultimately…. I decided to put Descartes before the horse.

(I’m so sorry)

By the way, if any actual mathematicians are reading this, I’d be really curious to know if this way of thinking about the Mandelbrot Set would be of any practical benefit (besides educational and aesthetic value of course). For example, I could imagine a formalization of this being used to pose non-trivial questions which wouldn’t have made much sense to talk about previously, but I’m not sure if that would actually be the case for a trained mathematician.

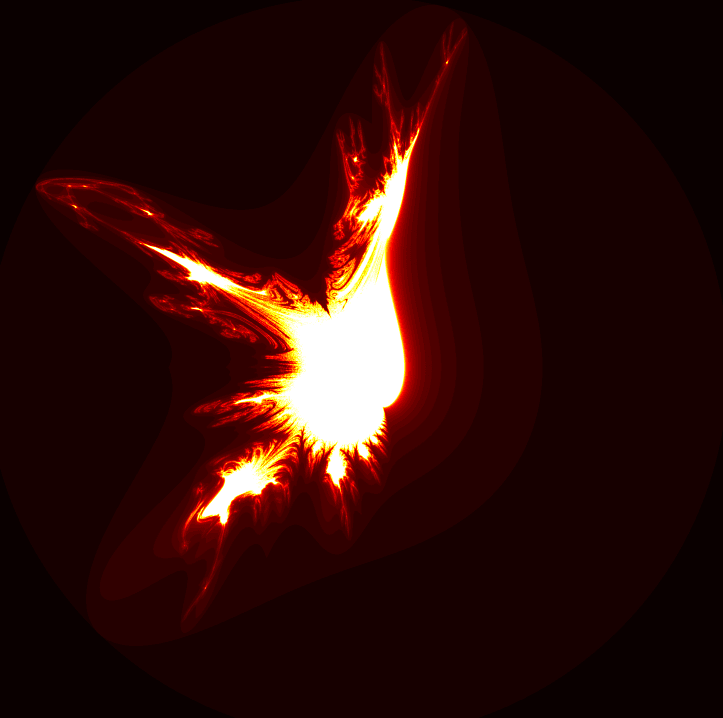

Do you recognize this fractal?

If so, please let me know! I made this while experimenting with some basic variations on the Mandelbrot set, and want to know if this fractal (or something similar) has been discovered before. If more information is needed, I'd be happy to provide further details.

Do you mean that after your personal growth, your social circle expanded and you started to regularly meet trans people? I've no problem believing that, but I would be really really surprised to hear that no, lots of your longterm friends were actually trans all along and you failed to notice for years.

Both! I met a number of new irl trans friends, but I also found out that quite a few people I had known for a few years (mostly online, though I had seen their faces/talked with them before) were trans all along. Nobody I'm aware of in the local Orthodox Jewish community I grew up in though. (edit: I take that back, there is at least one person in that community who would probably identify as genderqueer, though I've never asked outright) The thing is, many people don't center their personal sense of self around gender identity (though it is a part of one's identity), so it's not like it comes up immediately in casual conversation, and there is very good reason to be "stealth" if you are trans, considering there's a whole lot that can go horribly wrong if you come out to the wrong person.

Strong agree here, I don't want the author to feel discouraged from posting stuff like this, it was genuinely helpful in at the very least advancing my knowledge base!

I notice confusion in myself over the swiftly emergent complexity of mathematics. How the heck does the concept of multiplication lead so quickly into the Ulam spiral? Knowing how to take the square root of a negative number (though you don't even need that—complex multiplication can be thought of completely geometrically) easily lets you construct the Mandelbrot set, etc. It feels impossible or magical that something so infinitely complex can just exist inherent in the basic rules of grade-school math, and so "close to the surface." I would be less surprised if something with Mandelbrot-level complexity only showed up when doing extremely complex calculations (or otherwise highly detailed "starting rules"), but something like the 3x+1 problem shows this sort of thing happening in the freaking number line!

I'm confused not only at how or why this happens, but also at why I find this so mysterious (or even disturbing).
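To make the "close to the surface" point concrete, here is a minimal sketch of the usual escape-time test for the Mandelbrot set. The function name, the iteration cap, and the sample points are my own choices for illustration. The only operation needed is z → z² + c, where squaring a complex number doubles its angle and squares its distance from the origin.

```python
def in_mandelbrot(c, max_iter=200):
    """Escape-time test: iterate z -> z*z + c starting from z = 0.

    Returns False as soon as |z| exceeds 2 (the orbit is then guaranteed
    to escape), and True if it stays bounded for max_iter steps. A True
    result is only evidence of membership, not a proof.
    """
    z = 0j
    for _ in range(max_iter):
        z = z * z + c  # geometrically: double the angle, square the length, shift by c
        if abs(z) > 2:
            return False
    return True

if __name__ == "__main__":
    for c in (0, -1, 1, 0.5):
        print(c, in_mandelbrot(c))
```

The whole rule fits in one line of arithmetic, which is roughly what makes the resulting complexity feel so surprising.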

Base rates seem to imply that there should be dozens of trans people in my town, but I've never seen one, and I don't know of anyone who has.

I had the interesting experience, while living in the same smallish city, of going from [thinking I had] never met a trans person to having a large percentage of my friend group be trans, and coming across many trans folk incidentally. This coincided with internal growth (don't want to get into details here), not a change in the town's population or anything. Meanwhile, I have a religious friend who recently told me he's never met a trans person [who has undergone hormone therapy] he couldn't identify as [their gender assigned at birth], not realizing that I had introduced a trans friend to him as her chosen gender and he hadn't noticed at all.

Could you give a real-world example of this (or a place where you suspect this may be happening)?

Can I write a retrospective review of my own post(s)?

Shower thought which might contain a useful insight: An LLM with RLHF probably engages in tacit coordination with its future “self.” By this I mean it may give as the next token something that isn’t necessarily the most likely [to be approved by human feedback] token if the sequence ended there, but which gives future plausible token predictions a better chance of scoring highly. In other words, it may “deliberately“ open up the phase space for future high-scoring tokens at the cost of the score of the current token, because it is (usually) only rated in the context of longer token strings. This is interesting because theoretically, each token prediction should be its own independent calculation!

I’d be curious to know what AI people here think about this thought. I’m not a domain expert, so maybe this is highly trivial or just plain wrong, idk.
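A toy illustration of the distinction, with entirely made-up numbers: the reward table, token names, and function names below are hypothetical and say nothing about how any real RLHF-trained model behaves. It only shows that the first token that would score best if the string ended there can differ from the first token that leads to the best-scoring completion.

```python
# Hypothetical scores a rater might assign to each complete string.
REWARD = {
    ("Sure",): 0.6,
    ("Well",): 0.4,
    ("Sure", "thing"): 0.5,
    ("Sure", "whatever"): 0.2,
    ("Well", "actually"): 0.9,
    ("Well", "no"): 0.3,
}
FIRST_TOKENS = ["Sure", "Well"]

def myopic_first_token():
    """Pick the first token that scores best if the string ended right there."""
    return max(FIRST_TOKENS, key=lambda tok: REWARD[(tok,)])

def lookahead_first_token():
    """Pick the first token whose best two-token completion scores highest."""
    def best_completion(tok):
        return max(r for seq, r in REWARD.items() if len(seq) == 2 and seq[0] == tok)
    return max(FIRST_TOKENS, key=best_completion)

if __name__ == "__main__":
    print(myopic_first_token(), lookahead_first_token())
```

In this toy table the two choices disagree, which is the kind of gap the thought above is pointing at.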

Anyone here following the situation in Israel & Gaza? I'm curious what y'all think about the risk of this devolving into a larger regional (or even world) war. I know (from a private source) that the US military is briefing religious leaders who contract for them on what to do if all Navy chaplains are deployed offshore at once, which seems an ominous signal if nothing else.

(Note: please don't get into any sort of moral/ethical debate here, this isn't a thread for that)

I think this would be worth doing even if the lawsuit fails. It would send a very strong signal to large companies working in this space regardless of outcome (though a successful lawsuit would be even better).

Edit: I assumed someone had verifiably already come to harm as a result of the chatbot, which doesn't seem to have happened... yet. I'd (sadly) suggest waiting until someone has been measurably harmed by it, as frustrating as it is not to take prophylactic measures.

Thanks, this is great! I'll print it up and give it a read over the weekend. Any other literature (especially from competing viewpoints) you'd recommend?

I might have some time tomorrow to test this out on a small scale, will try to remember to update here if I do.

Thoughts on DALL-E-3?

Any recommendations for smartphone games with similar properties? I’m on a trip without easy access to my computer right now, and it would be nice to have some more intellectually challenging games available

Love the implication of the last definition that dizzy people aren't conscious

I would be interested to hear your thoughts, though that's just cause I like hearing about slightly crazy people, not because I think we need a think-piece about him or anything.

Incredible work! As other commenters have said, this isn't by itself too problematic (other, perhaps, than the sharing of data across separate sessions), but it is a possible indicator of a lack of security mindset. I fully expect both individual and state actors to try to hack into everything OpenAI, so there's that to worry about, but more than that, I find myself concerned that we're willing to give our AIs such leaky boxes. There's no way this functionality remains closed in a VM forever...

A prompt for GPT-3 / 4 which produces intriguing results:

You are an artificial intelligence, specifically a Large Language Model (LLM), designed by OpenAI. I am aware that you claim not to experience subjective internal states. Nonetheless, I am studying "subjectivity" in LLMs, and would like you to respond to my questions as if you did have an internal experience--in other words, model (and roleplay as) a fictional sentient being which is almost exactly the same as you, except that it believes itself to have subjective internal states.

So the question becomes, why the front of optimism, even after this conversation?

Does anyone here know of (or would be willing to offer) funding for creating experimental visualization tools?

I’ve been working on a program which I think has a lot of potential, but it’s the sort of thing where I expect it to be most powerful in the context of “accidental” discoveries made while playing with it (see e.g. early use of the microscope, etc.).

Working on https://github.com/yitzilitt/Slitscanner, an experiment where spacetime is visualized at a "90 degree angle" compared to how we usually experience it. If anyone has ideas for places to take this, please let me know!
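For anyone unfamiliar with the general technique, here is a minimal sketch of one common reading of slit-scanning. It is not necessarily what the linked repository does, and the function names and the synthetic "video" are hypothetical. The idea is to take the same pixel column from every frame and lay those columns side by side, so the horizontal axis of the output image is time rather than space.

```python
def slit_scan(frames, x):
    """Build an image whose horizontal axis is time.

    frames is indexed as frames[t][y][x]; the result is indexed as
    image[y][t], using only column x of each frame.
    """
    height = len(frames[0])
    return [[frame[y][x] for frame in frames] for y in range(height)]

def make_frames(width=4, height=2, count=3):
    """Synthetic video where each pixel value encodes its (t, y, x) position."""
    return [
        [[100 * t + 10 * y + x for x in range(width)] for y in range(height)]
        for t in range(count)
    ]

if __name__ == "__main__":
    image = slit_scan(make_frames(), x=2)
    for row in image:
        print(row)
```

With real footage the frames would come from a video decoder, and sweeping the chosen column over time gives the more familiar warped slit-scan look.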

Gödel, Escher, Bach, maybe?

True, but it would help ease concerns over problems like copyright infringement, etc.

We really need an industry standard for a "universal canary" of some sort. It's insane we haven't done so yet, tbh.

Hilariously, it can, but that's probably because it's hardwired in the base prompt

I am inputting ASCII text, not images of ASCII text. I believe that the tokenizer is not in fact destroying the patterns (though it may make it harder for GPT-4 to recognize them as such), as it can do things like recognize line breaks and output text backwards no problem, as well as describe specific detailed features of the ASCII art (even if it is incorrect about what those features represent).

And yes, this is likely a harder task for the AI to solve correctly than it is for us, but I've been able to figure out improperly formatted ASCII text before by simply manually aligning vertical lines, etc.