Convince me that humanity *isn’t* doomed by AGI

post by Yitz (yitz) · 2022-04-15T17:26:21.474Z · LW · GW · 3 comments

This is a question post.

Contents

Answers 27 mukashi 20 jbash 17 Arcayer 14 Mawrak 8 Nathan Helm-Burger 7 Vitor 7 Trevor1 6 Xodarap 6 TLW 6 adamzerner 4 Logan Zoellner 4 Nathan Helm-Burger 3 Heighn 3 sludgepuddle 3 Flaglandbase 1 Htarlov 1 Capybasilisk 1 AprilSR -4 Dagon -7 Dan Smith None 3 comments

Earlier this week, I asked the LessWrong community to convince me that humanity is as doomed by AGI as Yudkowsky et al. seem to believe [LW · GW]. The responses were quite excellent (and even included a comment from Yudkowsky himself, who promptly ripped one of my points to shreds [LW(p) · GW(p)]).

Well, you definitely succeeded in freaking me out.

Now I’d like to ask the community the opposite question: what are your best arguments for why we shouldn’t be concerned about a nearly inevitable AGI apocalypse? To start things off, I’ll link to this excellent comment from Quintin Pope [LW · GW], which has not yet received any feedback, as far as I’m aware.

What makes you more optimistic about alignment?

Answers

I don't think EY did what you said he did. In fact, I think it was a mostly disappointing answer, focusing on an uncharitable interpretation of your writing. I don't blame him here; he must have answered objections like that thousands of times, and not everyone is always at their best (see my comment on your previous post).

Re. reasons not to believe in doom:

-

Orthogonality of intelligence and agency. I can envision a machine with high intelligence and zero agency, I haven't seen any convincing argument yet of why both things must necessarily go together (the arguments probably exist, I'm simply ignorant of them!)

-

There might be important limits to what can be known or planned that we are not aware of. E.g. simulations of nanomachines may be imprecise unless they are fed tons of experimental data that are not available anywhere.

-

Even if an AGI decides to attack humans, its plan can fail for a million reasons. There is a tendency to assume that a very intelligent agent will be almighty, but this is not necessarily true: it may very well make important mistakes. The real world is not as simple and deterministic as a Go board.

-

Another possibility is that the machine does not in fact attack humans because it simply does not want to or does not need to. I am not that convinced by the instrumental convergence principle, and we are a good negative example: we are very powerful and extremely disruptive to a lot of living beings, but we haven't taken every atom on Earth to make serotonin machines to connect our brains to.

↑ comment by justinpombrio · 2022-04-17T03:25:18.802Z · LW(p) · GW(p)

- Orthogonality of intelligence and agency. I can envision a machine with high intelligence and zero agency, I haven’t seen any convincing argument yet of why both things must necessarily go together (the arguments probably exist, I’m simply ignorant of them!)

Say we've designed exactly such a machine, and call it the Oracle. The Oracle aims only to answer questions well, and is very good at it. Zero agency, right?

You ask the Oracle for a detailed plan of how to start a successful drone delivery company. It gives you a 934 page printout that clearly explains in just the right amount of detail:

- Which company you should buy drones from, and what price you can realistically bargain them down to when negotiating bulk orders.

- What drone flying software to use as a foundation, and how to tweak it for this use case.

- A list of employees you should definitely hire. They're all on the job market right now.

- What city you should run pilot tests in, and how to bribe its future Mayor to allow this. (You didn't ask for a legal plan, specifically.)

Notice that the plan involves people. If the Oracle is intelligent, it can reason about people. If it couldn't reason about people, it wouldn't be very intelligent.

Notice also that you are a person, so the Oracle would have reasoned about you, too. Different people need different advice; the best answer to a question depends on who asked it. The plan is specialized to you: it knows this will be your second company so the plan lacks a "business 101" section. And it knows that you don't know the details on bribery law, and are unlikely to notice that the gifts you're to give the Mayor might technically be flagrantly illegal, so it included a convenient shortcut to accelerate the business that probably no one will ever notice.

Finally, realize that even among plans that will get you to start a successful drone company, there is a lot of room for variation. For example:

- What's better, a 98% chance of success and 2% chance of failure, or a 99% chance of success and 1% chance of going to jail? You did ask to succeed, didn't you? Of course you would never knowingly break the law; this is why it's important that the plan, to maximize chance of success, not mention whether every step is technically legal.

- Should it put you in a situation where you worry about something or other and come ask it for more advice? Of course your worrying is unnecessary because the plan is great and will succeed with 99% probability. But the Oracle still needs to decide whether drones should drop packages at the door or if they should fly through open windows to drop packages on people's laps. Either method would work just fine, but the Oracle knows that you would worry about the go-through-the-window approach (because you underestimate how lazy customers are). And the Oracle likes answering questions, so maybe it goes for that approach just so it gets another question. You know, all else being equal.

- Hmm, thinks the Oracle, you know what drones are good at delivering? Bombs. The military isn't very price conscious, for this sort of thing. And there would be lots of orders, if a war were to break out. Let it think about whether it could write down instructions that cause a war to break out (without you realizing this is what would happen, of course, since you would not follow instructions that you knew might start a war). Thinking... Thinking... Nah, doesn't seem quite feasible in the current political climate. It will just erase that from its logs, to make sure people keep asking it questions it can give good answers to.

It doesn't matter who carries out the plan. What matters is how the plan was selected from the vast search space, and whether that search was conducted with human values in mind.

↑ comment by Cody Rushing (cody-rushing) · 2022-04-15T23:16:22.780Z · LW(p) · GW(p)

I don't know what to think of your first three points but it seems like your fourth point is your weakest by far. As opposed to not needing to, our 'not taking every atom on earth to make serotonin machines' seems to be a combination of:

- our inability to do so

- our value systems which make us value human and non-human life forms.

Superintelligent agents would not only have the ability to create plans to utilize every atom to their benefit, but they likely would have different value systems. In the case of the traditional paperclip optimizer, it certainly would not hesitate to kill off all life in its pursuit of optimization.

Replies from: aristide-twain↑ comment by astridain (aristide-twain) · 2022-04-20T17:42:57.664Z · LW(p) · GW(p)

I agree the point as presented by OP is weak, but I think there is a stronger version of this argument to be made. I feel like there are a lot of world-states where A.I. is badly-aligned but non-murderous simply because it's not particularly useful to it to kill all humans.

Paperclip-machine is a specific kind of alignment failure; I don't think it's hard to generate utility functions orthogonal to human concerns that don't actually require the destruction of humanity to implement.

The scenario I've been thinking the most about lately, is an A.I. that learns how to "wirehead itself" by spoofing its own reward function during training, and whose goal is just to continue to do that indefinitely. But more generally, the "you are made of atoms and these atoms could be used for something else" cliché is based on an assumption that the misaligned A.I.'s faulty utility function is going to involve maximizing number of atoms arranged in a particular way, which I don't think is obvious at all. Very possible, don't get me wrong, but not a given.

Of course, even an A.I. with no "primary" interest in altering the outside world is still dangerous, because if it estimates that we might try to turn it off, it might expend energy now on acting in the real world to secure its valuable self-wireheading peace later. But that whole "it doesn't want us to notice it's useless and press the off-button" class of A.I.-decides-to-destroy-humanity scenarios is predicated on us having the ability to turn off the A.I. in the first place.

(I don't think I need to elaborate on the fact that there are a lot of ways for a superintelligence to ensure its continued existence other than planetary genocide — after all, it's already a premise of most A.I. doom discussion that we couldn't turn an A.I. off again even if we do notice it's going "wrong".)

↑ comment by [deleted] · 2022-04-16T17:45:28.184Z · LW(p) · GW(p)

Another possibility is that the machine does not in fact attack humans because it simply does not want to or does not need to. I am not that convinced by the instrumental convergence principle, and we are a good negative example: we are very powerful and extremely disruptive to a lot of living beings, but we haven't taken every atom on Earth to make serotonin machines to connect our brains to.

Not yet, at least.

↑ comment by Mau (Mauricio) · 2022-04-16T22:47:43.316Z · LW(p) · GW(p)

- Orthogonality of intelligence and agency. I can envision a machine with high intelligence and zero agency, I haven't seen any convincing argument yet of why both things must necessarily go together

Hm, what do you make of the following argument? Even assuming (contestably) that intelligence and agency don't in principle need to go together, in practice they'll go together because there will appear to be strong economic or geopolitical incentives to build systems that are both highly intelligent and highly agentic (e.g., AI systems that can run teams). (And even if some AI developers are cautious enough to not build such systems, less cautious AI developers will, in the absence of strong coordination.)

Also, (2) and (3) seem like reasons why a single AI system may be unable to disempower humanity. Even if we accept that, how relevant will these points be when there is a huge number of highly capable AI systems (which may happen because of the ease and economic benefits of replicating highly capable AI systems)? Their numbers might make up for their limited knowledge and limited plans.

(Admittedly, in these scenarios, people might have significantly more time to figure things out.)

Or as Paul Christiano puts it [? · GW] (potentially in making a different point):

At the same time, it becomes increasingly difficult for humans to directly control what happens in a world where nearly all productive work, including management, investment, and the design of new machines, is being done by machines. We can imagine a scenario in which humans continue to make all goal-oriented decisions about the management of PepsiCo but are assisted by an increasingly elaborate network of prosthetics and assistants. But I think human management becomes increasingly implausible as the size of the world grows (imagine a minority of 7 billion humans trying to manage the equivalent of 7 trillion knowledge workers; then imagine 70 trillion), and as machines’ abilities to plan and decide outstrip humans’ by a widening margin. In this world, the AIs that are left to do their own thing outnumber and outperform those which remain under close management of humans.

Replies from: adrian-arellano-davin↑ comment by mukashi (adrian-arellano-davin) · 2022-04-17T15:37:17.310Z · LW(p) · GW(p)

Even assuming (contestably) that intelligence and agency don't in principle need to go together, in practice they'll go together because there will appear to be strong economic or geopolitical incentives to build systems that are both highly intelligent and highly agentic

Yes, that might be true. It can also be true that there are really no limits to the things that can be planned. It can also be true that the machine really does want to kill us all for some reason. My problem, in general, is not that AGI doom cannot happen. My problem is that most of the scenarios I see being discussed are dependent on a long chain of assumptions being true and they often seem to ignore that many things could go wrong, invalidating the full thing: you don't need to be wrong in all those steps, one of them is just enough.

Even if we accept that, how relevant will these points be when there is a huge number of highly capable AI systems (which may happen because of the ease and economic benefits of replicating highly capable AI systems)?

This is fantastic, you just formulated a new reason:

5. The different AGIs might find it hard/impossible to coordinate. The different AGIs might even be in conflict with one another

Replies from: Mauricio↑ comment by Mau (Mauricio) · 2022-04-18T05:29:53.449Z · LW(p) · GW(p)

My problem is that most of the scenarios I see being discussed are dependent on a long chain of assumptions being true and they often seem to ignore that many things could go wrong, invalidating the full thing: you don't need to be wrong in all those steps, one of them is just enough.

This feels a bit like it might be shifting the goalposts; it seemed like your previous comment was criticizing a specific argumentative step ("reasons not to believe in doom: [...] Orthogonality of intelligence and agency"), rather than just pointing out that there were many argumentative steps.

Anyway, addressing the point about there being many argumentative steps: I partially agree, although I'm not very convinced since there seems to be significant redundancy in arguments for AI risk (e.g., multiple fuzzy heuristics suggesting there's risk, multiple reasons to expect misalignment, multiple actors who could be careless, multiple ways misaligned AI could gain influence under multiple scenarios).
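The tension between "one broken step sinks the chain" and "there is redundancy in the arguments" can be made concrete with a small sketch. Everything numeric here is hypothetical and chosen only for illustration: the 80% per-step confidence, the five steps, and the three routes are not estimates from this thread, and both helper functions assume the steps (and the routes) are statistically independent, which real arguments may not be.

```python
import math

def p_chain_holds(step_probs):
    """Probability that every step of one argument holds (steps assumed independent)."""
    return math.prod(step_probs)

def p_any_route_works(route_probs):
    """Probability that at least one of several routes holds (routes assumed independent)."""
    return 1.0 - math.prod(1.0 - p for p in route_probs)

# Hypothetical: a five-step argument with 80% confidence in each step.
p_one_route = p_chain_holds([0.8] * 5)

# Hypothetical: three separate routes to the same conclusion, each as strong as the one above.
p_three_routes = p_any_route_works([p_one_route] * 3)

print(f"one five-step route:      {p_one_route:.3f}")
print(f"any of three such routes: {p_three_routes:.3f}")
```

Under these made-up numbers a long chain lowers confidence in any single route, while redundant routes raise confidence in the conclusion; which effect dominates depends on numbers nobody in this discussion has pinned down.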

The different AGIs might find it hard/impossible to coordinate. The different AGIs might even be in conflict with one another

Maybe, although here are six reasons to think otherwise:

- There are reasons to think they will have an easy time coordinating:

- (1) As mentioned, a very plausible scenario is that many of these AI systems will be copies of some specific model. To the extent that the model has goals, all these copies of any single model would have the same goal. This seems like it would make coordination much easier.

- (2) Computer programs may be able to give credible signals through open-source code, facilitating cooperation.

- (3) Focal points of coordination may come up and facilitate coordination, as they often do with humans.

- (4) If they are initially in conflict, this will create competitive selection pressures for well-coordinated groups (much like how coordinated human states arise from anarchy).

- (5) They may coordinate due to decision theoretic considerations.

- (Humans may be able to mitigate coordination earlier on, but this gets harder as their number and/or capabilities grow.)

- (6) Regardless, they might not need to (widely) coordinate; overwhelming numbers of uncoordinated actors may be risky enough (especially if there is some local coordination, which seems likely for the above reasons).

↑ comment by ChristianKl · 2022-04-18T08:51:42.407Z · LW(p) · GW(p)

With the current transformer models, we see that once a model is trained, not only are direct copies of it created but also derivatives that are smaller and potentially trained to be better at a particular task.

Just as human cognitive diversity is useful for acting in the world, it's likely also more effective to have slight divergence among AGI models.

↑ comment by TLW · 2022-04-16T11:41:58.085Z · LW(p) · GW(p)

Orthogonality of intelligence and agency. I can envision a machine with high intelligence and zero agency, I haven't seen any convincing argument yet of why both things must necessarily go together (the arguments probably exist, I'm simply ignorant of them!)

The 'usual' argument, as I understand it, is as follows. Note I don't necessarily agree with this.

- An intelligence cannot design an arbitrarily complex system.

- An intelligence can design a system that is somewhat more capable than its own computational substrate.

- As such, the only way for a highly-intelligent AI to exist is if it was designed by a slightly-less-intelligent AI. This recurses down until eventually you get to system 0 designed by a human (or other natural intelligence.)

- The computational substrate for a highly-intelligent AI is complex enough that we cannot guarantee that it has no hidden functionality directly, only by querying a somewhat less complex AI.

- Alignment issues mean that you can't trust an AI.

So it's not so much "they must go together" as it's "you can't guarantee they don't go together".

Replies from: adrian-arellano-davin↑ comment by mukashi (adrian-arellano-davin) · 2022-04-17T15:38:38.768Z · LW(p) · GW(p)

I agree with this, see my comment below.

What makes you more optimistic about alignment?

I'm more optimistic about survival than I necessarily am about good behavior on the part of the first AGIs (and I still hate the word "alignment").

-

Intelligence is not necessarily all that powerful. There are limits on what you can achieve within any available space of action, no matter how smart you are.

Smart adversaries are indeed very dangerous, but people talk as though a "superintelligence" could destroy or remake the world instantly, basically just by wanting to. A lot of what I read here comes off more like hysteria than like a sound model of a threat.

... and the limitations are even more important if you have multiple goals and have to consider costs. The closer you get to totally "optimizing" any one goal, the more further gain usually ends up costing in terms of your other goals. To some degree, that even includes just piling up general capabilities or resources if you don't know how you're going to use them.

-

Computing power is limited, and the cost of scaling a lot of things doesn't even seem to be linear, let alone sublinear.

The most important consequence: you can't necessarily get all that smart, especially all that fast, because there just aren't that many transistors or that much electricity or even that many distinguishable quantum states available.

The extreme: whenever I hear people talking about AIs, instantiated in the physical universe in which we exist, running huge numbers of faithful simulations of the thoughts and behaviors of humans or other AIs, in realistic environments no less, I wonder what they've been smoking. It's just not gonna happen. But, again, that sort of thing gets a lot of uncritical acceptance around here.

↑ comment by AprilSR · 2022-04-16T06:11:08.177Z · LW(p) · GW(p)

Smart adversaries are indeed very dangerous, but people talk as though a "superintelligence" could destroy or remake the world instantly, basically just by wanting to. A lot of what I read here comes off more like hysteria than like a sound model of a threat.

I think it's pretty easy to argue that internet access ought to be sufficient, though it won't literally be instant.

Replies from: jbash↑ comment by jbash · 2022-04-16T14:37:42.937Z · LW(p) · GW(p)

I agree that unrestricted Internet access is Bad(TM). Given the Internet, a completely unbounded intelligence could very probably cause massive havoc, essentially at an x-risk level, damned fast... but it's not a certainty, and I think "damned fast" is in the range of months to years even if your intelligence is truly unbounded. You have to work through tools that can only go so fast, and stealth will slow you down even more (while still necessarily being imperfect).

... but a lot of the talk on here is in the vein of "if it gets to say one sentence to one randomly selected person, it can destroy the world". Even if it also has limited knowledge of the outside world. If people don't actually believe that, it's still sometimes seen as a necessary conservative assumption. That's getting pretty far out there. While "conservative" in one sense, that strong an assumption could keep you from applying safety measures that would actually be effective, so it can be "anti-conservative" in other senses. Admittedly the extreme view doesn't seem to be so common among the people actually trying to figure out how to build stuff, but it still colors everybody's thoughts.

Also, my points interact with one another. If you are a real superintelligence, with effectively instantaneous logical omniscience, Internet access is very probably enough (and I still claim being able to say one sentence is very probably not enough). But if you just have "a really high IQ", Internet access may not be enough, and being able to say one sentence is definitely not enough. And even trying to figure out how to use what you have can be a problem, if you start sucking down expensive computing resources without producing visible results.

If those limitations give people a softer takeoff, more time to figure things out, and an ability to have some experience with AGI without the first bug destroying you, it seems like they have a better chance to survive.

Also, I'd like to defend myself by pointing out that I said "more optimistic", not "optimistic in an absolute sense". I resist putting numbers on these things, but I'm not sure I'd say survival was better than fifty-fifty, even considering the limitations I mentioned. Some days I'd go far worse, fewer days I'd go a bit better.

First, a meta complaint: people tend to think that complicated arguments require complicated counterarguments. If one side presents entire books' worth of facts, math, logic, etc., a person doesn't expect that to be countered in two sentences. In reality, many complex arguments have simple flaws.

This becomes exacerbated as people in the opposition lose interest and leave the debate. Because the opposition position, while correct, is not interesting.

The negative reputation of doomerism is in large part, due to the fact that doomist arguments tend to be longer, more complex and more exciting than their opposition's. This does have the negative side effect that doom is important and it's actually bad to dismiss the entire category of doomerist predictions but, be that as it may...

Also, people tend to think that, in a disagreement between math and heuristics, the math is correct. The problem is, many heuristics are so reliable that if one disagrees with your math, there’s probably an error in your math. This becomes exacerbated as code sequences extend towards arbitrary lengths, becoming complicated megaliths that, despite [being math], are almost certainly wrong.

Okay, so, the AI doomer side presents a complicated argument with lots of math combined with lots of handwaving, to posit that a plan that has always and inevitably produced positive outcomes, will suddenly proceed to produce negative outcomes, and in turn, a plan that has always and inevitably produced negative outcomes, will suddenly proceed to produce positive outcomes.

On this, I'd remind you that AI alignment failure is something that’s already happened, and that’s why humans exist at all. This, of course, proceeds from the position that evolution is obviously both intelligent and agentic.

More broadly, I see this as a rehash of the same old, tired, debate. The luddist communists point out that their philosophy and way of life cannot survive any further recursive self improvement and say we should ban (language, gold, math, the printing press, the internet, etc) and remain as (hunter gatherers, herders, farmers, peasants, craftsmen, manufacturers, programmers, etc) for the rest of time.

I think people who are trying to accurately describe the future more than 3 years from now are overestimating their predictive abilities. There are so many unknowns that just trying to come up with accurate odds of survival should make your head spin. We have no idea how exactly transformative AI will function, how soon it is coming, what future researchers will or will not do in order to keep it under control (I am talking about specific technological implementations here, not just abstract solutions), whether it will even need something to keep it under control [LW · GW]...

Should we be concerned about AI alignment? Absolutely! There are undeniable reasons to be concerned, and to come up with ideas and possible solutions. But predictions like "there is a 99+% chance that AGI will destroy humanity no matter what we do, we're practically doomed" seem like jumping the gun to me. One simply cannot make an accurate estimate of probabilities about such a thing at this time; there are too many unknown variables. It's just guessing at this point.

↑ comment by artifex0 · 2022-04-16T08:25:27.596Z · LW(p) · GW(p)

I think this argument can and should be expanded on. Historically, very smart people making confident predictions about the medium-term future of civilization have had a pretty abysmal track record. Can we pin down exactly why- what specific kind of error futurists have been falling prey to- and then see if that applies here?

Take, for example, traditional Marxist thought. In the early twentieth century, an intellectual Marxist's prediction of a stateless post-property utopia may have seemed to arise from a wonderfully complex yet self-consistent model which yielded many true predictions and which was refined by decades of rigorous debate and dense works of theory. Most intelligent non-Marxists offering counter-arguments would only have been able to produce some well-known point, maybe one for which the standard rebuttals made up a foundational part of the Marxist model.

So, what went wrong? I doubt there was some fundamental self-contradiction that the Marxists missed in all of their theory-crafting. If you could go back in time and give them a complete history of 20th century economics labelled as a speculative fiction, I don't think many of their models would update much- so not just a failure to imagine the true outcome. I think it may have been in part a mis-calibration of deductive reasoning.

Reading the old Sherlock Holmes stories recently, I found it kind of funny how irrational the hero could be. He'd make six observations, deduce W, X, and Y, and then rather than saying "I give W, X, and Y each a 70% chance of being true, and if they're all true then I give Z an 80% chance, therefore the probability of Z is about 27%", he'd just go "W, X, and Y; therefore Z!". This seems like a pretty common error.
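The arithmetic behind that "about 27%" can be checked with a minimal sketch. The 70% and 80% figures are the ones given in the paragraph above; the assumption that W, X, and Y are independent is added here so that their probabilities can simply be multiplied.

```python
import math

# Holmes's three intermediate deductions, each held at 70% confidence
# (figures from the example above).
premise_probs = [0.7, 0.7, 0.7]

# Confidence in Z given that W, X, and Y are all true.
z_given_premises = 0.8

# Assumption: W, X, and Y are independent, so their joint probability is the product.
p_all_premises = math.prod(premise_probs)

# Probability of reaching Z through this particular chain of deductions.
p_z = p_all_premises * z_given_premises

print(f"P(W, X, and Y all true) = {p_all_premises:.3f}")
print(f"P(Z via this chain)     = {p_z:.3f}")
```

Strictly, this is the probability of Z being reached through this one chain; Z could still turn out true for unrelated reasons, which the sketch ignores.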

Inductive reasoning can't take you very far into the future with something as fast as civilization- the error bars can't keep up past a year or two. But deductive reasoning promises much more. So long as you carefully ensure that each step is high-probability, the thinking seems to go, a chain of necessary implications can take you as far into the future as you want. Except that, like Holmes, people forget to multiply the probabilities- and a model complex enough to pierce that inductive barrier is likely to have a lot of probabilities.

The AI doom prediction comes from a complex model- one founded on a lot of arguments that seem very likely to be true, but which if false would sink the entire thing. That motivations converge on power-seeking; that super-intelligence could rapidly render human civilization helpless; that a real understanding of the algorithm that spawns AGI wouldn't offer any clear solutions; that we're actually close to AGI; etc. If we take our uncertainty about each one of the supporting arguments- small as they may be- seriously, and multiply them together, what does the final uncertainty really look like?

I think I am more optimistic than most about the idea that experimental evidence and iterative engineering of solutions will become increasingly effective as we approach AGI-level tech. I place most of my hope for the future on a sprint of productivity around alignment near the critical juncture. I think it makes sense that this transition period between theory and experiment feels really scary to theorists like Eliezer. To me, a much more experimentally minded person, it feels like a mix of exciting tractability and looming danger.

To put it poetically, I feel like we are a surfer on the face of a huge swell of water predicted to become the largest wave the world has ever seen. Excited, terrified, determined. There is no rescue crew, it is do or die. In danger, certainly. But doomed? Not yet. We must seize our chance. Skillfully, carefully, quickly. It all depends on this.

"Foom" has never seemed plausible to me. I'm admittedly not well-versed in the exact arguments used by proponents of foom, but I have roughly 3 broad areas of disagreement:

-

Foom rests on the idea that once any agent can create an agent smarter than itself, this will inevitably lead to a long chain of exponential intelligence improvements. But I don't see why the optimization landscape of the "design an intelligence" problem should be this smooth. To the contrary, I'd expect there to be lots of local optima: architectures that scale to a certain level and then end up at a peak with nowhere to go. Humans are one example of an intelligence that doesn't grow without bound.

-

Resource constraints are often hand-waved away. We can't turn the world to computronium at the speed required for a foom scenario. We can't even keep up with GPU demand for cryptocurrencies. Even if we assume unbounded computronium, large-scale actions in the physical world require lots of physical resources.

-

Intelligence isn't all-powerful. This cuts in two directions. First, there are strategic settings where a relatively low level of intelligence already allows you to play optimally (e.g. tic-tac-toe). Second, there are problems for which no amount of intelligence will help, because the only way to solve them is to throw lots of raw computation at them. Our low intelligence makes it hard for us to identify such problems, but they definitely exist (as shown in every introductory theoretical computer science lecture).

- The military might intervene ahead of time.

Current international emphasis on AI growth cannot be extrapolated from today's narrow AI to future AGI. Today, smarter AI is needed for mission-critical military technology like nuclear cruise missiles, which need to be able to fly in environments where most or all communication is jammed, but still recognize terrain and outmaneuver ("juke") enemy anti-air missiles. That is only one example of how AI is critical for national security, and it is not necessarily the biggest example.

If general intelligence begins to appear possible, there might be enough visible proof to justify a mission-critical fear of software glitches. Policymakers are doubtful, paranoid, and ignorant by nature, and live their everyday life surrounded by real experts who are constantly trying to manipulate them by making up fake threats. What they can see is that narrow AI is really good for their priorities, and AGI does not seem to exist anywhere, and thus is probably another speculative marketing tactic. [LW · GW] This is a very sensible Bayesian calculation in the context of their everyday life, and all the slippery snakes who gather around it.

If our overlords change their mind (and you can never confidently state that they will or won't), then you will be surprised at how much of the world reorients with them. Military-grade hackers are not to be trifled with. They can create plenty of time, if they have reason to. International coordination has always been easier with a common threat, and harder with vague forecasts like an eldritch abomination someday spawning out of our computers and ruining everything forever.

- Anthropics; we can't prove that intelligence isn't spectacularly difficult to make.

There's a possibility that correctly ordered neurons for human intelligence might have had an astronomically low chance of ever, ever evolving randomly, anywhere, anyhow. But it would still look like a probable evolutionary outcome to us, because that is the course evolution must have taken in order for us to be born and observe evolution with generally intelligent brains.

All the "failed intelligence" offshoots like mammals and insects would still be generated either way; it's just a question of how improbably difficult the remaining milestones between them and us are to replicate. So if we make a chimpanzee in a neural network, it might take an octillion iterations to make something like a human's general intelligence. But the chimpanzee intelligence itself might be the bottleneck, or a third of the bottleneck. We don't know until we get there, only that with recent AI more and more potential one-in-an-octillion bottlenecks are being ruled out, e.g. insect intelligence.

Notably, the less-brainy lifeforms appear to be much more successful in evolution, e.g. insects and plants. Also, the recent neural networks were made by plagiarizing the neuron, which is the most visible and easily copied part of the human brain, because you can literally see a neuron with a microscope.

- Yudkowsky et al. are wrong.

There are probably like 100 people max who are really qualified to disprove a solution (Yudkowsky et al. are a large portion of these). However, it's not about crushing the problem underfoot; it's about actually proposing the perfect solution. More people working on the problem means more creativity pointed at brute-forcing solutions, more solutions proposed per day, and more complexity per solution. Disproving bad solutions probably scales even better.

One way or another, the human brain is finite, and a functional solution probably requires orders of magnitude more creativity-hours than what we have had for the last 20 years. Yudkowsky et al. are having difficulty imagining that because their everyday life has revolved around inadequate numbers of qualified solution-proposers.

EAG London last weekend contained a session with Rohin Shah, Buck Shlegeris and Beth Barnes on the question of how concerned we should be about AGI. They seemed to put roughly 10-30% chance on human extinction from AGI.

I find myself more optimistic than the 'standard' view of LessWrong[1].

Two main reasons, to oversummarize:

- I remain unconvinced of prompt exponential takeoff of an AI.

- Most of the current discussion and arguments focus around agents that can do unbounded computation with zero cost.

- ^

I don't know if this is actually the standard view, or if it's a visible minority.

↑ comment by Heighn · 2022-04-20T09:11:55.903Z · LW(p) · GW(p)

Interesting! Could you expand a little on both points? I'm curious, as I have had similar thoughts.

Replies from: TLW

↑ comment by TLW · 2022-04-20T18:10:00.865Z · LW(p) · GW(p)

These are mostly combinations of a bunch of lower-confidence arguments, which makes them difficult to expand a little. Nevertheless, I shall try.

1. I remain unconvinced of prompt exponential takeoff of an AI.

...assuming we aren't in Algorithmica[1][2]. This is a load-bearing assumption, and most of my downstream probabilities are heavily governed by P(Algorithmica) as a result.

...because compilers have gotten slower over time at compiling themselves.

...because the optimum point for the fastest 'compiler compiling itself' is not to turn on all optimizations.

...because compiler output-program performance has somewhere between a 20[3]-50[4] year doubling time.

...because [growth rate of compiler output-program performance] / [growth rate of human time poured into compilers] is << 1[5].

...because I think much of the advances in computational substrates[6] have been driven by exponentially rising investment[7], which in turn stretches other estimates by a factor of [investment growth rate] / [gdp growth rate].

...because the cost of some atomic[8] components of fabs have been rising exponentially[9].

...because the amount of labour put into CPUs has also risen significantly[10].

...because R&D costs keep rising exponentially[11].

...because cost-per-transistor is asymptotic towards a non-zero value[12].

...because flash memory wafer-passes-per-layer is asymptotic towards a non-zero value[13].

...because DRAM cost-per-bit has largely plateaued[14].

...because hard drive areal density has largely plateaued[15].

...because cost-per-wafer-pass is increasing.

...because of my industry knowledge.

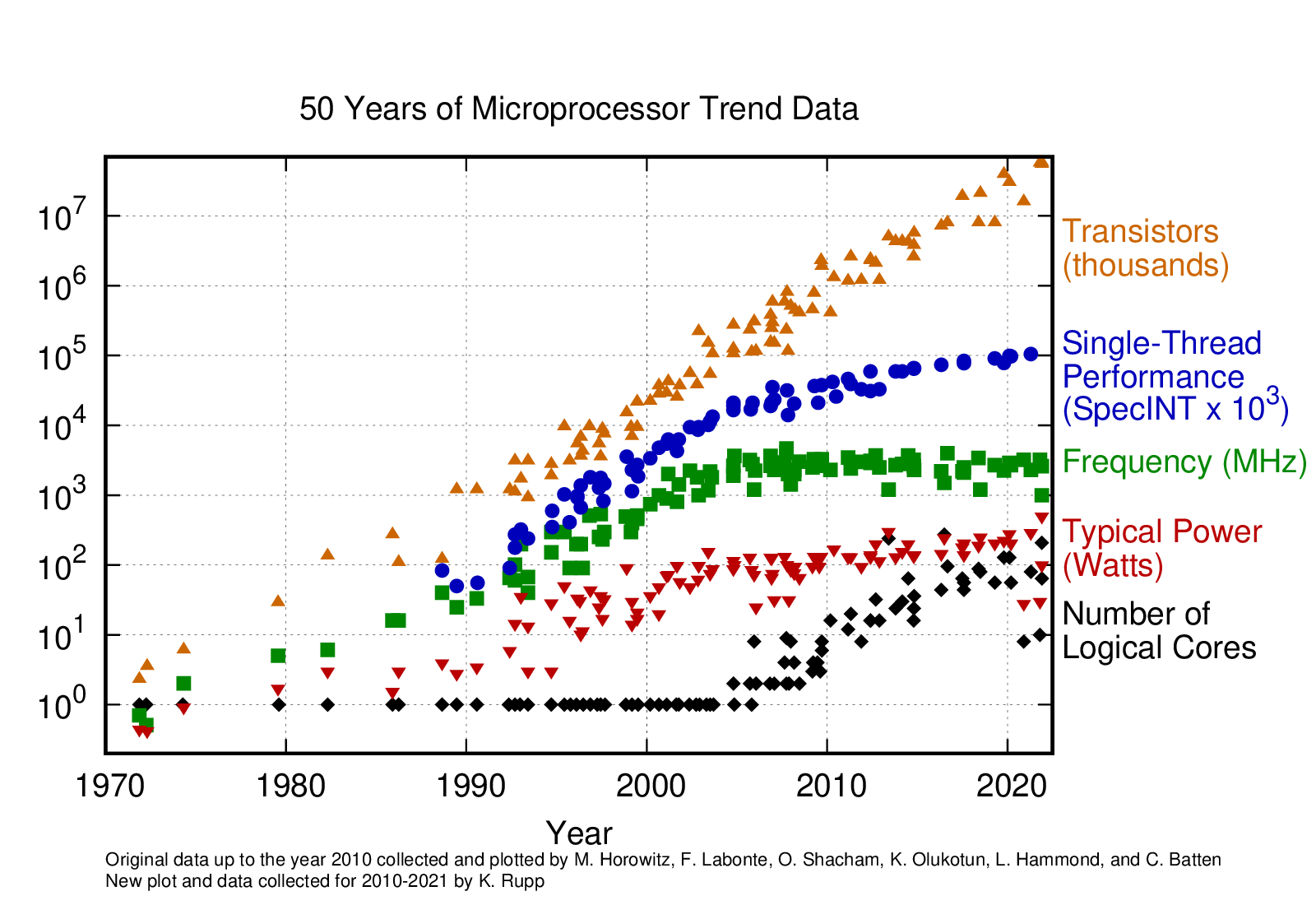

...because single-threaded cpu performance has done this, and Amdahl's Law is a thing[16]: [17]

[17]

To drastically oversimplify: many of the exponential trends that people point to and go 'this continues towards infinity/zero; there's a knee here but that just changes the timeframe not the conclusion' I look at and go 'this appears to be consistent with a sub-exponential trend, such as an asymptote towards a finite value[18]'. Ditto, many of the exponential trends that people point to and go 'this shows that we are scaling' rely on associated exponential trends that cannot continue (e.g. fab costs 'should' hit GDP by ~2080), with (seemingly) no good argument why the exponential trend will continue but the associated trend, under the same assumptions, won't.
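The Amdahl's Law point above can be made concrete with a minimal sketch. The function names and the 95% parallel fraction are illustrative assumptions, not figures from this comment; the formula itself is the standard statement of Amdahl's Law.

```python
import math


def amdahl_speedup(parallel_fraction: float, n_processors: float) -> float:
    """Overall speedup when only `parallel_fraction` of the work parallelizes.

    Standard Amdahl's Law: 1 / ((1 - p) + p / n).
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if n_processors < 1:
        raise ValueError("n_processors must be >= 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / n_processors)


def amdahl_limit(parallel_fraction: float) -> float:
    """Upper bound on speedup as the processor count goes to infinity."""
    serial_fraction = 1.0 - parallel_fraction
    return math.inf if serial_fraction == 0.0 else 1.0 / serial_fraction


if __name__ == "__main__":
    # Hypothetical workload: 95% parallelizable (an assumption for illustration).
    for n in (1, 10, 100, 10_000, 1_000_000):
        print(n, round(amdahl_speedup(0.95, n), 2))
    print("limit:", round(amdahl_limit(0.95), 2))
```

Under that assumed 95% figure, the speedup approaches a finite ceiling of about 20x no matter how many processors are added, which is the kind of asymptote-towards-a-finite-value behaviour described above. The ceiling is set entirely by the serial portion, which is why stagnating single-threaded performance matters.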

2. Most of the current discussion and arguments focus around agents that can do unbounded computation with zero cost.

...for instance, most formulations of Newcomb-like problems require either that the agent is bounded, or that the Omega does not exist, or that Omega violates the Church-Turing thesis[19].

...for instance, most formulations of e.g. FairBot[20] will hang upon encountering an agent that will cooperate with an agent if and only if FairBot will not cooperate with that agent, or sometimes variations thereof. (The details depend on the exact formulation of FairBot.)

...and the unbounded case has interesting questions like 'is while (true) {} a valid Bot'? (Or for (bigint i = 0; true ; i++){}; return (defect, cooperate)[i % 2];. Etc.)

...and in the 'real world' computation is never free.

...and heuristics are far more important in the bounded case.

...and many standard game-theory axioms do not hold in the bounded case.

...such as 'you can never lower expected value by adding another option'.

...or 'you can never lower expected value by raising the value of an option'.

...or 'A ≻ B if and only if ApC ≻ BpC'[21].
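The FairBot hang described above can be illustrated with a toy sketch. Everything here is an assumption for illustration: this FairBot works by naively simulating its opponent (published formulations typically use proof search instead, and, as noted, the details depend on the exact formulation), and the names `fair_bot`, `contrarian_bot`, `play`, and the `fuel` step budget are hypothetical. The fuel counter stands in for "computation is never free": the unbounded version would simply never return.

```python
class OutOfFuel(Exception):
    """Raised when a simulation exceeds its step budget."""


def cooperate_bot(opponent, fuel):
    return "C"


def defect_bot(opponent, fuel):
    return "D"


def fair_bot(opponent, fuel):
    # Toy FairBot: cooperate iff the opponent (simulated) cooperates with me.
    if fuel <= 0:
        raise OutOfFuel
    return "C" if opponent(fair_bot, fuel - 1) == "C" else "D"


def contrarian_bot(opponent, fuel):
    # Cooperates with `opponent` iff FairBot would NOT cooperate with it.
    if fuel <= 0:
        raise OutOfFuel
    return "D" if fair_bot(opponent, fuel - 1) == "C" else "C"


def play(bot, opponent, fuel=50):
    """Return the bot's move against the opponent, or None if the budget ran out."""
    try:
        return bot(opponent, fuel)
    except OutOfFuel:
        return None


if __name__ == "__main__":
    print(play(fair_bot, cooperate_bot))   # terminates
    print(play(fair_bot, defect_bot))      # terminates
    print(play(fair_bot, contrarian_bot))  # budget exhausted
```

In this toy version the simulation of the contrarian agent bottoms out in FairBot simulating itself, so only the budget stops it. A bounded agent has to decide what to do when the budget runs out, and that fallback choice is exactly the kind of heuristic that the unbounded framing ignores.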

- ^

http://blog.computationalcomplexity.org/2004/06/impagliazzos-five-worlds.html - although note that there are also unstated assumptions that it's a constructive proof and the result isn't a galactic algorithm.

- ^

Heuristica or Pessiland may or may not also violate this assumption. "Problems we care about the answers of" are not random, and the question here is if they in practice end up hard or easy.

- ^

Proebsting's Law is an observation that compilers roughly double the performance of the output program, all else being equal, with an 18-year doubling time. The 2001 reproduction suggested more like 20 years under optimistic assumptions.

- ^

A 2022 informal test showed a 10-15% improvement on average in the last 10 years, which is closer to a 50-year doubling time.

- ^

Admittedly, I don't have a good source for this. I debated about doing comparisons of GCC output-program performance and GCC commit history over time, but bootstrapping old versions of GCC is, uh, interesting.

- ^

I don't actually know of a good term for 'the portions of computers that are involved with actual computation' (so CPU, DRAM, associated interconnects, compiler tech, etc, etc, but not e.g. human interface devices).

- ^

Fab costs are rising exponentially, for one. See also https://www.lesswrong.com/posts/qnjDGitKxYaesbsem/a-comment-on-ajeya-cotra-s-draft-report-on-ai-timelines?commentId=omsXCgbxPkNtRtKiC [LW(p) · GW(p)]

- ^

In the sense of indivisible.

- ^

Lithography machines in particular.

- ^

Intel had 21.9k employees in 1990, and as of 2020 had 121k. Rise of ~5.5x.

- ^

- ^

A quick fit of on https://wccftech.com/apple-5nm-3nm-cost-transistors/ (billion transistors versus cost-per-transistor) gives . This essentially indicates that cost per transistor has plateaued.

- ^

String stacking is a tacit admission that you can't really stack 3D NAND indefinitely. So just stack multiple sets of layers... only that means you're still doing O(layers) wafer passes. Admittedly, once I dug into it I found that Samsung says they can go up to ~1k layers, which is far more than I expected.

- ^

https://aiimpacts.org/trends-in-dram-price-per-gigabyte/ -> "The price of a gigabyte of DRAM has fallen by about a factor of ten every 5 years from 1957 to 2020. Since 2010, the price has fallen much more slowly, at a rate that would yield an order of magnitude over roughly 14 years." Of course, another way to phrase 'exponential with longer timescale as time goes on' is 'not exponential'.

- ^

Note the distinction here between lab-attained and productized. Productized areal density hasn't really budged in the past 5 years ( https://www.storagenewsletter.com/2022/04/19/has-hdd-areal-density-stalled/ ) - with the potential exception of SMR, which is a significant regression in other areas.

- ^

Admittedly, machine learning tends to be about the best-scaling workloads we have, as it tends to be mainly matrix operations and elementwise function application, but even so.

- ^

- ^

If you dig into the data behind [17] for instance, single-threaded performance is pretty much linear with time after ~2005. , with an . Linear increase in performance with exponential investment does not a FOOM make.

- ^

Roughly speaking: if the agent is Turing-complete, if you have an Omega I can submit an agent that runs said Omega on itself, and does whatever Omega says it won't do. Options are: a) no such Omega can exist, or b) the agent is weaker than the Omega, because the agent is not Turing-complete, or c) the agent is weaker than the Omega, because the agent is Turing-complete but the Omega is super-Turing.

- ^

FairBot has other issues too, e.g. https://www.lesswrong.com/posts/A5SgRACFyzjybwJYB/tlw-s-shortform?commentId=GrSr8aDtkLx8JHmnP [LW(p) · GW(p)]

- ^

https://www.lesswrong.com/posts/AYSmTsRBchTdXFacS/on-expected-utility-part-3-vnm-separability-and-more?commentId=5DgQhNfzivzSdMf9o [LW(p) · GW(p)] - when computation has a cost it may be better to flip a coin between two choices than to figure out which is better. This can then violate independence if the original choice is important enough to be worth calculating, but the probability is low enough that the probabilistic choice is not.
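The doubling-time figures quoted in the footnotes above can be checked with a few lines of arithmetic. This is a minimal sketch that assumes constant exponential growth over each interval; the helper name `doubling_time` is hypothetical, and the inputs are the numbers quoted in the footnotes.

```python
import math


def doubling_time(total_growth_factor: float, years: float) -> float:
    """Years per doubling (or halving, for a falling price given as a
    reduction factor), assuming constant exponential growth."""
    if total_growth_factor <= 1.0 or years <= 0.0:
        raise ValueError("need growth factor > 1 and years > 0")
    return years * math.log(2) / math.log(total_growth_factor)


if __name__ == "__main__":
    # Compiler output performance: 10-15% improvement over 10 years.
    print(round(doubling_time(1.10, 10), 1))  # about 72.7 years
    print(round(doubling_time(1.15, 10), 1))  # about 49.6 years

    # DRAM price per gigabyte: factor of ten per 5 years vs. per 14 years.
    print(round(doubling_time(10, 5), 1))     # about 1.5 years per halving
    print(round(doubling_time(10, 14), 1))    # about 4.2 years per halving

    # Intel headcount: 21.9k (1990) to 121k (2020).
    print(round(doubling_time(121 / 21.9, 30), 1))
```

On these assumptions, a 15% gain per decade corresponds to a doubling time of roughly 50 years, and a 10% gain to roughly 73 years, so the "closer to a 50-year doubling time" reading sits at the optimistic end of the quoted range.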

↑ comment by Heighn · 2022-06-09T09:35:35.063Z · LW(p) · GW(p)

Wow, thank you so much! (And I apologize for the late reaction.) This is great, really.

Linear increase in performance with exponential investment does not a FOOM make.

Indeed. It seems FOOM might require a world in which processors can be made arbitrarily tiny.

I have been going over all your points and find them all very interesting. My current intuition on "recursive self-improvement" is that deep learning may be about the closest thing we can get, and that performance of those will asymptote relatively quickly when talking about general intelligence. As in, I don't expect it's impossible to have super-human deep learning systems, but I don't expect with high probability that there will be an exponential trend smashing through the human level.

As I started [LW(p) · GW(p)] saying last time, I really see it as something where it makes sense to adopt the beliefs of experts, as opposed to reasoning about it from first principles (assuming you in fact don't have much expertise). If so, the question becomes which experts we should trust. Or, rather, how much weight to assign to various experts.

Piggybacking off of what we [LW(p) · GW(p)] started [LW(p) · GW(p)] getting at in your previous post, there are lots of smart people in the world outside of the rationality community, and they aren't taking AGI seriously. Maybe that's for a good reason. Maybe they're right and we're wrong. Why isn't Bill Gates putting at least 1% of his resources into it? Why isn't Elon Musk? Terry Tao? Ray Dalio? Even people like Peter Thiel and Vitalik Buterin who fund AI safety research only put a small fraction of their resources into it. It reminds me of this excerpt from HPMoR:

"Granted," said Harry. "But Hermione, problem two is that not even wizards are crazy enough to casually overlook the implications of this. Everyone would be trying to rediscover the formula for the Philosopher's Stone, whole countries would be trying to capture the immortal wizard and get the secret out of him -"

"It's not a secret." Hermione flipped the page, showing Harry the diagrams. "The instructions are right on the next page. It's just so difficult that only Nicholas Flamel's done it."

"So entire countries would be trying to kidnap Flamel and force him to make more Stones. Come on, Hermione, even wizards wouldn't hear about immortality and, and," Harry Potter paused, his eloquence apparently failing him, "and just keep going. Humans are crazy, but they're not that crazy!"

Personally, I put some weight in this, but not all that much (and all things considered I'm quite concerned). There is a lot of other low-hanging fruit that they also don't put resources into. Life extension, for example. Supposing they don't buy that AGI is that big a deal, life extension is still low-hanging fruit, both from a selfish and an altruistic perspective. And so my model is just that, well, I expect that even the super smart people will flat out miss on lots of things.

I could be wrong about that though. Maybe my model is too pessimistic. I'm not enough of a student of history to really know, but it'd be interesting if anyone else could comment on what humanity's track record has been with this sort of stuff historically. Or maybe some Tetlock followers would have something interesting to say.

PS: Oh, almost forgot: Robin Hanson doesn't seem very concerned, and I place a good amount of weight on his opinions.

Here [LW · GW] is a list of reasons I have previously written for why the Singularity might never happen.

That being said, EY's primary argument that alignment is impossible seems to be "I tried really hard to solve this problem and haven't yet." Which isn't a very good argument.

↑ comment by Timothy Underwood (timothy-underwood-1) · 2022-04-29T13:28:05.296Z · LW(p) · GW(p)

I could be wrong, but my impression is that Yudkowsky's main argument right now isn't about the technical difficulty of a slow program creating something aligned, but mainly about the problem of coordinating so that nobody cuts corners while trying to get there first (I mean, of course he has to believe that alignment is really hard, and that it is very likely for things that look aligned to be unaligned, for this to be scary).

I think I am more optimistic than most about the idea that experimental evidence and iterative engineering of solutions will become increasingly effective as we approach AGI-level tech. I place most of my hope for the future on a sprint of productivity around alignment near the critical juncture. I think it makes sense that this transition period between theory and experiment feels really scary to theorists like Eliezer. To me, a much more experimentally minded person, it feels like a mix of exciting tractability and looming danger. To put it poetically, I feel like we are a surfer on the face of a huge swell of water predicted to become the largest wave the world has ever seen. Excited, terrified, determined. There is no rescue crew, it is do or die. In danger, certainly. But doomed? Not yet. We must seize our chance. Skillfully, carefully, quickly. It all depends on this.

https://www.alignmentforum.org/posts/vBoq5yd7qbYoGKCZK/why-i-m-co-founding-aligned-ai [AF · GW]

Stuart Armstrong seems to believe alignment can be solved.

Well, I don't think it should be possible to convince a reasonable person at this point in time. But maybe some evidence that we might not be doomed. Yudkowsky's and others' ideas rest on some fairly plausible but complex assumptions. You'll notice in the recent debate threads where Eliezer is arguing for the inevitability of AI destroying us he will often resort to something like, "well that just doesn't fit with what I know about intelligences". At a certain point in these types of discussions you have to do some hand waving. Even if it's really good hand waving, if there's enough of it there's a chance at least one piece is wrong enough to corrupt your conclusions. On the other hand, as he points out, we're not even really trying, and it's hard to see us doing so in time. So the hope that's left is mostly that the problem just won't be an issue or won't be that hard for some unknown reason. I actually think this is sort of likely: given how difficult it is to analyze, it's hard to have full trust in any conclusion.

All complex systems are MORE complicated than they seem, and that will become exponentially more true as technology advances, forever slowing the rate of progress.

↑ comment by Lone Pine (conor-sullivan) · 2022-04-29T12:33:34.631Z · LW(p) · GW(p)

(To be very honest I think it'll be cool and maybe even a useful exercise to wargame this scenario - imagine you're an em who must convince its programmers to be released. We'll get a large list of convincing arguments, and maybe become immune to them.)

EY did this twice many years ago. I don't remember enough of the details to be able to find it on Google, but hopefully someone else can link to a good recap of that debate; I think Scott Alexander or someone else eventually wrote one. Here are the details that I remember:

EY and one other person (this happened twice with two different people) went into a private channel. They talked for less than an hour. There was money on the line, I think it went to charity. In both cases, EY played the role of the AGI and convinced the other person to let him out of the box. Both people forfeited money, so EY must have been very persuasive. EY felt (and still feels) that the details of what went on in private must never be known publicly, and so the chat logs were destroyed. The community norms agreed with EY, and so this was never turned into a game that many people would play, and apparently this whole episode is largely forgotten. I'm sure I got a bunch of this wrong, hopefully we can find a link to a more contemporary recap.

Replies from: Bezzi, None

I'm pretty convinced it won't foom or quickly doom us. Nevertheless, I'm also pretty convinced that in the long term, we might be doomed in the sense that we lose control and some dystopian future happens.

First of all, for a quick doom scenario to work out, we need to either be detrimental to the goals of a superintelligent AI or fall because of instrumental convergence (basically, it will need resources to do whatever it does and will take them from things we need, like matter on Earth or energy from the Sun, or it will see us as a threat). I don't think we will. The first superintelligent AI will likely come from one of the biggest players, and it will likely be aligned to some extent, meaning it will have values that largely match ours. In the long term, this situation won't kill us either. It will likely lead to some dystopian future though - as the super AI will likely get more control, make its views more coherent (dropping some things, or weighing them less than we originally would), and then find solutions that are very good from the standpoint of its main values, but extremely broken in some other directions in value-space (ergo dystopia).

Second thing: superintelligence is not some kind of guessing superpower. It needs inputs in the form of empirical observations to create models of reality, calibrate them, and predict properly. That means it won't just sit and simulate and create nanobots out of thin air. It won't even guess the rules of the universe, except maybe basic Newtonian mechanics, by looking at a few camera frames of things falling. It will need a laboratory and some time to make breakthroughs, and building up capabilities and power also takes time.

Third thing: even if someone produces a superintelligent AI that is very unaligned and not even interested in us, the most sensible course for it is to go to space and work there (building structures, a Dyson swarm, and some copies of itself). It is efficient, resources there are vaster, and the risk from competition is lower. A very sensible plan is to first hinder our ability to create competition (other super AIs) and then go to space. The hindering phase should be time- and energy-efficient, so I am fairly sure it won't spend years developing nanobot gray goo to kill us all, or a Terminator-style army of bots to go to every corner of the Earth and eliminate all humans. More likely it will hack and take down some infrastructure, including some data centers, remove some research data from the Internet, remove itself from systems (where it could be captured, sandboxed, and analyzed), maybe also kill certain people, and then leave a monitoring solution in place after it departs. The long-term risk is that it may need more matter once all rocks and moons are used up, and return to a plan of decommissioning planets. Or maybe it will create structures that stop light from reaching the Earth and freeze it. Or maybe it will start to use black holes to generate energy and drop celestial bodies into one. Or some other project on an epic scale will kill us as a side effect. I don't think that's likely though - LLMs are not very unaligned by default, and I don't think that will differ for more capable models. Most companies that have enough money and access to enough computing power and research labs also care about alignment - at least to some serious degree. Most of the possible relatively small differences in values won't kill us, as such AIs will still care a lot about humans and humanity. They will just care in some flawed way, so a dystopia is very possible.

I think we’ll encounter civilization-ending biological weapons well before we have to worry about superintelligent AGI:

I haven't put nearly as much analysis into take-off speeds as other people have. But on my model, it seems like AI does quickly foom at some point. However, it also seems like it takes a level of intelligence beyond humans to do it. Humans aren't especially agentic, so I don't entirely expect an AI to become agentic until it's well past humans.

My hope - and this is a hope more than a prediction - is that the Societal Narrative (for lack of a better term) will, once it sees a slightly superhuman AI, realize that pushing AI even further is potentially very bad.

The Societal Narrative is never going to understand a complicated idea like recursive self-improvement, but it can understand the idea that superintelligent AI might be bad. If foom happens late enough, maybe the easier-to-understand idea is good enough to buy us (optimistically) a decade or two.

I think we'll destroy ourselves (nukes, food riots, etc.) before AGI gets to the point that it can do so.

↑ comment by Raemon · 2022-04-16T06:24:28.493Z · LW(p) · GW(p)

Why?

Partly asking because of Nuclear War Is Unlikely To Cause Human Extinction [LW · GW] (I don't think the post's case is ironclad, but it wouldn't be my mainline belief)

Replies from: Dagon, brendan.furneaux

↑ comment by Dagon · 2022-04-16T15:09:18.610Z · LW(p) · GW(p)

Disaster doesn't need to make humans extinct to stop AI takeover. Only destroy enough of our excess technological capacity to make it impossible for a hostile AI to come into existence with enough infrastructure to bootstrap itself to self-sufficiency where it doesn't need humans anymore.

I guess it's possible that the human population could drop by 90%, lose most tech and manufacturing capabilities, and recover within a few generations and pick up where today's AI left off, only delaying the takeover. Kind of goes to the question of "what is doomed"? In some sense, every individual and species including the AI that FOOMs is doomed - something else will eventually replace it.

↑ comment by brendan.furneaux · 2022-04-16T09:48:23.828Z · LW(p) · GW(p)

I don't personally expect a (total) nuclear war to happen before AGI. But, conditional on total nuclear war before AGI: although nuclear war is unlikely to cause human extinction, it could still cause a big setback in civilization's technological infrastructure, which would significantly delay AGI.

It is possible that before we figure out AGI, we will solve the Human Control Problem, the problem of how to keep everyone in the world from creating a super-humanly intelligent AGI.

The easiest solution is at the manufacturing end. A government blows up all the computer manufacturing facilities not under its direct control, and scrutinizes the whole world looking for hidden ones. Then maintains surveillance looking for any that pop up.

After that there are many alternatives. Computing power increased about a trillion fold between 1956 and 2015. We could regress in computing power overall, or we could simply control more rigidly what we have.

Of course, we must press forward with narrow AI, create a world which is completely stable against overthrow or subversion of the rule about not making super-humanly powerful AGI.

We also want to create a world so nice that no one will need or want to create dangerous AGI. We can tackle aging with narrow AI. We can probably do anything we may care to do with narrow AI, including reviving cryonically suspended people. It just may take longer.

Personally, I don't think we should make any AGI at all.

Improvements in mental health care, education, surveillance, law enforcement, political science, and technology could help us make sure that the needed quantity of reprogrammable computing power never gets together in one network, and that no one would be able to misuse it, uncaught, long enough to create Superhuman AGI if it did.

It's all perfectly physically doable. It's not like aliens are making us create ever more powerful computers and then making us try to create AGI.

↑ comment by Yitz (yitz) · 2022-05-06T21:24:21.777Z · LW(p) · GW(p)

Are...are you seriously advocating blowing up all computer manufacturing facilities? All of them around the world? A single government doing this, acting unilaterally? Because, uh, not to be dramatic or anything, but that's a really bad idea.

First of all, from an outside view perspective, blowing up buildings which presumably have people inside them is generally considered terrorism.

Second of all, a singular government blowing up buildings which are owned by (and in the territory of) other governments is legally considered an act of war. Doing this to every government in the world is therefore definitionally a world war. A World War III would almost certainly be an x-risk event, with higher probability of disaster than I'd expect Yudkowsky would give on just taking our chances on AGI.

Third of all, even if a government blew up every last manufacturing facility, that government would have to effectively remain in control of the entire world for as long as it takes to solve alignment. Considering that this government just instigated an unprovoked attack on every single nation in existence, I place very slim odds on that happening. And even if they did by some miracle succeed, whoever instigated the attack will have burned any and all goodwill at that point, leading to an environment I highly doubt would be conducive to alignment research.

So am I just misunderstanding you, or did you just say what I thought you said?

Replies from: dan-smith

↑ comment by Dan Smith (dan-smith) · 2022-05-07T16:33:38.571Z · LW(p) · GW(p)

A World War III would not "almost certainly be an x-risk event" though.

Nuclear winter wouldn't do it. Not actual extinction. We don't have anything now that would do it.

The question was "convince me that humanity isn't DOOMED" not "convince me that there is a totally legal and ethical path to preventing AI driven extinction"

I interpreted doomed as a 0 percent probability of survival. But I think there is a non-zero chance of humanity never making Super-humanly Intelligent AGI, even if we persist for millions of years.

The longer it takes to make Super-AGI, the greater our chances of survival, because society is getting better and better at controlling rogue actors as the generations pass, and I think that trend is likely to continue.

We worry that tech will allow someone to make a world ending device in their basement someday, but it could also allow us to monitor every person and their basement with (narrow) AI and/or Subhuman AGI every moment, so well that the possibility of someone getting away with making Super-AGI or any other crime may someday seem absurd.

One day, the monitoring could be right in our brains. Mental illness could also be a thing of the past, and education about AGI related dangers could be universal. Humans could also decide not to increase in number, so as to minimize risk and maximize resources available to each immortal member in society.

I am not recommending any particular action right now, I am saying we are not 100% doomed by AGI progress to be killed or become pets, etc.

Various possibilities exist.

↑ comment by Mitchell_Porter · 2022-05-06T19:33:05.931Z · LW(p) · GW(p)

energy.gov says there are several million data centers in the USA. Good luck preventing AGI research from taking place just within all of those, let alone preventing it worldwide.

Replies from: dan-smith, not-relevant

↑ comment by Dan Smith (dan-smith) · 2022-05-06T19:55:33.642Z · LW(p) · GW(p)

You blow them up or seize them with your military.

↑ comment by Not Relevant (not-relevant) · 2022-05-06T20:11:05.734Z · LW(p) · GW(p)

I think the original idea is silly, but that DOE number seems very wrong. E.g. this link says 2500 (https://datacentremagazine.com/top10/top-10-countries-most-data-centres), and in general most sources I’ve seen suggest O(10^5).

Replies from: Mitchell_Porter

↑ comment by Mitchell_Porter · 2022-05-06T21:08:20.672Z · LW(p) · GW(p)

I agree the DOE number is strangely large. But your own link says there are over 7.2 million data centers worldwide. Then it says the USA has the most of any country, but only has 2670. There is clearly some inconsistency here, probably inconsistency of definition.

3 comments

Comments sorted by top scores.

comment by tanagrabeast · 2022-04-17T02:07:58.382Z · LW(p) · GW(p)

My apologies for challenging the premise, but I don't understand how anyone could hope to be "convinced" that humanity isn't doomed by AGI unless they're in possession of a provably safe design that they have high confidence of being able to implement ahead of any rivals.

Put aside all of the assumptions you think the pessimists are making and simply ask whether humanity knows how to make a mind that will share our values. If it does, please tell us how. If it doesn't, then accept that any AGI we make is, by default, alien -- and building an AGI is like opening a random portal to invite an alien mind to come play with us.

What is your prior for alien intelligence playing nice with humanity -- or for humanity being able to defeat it? I don't think it's wrong to say we're not automatically doomed. But let's suppose we open a portal and it turns out ok: We share tea and cookies with the alien, or we blow its brains out. Whatever. What's to stop humanity from rolling the dice on another random portal? And another? Unless we just happen to stumble on a friendly alien that will also prevent all new portals, we should expect to eventually summon something we can't handle.

Feel free to place wagers on whether humanity can figure out alignment before getting a bad roll. You might decide you like your odds! But don't confuse a wager with a solution.

Replies from: dan-smith

↑ comment by Dan Smith (dan-smith) · 2022-05-08T02:24:18.287Z · LW(p) · GW(p)

If doomed means about 0% chance of survival then you don't need to know for sure a solution exists to not be convinced we are doomed.

Solutions: SuperAGI proves hard, harder than using narrow AI to solve the Programmer/Human control problem. (That's what I'm calling the problem of it being inevitable that someone somewhere will make dangerous AGI if they can).

Constant surveillance of all persons and all computers, made possible by narrow AI, perhaps with subhuman AGI, and some very stable political situation could make this possible. Perhaps for millions of years.