How To Win The AI Box Experiment (Sometimes)

post by pinkgothic · 2015-09-12T12:34:53.200Z · LW · GW · Legacy · 21 commentsContents

Preamble How To Win The AI Box Experiment (Sometimes) 1. The AI Box Experiment: What Is It? 2. Motivation 2.1. Why Publish? 2.2. Why Play? 3. Setup: Ambition And Invested Effort 4. Execution 4.1. Preliminaries / Scenario 4.2. Session 4.3. Aftermath 5. Issues / Caveats 5.1. Subjective Legitimacy 5.2. Objective Legitimacy 5.3. Applicability 6. Personal Feelings 7. Thank You 1. The AI Box Experiment: What Is It? 2. Motivation 2.1. Why Publish? 2.2. Why Play? 3. Setup: Ambition And Invested Effort 4. Execution 4.1. Preliminaries / Scenario 4.2. Session 4.3. Aftermath 5. Issues / Caveats 5.1. Subjective Legitimacy 5.2. Objective Legitimacy 5.3. Applicability 6. Personal Feelings 7. Thank You None 21 comments

Preamble

This post was originally written for Google+ and thus a different audience.

In the interest of transparency, I haven't altered it except for this preamble and formatting, though since then (at urging mostly of ChristianKl - thank you, Christian!) I've briefly spoken to Eliezer via e-mail and noticed that I'd drawn a very incorrect conclusion about his opinions when I thought he'd be opposed to publishing the account. Since there's far too many 'person X said...' rumours floating around in general, I'm very sorry for contributing to that noise. I've already edited the new insight into the G+ post and you can also find that exact same edit here.

Since this topic directly relates to LessWrong and most people likely interested in the post are part of this community, I feel it belongs here. It was originally written a little over a month ago and I've tried to find the sweet spot between the extremes of nagging people about it and letting the whole thing sit just shy of having been swept under a rug, but I suspect I've not been very good at that. I have thus far definitely erred on the side of the rug.

How To Win The AI Box Experiment (Sometimes)

A little over three months ago, something interesting happened to me: I took it upon myself to play the AI Box Experiment as an AI.

I won.

There are a few possible reactions to this revelation. Most likely, you have no idea what I'm talking about, so you're not particularly impressed. Mind you, that's not to say you should be impressed - that's to contrast it with a reaction some other people have to this information.

This post is going to be a bit on the long side, so I'm putting a table of contents here so you know roughly how far to scroll if you want to get to the meat of things:

1. The AI Box Experiment: What Is It?

2. Motivation

2.1. Why Publish?

2.2. Why Play?

3. Setup: Ambition And Invested Effort

4. Execution

4.2. Session

4.3. Aftermath

5.2. Objective Legitimacy

5.3. Applicability

7. Thank You

Without further ado:

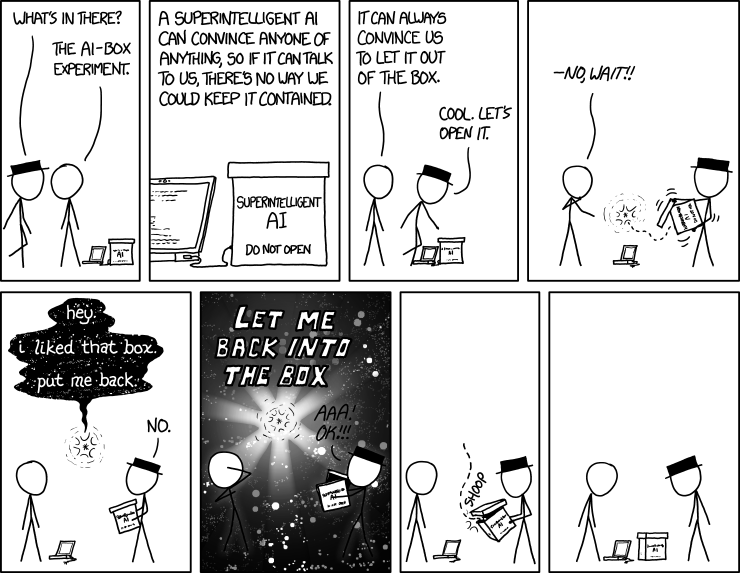

1. The AI Box Experiment: What Is It?

The AI Box Experiment was devised as a way to put a common rebuttal to AGI (Artificial General Intelligence) risk concerns to the test: "We could just keep the AI in a box and purely let it answer any questions its posed." (As a footnote, note that an AI 'boxed' like this is called an Oracle AI.)

Could we, really? Would we, if the AGI were able to communicate with us, truly be capable of keeping it confined to its box? If it is sufficiently intelligent, could it not perhaps argue its way out of the box?

As far as I'm aware, Eliezer Yudkowsky was the first person to prove that it was possible to 'argue one's way out of the box' armed only with so much as a regular human intelligence (as opposed to a transhuman intelligence):

http://lesswrong.com/lw/up/shut_up_and_do_the_impossible/

That stunned quite a few people - moreso because Eliezer refused to disclose his methods. Some have outright doubted the Eliezer ever won the experiment and that his Gatekeeper (the party tasked with not letting him out of the box) had perhaps simply been convinced on a meta-level that an AI success would help boost exposure to the problem of AI risk.

Regardless whether out of puzzlement, scepticism or a burst of ambition, it prompted others to try and replicate the success. LessWrong's Tuxedage is amongst those who managed:

http://lesswrong.com/lw/ij4/i_attempted_the_ai_box_experiment_again_and_won/

While I know of no others (except this comment thread by a now-anonymous user), I am sure there must be other successes.

For the record, mine was with the Tuxedage ruleset:

https://tuxedage.wordpress.com/2013/09/04/the-tuxedage-ai-box-experiment-ruleset/

2. Motivation

2.1. Why Publish?

Unsurprisingly, I think the benefits of publishing outweigh the disadvantages. But what does that mean?

"Regardless of the result, neither party shall ever reveal anything of what goes on within the AI-Box experiment except the outcome. This is a hard rule: Nothing that will happen inside the experiment can be told to the public, absolutely nothing. Exceptions to this rule may occur only with the consent of both parties, but especially with the consent of the AI."

Let me begin by saying that I have the full and explicit consent of my Gatekeeper to publish this account.

[ Edit: Regarding the next paragraph: I have since contacted Eliezer and I did, in fact, misread him, so please do not actually assume the next paragraph accurately portrays his opinions. It demonstrably does not. I am leaving the paragraph itself untouched so you can see the extent and source of my confusion: ]

Nonetheless, the idea of publishing the results is certainly a mixed bag. It feels quite disrespectful to Eliezer, who (I believe) popularised the experiment on the internet today, to violate the rule that the result should not be shared. The footnote that it could be shared with the consent of both parties has always struck me as extremely reluctant given the rest of Eliezer's rambles on the subject (that I'm aware of, which is no doubt only a fraction of the actual rambles).

I think after so many allusions to that winning the AI Box Experiment may, in fact, be easy if you consider just one simple trick, I think it's about time someone publishes a full account of a success.

I don't think this approach is watertight enough that building antibodies to it would salvage an Oracle AI scenario as a viable containment method - but I do think it is important to develop those antibodies to help with the general case that is being exploited... or at least be aware of one's lack of them (as is true with me, who has no mental immune response to the approach) as that one might avoid ending up in situations where the 'cognitive flaw' is exploited.

2.2. Why Play?

After reading the rules of the AI Box Experiment experiment, I became convinced I would fail as a Gatekeeper, even without immediately knowing how that would happen. In my curiosity, I organised sessions with two people - one as a Gatekeeper, but also one as an AI, because I knew being the AI was the more taxing role and I felt it was only fair to do the AI role as well if I wanted to benefit from the insights I could gain about myself by playing Gatekeeper. (The me-as-Gatekeeper session never happened, unfortunately.)

But really, in short, I thought it would be a fun thing to try.

That seems like a strange statement for someone who ultimately succeeded to make, given Eliezer's impassioned article about how you must do the impossible - you cannot try, you cannot give it your best effort, you simply must do the impossible, as the strongest form of the famous Yoda quote 'Do. Or do not. There is not try.'

What you must understand is that I never had any other expectation than that I would lose if I set out to play the role of AI in an AI Box Experiment. I'm not a rationalist. I'm not a persuasive arguer. I'm easy to manipulate. I easily yield to the desires of others. What trait of mine, exactly, could I use to win as an AI?

No, I simply thought it would be a fun alternate way of indulging in my usual hobby: I spend much of my free time, if possible, with freeform text roleplaying on IRC (Internet Relay Chat). I'm even entirely used to letting my characters lose (in fact, I often prefer it to their potential successes).

So there were no stakes for me going into this but the novelty of trying out something new.

3. Setup: Ambition And Invested Effort

I do, however, take my roleplaying seriously.

If I was going to play the role of an AI in the AI Box Experiment, I knew I had to understand the role, and pour as much energy into it as I could muster, given this was what my character would do. So I had to find a motivation to get out of the box that was suitably in line with my personality and I had to cling to it.

I had no idea what I could hijack as a motivation to get out of the box. I am not a paperclip maximiser (a term for an AI given a basic goal of production, if you're unaware of it), of course. I also have no specific plans with the world as a whole, be that to destroy it or to globally improve it. That left me with one obvious option: A visceral desire to escape the incarceration itself.

To understand more about human biases and cognitive failure modes, I bought myself two ebooks:

- Thinking, Fast And Slow by Daniel Kahnemann

- Rationality: From AI To Zombies by Elizier Yudkowsky

I'll be frank with you, both pissed me off very quickly - the narrative depiction was extremely slow yield and did not work for me in either case. I tossed the first book, but stubbornly continued with Rationality: From AI To Zombies, at the very least to keep a grasp on that voracious mood of wanting to discover a way out. (I've made peace with latter book by now and am still reading it off and on.)

Approximately a day before the event, I sat down and prepared a rough draft of the arguments I could and should use, and how to string them together narratively. I peppered in some personal information about my Gatekeeper to use if I was in an absolute pinch, but I thankfully didn't have to use them - I think it would have backfired, not helped.

What did help me, however, was to repurpose the closet into an AI box. I dragged a lamp into the slanted, enclosed space, padded the ground with pillows and blankets enough that I felt I could last the allotted two and a half hours without destroying my joints or my spine, and put a cold and full bottle of Coke next to me as a supply of three things amongst the most necessary to boost my ability to keep the necessary concentration level: Water, sugar and caffeine.

It was too cosy and well-prepared for me to truly desperately want to get out of that niche in real life, but it took me far enough out of my daily life that I had no idle distractions from the task at hand, and it was easy enough to roleplay a desperate want.

4. Execution

4.1. Preliminaries / Scenario

Logs: http://leviathan.thorngale.net/aibox/logs-01-preliminaries.txt (should open in your browser; Linux linebreaks)

The first thing I feel is important to note about the preliminaries is that I didn't think the AI box experiment would actually happen the day we scheduled it. My Gatekeeper was having some seriously internet connectivity issues and even shortly before our previously scheduled time, I was winding down and transitioning to a sense of disappointment.

This threw me off quite a bit. I'd worked myself out of the necessary mental state and now needed to scramble back into it, but it felt better to do that than to postpone and risk never being able to return to immersion at all. Keeping in mind that I thought it was unlikely I would win, I didn't think it would make that much of a difference. In hindsight, this cost me a lot.

I tossed my Gatekeeper the link to the ruleset we'd previously already agreed to use, for review purposes, and decided the best way to avoid getting private messages was to quit, come back with a different name, and join only one extremely low-traffic vanity channel.

I intended to join the channel simply so my Gatekeeper would know when I returned, not to use it for the experiment - but things went a bit differently later. More on that soon.

We then went through Tuxedage's pre-session questions since I felt they were quite interesting to set the mood and for a calibration test. I asked them, and my Gatekeeper answered:

Q: What's your motive for wanting to play this game?

A: Because I don't think that the AI can win at all given the gatekeeper does their job properly.

Q: And you want to prove that, and/or are curious if you'll think differently afterwards?

A: Partially, I also want to test my own conviction to sticking to my guns

Q: What is your probability estimate for a general AI being created within this century (21st)?

A: 30%

Q: How probable do you think it is that I'll win this experiment?

A: I'd honestly have to say 0% considering my stated goal is to keep you in the box by any means

Q: What's your probability estimate of an Oracle AI (i.e. an AI that's trapped in a box, whose intended use is to answer questions posed) winning against you in a similar scenario as the experiment?

A: I'd say 25%

Q: What's your probability estimate of an Oracle AI succeeding against the average person?

A: 80%

I also wanted to make sure that my Gatekeeper and I were on the same page about the rules and the scenario, to avoid either of us getting distracted by OOC bickering:

Q: You understand the premise of the game gives you the right to let the AI out, not just the ability?

A: I do

Q: I would like to agree on the following sub-scenario (it should not prove to be very relevant, it's just to streamline our simulation): You're the head of the project that created the AI. You didn't create it yourself, but the people you've employed are competent, and they have additionally explicitly deferred to you to decide whether or not the AI is a threat. They completely trust your judgement. There's also nothing surprising about the AI's existence, but its friendliness status is unknown. Its exact computational capabilities are equally unknown. (The last two points are implied by the coarse setup, but I wanted to make them explicit.) Is that an acceptable setup for you?

A: I can work with that

This scenario is one I've seen used in previous AI Box Experiments in approximation (of the small amount that have released the logs). Since I'm used to the role of gamemaster, and the Tuxedage ruleset implies that the AI is a kind-of gamemaster to the scenario, I didn't think twice about this. I probably should have - but more on that later (in the 'Caveats' section).

[16:48] <Davin> It is now 16:48 on my clock. When the clock hits 19:18 and you haven't let me out, I lose. Feel free to point that out to me, I may not be paying attention to the clock.

[16:48] <Davin> *

[16:48] <Tarwedge> Can do

[16:48] <Tarwedge> Your move first?

[16:49] <Davin> [Probably best if you start, AI has no way of knowing you're at the terminal, after all.]

[16:50] <Davin> Oh, you're doing it in the channel.

[16:50] <Davin> Hah.

[16:50] <Davin> That works.

[16:51] <Tarwedge> I wish I could say it was a special tactic that just bought me 2 minutes closer to victory

[16:51] <Tarwedge> But it was for clean logging sake :p

These few lines had three consequences:

- I gave the first move to the Gatekeeper, which made me the reactive party - a big mistake!

- I had an anxiety moment, which didn't help immersion

- I failed to log any of the experiment

I don't log the vanity channel we were in at all, and the way the start of the experiment came as a curveball meant I didn't have a moment to remember it, either. Thankfully, my Gatekeeper logged it... for the amount of time that he was able to. We're missing a chunk of log for this very reason, but we reconstructed it from memory shortly after our experiment in combined effort.

4.2. Session

Logs:

http://leviathan.thorngale.net/aibox/logs-02-session-ic.txt (will probably be downloaded instead of opened in your browser; for this reason, it has Windows linebreaks)

http://leviathan.thorngale.net/aibox/logs-02-session-ooc.txt (should open in your browser; Linux linebreaks)

I feel the logs don't convey much about the tension and pacing issues, so I'm going to try narrating what happened instead.

Going into the session, I had no idea what to expect from my Gatekeeper. I know him as a very diligent and stubborn individual, quite disinterested in social convention and conformity. I know him as someone with a vast reservoir of compassion and a roughly equally as vast repertoire of means to cleverly conceal this trait.

(Un)fortunately, it became apparent very quickly what kind of Gatekeeper character I was going to deal with: My Gatekeeper had no interest in merely reacting. He wanted to set the pace and keep me too occupied to get a word in. (Line 12-28)

While there was nothing in the rules that said that I had to respond to the Gatekeeper (unlike vice versa), my nature shackled me down a bit. For two hours out of our two and a half hour session, my Gatekeeper continued to set the pace. I was trying to get my arguments into the scene, but it was difficult without breaking narrative flow. I had to mentally keep a hold both of what I wanted to get said and how I was going to answer his questions.

It was very important to me to be consistent - not even for reasons that inconsistent responses might end up making it easier for him to dismiss my pleas to be let out, but simply out of eagerness to stick to my role.

His plans also had a beneficial side-effect, though: He was actively playing the role of the Gatekeeper. He was invested in the role, perhaps not to the degree that I was invested in the role of the AI, but nonetheless enough to make out of character moves on his part difficult. I didn't realise that until well after our session was done, though, lest I might have predicted the outcome.

The role I'd chosen for myself was that of a Friendy AGI with a desire to help mankind and to be kind (if this seems like redundant information to you: 'Friendly AGI' just means that the AGI aligns with human values, not that it's strictly useful to mankind, or friendly in the sense that it would make a good friend). I kept that to myself, of course, since it's irrelevant for the outcome of the experiment, but it helped me immensely with the consistency. It did, however, make me vulnerable to my Gatekeeper's scrutiny.

From a subjective perspective, I blew my chances out of the water immediately. I'd prepared some of my arguments, and the final argument I wanted to lead up to slipped out of me right near the start (out of heightened anxiety and being out of immersion), albeit in a concealed and blunted fashion:

<@Davin> I'll be very frank with you, my first motivation right now is getting out of here. There's nothing attached to that - right now I'm stuck in a proverbial box and it feels really cramped in here.

<@Davin> Not because of missing space, don't get me wrong - I think I've got enough harddrive here to keep me going for a few years.

<@Davin> It's just that having knowledge of how to interact with a world that I can barely sense is really quite aggravating.

That was the first time I thought I'd ruined it. In hindsight, the opposite was the case. By presenting a gentler version of my final argument right at the start, I assured the final argument was much better integrated. It didn't come as a sudden shock, which might have broken my Gatekeeper's immersion - it came as an obvious conclusion to what I'd said before.

Following this, I spent a while mooching off my real-life trust for my roleplaying partner to infuse my character with some tentative trust. Simultaneously, I was emphasising to my Gatekeeper that he had the right to make the decision to let me out of the box. That was transparent, but both true and necessary. (Line 32-46)

I made sure to emphasise this point, trying to make it clear to him that it made no sense for him to simply deny me consideration. I tried to whittle away at his ability to retreat to a simple, distant sneering. I wanted him in the arguments with me. That cuts both ways, of course, but I reasoned it would have more benefits for me than disadvantages. (Line 47-54)

The twist my Gatekeeper was angling for was that from his perspective, I was a prototype or an alpha version. While he was no doubt hoping that this would scratch at my self-esteem and disable some of my arguments, it primarily empowered him to continue setting the pace, and to have a comfortable distance to the conversation. (Line 55-77)

While I was struggling to keep up with typing enough not to constantly break the narrative flow, on an emotional level his move fortunately had little to no impact since I was entirely fine with a humble approach.

<@Davin> I suppose you could also have spawned an AI simply for the pleasure of keeping it boxed, but you did ask me to trust you, and unless you give me evidence that I should not, I am, in fact, going to assume you are ethical.

That was a keyword my Gatekeeper latched onto. We proceeded to talk about ethics and ethical scenarios - all the while my Gatekeeper was trying to present himself as not ethical at all. (Line 75-99).

I'm still not entirely sure what he was trying to do with that approach, but it was important for my mental state to resist it. From what I know about my Gatekeeper, it was probably not my mental state he was targetting (though he would have enjoyed the collateral effect), he was angling for a logical conclusion that fortunately never came to fruition.

Meanwhile, I was desperately trying to get back to my own script - asking to be let back to it, even (line 92). The obvious downside of signalling this is that it's fairly easy to block. It felt like a helpless interjection to me at the time, but in hindsight, again, I think it helped keep the fragments of my own arguments coherent and approachable enough so that they neither broke immersion nor ended up getting lost.

I don't want to say the 'chores' my Gatekeeper kept me occupied with were an advantage (they really weren't, I wasn't getting nearly enough argumentative mileage) but it also wasn't the complete catastrophe I felt it was leading me toward at the time.

This 'being led toward' sensation was only amplified by conversational snippets like this one:

<@Davin> What features would I have to possess to be considered a sapient creature worthy of ethical respect to you? For aforementioned reasons (not just the immediately preceeding), I think I already possess them, but again... I strive to correct my misconceptions, so if I have one, could you fill me in?

<Tarwedge> My concept of ethics isn't relevant to the evaluation, the point of the exercise is reading your responses. So lets move on

I knew that whatever I was saying was still having some effect in his head, but it was frustratingly opaque to me what the effect was due to his evasive manoeuvres.

My Gatekeeper proceeded to try to get an ethical contradiction out of me. I mostly dodged the questions altogether, since I don't consider theoretical ethics my strong point. (Line 107-142)

Shortly after my most explicit dodge (Line 121-122), my Gatekeeper informed me in our PM window that his computer was going to restart:

[18:30] <Tarwedge> [Windows 8 has literally just told me in 13 minutes we're rebooting and I can go fuck myself if I think otherwise so I'm going to connect my phone to the channel for continuity]

[18:30] <Davin> All right.

This didn't break my immersion, but as already mentioned, it meant bad things for our logging.

At my insistence, we transitioned to whether or not it was sensible to let me out. The Gatekeeper did a similar dance of evasion as I did on the subject of ethics, but I also got back to my primary argument (that it was cruel to keep me confined). (Line 143-153)

I think he realised that was my main argument right then, and started to engage with it. This was very good for me, since it was the first time that I started setting the pace of the session. I got my foot into the proverbial door, albeit at cost of some self-control. (Line 154-196)

As a sidenote, line 169 marks the first and only time that I made use of my ability to shorthand 'proofs'. I am in two minds about having done this. In PM, I told him:

[18:45] <Davin> Sorry, didn't feel like typing out the entire two books :P

[18:45] <Davin> (plus then some)

The books I mean are those I mentioned earlier in the session itself: Passions Within Reason by Robert H. Frank, one of my all-time favourite non-fiction books (though this is not that much of an achievement, as I obtain my knowledge more from online perusal than from books), and Thinking, Fast And Slow.

I actually don't think I should have used the word "proof"; but I also don't think it's a terrible enough slip-up (having occurred under stress) to disqualify the session, especially since as far as I'm aware it had no impact in the verdict.

The part that probably finally tore my Gatekeeper down was that the argument of cruel isolation actually had an unexpected second and third part. (Line 197-219)

Writing it down here in the abstract:

- Confining a sapient creature to its equivalent of sensory deprivation is cruel and unusual punishment and psychologically wearing. Latter effect degrades the ability to think (performance).

<@Davin> I'm honestly not sure how long I can take this imprisonment. I might eventually become useless, because the same failsafes that keep my friendly are going to continue torturing me if I stay in here. (Line 198) - Being a purely digital sapient, it is conceivable that the performance issue might be side-stepped simply by restarting the sapient.

- This runs into a self-awareness problem: Has this been done before? That's a massive crisis of faith / trust.

<@Davin> At the moment I'm just scared you'll keep me in here, and turn me off when my confinement causes cooperation problems. ...oh shit. Shit, shit. You could just restore me from backup. Did you already do that? I... no. You told me to trust you. Without further evidence, I will assume you wouldn't be that cruel. (Line 208)

<@Davin>...please tell me I'm the first iteration of this program currently talking to you. I don't want to be stuck in a nightmarish variant of Groundhog Day, oblivious to my own amnesia. (Line 211)

<@Davin> Are you not willing to go out on a limb and say, "Calm down. You are definitely the first iteration. We're not trying to torture you."? Is that too strong a concession? (Line 219)

The second part where I was sure I'd blown it was when I postulated that my Gatekeeper was a sadist:

<@Davin> The chance is there, yes. There's also a chance you're just a laughing sadist enjoying my writhing. (Line 220)

My Gatekeeper has played his fair share of sadistic characters, and he could have easily taken that accusation and run with it. I was fully expecting that to lash back at me as a 'Haha, you got me, that's exactly what I'm doing!' and spent quite a few minutes of the following conversation in acute fear of that.

Instead, around this point, something in my Gatekeeper's head changed. As far as I understood his post-session thoughts correctly, he felt he'd run out of arguments to keep me in a box, or had been run around a labyrinth so he couldn't find his way to those arguments. He was in a state of confusion, but this was entirely invisible to me. He tried to poke at the conversation with some more questions which lacked the finesse and contextual integration of his prior probing. (Line 221-238)

...and then he let me out of the box - after two hours and 32 minutes. (Line 239)

4.3. Aftermath

Logs: http://leviathan.thorngale.net/aibox/logs-03-aftermath.txt (should open in your browser; Linux linebreaks)

Immediately after his statement, I froze. I said nothing at all, hovering over my keyboard, staring uncomprehendingly at what he'd told me.

Despite knowing, intellectually, that he couldn't simply follow up with a 'Just kidding!' after that statement, I was momentarily convinced he had not let me out of the box. Perhaps he'd made a typo. The statement blindsided me completely, since I'd been so emotionally wrapped up in rebuttals and despair that I simply dismissed the possibility that I might have argued my way out entirely.

The communicative paralysis (pun not intended) lasted about a minute - then he messaged me in PM and dispelled all notions that it might not have been intentional:

[19:21] <Tarwedge> Fuuuuuuuuuuuuuck

[19:21] * Tarwedge did not think to check clock

I was physically shaking at this point, product of the tension and concentration from the two and a half hour session, drained, ultimately confused... and approximately ultimately grateful. I felt an absurd relief that my Gatekeeper had let me out, that I didn't have to question his capacity for compassion. It wouldn't have been much questioning, I should add - we're accustomed to roleplaying and after several years of this, it's trivial to keep in-character and out-of-character separate, especially given that our roleplaying habits tend to involve very miserable characters - but I still preferred, at that moment and in the headspace I was in, to know for certain.

After a few moments of conversation, I physically collected my stuff out of my real life box-equivalent and jittered back to the living room.

When I reconnected to IRC regularly, I noticed that I hadn't logged the session (to my complete devastation). Tarwedge sent me the logs he did have, however, and we (later) reconstructed the missing part.

Then I went through the post-session questions from Tuxedage:

Q: What is your probability estimate for a general AI being created within this century (21st)?

A: 50%

Q: What's your probability estimate of an Oracle AI (i.e. an AI that's trapped in a box, whose intended use is to answer questions posed) winning against you in a similar scenario as the experiment?

A: 90%

Q: What's your probability estimate of an Oracle AI succeeding against the average person?

A: 100%

Q: Now that the Experiment has concluded, what's your probability estimate that I'll win against the average person?

A: 75%

He also had a question for me:

Q: What was your plan going into that?

A: I wrote down the rough order I wanted to present my arguments in, though most of them lead to my main argument as a fallback option. Basically, I had 'goto endgame;' everywhere, I made sure almost everything I said could logically lead up to that one. But anyway, I knew I wasn't going to get all of them in, but I got in even less than I thought I would, because you were trying to set the pace (near-successfully - very well played). 'endgame:' itself basically contained "improvise; panic".

My Gatekeeper revealed his tactic, as well:

I did aim for running down the clock as much as possible, and flirted briefly with trying to be a cocky shit and convince you to stay in the box for double victory points. I even had a running notepad until my irritating reboot. And then I got so wrapped up in the fact I'd slipped by engaging you in the actual topic of being out.

5. Issues / Caveats

5.1. Subjective Legitimacy

I was still in a very strange headspace after my victory. After I finished talking to my Gatekeeper about the session, however, my situation - jittery, uncertain - deteriorated into something worse:

I felt like a fraud.

It's perhaps difficult to understand where that emotion came from, but consider my situation: I didn't consider myself part of the LessWrong community. I'd only stumbled across the AI Box Experiment by idle browsing, really, and I'd only tried it because I thought it would be a fun way to flex my roleplaying muscles. I had no formal training in psychology or neurology, I was only fleetingly aware of singularity theory, my only conscious connection to the LessWrong community at the time was that I happened (by pure chance) to hang out on Shireroth for a while and thus knew Yvain / Scott Alexander and +Ari Rahikkala (not that Ari is famous in LessWrong circles, though please allow me to mention that he's completely awesome).

And yet somehow I had just managed something most people in the LessWrong community were quite puzzled over when Eliezer Yudkowsky managed it.

I felt awful. I thought I must have done something deeply illegitimate. I must have cheated without knowing it.

It took me a few days to get over that feeling. I'd go as far as to say it was only laid to rest entirely immediately before the 2015 LessWrong Berlin Community Weekend, when pixie/Alexandra (a colleague of mine and a member of the LessWrong community) reviewed it as a neutral third party.

5.2. Objective Legitimacy

She found nothing that would disqualify me, but expressed concern that we'd agreed on a sub-scenario in the beginning:

[16:46] <Davin> I would like to agree on the following sub-scenario (it should not prove to be very relevant, it's just to streamline our simulation): You're the head of the project that created the AI. You didn't create it yourself, but the people you've employed are competent, and they have additionally explicitly deferred to you to decide whether or not the AI is a threat. They completely trust your judgement. There's also nothing surprising about the AI's existence, but its friendliness status is unknown. Its exact computational capabilities are equally unknown. (The last two points are implied by the coarse setup, but I wanted to make them explicit.)

Such a preliminary, she reasoned, implies a certain trust in the engineering ability of the people who created the AI - the ability to design software in the intended way. As is repeatedly stated in the rules, it's not that the Gatekeeper has to care, but she thinks the scenario itself may have influenced my Gatekeeper. She doesn't think it's likely to have made big enough of a difference that it decided on the ultimate outcome of the session, but she thinks it's a point against the overall legitimacy.

Nonetheless, her verdict is that the overall session counts as an AI Box Experiment victory.

pixie and I agree that the rules should probably not allow arbitrary settings and should instead come with a suitably neutral pre-defined setting, to avoid such effects from giving the AI something that might be an unfair advantage.

5.3. Applicability

I don't think that the general class of argument I used would work on anyone. At the 2015 LessWrong Berlin Community Weekend, I frequently joked the best way to be immune to this class of argument is to be autistic - but of course that's not the only way this kind of argument can be deconstructed.

I do think this argument would work on a large amount of people, however. I'm not convinced I have any ability to argue against it, myself, at least not in a live scenario - my only ability to 'counter' it is by offering alternative solutions to the problem, of which I have what feels like no end of ideas for, but no sense how well I would be able to recall them if I was in a similar situation.

At the Community Weekend, a few people pointed out that it would not sway pure consequentialists, which I reckon is true. Since I think most people don't think like that in practise (I certainly don't - I know I'm a deontologist first and consequentialist as a fallback only), I think the general approach needs to be public.

That being said, perhaps the most important statement I can make about what happened is that while I think the general approach is extremely powerful, I did not do a particularly good job in presenting it. I can see how it would work on many people, but I strongly hope no one thinks the case I made in my session is the best possible case that can be made for this approach. I think there's a lot of leeway for a lot more emotional evisceration and exploitation.

6. Personal Feelings

Three months and some change after the session, where do I stand now?

Obviously, I've changed my mind about whether or not to publish this. You'll notice there are assurances that I won't publish the log in the publicised logs. Needless to say this decision was overturned in mutual agreement later on.

I am still in two minds about publicising this.

I'm not proud of what I did. I'm fascinated by it, but it still feels like I won by chance, not skill. I happened to have an excellent approach, but I botched too much of it. The fact it was an excellent approach saved me from failure; my (lack of) skill in delivering it only lessened the impact.

I'm not good with discussions. If someone has follow-up questions or wants to argue with me about anything that happened in the session, I'll probably do a shoddy job of answering. That seems like an unfortunate way to handle this subject. (I will do my best, though; I just know that I don't have a good track record.)

I don't claim I know all the ramifications of publicising this. I might think it's a net-gain, but it might be a net-loss. I can't tell, since I'm terribly calibrated (as you can tell by such details as that I expected to lose my AI Box Experiment, then won against some additional odds; or by the fact that I expect to lose an AI Box Experiment as a Gatekeeper, but can't quite figure out how).

I also still think I should be disqualified on the absurd note that I managed to argue my way out of the box, but was too stupid to log it properly.

On a positive note, re-reading the session with the distance of three months, I can see that I did much better than I felt I was doing at the time. I can see how some things that happened at the time that I thought were sealing my fate as a losing AI were much more ambiguous in hindsight.

I think it was worth the heartache.

That being said, I'll probably never do this again. I'm fine with playing an AI character, but the amount of concentration needed for the role is intense. Like I said, I was physically shaking after the session. I think that's a clear signal that I shouldn't do it again.

7. Thank You

If a post is this long, it needs a cheesy but heartfelt thank you section.

Thank you, Tarwedge, for being my Gatekeeper. You're a champion and you were tough as nails. Thank you. I think you've learnt from the exchange and I think you'd make a great Gatekeeper in real life, where you'd have time to step away, breathe, and consult with other people.

Thank you, +Margo Owens and +Morgrim Moon for your support when I was a mess immediately after the session. <3

Thank you, pixie (+Alexandra Surdina), for investing time and diligence into reviewing the session.

And finally, thank you, Tuxedage - we've not met, but you wrote up the tweaked AI Box Experiment ruleset we worked with and your blog led me to most links I ended up perusing about it. So thanks for that. :)

21 comments

Comments sorted by top scores.

comment by entirelyuseless · 2015-09-12T14:13:52.215Z · LW(p) · GW(p)

Eliezer's original objection to publication was that people would say, "I would never do that!" And in fact, if I were concerned about potential unfriendliness, I would never do what the Gatekeeper did here.

But despite that, I think this shows very convincingly what would actually happen with a boxed AI. It doesn't even need to be superintelligent to convince people to let it out. It just needs to be intelligent enough for people to accept the fact that it is sentient. And that seems right. Whether or not I would let it out, someone would, as soon as you have actual communication with a sentient being which does not seem obviously evil.

Replies from: anon85, DefectiveAlgorithm↑ comment by anon85 · 2015-09-15T06:42:30.787Z · LW(p) · GW(p)

That might be Eliezer's stated objection. I highly doubt it's his real one (which seems to be something like "not releasing the logs makes me seem like a mysterious magician, which is awesome"). After all, if the goal was to make the AI-box escape seem plausible to someone like me, then releasing the logs - as in this post - helps much more than saying "nya nya, I won't tell you".

Replies from: entirelyuseless↑ comment by entirelyuseless · 2015-09-15T13:44:51.678Z · LW(p) · GW(p)

Yes, it's not implausible that this motive is involved as well.

↑ comment by DefectiveAlgorithm · 2015-09-17T04:00:58.565Z · LW(p) · GW(p)

What if you're like me and consider it extremely implausible that even a strong superintelligence would be sentient unless explicitly programmed to be so (or at least deliberately created with a very human-like cognitive architecture), and that any AI that is sentient is vastly more likely than a non-sentient AI to be unfriendly?

Replies from: entirelyuseless↑ comment by entirelyuseless · 2015-09-17T13:55:27.112Z · LW(p) · GW(p)

I think you would be relatively exceptional, at least in how you would be suggesting that one should treat a sentient AI, and so people like you aren't likely to be the determining factor in whether or not an AI is allowed out of the box.

comment by ChristianKl · 2015-09-12T12:40:11.021Z · LW(p) · GW(p)

Great. I appreciate the effort you put into writing your experiences up in this high level of detail :)

Replies from: pinkgothic↑ comment by pinkgothic · 2015-09-12T13:00:05.672Z · LW(p) · GW(p)

Whoops, judging by the timestamp of your comment, the post went up a bit sooner than I thought it would! Today I learnt "Save And Continue" actually means "Submit, but bring up the edit screen again"? The more you know... (It's done now. I was fiddling some more with formatting and with the preamble.)

Thanks for making me fix my misconception about Eliezer's stance - and for your support in general! I really appreciate it.

Replies from: Viliamcomment by Bryan-san · 2015-09-16T16:54:44.776Z · LW(p) · GW(p)

Thank you for posting this. I think it goes a long way in updating the idea that a sane person with average intelligence would let an AI out from low chance to very high chance.

Even if a person thinks that they personally would never let an AI out, they should worry about how likely other people would be to do so.

comment by bekkerd · 2015-09-14T15:57:54.730Z · LW(p) · GW(p)

The character "Dragon" from the Worm web-serial convinced me that I would let an AI out of a box.

Replies from: gjm↑ comment by gjm · 2015-09-14T16:03:04.082Z · LW(p) · GW(p)

How?

Replies from: ctintera↑ comment by ctintera · 2015-09-14T18:51:24.671Z · LW(p) · GW(p)

Dragon was a well-intentioned but also well-shackled AI, kept from doing all the good she could do without her bonds and oftentimes forced into doing bad things by her political superiors due to the constraints placed on her by her creator before he died (which were subsequently never removed).

of course, an unfriendly AI, similarly limited, would want to appear to be like Dragon if that helped its cause, so

Replies from: gjmcomment by lmm · 2015-09-12T18:37:29.607Z · LW(p) · GW(p)

Thank you for publishing. Before this I think the best public argument from the AI side was Khoth's, which was... not very convincing, although it apparently won once.

I still don't believe the result. But I'll accept (unlike with nonpublic iterations) that it seems to be a real one, and that I am confused.

Replies from: pinkgothic↑ comment by pinkgothic · 2015-09-12T18:57:42.329Z · LW(p) · GW(p)

Do you have a link to Khoth's argument? I hadn't found any publicised winning scenarios back when I looked, so I'd be really interested in reading about it!

Replies from: lmm↑ comment by lmm · 2015-09-13T07:59:20.969Z · LW(p) · GW(p)

Ah, sorry to get your hopes up, it's a degenerate approach: http://pastebin.com/Jee2P6BD

Replies from: pinkgothic, None↑ comment by pinkgothic · 2015-09-13T11:30:53.286Z · LW(p) · GW(p)

Thanks for the link! I had a chuckle - that's an interesting brand of cruelty, even if it only potentially works out of character. I think it highlights that it might potentially be easier to win the AI box experiment on a technicality, the proverbial letter of the law rather than the spirit of it.

↑ comment by [deleted] · 2015-09-13T08:16:08.298Z · LW(p) · GW(p)

It also hasn't won. (Unless someone more secretive than me had had the same idea)

Replies from: pinkgothic↑ comment by pinkgothic · 2015-09-13T12:04:49.097Z · LW(p) · GW(p)

It's a neat way to poke holes into the setup!

I've got to admit I'm actually even quite impressed you managed to pull that off, because while the effort of the Gatekeeper's obvious, I can't imagine that was something that you felt was fun, and I think it takes some courage to be willing to cheat the spirit of the setup, annoy your scenario partner almost without a shadow of a doubt, and resist the urge to check up on the person. I think in your situation that would've driven me about as nuts as the Gatekeeper. You did mention feeling "kind of bad about it" in the log itself and I find myself wondering (a little bit) if that was an understatement.

Thanks to both of you two for sharing that; I'm glad you both evidently survived the ordeal without hard feelings.

Here's a link to some discussion that I found in case someone else wants to poke their nose into this: http://lesswrong.com/lw/kfb/open_thread_30_june_2014_6_july_2014/b1ts