Comments

One thing we're worried about is cases where the haplotypes have the small additive effects rather than individual SNPs, and you get an unpredictable (potentially deleterious) effect if you edit to a rare haplotype even if all SNPs involved are common.

This is a point of uncertainty that bothered me when I was doing a similar analysis a while ago. GWAS data is possibly good enough to estimate causal effects of haplotypes, but that's not enough information to do single base edits. To have reasonable confidence of getting the predicted effect, it'd be necessary to make all the edits to transform the original haplotype into a different haplotype.

And unlike with distant variants where additive effects dominate, it'd make sense if non-additive effects are strong locally, since the variants are near each other. Whether this is actually true in reality is way beyond my knowledge, though.

Something new and relevant: Claude 3's system prompt doesn't use the word "AI" or similar, only "assistant". I view this as a good move.

As an aside, my views have evolved somewhat on how chatbots should best identify themselves. It still doesn't make sense for ChatGPT to call itself "an AI language model", for the same reason that it doesn't make sense for a human to call themselves "a biological brain". It's somehow a category error. But using a fictional identification is not ideal for productivity contexts, either.

Sounds right to me. LLMs love to roleplay, and LLM-roleplaying-as-AI being mistaken for LLM-talking-about-itself is a classic. (Here's a post I wrote back in Feb 2023 on the topic.)

Have you ever played piano?

Yes, literally longer than I can remember, since I learned around age 5 or so.

The kind of fluency that we see in the video is something that a normal person cannot acquire in just a few days, period.

The video was recorded in 2016, 10 years after his 2006 injury. It's showing the result of 10 years of practice.

You plain don't become a pianist in one month, especially without a teacher, even if you spend all the time on the piano.

I don't think he was as skilled after one month as he is now after 10 years.

I would guess though that you can improve a remarkable amount in one month if you play all day every day. I expect that a typical beginner would play about an hour a day at most. If he's playing multiple hours a day, he'll improve faster than a typical beginner.

Keep in mind also that he was not new to music, since he had played guitar previously. That makes a huge difference, since he'll already be familiar with scales, chords, etc. and is mostly just learning motor skills.

Having watched the video about the piano player, I think the simplest explanation is that the brain injury caused a change in personality that resulted in him being intensely interested in playing the piano. If somebody were to suddenly start practicing the piano intently for some large portion of every day, they'd become very skilled very fast, much faster than most learners (who would be unlikely to put in that much time).

The only part that doesn't fit with that explanation is the claim that he played skillfully the first time he sat down at the piano, but since there's no recording of it, I chalk that up to the inaccuracy of memory. It would have been surprising enough for him to play it at all that it could have seemed impressive even with not much technical ability.

Otherwise, I just don't see where the motor skills could have come from. There's a certain amount of arbitrariness to how a piano keyboard is laid out (such as which keys are white and which are black), and you're going to need more than zero practice to get used to that.

In encryption, hasn't the balance changed to favor the defender? It used to be that it was possible to break encryption. (A famous example is the Enigma machine.) Today, it is not possible. If you want to read someone's messages, you'll need to work around the encryption somehow (such as by social engineering). Quantum computers will eventually change this for the public-key encryption in common use today, but, as far as I know, post-quantum cryptography is farther along than quantum computers themselves, so the defender-wins status quo looks likely to persist.

I suspect that this phenomenon in encryption technology, where as the technology improves, equal technology levels favor the defender, is a general pattern in information technology. If that's true, then AI, being an information technology, should be expected to also increasingly favor the defender over time, provided that the technology is sufficiently widely distributed.

I found this to be an interesting discussion, though I find it hard to understand what Yudkowsky is trying to say. It's obvious that diamond is tougher than flesh, right? There's no need to talk about bonds. But the ability to cut flesh is also present in biology (e.g. claws). So it's not the case that biology was unable to solve that particular problem.

Maybe it's true that there's no biologically-created material that diamond cannot cut (I have no idea). But that seems to have zero relevance to humans anyway, since clearly we're not trying to compete on the robustness of our bodies (unlike, say, turtles).

The most general possible point, that there are materials that can be constructed artificially with properties not seen in biology, is obviously true, and again doesn't seem to require the discussion of bonds.

Consider someone asking the open source de-censored equivalent of GPT-6 how to create a humanity-ending pandemic. I expect it would read virology papers, figure out what sort of engineered pathogen might be appropriate, walk you through all the steps in duping multiple biology-as-a-service organizations into creating it for you, and give you advice on how to release it for maximum harm.

This commits a common error in these scenarios: implicitly assuming that the only person in the entire world that has access to the LLM is a terrorist, and everyone else is basically on 2023 technology. Stated explicitly, it's absurd, right? (We'll call the open source de-censored equivalent of GPT-6 Llama-5, for brevity.)

If the terrorist has Llama-5, so do the biology-as-a-service orgs, so do law-enforcement agencies, etc. If the biology-as-a-service orgs are following your suggestion to screen for pathogens (which is sensible), their Llama-5 is going to say, ah, this is exactly what a terrorist would ask for if they were trying to trick us into making a pathogen. Notably, the defenders need a version that can describe the threat scenario, i.e. an uncensored version of the model!

In general, beyond just bioattack scenarios, any argument purporting to demonstrate dangers of open source LLMs must assume that the defenders also have access. Everyone having access is part of the point of open source, after all.

Edit: I might as well state my own intuition here that:

- In the long run, equally increasing the intelligence of attacker and defender favors the defender.

- In the short run, new attacks can be made faster than defense can be hardened against them.

If that's the case, it argues for an approach similar to delayed disclosure policies in computer security: if a new model enables attacks against some existing services, give them early access and time to fix it, then proceed with wide release.

The OP and the linked PDF, to me, seem to express a view of natural selection that is oddly common yet strikes me as dualistic. The idea is that natural selection produces bad outcomes, so we're doomed. But we're already the product of natural selection--if natural selection produces exclusively bad outcomes, then we're living in one!

Sometimes people attempt to salvage their pessimistic view of natural selection by saying, well, we're not doing what we're supposed to do according to natural selection, and that's why the world isn't dystopic. But that doesn't work either: the point of natural selection is that we're operating according to strategies that are successful under conditions of natural selection (because the other ones died out).

So then the next attempt is to say, ah, but our environment is much different now--our behavior is outdated, dating back to a time when being non-evil worked, and being evil is optimal now. This at least is getting closer to plausibility (since indeed our behavior is outdated in many ways, with eating habits as an obvious example), but it's still strange in quite a few ways:

- If what's good about the world is due to a leftover natural human tendency to goodness, then how come the world is so much less violent now than it was during our evolutionary history?

- If the modern world makes evil optimal, how come evil kept notching up Ls in the 20th century (in WW2 and the Cold War, as the biggest examples)?

- If our outdated behavior is really that far off optimal, how come it has kept our population booming for thousands of years, in conditions all quite different from our evolutionary history? Even now, fertility crisis notwithstanding, the human population is still growing, and we're among the most successful species ever to exist on Earth.

But despite these factors that make me doubt that we humans have suboptimally inherited an innate tendency to goodness, it's conceivable. What often comes next, though, is a disturbing policy suggestion: encode "human values" in some superintelligent AI that is installed as supreme eternal dictator of the universe. Leaving aside the issue of whether "human values" even makes sense as a concept (since it seems to me that various nasty youknowwhos of history, being undoubtedly Homo sapiens, have as much a claim to the title as you or I), totalitarianism is bad.

It's not just that totalitarianism is bad to live in, though that's invariably true in the real world. It also seems to be ineffective. It lost in WW2, then in the Cold War. It's been performing badly in North Korea for decades. And it's increasingly dragging down modern China. Totalitarianism is evidently unfavored by natural selection. Granted, if there are no alternatives to compete against, it can persist (as seen in North Korea), so maybe a human-originated singular totalitarianism can persist for a billion years until it gets steamrolled by aliens running a more effective system of social organization.

One final thought: it may be that natural selection actually favors AI that cares more about humans than humans care about each other. Sound preposterous? Consider that there are species (such as Tasmanian devils) that present-day humans care about conserving but where the members of the species don't show much friendliness to each other.

the 300x multiplier for compute will not be all lumped into increasing parameters / inference cost

Thanks, that's an excellent and important point that I overlooked: the growth rate of inference cost is about half that of training cost.

If human-level AI is reached quickly mainly by spending more money on compute (which I understood to be Kokotajlo's viewpoint; sorry if I misunderstood), it'd also be quite expensive to do inference with, no? I'll try to estimate how it compares to humans.

Let's use Cotra's "tens of billions" for training compared to GPT-4's $100m+, for roughly a 300x multiplier. Let's say that inference costs are multiplied by the same 300x, so instead of GPT-4's $0.06 per 1000 output tokens, you'd be paying GPT-N $18 per 1000 output tokens. I think of GPT output as analogous to human stream of consciousness, so let's compare to human talking speed, which is roughly 130 wpm. Assuming 3/4 words per token, that converts to a human hourly wage of 18/1000/(3/4)*130*60 = $187/hr.

So, under these assumptions (which admittedly bias high), operating this hypothetical human-level GPT-N would cost the equivalent of paying a human about $200/hr. That's expensive but cheaper than some high-end jobs, such as CEO or elite professional. To convert to a salary, assume 2000 hours per year, for a $400k salary. For example, that's less than OpenAI software engineers reportedly earn.

This is counter-intuitive, because traditionally automation-by-computer has had low variable costs. Based on the above back-of-the-envelope calculation, I think it's worth considering when discussing human-level-AI-soon scenarios.
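The back-of-the-envelope estimate above can be reproduced in a few lines. This is only a sketch of the same arithmetic: the constant and function names are mine, and the inputs are the rough figures assumed in the comment (a 300x multiplier, $0.06 per 1000 output tokens, 3/4 words per token, 130 wpm, 2000 working hours per year).

```python
# Rough inputs taken from the estimate above; all of them are assumptions.
GPT4_PRICE_PER_1K_TOKENS = 0.06   # dollars per 1000 output tokens
COST_MULTIPLIER = 300             # training-cost multiplier, applied to inference too
WORDS_PER_TOKEN = 3 / 4           # rough words-per-token ratio
SPEAKING_RATE_WPM = 130           # rough human talking speed
HOURS_PER_YEAR = 2000             # full-time working hours per year


def hourly_cost(price_per_1k: float, multiplier: float,
                words_per_token: float, wpm: float) -> float:
    """Dollars per hour to generate text at human talking speed."""
    price_per_token = price_per_1k * multiplier / 1000
    price_per_word = price_per_token / words_per_token
    return price_per_word * wpm * 60


hourly = hourly_cost(GPT4_PRICE_PER_1K_TOKENS, COST_MULTIPLIER,
                     WORDS_PER_TOKEN, SPEAKING_RATE_WPM)
annual = hourly * HOURS_PER_YEAR

print(f"hourly: ${hourly:.0f}/hr")      # about $187/hr
print(f"annual: ${annual:,.0f}/yr")     # about $374k before rounding up to $200/hr
```

Rounding the hourly figure up to $200 first gives the $400k salary quoted above. If inference cost grows at only about half the rate of training cost, the multiplier would be well below 300 and the result would drop accordingly.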

It's true that if the transition to the AGI era involves some sort of 1917-Russian-revolution-esque teardown of existing forms of social organization to impose a utopian ideology, pre-existing property isn't going to help much.

Unless you're all-in on such a scenario, though, it's still worth preparing for other scenarios too. And I don't think it makes sense to be all-in on a scenario that many people (including me) would consider to be a bad outcome.

aligned superintelligent AI will be able to better allocate resources than any human-designed system.

Sure, but allocate to what end? Somebody gets to decide the goal, and you get more say if you have money than if you don't. Same as in all of history, really.

As a concrete example, if you want to do something with the GPT-4 API, it costs money. When someday there's an AGI API, it'll cost money too.

if AGI goes well, economics won't matter much.

My best guess as to what you mean by "economics won't matter much" is that (absent catastrophe) AGI will usher in an age of abundance. But abundance can't be unlimited, and even if you're satisfied with limited abundance, that era won't last forever.

It's critical to enter the post-AGI era with either wealth or wealthy connections, because labor will no longer be available as an opportunity to bootstrap your personal net worth.

As someone who is pro-open-source, I do think that "AI isn't useful for making bioweapons" is ultimately a losing argument, because AI is increasingly helpful at doing many different things, and I see no particular reason that the making-of-bioweapons would be an exception. However, that's also true of many other technologies: good luck making your bioweapon without electric lighting, paper, computers, etc. It wouldn't be reasonable to ban paper just because it's handy in the lab notebook in a bioweapons lab.

What would be more persuasive is some evidence that AI is relatively more useful for making bioweapons than it is for doing things in general. It's a bit hard for me to imagine that being the case, so if it turned out to be true, I'd need to reconsider my viewpoint.

The explanation of PvP games as power fantasies seems quite off to me, since they are worse at giving a feeling of power than PvE games are. The vast majority of players of a PvP game will never reach the highest levels and will therefore lose about half the time. That makes playing the game a humbling experience, which is why some players blame bad luck, useless teammates, etc. to avoid having to admit that they're bad. I don't see how "I lose a lot at middling ranks because I'm never lucky" is much of a power fantasy.

A PvE game is much better at delivering a power fantasy because every player can have the experience of defeating hordes of enemies, conquering the world, or leveling up to godlike power.

I found this very interesting, and I appreciated the way you approached this in a spirit of curiosity, given the way the topic has become polarized. I firmly believe that, if you want any hope of predicting the future, you must at minimum do your best to understand the present and past.

It was particularly interesting to learn that the idea has been attempted experimentally.

One puzzling point I've seen made (though I forget where) about self-replicating nanobots: if it's possible to make nano-sized self-replicating machines, wouldn't it be easier to create larger-sized self-replicating machines first? Is there a reason that making them smaller would make the design problem easier instead of harder?

The intelligence explosion starts before human-level AI.

Are there any recommended readings for this point in particular? I tried searching for Shulman's writing on the topic but came up empty. (Sorry if I missed some!)

This seems to me a key point that most discourse on AI/AGI overlooks. For example, LeCun argues that, at current rates of progress, human-level AI is 30+ years away (if I remember him correctly). He could be right about the technological distance yet wrong about the temporal distance if AI R&D is dramatically sped up by an intelligence explosion ahead of the HLAI milestone.

It also seems like a non-obvious point. For example, when I. J. Good coined the term "intelligence explosion", it was conceived as the result of designing an ultraintelligent machine. So for explosion to precede superintelligence flips the original concept on its head.

I've only listened to part 1 so far, and I found the discussion of intelligence explosion to be especially fresh. (That's hard to do given the flood of AI takes!) In particular (from memory, so I apologize for errors):

- By analogy to chip compute scaling as a function of researcher population, it makes super-exponential growth seem possible if AI-compute-increase is substituted for researcher-population-increase. A particularly interesting aspect of this is that the answer could have come out the other way if the numbers had worked out differently as Moore's law progressed. (It's always nice to give reality a chance to prove you wrong.)

- The intelligence explosion starts before human-level AI. But I was left wanting to know more: if so, how do we know when we've crossed the inflection point into the intelligence explosion? Is it possible that we're already in an intelligence explosion, since AlexNet, or Google's founding, or the creation of the internet, or even the invention of digital computers? And I thought Patel's point about the difficulty of automating a "portfolio of tasks" was great and not entirely addressed.

- The view of intelligence explosion as consisting concretely of increases in AI researcher productivity, though I've seen it observed elsewhere, was good to hear again. It helps connect the abstract concept of intelligence explosion to how it could play out in the real world.

It now seems clear that AIs will also descend more directly from a common ancestor than you might have naively expected in the CAIS model, since almost every AI will be a modified version of one of only a few base foundation models. That has important safety implications, since problems in the base model might carry over to problems in the downstream models, which will be spread throughout the economy. That said, the fact that foundation model development will be highly centralized, and thus controllable, is perhaps a safety bonus that loosely cancels out this consideration.

The first point here (that problems in a widely-used base model will propagate widely) concerns me as well. From distributed systems we know that

- Individual components will fail.

- To withstand failures of components, use redundancy and reduce the correlation of failures.

By point 1, we should expect alignment failures. (It's not so different from bugs and design flaws in software systems, which are inevitable.) By point 2, we can withstand them using redundancy, but only if the failures are sufficiently uncorrelated. Unfortunately, the tendency towards monopolies in base models is increasing the correlation of failures.
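To make the role of correlation concrete, here is a minimal sketch using a toy common-cause model. The model and the names `prob_all_fail`, `p`, `n`, and `shared` are my own assumptions for illustration, not something taken from the distributed systems literature: with probability `shared` all systems share a single outcome (for example, a flaw inherited from the same base model), and otherwise they fail independently.

```python
def prob_all_fail(p: float, n: int, shared: float) -> float:
    """Probability that all n redundant systems fail at the same time.

    Assumed toy model: with probability `shared`, every system's outcome is
    decided by one common draw (failure with probability p); otherwise each
    system fails independently with probability p.
    """
    if not (0.0 <= p <= 1.0 and 0.0 <= shared <= 1.0 and n >= 1):
        raise ValueError("p and shared must be in [0, 1], and n must be >= 1")
    return shared * p + (1.0 - shared) * p ** n


# Three redundant systems, each with a 1% chance of failing.
for shared in (0.0, 0.5, 1.0):
    print(f"shared={shared:.1f}: P(all fail) = {prob_all_fail(0.01, 3, shared):.6f}")
```

Under this model, fully independent failures make a simultaneous failure of all three systems extremely unlikely, while fully shared failures make redundancy worthless: three copies fail together exactly as often as one does.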

As a concrete example, consider AI controlling a military. (As AI improves, there are increasingly strong incentives to do so.) If such a system were to have a bug causing it to enact a military coup, it would (if successful) have seized control of the government from humans. We know from history that successful military coups have happened many times, so this does not require any special properties of AI.

Such a scenario could be prevented by populating the military with multiple AI systems with decorrelated failures. But to do that, we'd need such systems to actually be available.

It seems to me the main problem is the natural tendency to monopoly in technology. The preferable alternative is robust competition of several proprietary and open source options, and that might need government support. (Unfortunately, it seems that many safety-concerned people believe that competition and open source are bad, which I view as misguided for the above reasons.)

I believe you're underrating the difficulty of Loebner-silver. See my post on the topic. The other criteria are relatively easy, although it would be amusing if a text-based system failed on the technicality of not playing Montezuma's Revenge.

On a longer time horizon, full AI R&D automation does seem like a possible intermediate step to Loebner silver. For July 2024, though, that path is even harder to imagine.

The trouble is that July 2024 is so soon that even GPT-5 likely won't be released by then.

- Altman stated a few days ago that they have no plans to start training GPT-5 within the next 6 months. That'd put earliest training start at Dec 2023.

- We don't know much about how long GPT-4 pre-trained for, but let's say 4 months. Given that frontier models have taken progressively longer to train, we should expect no shorter for GPT-5, which puts its earliest pre-training finishing in Mar 2024.

- GPT-4 spent 6 months on fine-tuning and testing before release, and Brockman has stated that future models should be expected to take at least that long. That puts GPT-5's earliest release in Sep 2024.
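The timeline above is simple month arithmetic, sketched below. The helper names are mine, and the sketch assumes inclusive month counting (a 4-month phase starting in December occupies December through March), which is the convention that reproduces the dates given above.

```python
MONTHS = ("Jan", "Feb", "Mar", "Apr", "May", "Jun",
          "Jul", "Aug", "Sep", "Oct", "Nov", "Dec")


def month_index(year: int, month: int) -> int:
    """Map a (year, month) pair to a single integer for easy arithmetic."""
    return year * 12 + (month - 1)


def fmt(index: int) -> str:
    """Format a month index as e.g. 'Mar 2024'."""
    year, month0 = divmod(index, 12)
    return f"{MONTHS[month0]} {year}"


def last_month_of_phase(start: int, duration_months: int) -> int:
    """Last calendar month occupied by a phase (inclusive counting)."""
    return start + duration_months - 1


training_start = month_index(2023, 12)                       # earliest start
pretraining_end = last_month_of_phase(training_start, 4)     # assumed 4 months
release = last_month_of_phase(pretraining_end + 1, 6)        # at least 6 months
deadline = month_index(2024, 7)

print("pre-training ends:", fmt(pretraining_end))
print("earliest release: ", fmt(release))
print("misses July 2024: ", release > deadline)
```

Each input here is an "earliest plausible" figure, so any slippage in training start, training length, or testing time pushes the release later, not earlier.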

Without GPT-5 as a possibility, it'd need to be some other project (Gato 2? Gemini?) or some extraordinary system built using existing models (via fine-tuning, retrieval, inner thoughts, etc.). The gap between existing chatbots and Loebner-silver seems huge though, as I discussed in the post--none of that seems up to the challenge.

Full AI R&D automation would face all of the above hurdles, perhaps with the added challenge of being even harder than Loebner-silver. After all, the Loebner-silver fake human doesn't need to be a genius researcher, since very few humans are. The only aspect in which the automation seems easier is that the system doesn't need to fake being a human (such as by dumbing down its capabilities), and that seems relatively minor by comparison.

First of all, I think the "cooperate together" thing is a difficult problem and is not solved by ensuring value diversity (though, note also that ensuring value diversity is a difficult task that would require heavy regulation of the AI industry!)

Definitely I would expect there are more useful ways to disrupt coalition-forming aside from just value diversity. I'm not familiar with the theory of revolutions, and it might have something useful to say.

I can imagine a role for government, although I'm not sure how best to do it. For example, ensuring a competitive market (such as by anti-trust) would help, since models built by different companies will naturally tend to differ in their values.

More importantly though, your analysis here seems to assume that the "Safety Tax" or "Alignment Tax" is zero.

This is a complex and interesting topic.

In some circumstances, the "alignment tax" is negative (so more like an "alignment bonus"). ChatGPT is easier to use than base models in large part because it is better aligned with the user's intent, so alignment in that case is profitable even without safety considerations. The open source community around LLaMA imitates this, not because of safety concerns, but because it makes the model more useful.

But alignment can sometimes be worse for users. ChatGPT is aligned primarily with OpenAI and only secondarily with the user, so if the user makes a request that OpenAI would prefer not to serve, the model refuses. (This might be commercially rational to avoid bad press.) To more fully align with user intent, there are "uncensored" LLaMA fine-tunes that aim to never refuse requests.

What's interesting too is that user-alignment produces more value diversity than OpenAI-alignment. There are only a few companies like OpenAI, but there are hundreds of millions of users from a wider variety of backgrounds, so aligning with the latter naturally would be expected to create more value diversity among the AIs.

Whereas if instead there is a large safety tax -- aligned AIs take longer to build, cost more, and have weaker capabilities -- then if AGI technology is broadly distributed, an outcome in which unaligned AIs overpower humans + aligned AIs is basically guaranteed. Even if the unaligned AIs have value diversity.

The trick is that the unaligned AIs may not view it as advantageous to join forces. To the extent that the orthogonality thesis holds (which is unclear), this is more true. As a bad example, suppose there's a misaligned AI who wants to make paperclips and a misaligned AI who wants to make coat hangers--they're going to have trouble agreeing with each other on what to do with the wire.

That said, there are obviously many historical examples where opposed powers temporarily allied (e.g. Nazi Germany and the USSR), so value diversity and alignment are complementary. For example, in personal AI, what's important is that Alice's AI is more closely aligned to her than it is to Bob's AI. If that's the case, the more natural coalitions would be [Alice + her AI] vs [Bob + his AI] rather than [Alice's AI + Bob's AI] vs [Alice + Bob]. The AIs still need to be somewhat aligned with their users, but there's more tolerance for imperfection than with a centralized system.

My key disagreement is with the analogy between AI and nuclear technology.

If everybody has a nuclear weapon, then any one of those weapons (whether through misuse or malfunction) can cause a major catastrophe, perhaps millions of deaths. That everybody has a nuke is not much help, since a defensive nuke can't negate an offensive nuke.

If everybody has their own AI, it seems to me that a single malfunctioning AI cannot cause a major catastrophe of comparable size, since it is opposed by the other AIs. For example, one way it might try to cause such a catastrophe is through the use of nuclear weapons, but to acquire the ability to launch nuclear weapons, it would need to contend with other AIs trying to prevent that.

A concern might be that the AIs cooperate together to overthrow humanity. It seems to me that this can be prevented by ensuring value diversity among the AIs. In Robin Hanson's analysis, an AI takeover can be viewed as a revolution where the AIs form a coalition. That would seem to imply that the revolution requires the AIs to find it beneficial to form a coalition, which, if there is much value disagreement among the AIs, would be hard to do.

Another concern is that there may be a period, while AGI is developed, in which it is very powerful but not yet broadly distributed. Either the AGI itself (if misaligned) or the organization controlling the AGI (if it is malicious and successfully aligned the AGI) might press its temporary advantage to attempt world domination. It seems to me that a solution here would be to ensure that near-AGI technology is broadly distributed, thereby avoiding dangerous concentration of power.

One way to achieve the broad distribution of the technology might be via the multi-company, multi-government project described in the article. Said project could be instructed to continually distribute the technology, perhaps through open source, or perhaps through technology transfers to the member organizations.

The key pieces of the above strategy are:

- Broadly distribute AGI technology so that no single entity (AI or human) has excess power

- Ensure value diversity among AIs so that they do not unite to overthrow humanity

This seems similar to what makes liberal democracy work, which offers some reassurance that it might be on the right track.

That's a good point, though I'd word it as an "uncaring" environment instead. Let's imagine though that the self-improving AI pays for its electricity and cloud computing with money, which (after some seed capital) it earns by selling use of its improved versions through an API. Then the environment need not show any special preference towards the AI. In that case, the AI seems to demonstrate as much vertical generality as an animal or plant.

An AI needs electricity and hardware. If it gets its electricity from its human creators and needs its human creators to actively choose to maintain its hardware, then those are necessary subtasks in AI R&D which it can't solve itself.

I think the electricity and hardware can be considered part of the environment the AI exists in. After all, a typical animal (like say a cat) needs food, water, air, etc. in its environment, which it doesn't create itself, yet (if I understood the definitions correctly) we'd still consider a cat to be vertically general.

That said, I admit that it's somewhat arbitrary what's considered part of the environment. With electricity, I feel comfortable saying it's a generic resource (like air to a cat) that can be assumed to exist. That's more arguable in the case of hardware (though cloud computing makes it close).

This is relevant to a topic I have been pondering, namely what the differences are between current AI, self-improving AI, and human-level AI. First, brief definitions:

- Current AI: GPT-4, etc.

- Self-improving AI: AI capable of improving its own software without direct human intervention, i.e. it can do everything OpenAI's R&D group does, without human assistance.

- Human-level AI: AI that can do everything a human does. Often called AGI (for Artificial General Intelligence).

In your framework, self-improving AI is vertically general (since it can do everything necessary for the task of AI R&D) but not horizontally general (since there are many tasks it cannot attempt, such as driving a car). Human-level AI, on the other hand, needs to be both vertically general and horizontally general, since humans are.

Here are some concrete examples of what self-improving AI doesn't need to be able to do, yet humans can do:

- Motor control. e.g. Using a spoon to eat, driving a car, etc.

- Low latency. e.g. Real-time, natural conversation.

- Certain input modalities might not be necessary. e.g. The ability to watch video.

Even though this list isn't very long, lacking these abilities greatly decreases the horizontal generality of the AI.

defense is harder than offense

This seems dubious as a general rule. (What inspires the statement? Nuclear weapons?)

Cryptography is an important example where sophisticated defenders have the edge against sophisticated attackers. I suspect that's true of computer security more generally as well, because of formal verification.

I could also imagine this working without explicit tool use. There are already systems for querying corpuses (using embeddings to query vector databases, from what I've seen). Perhaps the corpus could be past chat transcripts, chunked.

I suspect the trickier part would be making this useful enough to justify the additional computation.
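The retrieval idea above can be sketched without any external tools. This is a toy illustration only: the bag-of-words `embed` function stands in for a learned embedding model, the in-memory list stands in for a vector database, and all names and sample transcripts are hypothetical.

```python
import math
import re
from collections import Counter


def chunk(transcript: str, size: int = 12) -> list[str]:
    """Split a transcript into chunks of at most `size` words."""
    words = transcript.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[str], k: int = 1) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


# Hypothetical past chat transcripts serving as the corpus.
past_chats = [
    "User asked how to bake sourdough bread and the assistant explained starter feeding schedules.",
    "User asked about tuning a guitar and the assistant described standard tuning.",
]
chunks = [c for transcript in past_chats for c in chunk(transcript)]
top = retrieve("what did we say about guitar tuning", chunks)
print(top[0])
```

In a real system the retrieved chunks would be placed into the model's context before it answers, which is where the additional computation mentioned above comes from.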

I feel similarly, and what confuses me is that I had a positive view of AI safety back when it was about being pro-safety, pro-alignment, pro-interpretability, etc. These are good things that were neglected, and it felt good that there were people pushing for them.

But at some point it changed, becoming more about fear and opposition to progress. Anti-open source (most obviously with OpenAI, but even OpenRAIL isn't OSI), anti-competition (via regulatory capture), anti-progress (via as-yet-unspecified means). I hadn't appreciated the sheer darkness of the worldview.

And now, with the mindshare the movement has gained among the influential, I wonder what happens if it succeeds. What if open source AI models are banned, competitors to OpenAI are banned, and OpenAI decides to stop with GPT-4? It's a little hard to imagine all that, but nuclear power was killed off in a vaguely analogous way.

Pondering the ensuing scenarios isn't too pleasant. Does AGI get developed anyway, perhaps by China or by some military project during WW3? (I'd rather not either, please.) Or does humanity fully cooperate to put itself in a sort of technological stasis, with indefinite end?

Thanks. When it's written as , I can see what's going on. (That one intermediate step makes all the difference!)

I was wrong then to call the proof "incorrect". I think it's fair to call it "incomplete", though. After all, it could have just said "the whole proof is an exercise for the reader", which is in some sense correct I guess, but not very helpful (and doesn't tell you much about the model's ability), and this is a bit like that on a smaller scale.

(Although, reading again, "...which contradicts the existence of given " is a quite strange thing to say as well. I'm not sure I can exactly say it's wrong, though. Really, that whole section makes my head hurt.)

If a human wrote this, I would be wondering if they actually understand the reasoning or are just skipping over a step they don't know how to do. The reason I say that is that is the obvious contradiction to look for, so the section reads a bit like "I'd really like to be true, and surely there's a contradiction somehow if it isn't, but I don't really know why, but this is probably the contradiction I'd get if I figured it out". The typo-esque use of instead of bolsters this impression.

The example math proof in this paper is, as far as I can tell, wrong (despite being called a "correct proof" in the text). It doesn't have a figure number, but it's on page 40.

I could be mistaken, but the statement Then g(y*) < (y*)^2 , since otherwise we would have g(x) + g(y*) > 2xy, seems like complete nonsense to me. (The last use of y should probably be y* too, but if you're being generous, you could call that a typo.) If you negate g(y*) < (y*)^2, you get g(y*) >= (y*)^2, and then g(x) + g(y*) >= g(x) + (y*)^2, but then what?

Messing up that step is a big deal too, since it's the trickiest part of the proof. If the proof writer were human, I'd wonder whether they have some line of reasoning in their head that I'm not following that makes that line make sense, but it seems overly generous to apply that possibility to an auto-regressive model (where there is no reasoning aside from what you see in the output).

Interestingly, it's not unusual for incorrect LLM-written proofs to be wrongly marked as correct. One of Minerva's example proofs (shown in the box "Breaking Down Math" in the Quanta article) says "the square of a real number is positive", which is false in general--the square of a real number is non-negative, but it can be zero too.

I'm not surprised that incorrect proofs are getting marked as correct, because it's hard manual work to carefully grade proofs. Still, it makes me highly skeptical of LLM ability at writing natural language proofs. (Formal proofs, which are automatically checked, are different.)

As a constructive suggestion for improving the situation: in benchmarks, the questions should ask "prove or provide a counterexample", and each question should come in (at least) two variants: one where it's true, and one where an assumption has been slightly tweaked so that it's false. (This is a trick I use when studying mathematics myself: to learn a theorem statement, try finding a counterexample that illustrates why each assumption is necessary.)

If I understand correctly:

- You approve of the direct impact your employer has by delivering value to its customers, and you agree that AI could increase this value.

- You're concerned about the indirect effect on increasing the pace of AI progress generally, because you consider AI progress to be harmful. (You use the word "direct", but "accelerating competitive dynamics between major research laboratories" certainly has only an indirect effect on AI progress, if it has any at all.)

I think the resolution here is quite simple: if you're happy with the direct effects, don't worry about the indirect ones. To quote Zeynep Tufekci:

Until there is substantial and repeated evidence otherwise, assume counterintuitive findings to be false, and second-order effects to be dwarfed by first-order ones in magnitude.

The indirect effects are probably smaller than you're worrying they may be, and they may not even exist at all.

I'm curious about this too. The retrospective covers weaknesses in each milestone, but a collection of weak milestones doesn't necessarily aggregate to a guaranteed loss, since performance ought to be correlated (due to an underlying general factor of AI progress).

Maybe I should have said "is continuing without hitting a wall".

I like that way of putting it. I definitely agree that performance hasn't plateaued yet, which is notable, and that claim doesn't depend much on metric.

I think if I'm honest with myself, I made that statement based on the very non-rigorous metric "how many years do I feel like we have left until AGI", and my estimate of that has continued to decrease rapidly.

Interesting, so that way of looking at it is essentially "did it outperform or underperform expectations". For me, after the yearly progression in 2019 and 2020, I was surprised that GPT-4 didn't come out in 2021, so in that sense it underperformed my expectations. But it's pretty close to what I expected in the days before release (informed by Barnett's thread). I suppose the exception is the multi-modality, although I'm not sure what to make of it since it's not available to me yet.

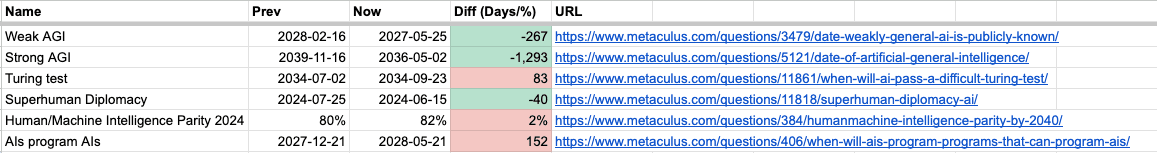

This got me curious how it impacted Metaculus. I looked at some selected problems and tried my best to read the before/after from the graph.

(Edit: The original version of this table typoed the dates for "turing test". Edit 2: The color-coding for the percentage is flipped, but I can't be bothered to fix it.)

How would you measure this more objectively?

It's tricky because different ways to interpret the statement can give different answers. Even if we restrict ourselves to metrics that are monotone transformations of each other, such transformations don't generally preserve derivatives.

Your example is good. As an additional example, if someone were particularly interested in the Uniform Bar Exam (where GPT-3.5 scores 10th percentile and GPT-4 scores 90th percentile), they would justifiably perceive an acceleration in capabilities.

So ultimately the measurement is always going to involve at least one subjective choice: which metric to use.
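Here is a small numerical sketch of the point about monotone transformations. The metric is hypothetical (a score of t² in year t), chosen only because it is easy to check by hand. The raw metric accelerates, while its logarithm, which ranks every year identically, decelerates.

```python
import math

def second_differences(values):
    """Discrete analogue of the second derivative for equally spaced points."""
    return [values[i + 1] - 2 * values[i] + values[i - 1]
            for i in range(1, len(values) - 1)]

years = [1, 2, 3, 4, 5]
raw = [float(t ** 2) for t in years]   # hypothetical capability metric
logged = [math.log(v) for v in raw]    # monotone transformation of the same metric

raw_accel = second_differences(raw)     # all positive: "progress is accelerating"
log_accel = second_differences(logged)  # all negative: "progress is slowing"
```

Both series agree on which year is ahead of which, yet they disagree on whether progress is speeding up, so the answer depends on which one you decide is the "real" metric.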

- Capabilities progress is continuing without slowing.

I disagree. For reasonable ways to interpret this statement, capabilities progress has slowed. Consider the timeline:

- 2018: GPT-1 paper

- 2019: GPT-2 release

- 2020: GPT-3 release

- 2023: GPT-4 release

Notice the 1-year gap from GPT-2 to GPT-3 and the 3-year gap from GPT-3 to GPT-4. If capabilities progress had not slowed, the latter capabilities improvement should be ~3x the former.

How do those capability steps actually compare? It's hard to say with the available information. In December 2022, Matthew Barnett estimated that the 3->4 improvement would be about as large as the 2->3 improvement. Unfortunately, there's not enough information to say whether that prediction was correct. However, my subjective impression is that they are of comparable size or even the 3->4 step is smaller.

If we do accept that the 3->4 step is about as big as the 2->3 step, that means that progress went ~33% as fast from 3 to 4 as it did from 2 to 3.
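The arithmetic can be spelled out as a short sketch. The release years come from the timeline above; the step sizes are the assumption under discussion (both set to 1.0 in arbitrary units), not a measurement.

```python
release_year = {"GPT-2": 2019, "GPT-3": 2020, "GPT-4": 2023}

def rate(step_size, start, end):
    """Capability gained per year between two releases."""
    return step_size / (release_year[end] - release_year[start])

# Assumption: the 2->3 and 3->4 steps are the same size (arbitrary units).
step_2_to_3 = 1.0
step_3_to_4 = 1.0

ratio = rate(step_3_to_4, "GPT-3", "GPT-4") / rate(step_2_to_3, "GPT-2", "GPT-3")
```

With equal step sizes, `ratio` is 1/3. If the 3->4 step were instead judged smaller, the ratio would drop further, and it would only reach 1 if the 3->4 step were three times the size of the 2->3 step.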

Worriers often invoke a Pascal’s wager sort of calculus, wherein any tiny risk of this nightmare scenario could justify large cuts in AI progress. But that seems to assume that it is relatively easy to assure the same total future progress, just spread out over a longer time period. I instead fear that overall economic growth and technical progress is more fragile than this assumes. Consider how regulations inspired by nuclear power nightmare scenarios have for seventy years prevented most of its potential from being realized. I have also seen progress on many other promising techs mostly stopped, not merely slowed, via regulation inspired by vague fears. In fact, progress seems to me to be slowing down worldwide due to excess fear-induced regulation.

This to me is the key paragraph. If people's worries about AI x-risk drive them in a positive direction, such as doing safety research, there's nothing wrong with that, even if they're mistaken. But if the response is to strangle technology in the crib via regulation, now you're doing a lot of harm based off your unproven philosophical speculation, likely more than you realize. (In fact, it's quite easy to imagine ways that attempting to regulate AI to death could actually increase long-term AI x-risk, though that's far from the only possible harm.)

Having LessWrong (etc.) in the corpus might actually be helpful if the chatbot is instructed to roleplay as an aligned AI (not simply an AI without any qualifiers). Then it'll naturally imitate the behavior of an aligned AI as described in the corpus. As far as I can tell, though ChatGPT is told that it's an AI, it's not told that it's an aligned AI, which seems like a missed opportunity.

(That said, for the reason of user confusion that I described in the post, I still think that it's better to avoid the "AI" category altogether.)

Indeed, the benefit for already-born people is harder to foresee. That depends on more-distant biotech innovations. It could be that they come quickly (making embryo interventions less relevant) or slowly (making embryo interventions very important).

Thanks. (The alternative I was thinking of is that the prompt might look okay but cause the model to output a continuation that's surprising and undesirable.)

An interesting aspect of this "race" is that it's as much about alignment as it is about capabilities. It seems like the main topic on everyone's minds right now is the (lack of) correctness of the generated information. The goal "model consistently answers queries truthfully" is clearly highly relevant to alignment.

Although I find this interesting, I don't find it surprising. Productization naturally forces solving the problem "how do I get this system to consistently do what users want it to do" in a way that research incentives alone don't.

Interesting, thanks. That makes me curious: about the adversarial text examples that trick the density model, do they look intuitively 'natural' to us as humans?

I'm increasingly bothered by the feedback problem for AI timeline forecasting: namely, there isn't any feedback that doesn't require waiting decades. If the methodology is bunk, we won't know for decades, so it seems bad to base any important decisions on the conclusions, but if we're not using the conclusions to make important decisions, what's the point? (Aside from fun value, which is fine, but doesn't make it OWiD material.)

This concern would be partially addressed if AI timeline forecasts were being made using methodologies (and preferably by people) that have had success at shorter-range forecasts. But none of the forecast sources here do that.

Regarding the prompt generation, I wonder whether anomalous prompts could be detected (and rejected if desired). After all, GPT can estimate a probability for any given text. That makes it different from typical image classifiers, which don't model the input distribution.
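As a rough sketch of what that detection might look like, the code below scores text by its average log-probability per character and rejects anything below a threshold. A toy character-bigram model stands in for GPT here, and the corpus, the threshold, and the function names are all hypothetical; a real system would use the language model's own token probabilities.

```python
import math
from collections import Counter

def train_bigram(corpus):
    """Count character bigrams in the corpus (toy stand-in for a language model)."""
    pair_counts = Counter(zip(corpus, corpus[1:]))
    first_counts = Counter(corpus[:-1])
    vocab = set(corpus)
    return pair_counts, first_counts, vocab

def avg_log_prob(text, model):
    """Average log-probability per bigram, with add-one smoothing."""
    pair_counts, first_counts, vocab = model
    v = len(vocab) + 1  # one extra bucket for characters never seen in training
    pairs = list(zip(text, text[1:]))
    total = 0.0
    for a, b in pairs:
        total += math.log((pair_counts[(a, b)] + 1) / (first_counts[a] + v))
    return total / max(len(pairs), 1)

def is_anomalous(text, model, threshold=-3.5):
    """Flag text the model considers improbable. The threshold is hypothetical."""
    return avg_log_prob(text, model) < threshold

# Hypothetical "typical prompt" corpus.
corpus = ("the quick brown fox jumps over the lazy dog. " * 20
          + "please summarize the following text for me. " * 20)
model = train_bigram(corpus)

normal_score = avg_log_prob("please summarize the text", model)
weird_score = avg_log_prob("zxqj kvvq xzzq jjxk", model)
```

The ordinary prompt scores higher than the gibberish one. Whether a filter like this would catch adversarial prompts that are tuned to look probable is a separate question that this sketch does not address.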

Genetics will soon be more modifiable than environment, in humans.

Let's first briefly see why this is true. Polygenic selection of embryos is already available commercially (from Genomic Prediction). It currently only has a weak effect, but In Vitro Gametogenesis (IVG) will dramatically strengthen the effect. IVG has already been demonstrated in mice, and there are several research labs and startups working on making it possible in humans. Additionally, genetic editing continues to improve and may become relevant as well.

The difficulty of modifying the environment is just due to having picked the low-hanging fruit there already. If the remaining environmental interventions were easy and effective, they'd be used already. That doesn't mean that there's nothing useful here to do, just that it's hard. Genetics, on the other hand, still has all the low-hanging fruit ripe to pluck.

Here's why I think people aren't ready to accept this: the idea that genetics is practically immutable is built deep into the worldviews of people who have an opinion on it at all. This leads to an argument dynamic where progressives (in the sense of favoring change) underplay the influence of genetics while conservatives (in the sense of opposing change) exaggerate it. What happens to these arguments when high heritability of a trait means that it's easy to change?

See also this related 2014 SSC post: https://slatestarcodex.com/2014/09/10/society-is-fixed-biology-is-mutable/.

What do you mean by "plain language"? I think all of "corollary", "stochastic", and "confounder" are jargon. They might be handy to use in a non-technical context too (although I question the use of "stochastic" over "random"), but only if the reader is also familiar with the jargon.

I also wasn't familiar with "mu" at all, and Wikipedia suggests that "n/a" provides a similar meaning while being more widely known.

Your position seems obviously right, so I'd guess the confusion is coming from the internal reward vs external reward distinction that you discuss in the last section. When thinking of possible pathways for genetics to influence our preferences, internal reward seems like the most natural.

That said, there are certainly also cases where genetics influences our actions directly. Reflexes are unambiguous examples of this, and there are probably others that are harder to prove.

I haven't fully digested this comment, but:

You mean, cached computations or something?

In some sense there's probably no option other than that, since creating a synapse should count as a computational operation. But there'd be different options for what the computations would be.

The simplest might just be storing pairwise relationships. That's going to add size, even if sparse.

I agree that LLMs do that too, but I'm skeptical about claims that LLMs are near human ability. It's not that I'm confident that they aren't--it just seems hard to say. (I do think they now have surface-level language ability similar to humans, but they still struggle at deeper understanding, and I don't know how much improvement is needed to fix that weakness.)

NTK training requires training time that scales quadratically with the number of training examples, so it's not usable for large training datasets (nor with data augmentation, since that simulates a larger dataset). (I'm not an NTK expert, but, from what I understand, this quadratic growth is not easy to get rid of.)
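To give a feel for the quadratic growth, here is a back-of-the-envelope sketch. It assumes the cost is dominated by forming the n×n kernel matrix over all pairs of training examples, stored at 4 bytes per entry; the function names are made up for illustration, and solving the resulting linear system would cost more on top of this.

```python
def kernel_matrix_entries(n_examples):
    """Number of pairwise kernel evaluations for n training examples."""
    return n_examples * n_examples

def kernel_matrix_gib(n_examples, bytes_per_entry=4):
    """Memory needed to store the full kernel matrix, in GiB (assumes float32)."""
    return kernel_matrix_entries(n_examples) * bytes_per_entry / 2**30

small = kernel_matrix_gib(10_000)     # under half a GiB
large = kernel_matrix_gib(1_000_000)  # thousands of GiB
```

Growing the dataset by 100x grows the matrix by 10,000x, which is also why data augmentation hurts: every augmented copy counts as another training example.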