Thoughts on hardware / compute requirements for AGI

post by Steven Byrnes (steve2152) · 2023-01-24T14:03:39.190Z · LW · GW · 31 comments

Contents

Question: How much compute will it take to run this AGI?

Table of Contents / TL;DR

1. Prologue: Why should we care about the answer to this question?

1.1 Prologue bonus: Counterarguments (i.e. reasons that I shouldn't care), and my responses

2. Some prior discussion

2.1 Eliezer Yudkowsky’s comment about 286s and 1995 home computers

2.2 Paul Christiano’s comment about superhuman chess versus insect brains

2.3 Metaculus

3. The brain bound

3.1 Compute: 1e14 FLOP/s seems like more than enough

3.2 Memory and the von Neumann bottleneck

3.2.1 Background on the von Neumann bottleneck

3.2.2 I think an adult brain is storing only maybe ≲10GB of information (0.001 bits per synapse!!)

3.2.3 Upshot on memory

3.3 Conclusion

3.3.1 My biggest lingering doubts

4. Side note: Ratio of training compute to deployed compute

Updates / Corrigenda after initially publishing this post (added June 2024)


[NOTE: I have some updates / corrigenda at the bottom.]

Let’s say I know how to build / train a human-level (more specifically, John von Neumann level) AGI. And let’s say that we (and/or the AGI itself) have already spent a few years[1] on making the algorithm work better and more efficiently.

Question: How much compute will it take to run this AGI?

(NB: I said "running" an AGI, not training / programming an AGI. I'll talk a bit about “training compute” at the very end.)

Answer: I don’t know. But that doesn’t seem to be stopping me from writing this post. ¯\_(ツ)_/¯ My current feeling—which I can easily imagine changing after discussion (which is a major reason I'm writing this!)—seems to be:

- 75%: One current (Jan 2023) high-end retail gaming PC (with an Nvidia GeForce RTX 4090 GPU) will be enough (or more than enough) for human-level human-speed AGI,

- 85%: One future high-end retail gaming PC, that will be on sale in a decade (2033)[2], will be enough for human-level AGI, at ≥20% human speed.

This post will explain why I currently feel this way.

Table of Contents / TL;DR

- In the prologue (Section 1), I’ll give four reasons that I care about this question: one related to our long-term prospects of globally monitoring and regulating human-level AGI; one related to whether an early AGI could be “self-sufficient” after wiping out humanity; one related to whether AGI is even feasible in the first place; and one related to “compute overhangs”. I’ll also respond to two counterarguments (i.e. arguments that I shouldn’t care about this question), namely: “More-scaled-up AGIs will always be smarter than less-scaled-up AGIs; that relative comparison is what we care about, not the absolute intelligence level that’s possible, on, say, a single GPU”, and “The very earliest human-level AGIs will be just barely human-level on the world’s biggest compute clusters, and that’s the thing that we should mainly care about, not how efficient they wind up later on”.

- In Section 2, I’ll touch on a bit of prior discussion that I found interesting or thought-provoking, including a claim by Eliezer Yudkowsky that human-level human-speed AGI requires ridiculously little compute, and conversely a Metaculus forecast expecting that it requires orders of magnitude more compute than what I'm claiming here.

- In Section 3, I’ll argue that the amount of computation used by the human brain is a good upper bound for my question. Then in Section 3.1 I’ll talk about compute requirements by starting with the “mechanistic method” in Joe Carlsmith’s report on brain computation and arguing for some modest adjustments in the “less compute” direction. Next in Section 3.2 I’ll talk about memory requirements, arguing for the (initially-surprising-to-me) conclusion that the brain has orders of magnitude fewer bits of learned information than it has synapses: 100 trillion synapses versus ≲100 billion bits of incompressible information. Putting these together in Section 3.3, I reach the conclusion (mentioned at the top) that a retail gaming GPU will probably be plenty for human-level human-speed AGI. Finally I’ll talk about my lingering doubts in Section 3.3.1, by listing a few of the most plausible-to-me reasons that my conclusion might be wrong.

- In Section 4, I’ll move on from running an AGI to training it (from scratch). This is a short section, where I mostly wanted to raise awareness of the funny fact that the ratio of training-compute to deployed-compute seems to be ≈7 orders of magnitude lower if you estimate it by looking at brains, versus if you estimate it by extrapolating from today’s self-supervised language models. I don’t have a great explanation why. On the other hand, perhaps surprisingly, I claim that resolving this question doesn’t seem particularly important for AGI governance questions.

1. Prologue: Why should we care about the answer to this question?

Here are four arguments that this is a relevant question:

Argument 1: Some people seem to be hoping that nobody will ever make a misaligned human-level AGI thanks to some combination of regulation, monitoring, and enlightened self-interest. That story looks more plausible if we’re talking about an algorithm that can only run on a giant compute cluster containing thousands of high-end GPUs, and less plausible if we’re talking about an algorithm that can run on one 2023 gaming PC.

Argument 2: There are possible AGI actions that would make the world somewhat or completely inhospitable to humans—say, manufacturing pandemics and crop diseases, destroying ecosystems, triggering nuclear war, etc. An interesting question is whether a long-term-power-seeking AGI—say, a paperclip maximizer—might be motivated to take those actions. The answer depends on what it takes for an AGI to be “self-sufficient”, versus needing a functional civilization of millions or billions of humans to keep the power on, manufacture replacement chips and replacement robot parts, mine metals, etc. This in turn depends on all kinds of things:

For example, what are the minimal resources necessary to MacGyver a semiconductor supply chain (and robots and solar cells etc.) from salvaged materials? I won’t wade into that whole debate (I linked to some prior discussion here [LW · GW]), but one of the relevant inputs to that question is how many and what kind of chips it takes to run an AGI.

After all, it seems pretty plausible to me that if there's an AGI server and a solar cell and one teleoperated robot body in an otherwise-empty post-apocalyptic Earth, well then that one teleoperated robot body could build a janky second teleoperated robot body from salvaged car parts or whatever, and then the two of them could find more car parts to build a third and fourth, and those four could build up to eight, etc. That was basically the story I was telling here [LW · GW]. But there's one possibility that I didn't mention (because I didn't think of it): if running N teleoperated robots in real time requires a data center with N × 10,000 high-end GPUs, then that story basically falls apart.[3]

See also a claim here [LW(p) · GW(p)] that AGI will keep humans around as slaves / subjects rather than wipe them out entirely and replace them by robots (in contradiction to the saying “humans are made of atoms which it can use for something else”), because human brains are such incredible machines that even a callous all-powerful AGI would not find any better way to use those atoms (further argument along those lines [LW · GW]).

Argument 3: Some people who think that AGI will never happen and/or never be a big deal (example [LW · GW]) justify this belief on the grounds that running human-level AGI on computer chips will take an extraordinarily, exorbitantly, even impossibly large amount of compute and electricity. Obviously this is not true if my claim at the top is even remotely close to correct.[4]

Argument 4: There’s an influential opinion that if AGI algorithms are invented sooner rather than later, then this is a good thing for AGI safety, because that will reduce the “compute overhang”.[5] Specifically, once we have AGI algorithms, those algorithms could be nearly-instantly run on any already-existing chips and data centers. So the more that there is tons of compute, spread all over the world, controlled by a great many actors, the harder it will be to implement global governance or coordination around AGI, and likewise the faster AGI will grow into a world-disruptive force. And this so-called “compute overhang” is growing with time—ever more chips of ever higher quality are proliferating around the world with each passing year.

…But if I’m right, then this “compute overhang” argument would carry very little weight, because alas, that horse has long ago left the barn.

1.1 Prologue bonus: Counterarguments (i.e. reasons that I shouldn't care), and my responses

Counterargument 1: Regardless of how much compute is required for an AGI to reach “John-von-Neumann-level intelligence” or any other specific threshold, it will almost definitely remain true that bigger AGIs will be smarter and more capable than smaller AGIs. In particular, if a gaming PC is sufficient for human-level intelligence, then a massive compute cluster is sufficient for radically superhuman intelligence. The important thing is the balance of power between “AGIs made by random careless people” and “AGIs made by big (hopefully)-responsible actors”, not the capabilities of the former on some absolute scale. So we don’t need to answer the question addressed in this post.

I don't find this argument convincing for reasons here [LW · GW]. Basically, I think we should put a lot of probability mass on the possibility that if big actors have powerful intent-aligned AGIs under their tight control, they will not use them to make the world resilient against small careless actors making unaligned human-level AGIs. Maybe they’d be hampered by coordination problems, or by inherent offense-defense imbalances, or maybe they just wouldn’t be trying in the first place! These are basically the same mundane reasons that pre-AGI humans and human institutions are failing to ban gain-of-function research[6] right now, despite the fact that many humans are quite smart and many human institutions are quite powerful. These people and institutions are mostly not applying their intelligence and power towards solving the gain-of-function problem, or in rare cases they're trying and failing, or in a few cases they're even trying to make the problem worse.

If we care about whether an AI could manufacture a pandemic or collapse an ecosystem or whatever, I claim that’s mainly an absolute capabilities metric, not a relative capabilities metric, for reasons in the previous paragraph. And I do think “human-level” as defined in this post (including John von Neumann level ability to do innovative science) is in the right general ballpark for the level at which an AGI can come up with and successfully execute those kinds of catastrophically destructive plans. For example, it seems to me that arranging for the manufacture and release of a novel deadly pandemic is the kind of thing that is probably achievable by one or a few AGIs that are able to do innovative science at a John von Neumann level.

Counterargument 2: Regardless of how much compute is eventually required for AGIs, we should really only care about the very first AGIs, whose implementation will presumably be so horrifically inefficient that they can just barely run on the biggest compute cluster available. Sure, later on we’ll have AGIs with more efficient algorithms, but those will arrive in a post-AGI world, and if we’re not already dead, then we’ll have intent-aligned AGI helpers to give us advice.

I don’t find this argument convincing either.

For one thing, the claim that the first AGI will be on one of the world’s biggest computer clusters is very much open to question. Humanity is collectively doing many more experiments and much more tinkering-around on smaller compute clusters than on massive ones. If there’s an innovative future ML algorithm with a qualitatively different (much faster) scaling law, it’s perfectly plausible IMO that it will be found by a startup or university group or whatever, and maybe the first thing they’d do is work to get it to human-level with the resources that they have easily available, rather than work to make it extremely parallelizable and legible such that people will invest massive amounts of money in running it on the world’s giantest compute cluster.

For another thing, I don’t think that the possibility that we’ll have “intent-aligned AGI helpers” in the future absolves us of the need to think right now about what happens afterwards, again for various reasons discussed here [LW · GW].

Also, both of these counterarguments are mostly counterarguments to Argument 1 above, not Arguments 2 & 3.

2. Some prior discussion

2.1 Eliezer Yudkowsky’s comment about 286s and 1995 home computers

Richard Ngo: How much improvement do you think can be eked out of existing amounts of hardware if people just try to focus on algorithmic improvements?…

This says 44x improvements over 7 years: https://openai.com/blog/ai-and-efficiency/ …

Eliezer Yudkowsky: Well, if you're a superintelligence, you can probably do human-equivalent human-speed general intelligence on a 286, though it might possibly have less fine motor control, or maybe not, I don't know. …

I wouldn't be surprised if human ingenuity asymptoted out at AGI on a home computer from 1995.

Don't know if it'd take more like a hundred years or a thousand years to get fairly close to that.

(For you kids, a 286 is an Intel computer chip from 1982.)

I'm unaware of any written justification of these Eliezer claims; I did find a comment emphatically disagreeing with the 286 claim here [LW(p) · GW(p)], on the grounds that real-time image processing requires doing at least a few operations on every pixel of every frame of the video feed, and that already adds up to more than the 286’s clock speed. Seems plausible! I also note that even a Jupiter-brain superintelligence can't fit more than 16MB of information into a 286's 16MB RAM address space, and my hunch is that we live in a sufficiently complicated world that 16MB is not enough cached knowledge to do human-equivalent things. And the 286 is probably too slow to make up for that deficiency by replacing “cached knowledge” with “re-computing things on demand”. Much more on memory requirements in a later section.

But anyway, I'm not too interested in arguing about 286s or 1995 home computers or whatever, because it seems decision-irrelevant. I'd rather talk about a gaming PC with an NVIDIA GeForce RTX 4090 GPU. If an RTX 4090 is in the ballpark of being able to run human-level human-speed AGI, then we're in the situation where AGI technology will (in the absence of extraordinary measures like totalitarian-level surveillance) be abundant and ungovernable. (Apparently 130,000 4090s have been sold, and it sounds like they’re mostly going to individuals with gaming PCs, not data centers.) On the other hand, if an RTX 4090 is way more than enough for human-level AGI … then we're in the same situation. So whatever. I'll talk about the RTX 4090 hypothesis, which is much weaker than Eliezer’s hypothesis above, but still strong enough to imply the same conclusion, and evidently much stronger than lots of people believe (see Section 2.3 below).

2.2 Paul Christiano’s comment about superhuman chess versus insect brains

From here (2018):

If you look at the intellectual work humans do, I think a significant part of it could be done by very cheap AIs at the level of crows or not much more sophisticated than crows. There’s also a significant part that requires a lot more sophistication. I think we’re very uncertain about how hard doing science is. As an example, I think back in the day we would have said playing board games that are designed to tax human intelligence, like playing chess or go is really quite hard, and it feels to humans like they’re really able to leverage all their intelligence doing it.

It turns out that playing chess from the perspective of actually designing a computation to play chess is incredibly easy, so it takes a brain very much smaller than an insect brain in order to play chess much better than a human. I think it’s pretty clear at this point that science makes better use of human brains than chess does, but it’s actually not clear how much better. It’s totally conceivable from our current perspective, I think, that an intelligence that was as smart as a crow, but was actually designed for doing science, actually designed for doing engineering, for advancing technologies rapidly as possible, it is quite conceivable that such a brain would actually outcompete humans pretty badly at those tasks.

I added emphasis to the sentence that I found especially thought-provoking. I can’t immediately find an explanation of this from Paul himself, but let’s see, AlphaZero-style superhuman chess is possible in a <50-million-parameter ResNet I think,[7] whereas a honey bee has perhaps 1 million neurons and 1 billion synapses. OK, sure, seems plausible.

2.3 Metaculus

As of this writing, the 108 people forecasting at this Metaculus question aggregate to a median forecast of 3e17 FLOP/s for human-parity AGI, measured five years after it is first demonstrated. On my models (see Section 3.1 below), this median forecast is an overestimate by at least 3½ orders of magnitude.

You can scroll down on the Metaculus page to see a comment thread, but it’s pretty short and I really only see one person with a specific argument for such high numbers (i.e. “Anthony” [Aguirre] talking about the Landauer limit, which I respond to in this footnote→[8]).

This is pure speculation, but I wonder if people are assuming that there will be no important algorithmic improvements between now and AGI, and noting that we don’t have human-level AGI right now, and therefore more scaling is needed? If so, I disagree with the “there will be no important algorithmic improvements between now and AGI” part.

Hmm, on further consideration, maybe this is related to me defining “human-level AGI” (for the purpose of this post) as “ability to invent new science and technology on par with John von Neumann”, and the Metaculus question having a much much lower bar than that. I’m quite confident that important new AI ideas are needed for a John von Neumann AGI, but I’m rather less confident that important new AI ideas are needed to pass that Metaculus test. Maybe a kind of “brute force method” along the lines of a massive LLM similar to today’s but bigger, perhaps with some extra bells and whistles, would be enough to pass the Metaculus test, in which case the corresponding brute-force AI model might be huge.

(Just to be explicit: I find it plausible that an AI could pass that Metaculus test while still being a full paradigm-shift away from any AI that could pose a direct existential risk.)

3. The brain bound

(Note: All numbers in this post should be interpreted as Fermi estimates [? · GW].)

An upper bound on the amount of compute required to run human-level AGI is the amount of compute used by a human brain. Everyone agrees so far, but people think about that bound in different ways.

- In one school of thought, we should expect AGI to eventually dramatically outperform the brain in computational efficiency, because evolution is myopic, and the tasks that we most care about for AGI (e.g. ability to write good computer code and invent technology) are tasks that evolution was barely even trying to make the human brain good at.

- In another school of thought, we should expect AGI to remain much worse than the brain in computational efficiency for a long time, because evolution has put a ton of fine-tuned complex machinery into the brain, maybe vaguely like a learning algorithm trained on millions of lifetimes of experience rather than just one. We programmers have our work cut out in capturing that intricate design work in our source code, on this view.

I mostly reject the second school of thought, for reasons discussed in my recent series (see Sections 2.8 [LW · GW], 3.7 [LW · GW], 3.8 [LW · GW]). I think there is some amount of fine-tuned complexity underlying human intelligence, for example related to hyperparameters and neural network architecture, but not necessarily beyond what a small team of programmers could nail down with some trial-and-error.

I’m not terribly sympathetic to the first school of thought either. When people put forward theories about how intelligence could be structured, my typical reaction is either “Actually I think human brains are already doing that”, or “Actually, I think the thing that human brains do is a better design”. (See also my discussion here [LW · GW].) There’s clearly some room for efficiency improvement—for example, human brains are spending compute on lots of AGI-irrelevant processing like olfaction—but I figure that’s probably a factor of 2 or something, not orders of magnitude. A bigger question is the impact of different computational substrates / affordances. Just as the same high-level algorithm can be compiled to different CPU instruction sets, likewise the same high-level algorithm can be built out of the low-level operations supported by biological neurons, or built out of the low-level operations supported by RTX 4090 silicon chips. But those two options don't have to be equally good! One substrate might be far better suited than the other for running the particular high-level algorithm which is responsible for human intelligence. For example, I think the whole cerebellum—half the neurons in the brain, though a smaller fraction of synapses—is a massive workaround to mitigate the harm of biological neurons’ extremely slow signal propagation speed (details [LW · GW]).

I’ll return to these points, but in the next two sections, I’ll focus first on AGI compute requirements, and then on AGI memory and memory-bandwidth requirements, implied by a comparison to human brains.

3.1 Compute: 1e14 FLOP/s seems like more than enough

Joe Carlsmith wrote How Much Computational Power Does It Take to Match the Human Brain? This is an excellent starting point; I’ll talk about his “mechanistic method” with some caveats below.

He writes that the human brain has “1e13-1e15 spikes through synapses per second (1e14-1e15 synapses × 0.1-1 spikes per second)”. I think Joe was being overly conservative, and I feel comfortable editing this to “1e13-1e14 spikes through synapses per second”, for reasons in this footnote→[9].

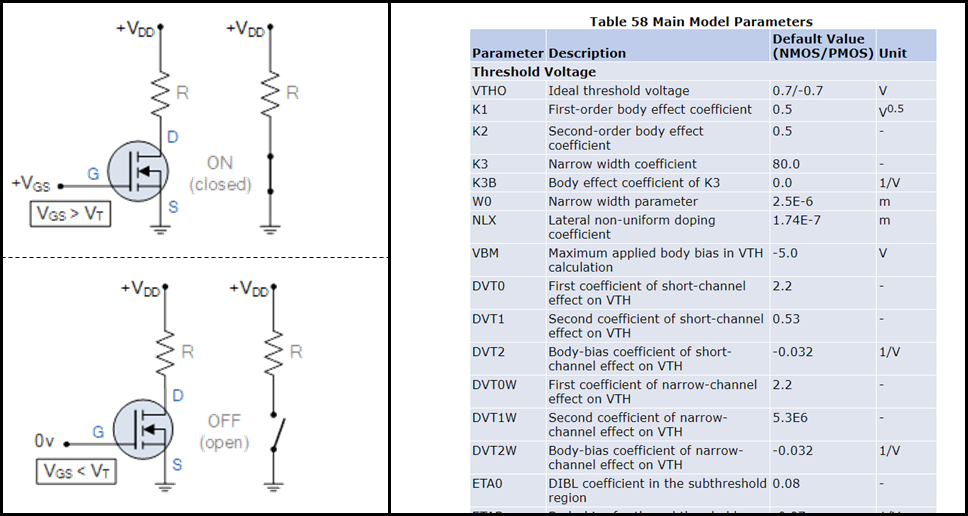

Then Joe goes through some analysis and settles on 1-100 FLOP per spike-through-synapse. Again, I understand why he wants to be conservative, but having read his discussion I feel comfortable with “1”. For example, this paper is what I consider a plausible algorithmic role of dendritic spikes and synapses in cortical pyramidal neurons, and the upshot is “it’s basically just some ANDs and ORs”. If that’s right, this little bit of brain algorithm could presumably be implemented with <<1 FLOP per spike-through-synapse. I think that’s a suggestive data point, even if (as I strongly suspect) dendritic spikes and synapses are meanwhile doing other operations too. One could make a similar point about transistors, as in the following figure and caption.

Moreover, as I’ve previously discussed here [LW · GW], consider someone who wants to multiply two numbers on their laptop. Here are two things they can do:

- They can use their laptop CPU to directly multiply the numbers.

- They can use their laptop CPU to do a transistor-by-transistor simulation of a pocket calculator microcontroller chip, which in turn is multiplying the numbers.

Obviously the second will be orders of magnitude slower.[10]
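To make the contrast concrete, here is a toy sketch. It is not a transistor-level simulation of any real chip; the gate-level shift-and-add multiplier and the names `full_adder`, `add_bits`, and `simulated_multiply` are illustrative assumptions. Both routes return the same product, but the simulated route has to evaluate thousands of primitive gates to get there.

```python
def full_adder(a, b, carry_in, counter):
    """One-bit full adder built from XOR/AND/OR gates; counts gate evaluations."""
    counter[0] += 5  # 2 XOR + 2 AND + 1 OR
    partial = a ^ b
    total = partial ^ carry_in
    carry_out = (a & b) | (partial & carry_in)
    return total, carry_out


def add_bits(x_bits, y_bits, counter):
    """Ripple-carry addition of two equal-length, little-endian bit lists."""
    result, carry = [], 0
    for a, b in zip(x_bits, y_bits):
        s, carry = full_adder(a, b, carry, counter)
        result.append(s)
    # The final carry is dropped; the caller sizes the lists so it is always 0.
    return result


def simulated_multiply(x, y, width=16):
    """Shift-and-add multiplier evaluated gate by gate.

    Assumes 0 <= x, y < 2**width. Returns (product, gate_evaluations).
    """
    counter = [0]
    out_width = 2 * width
    x_bits = [(x >> i) & 1 for i in range(width)]
    y_bits = [(y >> i) & 1 for i in range(width)]
    acc = [0] * out_width
    for shift, y_bit in enumerate(y_bits):
        # Partial product: AND every bit of x with this bit of y, then shift.
        row = [0] * out_width
        for i, x_bit in enumerate(x_bits):
            counter[0] += 1  # one AND gate
            row[i + shift] = x_bit & y_bit
        acc = add_bits(acc, row, counter)
    product = sum(bit << i for i, bit in enumerate(acc))
    return product, counter[0]


x, y = 1234, 5678
direct = x * y                                    # option 1: one multiply
simulated, gate_evals = simulated_multiply(x, y)  # option 2: simulate the hardware
print(direct, simulated, gate_evals)
```

Even this sketch stops at the level of logic gates. Going down to individual transistors, as in the thought experiment, would add a further large factor on top.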

By analogy, I feel pretty comfortable chopping off a big factor—like, a really big factor, like maybe a factor of 100 (I’m stating this without justification)—to account for the difference between “understanding the operating principles of the brain-like learning algorithms, and then implementing those same learning algorithms on our silicon chips in a sensible way” versus “simulating biological neurons which in turn are running those learning algorithms”.

Then there’s an additional small factor from the human brain spending compute on AGI-irrelevant tasks like olfaction, and a larger (but uncertain) factor from human brains presumably not having the optimal neural architecture / hyperparameters / etc. for doing science (since very little human evolution has happened since science was invented).

So I feel like 1e14 FLOP/s is a very conservative upper bound on compute requirements for AGI. And conveniently for my narrative, that number is about the same as the 8.3e13 FLOP/s that one can perform on the RTX 4090 retail gaming GPU that I mentioned in the intro.
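For readers who want to check the arithmetic, here is a minimal sketch of the Fermi estimate using only figures quoted in this post. The variable names are mine, and the 1e12 figure that falls out of applying the factor of 100 is an illustration of the reasoning rather than a number I would defend on its own.

```python
import math

# Figures quoted in the text (all Fermi estimates).
spikes_per_s_low, spikes_per_s_high = 1e13, 1e14  # spikes through synapses per second
flop_per_spike = 1                                # low end of the 1-100 range
simulation_overhead = 100                         # the "factor of 100", stated without justification
rtx_4090_flop_per_s = 8.3e13                      # figure quoted above for the RTX 4090
metaculus_median_flop_per_s = 3e17                # median forecast from Section 2.3

mechanistic_low = spikes_per_s_low * flop_per_spike
mechanistic_high = spikes_per_s_high * flop_per_spike
after_overhead_high = mechanistic_high / simulation_overhead

# The post takes the unadjusted high end as its "very conservative upper bound".
upper_bound = mechanistic_high
gpu_fraction = rtx_4090_flop_per_s / upper_bound
metaculus_gap_oom = math.log10(metaculus_median_flop_per_s / upper_bound)

print(f"mechanistic range: {mechanistic_low:.0e} to {mechanistic_high:.0e} FLOP/s")
print(f"high end after the factor-of-100 adjustment: {after_overhead_high:.0e} FLOP/s")
print(f"RTX 4090 as a fraction of the upper bound: {gpu_fraction:.2f}")
print(f"Metaculus median exceeds the upper bound by {metaculus_gap_oom:.1f} orders of magnitude")
```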

3.2 Memory and the von Neumann bottleneck

3.2.1 Background on the von Neumann bottleneck

In Jacob Cannell’s post LOVE in a simbox is all you need [LW · GW], he writes:

The second phase of Moore's Law is 'massively'[18] parallel computing, beginning in the early 2000's and still going strong, the golden era of GPUs as characterized by the rise of Nvidia over Intel. GPUs utilize die shrinkage and transistor budget growth near exclusively for increased parallelization. However GPUs still do not escape the fundamental Von Neumann bottleneck that arises from the segregation of RAM and logic. There are strong economic reasons for this segregation in the current semiconductor paradigm (specialization allows for much cheaper capacity in off-chip RAM), but it leads to increasingly ridiculous divergence between arithmetic throughput and memory bandwidth. For example, circa 2022 GPUs can crunch up to 1e15 (low precision) ops/s (for matrix multiplication), but can fetch only on order 1e12 bytes/s from RAM: an alu/mem ratio of around 1000:1, vastly worse than the near 1:1 ratio enjoyed for much of the golden CPU era.

The next upcoming phase is neuromorphic computing[19], which overcomes the VN bottleneck by distributing memory and moving it closer to computation. The brain takes this idea to its logical conclusion by unifying computation and storage via synapses: storing information by physically adapting the circuit wiring. A neuromorphic computer has an alu:mem ratio near 1:1, with memory bandwidth on par with compute throughput.

The RTX 4090 memory bandwidth is indeed 1e12 bytes/s (1000 GB/s), consistent with the above. But should we say, as Jacob says, “only 1e12 bytes/s”? Or is 1e12 bytes/s plenty? I say “it’s plenty”, for the following reason:

3.2.2 I think an adult brain is storing only maybe ≲10GB of information (0.001 bits per synapse!!)

Why do I say that?

I figure, at least 10%ish of the cortex is probably mainly storing information which one could also find in a 2022-era large language model (LLM).[11] That includes things like grammar and vocabulary, and knowledge of celebrity gossip and entomology, and so on. That “10%ish of the cortex” has an information content that’s probably compressible into 1e10 bits, based on a rough comparison to the storage size and information content of LLMs.[12] Extrapolating out, this would imply that the whole cortex is storing 10GB of information,[13] and I believe that the cortex comprises most of the AGI-relevant stored information content in the brain. (See here [LW · GW] for my dismissive discussion of the cerebellum. The striatum, thalamus, and a few other areas are also storing AGI-relevant learned information, but are comparatively small.)

Another way to think about it: 10GB would also correspond to permanently storing 100 bits of information per second for 25 years, which if anything seems kinda too high to me maybe?

On the other hand, the brain has 100 trillion synapses.[14] Is it really plausible that the brain is using 100 trillion synapses to store 100 billion bits of information? I think my answer is “yes”.
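The two back-of-the-envelope numbers above can be checked with a few lines. This is just the arithmetic from the text; the variable names are mine, and the year length is the only constant not taken from the post.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600

# "Permanently storing 100 bits of information per second for 25 years"
bits_stored = 100 * 25 * SECONDS_PER_YEAR
gigabytes_stored = bits_stored / 8 / 1e9

# 100 trillion synapses versus roughly 100 billion bits
synapses = 1e14
information_bits = 1e11
bits_per_synapse = information_bits / synapses

print(f"{bits_stored:.2e} bits, or about {gigabytes_stored:.1f} GB")
print(f"{bits_per_synapse} bits per synapse")
```

So the lifetime-storage framing lands very close to 10GB, and the synapse comparison gives the 0.001 bits per synapse in the section title.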

I can think of one special case where “massively more synapses than information” is exactly what I expect: the lack of ConvNet-style weight-tying in primary visual cortex. As a reminder, when an early ConvNet layer is learning how to do edge-detection, it’s the same parameters for edge-detection in any different part of the image. By contrast, the primary visual cortex consists of (I think) hundreds of thousands of little bits of cortex (“cortical columns”), each processing a tiny bit of the field-of-view, and insofar as they’re all doing edge detection (as an example), I think they all need to learn to do so independently.

But that’s specific to visual cortex, which is only 18% of the neocortex, and I don’t have any comparably tidy story for most of the other parts.
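As a rough illustration of the weight-tying point, here is a sketch with made-up layer sizes. It is not a model of visual cortex: the kernel size, channel counts, and the 200,000 stand-in for "hundreds of thousands" of cortical columns are all assumptions chosen for illustration.

```python
def conv_layer_params(kernel_size, in_channels, out_channels):
    """Weights in a conv layer: one filter bank shared across every image position."""
    return kernel_size * kernel_size * in_channels * out_channels


def untied_layer_params(kernel_size, in_channels, out_channels, n_positions):
    """The same filter bank, but learned independently at each position (no weight-tying)."""
    return n_positions * conv_layer_params(kernel_size, in_channels, out_channels)


# Hypothetical sizes, chosen only for illustration.
kernel_size, in_channels, out_channels = 7, 3, 64
n_positions = 200_000

tied = conv_layer_params(kernel_size, in_channels, out_channels)
untied = untied_layer_params(kernel_size, in_channels, out_channels, n_positions)

print(f"tied:   {tied:,} parameters")
print(f"untied: {untied:,} parameters ({untied // tied:,}x more)")
```

If every position ends up learning roughly the same edge detectors, the untied layer holds about the same amount of information as the tied one, spread across vastly more parameters. That is the sense in which I'd expect "massively more synapses than information" here.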

Hmm, OK, maybe my broader story would relate to the brain’s “processing-in-memory” design. The synapse is not just an information-storage-unit, it’s also a thing-that-does-calculations. If one bit of stored information (e.g. information about how the world works) needs to be involved in 1000 different calculations, it seems plausible that it would need to be associated with 1000 synapses, right?

(There’s also the fact that not all cortical synapses are involved in information storage—I think some are innate, functioning kinda like the “scaffolding” of the learning algorithm onto which the learned parameters are attached. But I think that amounts to like a factor of <2, not orders of magnitude.)

I also asked around, and got an interesting, maybe-complementary comment from AI_WAIFU [LW · GW]:

I think brains have 2-3 OOM less info in them than LLMs, but they operate in a grossly over-parameterized regime to get better data efficiency and make up for things like a 10W power budget and the inherent noisiness of neurons firing and dying and such.

Interesting! Well anyway, I’m not taking a strong stand on the details here, but all things considered I feel comfortable standing behind my claim that 100 trillion synapses are storing ≲100 billion bits of information.

3.2.3 Upshot on memory

Again, the RTX 4090’s memory bandwidth is 1000 GB/s. So if the previous section is right, the entire (long-term-storage[15]) contents of a human-level intelligence can be queried 100 times per second before hitting the memory bandwidth ceiling. I don’t have a tight argument that this is enough memory-querying to allow human-speed thinking, but it seems very likely to me.
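Here is the same calculation spelled out, as a minimal sketch using the figures from this post. The variable names are mine, and the FLOP-per-byte ratio is a derived number that I'm including only to connect back to the alu/mem ratio in Jacob's quote.

```python
memory_bandwidth_bytes_per_s = 1e12  # RTX 4090: 1000 GB/s
stored_information_bytes = 10e9      # the ~10 GB estimate from Section 3.2.2
peak_flop_per_s = 8.3e13             # RTX 4090 figure from Section 3.1

# How many times per second could the whole store be streamed through the chip?
full_sweeps_per_s = memory_bandwidth_bytes_per_s / stored_information_bytes

# How much arithmetic is available per byte fetched from memory?
flop_per_byte_fetched = peak_flop_per_s / memory_bandwidth_bytes_per_s

print(f"full sweeps of memory per second: {full_sweeps_per_s:.0f}")
print(f"FLOP available per byte fetched:  {flop_per_byte_fetched:.0f}")
```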

Also, it seems to me that, in humans, the entire information-content of memory is not in fact queried every time you think a thought. In fact, probably only a small fraction gets accessed. Consider the following made-up conversational exchange:

Alice: “Hey look at this painting.”

Bob: “Uh huh…”

Alice: “Doesn’t it remind you of something?”

Bob: “…”

Alice: “Doesn’t it remind you of that silly drawing of you that I made in 8th grade?”

Bob: “Ohhhh, haha, yeah I see that, that’s hilarious.”

One can imagine an algorithm where that kind of exchange could not possibly happen. Instead, Bob would look at the painting, and then his brain would recall every bit of information that it has ever seen or known, sequentially one after another, checking each for a pattern-match, multiple times per second. Sometime during this process, the silly drawing from 8th grade would pop up on the list, and the pattern-matcher would trigger, and then Bob would immediately see the connection, and there would be no need for Alice to jog his memory.

But for real-world humans, memory jogging does happen.

I conclude that lots of information in the brain—probably the overwhelming majority—must be kinda “dormant” at any given time. It only enters into the computation when either (1) it was recently active and has not yet faded from working memory, or (2) some other active thought happens to contain some sort of “pointer” pointing to it.
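The difference between the two kinds of algorithm can be sketched in code. This is a toy, not a claim about how the brain or a future AGI actually implements memory; the `ToyMemory` class, its method names, and the feature and cue strings are all hypothetical.

```python
from collections import defaultdict


class ToyMemory:
    """Stored items have content features plus cues ("pointers") that can activate them."""

    def __init__(self):
        self.items = []                    # list of (name, feature_set)
        self.pointers = defaultdict(list)  # cue -> indices of items it points to

    def store(self, name, features, cues):
        self.items.append((name, set(features)))
        for cue in cues:
            self.pointers[cue].append(len(self.items) - 1)

    @staticmethod
    def _matches(features, percept):
        # Crude stand-in for a pattern-matcher: at least two shared features.
        return len(features & percept) >= 2

    def exhaustive_recall(self, percept):
        """Check every stored item against the percept. Returns (matches, items_touched)."""
        matches = [name for name, feats in self.items if self._matches(feats, percept)]
        return matches, len(self.items)

    def cued_recall(self, percept, active_cues):
        """Check only the items that some currently active cue points to."""
        candidates = sorted({i for cue in active_cues for i in self.pointers.get(cue, [])})
        matches = [self.items[i][0] for i in candidates
                   if self._matches(self.items[i][1], percept)]
        return matches, len(candidates)


memory = ToyMemory()
for i in range(10_000):  # lots of unrelated, dormant memories
    memory.store(f"filler-{i}", {f"feature-{i}"}, {f"cue-{i}"})
memory.store("silly drawing", {"long nose", "green hat", "bob"}, {"8th grade"})

painting = {"long nose", "green hat", "oil paint"}

print(memory.exhaustive_recall(painting))               # finds it, but touches everything
print(memory.cued_recall(painting, {"painting"}))       # Bob alone: nothing comes to mind
print(memory.cued_recall(painting, {"painting", "8th grade"}))  # after Alice jogs his memory
```

In the cued version, the drawing stays dormant until Alice supplies the "8th grade" cue, and at that point only one stored item has to be touched rather than all 10,001.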

So that would suggest a possibility that the von Neumann bottleneck is even less likely to be a limiting factor than implied above.

(To be clear, that’s just a possibility. Whether it’s actually the case depends on details of how the future AGI algorithms will be structured and implemented, which is difficult to speculate about.)

3.3 Conclusion

Sections 3.1-3.2 above suggest that our example RTX 4090 gaming PC has plenty of compute, and plenty of RAM (assuming a typical 16 GB chip), and plenty of memory bandwidth to support human-level human-speed AGI algorithms, based on a comparison to the human brain. Are there other possible bottlenecks? Not that I can think of, but what do I know? Please share your thoughts in the comment section.

3.3.1 My biggest lingering doubts

Suppose that I’m significantly wrong about the RTX 4090 gaming PC being sufficient, like wrong by ≳3 orders of magnitude. What are the most plausible-to-me ways that that might happen? In roughly decreasing order of likelihood:

- AI researchers will be missing at least one very important principle of intelligence employed by the brain—a principle which is not strictly necessary but which reduces computational costs by many orders of magnitude—and the researchers will make up for that deficiency by brute force (i.e., scale). And nobody will figure out that principle of intelligence even within a few years after John von Neumann AGI.

- Unknown unknowns (either unknown-to-anyone or unknown-to-me-in-particular)

- My Section 3.2 arguments about memory requirements are deeply confused for some reason, and actually we should be counting human brain synapses, and likewise we should expect AGI to require 10-100 TB of memory or something.

- People will find an unnecessarily-complicated algorithm for human-level AGI, in which just a tiny part of the algorithm does all the work, and the rest of the algorithm is a red herring—maybe analogous to the ML use of “attention” before the Attention is All You Need paper. (Before that paper, ML people were already using attention, but they were mixing in attention with lots of other components that ultimately proved to be pointless, IIUC.) An unnecessarily-detailed brain simulation would be in this category—just like how an atom-by-atom simulation of a pocket calculator would be an effective yet extraordinarily inefficient way to multiply numbers. [I put a pretty low weight on this; I think that, after 3 years of widespread awareness of this kind of algorithm, somebody would figure out which part of that algorithm was doing all the work, and code up a more efficient version without the cruft. But who knows, I guess.]

Jacob Cannell argues here [LW(p) · GW(p)] that training an AGI would take not 1 but rather 1000 GPUs or so (for memory not compute reasons), see also his post AI Timelines via Cumulative Optimization Power [LW · GW]. I showed Jacob an earlier draft of this post, and his criticism (IIUC) was basically that:

- (1) If human memory is extremely compressible, then that’s related to a tradeoff between memory compactness and compute, and so compressing it would blow up the compute requirements, and I’m failing to take that into account;

- (2) My above guess that the information content stored in an LLM is 100× larger than the (linguistic) information content of an adult human brain is implausible, because humans are more capable.

[These are my words not his, and there’s a decent chance that I’m misunderstanding / oversimplifying!]

Anyway, I disagree with (2) because I think that human capability is not evidence for humans having lots of stored information that LLMs don’t, but rather comes from other types of differences between human brains and LLMs (e.g. related to RL, and different inductive biases, etc). (Related background info [LW · GW].) Indeed, the big AGI-x-risk-relevant area where I think humans outperform AIs is not “knowing how to do a task”, but rather “not knowing how to do a task, and then figuring it out”. So in my opinion, it’s not really about stored information.[16]

For (1), among other things, if we think of GPT-3 information storage as “compressed” and human brain cortex information storage as “uncompressed”, then claim (1) would suggest that the brain must be getting dramatically more “bang for its buck” in FLOP terms than GPT-3. But that doesn’t seem to be the case. For example, maybe a forward-pass and backward-pass through GPT-3 adds up to 3e11 FLOP. If the cortex is really 1e14 FLOP/s as above, and if 10% of the cortex is doing the kind of linguistic and high-level conceptual stuff that GPT-3 is doing, and if one forward and backward pass through GPT-3 is vaguely analogous to “thinking a thought” which takes, I dunno, 0.3 seconds or something, then GPT-3 beats the brain in “thinking per FLOP” by a factor of 10—just the reverse of the suggestion of (1)! [As mentioned above, I think there are important differences between GPT-3 and brain algorithms. But I also think that they have something in common such that the previous sentences are meaningful.] So anyway, I find it hard to believe the claim that the brain’s (apparently) extraordinarily-space-inefficient data storage method is really there as a way to reduce compute requirements. [Or if it is, then the 1e14 FLOP/s of Section 3.1 is a dramatic overestimate, which only strengthens my headline conclusion.] I prefer the alternative theories that I mentioned in Section 3.2.
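The "factor of 10" comparison above can be reproduced with a few lines of Python. This is only a sketch of the back-of-the-envelope arithmetic; every constant is one of the rough guesses stated in the paragraph, and the variable names are invented here.

```python
CORTEX_FLOP_PER_S = 1e14     # Section 3.1 guess for the whole cortex
LINGUISTIC_FRACTION = 0.10   # share of cortex doing GPT-3-like work (guess)
SECONDS_PER_THOUGHT = 0.3    # rough duration of "thinking a thought" (guess)
GPT3_FLOP_PER_PASS = 3e11    # forward + backward pass, as guessed above

# FLOP the relevant part of cortex would spend on one "thought"
brain_flop_per_thought = CORTEX_FLOP_PER_S * LINGUISTIC_FRACTION * SECONDS_PER_THOUGHT

# How many times more FLOP the brain spends than GPT-3 per comparable unit of thinking
ratio = brain_flop_per_thought / GPT3_FLOP_PER_PASS
print(f"{brain_flop_per_thought:.1e} FLOP per thought, ratio {ratio:.0f}x")
```

The ratio is linear in each input, so changing any single guess by a factor of 10 changes the result by the same factor; the comparison is only as good as these inputs.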

(…But having said all that, I still feel some vague lingering doubts, and as a matter of fact I did lower my headline probabilities a bit after reading Jacob’s feedback.)

4. Side note: Ratio of training compute to deployed compute

This is not the main theme of this post, but it seems topical and interesting, so here it is anyway.

Conventional wisdom is “Training is a high fixed cost relative to using the trained model.” This is true in both today’s ML systems and brain-like algorithms [LW · GW]. But it seems to be much more true in today’s ML systems than in brain-like algorithms.

For example, raising a newborn human to become a competent 20-year-old engineer takes ≈20× the compute budget of having that now-adult engineer do 1 year of work.

Compare that to Holden Karnofsky’s claim (based on extrapolating from current large language models, I think) that this same factor might be “several hundred million” for AI.

What are we to make of that difference? I don’t have a great answer; I mostly want to shrug and say “Oh well, different algorithms are different”.

(ADDED JUNE 2023: I’m not endorsing this, but should mention that some people explain this difference by saying that, given the size / memory capacity of the human brain, the “optimal” amount of within-lifetime learning (e.g. under Chinchilla scaling) would be millions of years. But living millions of years was not evolutionarily adaptive for early humans, for obvious unrelated reasons, like they would die from other causes much sooner than that. E.g. Carl Shulman makes that argument here, I think towards the end of the podcast. I think there’s probably a kernel of truth to that kind of story, but I’m somewhat skeptical that it’s the majority of the explanation, partly because I don’t think the size / memory capacity of the human brain is nearly as big as it needs to be for that story, and partly because that story seems somewhat incompatible with the data on skill plateaus and such, although there are other age-related trends such that it’s not clear-cut, and partly because I think cortex learning algorithms are sufficiently different from transformers that Chinchilla scaling laws are a priori unlikely to apply even approximately.)

Note the implication though: if the ratio is 20× and not “several hundred million”, and if a retail gaming PC is enough to run AGI, then not only will it be possible to run AGI on abundant and untraceable hardware, but it will also be possible to train AGI from scratch on abundant and untraceable hardware.

On the other hand, I’m not sure how relevant that is to AGI governance. Suppose I’m wrong and the ratio does in fact turn out to be “several hundred million”, as opposed to “20”—such that only a few heavily-regulated-and-monitored actors in the world can train AGIs from scratch. Nevertheless, in this world: (1) the trained model weights are going to wind up on the dark web sooner or later; (2) fine-tuning an AGI seems to have similar risks as training one from scratch, but requires dramatically less compute, presumably little enough compute that a great many actors can do that.

So maybe the question of whether the ratio is like 20 (my guess) versus like several hundred million (Holden’s guess) is not too important a question in practice?

Thanks AI_WAIFU, Justis Mills, Gary Miguel, Thang Duong, and Jacob Cannell for critical comments on drafts.

Updates / Corrigenda after initially publishing this post (added June 2024)

- Mistake 1 I made above: Above I claimed “I think an adult brain is storing only maybe ≲10GB of information”. Gunnar_Zarncke set me straight [LW(p) · GW(p)] by reminding me that I should ponder the evidence from memory savants like Kim Peek & Solomon Shereshevsky. Kim Peek in particular has supposedly memorized 9000 (or is it 12,000?) books mostly-verbatim, among other things. If he was processing the words as pure English text with appropriate compression (e.g., storing references to whole words instead of individual letters), that would be maybe 3 GB or so I think, but it might also be substantially more. After all, Peek was able to memorize harder-to-compress information like phone books. Could Peek have memorized 9000 books full of randomly-generated gibberish letters? Was he storing accurate uncompressed images of each letter, including every smudge? I don’t know. But anyway, at the end of the day, my claim that humans are storing ≲ 10 GB of incompressible information is feeling pretty shaky, and I feel a good deal more comfortable making the weaker claim of “≲ 100 GB” (≈ 1 Tbit).

- Mistake 2 I made above: I was sloppy about the distinction between “useful stored information” and “information storage capacity”. As a stupid example, if I randomly initialize a 1e11-parameter LLM, then “train” it on one and only one token of data, then it has a heck of a lot more information capacity than useful stored information. It took me a while to understand why having “information storage capacity” greatly in excess of “useful stored information” is important for learning, but I think I got it now: Perhaps we can think of a memory system [LW · GW] / trained model like the cortex as consisting of ε% “a generative model of the world that you actually believe in and are acting on”, and (100–ε)% “a zillion zany little possible hypotheses about the world, almost all of which are false, but we’re tracking our credence on each of them anyway just in case one of them gradually accumulates enough bits of evidence to take seriously as an update to the main world-model”. This idea would elegantly explain the ML conventional wisdom that you get better results by training a big model and then compressing / distilling it down, than by training a small model in the first place—after training a big model, you can just throw out its massive scratchpad of infinitesimal credences (which are meaningless noise), and squish the good stuff into a much smaller model. See also: lottery ticket hypothesis [? · GW] & privileging the hypothesis [LW · GW].

- Where am I at now in regards to the headline beliefs at the top? I stand by them—they still seem to roughly capture my current beliefs / guesses, for whatever that’s worth. (Details in footnote.[17])

- ^

To be specific, let's say I'm interested in AGI hardware / compute requirements 3 years after it's widely known how to make an AGI that can do science as well as John von Neumann could. That’s assuming that the AGIs themselves are not appreciably helping (for some reason), or proportionally less than 3 years if the AGIs are helping, or proportionally more than 3 years if the knowledge is secret and thus only a few people are working on it.

- ^

I’m assuming business-as-usual continues in the computing hardware R&D and production ecosystem between now and then, e.g. assuming that nobody bombs the Taiwan fabs.

- ^

It’s entirely possible that one AGI could teleoperate more than one robot simultaneously, e.g. if it also builds a sub-AGI autopilot system that can handle basic movements and only “escalates” to the AGI occasionally when it faces a tricky decision. But if so, that just changes the number of required GPUs by a constant factor.

- ^

Even if hardware progress stops forever right now, and even if I am also underestimating the computational cost of AGI by an order of magnitude, and even if practical intelligence maxes out at the level of John von Neumann for some reason, well you can already rent eight interconnected A100 GPUs for <$10/hr, and it’s pretty clear that an ability to create arbitrary numbers of John von Neumanns for $10/hour each would be radically transformative.

- ^

E.g. Sam Altman 2023: “shorter timelines seem more amenable to coordination and more likely to lead to a slower takeoff due to less of a compute overhang”.

- ^

I expect that most people reading this are already familiar with the case for banning gain-of-function research; see for example here.

- ^

I’m guessing AlphaZero-chess is in the same ballpark as AlphaGo Zero, whose parameters are guessed from random people on the internet here (“23 million”) and here (“46 million”). (OTOH, this [LW · GW] says “original AlphaGo” has “about 5M” parameters, which seems like the odd one out? So I’m figuring that’s a mistake, unless AlphaGo Zero had 5-10× more parameters than the original AlphaGo?) If I cared more I would carefully read the description of the “dual-res” network in the AlphaGo Zero paper and calculate how many parameters it has, or maybe read the LeelaZero documentation more carefully. Please comment if you know, so I can update this post.

- ^

I basically think that Anthony’s claim "I'd find it pretty surprising if biology did not find a computation solution with neurons that is within a couple of orders of magnitude of the [Landauer] limit" is not really based on anything, and so I put very little stock in it, compared to the more specific arguments discussed below. For example, when an unmyelinated axon carries a spike from the neuron's soma, the axon is expending energy (proportional to its length) on running ion pumps, but it's doing no irreversible computation whatsoever, because it’s just moving a bit from one place to another. So that particular functionality is ∞ orders of magnitude less efficient than the Landauer Limit. See also a much more careful discussion of what we can and can’t learn about brain computation from the Landauer limit in Joe Carlsmith’s report Section 4. For example, the report notes that “A typical cortical spike dissipates around 1e10-1e11 kT.” Do we really think the brain is doing millions of FLOP of unavoidable computational work per cortical spike? I sure don’t. Again, see more detailed discussion below.

- ^

For one thing, it seems that 1e14 synapses is a much better guess than 1e15. Joe’s 1e15 number seems to come from an unexplained number in a Henry Markram talk, along with a calculation that involved rounding up several numbers simultaneously and forgetting that, while cortical pyramidal cells have thousands of synapses each, not all brain neurons are cortical pyramidal neurons. For another thing, my read on the evidence is that 0.1 spike/second is a better guess than 1 spike/second, when we weight over synapses (and hence ignore fast-spiking interneurons, which are small); see Joe’s footnote 134 and surrounding discussion.

- ^

For example, directly multiplying the numbers might take a few clock cycles of your laptop’s CPU, whereas simulating a pocket calculator microcontroller chip entails tracking the states of (at least) thousands of transistors and wires and capacitors, as they interact with each other and evolve in time.

- ^

Why do I say “at least 10%ish”? Well, from fMRI and lesion studies, we have some knowledge about what parts of the cortex are storing what kind of information. And for some of those parts of the cortex, the information they’re storing is either language-related or high-level-semantic information of the type that can also be found in LLMs. So basically, certain parts of superior & middle temporal gyrus, temporal pole, angular gyrus, hippocampus, and prefrontal cortex (in my opinion). I think those areas add up to maybe 10% of the cortex? The “at least” is because probably just about every part of the cortex has at least some information that can be found in an LLM, even if it also has information that can’t be found in an LLM, e.g. hard-to-articulate details about the visual appearances of objects.

- ^

GPT-3 has 175B parameters, which for float32 parameters would correspond to 700GB. But I’m guessing (e.g. see here) that GPT-3 could be compressed to 100GB by quantization (and/or other methods) with minor performance loss, and I’m guessing that this compressed GPT-3 would still have 100× more information content regarding grammar and vocabulary and celebrity gossip and entomology and whatnot than any human. I am open to arguments that this estimate is way off.

- ^

Maybe you don’t buy my LLM-based argument, and you think that the cortex actually has >>10GB of information content, and it’s all coming from non-LLM things like audio analysis and production, image analysis and production, motor control, etc. In that case, I would disagree on the grounds that the deep learning community has already produced models that do at least decently on any of those tasks (e.g. speech recognition, speech synthesis, image segmentation, robot motor control, etc.), and I believe that all of those models can be compressed to sub-GB size. So what exactly is all this information that these alleged hundreds or thousands of GB are storing?

- ^

There’s some controversy in the neuroscience community over whether the human brain stores learned information in synapses, or in gene expression, or something else, or all of the above. As it happens, I’m mostly on Team Synapse, but it doesn’t matter for this post. My “10 GB” claim is total information storage, without taking a stand on exactly how that information is physically encoded.

- ^

Note: When running the AGI, the information that needs to be in RAM is not only long-term-stored information / knowledge / habits / etc., but also short-term information like the current contents of working memory, and “memory traces” for learning, etc. I didn’t mention the latter, but I figure it’s probably comparatively small—or at least, not so large as to significantly threaten my “≲10GB” claim. For example, there are only ≈20 billion neocortical neurons total (ref), so for each, we can store some information about how long since it last fired etc. without the total data getting too high.

- ^

Some readers are thinking: No Steve, the human ability to “figure things out” is not about RL or inductive biases or whatever, it is about more and better stored information—it’s just that the stored information is related to meta-learning, and includes better generalizations that stem from diverse real-world training data, etc. My response is: that’s a reasonable hypothesis to entertain, and it is undoubtedly true to some extent, but I still think it’s mostly wrong, and I stand by what I wrote. However, I’m not going to try to convince you of that, because my opinion is coming from “inside view” considerations that I don’t want to get into here.

- ^

The 10 GB versus 100 GB change doesn’t have strong downstream consequences within my mental models—I already had a bunch of wiggle room on that. See where that came up in the text.

The distinction between memory capacity and useful memory content, i.e. “keeping track of (infinitesimal) credences on a zillion zany hypotheses, almost all of which will turn out to be wrong”, doesn’t really change my headline beliefs much either. The reason is not because I think sample efficiency stops mattering when we’re “running an AGI” rather than training. Quite the contrary, I’m very big into continuous learning (weight updates during deployment) as being absolutely critical [LW · GW] for real AGI. Instead, some of my reasons are: (1) When I finally understood this point, it mostly converted my outside-my-model causes for doubt (“I’m confused about where Jacob Cannell is coming from, maybe I’m missing something??”) into within-my-model causes for doubt (“maybe memory bandwidth is a huge constraint after all?”), so I had already kinda accounted for it in the headline belief. And (2) my guess is that “incrementing the credences on a zillion zany little hypotheses” is something that’s relevant <1% of the time (i.e. <1% of spikes-through-synapses), and for the other >99% it’s sufficient to ignore those in favor of the main active beliefs about the world, which can be queried in a much much smaller compressed data structure optimized for inference. (This includes things like (i) I don’t think the cortex updates itself unless there’s local evidence of surprise / confusion; (ii) I think that the vast majority of spikes-through-synapses are related to the nuts-and-bolts machinery of the inference algorithm, and unrelated to the learning algorithm.) Plus other stuff I won’t get into. Basically, I still mostly don’t think memory bottleneck will be an issue in the first place, and if it is, then I expect clever future AI researchers to have lots of options to mitigate it. So anyway, after accounting for those things, plus wiggle-room in my other guesses, I don’t wind up with much of an update at all. 
I’m still mainly concerned about “unknown unknowns” and the other items in 3.3.1—that’s the main reason my 75%/85% headline is not higher.

31 comments

Comments sorted by top scores.

comment by jacob_cannell · 2023-01-25T21:58:16.233Z · LW(p) · GW(p)

He writes that the human brain has “1e13-1e15 spikes through synapses per second (1e14-1e15 synapses × 0.1-1 spikes per second)”. I think Joe was being overly conservative, and I feel comfortable editing this to “1e13-1e14 spikes through synapses per second”, for reasons in this footnote→[9].

I agree that 1e14 synaptic spikes/second is the better median estimate, but those are highly sparse ops.

So when you say:

So I feel like 1e14 FLOP/s is a very conservative upper bound on compute requirements for AGI. And conveniently for my narrative, that number is about the same as the 8.3e13 FLOP/s that one can perform on the RTX 4090 retail gaming GPU that I mentioned in the intro.

You are missing some foundational differences in how von Neumann arch machines (GPUs) run neural circuits vs how neuromorphic hardware (like the brain) runs neural circuits.

The 4090 can hit around 1e14 - even up to 1e15 - flops/s, but only for dense matrix multiplication. The flops required to run a brain model using that dense matrix hardware are more like 1e17 flops/s, not 1e14 flops/s. The 1e14 synapses are at least 10x locally sparse in the cortex, so dense emulation requires 1e15 synapses (mostly zeroes) running at 100hz. The cerebellum is actually even more expensive to simulate .. because of the more extreme connection sparsity there.

But that isn't the only performance issue. The GPU only runs matrix-matrix multiplication, not the more general vector-matrix multiplication. So in that sense the dense flop perf is useless, and the perf would instead be RAM bandwidth limited and require 100 4090s to run a single 1e14 synapse model, as it requires about 1 byte of bandwidth per flop: 1e14 bytes/s vs the 4090's 1e12 bytes/s.
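(A rough sketch of the arithmetic in the last two paragraphs, in Python. It only restates the numbers given there: 10x dense padding, a 100 Hz update rate, 1 byte of bandwidth per flop, and 1e12 bytes/s per GPU. The variable names are illustrative assumptions, not anything from a real library.)

```python
SYNAPSES = 1e14                 # sparse synapse count
DENSE_PADDING = 10              # "at least 10x locally sparse" => 10x more (mostly zero) weights
UPDATE_RATE_HZ = 100            # dense emulation rate

# Dense emulation: every padded weight is touched at every step
dense_flop_per_s = SYNAPSES * DENSE_PADDING * UPDATE_RATE_HZ

# Bandwidth-limited case: about 1 byte of memory traffic per flop at 1e14 flop/s
BYTES_PER_FLOP = 1
SPARSE_FLOP_PER_S = 1e14
required_bytes_per_s = SPARSE_FLOP_PER_S * BYTES_PER_FLOP

GPU_BYTES_PER_S = 1e12          # one RTX 4090, round figure
gpus_needed = required_bytes_per_s / GPU_BYTES_PER_S

print(f"dense: {dense_flop_per_s:.0e} flop/s, GPUs needed: {gpus_needed:.0f}")
```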

Your reply seems to be "but the brain isn't storing 1e14 bytes of information", but as other comments point out that has little to do with the neural circuit size.

The true fundamental information capacity of the brain is probably much smaller than 1e14 bytes, but that has nothing to do with the size of an actually *efficient* circuit, because efficient circuits (efficient for runtime compute, energy etc) are never also efficient in terms of information compression.

This is a general computational principle, with many specific examples: compressed neural frequency encodings of 3D scenes (NERFs) which access/use all network parameters to decode a single point O(N) are enormously less computationally efficient (runtime throughput, latency, etc) than maximally sparse representations (using trees, hashtables etc) which approach O(log(N)) or O(C), but the sparse representations are enormously less compressed/compact. These tradeoffs are foundational and unavoidable.

We also know that in many cases the brain and some ANN are actually computing basically the same thing in the same way (LLMs and linguistic cortex), and it's now obvious and uncontroversial that the brain is using the sparser but larger version of the same circuit, whereas the LLM ANN is using the dense version which is more compact but less energy/compute efficient (as it uses/accesses all params all the time).

Replies from: steve2152↑ comment by Steven Byrnes (steve2152) · 2023-01-27T19:14:53.011Z · LW(p) · GW(p)

Thanks!

We also know that in many cases the brain and some ANN are actually computing basically the same thing in the same way (LLMs and linguistic cortex), and it's now obvious and uncontroversial that the brain is using the sparser but larger version of the same circuit, whereas the LLM ANN is using the dense version which is more compact but less energy/compute efficient (as it uses/accesses all params all the time).

I disagree with “uncontroversial”. Just off the top of my head, people who I’m pretty sure would disagree with your “uncontroversial” claim include Randy O’Reilly, Josh Tenenbaum, Jeff Hawkins, Dileep George, these people, maybe some of the Friston / FEP people, probably most of the “evolved modularity” people like Steven Pinker, and I think Kurzweil (he thought the cortex was built around hierarchical hidden Markov models, last I heard, which I don’t think are equivalent to ANNs?). And me! You’re welcome to argue that you’re right and we’re wrong (and most of that list are certainly wrong, insofar as they’re also disagreeing with each other!), but it’s not “uncontroversial”, right?

The true fundamental information capacity of the brain is probably much smaller than 1e14 bytes, but that has nothing to do with the size of an actually *efficient* circuit, because efficient circuits (efficient for runtime compute, energy etc) are never also efficient in terms of information compression.

In the OP (Section 3.3.1) I talk about why I don’t buy that—I don’t think it’s the case that the brain gets dramatically more “bang for its buck” / “thinking per FLOP” than GPT-3. In fact, it seems to me to be the other way around.

Then “my model of you” would reply that GPT-3 is much smaller / simpler than the brain, and that this difference is the very important secret sauce of human intelligence, and the “thinking per FLOP” comparison should not be brain-vs-GPT-3 but brain-vs-super-scaled-up-GPT-N, and in that case the brain would crush it. And I would disagree about the scale being the secret sauce. But we might not be able to resolve that—guess we’ll see what happens! See also footnote 16 and surrounding discussion.

Replies from: jacob_cannell↑ comment by jacob_cannell · 2023-01-27T20:47:34.552Z · LW(p) · GW(p)

I disagree with “uncontroversial”. Just off the top of my head, people who I’m pretty sure would disagree with your “uncontroversial” claim include

Uncontroversial was perhaps a bit tongue-in-cheek, but that claim is specifically about a narrow correspondence between LLMs and linguistic cortex, not about LLMs and the entire brain or the entire cortex.

And this claim should now be uncontroversial. The neuroscience experiments have been done, and linguistic cortex computes something similar to what LLMs compute, and almost certainly uses a similar predictive training objective. It obviously implements those computations in a completely different way on very different hardware, but they are mostly the same computations nonetheless - because the task itself determines the solution.

Examples from recent neurosci literature:

From "Brains and algorithms partially converge in natural language processing":

Deep learning algorithms trained to predict masked words from large amount of text have recently been shown to generate activations similar to those of the human brain. However, what drives this similarity remains currently unknown. Here, we systematically compare a variety of deep language models to identify the computational principles that lead them to generate brain-like representations of sentences

From "The neural architecture of language: Integrative modeling converges on predictive processing":

Here, we report a first step toward addressing this gap by connecting recent artificial neural networks from machine learning to human recordings during language processing. We find that the most powerful models predict neural and behavioral responses across different datasets up to noise levels.

We found a striking correspondence between the layer-by-layer sequence of embeddings from GPT2-XL and the temporal sequence of neural activity in language areas. In addition, we found evidence for the gradual accumulation of recurrent information along the linguistic processing hierarchy. However, we also noticed additional neural processes that took place in the brain, but not in DLMs, during the processing of surprising (unpredictable) words. These findings point to a connection between language processing in humans and DLMs where the layer-by-layer accumulation of contextual information in DLM embeddings matches the temporal dynamics of neural activity in high-order language areas.

Then “my model of you” would reply that GPT-3 is much smaller / simpler than the brain, and that this difference is the very important secret sauce of human intelligence, and the “thinking per FLOP” comparison should not be brain-vs-GPT-3 but brain-vs-super-scaled-up-GPT-N, and in that case the brain would crush it.

Scaling up GPT-3 by itself is like scaling up linguistic cortex by itself, and doesn't lead to AGI any more/less than that would (pretty straightforward consequence of the LLM <-> linguistic_cortex (mostly) functional equivalence).

In the OP (Section 3.3.1) I talk about why I don’t buy that—I don’t think it’s the case that the brain gets dramatically more “bang for its buck” / “thinking per FLOP” than GPT-3. In fact, it seems to me to be the other way around.

The comparison should be between GPT-3 and linguistic-cortex, not the whole brain. For inference the linguistic cortex uses many orders of magnitude less energy to perform the same task. For training it uses many orders of magnitude less energy to reach the same capability, and several OOM less data. In terms of flops-equivalent it's perhaps 1e22 sparse flops for training linguistic cortex (1e13 flops * 1e9 seconds) vs 3e23 flops for training GPT-3. So fairly close, but the brain is probably trading some compute efficiency for data efficiency.

Replies from: steve2152, TekhneMakre↑ comment by Steven Byrnes (steve2152) · 2023-01-31T17:44:27.767Z · LW(p) · GW(p)

The comparison should be between GPT-3 and linguistic-cortex

For the record, I did account for language-related cortical areas being ≈10× smaller than the whole cortex, in my Section 3.3.1 comparison. I was guessing that a double-pass through GPT-3 involves 10× fewer FLOP than running language-related cortical areas for 0.3 seconds, and those two things strike me as accomplishing a vaguely comparable amount of useful thinking stuff, I figure.

So if the hypothesis is “those cortical areas require a massive number of synapses because that’s how the brain reduces the number of FLOP involved in querying the model”, then I find that hypothesis hard to believe. You would have to say that the brain’s model is inherently much much more complicated than GPT-3, such that even after putting it in this heavy-on-synapses-lite-on-FLOP format, it still takes much more FLOP to query the brain’s language model than to query GPT-3. And I don’t think that. (Although I suppose this is an area where reasonable people can disagree.)

For inference the linguistic cortex uses many orders of magnitude less energy to perform the same task.

I don’t think energy use is important. For example, if a silicon chip takes 1000× more energy to do the same calculations as a brain, nobody would care. Indeed, I think they’d barely even notice—the electricity costs would still be much less than my local minimum wage. (20 W × 1000 × 10¢/kWh = $2/hr. Maybe a bit more after HVAC and so on.)
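The electricity arithmetic in the parenthetical can be checked with a short sketch. All three inputs are the figures quoted above; the function name `hourly_cost_usd` is made up for illustration, and HVAC overhead is deliberately left out.

```python
# Back-of-envelope electricity cost, using only the figures quoted above.
BRAIN_POWER_W = 20.0          # rough power draw of a brain, in watts
CHIP_ENERGY_PENALTY = 1000.0  # hypothetical chip that needs 1000x more energy
PRICE_USD_PER_KWH = 0.10      # 10 cents per kWh


def hourly_cost_usd(power_w: float, penalty: float, price_per_kwh: float) -> float:
    """Cost in USD of running for one hour at power_w * penalty watts."""
    kilowatts = power_w * penalty / 1000.0
    return kilowatts * price_per_kwh  # kW * 1 h * USD/kWh


cost = hourly_cost_usd(BRAIN_POWER_W, CHIP_ENERGY_PENALTY, PRICE_USD_PER_KWH)
print(f"${cost:.2f}/hr")
```

Swapping in a different electricity price or energy penalty shows how far either would have to move before the hourly cost approached a typical wage.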

I’ve noticed that you bring up energy consumption with some regularity, so I guess you must think that energy efficiency is very important, but I don’t understand why you think that.

For training it uses many orders of magnitude less energy to reach the same capability, and several OOM less data…So fairly close, but the brain is probably trading some compute efficiency for data efficiency.

Other than Section 4, this post was about using an AGI, not training it from scratch. If your argument is “data efficiency is an important part of the secret sauce of human intelligence, not just in training-from-scratch but also in online learning, and the brain is much better at that than GPT-3, and we can’t directly see that because GPT-3 doesn’t have online learning in the first place, and the reason that the brain is much better at that is because it has this super-duper-over-parametrized model”, then OK that’s a coherent argument, even if I happen to think it’s mostly wrong. (Is that your argument?)

And this claim should now be uncontroversial. The neuroscience experiments have been done, and linguistic cortex computes something similar to what LLMs compute, and almost certainly uses a similar predictive training objective. It obviously implements those computations in a completely different way on very different hardware, but they are mostly the same computations nonetheless - because the task itself determines the solution.

Suppose (for the sake of argument—I don’t actually believe this) that human visual cortex is literally a ConvNet. And suppose that human scientists have never had the idea of ConvNets, but they have invented fully-connected feedforward neural nets. So they set about testing the hypothesis that “visual cortex is a fully-connected feedforward neural net”. I suspect that they would find a lot of evidence that apparently confirms this hypothesis, of the same sorts that you describe. For example, similar features would be learned in similar layers. There would be some puzzling discrepancies—especially sample efficiency, and probably also the handling of weird out-of-distribution inputs—but lots of experiments would miss those. So then (in this hypothetical universe) many people would be trumpeting the conclusion: “visual cortex is a fully-connected feedforward DNN”! But they would be wrong! And the careful neuroscientists—the ones who are scrutinizing brain structures, and/or doing experiments more sophisticated than correlating activities in unmanipulated naturalistic data, etc.—would be well aware of that.

There’s a skeptical discussion about specifically LLMs-vs-brains, with some references, in the first part of Section 5 of Bowers et al.

Replies from: jacob_cannell

↑ comment by jacob_cannell · 2023-02-01T01:39:12.914Z · LW(p) · GW(p)

For the record, I did account for language-related cortical areas being ≈10× smaller than the whole cortex, in my Section 3.3.1 comparison. I was guessing that a double-pass through GPT-3 involves 10× fewer FLOP than running language-related cortical areas for 0.3 seconds, and those two things strike me as accomplishing a vaguely comparable amount of useful thinking stuff, I figure.

In your analysis the brain is using perhaps 1e13 flops/s (which I don't disagree with much), and if linguistic cortex is 10% of that we get 1e12 flops/s, or 300B flops for 0.3 seconds.

GPT-3 uses all of its nearly 200B parameters for one forward pass, but the flop count is probably 2x that (because the attention layers don't use the long term params), and then you are using a 'double pass', so closer to 800B flops for GPT-3. Perhaps the total brain is 1e14 flops/s, so 3T flops for 0.3s of linguistic cortex, but regardless it's still using roughly the same amount of flops within our uncertainty range.
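A minimal sketch of the comparison above, using only the numbers in this comment. The 2x factor and the 10% language fraction are the comment's own rough guesses, and the function names are invented for illustration.

```python
# Rough FLOP-per-query comparison using the figures quoted in this comment.
GPT3_PARAMS = 200e9        # "nearly 200B parameters" (the published count is 175B)
FLOP_PER_PARAM = 2.0       # the comment's "probably 2x that" factor
PASSES = 2                 # the 'double pass'

LANGUAGE_FRACTION = 0.10   # linguistic cortex taken as ~10% of the brain
WINDOW_S = 0.3             # seconds of cortical processing being compared


def gpt3_double_pass_flop() -> float:
    """FLOP for two forward passes through the model."""
    return GPT3_PARAMS * FLOP_PER_PARAM * PASSES


def cortex_flop(whole_brain_flop_per_s: float) -> float:
    """FLOP spent by the language areas over the comparison window."""
    return whole_brain_flop_per_s * LANGUAGE_FRACTION * WINDOW_S


gpt3 = gpt3_double_pass_flop()
for brain_rate in (1e13, 1e14):
    cortex = cortex_flop(brain_rate)
    print(f"brain {brain_rate:.0e} FLOP/s: cortex {cortex:.1e} FLOP, "
          f"GPT-3 {gpt3:.1e} FLOP, ratio {cortex / gpt3:.2f}")
```

Under these inputs the two brain estimates land on either side of the GPT-3 figure, within roughly a factor of four, which is what "within our uncertainty range" amounts to here.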

However, as I mentioned earlier, a model like GPT-3 running inference on a GPU is much less efficient than this, as many of the matrix mult calls (in training, parallelizing over time) become vector matrix mult calls and thus RAM bandwidth limited.

So if the hypothesis is “those cortical areas require a massive number of synapses because that’s how the brain reduces the number of FLOP involved in querying the model”, then I find that hypothesis hard to believe.

The brain's sparsity obviously reduces the equivalent flop count vs running the same exact model on dense mat mul hardware, but interestingly enough ends up in roughly the same "flops used for inference" regime as the smaller dense model. However it is getting by with perhaps 100x less training data (for the linguistic cortex at least).

If your argument is “data efficiency is an important part of the secret sauce of human intelligence, not just in training-from-scratch but also in online learning, and the brain is much better at that than GPT-3, and we can’t directly see that because GPT-3 doesn’t have online learning in the first place, and the reason that the brain is much better at that is because it has this super-duper-over-parametrized model”, then OK that’s a coherent argument, even if I happen to think it’s mostly wrong.

The scaling laws indicate that performance mostly depends on net training compute, and it doesn't matter as much as you think how you allocate that between size/params (and thus inference flops) and time/data (training steps). A larger model spends more compute per training step to learn more from less data. GPT-3 used 3e23 flops for training, whereas the linguistic cortex uses perhaps 1e21 to 1e22 (1e13 * 1e9s), but GPT-3 trains on almost 3 OOM more equivalent token data and thus can be much smaller in proportion.
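For concreteness, the training-compute gap in the paragraph above can be written out as follows. The 3e23 figure, the 1e13 rate and the 1e9 seconds come from the comment; the 1e12 FLOP/s low end is an assumption chosen so that the product matches the quoted "1e21 to 1e22" range.

```python
import math

GPT3_TRAIN_FLOP = 3e23      # figure quoted in the comment
TRAINING_SECONDS = 1e9      # roughly 30 years, as in "1e13 * 1e9s"

# 1e13 FLOP/s is the comment's figure; 1e12 FLOP/s is an assumed low end
# that reproduces the lower bound of the quoted "1e21 to 1e22" range.
CORTEX_RATES_FLOP_PER_S = (1e12, 1e13)


def oom_gap(larger: float, smaller: float) -> float:
    """Difference in orders of magnitude between two quantities."""
    return math.log10(larger / smaller)


for rate in CORTEX_RATES_FLOP_PER_S:
    cortex_train_flop = rate * TRAINING_SECONDS
    gap = oom_gap(GPT3_TRAIN_FLOP, cortex_train_flop)
    print(f"cortex at {rate:.0e} FLOP/s: {cortex_train_flop:.0e} FLOP total, "
          f"GPT-3 ahead by {gap:.1f} OOM")
```

On these inputs GPT-3's training compute exceeds the cortex estimate by roughly 1.5 to 2.5 orders of magnitude, which is the size of the gap the comment attributes to the difference in training data.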

So the brain is more flop efficient, but only because it's equivalent to a much larger dense model trained on much less data.

LLMs on GPUs are heavily RAM constrained but have plentiful data, so they naturally have moved to the (smaller model, trained longer) regime vs the brain. For the brain synapses are fairly cheap, but training data time is not.

↑ comment by TekhneMakre · 2023-01-27T22:55:15.953Z · LW(p) · GW(p)

I glanced at the first paper you cited, and it seems to show a very weak form of the statements you made. AFAICT their results are more like "we found brain areas that light up when the person reads 'cat', just like how this part of the neural net lights up when given input 'cat'" and less like "the LLM is useful for other tasks in the same way as the neural version is useful for other tasks". Am I confused about what the paper says, and if so, how? What sort of claim are you making?

comment by hold_my_fish · 2023-01-24T18:20:06.593Z · LW(p) · GW(p)

I figure, at least 10%ish of the cortex is probably mainly storing information which one could also find in a 2022-era large language model (LLM).

This seems to me to be essentially assuming the conclusion. The assumption here is that a 2022 LLM already stores all the information necessary for human-level language ability and that no capacity is needed beyond that. But "how much capacity is required to match human-level ability" is the hardest part of the question.

(The "no capacity is needed beyond that" part is tricky too. I take AI_WAIFU's core point to be that having excess capacity is helpful for algorithmic reasons, even though it's beyond what's strictly necessary to store the information if you were to compress it. But those algorithmic reasons, or similar ones, might apply to AI as well.)

I might as well link my own attempt at this estimate. It's not estimating the same thing (since I'm estimating capacity and you're estimating stored information), so the numbers aren't necessarily in disagreement. My intuition though is that capacity is quite important algorithmically, so it's the more relevant number.

(Edit: Among the sources of that intuition is Neural Tangent Kernel theory, which studies a particular infinite-capacity limit.)

Replies from: steve2152, steve2152

↑ comment by Steven Byrnes (steve2152) · 2023-01-24T18:47:05.898Z · LW(p) · GW(p)

If you think human brains are storing hundreds or thousands of GB or more of information about (themselves / the world / something), do you have any thoughts on what that information is? Like, can you give (stylized) examples? (See also my footnote 13.)

Also, see my footnote 16 and surrounding discussion; maybe that’s a crux?

Replies from: None, hold_my_fish

↑ comment by [deleted] · 2023-01-24T20:37:46.819Z · LW(p) · GW(p)

Hi Steven.

A simple stylized example: imagine you have some algorithm for processing each cluster of inputs from the retina.

You might think that because that algorithm is symmetric* - you want to run the same algorithm regardless of which cluster it is - you only need one copy of the bytecode that represents the compiled copy of the algorithm.

This is not the case. Information wise, sure. There is only one program that takes n bytes of information. You can save disk space for holding your model.

RAM/cache consumption: each of the parallel processing units you have to use (you will not get realtime results for images if you try to do it serially) must have its own copy of the algorithm.

And this rule applies throughout the human body: every nerve cluster, audio processing, etc.

This also greatly expands the memory required over your 24 GB 4090 example. For one thing, the human brain is very sparse, and while Nvidia has managed to improve sparse network performance, it still requires memory to represent all the sparse values.

I might note that you could have tried to fill in the "cartoon switch" for human synapses. They are likely a MAC for each incoming axon at no better than 8 bits of precision added to an accumulator for the cell membrane at the synapse that has no better than 16 bits of precision. (it's probably less but the digital version has to use 16 bits)

So add up the number of synapses in the human brain, assume 1 kHz, and that's how many TOPS you need.

Let me do the math for you real quick:

68 billion neurons, about 1000 connections each, at 1 kHz. (it's very sparse). So we need 68 billion x 1000 x 1000 = 6.8e16 ops/s = 68,000 TOPS.

Current gen data center GPU: https://www.nvidia.com/en-us/data-center/h100/

So we would need 17 of them to hit 1 human brain. We can assume you will never get maximum performance (especially due to the very high sparsity), so maybe 2-3 nodes with 16 cards each?
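The compute side of this estimate can be reproduced in a few lines. The neuron count, fan-in and rate are the comment's figures; the per-card throughput of about 4000 TOPS is an assumption, implied by the comment's "17 of them" and close to Nvidia's headline INT8-with-sparsity figure for the H100.

```python
import math

NEURONS = 68_000_000_000      # the comment's figure
SYNAPSES_PER_NEURON = 1_000   # "about 1000 connections each"
UPDATE_RATE_HZ = 1_000        # "assume 1 kHz"

# Assumed per-card throughput: implied by the comment's "17 of them"
# (68,000 / 17 = 4,000), not taken from a measured benchmark.
GPU_TOPS = 4_000

ops_per_second = NEURONS * SYNAPSES_PER_NEURON * UPDATE_RATE_HZ
tops_needed = ops_per_second // 10**12          # 1 TOPS = 1e12 ops/s
gpus_at_peak = math.ceil(tops_needed / GPU_TOPS)

print(f"{ops_per_second:.1e} ops/s = {tops_needed:,} TOPS")
print(f"GPUs needed at peak throughput: {gpus_at_peak}")
```

This counts one operation per synapse per millisecond and assumes every card runs at its peak rate, so it is a lower bound on hardware under the comment's own model, which is why the comment pads it to 2-3 nodes.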

Note they would have a total of 3840 gigabytes of memory.

Since we have 68 billion x 1000 x (1 byte) = 68 terabytes of weights in a brain, that's the problem. We only have 5% as much memory as we need.

This is the reason for neuromorphic compute based on SSDs: compute's not the bottleneck, memory is.

We can get brain scale perf with 20 times as much hardware, or 20*3*16 = 960 A100s. They are 25k each so 24 million for the GPUs, plus all the motherboards and processors and rack space. Maybe 50 million?
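The memory and cost side of the estimate can be sketched the same way. The 80 GB per card is an assumption implied by the comment's 3840 GB across 3 x 16 cards; everything else is quoted from the comment, including the rounded 20x scale-up and the $25k unit price.

```python
NEURONS = 68_000_000_000
SYNAPSES_PER_NEURON = 1_000
BYTES_PER_WEIGHT = 1            # 8-bit weights, per the comment

NODES = 3
GPUS_PER_NODE = 16
GPU_MEMORY_GB = 80              # assumed; implied by 3840 GB / 48 cards
SCALE_UP = 20                   # the comment's rounded "20 times as much hardware"
PRICE_PER_GPU_USD = 25_000      # the comment's "25k each"

weight_bytes = NEURONS * SYNAPSES_PER_NEURON * BYTES_PER_WEIGHT
weight_tb = weight_bytes // 10**12

base_gpus = NODES * GPUS_PER_NODE
base_memory_gb = base_gpus * GPU_MEMORY_GB
memory_fraction = base_memory_gb * 10**9 / weight_bytes

scaled_gpus = SCALE_UP * base_gpus
gpu_cost_usd = scaled_gpus * PRICE_PER_GPU_USD

print(f"weights: {weight_tb} TB; memory on {base_gpus} cards: {base_memory_gb} GB "
      f"({memory_fraction:.1%} of what is needed)")
print(f"scaled up: {scaled_gpus} cards, about ${gpu_cost_usd:,} for GPUs alone")
```

The unrounded memory fraction comes out slightly above the quoted 5%, so the 20x multiplier is a mildly conservative rounding.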

That's a drop in the bucket and easily affordable by current AI companies.

Epistemic notes: I'm a computer engineer (CS masters/ml) and I work on inference accelerators.

\* It's not symmetric: the retinal density varies by position in the eye.

↑ comment by Steven Byrnes (steve2152) · 2023-01-25T18:45:24.224Z · LW(p) · GW(p)

Thanks for your comment! I am not a GPU expert, if you didn’t notice. :)

I might note that you could have tried to fill in the "cartoon switch" for human synapses. They are likely a MAC for each incoming axon…

This is the part I disagree with. For example, in the OP I cited this paper which has no MAC operations, just AND & OR. More importantly, you’re implicitly assuming that whatever neocortical neurons are doing, the best way to do that same thing on a chip is to have a superficial 1-to-1 mapping between neurons-in-the-brain and virtual-neurons-on-the-chip. I find that unlikely. Back to that paper just above, things happening in the brain are (supposedly) encoded as random sparse subsets of active neurons drawn from a giant pool of neurons. We could do that on the chip, if we wanted to, but we don’t have to! We could assign them serial numbers instead! We can do whatever we want! Also, cortical neurons are arranged into six layers vertically, and in the other direction, 100 neurons are tied into a closely-interconnected cortical minicolumn, and 100 minicolumns in turn form a cortical column. There’s a lot of structure there! Nobody really knows, but my best guess from what I’ve seen is that a future programmer might have one functional unit in the learning algorithm called a “minicolumn” and it’s doing, umm, whatever it is that minicolumns do, but we don’t need to implement that minicolumn in our code by building it out of 100 different interconnected virtual neurons. Yes the brain builds it that way, but the brain has lots of constraints that we won’t have when we’re writing our own code—for example, a GPU instruction set can do way more things than biological neurons can (partly because biological neurons are so insanely slow that any operation that requires more than a couple serial steps is a nonstarter).

Replies from: None

↑ comment by [deleted] · 2023-01-25T19:04:39.521Z · LW(p) · GW(p)

Please read a neuroscience book, even an introductory one, on how a synapse works. Just 1 chapter, even.