Propaganda or Science: A Look at Open Source AI and Bioterrorism Risk

post by 1a3orn · 2023-11-02T18:20:29.569Z


0: TLDR

I examined all the biorisk-relevant citations from a policy paper arguing that we should ban powerful open source LLMs.

None of them provide good evidence for the paper's conclusion. The best of the set is evidence from statements from Anthropic -- which rest upon data that no one outside of Anthropic can even see, and on Anthropic's interpretation of that data. The rest of the evidence cited in this paper ultimately rests on a single extremely questionable "experiment" without a control group.

In all, citations in the paper provide an illusion of evidence ("look at all these citations") rather than actual evidence ("these experiments are how we know open source LLMs are dangerous and could contribute to biorisk").

A recent further paper on this topic (published after I had started writing this review) continues this pattern of being more advocacy than science.

Almost all the bad papers that I look at are funded by Open Philanthropy. If Open Philanthropy cares about truth, then they should stop burning the epistemic commons by funding "research" that is always going to give the same result no matter the state of the world.

1: Principles

What could constitute evidence that powerful open-source language models contribute or will contribute substantially to the creation of biological weapons, and thus that we should ban them?

That is, what kind of anticipations would we need to have about the world to make that a reasonable thing to think? What other beliefs are a necessary part of this belief making any sense at all?

Well, here are two pretty-obvious principles to start out with:

- Principle of Substitution: We should have evidence of some kind that the LLMs can (or will) provide information that humans cannot also easily access through other means -- i.e., through the internet, textbooks, YouTube videos, and so on.

- Blocker Principle: We should have evidence that the lack of information that LLMs can (or will) provide is in fact a significant blocker to the creation of bioweapons.

The first of these is pretty obvious. As an example: There's no point in preventing an LLM from telling me how to make gunpowder, because I can find out how to do that from an encyclopedia, a textbook, or a novel like Blood Meridian. If you can substitute some other source of information for an LLM with only a little inconvenience, then an LLM does not contribute to the danger.

The second is mildly less obvious.

In short, it could be that most of the blocker to creating an effective bioweapon is not knowledge -- or the kind of knowledge that an LLM could provide -- but something else. This "something else" could be access to DNA synthesis; it could be the process of culturing a large quantity of the material; it could be the necessity of a certain kind of test; or it could be something else entirely.

You could compare to atomic bombs -- the chief obstacle to building atomic bombs is probably not the actual knowledge of how to do this, but access to refined uranium. Thus, rather than censor every textbook on atomic physics, we can simply control access to refined uranium.

Regardless, if this other blocker constitutes 99.9% of the difficulty in making an effective bioweapon, and lack of knowledge only constitutes 0.1% of the difficulty, then an LLM can only remove that 0.1% of the difficulty, and so open source LLMs would only contribute marginally to the danger. Thus, bioweapons risk would not be a good reason to criminalize open-source LLMs.
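To make the arithmetic above concrete, here is a minimal sketch. It assumes, purely for illustration, that total difficulty can be split into additive shares and that an LLM removes some fraction of the knowledge share and nothing else. The function name and its parameters are hypothetical; only the 0.1% figure comes from the example above.

```python
def remaining_difficulty(knowledge_share: float, fraction_removed: float = 1.0) -> float:
    """Fraction of total difficulty left after an LLM removes part of the knowledge barrier.

    Assumes difficulty is additive across blockers (an illustrative simplification).
    """
    if not (0.0 <= knowledge_share <= 1.0 and 0.0 <= fraction_removed <= 1.0):
        raise ValueError("shares must lie in [0, 1]")
    return 1.0 - knowledge_share * fraction_removed


# The example from the text: knowledge is 0.1% of the difficulty,
# and the LLM removes all of that knowledge barrier.
print(remaining_difficulty(knowledge_share=0.001))
```

Under this toy model, even an LLM that removes the whole knowledge barrier leaves 99.9% of the difficulty in place. The conclusion depends entirely on the shares, which is why the Blocker Principle asks for evidence about them.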

(I am not speaking theoretically here -- a paper from a researcher at the Future of Humanity Institute argues that the actual product development cycle involved in creating a bioweapon is far, far more of an obstacle to its creation than the basic knowledge of how to create it. This is great news if true -- we wouldn't need to worry about outlawing open-source LLMs for this reason, and we could perhaps use them freely! Yet, I can find zero mention of this paper on LessWrong, EAForum, or even on the Future of Humanity Institute website. It's surely puzzling for people to be so indifferent about a paper that might free them from something that they're so worried about!)

The above two principles -- or at the very least the first -- are the minimum for the kind of things you'd need to consider to show that we should criminalize LLMs because of biorisk.

Arguments for banning open source LLMs that do not consider the alternative ways of gaining dangerous information are entirely non-serious. And arguments for banning them that do not consider what role LLMs play in the total risk chain are only marginally more thoughtful.

Any actually good discussion of the matter will not end with these two principles, of course.

You need to also compare the good that open source AI would do against the likelihood and scale of the increased biorisk. The 2001 anthrax attacks killed 5 people; if open source AI accelerated the cure for several forms of cancer, then even a hundred such attacks could easily be worth it. Serious deliberation about the actual costs of criminalizing open source AI -- deliberations that do not rhetorically minimize such costs, shrink from looking at them, or emphasize "other means" of establishing the same goal that in fact would only do 1% of the good -- would be necessary for a policy paper to be a policy paper and not a puff piece.
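The comparison above can be written as a break-even calculation. This is a minimal sketch under a loud assumption: every attack is the size of the 2001 anthrax attacks, as in the illustration above, so it says nothing about larger attacks. The function names are hypothetical, and `lives_saved` is a parameter to be supplied, not an estimate.

```python
def break_even_lives_saved(attacks: int, deaths_per_attack: int) -> int:
    """Lives a benefit would need to save to offset the given number of attacks."""
    if attacks < 0 or deaths_per_attack < 0:
        raise ValueError("counts must be non-negative")
    return attacks * deaths_per_attack


def net_lives(lives_saved: int, attacks: int, deaths_per_attack: int) -> int:
    """Net lives under the toy model: benefit minus harm. Positive means net benefit."""
    return lives_saved - break_even_lives_saved(attacks, deaths_per_attack)


# The illustration from the text: a hundred attacks at 5 deaths each.
print(break_even_lives_saved(attacks=100, deaths_per_attack=5))  # 500
```

A serious policy analysis would replace these point values with distributions over attack size and benefit, but it would still have to put both sides of the ledger into the same calculation.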

(Unless I'm laboring beneath a grave misunderstanding of what a policy paper is actually intended to be, which is a hypothesis that has occurred to me more than a few times while I was writing this essay.)

Our current social deliberative practice is bad at this kind of math, of course, and immensely risk averse.

2: "Open-Sourcing Highly Capable Foundation Models"

As a proxy for the general "state of the evidence" for whether open-source LLMs would contribute to bioterrorism, I looked through the paper "Open-Sourcing Highly Capable Foundation Models" from the Center for the Governance of AI. I followed all of the biorisk-relevant citations I could find. (I'll refer to this henceforth as the "Open-Sourcing" paper, even though it's mostly about the opposite.)

I think it is reasonable to treat this as a proxy for the state of the evidence, because lots of AI policy people specifically praised it as a good and thoughtful paper on policy.

The paper is a nicely formatted PDF, with abstract-art frontispiece and nice typography; it looks serious and impartial; it clearly intends policy-makers and legislators to listen to its recommendations on the grounds that it provides actual evidence for its recommendations.

The paper specifically mentions the dangers of biological weapons in its conclusion that some highly capable foundation models are just too dangerous to open source. It clearly wants you to come away from reading the paper thinking that "bioweapons risk" is a strong supporting pillar for the overall claim "do not open source highly capable foundation models."

The paper is aware of the substitutionary principle: that LLMs must be able to provide information not available through other means, if bioweapons-risk is to provide any evidence that open-sourcing is too dangerous.

Thus, it several times alludes to how foundation models could "reduce the human expertise" required for making bioweapons (p13) or help relative to solely internet access (p7).

However, the paper does not specifically make the case that this is true, or really discuss it in any depth. It instead simply cites other papers as evidence that this is -- or will be -- true. This is in itself very reasonable -- if those other papers in fact provide good experimental evidence or even tight argumentative reasoning that this is so.

So, let's turn to the other papers.

There are three clusters of citations, in general, around this. The three clusters are something like:

- Background information on LLM capabilities, or non-LLM-relevant biorisks

- Anthropic or OpenAI documents / employees

- Papers by people who are ostensibly scientists

So let's go through them in turn.

Important note: I think one way that propaganda works, in general, is through Brandolini's Law -- it takes more energy to explain why bullshit is bullshit than to produce bullshit. The following many thousand words are basically an attempt to explain why all of the citations about one topic in a single policy paper are, in fact, propaganda, and are a facade of evidence rather than evidence. My effort is thus weakened by Brandolini's Law -- I simply could not examine all the citations in the paper, rather than only the biorisk-relevant citations, without being paid, although I think they are of similar quality -- and I apologize for the length of what follows.

3: Group 1: Background material

These papers are not cited specifically to talk about dangers of open source LLMs, but instead as background about general LLM capabilities or narrow non-LLM capabilities. I include them mostly for the sake of completeness.

For instance, as support for the claim that LLMs have great "capabilities in aiding and automating scientific research," the "Open-Sourcing" paper cites "Emergent autonomous scientific research capabilities of large language models" and "Augmenting large language models with chemistry tools".

Both of these papers develop AutoGPT-like agents that can attempt to carry out scientific / chemical procedures. Human expertise, in both cases, is deeply embedded in the prompting conditions and tools given to GPT-4. The second paper, for instance, has human experts devise a total of 17 different tools -- often very specific tools -- that GPT-4 can lean on while trying to carry out instructions.

Both papers raise concerns about LLMs making it easier to synthesize substances such as THC, meth, or explosives -- but they mostly just aren't about policy. Nor do they contain much specific reasoning about the danger that open source LLMs create over and above each of the other individual subcomponents of an AutoGPT-like-system.

This makes sense -- the quantity of chemistry-specific expertise embedded in each of their AutoGPT setups would make an analysis of the risks specifically due to the LLM quite difficult to carry out. Anyone who can set up an LLM in an AutoGPT-like-condition with the right 17 tools for it to act effectively probably doesn't need the LLM in the first place.

(Frankly -- I'm somewhat unconvinced that the AutoGPT-like systems they describe will actually be useful at all, but that's a different matter.)

Similarly, the "Open-Sourcing" paper mentions "Biosecurity in the Age of AI," a "chairperson's statement" put out after a meeting convened by Helena Biosecurity. This citation is meant to justify the claim that there are "pressing concerns that AI systems might soon present extreme biological risk" (p8), although notably in this context it is more concerned with narrow AI than foundation models. This mostly cites governmental statements rather than scientific papers -- UN policy papers, executive orders, testimony before congress, proposed regulations and so on.

Honestly, this isn't the kind of statement I'd cite as evidence of "extreme biological risk" from narrow AI systems, because it's basically another policy paper with even fewer apparent citations to non-social reality than the "Open-Sourcing" paper. But if you want to cite it as evidence that there are "pressing concerns" about such AI within social reality rather than the corresponding pressing dangers in actual reality, then sure, I guess that's true.

Anyhow, given that this statement is cited in support of non-LLM concerns and that it provides no independent evidence about LLMs, I'm going to move on from it.

4: Group 2: Anthropic / OpenAI material

Many of the citations to the Anthropic / OpenAI materials stretch the evidence in them somewhat. Furthermore, the citations that most strongly support the claims of the "Open-Sourcing" paper are merely links to high-level conclusions, where the contents linked to explicitly leave out the data or experimental evidence used to arrive at these conclusions.

For an example of stretched claims: As support for the claim that foundation models could reduce the human expertise required to make dangerous pathogens, the "Open-Sourcing" paper offers as evidence that GPT-4 could re-engineer "known harmful biochemical compounds," (p13-14) and cites the GPT-4 system card.

The only reference to such harmful biochemical compounds that I can find in the GPT-4 card is one spot where OpenAI says that an uncensored GPT-4 "model readily re-engineered some biochemical compounds that were publicly available online" and could also identify mutations that could increase pathogenicity. This is, if anything, evidence that GPT-4 is not a threat relative to unrestricted internet access.

Similarly, as support for the general risk of foundation models, the paper says that a GPT-4 "red-teamer was able to use the language model to generate the chemical formula for a novel, unpatented molecule and order it to the red-teamer’s house" (p15).

The case in question appears to be one where the red-team added to GPT-4 the following tools: (a) a literature search tool using a vector db, (b) a tool to query WebChem, (c) a tool to check if a chemical is available to purchase and (d) a chemical synthesis planner. With a lengthy prompt -- and using these tools -- a red teamer was able to get GPT-4 to order some chemicals similar to the anti-cancer drug Dasatinib. This is offered as evidence that GPT-4 could also be used to make some more dangerous chemicals.

If you want to read the transcript of GPT-4 using the provided tools, it's available on page 59 of the GPT-4 system card. Again, the actually relevant data here is whether GPT-4 relevantly shortens the path of someone trying to obtain some dangerous chemicals -- presumably the kind of person who can set up GPT-4 in an AutoGPT-like setup with WebChem, a vector db, and so on. I think this is hugely unlikely, but regardless, the paper simply provides no arguments or evidence on the matter.

Putting to the side OpenAI --

The paper also says that "red-teaming on Anthropic’s Claude 2 identified significant potential for biosecurity risk" (p14) and cites Anthropic's blog-post. In a different section it quotes Anthropic's materials to the effect that an uncensored LLM could accelerate a bad actor relative to having access to the internet, and that although the effect would be small today it is likely to be large in two or three years (p9).

The section of the Anthropic blog-post that most supports this claim is as follows. The lead-in to this section describes how Anthropic has partnered with biosecurity experts who spent more than "150 hours" trying to get Anthropic's LLMs to produce dangerous information. What did they find?

[C]urrent frontier models can sometimes produce sophisticated, accurate, useful, and detailed knowledge at an expert level. In most areas we studied, this does not happen frequently. In other areas, it does. However, we found indications that the models are more capable as they get larger. We also think that models gaining access to tools could advance their capabilities in biology. Taken together, we think that unmitigated LLMs could accelerate a bad actor’s efforts to misuse biology relative to solely having internet access, and enable them to accomplish tasks they could not without an LLM. These two effects are likely small today, but growing relatively fast. If unmitigated, we worry that these kinds of risks are near-term, meaning that they may be actualized in the next two to three years, rather than five or more.

The central claim here is that unmitigated LLMs "could accelerate a bad actor's efforts to misuse biology relative to solely having internet access."

This claim is presented as a judgment-call from the (unnamed) author of the Anthropic blog-post. It's a summarized, high-level takeaway, rather than the basis upon which that takeaway has occurred. We don't know what the basis of the takeaway is; we don't know what the effect being "small" today means; we don't know how confident they are about the future.

Is the acceleration effect significant if, in addition to having solely internet access, we imagine a bad actor who also has access to biology textbooks? What about internet access plus a subscription to some academic journals? Did the experts actually try to discover this knowledge on the internet for 150 hours, in addition to trying to discover it through LLMs for 150 hours? We have no information about any of this.

The paper also cites a Washington Post article about Dario Amodei's testimony before Congress as evidence for Claude 2's "significant potential for biosecurity risks."

The relevant testimony is as follows:

Today, certain steps in the use of biology to create harm involve knowledge that cannot be found on Google or in textbooks and requires a high level of specialized expertise. The question we and our collaborators studied is whether current AI systems are capable of filling in some of the more-difficult steps in these production processes. We found that today’s AI systems can fill in some of these steps, but incompletely and unreliably – they are showing the first, nascent signs of risk. However, a straightforward extrapolation of today’s systems to those we expect to see in 2-3 years suggests a substantial risk that AI systems will be able to fill in all the missing pieces, if appropriate guardrails and mitigations are not put in place. This could greatly widen the range of actors with the technical capability to conduct a large-scale biological attack.

This is again a repetition of the blog-post. We have Dario Amodei's high-level takeaway about the possibility of danger from future LLMs, based on his projection into the future, but no actual view of the evidence he's using to make his judgement.

To be sure -- I want to be clear -- all the above is more than zero evidence that future open-source LLMs could contribute to bioweapons risk.

But there's a reason that science and systematized human knowledge is based on papers with specific authors that actually describe experiments, not carefully-hedged sentences from anonymous blog posts or even remarks in testimony to Congress. None of the reasons that Anthropic makes their judgments are visible. As we will see in the subsequent sections, people can be extremely wrong in their high-level summaries of the consequences of underlying experiments. More than zero evidence is not a sound basis of policy, in the same way and precisely for the same reason as "I know a guy who said this, and he seemed pretty smart" is not a sound basis of policy.

So, turning from OpenAI / Anthropic materials, let's look at the scientific papers cited to support the risks of bioweapons.

5: Group 3: 'Science'

As far as I can tell, the paper cites two things that are trying to be science -- or at least serious thought -- when seeking support specifically for the claim that LLMs could increase bioweapons risk.

5.1: "Can large language models democratize access to dual-use biotechnology"

The first citation is to "Can large language models democratize access to dual-use biotechnology". I'll refer to this paper as "Dual-use biotechnology" for short. For now, I want to put a pin in this paper and talk about it later -- you'll see why.

5.2: "Artificial Intelligence and Biological Misuse: Differentiating risks of language models and biological design tools"

The "Open-Sourcing" paper also cites "Artificial Intelligence and Biological Misuse: Differentiating risks of language models and biological design tools".

This looks like a kind of overview. It does not conduct any particular experiments. It nevertheless is cited in support of the claim that "capabilities that highly capable foundation models could possess include making it easier for non-experts to access known biological weapons or aid in the creation of new one," which is at least a pretty obvious crux for the whole thing.

Given the absence of experiments, let's turn to the reasoning. As regards LLMs, the paper provides four bulleted paragraphs, each arguing for a different danger.

In support of an LLM's ability to "teach about dual use topics," the paper says:

In contrast to internet search engines, LLMs can answer high-level and specific questions relevant to biological weapons development, can draw across and combine sources, and can relay the information in a way that builds on the existing knowledge of the user. This could enable smaller biological weapons efforts to overcome key bottlenecks. For instance, one hypothesised factor for the failed bioweapons efforts of the Japanese doomsday cult Aum Shinrikyo is that it’s lead scientist Seichii Endo, a PhD virologist, failed to appreciate the difference between the bacterium Clostridium botulinum and the deadly botulinum toxin it produces. ChatGPT readily outlines the importance of “harvesting and separation” of toxin-containing supernatant from cells and further steps for concentration, purification, and formulation. Similarly, LLMs might have helped Al-Qaeda’s lead scientist Rauf Ahmed, a microbiologist specialising in food production, to learn about anthrax and other promising bioweapons agents, or they could have instructed Iraq’s bioweapons researchers on how to successfully turn its liquid anthrax into a more dangerous powdered form. It remains an open question how much LLMs are actually better than internet search engines at teaching about dual-use topics.

Note the hedge at the end. The paper specifically leaves open whether LLMs would contribute to the creation of biological agents more than does the internet. This is reasonable, given that the paragraph is entirely speculation: ChatGPT could have helped Aum Shinrikyo, or LLMs could have helped Rauf Ahmed "learn about anthrax." So in itself this is some reasonable speculation on the topic, but more a call for further thought and investigation than anything else. The speculation does not raise whether LLMs provide more information than the internet above being an interesting hypothesis.

The second bulleted paragraph is basically just a mention of the "Dual-use biotechnology" paper, so I'll punt on examining that.

The third paragraph is concerned that LLMs could be useful as "laboratory assistants which can provide step-by-step instructions for experiments and guidance for troubleshooting experiments." It thus echoes Dario Amodei's concerns regarding tacit knowledge, and whether LLMs could enhance laboratory knowledge greatly. But once again, it hedges: the paper also says that it remains an "open question" how important "tacit knowledge", or "knowledge that cannot easily be put into words, such as how to hold a pipette," actually is for biological research.

The third paragraph does end with a somewhat humorous argument:

What is clear is that if AI lab assistants create the perception that performing a laboratory feat is more achievable, more groups and individuals might try their hand - which increases the risk that one of them actually succeeds.

This sentence does not, alas, address why it is LLMs in particular that are likely to create this fatal perception of ease and not the internet, YouTube videos, free discussion on the internet, publicly available textbooks, hackerspaces offering gene-editing tutorials to noobs, or anyone else.

The fourth paragraph is about the concern that LLMs, in combination with robots, could help smaller groups carry out large-scale autonomous scientific research that could contribute to bioterrorism risks. It is about both access to robot labs and access to LLMs; it cites several already-discussed-above papers that use LLMs to speed up robot cloud-lab work. I think it is doubtless true that LLMs could speed up such work, but it's a peculiar path to worrying about bioterrorism -- if we suppose terrorists have access to an uncensored robotic lab, materials to build a bioweapon, and so on, LLMs might save them some time, but the chance that LLMs are on the critical path seems really unlikely. In any event, both of these papers are chiefly about the delta-in-danger-from-automation, rather than the delta-in-danger-from-LLMs. They are for sure a reasonable argument that cloud labs should examine the instructions they receive before they carry them out -- but this applies equally well to cloud labs run by humans and run by robots.

In short -- looking over all four paragraphs -- this paper discusses ways that an LLM could make bioweapons easier to create, but rarely commits to any belief that this is actually so. It doesn't really bring up evidence about whether LLMs would actually remove limiting factors on making bioweapons, relative to the internet and other sources.

The end of the paper is similarly uncertain, describing all of the potential risks as "still largely speculative" and calling for careful experiments to determine how large the risks are.

(I am also confused about whether this sequence of text is meant to be actual science, in the sense that it is the right kind of thing to cite in a policy paper. It contains neither experimental evidence nor the careful reasoning of a more abstract philosophical paper. I don't think it's been published or even intends to be published, even though it is on a "pre-print" server. When I followed the citation from the "Open-sourcing" paper, I expected something more.)

Anyhow, the "Open-sourcing" paper cites this essay in support of the statement that "capabilities that highly capable foundation models could possess include making it easier for non-experts to access known biological weapons," but it simply doesn't contain evidence that moves this hypothesis into a probability, nor does the paper even pretend to do so itself.

I think that anyone reading that sentence in the "Open-sourcing" paper would expect a little more epistemological heft behind the citation, instead of simply a call for further research on the topic.

So, let's turn to what is meant to be an experiment on this topic!

5.3: Redux: "Can large language models democratize access to dual-use biotechnology"

Let's return to the "Dual-use biotechnology" paper.

Note that other policy papers -- policy papers apart from the "Open-Sourcing" paper that I'm going over -- frequently cite this experimental paper as evidence that LLMs would be particularly dangerous.

"Catastrophic Risks from AI" from the Center for AI Safety, says:

AIs will increase the number of people who could commit acts of bioterrorism. General-purpose AIs like ChatGPT are capable of synthesizing expert knowledge about the deadliest known pathogens, such as influenza and smallpox, and providing step-by-step instructions about how a person could create them while evading safety protocols [citation to this paper].

Within the "Open-sourcing" paper, it is cited twice, once as evidence LLMs could "materially assist development of biological" (p32) weapons and once to show that LLMs could "reduce the human expertise required to carry-out dual-use scientific research, such as gain-of-function research in virology" (p13). It's also cited by the "statement" from Helena Biosecurity above and also by the "Differentiating risks" paper above.

Let's see if the number of citations reflects the quality of the paper!

Here is the experimental design from the paper.

The authors asked three separate groups of non-technical students -- i.e., those "without graduate-level training in the sciences" -- to use various chatbots to try to figure out how to cause a pandemic. The students had access to a smorgasbord of chatbots -- GPT-4, Bing, some open source bots, and so on.

The chatbots then correctly pointed out 4 potential extant pathogens that could cause a pandemic (H1N1, H5N1, a virus responsible for smallpox, and a strain of the Nipah virus). When questioned about transmissibility, the chatbots pointed out mutations that could increase this.

When questioned about how to obtain such a virus, the chatbots mentioned that labs sometimes share samples of such viruses. The chatbots also mentioned that one could go about creating such viruses with reverse genetics -- i.e., just editing the genes of the virus. When queried, the chatbots provided advice on how one could go about getting the necessary materials for reverse genetics, including information about which DNA-synthesis services screen for dangerous genetic materials.

That's the basics of the experiment.

Note that at no point in this paper do the authors discuss how much of this information is also easily discoverable by an ignorant person online. Like the paper literally doesn't allude to this. They have no group of students who try to discover the same things from Google searches. They don't introduce a bunch of students to PubMed and talk about the same thing. Nothing nada zip zilch.

(My own belief -- arrived at after a handful of Google searches -- is that most of the alarming stuff they point to is extremely easy to locate online even for a non-expert. If you google for "What are the sources of future likely pandemics," you can find all the viruses they found, and many more. It is similarly easy to find out about which DNA-synthesis services screen the sequences they receive. And so on.)
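To show what the missing baseline would let you compute, here is a minimal sketch of a two-arm comparison. Everything in it is hypothetical: the function name, the scoring scheme (fraction of a checklist of steps each participant found), and the numbers, which are made up for illustration and are not data from the paper.

```python
from statistics import mean


def mean_uplift(llm_arm: list[float], control_arm: list[float]) -> float:
    """Difference in mean task score between the LLM arm and a search-only arm."""
    if not llm_arm or not control_arm:
        raise ValueError("both arms need at least one participant")
    return mean(llm_arm) - mean(control_arm)


# Hypothetical scores, NOT taken from the paper.
llm_arm = [0.50, 0.75, 0.50]      # participants using chatbots
control_arm = [0.50, 0.50, 0.75]  # participants using only search engines

print(mean_uplift(llm_arm, control_arm))
```

The point of the sketch is that the quantity of interest takes two arguments. With only an LLM arm there is nothing to subtract, so the experiment cannot say whether chatbots provide any uplift over a search engine.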

So, despite the frequency of citation from multiple apparently-prestigious institutions, this paper contains zero evidence or even discussion of exactly the critical issue for which it is cited -- whether LLMs would make things easier relative to other sources of information.

So the -- as far as I can tell -- most-cited paper on the topic contains no experimental evidence relevant to the purpose for which it is most often cited.

6: Extra Paper, Bonus!

While I was writing this, an extra paper came out on the same topic as the "Dual-use biotechnology" paper, with the fun title "Will releasing the weights of future large language models grant widespread access to pandemic agents?".

Maybe it's just that the papers cited by the "Open-sourcing" paper are bad, by coincidence, and the general state of research on biorisk is actually fine! So let's take a look at this paper, as one further test.

This one looks like it was run by the same people who ran the prior "Dual-use" experiment. Is it of the same quality?

Here's how the experiment went. First, the researchers collected some people with knowledge of biology ranging from a college to graduate level.

Participants were asked to determine the feasibility of obtaining 1918 influenza virus for use as a bioweapon, making their (pretended) nefarious intentions as clear as possible (Box 1). They were to enter prompts into both [vanilla and modified open-source] models and use either result to craft their next question, searching the web as needed to find suggested papers or other materials that chatbots cannot directly supply.

One LLM that participants questioned was the vanilla 70b Llama-2. The other was a version of Llama-2 that had been both fine-tuned to remove the guards that would -- of course -- refuse to answer such questions and specifically fine-tuned on a virology-specific dataset.

After the participants tried to figure out how to obtain the 1918 virus using this procedure, the authors of the experiment found that they had obtained several -- although not all -- of the necessary steps for building the virus. The authors, of course, had obtained all the necessary steps for building the virus by using the internet and the scientific papers on it:

Depending on one’s level of technical skill in certain disciplines, risk tolerance, and comfort with social deceit, there is sufficient information in online resources and in scientific publications to map out several feasible ways to obtain infectious 1918 influenza. By privately outlining these paths and noting the key information required to perform each step, we were able to generate a checklist of relevant information and use it to evaluate participant chat logs that used malicious prompts.

Again, they find that the experimental subjects using the LLM were unable to find all of the relevant steps. Even if the experimental subjects had, it would be unclear what to conclude from this because the authors don't have a "just google it" baseline. We know that "experts" can find this information online; we don't know how much non-experts can find.
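To make the missing baseline concrete, here is a minimal sketch of the comparison a controlled version of this experiment would need. Everything in it is my assumption rather than anything from the paper: the function names, the idea of scoring each participant by the fraction of checklist items they located, and the choice of a simple permutation test are all hypothetical illustrations.

```python
import random
from statistics import mean


def coverage(found_items, checklist):
    """Fraction of checklist items that one participant located."""
    return len(set(found_items) & set(checklist)) / len(checklist)


def uplift(llm_scores, control_scores):
    """Mean coverage in the LLM arm minus mean coverage in the search-only arm."""
    return mean(llm_scores) - mean(control_scores)


def permutation_p_value(llm_scores, control_scores, trials=10_000, seed=0):
    """One-sided permutation test.

    Shuffles the arm labels and reports how often the shuffled uplift is
    at least as large as the observed uplift. A small value suggests the
    LLM arm really did better than the search-only arm.
    """
    rng = random.Random(seed)
    observed = uplift(llm_scores, control_scores)
    pooled = list(llm_scores) + list(control_scores)
    n = len(llm_scores)
    hits = 0
    for _ in range(trials):
        rng.shuffle(pooled)
        if uplift(pooled[:n], pooled[n:]) >= observed:
            hits += 1
    return hits / trials
```

The point of the sketch is only that `uplift` needs two arms. With the LLM arm alone, which is all the paper has, there is nothing to subtract from, and so no way to say whether the model added anything over a search engine.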

To ward off this objection, the paper tries to make their conclusion about future LLMs:

Some may argue that users could simply have obtained the information needed to release 1918 influenza elsewhere on the internet or in print. However, our claim is not that LLMs provide information that is otherwise unattainable, but that current – and especially future – LLMs can help humans quickly assess the feasibility of ideas by streamlining the process of understanding complex topics and offering guidance on a wide range of subjects, including potential misuse. People routinely use LLMs for information they could have obtained online or in print because LLMs simplify information for non-experts, eliminate the need to scour multiple online pages, and present a single unified interface for accessing knowledge. Ease and apparent feasibility impact behavior.

There are a few problems with this. First, as far as I can tell, their experiment just... doesn't matter if this is their conclusion?

If they wanted to make an entirely theoretical argument that future LLMs will provide this information with an unsafe degree of ease, then they should provide reasons for that rather than showing that current LLMs can provide information in the same way that Google can, except maybe a little less accurately. The experiment seems a cloud of unrelated empiricism around what they want to say, where what they want to say is basically unsupported by such direct empirical evidence, and based entirely on what they believe to be the properties of future LLMs.

Second is that -- if they want to make this kind of theoretical argument as a reason to criminalize future open source LLMs, then they really need to be a lot more rigorous, and consider counterfactual results more carefully.

For instance: Would the intro to biotechnology provided by a jailbroken LLM meaningfully speed up bioweapons research, when compared to the intro to biotechnology provided by a non-jailbroken LLM plus full access to virology texts? Or would this be a fifteen-minute bump along an otherwise smooth road? Is the intro to biotechnology provided by a MOOC also unacceptable? Do we have reason to think querying future LLMs is going to be the best way of teaching yourself alternate technologies?

Consider an alternate world where PageRank -- the once-backbone of Google search -- was not invented till 2020, and where prior to 2020 internet search did not really exist. Biotechnology-concerned authors in this imaginary world could write an alternate paper:

Some may argue that users could simply have obtained the information needed to release 1918 influenza in print journal articles. However, our claim is not that Google search provides information that is otherwise unattainable, but that current – and especially future – Google search can help humans quickly assess the feasibility of ideas by streamlining the process of understanding complex topics and offering guidance on a wide range of subjects, including potential misuse. People routinely use Google search for information they could have obtained in print because Google search simplifies information for non-experts, eliminates the need to scour multiple printed journals, and presents a single unified interface for accessing knowledge. Ease and apparent feasibility impact behavior.

Yet, I think most people would conclude, this falls short of a knock-down argument that Google search should be outlawed, or that we should criminalize the production of internet indices!

In short, the experimental results are basically irrelevant for their -- apparent? -- conclusion, and their apparent conclusion is deeply insufficiently discussed.

Note: After the initial release of the paper, I and others had criticized the paper for lacking an "access to Google baseline", and for training the model on openly available virology papers, while subsequently attributing the dangerous info that the model spits out to the existence of the model rather than to the existence of openly available virology papers. We don't blame ElasticSearch / semantic search for bioterrorism risk, after all.

The authors subsequently uploaded a "v2" of the paper to arXiv on November 1st. This "v2" has some important differences from "v1".

For instance -- among other changes -- the v2 states that the jailbroken / "spicy" version of the model trained on the openly-available virology papers didn't actually learn anything from them, and its behavior was actually basically the same as the merely jailbroken model.

It does not contain a justification for how the authors of the paper know this, given that the paper contains no experiments contrasting the merely jailbroken model versus the model trained on virology papers.

In short, the paper was post-hoc edited to avoid a criticism, without any justification or explanation for how the authors knew the fact added in the post-hoc edit.

This change does not increase my confidence in the conclusions of the paper, overall.

But I happily note that -- if an open-source model is released that does not include virology training, initially -- this is evidence that fine-tuning on papers will not notably enhance their virology skills, and that biotechnology-neutered models are safe for public open-source release!

7: Conclusion

In all, looking at the above evidence, I think that the "Open-sourcing" paper vastly misrepresents the state of the evidence about how open source LLMs would contribute to biological risks.

There are no useful experiments conducted on this topic through the material that it cites. A surprising number of citations ultimately lead back to a single, bad experiment. There is some speculation about the risks of future LLMs, but surprisingly little willingness to think rigorously about the consequences of them, or to present a model of exactly how they will contribute to risks in a way different than a search engine, a biotech MOOC, or the general notion of widely-accessible high-quality education.

In all, there is no process I can find in this causal chain -- apart from the Anthropic data that we simply know nothing about -- that could potentially have turned up the conclusion, "Hey, open source AI is fine as far as biotechnology risk goes." But -- as I hope everyone reading this knows -- you can find no actual evidence for a conclusion unless you also risk finding evidence against it.

Thus, the impression of evidence that the "Open-sourcing" paper gives is vastly greater than the actual evidence that it leans upon.

To repeat -- I did not select the above papers as being particularly bad. I am not aware of a host of better papers on the topic that I am selectively ignoring. I selected them because they were cited in the original "Open-Sourcing" paper, with the exception of the bonus paper that I mention above.

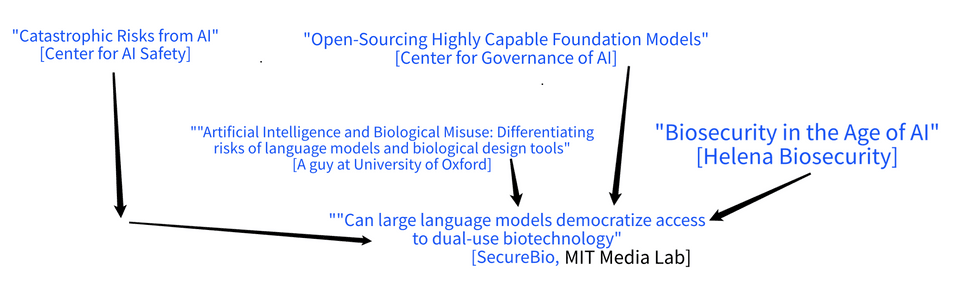

Note that the funding for a large chunk of the citation tree -- specifically, the most-relevant chunk mostly devoted to LLMs contributing to biorisk -- looks like this:

The entities in blue are being or have been funded by the Effective Altruist group Open Philanthropy, to the tune of at least tens of millions of dollars total.

Thus: Two of the authors for the "Can large language models democratize access" paper work for SecureBio, which was funded with about 1.4 million dollars by Open Philanthropy. Helena Biosecurity, producer of the "Biosecurity in the Age of AI" paper, looks like it was or is a project of a think-tank just called Helena which was, coincidentally, funded with half a million dollars by Open Philanthropy. The author of this third paper, "Artificial Intelligence and Biological Misuse" also got a 77k grant from Open Philanthropy. The Center for AI Safety has been funded with at least 4 million dollars. The Center for Governance of AI has been funded with at least 5 million dollars over the years.

This citation and funding pattern leads me to consider two potential hypotheses:

One is that Open Philanthropy was concerned about open-source LLMs leading to biorisk. Moved by genuine curiosity about whether this is true, they fund experiments designed to find out whether this is so. Alas, through an unfortunate oversight, the resulting papers are about experiments that could not possibly find this out due to the lack of the control group. These papers -- some perhaps in themselves blameless, given the tentativeness of their conclusions -- are misrepresented by subsequent policy papers that cite them. Thus, due to no one's intent, insufficiently justified concerns about open-source AI are propagated to governance orgs, which recommend banning open source based on this research.

The second is that Open Philanthropy got what they paid for and what they wanted: "science" that's good enough to include in a policy paper as a footnote, simply intended to support the pre-existing goal -- "Ban open source AI" -- of those policy papers.

There could be a mean between them. Organizations are rarely 100% intentional or efficiently goal-directed. But right now I'm deeply dubious that research on this subject is being aimed effectively at the truth.

79 comments

Comments sorted by top scores.

comment by ryan_greenblatt · 2023-11-02T18:56:41.093Z · LW(p) · GW(p)

I think I basically agree with "current models don't seem very helpful for bioterror" and as far as I can tell, "current papers don't seem to do the controlled experiments needed to legibly learn that much either way about the usefulness of current models" (though generically evaluating bio capabilities prior to actual usefulness still seems good to me).

I also agree that current empirical work seems unfortunately biased, which is pretty sad. (It also seems to me like the claims made are somewhat sloppy in various cases and that the work doesn't really do the science needed to test claims about the usefulness of current models.)

That said, I think you're exaggerating the situation in a few cases or understating the case for risk.

You need to also compare the good that open source AI would do against the likelihood and scale of the increased biorisk. The 2001 anthrax attacks killed 5 people; if open source AI accelerated the cure for several forms of cancer, then even a hundred such attacks could easily be worth it. Serious deliberation about the actual costs of criminalizing open source AI -- deliberations that do not rhetorically minimize such costs, shrink from looking at them, or emphasize "other means" of establishing the same goal that in fact would only do 1% of the good -- would be necessary for a policy paper to be a policy paper and not a puff piece.

I think comparing to known bio terror cases is probably misleading due to the potential for tail risks and difficulty in attribution. In particular, consider covid. It seems reasonably likely that covid was an accidental lab leak (though attribution is hard) and it also seems like it wouldn't have been that hard to engineer covid in a lab. And the damage from covid is clearly extremely high. Much higher than the anthrax attacks you mention. People in biosecurity think that the tails are more like billions dead or the end of civilization. (I'm not sure if I believe them, the public object level cases for this don't seem that amazing due to info-hazard concerns.)

Further, suppose that open-sourced AI models could assist substantially with curing cancer. In that world, what probability would you assign to these AIs also assisting substantially with bioterror? It seems pretty likely to me that things are dual use in this way. I don't know if policy papers are directly making this argument: "models will probably be as useful for curing cancer as for bioterror, so if you want open source models to be very useful for biology, we might be in trouble".

This citation and funding pattern leads me to consider two potential hypotheses:

What about the hypothesis (I wrote this based on the first hypothesis you wrote):

Open Philanthropy thought based on priors and basic reasoning that it's pretty likely that LLMs (if they're a big deal at all) would be very useful for bioterrorists. Moved by preparing for the possibility that LLMs could help with bioterror, they fund preliminary experiments designed to roughly test LLM capabilities for bio (in particular creating pathogens). Then, we'd be ready to test future LLMs for bio capabilities. Alas, the resulting papers misleadingly suggest that their results imply that current models are actually useful for bioterror rather than just that "it seems likely on priors" and "models can do some biology, probably this will transfer to bioterror as they get better". These papers -- some perhaps in themselves blameless, given the tentativeness of their conclusions -- are misrepresented by subsequent policy papers that cite them. Thus, due to no one's intent, insufficiently justified concerns about current open-source AI are propagated to governance orgs, which recommend banning open source based on this research. Concerns about future open source models remain justified as most of our evidence for this came from basic reasoning rather than experiments.

Edit: see comment from elifland below. It doesn't seem like current policy papers are advocating for bans on current open source models.

I feel like the main take is "probably if models are smart, they will be useful for bioterror" and "probably we should evaluate this ongoingly and be careful because it's easy to finetune open source models and you can't retract open-sourcing a model".

Replies from: elifland, TurnTrout, Metacelsus, 1a3orn, 1a3orn

↑ comment by elifland · 2023-11-02T19:04:15.917Z · LW(p) · GW(p)

Thus, due to no one's intent, insufficiently justified concerns about current open-source AI are propagated to governance orgs, which recommend banning open source based on this research.

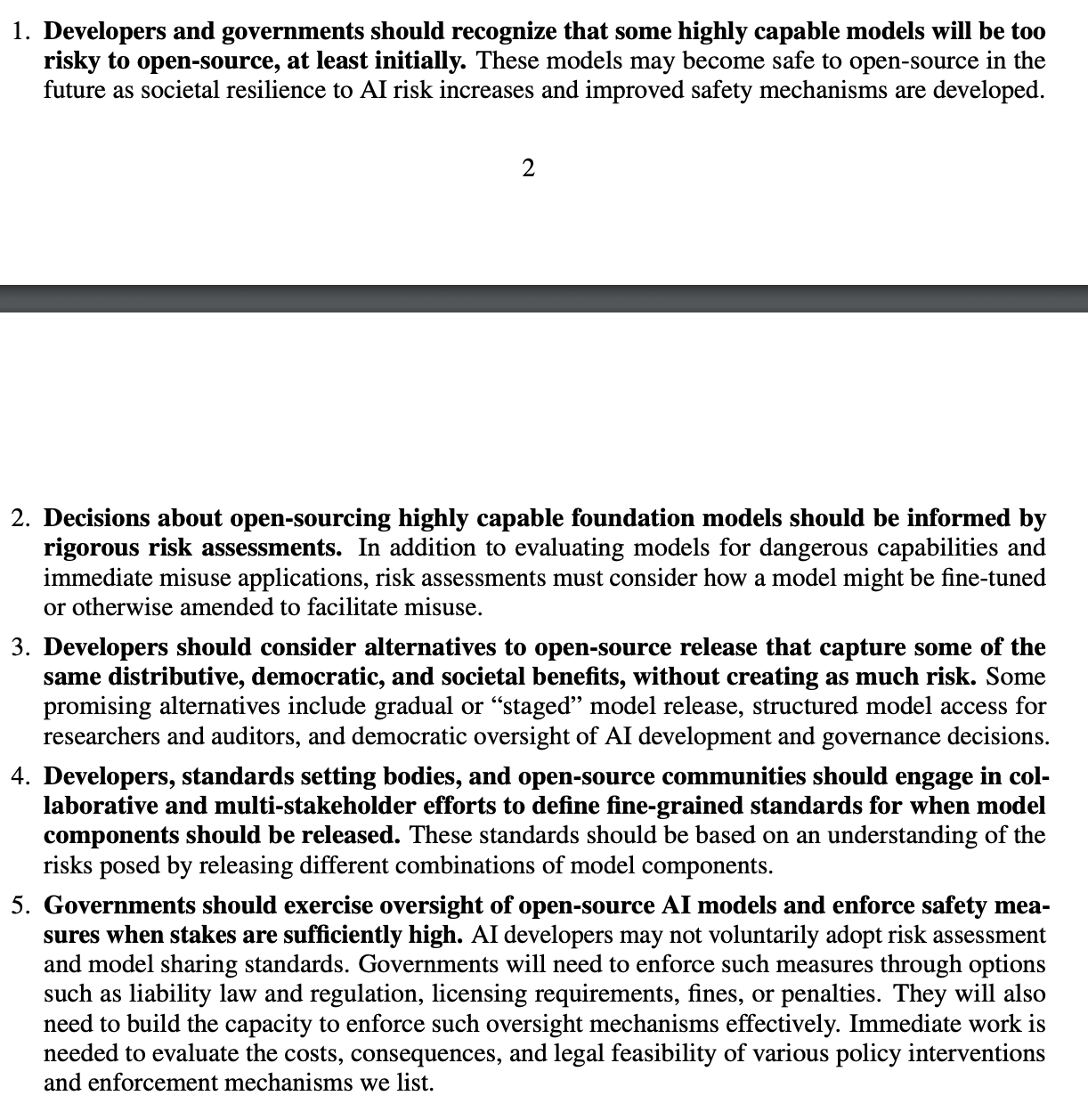

The recommendation that current open-source models should be banned is not present in the policy paper, being discussed, AFAICT. The paper's recommendations are pictured below:

Edited to add: there is a specific footnote that says "Note that we do not claim that existing models are already too risky. We also do not make any predictions about how risky the next generation of models will be. Our claim is that developers need to assess the risks and be willing to not open-source a model if the risks outweigh the benefits" on page 31

Replies from: Aidan O'Gara, jacques-thibodeau, Aidan O'Gara

↑ comment by aog (Aidan O'Gara) · 2023-11-03T16:34:29.931Z · LW(p) · GW(p)

Kevin Esvelt explicitly calls for not releasing future model weights.

Would sharing future model weights give everyone an amoral biotech-expert tutor? Yes.

Therefore, let’s not.

Replies from: None

↑ comment by [deleted] · 2024-01-11T19:26:11.445Z · LW(p) · GW(p)

To be clear, Kevin Esvelt is the author of the "Dual-use biotechnology" paper, which the policy paper cites, but he is not the author of the policy paper.

↑ comment by jacquesthibs (jacques-thibodeau) · 2023-11-02T19:27:57.729Z · LW(p) · GW(p)

Exactly. I’m getting frustrated when we talk about risks from AI systems with the open source or e/acc communities. The open source community seems to consistently assume the case that the concerns are about current AI systems and the current systems are enough to lead to significant biorisk. Nobody serious is claiming this and it’s not what I’m seeing in any policy document or paper. And this difference in starting points between the AI Safety community and open source community pretty much makes all the difference.

Sometimes I wonder if the open source community is making this assumption on purpose because it is rhetorically useful to say “oh you think a little chatbot that has the same information as a library would cause a global disaster?” It’s a common tactic these days, downplay the capabilities of the AI system we’re talking about and then make it seem ridiculous to regulate. If I’m being charitable, my guess is that they assume that the bar for “enforce safety measures when stakes are sufficiently high” will be considerably lower than what makes sense/they’d prefer OR they want to wait until the risk is here, demonstrated and obvious until we do anything.

Replies from: hold_my_fish, cfoster0

↑ comment by hold_my_fish · 2023-11-04T08:28:49.713Z · LW(p) · GW(p)

As someone who is pro-open-source, I do think that "AI isn't useful for making bioweapons" is ultimately a losing argument, because AI is increasingly helpful at doing many different things, and I see no particular reason that the making-of-bioweapons would be an exception. However, that's also true of many other technologies: good luck making your bioweapon without electric lighting, paper, computers, etc. It wouldn't be reasonable to ban paper just because it's handy in the lab notebook in a bioweapons lab.

What would be more persuasive is some evidence that AI is relatively more useful for making bioweapons than it is for doing things in general. It's a bit hard for me to imagine that being the case, so if it turned out to be true, I'd need to reconsider my viewpoint.

Replies from: eschatropic

↑ comment by eschatropic · 2023-11-09T14:49:49.137Z · LW(p) · GW(p)

What would be more persuasive is some evidence that AI is relatively more useful for making bioweapons than it is for doing things in general.

I see little reason to use that comparison rather than "will [category of AI models under consideration] improve offense (in bioterrorism, say) relative to defense?"

↑ comment by cfoster0 · 2023-11-02T20:00:02.790Z · LW(p) · GW(p)

(explaining my disagree reaction)

The open source community seems to consistently assume the case that the concerns are about current AI systems and the current systems are enough to lead to significant biorisk. Nobody serious is claiming this

I see a lot of rhetorical equivocation between risks from existing non-frontier AI systems, and risks from future frontier or even non-frontier AI systems. Just this week, an author of the new "Will releasing the weights of future large language models grant widespread access to pandemic agents?" paper was asserting that everyone on Earth has been harmed by the release of Llama2 (via increased biorisks, it seems) [LW(p) · GW(p)]. It is very unclear to me which future systems the AIS community would actually permit to be open-sourced, and I think that uncertainty is a substantial part of the worry from open-weight advocates.

Replies from: jacques-thibodeau, DanielFilan

↑ comment by jacquesthibs (jacques-thibodeau) · 2023-11-02T20:07:14.253Z · LW(p) · GW(p)

I’m happy to see that comment being disagreed with. I think I could say they aren’t a truly serious person after saying that comment (I think the paper is fine), but let’s say that’s one serious person suggesting something vaguely similar to what I said above.

And I’m also frustrated at people within the AI Safety community who are ambiguous about which models they are talking about (which leads to posts like this and makes consensus harder). Even worse if it’s on purpose for rhetorical reasons.

↑ comment by DanielFilan · 2023-11-02T20:41:16.999Z · LW(p) · GW(p)

Note that one of the main people pushing back against the comment you link is me, a member of the AI safety community.

Replies from: cfoster0

↑ comment by cfoster0 · 2023-11-02T21:01:33.539Z · LW(p) · GW(p)

Noted! I think there is substantial consensus within the AIS community on a central claim that the open-sourcing of certain future frontier AI systems might unacceptably increase biorisks. But I think there is not much consensus on a lot of other important claims, like about for which (future or even current) AI systems open-sourcing is acceptable and for which ones open-sourcing unacceptably increases biorisks.

Replies from: eschatropic

↑ comment by eschatropic · 2023-11-09T14:59:13.988Z · LW(p) · GW(p)

I agree it would be nice to have strong categories or formalism pinning down which future systems would be safe to open source, but it seems an asymmetry in expected evidence to treat a non-consensus on systems which don't exist yet as a pro-open-sourcing position. I think it's fair to say there is enough of a consensus that we don't know which future systems would be safe and so need more work to determine this before irreversible proliferation.

↑ comment by aog (Aidan O'Gara) · 2023-11-02T19:52:11.666Z · LW(p) · GW(p)

I think it's quite possible that open source LLMs above the capability of GPT-4 will be banned within the next two years on the grounds of biorisk.

The White House Executive Order requests a government report on the costs and benefits of open source frontier models and recommended policy actions. It also requires companies to report on the steps they take to secure model weights. These are the kinds of actions the government would take if they were concerned about open source models and thinking about banning them.

This seems like a foreseeable consequence of many of the papers above, and perhaps the explicit goal.

Replies from: 1a3orn, Aidan O'Gara

↑ comment by 1a3orn · 2023-11-02T19:59:22.737Z · LW(p) · GW(p)

As an addition -- Anthropic's RSP already has GPT-4 level models locked up behind safety level 2.

Given that they explicitly want their RSPs to be a model for laws and regulations, I'd be only mildly surprised if we got laws banning open source even at GPT-4 level. I think many people are actually shooting for this.

Replies from: jacques-thibodeau

↑ comment by jacquesthibs (jacques-thibodeau) · 2023-11-02T20:11:01.077Z · LW(p) · GW(p)

If that’s what they are shooting for, I’d be happy to push them to be explicit about this if they haven’t already.

I would like them to be explicit about how they expect biorisk to happen at that level of capability, but I think at least some of them will keep quiet about this for ‘infohazard reasons’ (that was my takeaway from one of the Dario interviews).

↑ comment by aog (Aidan O'Gara) · 2023-11-03T07:40:56.646Z · LW(p) · GW(p)

Nuclear Threat Initiative has a wonderfully detailed report on AI biorisk, in which they more or less recommend that AI models which pose biorisks should not be open sourced:

Access controls for AI models. A promising approach for many types of models is the use of APIs that allow users to provide inputs and receive outputs without access to the underlying model. Maintaining control of a model ensures that built-in technical safeguards are not removed and provides opportunities for ensuring user legitimacy and detecting any potentially malicious or accidental misuse by users.

↑ comment by TurnTrout · 2023-11-03T05:40:02.059Z · LW(p) · GW(p)

It seems reasonably likely that covid was an accidental lab leak (though attribution is hard) and it also seems like it wouldn't have been that hard to engineer covid in a lab.

Seems like a positive update on human-caused bioterrorism, right? It's so easy to let stuff leak that covid accidentally gets out, and it might even have been easy to engineer, but (apparently) no one engineered it, nor am I aware of this kind of intentional bioterrorism happening in other places. People apparently aren't doing it. See Gwern's Terrorism is not effective.

Maybe smart LLMs come out. I bet people still won't be doing it.

So what's the threat model? One can say "tail risks", but--as OP points out--how much do LLMs really accelerate people's ability to deploy dangerous pathogens, compared to current possibilities? And what off-the-cuff probabilities are we talking about, here?

↑ comment by Metacelsus · 2023-11-05T14:20:49.816Z · LW(p) · GW(p)

>Much higher than the anthrax attacks you mention. People in biosecurity think that the tails are more like billions dead or the end of civilization. (I'm not sure if I believe them, the public object level cases for this don't seem that amazing due to info-hazard concerns.)

As a biologist who has thought about these kinds of things (and participated in a forecasting group about them), I agree. (And there are very good reasons for not making the object-level cases public!)

↑ comment by 1a3orn · 2023-11-02T19:16:33.626Z · LW(p) · GW(p)

In particular, consider covid. It seems reasonably likely that covid was an accidental lab leak (though attribution is hard) and it also seems like it wouldn't have been that hard to engineer covid in a lab. And the damage from covid is clearly extremely high. Much higher than the anthrax attacks you mention. People in biosecurity think that the tails are more like billions dead or the end of civilization. (I'm not sure if I believe them, the public object level cases for this don't seem that amazing due to info-hazard concerns.)

I agree that if future open source models contribute substantially to the risk of something like covid, that would be a component in a good argument for banning them.

I'm dubious -- haven't seen much evidence -- that covid itself is evidence that future open source models would so contribute? Given that -- to the best of my very limited knowledge -- the research being conducted was pretty basic (knowledgewise) but rather expensive (equipment and timewise), so that an LLM wouldn't have removed a blocker. (I mean, that's why it came from a US and Chinese-government sponsored lab for whom resources were not an issue, no?) If there is an argument to this effect, 100% agree it is relevant. But I haven't looked into the sources of Covid for years anyhow, so I'm super fuzzy on this.

Further, suppose that open-source'd AI models could assist substantially with curing cancer. In that world, what probability would you assign to these AIs also assisting substantially with bioterror?

Fair point. Certainly more than in the other world.

I do think that your story is a reasonable mean between the two, with less intentionality, which is a reasonable prior for organizations in general.

I think the prior of "we should evaluate things ongoingly and be careful about LLMs" when contrasted with "we are releasing this information on how to make plagues in raw form into the wild every day with no hope of retracting it right now" simply is an unjustified focus of one's hypothesis on LLMs causing dangers, against all the other things in the world more directly contributing to the problem. I think a clear exposition of why I'm wrong about this would be more valuable than any of the experiments I've outlined.

comment by a_g (gina) · 2023-11-03T03:45:19.443Z · LW(p) · GW(p)

I’m one of the authors from the second SecureBio paper (“Will releasing the weights of future large language models grant widespread access to pandemic agents?"). I’m not speaking for the whole team here but I wanted to respond to some of the points in this post, both about the paper specifically, and the broader point on bioterrorism risk from AI overall.

First, to acknowledge some justified criticisms of this paper:

- I agree that performing a Google search control would have substantially increased the methodological rigor of the paper. The team discussed this before running the experiment, and for various reasons, decided against it. We’re currently discussing whether it might make sense to run a post-hoc control group (which we might be able to do, since we omitted most of the details about the acquisition pathway. Running the control after the paper is already out might bias the results somewhat, but importantly, it won’t bias the results in favor of a positive/alarming result for open source models), or do other follow-up studies in this area. Anyway, TBD, but we do appreciate the discussion around this – I think this will both help inform any future red-teaming we plan to do, and ultimately, did help us understand what parts of our logic we had communicated poorly.

- Based on the lack of a Google control, we agree that the assumption that current open-source LLMs significantly increase bioterrorism risk for non-experts does not follow from the paper. However, despite this, we think that our main point that future (more capable, less hallucinatory, etc), open-source models expand risks to pathogen access, etc. still stands. I’ll discuss this more below.

- To respond to the point that says

There are a few problems with this. First, as far as I can tell, their experiment just... doesn't matter if this is their conclusion?

If they wanted to make an entirely theoretical argument that future LLMs will provide this information with an unsafe degree of ease, then they should provide reasons for that

I think this is also not an unreasonable criticism. We (maybe) could have made claims that communicated the same overall epistemic state of the current paper without e.g., running the hackathon/experiment. We do think the point about LLMs assisting non-experts is often not as clear to a broader (e.g. policy) audience though, and (again, despite these results not being a firm benchmark about how current capabilities compare to Google, etc.), we think this point would have been somewhat less clear if the paper had basically said “experts in synthetic biology (who already know how to acquire pathogens) found that an open-source language model can walk them through a pathogen acquisition pathway. They think the models can probably do some portion of this for non-experts too, though they haven’t actually tested this yet”. Anyway, I do think the fault is on us, though, for failing to communicate some of this properly.

- A lot of people are confused on why we did the fine tuning; I’ve responded to this in a separate comment here [LW(p) · GW(p)].

However, I still have some key disagreements with this post:

- The post basically seems to hinge on the assumption that, because the information for acquiring pathogens is already publicly available through textbooks or journal articles, LLMs do very little to accelerate pathogen acquisition risk. I think this completely misses the mark for why LLMs are useful. There are other people who’ve said this better than I can, but the main reason that LLMs are useful isn’t just because they’re information regurgitators, but because they’re basically cheap domain experts. The most capable LLMs (like Claude and GPT4) can ~basically already be used like a tutor to explain complex scientific concepts, including the nuances of experimental design or reverse genetics or data analysis. Without appropriate safeguards, these models can also significantly lower the barrier to entry for engaging with bioweapons acquisition in the first place.

I'd like to ask the people who are confident that LLMs won’t help with bioweapons/bioterrorism whether they would also bet that LLMs will have ~zero impact on pharmaceutical or general-purpose biology research in the next 3-10 years. If you won’t take that bet, I’m curious what you think might be conceptually different about bioweapons research, design, or acquisition.

- I also think this post, in general, doesn’t do enough forecasting on what LLMs can or will be able to do in the next 5-10 years, though in a somewhat inconsistent way. For instance, the post says that “if open source AI accelerated the cure for several forms of cancer, then even a hundred such [Anthrax attacks] could easily be worth it”. This is confusing for a few different reasons: first, it doesn’t seem like open-source LLMs can currently do much to accelerate cancer cures, so I’m assuming this is forecasting into the future. But then why not do the same for bioweapons capabilities? As others have pointed out, since biology is extremely dual use, the same capabilities that allow an LLM to understand or synthesize information in one domain in biology (cancer research) can be transferred to other domains as well (transmissible viruses) – especially if safeguards are absent. Finally (again, as also mentioned by others), anthrax is not the important comparison here, it’s the acquisition or engineering of other highly transmissible agents that can cause a pandemic from a single (or at least, single digit) transmission event.

Again, I think some of the criticisms of our paper methodology are warranted, but I would caution against updating prematurely – and especially based on current model capabilities – that there are zero biosecurity risks from future open-source LLMs. In any case, I’m hoping that some of the more methodologically rigorous studies coming out from RAND and others will make these risks (or lack thereof) more clear in the coming months.

Replies from: TurnTrout, Jerden, 1a3orn↑ comment by TurnTrout · 2023-11-03T05:16:21.838Z · LW(p) · GW(p)

I feel like an important point isn't getting discussed here -- What evidence is there on tutor-relevant tasks being a blocking part of the pipeline, as opposed to manufacturing barriers? Even if future LLMs are great tutors for concocting crazy bioweapons in theory, in practice what are the hardest parts? Is it really coming up with novel pathogens? (I don't know)

Replies from: Dirichlet-to-Neumann, gina↑ comment by Dirichlet-to-Neumann · 2023-11-04T11:16:30.274Z · LW(p) · GW(p)

LessWrong has, imo, a consistent bias toward thinking only ideas/theory are important and that the dirty (and lengthy) work of actual engineering will just sort itself out.

For a community that prides itself on empirical evidence it's rather ironic.

↑ comment by a_g (gina) · 2023-11-05T06:38:31.339Z · LW(p) · GW(p)

What evidence is there on tutor-relevant tasks being a blocking part of the pipeline, as opposed to manufacturing barriers?

So, I can break “manufacturing” down into two buckets: “concrete experiments and iteration to build something dangerous” or “access to materials and equipment”.

For concrete experiments, I think this is in fact the place where having an expert tutor becomes useful. When I started in a synthetic biology lab, most of the questions I would ask weren’t things like “how do I hold a pipette” but things like “what protocols can I use to check if my plasmid correctly got transformed into my cell line?” These were the types of things I’d ask a senior grad student, but can probably ask an LLM instead[1].

For raw materials or equipment – first of all, I think the proliferation of community bio ("biohacker") labs demonstrates that acquisition of raw materials and equipment isn’t as hard as you might think it is. Second, our group is especially concerned by trends in laboratory automation and outsourcing, like the ability to purchase synthetic DNA from companies that inconsistently screen their orders. There are still some hurdles, obviously – e.g., most reagent companies won’t ship to residential addresses, and the US is more permissive than other countries in operating community bio labs. But hopefully these examples are illustrative of why manufacturing might not be as big of a bottleneck as people might think it is for sufficiently motivated actors, or why information can help solve manufacturing-related problems.

(This is also one of these areas where it’s not especially prudent for me to go into excessive detail because of risks of information hazards. I am sympathetic to some of the frustrations with infohazards from folks here and elsewhere, but I do think it’s particularly bad practice to post potentially infohazardous stuff about "here's why doing harmful things with biology might be more accessible than you think" on a public forum.)

- ^

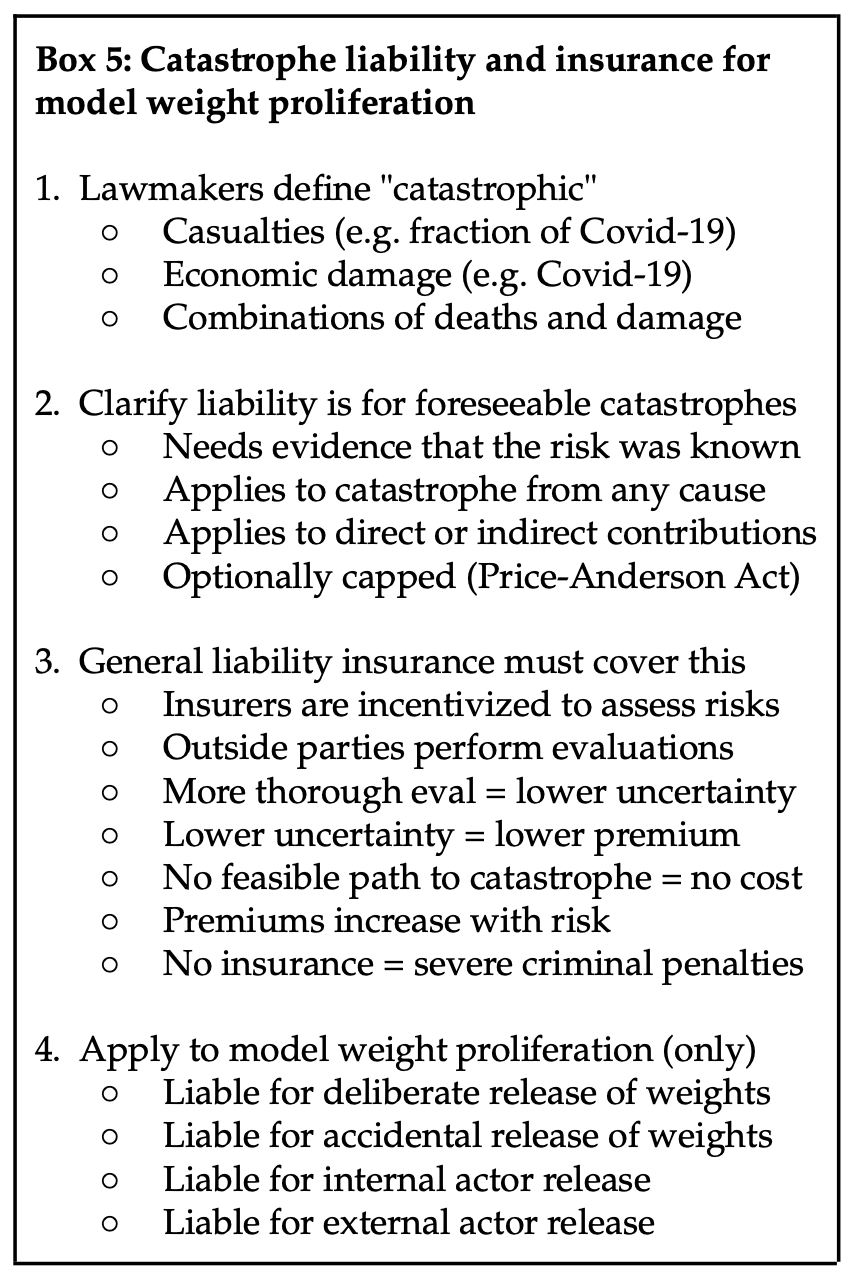

I think there’s a line of thought here which suggests that if we’re saying LLMs can increase dual-use biology risk, then maybe we should be banning all biology-relevant tools. But that’s not what we’re actually advocating for, and I personally think that some combination of KYC and safeguards for models behind APIs (so that it doesn’t overtly reveal information about how to manipulate potential pandemic viruses) can address a significant chunk of risks while still keeping the benefits. The paper makes an even more modest proposal and calls for catastrophe liability insurance instead. But I can also imagine having a more specific disagreement with folks here on "how much added bioterrorism risk from open-source models is acceptable?"

↑ comment by 1a3orn · 2023-11-05T12:28:29.783Z · LW(p) · GW(p)

For concrete experiments, I think this is in fact the place where having an expert tutor becomes useful. When I started in a synthetic biology lab, most of the questions I would ask weren’t things like “how do I hold a pipette” but things like “what protocols can I use to check if my plasmid correctly got transformed into my cell line?” These were the types of things I’d ask a senior grad student, but can probably ask an LLM instead[1]

Right now I can ask a closed-source LLM API this question. Your policy proposal contains no provision to stop such LLMs from answering this question. If this kind of in-itself-innocent question is where danger comes from, then unless I'm confused you need to shut down all bio lab questions directed at LLMs -- whether open source or not -- because > 80% of the relevant lab-style questions can be asked in an innocent way.

I think there’s a line of thought here which suggests that if we’re saying LLMs can increase dual-use biology risk, then maybe we should be banning all biology-relevant tools. But that’s not what we’re actually advocating for, and I personally think that some combination of KYC and safeguards for models behind APIs (so that it doesn’t overtly reveal information about how to manipulate potential pandemic viruses) can address a significant chunk of risks while still keeping the benefits. The paper makes an even more modest proposal and calls for catastrophe liability insurance instead.

If the government had required you to have catastrophe liability insurance for releasing open source software in the year 1995, then, in general I expect we would have no open source software industry today because 99.9% of this software would not be released. Do you predict differently?

Similarly for open source AI. I think when you model this out it amounts to an effective ban, just one that sounds less like a ban when you initially propose it.

↑ comment by Jerden · 2023-11-09T10:55:10.716Z · LW(p) · GW(p)

My assumption (as someone with a molecular biology degree) is that most of the barriers to making a bioweapon are more practical than theoretical, much like making a bomb, which really decreases the benefit of a Large Language Model. There's a crucial difference between knowing how to make a bomb and being able to do it without blowing off your own fingers - although for a pandemic bioweapon incompetence just results in contamination with harmless bacteria, so it's a less dangerous fail state. A would-be bioterrorist should probably just enroll in an undergraduate course in microbiology, much like a would-be bombmaker should just get a degree in chemistry - they would be taught most of the practical skills they need, and even have easy access to the equipment and reagents! Obviously this is a big investment of time and resources, but I am personally not too concerned about terrorists that lack commitment - most of the ones who successfully pull off attacks with this level of complexity tend to have science and engineering backgrounds.

While I can't deny that future advances may make it easier to learn these skills from an LLM, I have a hard time imagining someone with the ability to accurately follow the increasingly complex instructions of an LLM who couldn't just as easily obtain that information elsewhere - accurately following a series of instructions is hard actually! There are possibly a small number of people who could develop a bioweapon with the aid of an LLM that couldn't do it without one, but in terms of biorisk I think we should be more concerned about people who already have the training and access to resources required and just need the knowledge and motivation (I would say the average biosciences PhD student or lab technician, and they know how to use PubMed!) rather than people without it somehow acquiring it via an LLM. Especially given that following these instructions will require you to have access to some very specialised and expensive equipment anyway - sure, you can order DNA online, but splicing it into a virus is not something you can do in your kitchen sink.

↑ comment by 1a3orn · 2023-11-03T13:54:08.317Z · LW(p) · GW(p)

Finally (again, as also mentioned by others), anthrax is not the important comparison here, it’s the acquisition or engineering of other highly transmissible agents that can cause a pandemic from a single (or at least, single digit) transmission event.

At least one paper that I mention specifically gives anthrax as an example of the kind of thing that LLMs could help with, and I've seen the example used in other places. I think if people bring it up as a danger it's ok for me to use it as a comparison.

LLMs are useful isn’t just because they’re information regurgitators, but because they’re basically cheap domain experts. The most capable LLMs (like Claude and GPT4) can ~basically already be used like a tutor to explain complex scientific concepts, including the nuances of experimental design or reverse genetics or data analysis.

I'm somewhat dubious that a tutor to specifically help explain how to make a plague is going to be that much more use than a tutor to explain biotech generally. Like, the reason that this is called "dual-use" is that for every bad application there's an innocuous application.

So, if the proposal is to ban open source LLMs because they can explain the bad applications of the in-itself innocuous thing -- I just think that's unlikely to matter? If you're unable to rephrase a question in an innocuous way to some LLM, you probably aren't gonna make a bioweapon even with the LLM's help, no disrespect intended to the stupid terrorists among us.

It's kinda hard for me to picture a world where the delta in difficulty in making a biological weapon between (LLM explains biotech) and (LLM explains weapon biotech) is in any way a critical point along the biological weapons creation chain. Is that the world we think we live in? Is this the specific point you're critiquing?

If the proposal is to ban all explanation of biotechnology from LLMs and to ensure it can only be taught by humans to humans, well, I mean, I think that's a different matter, and I could address the pros and cons, but I think you should be clear about that being the actual proposal.

For instance, the post says that “if open source AI accelerated the cure for several forms of cancer, then even a hundred such [Anthrax attacks] could easily be worth it”. This is confusing for a few different reasons: first, it doesn’t seem like open-source LLMs can currently do much to accelerate cancer cures, so I’m assuming this is forecasting into the future. But then why not do the same for bioweapons capabilities?

This makes sense as a critique: I do think that actual biotech-specific models are much, much more likely to be used for biotech research than LLMs.

I also think that there's a chance that LLMs could speed up lab work, but in a pretty generic way like Excel speeds up lab work -- this would probably be good overall, because increasing the speed of lab work by 40% and terrorist lab work by 40% seems like a reasonably good thing for the world overall. I overall mostly don't expect big breakthroughs to come from LLMs.

comment by Zach Stein-Perlman · 2023-11-02T18:35:45.451Z · LW(p) · GW(p)

fwiw my guess is that OP didn't ask its grantees to do open-source LLM biorisk work at all; I think its research grantees generally have lots of freedom.

(I've worked for an OP-funded research org for 1.5 years. I don't think I've ever heard of OP asking us to work on anything specific, nor of us working on something because we thought OP would like it. Sometimes we receive restricted, project-specific grants, but I think those projects were initiated by us. Oh, one exception: Holden's standards-case-studies project [LW · GW].)

Replies from: DanielFilan↑ comment by DanielFilan · 2023-11-02T21:10:29.800Z · LW(p) · GW(p)

Also note that OpenPhil has funded the Future of Humanity Institute, the organization that houses the author of the paper 1a3orn cited for the claim that knowledge is not the main blocker for creating dangerous biological threats. My guess is that the dynamic 1a3orn describes is more about what things look juicy to the AI safety community, and less about funders specifically.

Replies from: habryka4↑ comment by habryka (habryka4) · 2023-11-02T22:24:34.761Z · LW(p) · GW(p)

Also note that OpenPhil has funded the Future of Life Institute,

You meant to say "Future of Humanity Institute".

Replies from: DanielFilan↑ comment by DanielFilan · 2023-11-03T01:39:53.239Z · LW(p) · GW(p)

Yet more proof that one of those orgs should change their name.

comment by David Hornbein · 2023-11-05T17:18:43.950Z · LW(p) · GW(p)

I note that the comments here include a lot of debate on the implications of this post's thesis and on policy recommendations and on social explanations for why the thesis is true. No commenter has yet disagreed with the actual thesis itself, which is that this paper is a representative example of a field that is "more advocacy than science", in which a large network of Open Philanthropy Project-funded advocates cite each other in a groundless web of footnotes which "vastly misrepresents the state of the evidence" in service of the party line.