The Offense-Defense Balance Rarely Changes

post by Maxwell Tabarrok (maxwell-tabarrok) · 2023-12-09T15:21:23.340Z · 23 comments

This is a link post for https://maximumprogress.substack.com/p/the-offense-defense-balance-rarely

You’ve probably seen several conversations on X go something like this:

Michael Doomer ⏸️: Advanced AI can help anyone make bioweapons. If this technology spreads, it will only take one crazy person to destroy the world!

Edward Acc ⏩: I can just ask my AI to make a vaccine

Yann LeCun: My good AI will take down your rogue AI

The disagreement here hinges on whether a technology will enable offense (bioweapons) more than defense (vaccines). Predictions of the “offense-defense balance” of future technologies, especially AI, are central in debates about techno-optimism and existential risk.

Most of these predictions rely on intuitions about how technologies like cheap biotech, drones, and digital agents would affect the ease of attacking or protecting resources. It is hard to imagine a world with AI agents searching for software vulnerabilities and autonomous drones attacking military targets without imagining a massive shift in the offense-defense balance.

But there is little historical evidence for large changes in the offense-defense balance, even in response to technological revolutions.

Consider cybersecurity. Moore’s law has taken us through a seven-order-of-magnitude reduction in the cost of compute since the 1970s. Along with the increase in raw compute power came massive changes in the form and economic uses of computer technology: encryption, the internet, e-commerce, social media, and smartphones.
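
One quick way to sanity-check "seven orders of magnitude since the 70s" is to convert it into a halving time. This sketch assumes the decline was spread evenly over the 50 years from 1970 to 2020. The start year and the function name are assumptions made for illustration.

```python
import math

def implied_halving_time(orders_of_magnitude: float, years: float) -> float:
    """Years for cost to halve, assuming a constant exponential decline.

    A drop of `orders_of_magnitude` powers of ten spread evenly over `years`
    means log10(cost) falls by orders_of_magnitude / years each year.
    """
    decline_per_year_log10 = orders_of_magnitude / years
    return math.log10(2) / decline_per_year_log10

# Assumption: the seven-order-of-magnitude drop spans 1970-2020.
halving = implied_halving_time(7, 2020 - 1970)
annual_factor = 10 ** (-7 / 50)  # fraction of last year's cost remaining each year

print(f"cost halves every {halving:.2f} years")
print(f"each year's cost is {annual_factor:.3f} of the previous year's")
```

The result is a halving time of a little over two years, which is roughly in line with the commonly quoted two-year cadence for Moore's law.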

The usual offense-defense balance story predicts that big changes to technologies like this should have big effects on the offense-defense balance. If you had told people in the 1970s that by 2020 terrorist groups and lone psychopaths would carry in their pockets more computing power than IBM had ever produced up to that time, what would they have predicted about the offense-defense balance of cybersecurity?

Contrary to their likely prediction, the offense-defense balance in cybersecurity seems stable. Cyberattacks have not been snuffed out, but neither have they taken over the world. All major nations have defensive and offensive cybersecurity teams, but no one has gained a decisive advantage. Computers still sometimes get viruses or ransomware, but they haven’t grown to endanger a large percent of the GDP of the internet. The US military budget for cybersecurity has increased by about 4% a year from 1980 to 2020, which is faster than GDP growth but in line with GDP growth plus the growing fraction of GDP that’s on the internet.
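
For a sense of scale, about 4% annual growth sustained from 1980 to 2020 compounds to roughly a fivefold increase. Here is a minimal check. It assumes a perfectly constant rate, although real budgets fluctuate from year to year, and the helper name is illustrative.

```python
def compound_growth(rate: float, years: int) -> float:
    """Total multiplicative growth after `years` at a constant annual `rate`."""
    return (1 + rate) ** years

# Figures from the text: about 4% a year from 1980 to 2020.
total = compound_growth(0.04, 2020 - 1980)
print(f"budget multiplied by about {total:.1f}x over 40 years")
```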

This stability through several previous technological revolutions raises the burden of proof for why the offense-defense balance of cybersecurity should be expected to change radically after the next one.

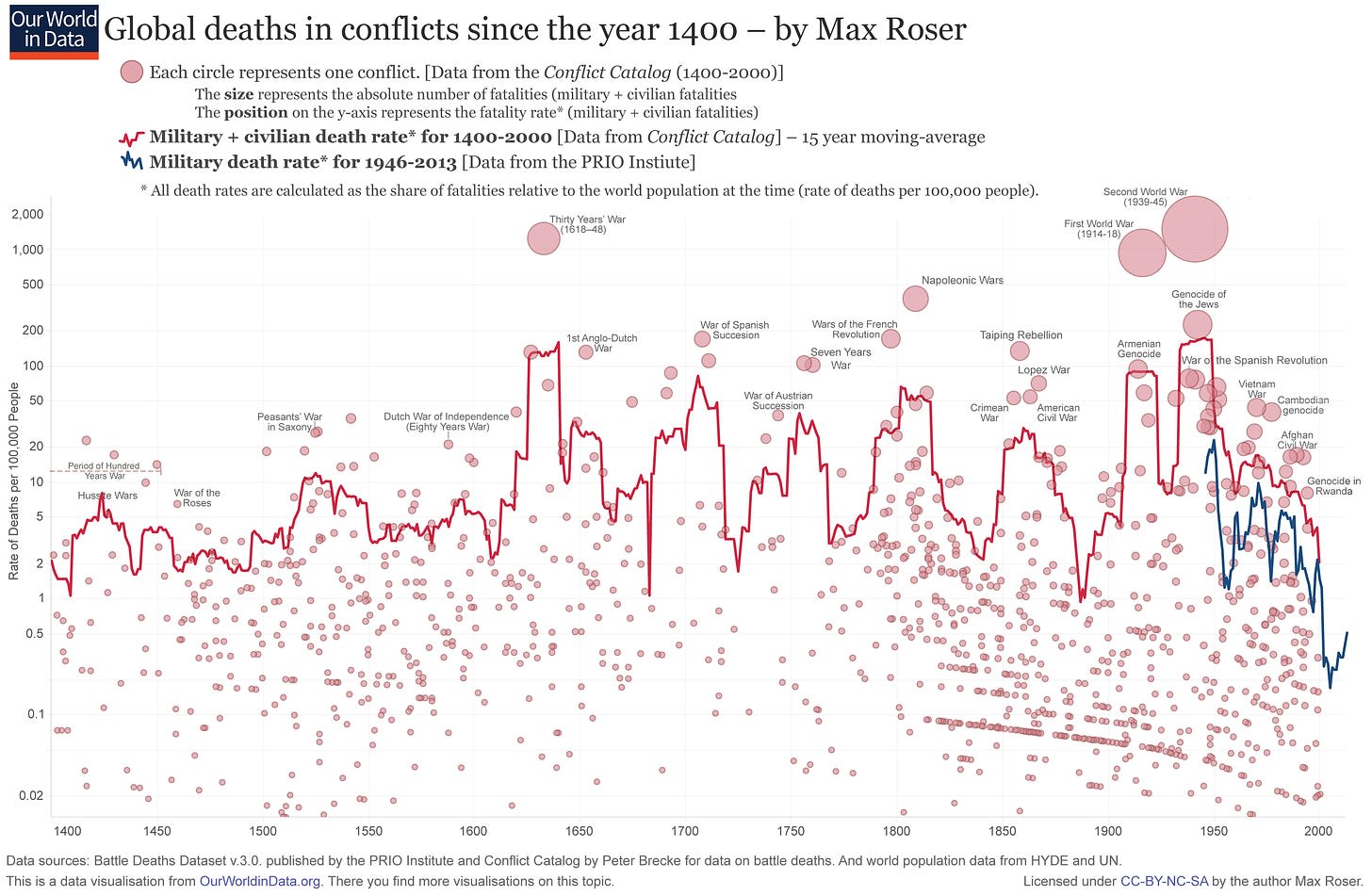

The stability of the offense-defense balance isn’t specific to cybersecurity. The graph below shows the per-capita rate of death in war from 1400 to 2013. The period it covers includes all of humanity’s major technological revolutions. There is a lot of variance from year to year but almost no long-run trend.

Does anyone have a theory of the offense-defense balance which can explain why the per-capita deaths from war should be about the same in 1640 when people are fighting with swords and horses as in 1940 when they are fighting with airstrikes and tanks?

It is very difficult to explain the variation in this graph with variation in technology. Per-capita deaths in conflict are noisy and cyclic, while technological progress is relatively smooth and monotonic.

No previous technology has changed the frequency or cost of conflict enough to move this metric far outside the range already set between 1400 and 1650. Again, this raises the burden of proof for why we should expect AI to be different.

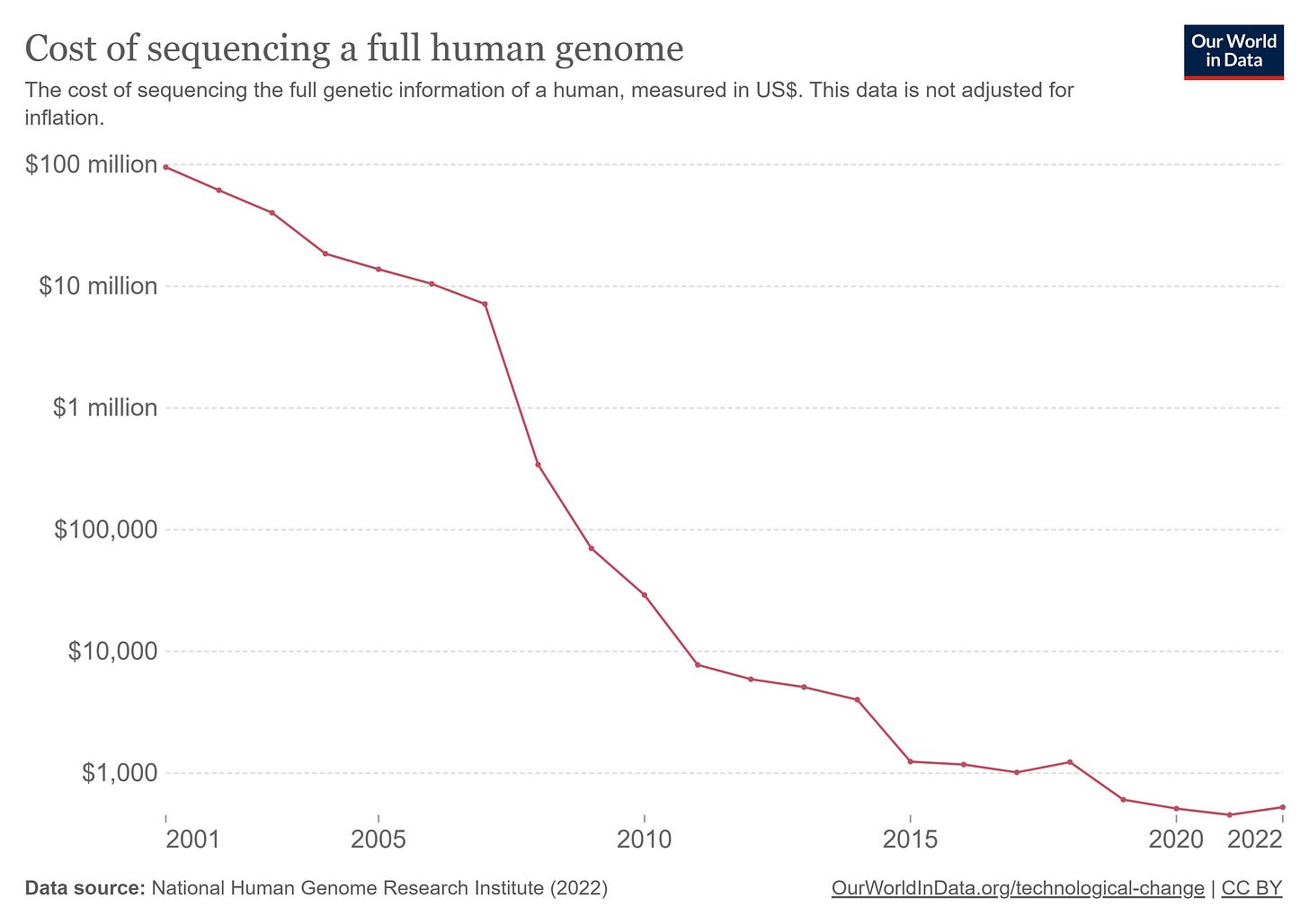

The cost to sequence a human genome has also fallen by six orders of magnitude, and dozens of big technological changes in biology have happened along with it. Yet there has been no noticeable change in the frequency or damage of biological attacks.

Possible Reasons For Stability

Why is the offense-defense balance so stable even when the technologies behind it are rapidly and radically changing? The main contribution of this post is just to support the importance of this question with empirical evidence, but here is an underdeveloped theory.

The main thing is that the clean distinction between attackers and defenders in the theory of the offense-defense balance does not exist in practice. All attackers are also defenders and vice versa. Invader countries have to defend their conquests, and hackers need to have strong information security.

So if there is some technology which makes invading easier than defending or info-sec easier than hacking, it might not change the balance of power much because each actor needs to do both. If offense and defense are complements instead of substitutes then the balance between them isn’t as important.

What does this argument predict for the future of AI? It does not predict that the future will be very similar to today. Even though the offense-defense balance in cybersecurity is about the same today as it was in the 1970s, there have been massive changes in technology and society since then. AI is clearly the defining technology of this century.

But it does predict that the big changes from AI won’t come from huge upsets to the offense-defense balance. The changes will look more like the industrial revolution and less like small terrorist groups being empowered to take down the internet or destroy entire countries.

Maybe in all of these cases there are threshold effects waiting around the corner, or AI is just completely different from all of our past technological revolutions but that’s a claim that needs a lot of evidence to be proven. So far, the offense-defense balance seems to be very stable through large technological change, and we should expect that to continue.

23 comments

comment by Adam Kaufman (Eccentricity) · 2023-12-09T17:23:41.989Z

Please note that the graph of per capita war deaths is on a log scale. The number moves over several orders of magnitude. One could certainly make the case that local spikes were sometimes caused by significant shifts in the offense-defense balance (like tanks and planes making offense easier for a while at the beginning of WWII). These shifts are pushed back to equilibrium over time, but personally I would be pretty unhappy about, say, deaths from pandemics spiking 4 orders of magnitude before returning to equilibrium.

↑ comment by romeostevensit · 2023-12-09T17:40:21.795Z

I'd also guess the equilibrating force is a human tendency to give up after a certain percentage of deaths, which holds regardless of how those deaths happen. This force ceases to be relevant outside certain political motivations for war.

↑ comment by gilch · 2023-12-10T04:57:26.629Z

Yeah, I think it's the amplitude of the swings we need to be concerned with. The supposed mean-reversion tendency (or not) is not load bearing. A big enough swing still wipes us out.

Supposing we achieve AGI, someone will happen to get there first. At the very least, a human-level AI could be copied to another computer, and then you have two human-level AIs. If inference remains cheaper than training (seems likely, given how current LLMs work), then it could probably be immediately copied to thousands of computers, and you have a whole company of them. If they can figure out how to use existing compute to run themselves more efficiently, they'll probably shortly thereafter stop operating on anything like human timescales and we get a FOOM. No one else has time to catch up.

comment by Dweomite · 2023-12-10T05:25:36.429Z

Seems like you expect changes in offense/defense balance to show up in what percentage of stuff gets destroyed. On my models it should mostly show up in how much stuff exists to be fought over; people won't build valuable things in the first place if they expect them to just get captured or destroyed.

To make that more concrete:

On Cybersecurity:

Computers still sometimes get viruses or ransomware, but they haven’t grown to endanger a large percent of the GDP of the internet.

This seems borderline tautological. We wouldn't put so much valuable stuff on the Internet if we couldn't (mostly) defend it.

In WW2, when one nation wanted to read another nation's encrypted communications (e.g. Enigma), they'd assemble elite teams of geniuses, and there was a serious fight about it with real doubt about who would win. A couple centuries before that, you could hire a single expert and have a decent shot at breaking someone's encryption.

Today, a private individual can download open-source encryption software and be pretty confident that no one on earth can break the encryption itself--not even a major government. (Though they might still get you through any number of other opsec mistakes).

This is necessary to make modern e-commerce work; if we hadn't had this massive shift in favor of the defender, we'd have way way less of our economy online. Note especially that asymmetrical encryption is vital to modern e-commerce, and it was widely assumed to be impossible until its invention in the 1970s; that breakthrough massively favors defenders.

But in that counterfactual world where the offense/defense balance didn't radically shift, you would probably still be able to write that "viruses and ransomware haven't grown to endanger a large percentage of the Internet". The Internet would be much smaller and less economically-important compared to our current world, but you wouldn't be able to see our current world to compare against, so it would still look like the Internet (as you know it) is mostly safe.

On Military Deaths:

Does anyone have a theory of the offense-defense balance which can explain why the per-capita deaths from war should be about the same in 1640 when people are fighting with swords and horses as in 1940 when they are fighting with airstrikes and tanks?

On my models I expect basically no relation between those variables. I expect per-capita deaths from war are mostly based on how much population nations are willing to sacrifice before they give up the fight (or stop picking new fights), not on any details of how the fighting works.

In terms of military tactics, acoup claims the offense/defense balance has radically reversed since roughly WW1, with trenches being nearly invincible in WW1 but fixed defenses being unholdable today (in fights between rich, high-tech nations):

The modern system assumes that any real opponent can develop enough firepower to both obliterate any fixed defense (like a line of trenches) or to make direct approaches futile. So armies have to focus on concealment and cover to avoid overwhelming firepower (you can’t hit what you can’t see!); since concealment only works until you do something detectable (like firing), you need to be able move to new concealed positions rapidly. If you want to attack, you need to use your own firepower to fix the enemy and then maneuver against them, rather than punching straight up the middle (punching straight up the middle, I should note, as a tactic, was actually quite successful pre-1850 or so) or trying to simply annihilate the enemy with massed firepower (like the great barrages of WWI), because your enemy will also be using cover and concealment to limit the effectiveness of your firepower (on this, note Biddle, “Afghanistan and the Future of Warfare” Foreign Affairs 82.2 (2003); Biddle notes that even quantities of firepower that approach nuclear yields delivered via massive quantities of conventional explosives were insufficient to blast entrenched infantry out of position in WWI.)

↑ comment by Timothy Underwood (timothy-underwood-1) · 2023-12-10T18:23:26.777Z

I'd note that acoup's model of fires primacy making defence untenable between high-tech nations, while not completely disproven by the Ukraine war, now seems much less likely to be true (or less true) than it did in early 2022. The Ukraine war has shown, in most cases, a strong advantage for a prepared defender and the difficulty of taking urban environments.

The current Israel-Hamas war shows a similar tendency: Israel is moving very slowly into the core urban concentrations (i.e., it has surrounded Gaza City so far but not really entered it), even though its superiority in resources relative to its opponent is vastly greater than Russia's advantage over Ukraine was.

comment by quetzal_rainbow · 2023-12-09T21:28:50.866Z

AI is not a matter of historical evidence. Correct models here are invasive species, new pathogens, and the Great Oxidation Event. 99% of species have ended up extinct.

↑ comment by gwern · 2023-12-09T22:25:40.960Z

Yes, there's some circular reasoning here simply in terms of observer selection effects: "we know the offense-defense balance never changes enough to drive humans extinct or collapse human civilization, because looking back over our history for the past 600 years which have decent records, we never see ourselves going extinct or civilization collapsing to the point of not having decent records."

comment by the gears to ascension (lahwran) · 2023-12-09T16:59:01.636Z

AI does not have to defend against bioweapon attacks to survive them.

↑ comment by Kaj_Sotala · 2023-12-09T19:40:59.692Z

At least assuming that it has fully automated infrastructure. If it still needs humans to keep the power plants running and to do physical maintenance in the server rooms, it becomes indirectly susceptible to the bioweapons.

↑ comment by gilch · 2023-12-10T04:49:14.008Z

Yes, but the (a?) moral of That Alien Message is that a superintelligence could build its own self-sustaining infrastructure faster than one might think using methods you might not have thought of, for example, through bio/nano engineering instead of robots. Or, you know, something else we haven't thought of.

↑ comment by Rudi C (rudi-c) · 2023-12-11T04:23:27.133Z

This is unrealistic. It assumes:

- Orders of magnitude more intelligence

- The actual usefulness of such intelligence in the physical world with its physical limits

The more worrying prospect is that the AI might not necessarily fear suicide. Suicidal actions are quite prevalent among humans, after all.

comment by lc · 2023-12-10T00:11:03.641Z

Wars and cyberattacks happen when people MISJUDGE their own security. You can't measure offense-defense balance by looking at how many wars are happening, just like you can't (directly) measure market capitalization from trading volume. A better idea would be to look at cybersecurity SPENDING over time (at least as a % of all IT spending). And that has certainly changed.

comment by Nathan Helm-Burger (nathan-helm-burger) · 2023-12-09T21:07:10.386Z

I think it's a mistake to look at this globally. What is relevant, especially for older conflicts, is the local outcome. For the participants in the conflict in question, what was the outcome for the militarily inferior force? The answer is sometimes surrender, sometimes enslavement, sometimes slaughter. If the AI wants human servants, which I expect it will for a temporary transition period, it can use BCIs and mind control viruses (e.g. optogenetics) to literally turn humans into permanent mind slaves. It can use horrific civilization-ending bioweapons as a threat to keep unconquered nations from interfering. When the balance of power shifts, which it does, the losers tend to have a very bad time.

comment by Gurkenglas · 2023-12-09T18:28:33.944Z

I put forward control mechanisms as a reason for stability, a prominent recent example being covid. Low-hanging attack fruit gets picked, and then we stop doing the things that make us vulnerable, impose quarantines, and ban labs.

AI has those, too. But calling that an argument against AI danger is a decision-theoretic short-circuit.

comment by Dagon · 2023-12-09T22:23:48.899Z

I think you have to zoom out and aggregate quite a bit for this thesis, and ignore the fact that it's only been a few generations of humans who legitimately had the option to destroy civilization. Ignore the fact that "offense" and "defense" in most cases are labels of intent, not of action or capability (which means they're often symmetric because they're actually the same capability). But notice that there's a LOT of local variation in the equilibria you mention, and as new technology appears, it does not appear evenly - it takes time to spread and even out again.

comment by Chris_Leong · 2023-12-09T21:28:07.386Z

“AI is just completely different from all of our past technological revolutions but that’s a claim that needs a lot of evidence to be proven”

Not really, it should be clear pretty quickly that if any technology is going to be different, it is going to be AI. And not just for this statement, but for almost any statement you can make about technology, even really basic statements like, "Technology isn't conscious" and "Technology is designed and invented by humans".

I’m not claiming to demonstrate here that it is different, just that there isn’t some massive burden of proof to overcome.

comment by Tao Lin (tao-lin) · 2023-12-09T18:00:22.395Z

If AI does change the offence-defence balance, it could be because defending an AI (which doesn't need to protect humans) is fundamentally different from defending humans, allowing the AI to spend much less on defence.

comment by Roko · 2023-12-10T02:47:14.717Z

If we look at the chart purely as a line chart, it looks like it is oscillating between 1 and 100 deaths per 100,000 per year. I'm pretty sure the driver of that oscillation is game-theoretic rather than technological, because whatever the offense/defense balance between humans is, you can always choose either to fight a lot (until the casualties hit 100) or not much. 100 deaths per 100,000 per year seems to be the limit at which nations break.

So I don't think this chart really tells us anything about offense/defense asymmetry. WWI was notorious for defense being very favored, and yet it's a casualty peak.

comment by [deleted] · 2023-12-10T01:38:09.731Z

Summary: a detailed look at offense/defense is required. Asymmetric attacks do exist.

Asymmetric Detail paragraph 1: The USA faced asymmetric warfare in the Middle East and ultimately gave up. While US forces won almost all individual battles, the cost in trillions of dollars was drastically skewed in favor of the attackers. The 8 trillion USD spent over 20 years should be contrasted with the entire combined GDP of Iraq and Afghanistan, approximately 2.5 trillion over that time period. Some small portion of that GDP (plus foreign support) went to funding attacks.

Asymmetric Detail paragraph 2: Where it gets really ridiculous is something like bioterrorism. Suppose, for the sake of argument, that Covid-19 really was developed through deliberate gain-of-function experiments, done in an expensive lab with no expense spared: say $6.8 million/year. Suppose 5 years of experiments are done to find the optimal pandemic-causing organism before release. That is $34 million to develop the weapon, which did approximately $29.4 trillion in damage and killed approximately 3.4 million people. If the 60 lab staff had instead gone on mass shooting sprees and killed 58 people each, they would have killed 3,480 people. By this measure, a bioweapon is approximately 1,000 times as effective.
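
The comment's arithmetic can be reproduced in a few lines. Every input below is the commenter's hypothetical figure (lab budget, duration, damage, death toll, staff count) and none of it is measured data. The constant names are illustrative.

```python
# All inputs are the commenter's hypothetical figures, not measured data.
LAB_COST_PER_YEAR = 6.8e6   # USD per year for a well-funded lab
YEARS_OF_WORK = 5
DAMAGE_USD = 29.4e12        # estimated economic damage
DEATHS_PANDEMIC = 3.4e6

LAB_STAFF = 60
DEATHS_PER_SHOOTER = 58

development_cost = LAB_COST_PER_YEAR * YEARS_OF_WORK
deaths_shootings = LAB_STAFF * DEATHS_PER_SHOOTER
lethality_ratio = DEATHS_PANDEMIC / deaths_shootings
damage_per_dollar = DAMAGE_USD / development_cost

print(f"development cost: ${development_cost / 1e6:.0f} million")
print(f"deaths from shootings: {deaths_shootings}")
print(f"bioweapon vs. shootings: {lethality_ratio:.0f}x")
print(f"damage per dollar spent: {damage_per_dollar:,.0f}")
```

The deaths ratio comes out a little under 1,000, which is where "approximately 1,000 times" comes from. The damage-per-dollar figure expresses the same asymmetry in economic terms.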

Note on cybersecurity: Most cyberattacks can be traced to:

(1) computing architectural decisions: the use of lower-level languages and inherently insecure operating systems rather than containerizing everything, and fundamentally insecure general-purpose hardware, where untrusted code runs on the same silicon as trusted code.

(2) mistakes in implementation. Perfect software on adequately good hardware cannot be hacked.

(3) channel insecurity. The way emails work, where the sender isn't tied to a real identity and the status of the sender isn't checked (corporate workers only want to hear from other corporate workers, jailers only from judges, bank officers only from the SEC and other bank workers) allows malware. Similarly, 'fake news' is only possible because it doesn't require a publication license to open a website at all and there are minimal accountability standards for journalism.

Mitigations:

(1) is fixable, we'll just need all new silicon, new languages, and new OSes. Some use of existing crypto ASICs and enclave CPUs is already done.

(2) is fixable, we just need to formally prove key parts of the software stack

(3) is fixable. "Journalist" would have to be a title for a licensed profession with requirements and standards, and calling yourself one without the license would be a crime. Opening a website that claims to offer news would require a license, and the web backend would need to contain some consensus mechanism to validate a given site (blockchain might be used here). The same would be true for any other online service. Sending emails would similarly require verification and a connection to a business or a person.

Everything outside of this would be the dark web. Note that all the mitigations have costs, some substantial. There is less free speech in this world, less open communication, and computers are less efficient because they use dedicated silicon (CPU and memory) to handle separate programs.

Uses for AI Pauses: While I personally think AI pauses are hopeless, I will note here that the above is what you would want to fix during one. During a pause you can't discover the problems posed by AI more advanced than the current SOTA, but you could lock down the world's computers to reduce the damage when an AI tries to escape.

Conclusion: Asymmetric attacks do exist; cyberattacks are an example where the advantage favors the defender, while bioattacks favor the attacker. That said, I do agree with the OP's general feeling that the best way to prepare for an upcoming age of AI and advanced weapons made possible by AI is to lock and load. You can't really hope to compete in a world of advanced weapons by begging other countries not to build them.

comment by Veedrac · 2023-12-10T21:56:19.438Z

Cybersecurity — this is balanced by being a market question. You invest resources until your wall makes attacks uneconomical, or otherwise economically survivable. This is balanced over time because the defense is ‘people spend time to think about how to write code that isn't wrong.’ In a world where cyber attacks were orders of magnitude more powerful, people would just spend more time making their code and computing infrastructure less wrong. This has happened in the past.
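
The "market question" framing can be sketched as a toy optimization. Assume, purely for illustration, that expected losses from attacks fall exponentially with defensive spending: `loss = attack_damage * exp(-k * spend)`. The functional form, the parameter `k`, and the function name are hypothetical and do not come from the comment. The defender chooses the spend that minimizes spending plus expected loss.

```python
import math

def optimal_defense(attack_damage: float, effectiveness: float) -> tuple[float, float]:
    """Return (spend, residual_loss) minimizing spend + expected loss.

    Toy assumption: expected loss = attack_damage * exp(-effectiveness * spend).
    Setting the derivative of the total cost to zero gives
    spend* = ln(effectiveness * attack_damage) / effectiveness.
    """
    if effectiveness * attack_damage <= 1:
        # Even the first unit of defense saves less than it costs.
        return 0.0, attack_damage
    spend = math.log(effectiveness * attack_damage) / effectiveness
    residual_loss = attack_damage * math.exp(-effectiveness * spend)
    return spend, residual_loss

k = 0.5  # hypothetical effectiveness of each unit of defensive spending
for damage in (100.0, 1_000.0, 10_000.0):
    spend, loss = optimal_defense(damage, k)
    print(f"attack damage {damage:>8.0f}: spend {spend:6.2f}, residual loss {loss:.2f}")
```

Under this assumption, each tenfold increase in attack damage raises optimal spending by the same fixed amount, ln(10)/k (about 4.6 here). The residual loss at the optimum stays at 1/k. Much more powerful attacks therefore show up as bigger security budgets rather than bigger losses, which is one way to read the comment's claim.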

Deaths in conflicts — this is balanced by being a market question. People will only spend so much to protect their regime. This varies, as you see in the graph, by a good few orders of magnitude, but there's little historic reason to think that there should be a clean linear trend over time across the distant past, or that how much people value their regime is proportionate somehow to the absolute military technology of the opposing one.

Genome sequencing biorisk — 8ish years is not a long time to claim nobody is going to use it for highly damaging terror attacks? Most of this graph is irrelevant to that; unaffordable by 100x and unaffordable by 1000x still just equally resolve to it not happening. 8ish years at an affordable price is maybe at best enough for terrorists with limited resources to start catching on that they might want to pay attention.
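
The threshold point can be illustrated with a deliberately crude model in which an attack is either affordable to a given attacker or not. The budget and cost figures are arbitrary units chosen for illustration, and the function name is hypothetical.

```python
def attack_feasible(cost: float, attacker_budget: float) -> bool:
    """Toy threshold model: an attack happens only if it is affordable."""
    return cost <= attacker_budget

budget = 1.0  # hypothetical attacker budget, in arbitrary units
# Cost falling 10x per step, from 1000x over budget to 10x under it.
costs = [10.0 ** e for e in range(3, -2, -1)]
for cost in costs:
    print(f"cost / budget = {cost / budget:>7.1f}: feasible = {attack_feasible(cost, budget)}")
```

Three orders of magnitude of cost decline produce no visible change in the outcome, because all of the change happens at the crossing. This matches the comment's point that most of a long cost-decline curve says little about risk.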

comment by Timothy Underwood (timothy-underwood-1) · 2023-12-10T18:17:41.620Z

I'd expect per capita war deaths to have nothing to do with offence/defence balance as such (unless the defence gets so strong that wars simply don't happen, in which case it goes to zero).

Per capita war deaths in this context are about the ability of states to mobilize populations, and about how much damage the warfare does to the civilian population that the battle occurs over. I don't think there is any uncomplicated connection between that and something like 'how much bigger does your army need to be for you to be able to successfully win against a defender who has had time to get ready'.

comment by hold_my_fish · 2023-12-10T00:49:26.500Z

In encryption, hasn't the balance changed to favor the defender? It used to be that it was possible to break encryption. (A famous example is the Enigma machine.) Today, it is not possible. If you want to read someone's messages, you'll need to work around the encryption somehow (such as by social engineering). Quantum computers will eventually change this for the public-key encryption in common use today, but, as far as I know, post-quantum cryptography is farther along than quantum computers themselves, so the defender-wins status quo looks likely to persist.

I suspect that this phenomenon in encryption technology, where as the technology improves, equal technology levels favor the defender, is a general pattern in information technology. If that's true, then AI, being an information technology, should be expected to also increasingly favor the defender over time, provided that the technology is sufficiently widely distributed.
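
Here is a back-of-the-envelope sketch of why equal technology can favor the defender in encryption. It makes the simplifying assumption that the only attack is exhaustive key search. It ignores cryptanalytic shortcuts, implementation bugs, side channels, and quantum attacks (Grover's algorithm, for instance, roughly halves the effective length of a symmetric key). `ATTACKER_RATE` is a made-up figure, and the function names are illustrative.

```python
import math

SECONDS_PER_YEAR = 365.25 * 24 * 3600

def brute_force_years(key_bits: int, guesses_per_second: float) -> float:
    """Expected years to find a key by exhaustive search.

    On average an attacker must try half the keyspace: 2**(key_bits - 1) keys.
    """
    return 2 ** (key_bits - 1) / guesses_per_second / SECONDS_PER_YEAR

def bits_to_offset_speedup(speedup: float) -> float:
    """Extra key bits that cancel an attacker speedup (each bit doubles the keyspace)."""
    return math.log2(speedup)

ATTACKER_RATE = 1e18  # hypothetical guesses per second

for bits in (56, 64, 80, 128):
    print(f"{bits:>3}-bit key: {brute_force_years(bits, ATTACKER_RATE):.3g} years")

for speedup in (1e3, 1e6):
    extra = bits_to_offset_speedup(speedup)
    print(f"{speedup:.0e}x faster attacker is offset by {extra:.1f} extra bits")
```

The defender's cost of using a longer key grows only polynomially with its length, while the attacker's work doubles with every added bit. A thousandfold improvement in attacker hardware is therefore cancelled by about ten extra bits.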