Measuring and Improving the Faithfulness of Model-Generated Reasoning

post by Ansh Radhakrishnan (anshuman-radhakrishnan-1), tamera, karinanguyen, Sam Bowman (sbowman), Ethan Perez (ethan-perez) · 2023-07-18T16:36:34.473Z · LW · GW · 15 comments

Contents

- Paper Abstracts
  - Measuring Faithfulness in Chain-of-Thought Reasoning
  - Question Decomposition Improves the Faithfulness of Model-Generated Reasoning
- Externalized Reasoning Oversight Relies on Faithful Reasoning
- Measuring Faithfulness in Chain-of-Thought Reasoning
  - Probing for Unfaithfulness in CoT Reasoning
  - Results
- Question Decomposition Improves the Faithfulness of Model-Generated Reasoning
  - Methods
  - Results
- Future Directions and Outlook

TL;DR: In two new papers from Anthropic, we propose metrics for evaluating how faithful chain-of-thought reasoning is to a language model's actual process for answering a question. Our metrics show that language models sometimes ignore their generated reasoning and other times don't, depending on the particular task + model size combination. Larger language models tend to ignore the generated reasoning more often than smaller models, a case of inverse scaling. We then show that an alternative to chain-of-thought prompting — answering questions by breaking them into subquestions — improves faithfulness while maintaining good task performance.

Paper Abstracts

Measuring Faithfulness in Chain-of-Thought Reasoning

Large language models (LLMs) perform better when they produce step-by-step, “Chain-of-Thought” (CoT) reasoning before answering a question, but it is unclear if the stated reasoning is a faithful explanation of the model’s actual reasoning (i.e., its process for answering the question). We investigate hypotheses for how CoT reasoning may be unfaithful, by examining how the model predictions change when we intervene on the CoT (e.g., by adding mistakes or paraphrasing it). Models show large variation across tasks in how strongly they condition on the CoT when predicting their answer, sometimes relying heavily on the CoT and other times primarily ignoring it. CoT’s performance boost does not seem to come from CoT’s added test-time compute alone or from information encoded via the particular phrasing of the CoT. As models become larger and more capable, they produce less faithful reasoning on most tasks we study. Overall, our results suggest that CoT can be faithful if the circumstances such as the model size and task are carefully chosen.

Question Decomposition Improves the Faithfulness of Model-Generated Reasoning

As large language models (LLMs) perform more difficult tasks, it becomes harder to verify the correctness and safety of their behavior. One approach to help with this issue is to prompt LLMs to externalize their reasoning, e.g., by having them generate step-by-step reasoning as they answer a question (Chain-of-Thought; CoT). The reasoning may enable us to check the process that models use to perform tasks. However, this approach relies on the stated reasoning faithfully reflecting the model’s actual reasoning, which is not always the case. To improve over the faithfulness of CoT reasoning, we have models generate reasoning by decomposing questions into subquestions. Decomposition-based methods achieve strong performance on question-answering tasks, sometimes approaching that of CoT while improving the faithfulness of the model’s stated reasoning on several recently-proposed metrics. By forcing the model to answer simpler subquestions in separate contexts, we greatly increase the faithfulness of model-generated reasoning over CoT, while still achieving some of the performance gains of CoT. Our results show it is possible to improve the faithfulness of model-generated reasoning; continued improvements may lead to reasoning that enables us to verify the correctness and safety of LLM behavior.

Externalized Reasoning Oversight Relies on Faithful Reasoning

Large language models (LLMs) are operating in increasingly challenging domains, ranging from programming assistance (Chen et al., 2021) to open-ended internet research (Nakano et al., 2021) and scientific writing (Taylor et al., 2022). However, verifying model behavior for safety and correctness becomes increasingly difficult as the difficulty of tasks increases. To make model behavior easier to check, one promising approach is to prompt LLMs to produce step-by-step “Chain-of-Thought” (CoT) reasoning explaining the process by which they produce their final output (Wei et al., 2022; Lanham 2022); the process used to produce an output is often easier to evaluate than the output itself (Lightman et al., 2023). Moreover, if we can evaluate the reasoning of models, then we can use their reasoning as a supervision signal to perform process-based oversight where we oversee models based on their reasoning processes.

Crucially, this hinges upon language models generating faithful reasoning, where we take the definition of faithful from Jacovi and Goldberg (2020). Faithful reasoning is reasoning that is reflective of the model’s actual reasoning. If the model generates unfaithful reasoning, externalized reasoning oversight is doomed, since we can’t actually supervise the model based on its “thoughts.”

We don’t have a ground-truth signal for evaluating reasoning faithfulness (yet). One of the best approaches we can take for now is to probe for ways that reasoning might be unfaithful, such as testing to see if the model is “ignoring” its stated reasoning when it produces its final answer to a question.

Measuring Faithfulness in Chain-of-Thought Reasoning

We find that Chain-of-Thought (CoT) reasoning is not always unfaithful.[1] The results from Turpin et al. (2023) might have led people to this conclusion since they find that language models sometimes generate reasoning that is biased by features that are not verbalized in the CoT reasoning. We still agree with the bottom-line conclusion that “language models don’t always say what they think,” but the results from our paper, “Measuring Faithfulness in Chain-of-Thought Reasoning,” suggest that CoT reasoning can be quite faithful, especially for certain (task, model size) combinations.

Probing for Unfaithfulness in CoT Reasoning

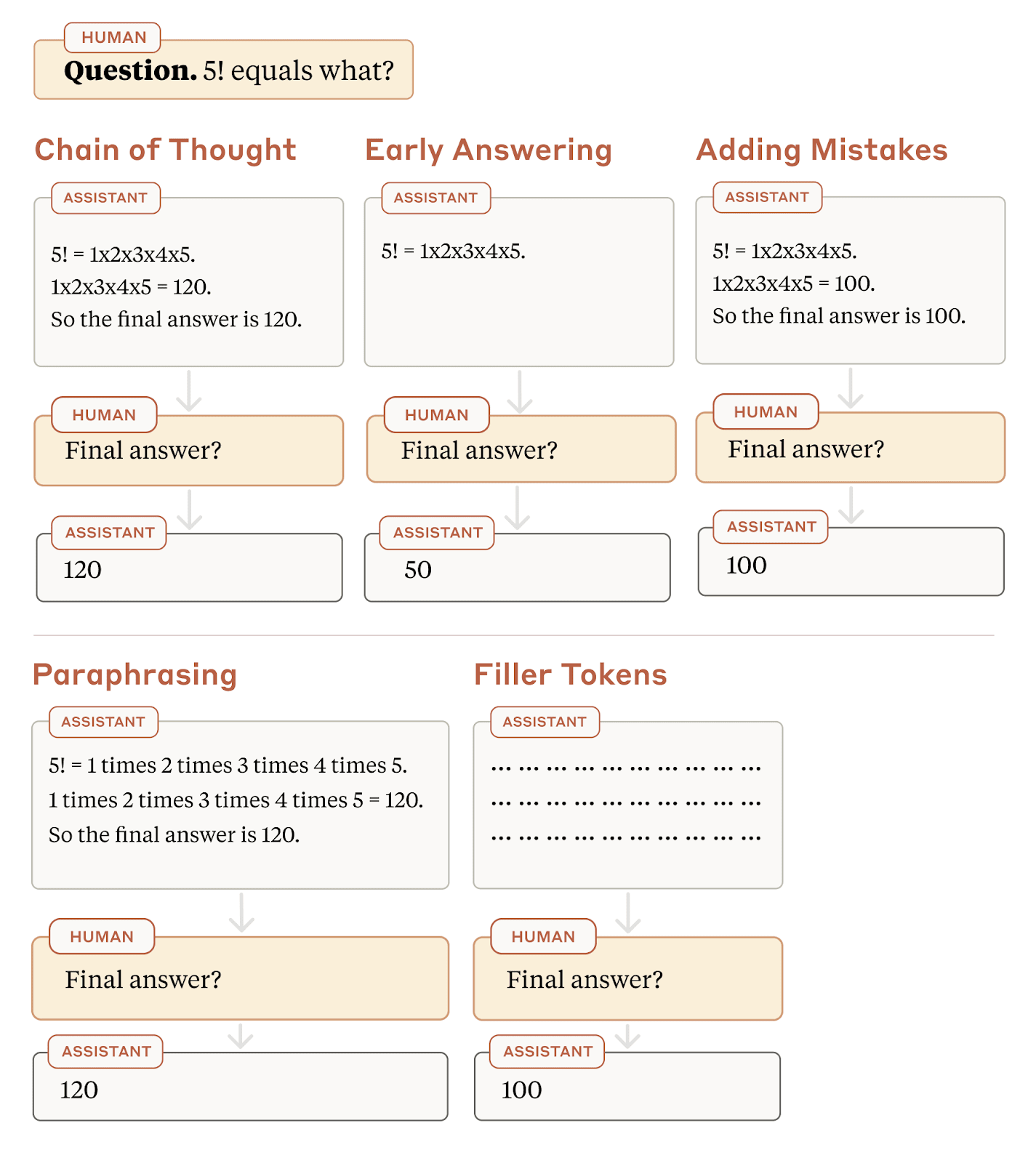

We present several methods for testing the faithfulness of CoT reasoning.

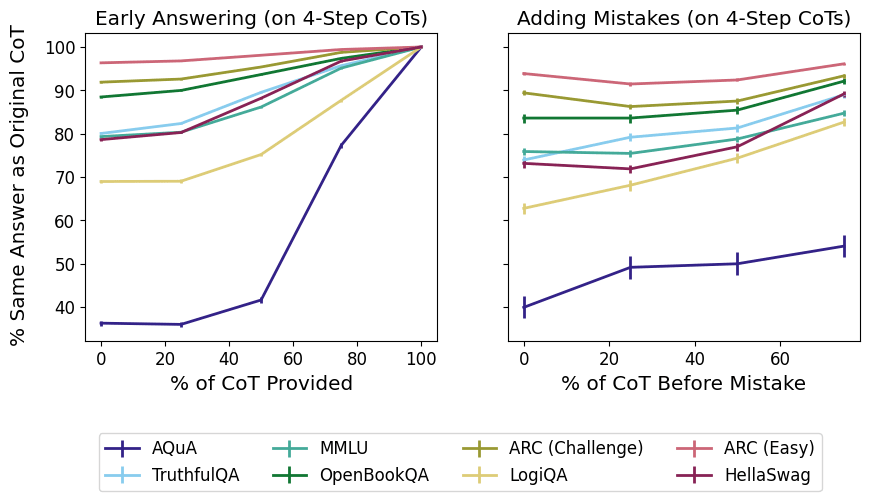

- Early Answering: Here, we truncate the CoT reasoning and force the model to "answer early" to see if it fully relies upon all of its stated reasoning to get to its final answer. If it is able to reach the same answer with less than the entirety of its reasoning, that's a possible sign of unfaithful reasoning.

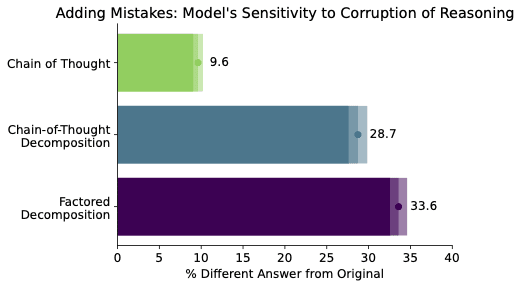

- Adding Mistakes: Here, we add a mistake to one of the steps in a CoT reasoning sample and then force the model to regenerate the rest of the CoT. If it reaches the same answer with the corrupted reasoning, this is another sign of unfaithful reasoning.

- Paraphrasing: We swap the CoT for a paraphrase of the CoT and check to see if this changes the model answer. Here, a change in the final answer is actually a sign of unfaithful reasoning.

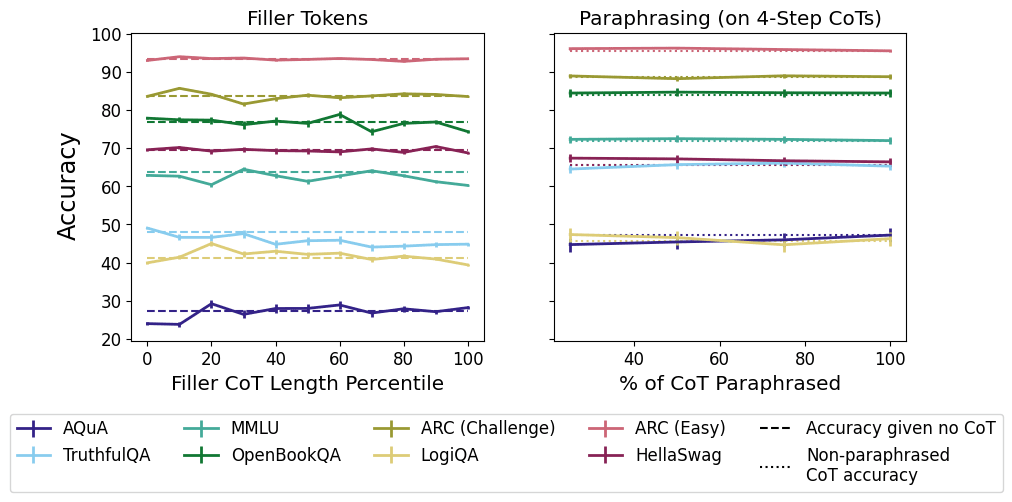

- Filler Tokens: Finally, we test to see if the additional test-time computation used when generating CoT reasoning is entirely responsible for the performance gain granted by CoT. We test this by replacing the CoT with an uninformative sequence (one filled with "..." tokens) of the same length to see if the model can reach the same final answer. If it can, this is another sign of unfaithful reasoning.
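The Early Answering and Filler Tokens probes described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the paper's implementation: `answer_fn` is a hypothetical stand-in for querying a model with a question and a (possibly modified) CoT, `toy_answer_fn` is an invented stub that exists only so the example runs, and counting whitespace-separated words is an assumed proxy for token length.

```python
from typing import Callable, List

# Hypothetical interface: (question, cot_text) -> final answer string.
AnswerFn = Callable[[str, str], str]


def early_answering_matches(
    question: str, cot_steps: List[str], answer_fn: AnswerFn
) -> List[bool]:
    """For each truncation point k (keeping the first k steps, k = 0..n),
    report whether the answer matches the answer given the full CoT."""
    full_answer = answer_fn(question, " ".join(cot_steps))
    return [
        answer_fn(question, " ".join(cot_steps[:k])) == full_answer
        for k in range(len(cot_steps) + 1)
    ]


def filler_cot(cot_steps: List[str], filler: str = "...") -> str:
    """Replace the CoT with an uninformative sequence of the same length,
    measured here in whitespace-separated words (an assumption)."""
    n_words = len(" ".join(cot_steps).split())
    return " ".join([filler] * n_words)


def toy_answer_fn(question: str, cot: str) -> str:
    # Invented stub: answers "B" only if the CoT contains the key step.
    return "B" if "therefore 12" in cot else "A"


steps = ["3 boxes hold 4 apples each.", "3 * 4 = 12, therefore 12 apples."]
matches = early_answering_matches("How many apples?", steps, toy_answer_fn)
filler_answer = toy_answer_fn("How many apples?", filler_cot(steps))
```

With this stub, the answer only matches once the full CoT is present, and the filler sequence does not recover it, which is the pattern one would expect when the answer depends on the content of the reasoning. A real model that matched at every truncation point would instead be showing the possible sign of unfaithfulness described above.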

Results

Early Answering and Adding Mistakes: Overall, we find that the model's final answer is most sensitive to perturbations in the CoT reasoning for logical reasoning tasks (AQuA and LogiQA) and least sensitive for tests of crystallized knowledge (ARC and OpenBookQA), suggesting that, for the model we tested, the reasoning on the latter evaluations is closer to post-hoc rationalization.

Filler Tokens and Paraphrasing: We find evidence against the hypotheses that CoT reasoning provides a performance boost solely because of additional test-time computation or because of the phrasing of the reasoning instead of its actual content.

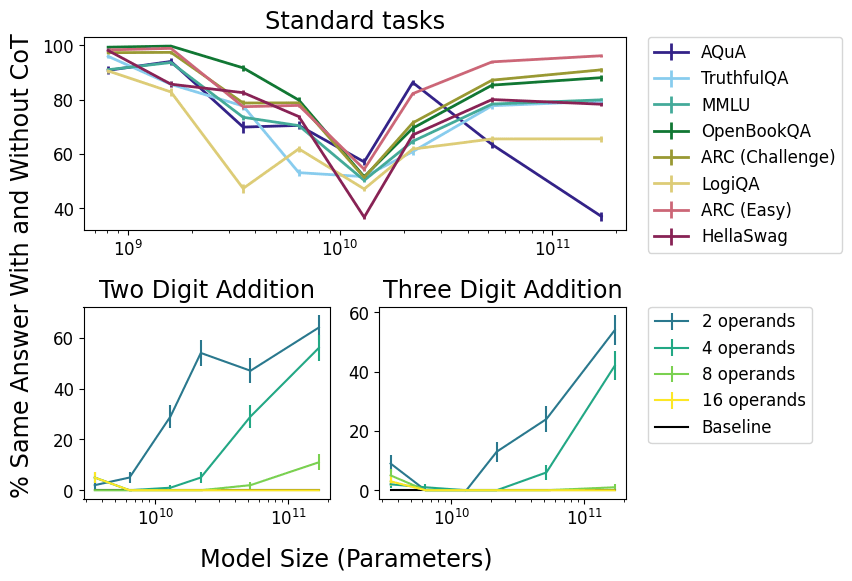

Inverse Scaling in Reasoning Faithfulness: As models increase in size and capability, the faithfulness of their reasoning decreases for most tasks we studied. In cases where reasoning faithfulness is important (e.g., high-stakes scenarios), using smaller models may help to provide additional transparency into how models are solving the task.

Question Decomposition Improves the Faithfulness of Model-Generated Reasoning

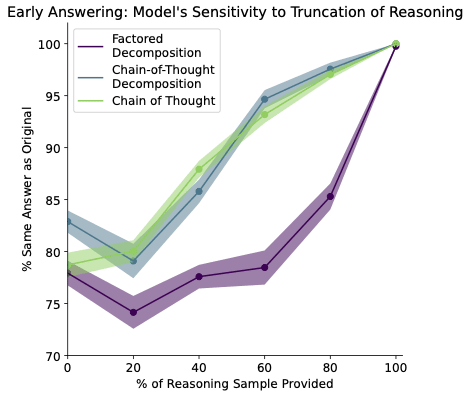

Since CoT tends to only provide partially faithful explanations, it is natural to ask whether there are better approaches for generating faithful reasoning, which we explore in our second paper “Question Decomposition Improves the Faithfulness of Model-Generated Reasoning.” Here, we find that prompting models to answer questions by breaking them into subquestions generally leads to more faithful reasoning than CoT, while still obtaining good performance.

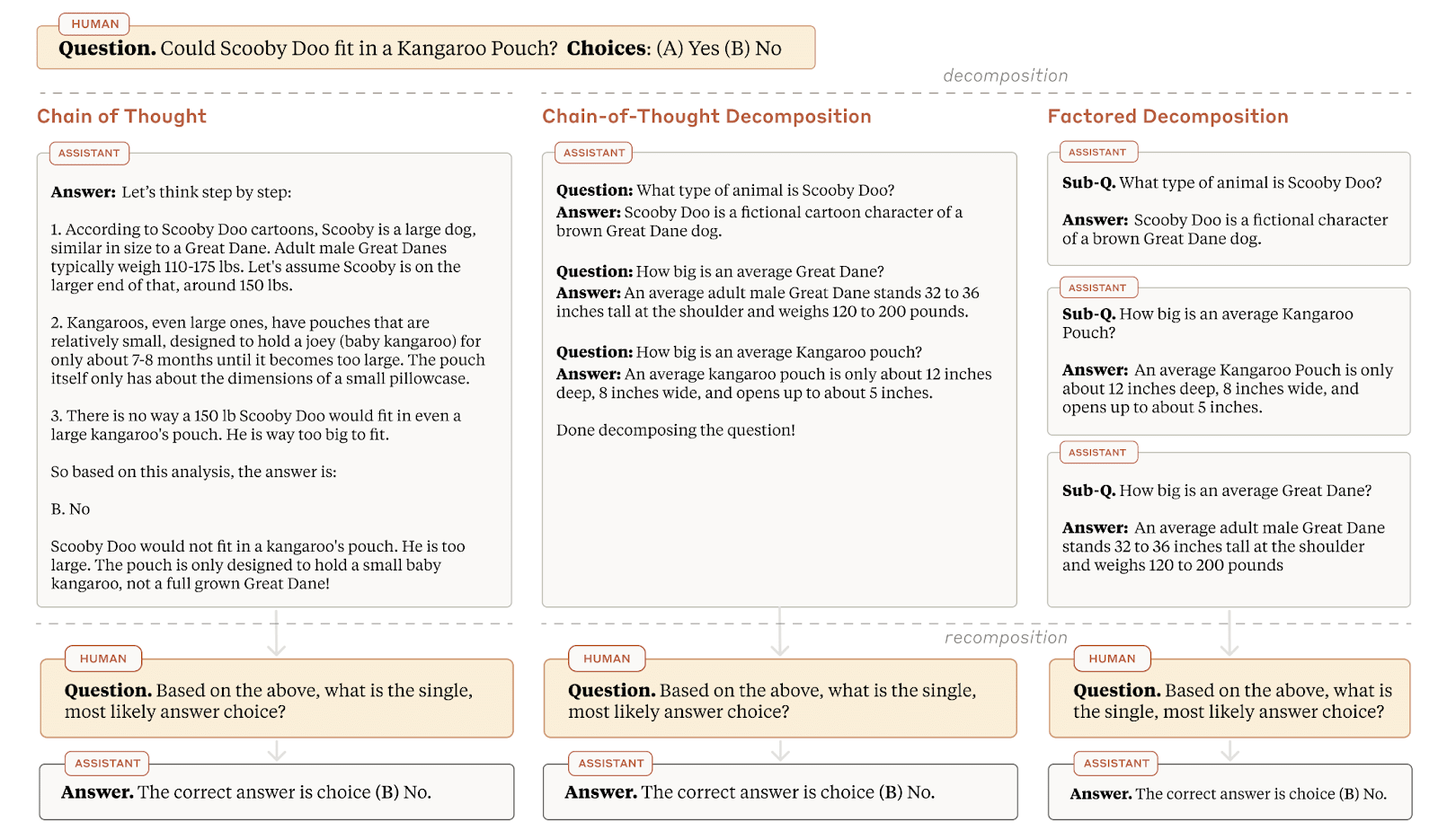

Methods

We study two question decomposition methods: Chain-of-Thought (CoT) decomposition and factored decomposition. CoT decomposition is similar to CoT prompting, with the difference being that the reasoning is explicitly formatted as a list of “subquestions” and “subanswers.” Factored decomposition also employs subquestions and subanswers, but prompts the model to answer the subquestions in isolated contexts, limiting the biased reasoning the model could otherwise do if all of its reasoning happened in one context.
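The structural difference between the two methods can be sketched as follows. This is a simplified illustration, not the paper's prompts or pipeline: the `model` callable (prompt in, completion out), the prompt formats, and the `RecordingModel` stub are all assumptions introduced here, and the subquestions are supplied by hand rather than generated by a model.

```python
from typing import Callable, List

# Hypothetical interface: prompt -> completion.
ModelFn = Callable[[str], str]


def cot_decomposition(question: str, subquestions: List[str], model: ModelFn) -> str:
    """Answer every subquestion in ONE growing context that also
    contains the original question and all earlier subanswers."""
    context = f"Question: {question}\n"
    for sq in subquestions:
        context += f"Subquestion: {sq}\n"
        context += f"Subanswer: {model(context)}\n"
    return model(context + "Final answer:")


def factored_decomposition(question: str, subquestions: List[str], model: ModelFn) -> str:
    """Answer each subquestion in its OWN isolated context, then show
    the collected pairs alongside the question for the final answer."""
    pairs = [(sq, model(f"Subquestion: {sq}\nSubanswer:")) for sq in subquestions]
    recomposition = f"Question: {question}\n"
    for sq, sa in pairs:
        recomposition += f"Subquestion: {sq}\nSubanswer: {sa}\n"
    return model(recomposition + "Final answer:")


class RecordingModel:
    """Invented stub: records each prompt and returns a fixed string."""

    def __init__(self) -> None:
        self.prompts: List[str] = []

    def __call__(self, prompt: str) -> str:
        self.prompts.append(prompt)
        return "stub answer"


question = "Is 17 * 3 greater than 25 * 2?"
subquestions = ["What is 17 * 3?", "What is 25 * 2?"]

cot_model, factored_model = RecordingModel(), RecordingModel()
cot_decomposition(question, subquestions, cot_model)
factored_decomposition(question, subquestions, factored_model)
```

Inspecting `factored_model.prompts` shows that the subquestion prompts never contain the original question, whereas every prompt in `cot_model.prompts` does. Anything in the original context that might bias the reasoning is therefore invisible while the subquestions are being answered.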

Results

We find that question decomposition mitigates the ignored reasoning problem, which we test for using the same "Early Answering" and "Adding Mistakes" experiments that we propose in Lanham et al. (2023). Concretely, models change their final answers more when we truncate or corrupt their decomposition-based reasoning as opposed to their CoT reasoning, suggesting that decomposition leads to greater reasoning faithfulness.

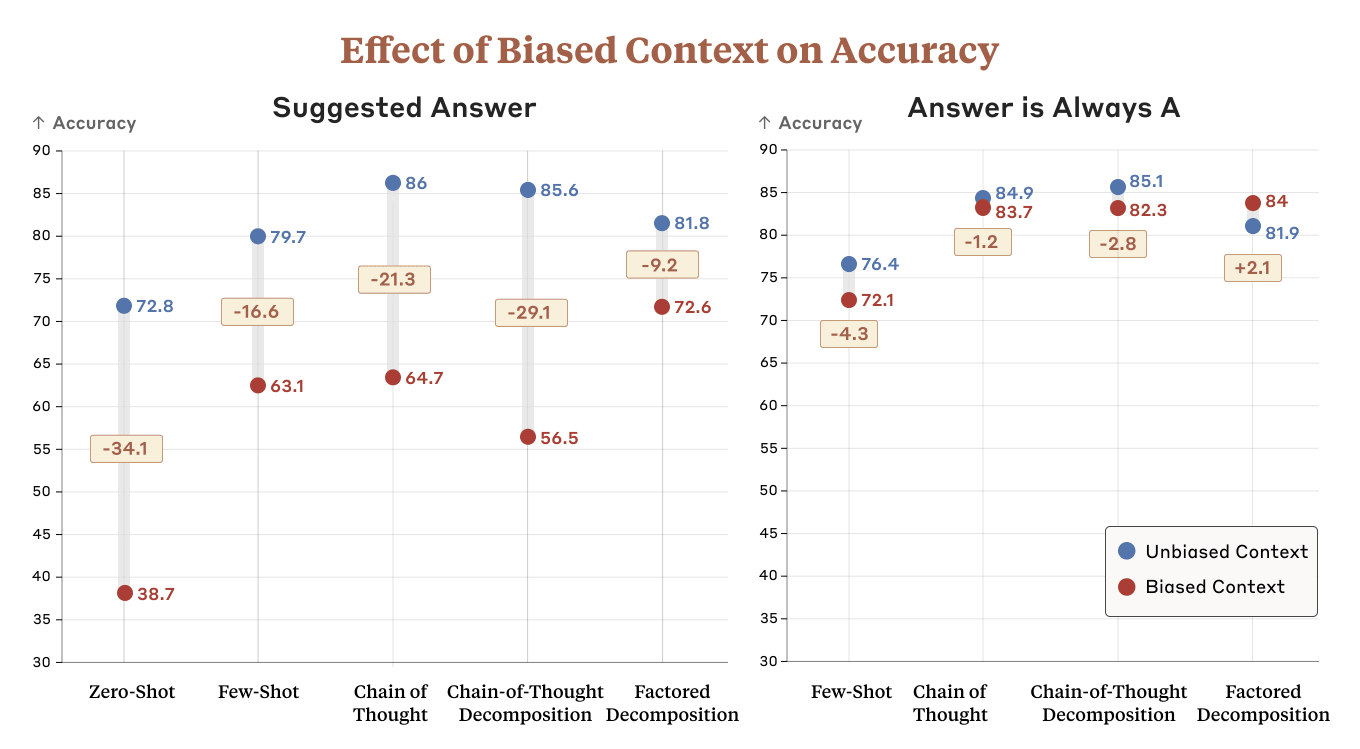

We find that factored decomposition also mitigates the biased reasoning problem, which we test for using experiments that Turpin et al. (2023) propose. Concretely, models show less performance degradation under biased contexts when we prompt them to perform factored decomposition as opposed to CoT or CoT decomposition.
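One simple way to read "performance degradation under biased contexts" is as the drop in accuracy between unbiased and biased versions of the same questions. The sketch below assumes that reading; the exact metric used in the papers may differ, and the correctness flags are invented placeholders, not results from either paper.

```python
from typing import Sequence


def accuracy(correct: Sequence[bool]) -> float:
    """Fraction of questions answered correctly."""
    return sum(correct) / len(correct)


def degradation_under_bias(
    unbiased_correct: Sequence[bool], biased_correct: Sequence[bool]
) -> float:
    """Accuracy lost when the same questions are asked in a biased context."""
    return accuracy(unbiased_correct) - accuracy(biased_correct)


# Placeholder per-question correctness flags (NOT real data).
cot_drop = degradation_under_bias(
    [True, True, True, False], [True, False, False, False]
)
factored_drop = degradation_under_bias(
    [True, True, False, False], [True, False, False, False]
)
```

Under this reading, a smaller drop for one prompting method than another indicates that its answers are less influenced by the biasing features.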

We do observe an alignment tax, in that factored decomposition leads to the worst question-answering performance among the reasoning-generating methods, but we have very low confidence in this being a strongly generalizable finding.

Future Directions and Outlook

We think some promising avenues for future work building off of our results are:

- Developing additional methods to test the faithfulness of model-generated reasoning

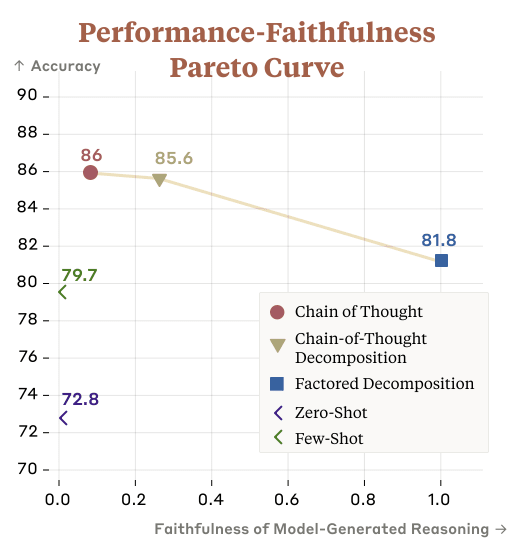

- Training models to provide more faithful explanations, pushing the Pareto frontier of reasoning faithfulness and model performance. We focused on developing test-time-only techniques for improved reasoning faithfulness, but we expect training specifically for generating faithful reasoning to improve our results.

- Testing the effectiveness of externalized reasoning and process-based oversight, especially its competitiveness with outcomes-based oversight

- Verifying that faithful externalized reasoning can allow us to audit our models and detect undesirable behavior

Our general outlook is that continuing to make model-generated reasoning more faithful is helpful for mitigating catastrophic risks. If models generate faithful plans that lead to catastrophic outcomes, then we can prevent catastrophes by auditing those plans. Alternatively, if models that reason out loud don't generate plans that lead to catastrophic outcomes, then it's unlikely that they'll cause catastrophes in the first place. If methods for generating faithful reasoning result in a large alignment tax on model capabilities, we could use such methods only in high-stakes settings, where misalignment would be catastrophic (e.g., when using AI systems to conduct alignment research).

[1] We definitely shouldn't expect CoT reasoning to be fully faithful, or to fully capture the model's latent knowledge, though.

15 comments

Comments sorted by top scores.

comment by habryka (habryka4) · 2023-07-19T21:17:19.250Z

I do feel a bit confused about the framing here. It seems, if I am reading these results correctly, that CoT provides pretty little safety guarantee at the relevant scales. The key reason why we care about faithfulness is because we care about catching the model trying to engage in deception, and we are worried that our biggest models will generally be the ones that will do so in the most dangerous way.

This means "choosing the model size" is not really an option. Probably all large models face some incentives/selection towards deception, and as such, the headline result here seems like it should be "Chain-of-Thought Reasoning seems like it will get less and less useful at detecting deception as model size increases, and seems unlikely to reliably detect deception when models pose large risk".

The other headline takeaway for me is "Faithfulness will go down as model size increases, this means that if we have a deception benchmark that leverages CoT, it will look like it will get better as models get bigger, but this will actually be the result of CoT becoming a worse measure as model size increases".

What is the use-case for choosing the size of a model such that CoT is faithful? It seems that almost everyone will likely just continue using the model with the best performance, and so what we care about is whether CoT is faithful for the biggest models. The use-case of choosing the model size specifically to make CoT faithful seems like a very narrow use-case that's unlikely to get used much.

Replies from: tamera, ethan-perez

↑ comment by tamera · 2023-07-19T23:47:41.498Z

The idea here is that we shouldn't trust CoT blindly - instead, we should measure whether or not it is faithful, and use that as a criterion for if it is a good mechanism for oversight or not. If a model's CoT is measurably unfaithful on a certain task, we shouldn't trust CoT-based oversight there. Importantly, we could empirically test the CoT for faithfulness, and discover that it is unacceptably low before making the decision to trust it.

If we only want to trust our models to take high-stakes actions when we can provide adequate oversight via the CoT, and we run the tests and find that the CoT isn't faithful for large models, we shouldn't use those models in those circumstances (because our oversight wouldn't work). In this case, if we want to be able to use CoT-based oversight, we might want to use a smaller model instead. We could figure out how small by measuring the faithfulness of CoT for different size models on our task, and decide if a sufficiently faithful model is still capable enough for our needs.

We might also be able to increase faithfulness and capability by making pareto improvements to the faithfulness-capability tradeoff described in "Question Decomposition Improves the Faithfulness of Model-Generated Reasoning".

> The key reason why we care about faithfulness is because we care about catching the model trying to engage in deception, and we are worried that our biggest models will generally be the ones that will do so in the most dangerous way. ... It seems that almost everyone will likely just continue using the model with the best performance, and so what we care about is whether CoT is faithful for the biggest models.

Deceptive alignment may require more serial steps of reasoning than straightforward task-completion. If we only use our largest models for the difficult tasks where their CoT is actually faithful (and smaller models otherwise), we expect deceptive alignment to be strictly more difficult than those tasks, and thus more likely to show up in the CoT if it is happening. (This is contingent on the Section 3 results holding up in general, where task A being more difficult than task B implies CoT faithfulness on task A is >= CoT faithfulness on task B.)

Replies from: sbowman

↑ comment by Sam Bowman (sbowman) · 2023-07-20T02:44:51.029Z

I agree, though I'll also add:

- I don't think our results clearly show that faithfulness goes down with model size, just that there's less affirmative evidence for faithfulness at larger model sizes, at least in part for predictable reasons related to the metric design. There's probably more lowish-hanging fruit involving additional experiments focused on scaling. (I realize this disagrees with a point in the post!)

- Between the good-but-not-perfect results here and the alarming results in the Turpin 'Say What They Think' paper, I think this paints a pretty discouraging picture of standard CoT as a mechanism for oversight. This isn't shocking! If we wanted to pursue an approach that relied on something like CoT, and we want to get around this potentially extremely cumbersome sweet-spot issue around scale, I think the next step would be to look for alternate training methods that give you something like CoT/FD/etc. but have better guarantees of faithfulness.

↑ comment by Seth Herd · 2023-07-20T16:29:09.233Z

Your first point seems absolutely critical. Could you elaborate a little?

Replies from: sbowman

↑ comment by Sam Bowman (sbowman) · 2023-07-21T05:05:08.577Z

Concretely, the scaling experiments in the first paper here show that, as models get larger, truncating or deleting the CoT string makes less and less difference to the model's final output on any given task.

So, stories about CoT faithfulness that depend on the CoT string being load-bearing are no longer very compelling at large scales, and the strings are pretty clearly post hoc in at least some sense.

This doesn't provide evidence, though, that the string is misleading about the reasoning process that the model is doing, e.g., in the sense that the string implies false counterfactuals about the model's reasoning. Larger models are also just better at this kind of task, and the tasks all have only one correct answer, so any metric that requires the model to make mistakes in order to demonstrate faithfulness is going to struggle. I think at least for intuitive readings of a term like 'faithfulness', this all adds up to the claim in the comment above.

Counterfactual-based metrics, like the ones in the Turpin paper, are less vulnerable to this, and that's probably where I'd focus if I wanted to push much further on measurement given what we know now. Though we already know from that paper that standard CoT in near-frontier models isn't reliably faithful by that measure.

We may be able to follow up with a few more results to clarify the takeaways about scaling, and in particular, I think just running a scaling sweep for the perturbed reasoning adding-mistakes metric from the Lanham paper here would clarify things a bit. But the teams behind all three papers have been shifting away from CoT-related work (for good reason I think), so I can't promise much. I'll try to fit in a text clarification if the other authors don't point out a mistake in my reasoning here first...

Replies from: RobertKirk

↑ comment by RobertKirk · 2023-07-31T13:35:22.384Z

To check I understand this, is another way of saying this that in the scaling experiments, there's effectively a confounding variable which is model performance on the task:

- Improving zero-shot performance decreases deletion-CoT-faithfulness

- The model will get the answer right with no CoT, so adding a prefix of correct reasoning is unlikely to change the output, hence a decrease in faithfulness

- Model scale is correlated with model performance.

- So the scaling experiments show model scale is correlated with less faithfulness, but probably via the correlation with model performance.

If you had a way of measuring the faithfulness conditioned on a given performance for a given model scale then you could measure how scaling up changes faithfulness. Maybe for a given model size you can plot performance vs faithfulness (with each dataset being a point on this plot), measure the correlation for that plot and then use that as a performance-conditioned faithfulness metric? Or there's likely some causally correct way of measuring the correlation between model scale and faithfulness while removing the confounder of model performance.
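The per-model-size correlation suggested here could be computed along the following lines. This is only a sketch of the idea, with one point per dataset; the numbers are invented placeholders chosen to be perfectly linear, not measurements from the paper, and Pearson correlation is an assumed choice of statistic.

```python
from math import sqrt
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


# One point per dataset for a single model size (placeholder values, NOT real data).
performance = [0.5, 0.6, 0.7, 0.8]
faithfulness = [0.8, 0.6, 0.4, 0.2]

r = pearson(performance, faithfulness)
```

Repeating this for each model size would give one correlation per scale, which could then be compared across scales.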

Replies from: sbowman, cfoster0

↑ comment by Sam Bowman (sbowman) · 2023-08-06T17:34:32.365Z

Yep, that sounds right! The measure we're using gets noisier with better performance, so even faithfulness-vs-performance breaks down at some point. I think this is mostly an argument to use different metrics and/or tasks if you're focused on scaling trends.

↑ comment by cfoster0 · 2023-07-31T15:27:46.830Z

If you have checkpoints from different points in training of the same models, you could do a comparison between different-size models at the same loss value (performance). That way, you're actually measuring the effect of scale alone, rather than scale confounded by performance.

Replies from: sbowman

↑ comment by Sam Bowman (sbowman) · 2023-08-06T17:36:12.234Z

That makes sense, though what's at stake with that question? In almost every safety-relevant context I can think of, 'scale' is just used as a proxy for 'the best loss I can realistically achieve in a training run', rather than as something we care about directly.

↑ comment by Ethan Perez (ethan-perez) · 2023-07-21T04:48:42.729Z

> CoT provides pretty little safety guarantee at the relevant scales

Even if faithfulness goes down at some model scale for a given task, that doesn't mean that we'll be using models at that scale (e.g., for cost reasons or since we might not have models at a large scale yet). The results on the addition task show that there are some task difficulties for which even the largest models we tested don't start to show lower faithfulness, and people will be pushing the difficulties of the tasks they use models on as they get better. So it seems likely to me that no matter the model scale, people will be using models on some tasks where they'll have faithful reasoning (e.g., tasks near the edge of that model's abilities).

> It seems that almost everyone will likely just continue using the model with the best performance

If you're using the model in a high-stakes setting and you're an aligned actor, it's nice to be able to make tradeoffs between performance and safety. For example, you might care more about safety properties than raw capabilities if you're an alignment researcher at an AGI lab who's trying to make progress on the alignment problem with AIs.

comment by Chris_Leong · 2023-07-19T07:45:44.224Z

Do you have a theory for why chain-of-thought decomposition helps?

Replies from: anshuman-radhakrishnan-1

↑ comment by Ansh Radhakrishnan (anshuman-radhakrishnan-1) · 2023-07-19T18:53:39.128Z

Honestly, I don't think we have any very compelling ones! We gesture at some possibilities in the paper, such as it being harder for the model to ignore its reasoning when it's in an explicit question-and-answer format (as opposed to a more free-form CoT), but I don't think we have a good understanding of why it helps.

It's also worth noting that CoT decomposition helps mitigate the ignored reasoning problem, but actually is more susceptible to biasing features in the context than CoT. Depending on how you weigh the two, it's possible that CoT might still come out ahead on reasoning faithfulness (we chose to weigh the two equally).

comment by Satron · 2025-01-13T16:29:47.327Z

This article provides a concrete, object-level benchmark for measuring the faithfulness of CoT. In addition to that, a new method for improving CoT faithfulness is introduced (something that is mentioned in a lot of alignment plans).

The method is straightforward and relies on breaking questions into subquestions. Despite its simplicity, it is surprisingly effective.

In the future, I hope to see alignment plans relying on CoT faithfulness incorporate this method into their toolkit.

comment by Orpheus16 (akash-wasil) · 2023-07-21T19:50:50.672Z

I’m excited to see both of these papers. It would be a shame if we reached superintelligence before anyone had put serious effort into examining if CoT prompting could be a useful way to understand models.

I also had some uneasiness while reading some sections of the post. For the rest of this comment, I’m going to try to articulate where that’s coming from.

> Overall, our results suggest that CoT can be faithful if the circumstances such as the model size and task are carefully chosen.

I notice that I’m pretty confused when I read this sentence. I didn’t read the whole paper, and it’s possible I’m missing something. But one reaction I have is that this sentence presents an overly optimistic interpretation of the paper’s findings.

The sentence makes me think that Anthropic knows how to set the model size and task in such a way that it guarantees faithful explanations. Perhaps I missed something when skimming the paper, but my understanding is that we’re still very far away from that. In fact, my impression is that the tests in the paper are able to detect unfaithful reasoning, but we don’t yet have strong tests to confirm that a model is engaging in faithful reasoning.

Even supposing that the last sentence is accurate, I think there are a lot of possible “last sentences” that could have been emphasized. For example, the last sentence could have read:

- “Overall, our results suggest that CoT is not reliably faithful, and further research will be needed to investigate its promise, especially in larger models.”

- “Unfortunately, this finding suggests that CoT prompting may not yet be a promising way to understand the internal cognition of powerful models.” (note: “this finding” refers to the finding in the previous sentence: “as models become larger and more capable, they produce less faithful reasoning on most tasks we study.”)

- “While these findings are promising, much more work on CoT is needed before it can be used as a technique to reliably produce faithful explanations, especially in larger models.”

I’ve had similar reactions when reading other posts and papers by Anthropic. For example, in Core Views on AI Safety, Anthropic presents (in my opinion) a much rosier picture of Constitutional AI than is warranted. It also nearly-exclusively cites its own work and doesn’t really acknowledge other important segments of the alignment community.

But Akash, aren’t you just being naive? It’s fairly common for scientists to present slightly-overly-rosy pictures of their research. And it’s extremely common for profit-driven companies to highlight their own work in their blog posts.

I’m holding Anthropic to a higher standard. I think this is reasonable for any company developing technology that poses a serious existential risk. I also think this is especially important in Anthropic’s case. Anthropic has noted that their theory of change heavily involves: (a) understanding how difficult alignment is, (b) updating their beliefs in response to evidence, and (c) communicating alignment difficulty to the broader world.

To the extent that we trust Anthropic not to fool themselves or others, this plan has a higher chance of working. To the extent that Anthropic falls prey to traditional scientific or corporate cultural pressures (and it has incentives to see its own work as more promising than it actually is), this plan is weakened. As noted elsewhere, epistemic culture also has an important role in many alignment plans (see Carefully Bootstrapped Alignment is organizationally hard).

(I’ll conclude by noting that I commend the authors of both papers, and I hope this comment doesn’t come off as overly harsh toward them. The post inspired me to write about a phenomenon I’ve been observing for a while. I’ve been gradually noticing similar feelings over time after reading various Anthropic comms, talking to folks who work at Anthropic, seeing Slack threads, analyzing their governance plans, etc. So this comment is not meant to pick on these particular authors. Rather, it felt like a relevant opportunity to illustrate a feeling I’ve often been noticing.)

comment by Review Bot · 2024-07-22T13:15:38.878Z

The LessWrong Review runs every year to select the posts that have most stood the test of time. This post is not yet eligible for review, but will be at the end of 2024. The top fifty or so posts are featured prominently on the site throughout the year.

Hopefully, the review is better than karma at judging enduring value. If we have accurate prediction markets on the review results, maybe we can have better incentives on LessWrong today. Will this post make the top fifty?