AI: Practical Advice for the Worried

post by Zvi · 2023-03-01T12:30:00.703Z · 49 comments

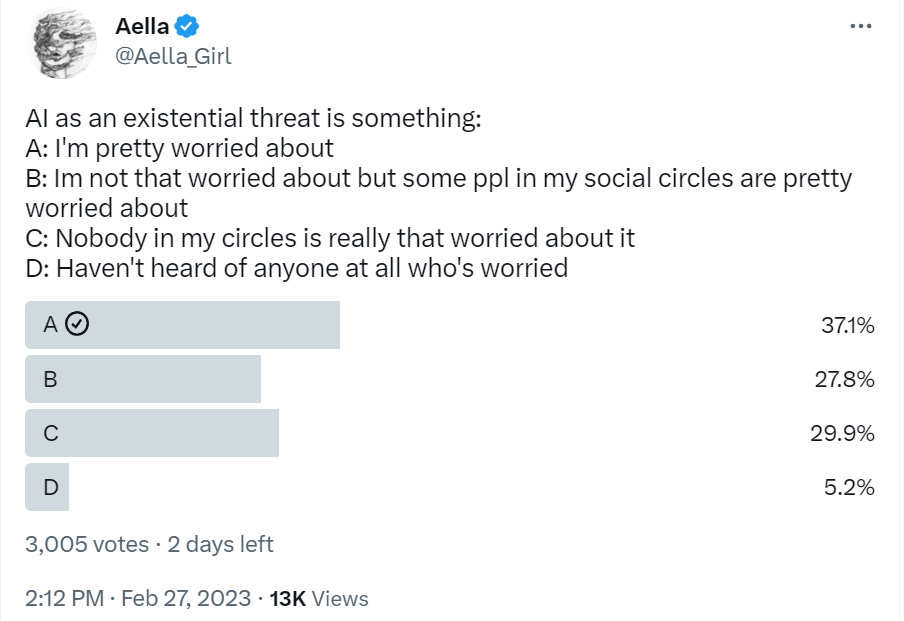

Some people (although very far from all people) are worried that AI will wipe out all value in the universe.

Some people, including some of those same people, need practical advice.

A Word On Thinking For Yourself

There are good reasons to worry about AI. This includes good reasons to worry about AI wiping out all value in the universe, or AI killing everyone, or other similar very bad outcomes.

There are also good reasons that AGI, or otherwise transformational AI, might not come to pass for a long time.

As I say in the Q&A section later, I do not consider imminent transformational AI inevitable in our lifetimes: Some combination of ‘we run out of training data and ways to improve the systems, and AI systems max out at not that much more powerful than current ones’ and ‘turns out there are regulatory and other barriers that prevent AI from impacting that much of life or the economy that much’ could mean that things during our lifetimes turn out to be not that strange. These are definitely world types my model says you should consider plausible.

There is also the highly disputed question of how likely it is that if we did create an AGI reasonably soon, it would wipe out all value in the universe. There are what I consider very good arguments that this is what happens unless we solve extremely difficult problems to prevent it, and that we are unlikely to solve those problems in time. Thus I believe this is very likely, although there are some (such as Eliezer Yudkowsky) who consider it more likely still.

That does not mean you should adopt my position, or anyone else’s, or rely mostly on social cognition from those around you to settle such questions, no matter what those methods would tell you. If this is something that is going to impact your major life decisions, or keep you up at night, you need to develop your own understanding and model, and decide for yourself what you predict.

Reacting Properly To Such Information is Hard

People who do react by worrying about such AI outcomes are rarely reacting about right given their beliefs. Calibration is hard.

Many effectively suppress this info, cutting the new information about the future off from the rest of their brain. They live their lives as if such risks do not exist.

There are much worse options than this. It has its advantages. It leaves value on the table, both personally and for the world. In exchange, one avoids major negative outcomes that potentially include things like missing out on the important things in life, ruining one’s financial future and bouts of existential despair.

It also avoids the risk of doing ill-advised, counterproductive things in the name of helping with the problem.

Remember that the default outcome of those working in AI in order to help is to end up working primarily on capabilities, and making the situation worse.

That does not mean that you should not make any attempt to improve our chances. It does mean that you should consider your actions carefully when doing so, and the possibility that you are fooling yourself. Remember that you are the easiest person to fool.

While some ignore the issue, others, in various ways, dramatically overreact.

I am going to step up here and dare to answer the questions people have been asking, including those added by Twitter and some raised recently in personal conversations.

Before I begin, it must be said: NONE OF THIS IS INVESTMENT ADVICE.

Overview

There is some probability that humanity will create transformational AI soon, for various definitions of soon. You can and should decide what you think that probability is, and conditional on that happening, your probability of various outcomes.

Many of these outcomes, both good and bad, will radically alter the payoffs of various life decisions you might make now. Some such changes are predictable. Others not.

None of this is new. We have long lived under the very real threat of potential nuclear annihilation. The employees of the RAND Corporation, who worked on nuclear strategic planning, famously did not contribute to their retirement accounts because they did not expect to live long enough to need them. Given what we know now about the close calls of the Cold War, and what they knew at the time, perhaps this was not so crazy a perspective.

Should this imminent small but very real risk radically change your actions? I think the answer here is a clear no, unless your actions are relevant to nuclear war risks, either personally or globally, in some way, in which case one can shut up and multiply.

This goes back far longer than that. Various religious folks have long expected Judgment Day to arrive soon, often with a date attached. Often they made poor decisions in response to this, even given their beliefs.

Some people talk or feel this same way about climate change, treating it as an impending, inevitable extinction event for humanity.

Under such circumstances, I would center my position on a simple claim: Normal Life is Worth Living, even if you think P(doom) relatively soon is very high.

Normal Life is Worth Living

One still greatly benefits from having a good ‘normal’ life, with a good ‘normal’ future.

Some of the reasons:

- A ‘normal’ future could still happen.

- It is psychologically very important to you today that, if a ‘normal’ future does happen, you are ready for it on a personal level.

- The future happens far sooner than you think. Returns to normality accrue quickly. So does the price for burning the candle at both ends.

- Living like there is no (normal) tomorrow rapidly loses its luster. It can be fun for a day, perhaps a week or even a month. Years, not so much.

- If you are not ready for a normal future, this fact will stress you out.

- It will constrain your behavior if problems start to loom on that horizon.

- It is important to those who love you that you are ready for it on a personal level.

- It is important for those evaluating or interacting with you, on a professional level.

- You will lose your ability to relate to people and the world if you don’t do this.

- It will become difficult to admit you made a mistake, if the consequences of doing so seem too dire.

More generally, on a personal level: There are not good ways to sacrifice quite a lot of utility in the normal case, and in exchange get good experiential value in unusual cases. Moving consumption forward, taking on debt or other long term problems and other tactics like that can be useful on the margin but suffer rapidly decreasing marginal returns. Even the most extreme timeline expectations I have seen – where high probability is assigned to doom within the decade – are long enough for this to catch up to you.

More generally, in terms of helping: Burning yourself out, stressing yourself out, tying yourself up in existential angst all are not helpful. It would be better to keep yourself sane and healthy and financially intact, in case you are later offered leverage. Fighting the good fight, however doomed it might be, because it is a far, far better thing to do, is also a fine response, if you keep in mind how easy it is to end up not helping that fight. But do that while also living a normal life, even if that might seem indulgent. You will be more effective for it, especially over time.

In short, the choice is clear.

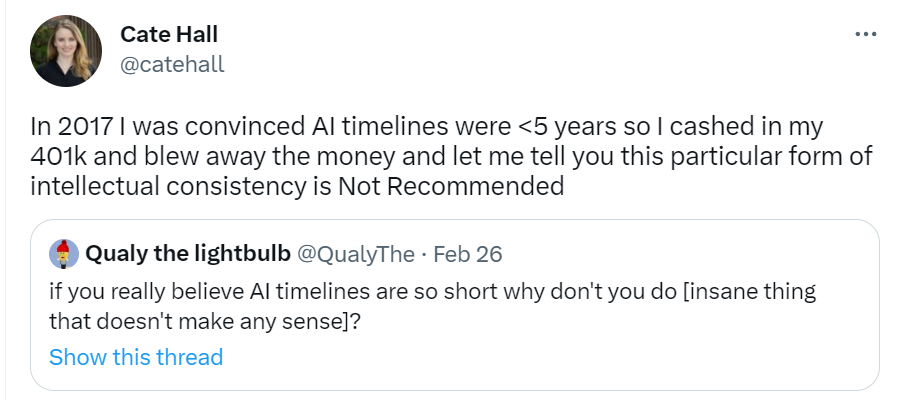

Don’t be like past Cate Hall (in this particular way – in general she’s pretty cool).

It wasn’t Cate alone back then. I witnessed some of it. Astrid Wilde reports dozens of such cases, in a situation where (as Cate readily now admits) the positions in question made little physical sense, and were (in my model) largely the result of a local social information cascade. Consider the possibility that this is happening again, for your model, not merely for your actions.

One really bad reason to burn your bridges is to satisfy people who ask why you haven’t burned your bridges.

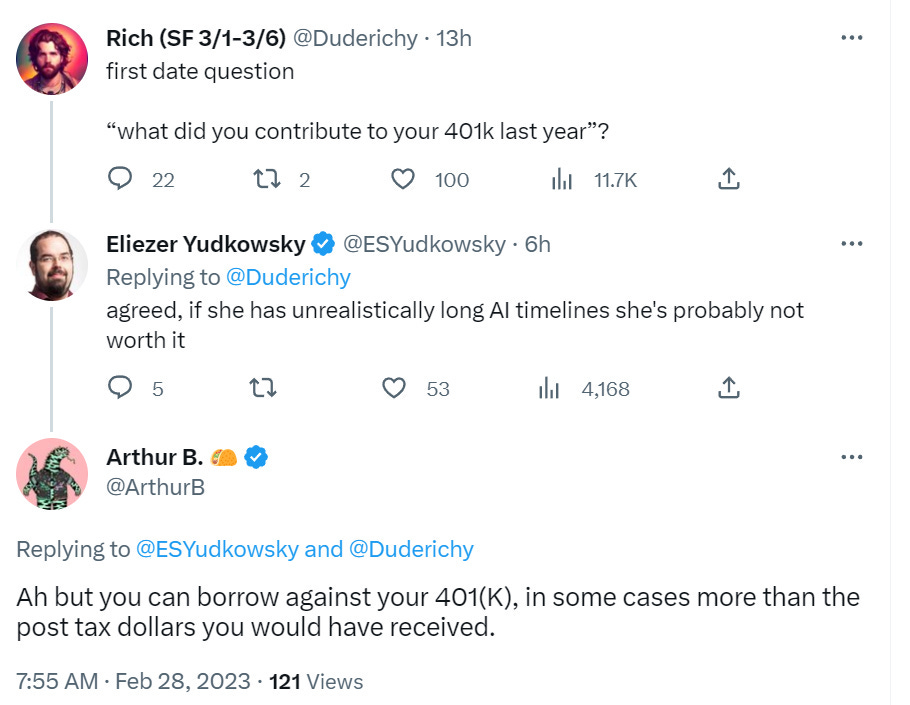

I worry about people taking jokes like this seriously. Also, if you must overextend, Arthur makes a strong point here:

Similar to Digital Abundance, Samo emphasizes the dangers of intellectual inconsistency in places like this.

If people say the world is definitely for sure ending real soon now, then yes, talk is cheap and one should check if they are following through. If people say the world might be ending and it is hard to know exactly when, then this kind of thing easily turns into a gotcha, a conflation of the two positions and their implications – a lot of normal behaviors will still make sense. Whereas it is a natural move to end up thinking ‘well, if no one is willing to bet their life on this definitely happening, then I don’t have to take the possibility it happens into account.’ Except, yeah, you still do.

Thus, as Vassar also points out here, if you did end up so confident that talk would have been cheap, you should question both how you ended up with such confident assumptions about the future, especially after they don’t come to pass, and whether your actions made sense even given your assumptions. Also remember that someone is making statements against interest, admitting they were very wrong, in order to be helpful to others. So, as Cate responds, chill out bro.

On to individual questions to flesh all this out.

Q&A

Q: Should I still save for retirement?

Short Answer: Yes.

Long Answer: Yes, to most (but not all) of the extent that this would otherwise be a concern and action of yours in the ‘normal’ world. It would be better to say ‘build up asset value over time’ than ‘save for retirement’ in my model. Building up assets gives you resources to influence the future on all scales, whether or not retirement is even involved. I wouldn’t get too attached to labels.

Remember that, while it is not something one should do lightly (and none of this is light), in an extreme enough ‘endgame’ situation you can raid retirement accounts with what is, in context, a modest penalty. It does not even take that many years for the expected value of the compounded tax advantages to exceed the withdrawal penalty. The cost of emptying the account, should you need to do that, is only 10% of funds and about a week (plus now having to pay taxes on it). In some extreme future situations, having that cash would be highly valuable. None of this suggests now is the time to empty the account, or to not build it up.
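The claim about the withdrawal penalty is arithmetic you can check for your own situation. Below is a minimal Python sketch under heavily simplified, hypothetical assumptions: a traditional (pre-tax) US-style retirement account with a flat 10% early-withdrawal penalty, compared against taking the same pre-tax dollars as income and investing them in a taxable account where gains are taxed only once, at sale. It ignores dividend taxes along the way, state taxes and penalty exceptions, and every rate in the example is made up for illustration.

```python
def traditional_after_early_withdrawal(amount, r, years, withdraw_tax, penalty=0.10):
    """Pre-tax dollars grow untaxed, then pay income tax plus the penalty on withdrawal."""
    return amount * (1 + r) ** years * (1 - withdraw_tax - penalty)


def taxable_after_sale(amount, r, years, contribute_tax, cap_gains_tax):
    """The same pre-tax dollars, taxed as income first; gains are taxed once, at sale."""
    invested = amount * (1 - contribute_tax)
    value = invested * (1 + r) ** years
    return value - cap_gains_tax * (value - invested)


def breakeven_years(r, contribute_tax, withdraw_tax, cap_gains_tax, penalty=0.10, max_years=100):
    """Smallest whole number of years after which raiding the account (penalty included)
    leaves at least as much as the taxable alternative, or None within max_years."""
    for years in range(max_years + 1):
        raided = traditional_after_early_withdrawal(1.0, r, years, withdraw_tax, penalty)
        taxable = taxable_after_sale(1.0, r, years, contribute_tax, cap_gains_tax)
        if raided >= taxable:
            return years
    return None


if __name__ == "__main__":
    # Hypothetical inputs: 7% annual return, 24% marginal rate when contributing,
    # 15% average rate on the eventual withdrawal, 15% capital gains rate.
    print(breakeven_years(r=0.07, contribute_tax=0.24, withdraw_tax=0.15, cap_gains_tax=0.15))  # prints 2
```

The result is sensitive to these inputs, especially the gap between your tax rate when contributing and when withdrawing, so treat this as a calculator for your own numbers rather than as a general answer.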

The case for saving money does not depend on expecting a future ‘normal’ world. Which is good, because even without AI the future world is likely to not be all that ‘normal.’

Q: Should I take on a ton of debt intending to never have to pay it back?

Short Answer: No, except for a mortgage.

Long Answer: Mostly no, except for a mortgage. Save your powder. See my post On AI and Interest Rates for an extended treatment of this question. I feel that is a definitive answer to the supposed ‘gotcha’ question of why doomers don’t take on lots of debt. The ability to take on a bunch of debt is a limited resource, and good ways to do it are even more limited for most of us. Yes, where you get the opportunity it would be good to lock in long borrow periods at fixed rates if you think things are about to get super weird. But if your plan is ‘the market will realize what is happening and adjust the value of my debt in time for me to profit’ that does not seem, to me, like a good plan. Nor does borrowing now much change your actual constraints on where you run out of money.

Does borrowing money that you have to pay back in 2033 mean you have more money to spend? That depends. What is your intention if 2033 rolls around and the world hasn’t ended? Are you going to pay it back? If so then you need to prepare now to be able to do that. So you didn’t accomplish all that much.

You need very high confidence in High Weirdness Real Soon Now before you can expect to get net rewarded for putting your financial future on quicksand, where you are in real trouble if you get the timing wrong. You also need a good way to spend that money to change the outcome.

Yes, there is a level of confidence in both speed and magnitude, combined with a good way to spend, that would change that, and that I do not believe is warranted. One must notice that you need vastly less certainty than this to be shouting about these issues from the rooftops, or devoting your time to working on them.

Eliezer’s position, as per his most recent podcast, is something like ‘AGI could come very soon, seems inevitable by 2050 barring civilizational collapse, and if it happens we almost certainly all die.’ Suppose you really actually believed that. It’s still not enough to do much with debt unless you have a great use of money: there’s still a lot of probability mass that the money is due back while you’re still alive, potentially right before it might matter.
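To see why, here is a toy Python calculation. The distribution is a hypothetical assumption for illustration, not Eliezer’s stated view: AGI arrives at a uniformly random time between 2023 and 2050, and if it arrives before the loan is due, everyone dies with some fixed probability. Ordinary mortality and every other risk are ignored.

```python
def prob_alive_when_loan_due(start_year, due_year, latest_agi_year, p_doom_given_agi):
    """Chance you are still alive when a loan comes due, under a toy model.

    Hypothetical assumptions:
    - AGI arrives uniformly at random between start_year and latest_agi_year.
    - If it arrives before due_year, everyone dies with probability p_doom_given_agi.
    - Nothing else (ordinary mortality, other catastrophes) is modeled.
    """
    window = latest_agi_year - start_year
    years_until_due = max(due_year - start_year, 0)
    p_agi_before_due = min(years_until_due / window, 1.0)
    return 1.0 - p_agi_before_due * p_doom_given_agi


if __name__ == "__main__":
    # A loan taken in 2023 and due in 2033, with a 95% chance of doom given AGI.
    p = prob_alive_when_loan_due(2023, 2033, 2050, 0.95)
    print(f"{p:.2f}")  # prints 0.65
```

Even with a near-certain doom conditional, this toy model leaves roughly a two-in-three chance that the bill arrives while you are still around to pay it.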

This also changes if you think you can actually change the outcome for the better by spending money now: money then loses impact over time, so your discount rate should be high. That, however, does not seem to be the case I see being made.

Q: Does buying a house make sense?

A: Maybe. It is an opportunity to borrow money at low interest rates with good tax treatment. It also potentially ties up capital and ties you down to a particular location, and is not as liquid as some other forms of capital. So ask yourself how psychologically hard it would be to undo that. In terms of whether it looks like a good investment in a world with useful but non-transformational AI, an AI could figure out how to more efficiently build housing, but would that cause more houses to be built?

Q: Does it make sense to start a business?

A: Yes, although not because of AI. It is good to start a business. Of course, if the business is going to involve AI, carefully consider whether you are making the situation worse.

Q: Does It Still Make Sense to Try and Have Kids?

Short Answer: Yes.

Long Answer: Yes. Kids are valuable and make the world and your own world better, even if the world then ends. I would much rather exist for a bit than never exist at all. Kids give you hope for the future and something to protect, get you to step up. They get others to take you more seriously. Kids teach you many things that help one think better about AI. You think they take away your free time, but there is a limit to how much creative work one can do in a day. This is what life is all about. Missing out on this is deeply sad. Don’t let it pass you by.

Is there a level of working directly on the problem, or being uniquely positioned to help with the problem, where I would consider changing this advice? Yes, there are a few names where I think this is not so clear, but I am thinking of a very small number of names right now, and yours is not one of them.

You can guess how I would answer most other similar questions. I do not agree with Buffy Summers that the hardest thing in this world is to live in it. I do think she knows better than any of us that not living in this world is not the way to save it.

Q: Should I talk to my kids about how there’s a substantial chance they won’t get to grow up?

A: I would not (and will not) hide this information from my kids, any more than I would hide the risk from nuclear war, but ‘you may not get to grow up’ is not a helpful thing to say to (or to emphasize to) kids. Talking to your kids about this (in the sense of ‘talk to your kids about drugs’) is only going to distress them to no purpose. While I don’t believe in hiding stuff from kids, I also don’t think this is something it is useful to hammer into them. Kids should still get to be and enjoy being kids.

Q: If we believe our odds of good outcomes are low, is it cruel to explain what is coming to the normies in our lives? If grandma is on her deathbed, do you tell her there probably isn’t a heaven? What’s the point of changing minds when mind-changing was needed a decade ago?

A: When in doubt I tend to favor more honesty and openness, but there is no need to shove such things in people’s faces. If grandma asks me on her deathbed if there is a heaven, I am not going to lie to her. I also think it would be cruel to bring the subject up if she wasn’t asking and it wasn’t impacting her decisions or experience in negative ways. So if there are minds that it would not be helpful to change, I’d mostly be inclined to let them be by default. I’d also ask, would this person want to know? Some people would want to know. Others would not.

Q: Should I just try to have a good time while I can?

A: No, because my model says that this doesn’t work. It is empty. You can have fun for a day, a week, a month, perhaps a year, but after a while it rings hollow, feels empty, and your future will fill you with dread. Certainly it makes sense to shift this on the margin, get your key bucket list items in early, put a higher marginal priority on fun, even more so than you should have been doing anyway. But I don’t think my day-to-day life experience would improve for very long by taking this kind of path. Then again, each of us is different.

That all assumes you have ruled out attempting to improve our chances. Personally, even if I had to go down, I’d rather go down fighting. Insert rousing speech here.

Q: How Long Do We Have? What is the Timeline?

Short Answer: Unknown. Look at the arguments and evidence. Form your own opinion.

Long Answer: High uncertainty about when this will happen if it happens, whether or not one has high uncertainty about whether it happens at all within our lifetimes. Eliezer’s answer was that he would be very surprised if it didn’t happen by 2050, but that within that range little would surprise him and he has low confidence. Others have longer or shorter means and medians in their timelines. Mine are substantially longer and less confident than Eliezer’s. This is a question you must decide for yourself. The key is that there is uncertainty, so lots of different scenarios matter.

Q: Should I invest in AI companies?

Short Answer: Not if the company could plausibly be funding constrained.

Long Answer: Investing in AI companies, by default, gives them more funding and more ambition, and thus accelerates AI. That’s bad, and a good reason not to invest in them. Any AI company that is a good investment and maximizing profits is not something to be encouraged. If you were purely profit maximizing and were dismissive of the risks from AI, that would be different, but these questions assume a different perspective. The exception is that the Big Tech companies (Google, Amazon, Apple, Microsoft, although importantly not Facebook, seriously f*** Facebook) have essentially unlimited cash, and their funding situation changes little (if at all) based on their stock price.

Q: Have you made an AI ETF so we can at least try to leverage some capital to help if things start to ramp up?

A: No. As noted above, that does not seem like a net-positive thing to do.

Q: Are there any ‘no regrets’ steps you should take, similar to stocking up on canned goods? Would this include learning to code if you’re not a coder, or learning something else instead if you are a coder?

Short Answer: Not that you shouldn’t have done anyway.

Long Answer: Keeping your situation flexible, and being mentally ready to change things if the world changes radically, is probably what would count here. On the margin I would learn to code rather than learn things other than how to code. Good coding will help you keep up with events, help you get mundane utility from whatever happens, and if AI wipes out demand for coding then that will be the least of your worries. That seems like good advice regardless of what you expect from AI.

Q: When will my job be replaced by AGI? When should I switch to a physical skill?

Short Answer: Impossible to know the timing on this. AI should be a consideration in choice of jobs and careers when choosing anew, but I wouldn’t abandon your job just yet.

Long Answer: All the previous predictions about which jobs would go away now seem wrong. All the predictions of mass unemployment around the corner, for now, are still also wrong. We don’t know if AI will effectively eliminate a lot of jobs or which jobs they will be, or what jobs or how many jobs it will create or that will arise with our new wealth and newly available labor, or what the net effect will be. If you are worried about AI coming for your particular job, do your best to model that given what we know. If you stay ahead of the curve learning to use AI to improve your performance, that should also help a lot.

Q: How should I weigh long-term career arc considerations? If things don’t get so crazy that none of my life plans make sense any more, what does the world look like? What kind of world should I be hedging on? Is there a way to prepare for the new world order if we are not all dead?

A: The worlds in which one’s life plans still make sense are the worlds that continue to look ‘normal.’ They contain powerful LLMs and better search and AI art and such, but none of that changes the basic state of play. Some combination of ‘we run out of training data and ways to improve the systems, and they max out at not that much more powerful’ and ‘turns out there are regulatory and other barriers that prevent AI from impacting that much of life or the economy that much’ makes things not look so strange during your lifetime. These are definitely world types my model says you should consider plausible. There is also the possibility over one’s lifetime of things like civilizational inadequacy and collapse, economic depression or hyperinflation, war (or even nuclear war) or otherwise some major disaster that changes everything. If you want to fully cover your bases, that is an important base.

That does not mean that the standard ‘normal’ long-term career arc considerations ever made sense in the first place. Even in worlds in which AI does not much matter, and the world still looks mostly like it looks, standard ‘long-term career arc considerations’ thinking seems quite poor – people don’t often start businesses, people don’t focus on equity, people don’t emphasize skill development enough, and so on. And even if AI does not much matter, the chances we have decades more of ‘nothing that important changes much’ still seem rather low.

If there is a new world order – AI or something else changes everything – and we are not all dead, how do you prepare for that? Good question. What does such a world look like? Some such worlds you don’t have to prepare and it is fine. Others, it is very important that you start with capital. Keeping yourself healthy, cultivating good habits and remaining flexible and grounded are probably some good places to start.

Q: What is the personal cost of being wrong?

A: Definitely a key consideration, as well as the non-personal cost of being wrong, and the value of being right, and the probability you are right versus wrong. Make sure your actions have positive expected value, for whatever you place value upon.

Q: How would you rate the ‘badness’ of doing the following actions: Direct work at major AI labs, working in VC funding AI companies, using applications based on the models, playing around and finding jailbreaks, things related to jobs or hobbies, doing menial tasks, having chats about the cool aspects of AI models?

A: Ask yourself what you think accelerates AI to what extent, and what improves our ability to align one to what extent. This is my personal take only – you should think about what your model says about the things you might do. So here goes. Working directly on AI capabilities, or working directly to fund work on AI capabilities, both seem maximally bad, with ‘which is worse’ being a question of scope. Working on the core capabilities of the LLMs seems worse than working on applications and layers, but applications and layers are how LLMs are going to get more funding and more capabilities work, so the more promising the applications and layers, the more I’d worry. Similarly, if you are spreading the hype about AI in ways that advance its use and drive more investment, that is not great, but seems hard to do that much on such fronts on the margin unless you are broadcasting in some fashion, and you would presumably also mention the risks at least somewhat.

I think tinkering around with the systems, trying to jailbreak or hack them or test their limits, is generally a good thing. Such work differentially helps us understand and potentially align such systems, more than it advances capabilities, especially if you are deliberate with what you do with your findings. Using existing AI for mundane utility is not something I would worry about the ‘badness’ of, if you want some AI art or AI coding or writing or search help then go for it. Mostly talking to others about cool things seems fine.

Q: Are these questions answerable right now, or should I leave my options open until they become more answerable down the line? What is my tolerance for risk and uncertainty of outcome, and how should this play into my decisions about these questions?

A: One must act under uncertainty. There is no certainty or safety anywhere, under any actions, not really. People crave the illusion of safety, the feeling everything is all right. One needs to find a way to get past this desire without depending on lies. What does ‘tolerance for risk’ mean in context? Generally it means social or emotional risk, or risk under the baseline normal scenarios or something like that. If you can’t handle that kind of thing, and it would negatively impact you, then consider that when deciding whether to do it or try to do it. Especially don’t do short-term, superficially ‘fun’ things that this would prevent you from enjoying, or take on burdens you can’t handle.

Q: How do you deal with the distraction of all this and still go about your life?

A: The same way you avoid being distracted by all the other important and terrible things and risks out there. Nuclear risks are a remarkably similar problem many have had to deal with. Without AI and even with world peace, the planetary death rate would still be expected to hold steady at 100%. Memento mori.

49 comments

comment by Zvi · 2024-12-25T19:27:33.513Z

I find myself linking back to this often. I don't fully endorse quite everything here anymore, but the core messages still seem true even with things seeming further along.

I do think it should likely get updated soon for 2025.

↑ comment by Charbel-Raphaël (charbel-raphael-segerie) · 2025-01-05T01:01:35.687Z

What don't you fully endorse anymore?

↑ comment by rai (nonveumann) · 2025-01-05T03:34:27.687Z

update please!

comment by Droopyhammock · 2023-03-01T22:29:07.311Z

A consideration which I think you should really have in regards to whether you have kids or not is remembering that s-risks are a thing. Personally, I feel very averse to the idea of having children, largely because I feel very uncomfortable about the idea of creating a being that may suffer unimaginably.

There are certainly other things to bear in mind, like the fact that your child may live for billions of years in utopia, but I think that you really have to bear in mind that extremely horrendous outcomes are possible.

It seems to me that the likelihood of s-risks is not very agreed upon, with multiple people who have looked into them considering them of similar likelihood to x-risks, while others consider them far more unlikely. In my opinion the likelihood of s-risks is not negligible and should be a consideration when having children.

↑ comment by Anirandis · 2023-04-01T18:33:08.168Z

I'm a little confused by the agreement votes with this comment - it seems to me that the consensus around here is that s-risks in which currently-existing humans suffer maximally are very unlikely to occur. This seems an important practical question; could the people who agreement-upvoted elaborate on why they find this kind of thing plausible?

The examples discussed in e.g. the Kaj Sotala interview linked later down the chain tend to regard things like "suffering subroutines", for example.

↑ comment by Benjy Forstadt · 2023-04-02T03:06:45.400Z

The assumption that a misaligned AI will choose to kill us may be false. It would be very cheap to keep us alive/keep copies of us and it may find running experiments on us marginally more valuable. See "More on the 'human experimentation' s-risk":

↑ comment by Jacob Watts (Green_Swan) · 2023-03-02T03:49:46.268Z

You said that multiple people have looked into s-risks and consider them of similar likelihood to x-risks. That is surprising to me and I would like to know more. Would you be willing to share your sources?

↑ comment by Droopyhammock · 2023-03-02T11:16:55.886Z

S-risks can cover quite a lot of things. There are arguably s-risks which are less bad than x-risks, because although there are astronomical amounts of suffering, it may be dwarfed by the amount of happiness. Using common definitions of s-risks, if we simply took Earth and multiplied it by 1000 so that we have 1000 Earths, identical to ours with the same amount of organisms, it would be an s-risk. This is because the amount of suffering would be 1000 times greater. It seems to me that when people talk about s-risks they often mean somewhat different things. S-risks are not just “I have no mouth and I must scream” scenarios, they can also be things like the fear that we spread wild animal suffering to multiple planets through space colonisation. Because of the differences in definitions people seem to have for s-risks, it is hard to tell what they mean when they talk about the probability of them occurring. This is made especially difficult when they compare them to the likelihood of x-risks, as people have very different opinions on the likelihood of x-risks.

Here are some sources:

From an episode of the AI alignment podcast called “Astronomical future suffering and superintelligence with Kaj Sotala”: https://futureoflife.org/podcast/podcast-astronomical-future-suffering-and-superintelligence-with-kaj-sotala/

Lucas: Right, cool. At least my understanding is, and you can correct me on this, is that the way that FRI sort of leverages what it does is that ... Within the effective altruism community, suffering risks are very large in scope, but it's also a topic which is very neglected, but also low in probability. Has FRI really taken this up due to that framing, due to its neglectedness within the effective altruism community?

Kaj: I wouldn't say that the decision to take it up was necessarily an explicit result of looking at those considerations, but in a sense, the neglectedness thing is definitely a factor, in that basically no one else seems to be looking at suffering risks. So far, most of the discussion about risks from AI and that kind of thing has been focused on risks of extinction, and there have been people within FRI who feel that risks of extreme suffering might actually be very plausible, and may be even more probable than risks of extinction. But of course, that depends on a lot of assumptions.

From an article (and the corresponding talk) by Max Daniel, “S-risks: Why they are the worst existential risks, and how to prevent them”: https://longtermrisk.org/s-risks-talk-eag-boston-2017/

Part of the article focuses on the probability of s-risks, which starts by saying “I’ll argue that s-risks are not much more unlikely than AI-related extinction risk. I’ll explain why I think this is true and will address two objections along the way.”

Here are some more related sources: https://centerforreducingsuffering.org/research/intro/

There is also a subreddit for s-risks. In this post, UHMWPE-UwU (who created the subreddit) has a comment which says the “prevalent current assumption in the alignment field seems to be that their likelihood is negligible, but clearly not the case especially with near-miss/failed alignment risks”:

https://www.reddit.com/r/SufferingRisk/comments/10uz5fn/general_brainstormingdiscussion_post_next_steps/

↑ comment by johnlawrenceaspden · 2023-03-02T14:43:38.077Z

Doesn't any such argument also imply that you should commit suicide?

↑ comment by Droopyhammock · 2023-03-02T18:37:50.002Z

Not necessarily.

Suicide will not save you from all sources of s-risk and may make some worse, for example if quantum immortality is true. If resurrection is possible, things become more complicated still.

The possibility of extremely large amounts of value should also be considered. If alignment is solved and we can all live in a utopia, then killing yourself could deprive you of billions+ years of happiness.

I would also argue that choosing to stay alive when you know of the risk is different from inflicting the risk on a new being you have created.

With that being said, suicide is a conclusion you could come to. To be completely honest, it is an option I heavily consider. I fear that LessWrong and the wider alignment community may have underestimated the likelihood of s-risks by a considerable amount.

↑ comment by avturchin · 2023-03-06T23:02:37.710Z

AI may kill all humans, but it will preserve all our texts forever; it may even internalise them as training data. Thus it is rational either to publish as much as possible or to write nothing.

--

Cowardy AI can create my possible children even if I don't have children.

comment by KurtiRussell (henningschuler@gmail.com) · 2023-03-10T11:04:56.210Z

There is a (likely spurious) quote of Martin Luther saying: "If I knew the world ended tomorrow, I would still plant a little apple tree today." which I find sums this post up neatly!

comment by James_Miller · 2023-03-03T03:15:34.444Z

"The exception is that the Big Tech companies (Google, Amazon, Apple, Microsoft, although importantly not Facebook, seriously f*** Facebook) have essentially unlimited cash, and their funding situation changes little (if at all) based on their stock price." The stock price of companies does influence how much they are likely to spend, because the higher the price, the less current owners have to dilute their holdings to raise a given amount of additional funds through issuing more stock. But your purchasing stock in a big company has zero (not small but zero) impact on the stock price, so don't feel at all bad about buying Big Tech stock.

↑ comment by RHollerith (rhollerith_dot_com) · 2023-03-03T04:12:56.890Z

But your purchasing stock in a big company has zero (not small but zero) impact on the stock price so don’t feel at all bad about buying Big Tech stock.

I am having trouble seeing how that can be true; can you help me see it? Do you believe the same thing holds for wheat? Bitcoin? If not, what makes big-company stock different?

↑ comment by James_Miller · 2023-03-03T22:55:17.900Z

If hedge funds think the right price of a stock is $100, they will buy or sell if the price deviates from $100 and this will push the price back to $100. At best your purchase will move the price away from $100 for a few milliseconds. The stock's value will be determined by what hedge funds think is its discounted present value, and your purchasing the stock doesn't impact this. When you buy wheat you increase the demand for wheat and this should raise wheat's price as wheat, like Bitcoin, is not purely a financial asset.

↑ comment by RHollerith (rhollerith_dot_com) · 2023-03-06T02:43:23.469Z

Thanks.

comment by mruwnik · 2024-12-31T14:52:19.468Z

There seems to be a largish group of people who are understandably worried about AI advances but see no hope of changing them, and so start panicking. This post is a good reminder that yes, we're all going to die, but since you don't know when, you have to prepare for multiple eventualities.

Shorting life is good if you can pull it off. But the same caveats apply as to shorting the market.

comment by lc · 2023-03-02T01:03:04.814Z

I'm not going to have kids because I don't want them to die early. It has nothing to do with me wanting to have a more successful career, or because I think I will be able to contribute to alignment. I would love to have healthy kids that I knew would outlive me by a while, but it doesn't seem like that will happen if I do.

comment by maia · 2023-03-01T21:07:32.964Z

It's hard to predict what careers will make sense in an AI-inflected world where we're not all dead. Still, I have the feeling that certain careers are better bets than others: basically Baumol-effect jobs where it is essential (or strongly preferred) that the person performing the task is actually a human being. So: therapist, tutor, childcare, that sort of thing.

↑ comment by Matthew Barnett (matthew-barnett) · 2023-03-02T01:01:16.708Z

Baumol-effect jobs where it is essential (or strongly preferred) that the person performing the task is actually a human being. So: therapist, tutor, childcare, that sort of thing

Huh. Therapists and tutors seem automatable within a few years. I expect some people will always prefer an in-person experience with a real human, but if the price is too high, people are just going to talk to a language model instead.

However, I agree that childcare does seem like it's the type of thing that will be hard to automate.

My list of hard-to-automate jobs would probably include things like plumbing, carpet installation, and construction work.

↑ comment by maia · 2023-03-02T01:56:25.864Z

Therapy is already technically possible to automate with ChatGPT. The issue is that people strongly prefer to get it from a real human, even when an AI would in some sense do a "better" job.

EDIT: A recent experiment demonstrating this: https://www.nbcnews.com/tech/internet/chatgpt-ai-experiment-mental-health-tech-app-koko-rcna65110

↑ comment by [deleted] · 2023-03-02T18:46:48.972Z

Note also that therapists are supposed to be trained not to say certain things and to talk a certain way. ChatGPT unmodified can't be relied on to do this. You would need to start with another base model and RLHF-train it to meet the above, and possibly also have multiple layers of introspection where every output is checked.

Basically, you are saying therapy is possible with demonstrated AI tech, and I would agree.

It would be interesting if, as a stunt, an AI company tried to get its solution officially licensed, where only the bigotry of "the applicant has to be human" would block it.

↑ comment by Noosphere89 (sharmake-farah) · 2023-03-02T00:09:25.970Z

Yeah, the most AI-resistant jobs are jobs that require human-like emotions, such as therapeutic jobs. I won't say they're immune to AI with high probability, but they're the jobs I most expect to see humans working in 50-100 years from now.

comment by Zvi · 2023-03-01T13:33:42.902Z

Mods please reimport/fix: First Kate Hall tweet is supposed to be this one, accidentally went with a different one instead. Also there's some bug with how the questions get listed on the lefthand side.

↑ comment by Writer · 2023-03-01T14:04:17.386Z

Note that in a later tweet she said she was psychotic at the time.

Edit: and also in this one.

↑ comment by the gears to ascension (lahwran) · 2023-03-02T06:14:04.038Z

that's something to be aware of - intense stress, including about world events, can induce psychosis (and other altered states). psychosis may not be as unfamiliar of a brain state as you might expect if you haven't experienced it before; it could feel very weird, but it also could seem like everyone else is failing to take a situation seriously. when in doubt, consider talking to someone who knows how to recognize it. it's hardly catastrophic, and it's generally quite recoverable, as long as you don't do anything crazy and irreversible.

↑ comment by RobertM (T3t) · 2023-03-01T20:22:03.234Z

Fixed the image. The table of contents relies on some hacky heuristics that include checking for bolded text and I'm not totally sure what's going wrong there.

comment by Daniel Samuel (daniel-samuel) · 2024-05-17T17:29:23.156Z

Every time I start to freak out about AI—be it timelines, risks, or whatever—I come back to this post to get down to earth a bit and avoid making foolish life decisions.

comment by Dalmert · 2023-03-17T10:50:07.221Z

The employees of the RAND corporation, in charge of nuclear strategic planning, famously did not contribute to their retirement accounts because they did not expect to live long enough to need them.

Any sources for this? I tried searching around, so far to no avail, which is surprising if this is indeed famously known.

comment by Vladimir_Nesov · 2023-03-02T07:01:06.849Z

This keeps conflating shortness of singularity timelines and certainty of doom. Some of these questions depend on singularity timelines, but not particularly on whether it's doom. Saying "end of the world" is annoying for the same reason: both specifically-doom-singularity and any-kind-of-singularity are salient interpretations, but it's unclear which is meant in each instance.

comment by Annapurna (jorge-velez) · 2023-03-01T16:22:52.835Z

"If there is a new world order - AI or something else changes everything - and we are not all dead, how do you prepare for that? Good question. What does such a world look like? Some such worlds you don’t have to prepare and it is fine. Others, it is very important that you start with capital. Keeping yourself healthy, cultivating good habits and remaining flexible and grounded are probably some good places to start."

This is the question I am working on answering at the moment. It seems to me that it is universally agreed that AGI will happen in my lifetime (I am 34). My reasoning tells me that either AGI destroys us, or it fundamentally changes society, substantially improving average human standards of living. This could very well mean that concepts such as law, government, capital markets, family systems, etc. are significantly different than they are today.

Thinking about how to prepare for this new world is hard. Here are a few things that I've begun doing:

- I am thinking less of life past 60, and shifting that energy more to the present moment.
- Saving for retirement is slowly going down my list of priorities. I have a very healthy asset base and I am not going to blow through it all, but I am also not going to maximize savings either. I will put some money away, but less than I would have a few years ago. The percentage I am not saving for retirement will go to the present moment.
- Definitely not delaying bucket list items. If I can do it, I will do it.
- The probability of having biological children has gone down. I have discussed this with my partner. We will not be ready for children for the next three years and she's older than me, so the chances of biological children were already low. Furthermore, adoption is still on the table, and the probability of that is even higher now.

More importantly, these are all moving goal posts as developments happen.

comment by martinkunev · 2023-03-02T23:44:52.254Z

Given that hardware advancements are very likely going to continue, delaying general AI would favor what Nick Bostrom calls a fast takeoff. This makes me uncertain as to whether delaying general AI is a good strategy.

I expected to read more about actively contributing to AI safety rather than about reactively adapting to whatever is happening.

comment by Algon · 2023-03-01T19:43:19.241Z

A: I would not (and will not) hide this information from my kids, any more than I would hide the risk from nuclear war, but ‘you may not get to grow up’ is not a helpful thing to say to (or to emphasize to) kids. Talking to your kids about this (in the sense of ‘talk to your kids about drugs’) is only going to distress them to no purpose. While I don’t believe in hiding stuff from kids, I also don’t think this is something it is useful to hammer into them. Kids should still get to be and enjoy being kids.

OK, but how will you present this information to your kids? When will you do so? I don't know of any concrete policies for this issue, and it seems fairly important with respect to having kids.

↑ comment by mingyuan · 2023-03-02T01:20:09.261Z

Not Zvi and not a parent but: Every kid learns about death, and it is true for every kid that they might not get to grow up. Extinction is different, but not so different (to a child's mind) from the threat of nuclear war, or even regular war, both of which many children have had to face before. I'm also not aware of concrete policies on how to explain to kids that they and everyone they love will die, but it's not like it's a new problem.

↑ comment by juliawise · 2023-03-28T02:38:19.751Z

I haven't had this conversation with my kids because they haven't asked, but I think the main things they disvalue about death are 1. their own death and 2. separation from people they love. I think the additional badness of "and everyone else would be dead too" is less salient to young kids. There might actually be some comfort in thinking we'd all go together instead of some people being left behind.

One of my kids got interested in asteroid strikes after learning about how dinosaurs went extinct, about age 4. She'd look out the window periodically to see if one was coming, but she didn't seem disturbed in the way that I would be if I thought there might be an asteroid outside the window.

Even if we'd had the conversation, I'd expect this to be a pretty small factor in their overall quality of life. Actual loss of someone they know is a bigger deal to them, but learning about death in general seems to result in some bedtime tears and not a lot of other obvious effects.

comment by [deleted] · 2023-03-01T18:45:09.429Z

Zvi, you didn't respond in the last thread.

As the planetary death rate is 100 percent, and the increase in capabilities from tool AGI is realistically the only thing that could change that within the lifetimes of all living humans today, why is working on AI capabilities bad?

How confident are you that it is bad? Have you seen the CAIS proposal?

↑ comment by jefftk (jkaufman) · 2023-03-01T23:19:09.162Z

Not Zvi, but flourishing human lives are still good even when they end in death. If you only care about humans alive today then faster AGI development is still very likely negative unless you are a lot more optimistic about alignment than most of us are.

If you care about future humans in addition to current humans then faster AGI development is massively negative, because of the likelihood of extinction.

↑ comment by Aiyen · 2023-03-02T04:49:55.207Z

150,000 people die every day. That's not a small price for any delays to AGI development. Now, we need to do this right: AGI without alignment just kills everyone; it doesn't solve anything. But the faster we get aligned AI, the better. And trying to slow down capabilities research without much thought into the endgame seems remarkably callous.

Eliezer has mentioned the idea of trying to invent a new paradigm for AI, outside of the conventional neural net/backpropagation model. The context was more "what would you do with unlimited time and money" than "what do you intend irl", but this seems to be his ideal play. Now, I wish him the best of luck with the endeavor if he tries it, but do we have any evidence that another paradigm is possible?

Evolved minds use something remarkably close to the backprop model, and the only other model we've seen work is highly mechanistic AI like Deep Blue. The Deep Blue model doesn't generalize well, nor is it capable of much creativity. A priori, it seems somewhat unlikely that any other AI paradigm exists: why would math just happen to permit it? And if we oppose capabilities research until we find something like a new AI model, there's a good chance that we oppose it all the way to the singularity, rather than ever contributing to a Friendly system. That's not an outcome anyone wants, and it seems to be the default outcome of incautious pessimism.

↑ comment by [deleted] · 2023-03-02T18:43:12.978Z

Another general way to look at it is to think about what a policy IS.

A policy is the set of rules any AI system uses to derive the output from the input. It's how a human or humanoid robot walks, or talks, or performs any intelligent act. (Since there are rules that update the rules.)

Well, for any nontrivial task, you realize the policy HAS to account for thousands of variables, including intermediates generated during the policy calculation. Any "competitive" policy that does complex things can trivially exceed the complexity that a human being can grok.

So no matter the method you use to generate a policy it will exceed your ability to review it, and COULD contain "if condition do bad thing" in it.

↑ comment by Celarix · 2023-03-02T02:16:23.342Z

Also not Zvi, but reducing the death rate from 100% still requires at least some of humanity to survive long enough to recognize those gains. If the AI paperclips everyone, there'd be no one left to immortalize, unless it decides to make new humans down the road for some reason.

comment by Max H (Maxc) · 2023-03-01T16:08:37.522Z

Certainly it makes sense to shift this on the margin, get your key bucket list items in early, put a higher marginal priority on fun – even more so than you should have been doing anyway.

Yes. I think this is good advice (at least for many people in this community), independent of one's views on AI or other x-risk.

For me, this just means living a more fun "normal life" - eating out more, living in a slightly nicer apartment, buying things that make me and those around me happy. Maybe even having kids, without a pile of savings already earmarked for childcare, housing, education, etc.

Perhaps in 2050, if we're all still here and things are somehow normal, I'll need to reduce my consumption and / or work a bit longer to compensate for the choices I am making now. This seems like a good trade even if reaching that future were certain; the chance of AI doom, whatever it is, just makes it overdetermined.

↑ comment by Annapurna (jorge-velez) · 2023-03-01T16:32:29.588Z

By the way, in 2050 you are likely to reduce your consumption anyway because of age. This is a very common pattern among humans: those who save very successfully for later often save so much that they die with a pile of assets.

This new paradigm (AGI arriving in our lifetimes) is beginning to change the way I optimize life design.

comment by BrownHairedEevee (aidan-fitzgerald) · 2023-04-11T17:46:58.434Z

The exception is that the Big Tech companies (Google, Amazon, Apple, Microsoft, although importantly not Facebook, seriously f*** Facebook) have essentially unlimited cash, and their funding situation changes little (if at all) based on their stock price.

I'm not sure if this is true right now. It seems like the entire tech industry is more cash-constrained than usual, given the high interest rates, layoffs throughout the industry, and fears of a coming recession.

comment by metasemi (Metasemi) · 2023-03-10T16:50:25.594Z

Strong upvote - thank you for this post.

It's right to use our specialized knowledge to sound the alarm on risks we see, and to work as hard as possible to mitigate them. But the world is vaster than we comprehend, and we unavoidably overestimate how well it's described by our own specific knowledge. Our job is to do the best we can, with joy and dignity, and to raise our children - should we be so fortunate as to have children - to do the same.

I once watched a lecture at a chess tournament where someone was going over a game, discussing the moves available to one of the players in a given position. He explained why a specific move was the best choice, but someone in the audience interrupted. "But isn't Black still losing here?" The speaker paused; you could see the wheels turning as he considered just what the questioner needed here. Finally he said, "The grandmaster doesn't think about winning or losing. The grandmaster thinks about improving their position." I don't remember who won that game, but I remember the lesson.

Let's be grandmasters. I've felt 100% confident of many things that did not come to pass, though my belief in them was well-informed and well-reasoned. Certainty in general reflects an incomplete view; one can know this without knowing exactly where the incompleteness lies, and without being untrue to what we do know.

comment by arisAlexis (arisalexis) · 2023-03-02T09:58:03.036Z

Thank you. This is the kind of post I wanted to write when I posted "the burden of knowing" a few days ago, but I was not thinking rationally at that moment.