The Clueless Sniper and the Principle of Indifference

post by Jim Buhler (jim-buhler) · 2025-01-27T11:52:57.978Z · LW · GW · 26 comments


You are one of the best long-range snipers in the world. You often have to eliminate targets standing one or two miles away, and you rarely miss. Crucially, this is not because you are better than others at aligning the scope of your rifle with the target’s head before taking the shot. Rather, it is because you are better at accounting for the external factors that will influence the bullet’s trajectory (e.g., the wind, gravity, etc.). Estimating these external factors is crucial to your success.

One day, you are on a roof with binoculars, looking at a building four miles away that you and your team know is filled with terrorists and their innocent children. Your superior is beside you, looking in the same direction. Your mission is to inform your allies closer to the building if you see any movement. After a long wait, the terrorist leader comes out, holding a child close to him. He knows enemies might be targeting him and would not want to hurt the kid. They are hastily heading towards another building nearby. You grab your radio to inform your allies, but your superior stops you and hands you your sniper rifle.

“He’s so exposed. This is a golden opportunity for you,” she says. “I reckon we’ve got two minutes before they reach the other building. Do you think you can get him?”

You know that your superior generally accepts risking the lives of innocents, but only if more bad guys than innocents are killed, in expectation.

“Absolutely not,” you respond. “We are four miles away! I’ve never taken a shot from this far. NO ONE has ever hit a target from this far. I’m just as likely to hit the kid.”

Your superior takes a few seconds to think. “You always say that where the bullet ends up is the result of an equation: ‘where you aim + the external factors’, right?” she says.

“Yes,” you reply nervously, “and I have no idea how to account for the external factors from that far. There are so many different wind layers between us and the target. The Earth’s rotation and the spin drift of the bullet also matter a hell of a lot at this distance. I would also have to factor in the altitude, humidity, and temperature of the air. I don’t have the faintest idea what all this sums up to from this far! I’m clueless. I wouldn’t be any more likely to get him and not the kid if I were to shoot with my eyes closed.”

Your superior turns her head towards you. “Principle of indifference,” she says. “You have no clue how the external factors will affect your shot. You don’t know whether they tell you to aim more one way rather than another. So simply don’t adjust your shot! Aim at this jerk and shoot as if there were no external factors! And you’ll be, in expectation, more likely to hit him than the kid.”

I'm curious whether people agree with the superior. Do you think applying the POI is warranted here? Is there any rationale behind why (or why not)?

EDIT: You can assume the child and the target cover exactly as much hittable surface area if you want, but I'm actually just interested in whether you find the POI argument valid, not in what we think the right strategic call would be if that was a real-life situation. (EDIT 2:) This is just a thought experiment aiming at assessing whether applying POI makes sense in situations of complex cluelessness. No kid is going to get killed in the real world because of your response. :)

26 comments


comment by AnthonyC · 2025-01-27T12:57:40.238Z · LW(p) · GW(p)

I think the principle is fine when applied to how variables affect the movement of the bullet in space. I don't necessarily think it means taking the shot is the right call, tactically.

Note: I've never fired a real gun in any context, so a lot of the specifics of my reasoning are probably wrong but here goes anyway.

Essentially I see the POI as stating that the bullet takes a 2D random walk with unknown step sizes (though possibly with a known distribution of sizes) on its way to the target. As distance increases, the variance of the random walk increases.
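A minimal sketch of this random-walk picture, assuming (purely for illustration) that each stretch of flight nudges the bullet by an independent, zero-mean Gaussian step in each of two directions. The step size and step counts are hypothetical; the sketch only shows that the spread of the landing point grows with the number of steps, roughly as its square root.

```python
import math
import random

def landing_point(n_steps, step_sd, rng):
    """Sum n_steps independent 2D Gaussian nudges (hypothetical error model)."""
    x = y = 0.0
    for _ in range(n_steps):
        x += rng.gauss(0.0, step_sd)
        y += rng.gauss(0.0, step_sd)
    return x, y

def rms_miss(n_steps, step_sd=0.05, trials=2000, seed=0):
    """Root-mean-square distance of the landing point from the aim point."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        x, y = landing_point(n_steps, step_sd, rng)
        total += x * x + y * y
    return math.sqrt(total / trials)

# Quadrupling the number of steps should roughly double the RMS miss distance.
for n in (25, 100, 400):
    print(n, round(rms_miss(n), 3))
```

Under this model the expected RMS miss is step_sd * sqrt(2 * n_steps), so a longer flight (more steps) spreads the landing points out without moving their centre.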

Given typical bullet speeds, we're talking about >6 seconds to reach the target, possibly much more depending on drag. And the sniper is actually pointing the rifle so that the bullet follows a parabolic arc to the target. In that time the bullet falls .5*g*t^2 meters, so the sniper is actually pointing at a spot at least 180m above the target's head, possibly 500m if drag makes the flight time even a few seconds longer. More still to the extent the distance means the angle of the shot has to be so high you need to account for more vertical and less horizontal velocity plus more drag. Triple the distance means a lot more than 3x the number of opportunities for random factors to throw off the shot. The random factors are playing plinko with your bullet.
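A quick check of the drop figures, assuming no drag (drag only lengthens the flight) and g = 9.81 m/s². The 900 m/s average speed is a hypothetical round number, not a figure from the comment.

```python
G = 9.81           # gravitational acceleration, m/s^2
MILE_M = 1609.344  # metres per mile

def vacuum_drop(t):
    """Distance (m) a projectile falls in t seconds under gravity alone."""
    return 0.5 * G * t ** 2

distance_m = 4 * MILE_M   # the four-mile shot in the post
avg_speed = 900.0         # hypothetical average bullet speed, m/s
t_flight = distance_m / avg_speed

print(round(distance_m), round(t_flight, 2))  # 6437 7.15
for t in (6, 7.15, 10):
    print(t, round(vacuum_drop(t), 1))
```

A 6 s flight already gives about 177 m of drop and a 10 s flight about 490 m, matching the "at least 180m ... possibly 500m" estimate.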

After the first mile (limit of known skill) the expected distance of where the bullet lands from the target increases. At some sufficiently far distance, it is essentially landing in a random spot in a normal distribution around the intended target, and whether it hits the terrorist or the child is mostly a function of how much area each takes up (the adult is larger), and the extent to which one is blocking the other (not stated). Regardless, when the variance is high enough, the most likely outcome is "neither." It hits the ground meters away, and now the terrorists all know you took the shot and from what direction. If the terrorist just started walking, there might be a better chance of hitting the building than anything else.
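To make "mostly a function of how much area each takes up" concrete, here is a sketch that treats the landing point as an isotropic 2D Gaussian centred on the aim point and the two people as axis-aligned rectangles. All dimensions and the spreads are hypothetical. For a very wide spread, both hit probabilities are tiny, their ratio approaches the ratio of the areas, and "neither" dominates.

```python
import math

def phi(z):
    """Standard normal CDF."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def p_rect(x1, x2, y1, y2, sigma):
    """P(landing in [x1, x2] x [y1, y2]) for an isotropic Gaussian at the origin."""
    px = phi(x2 / sigma) - phi(x1 / sigma)
    py = phi(y2 / sigma) - phi(y1 / sigma)
    return px * py

# Hypothetical silhouettes in metres; the aim point (0, 0) is the adult's centre.
ADULT = (-0.25, 0.25, -0.9, 0.9)   # 0.5 m x 1.8 m
CHILD = (0.25, 0.60, -0.9, 0.2)    # 0.35 m x 1.1 m, standing right beside him

for sigma in (0.5, 5.0, 50.0):
    pa = p_rect(*ADULT, sigma)
    pc = p_rect(*CHILD, sigma)
    print(f"sigma={sigma:>5}: adult={pa:.2e} child={pc:.2e} "
          f"ratio={pa / pc:.2f} neither={1 - pa - pc:.4f}")
```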

So, in a strict probabilistic sense, yes, your probability of hitting the terrorist is still higher than hitting the child. If that is the superior's sole criterion for decision making, they've reasoned correctly. That is not the sniper's decision making threshold (he wants a higher certainty of avoiding the child). I would expect it is also not the higher-ups' sole criterion, since it is most likely going to fail and alert the enemy about their position as well as the limits of their sniping capabilities. I have no idea whether the sniper has any legally-defensible way to refuse to follow the order, but if he carries it out, I don't think issuing the order will reflect well on the superior.

That said: in practice a lot would depend on why the heck they stationed a sniper four miles away from a target with no practical way to turn that positioning into achieving this goal. The first thought I have is that either you're not where you're supposed to be, or the people who ordered you there are idiots, and in either case your superior is flailing, trying to salvage the situation. The second is that you're not really expected to take out this target at this range, and your superior is either trying to show off to her superior, or misunderstood her orders, or wasn't told the real reason for the orders. The third is that of course the sniper would have brought this up hours ago and already figured out the correct decision tree; it's crazy this conversation is only happening after the terrorist leaves the building.

↑ comment by Jim Buhler (jim-buhler) · 2025-01-27T13:36:40.939Z · LW(p) · GW(p)

At some sufficiently far distance, it is essentially landing in a random spot in a normal distribution around the intended target

Say I tell you the bullet landed either 35 centimeters to the target's right or 42 centimeters to his left, and ask you to bet on which one you think it is. Are you indifferent/agnostic, or do you favor 35 very (very very very very) slightly? (If the former, you reject the POI. If the latter, you embrace it. Or at least that's my understanding. If you don't find it more likely that the bullet hits a spot a bit closer to the target, then you don't agree with the superior that aiming at the target makes you more likely to hit him than the child, all else equal.)
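The bet above can be written down directly if one grants, as AnthonyC does, a zero-mean Gaussian for the horizontal miss distance. The sketch below (sigma values hypothetical) shows that under that model the likelihood ratio favouring 35 cm over 42 cm is always above 1 but shrinks toward 1 as sigma grows; whether the Gaussian assumption is warranted is exactly what the thread disputes.

```python
import math

def density_ratio(d_near, d_far, sigma):
    """pdf(d_near) / pdf(d_far) for a zero-mean normal with std dev sigma."""
    return math.exp((d_far ** 2 - d_near ** 2) / (2.0 * sigma ** 2))

# Miss distances in metres: 0.35 m on one side vs 0.42 m on the other.
for sigma in (0.1, 1.0, 10.0, 100.0):
    print(sigma, density_ratio(0.35, 0.42, sigma))
```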

↑ comment by AnthonyC · 2025-01-27T14:09:31.881Z · LW(p) · GW(p)

The latter. And yes, I do agree with the superior on that specific, narrow mathematical question. If I am trying to run with the spirit and letter of the dilemma as presented, then I will bite that bullet (sorry, I couldn't resist).

In real-world situations, at the point where you somehow find yourself in such a position, the correct solution is probably "call in air support and bomb them instead, or find a way to fire many bullets at once; you've already decided you're willing to kill a child for a chance to take out the target."

Similarly, if the terrorist were an unfriendly ASI and the child was the entire population of my home country, and there was knowably no one else in position to take any shot at all, I'd (hope I'm the kind of person who would) take the shot. A coin flip is better than certainty of death, even if it were biased against you quite heavily.

↑ comment by Jim Buhler (jim-buhler) · 2025-01-27T14:45:30.545Z · LW(p) · GW(p)

Interesting, thanks. My intuition is that if you draw a circle of, say, a dozen (?) meters around the target, there's no spot within that circle that is more or less likely to be hit than any other, and it's only outside the circle that you start having something like a normal distribution. I really don't see why I should think 35 centimeters to the target's right is any more (or less) likely than 42 centimeters to his left. Can you think of any good reason why I should think that? (Not saying my intuition is better than yours. I just want to understand where I'm wrong, if I am.)

↑ comment by ProgramCrafter (programcrafter) · 2025-01-27T15:29:42.826Z · LW(p) · GW(p)

Can you think of any good reason why I should think that?

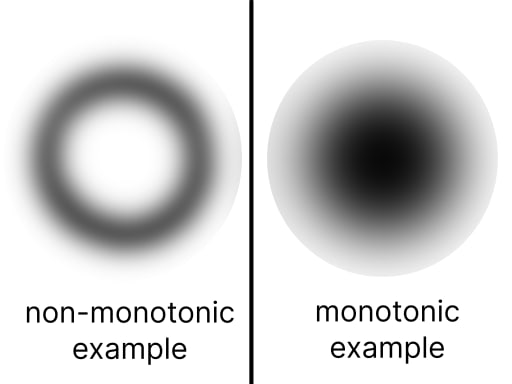

Intuition: imagine a picture with a bright spot in the center, and blur it. The brightest point will still be in the center (before rounding pixel values off to the nearest integer, that is; only then may a disk of exactly equiprobable points form).

My answer: because a strictly monotonic[1] probability distribution prior to accounting for external factors (either "there might be negligible aiming errors" or "the bullet will fly exactly where needed" is suitable) will remain strictly monotonic when blurred[2] with a monotonic kernel[2] formed by those factors (if we assume wind and all that create a normal distribution, it fits).

[1] In this case: a distribution in which any point closer to the center is assigned a higher probability than any point farther away.

[2] In the image-processing sense.
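ProgramCrafter's blur argument can be checked numerically in one dimension. This sketch convolves a sharply peaked, symmetric "aim" distribution with a discretised Gaussian kernel (the kernel width and aim profile are hypothetical) and confirms that the result still peaks at the centre and decreases away from it. It illustrates the claim under the Gaussian-kernel assumption; it does not establish that the real-world kernel is Gaussian.

```python
import math

def gaussian_kernel(sigma, half_width):
    """Discretised, normalised Gaussian kernel on integer offsets."""
    k = [math.exp(-(i * i) / (2.0 * sigma * sigma))
         for i in range(-half_width, half_width + 1)]
    s = sum(k)
    return [v / s for v in k]

def convolve_same(signal, kernel):
    """1D convolution with zero padding; output has the same length as signal."""
    n, m = len(signal), len(kernel)
    half = m // 2
    out = []
    for i in range(n):
        acc = 0.0
        for j in range(m):
            idx = i + j - half
            if 0 <= idx < n:
                acc += signal[idx] * kernel[j]
        out.append(acc)
    return out

N = 201
centre = N // 2
# Prior to external factors: most mass at the aim point, a little aiming error.
aim = [0.0] * N
aim[centre - 1], aim[centre], aim[centre + 1] = 0.1, 0.8, 0.1

blurred = convolve_same(aim, gaussian_kernel(sigma=15.0, half_width=60))
peak = max(range(N), key=lambda i: blurred[i])
print(peak == centre)
# Strictly decreasing moving right from the centre (within the kernel's reach).
print(all(blurred[i] > blurred[i + 1] for i in range(centre, centre + 60)))
```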

↑ comment by Jim Buhler (jim-buhler) · 2025-01-27T16:48:08.591Z · LW(p) · GW(p)

My answer: because strictly monotonic[1] probability distribution prior to accounting for external factors

Ok so that's defo what I think assuming no external factors, yes. But if I know that there are external factors, I know the bullet will deviate for sure. I don't know where but I know it will. And it might luckily deviate a bit back and forth and come back exactly where I aimed, but I don't get how I can rationally believe that's any more likely than it doing something else and landing 10 centimeters more on the right. And I feel like what everyone in the comments so far is saying is basically "Well, POI!", taking it for granted/self-obvious, but afaict, no one has actually justified why we should use POI rather than simply remain radically agnostic on whether the bullet is more likely to hit the target than the kid. I feel like your intuition pump, for example, is implicitly assuming POI and is sort of justifying POI with POI.

↑ comment by ProgramCrafter (programcrafter) · 2025-01-27T17:24:11.606Z · LW(p) · GW(p)

But if I know that there are external factors, I know the bullet will deviate for sure. I don't know where but I know it will.

You assume that the blur kernel is non-monotonic, and this is our entire disagreement. I guess that different tasks have different noise structures (for instance, if noise somehow increased geometrically, we would never return to the exact point we had left).

However, if the noise is composed of many small i.i.d. parts, then it has a normal distribution, which is monotonic in the relevant sense.
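A Monte Carlo sketch of this disagreement, under a deliberately extreme, hypothetical assumption: every individual perturbation pushes the bullet a fixed distance in a uniformly random direction, so a single perturbation can never leave it on the aim point (a ring-shaped, non-monotonic kernel). Summing many such independent perturbations nonetheless produces an approximately Gaussian cloud whose density is highest at the aim point. Step lengths, trial counts and the comparison radius are all illustrative.

```python
import math
import random

def sum_of_ring_steps(n_steps, rng):
    """Sum n_steps pushes of fixed length 1/sqrt(n_steps) in random directions.

    The fixed length is a hypothetical worst case: no single push can leave
    the bullet where it was. Scaling by 1/sqrt(n) keeps the RMS total at 1.
    """
    step = 1.0 / math.sqrt(n_steps)
    x = y = 0.0
    for _ in range(n_steps):
        theta = rng.uniform(0.0, 2.0 * math.pi)
        x += step * math.cos(theta)
        y += step * math.sin(theta)
    return x, y

def hit_fractions(n_steps, trials=20000, radius=0.2, seed=1):
    """Fraction landing within `radius` of the aim point vs of a point 1 unit away."""
    rng = random.Random(seed)
    at_aim = off_aim = 0
    for _ in range(trials):
        x, y = sum_of_ring_steps(n_steps, rng)
        if math.hypot(x, y) < radius:
            at_aim += 1
        if math.hypot(x - 1.0, y) < radius:
            off_aim += 1
    return at_aim / trials, off_aim / trials

for n in (1, 50):
    print(n, hit_fractions(n))
```

With one push, nothing lands near the aim point; with fifty, the disc around the aim point collects noticeably more hits than an equal disc one unit away.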

↑ comment by AnthonyC · 2025-01-27T22:39:15.493Z · LW(p) · GW(p)

I mentioned this in my comment above, but I think it might be worthwhile to differentiate more explicitly between probability distributions and probability density functions. You can have a monotonically-decreasing probability density function F(r) (aka the probability of being in some range is the integral of F(r) over that range, integral over all r values is normalized to 1) and have the expected value of r be as large as you want. That's because the expected value is the integral of r*F(r), not the value or integral of F(r).

I believe the expected value of r in the stated scenario is large enough that missing is the most likely outcome by far. I am seeing some people argue that the expected distribution is F(r,θ) in a way that is non-uniform in θ, which seems plausible. But I haven't yet seen anyone give an argument for the claim that the aimed-at point is not the peak of the probability density function, or that we have access to information that allows us to conclude that integrating the density function over the larger-and-aimed-at target region will not give us a higher value than integrating over the smaller-and-not-aimed-at child region.
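AnthonyC's distinction between the density over the plane and the distribution of the miss distance r can be checked for an isotropic 2D Gaussian (used here purely as an example, with a hypothetical sigma). The planar density is highest at the aim point, yet r follows a Rayleigh distribution whose density is zero at r = 0, peaks at r = sigma, and has mean sigma * sqrt(pi/2), about 1.25 sigma.

```python
import math
import random

SIGMA = 10.0  # hypothetical per-axis spread, metres

def planar_density(x, y, sigma=SIGMA):
    """Density of an isotropic 2D Gaussian centred on the aim point."""
    return math.exp(-(x * x + y * y) / (2 * sigma ** 2)) / (2 * math.pi * sigma ** 2)

def radial_density(r, sigma=SIGMA):
    """Rayleigh density of the miss distance r = sqrt(x^2 + y^2)."""
    return (r / sigma ** 2) * math.exp(-r * r / (2 * sigma ** 2))

rng = random.Random(2)
samples = [math.hypot(rng.gauss(0, SIGMA), rng.gauss(0, SIGMA)) for _ in range(100000)]
mean_r = sum(samples) / len(samples)

print(planar_density(0, 0) > planar_density(SIGMA, 0))  # True: aim point is the peak
print(radial_density(0.0), radial_density(SIGMA) > radial_density(2 * SIGMA))
print(round(mean_r, 2), round(SIGMA * math.sqrt(math.pi / 2), 2))
```

So "the aimed-at point is the peak of the density" and "the most likely miss distance is well above zero" are compatible statements.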

↑ comment by Maxwell Peterson (maxwell-peterson) · 2025-01-27T17:22:57.807Z · LW(p) · GW(p)

Interesting! I also agree with the superior, but I can see where your intuition might be coming from: if we drop a bouncy ball in the middle of a circle, there will be some bounce to it, and maybe the bounce will always be kinda large, so there might be good reason to think it ending up at rest in the very center is less likely than it ending up off-center. For the sniper’s bullet, however, I think it’s different.

Do you agree with AnthonyC’s view that the bullet’s perturbations are well-modeled by a random walk? If so, maybe I’ll simulate it if I have time and report back - but only makes sense to do that if you agree that the random walk model is appropriate in the first place.

↑ comment by Jim Buhler (jim-buhler) · 2025-01-27T17:53:47.520Z · LW(p) · GW(p)

Do you agree with AnthonyC’s view that the bullet’s perturbations are well-modeled by a random walk? If so, maybe I’ll simulate it if I have time and report back - but only makes sense to do that if you agree that the random walk model is appropriate in the first place.

Oh yeah, good question. I'm not sure because random walk models are chaotic and seem to model situations of what Greaves (2016) calls "simple cluelessness". Here, we're in a case she would call "complex". There are systematic reasons to believe the bullet will go right (e.g., the Earth's rotation, say) and systematic reasons to believe it will go left (e.g., the wind that we see blowing left). The problem is not that it is random/chaotic, but that we are incapable of weighing up the evidence for left vs the evidence for right, incapable to the point where we cannot update away from a radically agnostic prior on whether the bullet will hit the target or the kid.

↑ comment by Maxwell Peterson (maxwell-peterson) · 2025-01-27T18:03:09.606Z · LW(p) · GW(p)

Oh… wait a minute! I looked up Principle of Indifference, to try and find stronger assertions on when it should or shouldn’t be used, and was surprised to see what it actually means! Wikipedia:

>The principle of indifference states that in the absence of any relevant evidence, agents should distribute their credence (or "degrees of belief") equally among all the possible outcomes under consideration. In Bayesian probability, this is the simplest non-informative prior.

So I think the superior is wrong to call it “principle of indifference”! You are the one arguing for indifference: “it could hit anywhere in a radius around the targets, and we can’t say more” is POI. “It is more likely to hit the adult you aimed at” is not POI! It’s an argument about the tendency of errors to cancel.

Error cancelling tends to produce Gaussian distributions. POI gives uniform distributions.

I still think I agree with the superior that it’s marginally more likely to hit the target aimed for, but now I disagree with them that this assertion is POI.
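Maxwell Peterson's contrast between POI (uniform) and error cancelling (Gaussian) can be made concrete. If indifference is read as a uniform credence over a disc around the aim point, such as the dozen-metre circle Jim Buhler describes, then the chance of hitting each person is just their area divided by the disc's area, so where you aim inside the disc makes no difference. The radius and silhouette areas below are hypothetical.

```python
import math

def p_uniform_disc(area, radius):
    """P(hit) for a region of given area lying wholly inside a uniform disc."""
    return area / (math.pi * radius ** 2)

ADULT_AREA = 0.9    # m^2, hypothetical
CHILD_AREA = 0.385  # m^2, hypothetical
R = 12.0            # m, hypothetical radius of the "anything goes" circle

pa, pc = p_uniform_disc(ADULT_AREA, R), p_uniform_disc(CHILD_AREA, R)
print(pa, pc, pa / pc)  # ratio equals the area ratio, regardless of the aim point
```

Under this reading the adult is still more likely to be hit, but only because he is larger, not because he is aimed at; a Gaussian would add extra weight to whatever sits at its centre.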

↑ comment by AnthonyC · 2025-01-27T22:18:35.876Z · LW(p) · GW(p)

So, as you noted in another comment, this depends on your understanding of the nature of the types of errors individual perturbations are likely to induce. I was automatically guessing many small random perturbations that could be approximated by a random walk, under the assumption that any systematic errors are the kind of thing the sniper could at least mostly adjust for even at extreme range. Which I could be easily convinced is completely false in ways I have no ability to concretely anticipate.

That said, whatever assumptions I make about the kinds of errors at play, I am implicitly mapping out some guessed-at probability density function. I can be convinced it skews left or right, down or up. I can be convinced, and already was, that it falls off at a rate such that if I define it in polar coordinates and integrate over theta that the most likely distance-from-targeted-point is some finite nonzero value. (This kind of reasoning comes up sometimes in statistical mechanics, since systems are often not actually at a/the maxentropy state, but instead within some expected phase-space distance of maxentropy, determined by how quickly density of states changes).

But to convince me that the peak of the probability density function is somewhere other than the origin (the intended target), I think I'd have to be given some specific information about the types of error present that the sniper does not have in the scenario, or which the sniper knows but is somehow still unable to adjust for. Lacking such information, then for decision making purposes, other than "You're almost certainly going to miss" (which I agree with!), it does seem to me that if anyone gets hit, the intended target who also has larger cross-sectional area seems at least a tiny bit more likely.

comment by Richard_Kennaway · 2025-01-27T14:17:16.000Z · LW(p) · GW(p)

You have an epsilon chance of hitting the terrorist ("NO ONE has ever hit a target from this far"). POI only gives you an epsilon-of-epsilon lower chance of hitting the child. Your superior officer is an idiot.

That's leaving aside the fact that it would take more time to concentrate on the shot than you actually have ("They are hastily heading towards another building nearby"). And it's a moving target. The officer is asking this sniper for a miracle.

I'm actually just interested in whether you find the POI argument valid, not in what you think the right strategic call would be if that was a real-life situation.

The two cannot be separated. Reasoning not directed towards decisions about actions is empty. The purpose of the officer's POI argument is to persuade the sniper that taking the shot is the right call. It is clearly not, and the argument is stupid.

comment by Ape in the coat · 2025-02-03T07:03:19.610Z · LW(p) · GW(p)

Strongly upvoted. This post does a good job of highlighting a fundamental confusion about probability theory and the principle of indifference, which, among other things, makes people say silly things about anthropic reasoning.

The short answer is: empty map doesn't imply empty territory.

Consider an Even More Clueless Sniper:

You know absolutely nothing about shooting from a sniper rifle. To the best of your knowledge you simply press a trigger and then one of the two outcomes happens: either Target Is Hit or Target Is Not Hit and you have no reason to expect that one outcome is more likely than the other.

Should you be the one taking the shot in such circumstances? After all, according to POI you have a 50% chance to hit the target, while a less clueless sniper's estimate is a mere epsilon. Would someone be doing you a disservice by educating you about sniper rifles and telling you what is going on, thereby updating your estimate of hitting the target to nearly zero?

↑ comment by Jim Buhler (jim-buhler) · 2025-02-04T07:30:55.868Z · LW(p) · GW(p)

Interesting, thanks!

I guess one could object that in your Even More Clueless Sniper example, applying the POI between Hit and Not Hit is just as arbitrary as applying it between, e.g., Hit, Hit on his right, and Hit on his left. This is what Greaves (2016) (and maybe others?) called the "problem of multiple partitions". In my original scenario, people might argue that there isn't such a problem and that there is only one sensible way to apply POI. So it would be OK to apply it in my case and not in yours.

I don't know what to make of this objection, though. I'm not sure it makes sense. It feels a bit arbitrary to say "we can apply POI, but only when there is one way of applying it that clearly seems more sensible". Maybe this problem of multiple partitions is a reason to reject POI altogether (in situations of what Greaves calls "complex cluelessness", at least, like in my sniper example).
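The problem of multiple partitions can be shown with exact arithmetic: applying POI to {Hit, Not Hit} gives P(Hit) = 1/2, while applying it to the three-way partition from the comment above gives P(Hit) = 1/3, even though nothing about the shot has changed. The helper name `poi` is hypothetical.

```python
from fractions import Fraction

def poi(outcomes):
    """Principle of indifference: equal credence over the listed outcomes."""
    return {o: Fraction(1, len(outcomes)) for o in outcomes}

print(poi(["hit", "not hit"])["hit"])                             # 1/2
print(poi(["hit", "hit on his right", "hit on his left"])["hit"])  # 1/3
```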

↑ comment by Ape in the coat · 2025-02-04T09:29:34.795Z · LW(p) · GW(p)

Well, obviously, when you know that there are such options as Hit on his right and Hit on his left, you will apply POI to be indifferent between all the options.

But in the Even More Clueless Sniper experiment, you don't know that. All you know is that there are two options: Hit or No Hit. And then POI gives you a 50% chance to hit.

In other words, the problem of multiple partitions arises only when you know about all these multiple options. And if you don't know about them, then there is no problem.

Maybe this problem of multiple partitions is a reason to reject POI altogether

What we need is to properly understand where POI even comes from: that it's not some magical principle that allows totally ignorant people to shoot better than trained snipers, and that there is some systematic reason that allows us to produce correct maps of the territory, from which POI is derived. If we understand that reason, such situations will cease to be mysterious.

comment by JBlack · 2025-01-28T02:41:33.283Z · LW(p) · GW(p)

If you aim as if there were no external factors at that range (especially bullet drop!) you will definitely miss both. The factors aren't all random with symmetric distributions having a mode at the aim point.

↑ comment by Jim Buhler (jim-buhler) · 2025-01-28T10:13:28.509Z · LW(p) · GW(p)

Yeah, I guess I meant something like "aim as if there were no external factors other than gravity".

comment by dr_s · 2025-02-04T07:47:40.983Z · LW(p) · GW(p)

I think for this specific example the superior is wrong because realistically we can form an expectation of the distribution of those factors. Just because we don't know it doesn't mean it's necessarily a Gaussian: some factors, like the Coriolis force, are systematic. If the distribution was "a ring of 1 m around the aimed point", then you would know for sure you won't hit the terrorist that way, but have no clue whether you'll hit the kid.

Also, even if the distribution was Gaussian, if it's broad enough, the difference between the probability of hitting the terrorist and that of hitting the kid may simply be too small to matter.
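dr_s's point that the aim point need not be the most likely landing spot can be illustrated with a hypothetical case of complex cluelessness: suppose one systematic factor will push the shot a distance b either left or right, with equal credence for each direction (indifference over the sign only), plus Gaussian noise with spread sigma. The resulting equal-weight mixture is symmetric about the aim point, yet when b > sigma its density at the aim point is lower than at the pushed-to points. All numbers are illustrative.

```python
import math

def normal_pdf(x, mu, sigma):
    """Density of Normal(mu, sigma) at x."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / (sigma * math.sqrt(2 * math.pi))

def mixture_pdf(x, b, sigma):
    """Equal credence that a systematic push of size b goes left or right."""
    return 0.5 * normal_pdf(x, -b, sigma) + 0.5 * normal_pdf(x, b, sigma)

SIGMA = 0.5  # hypothetical random spread, metres
for b in (0.3, 1.0):  # hypothetical systematic push, metres
    at_aim = mixture_pdf(0.0, b, SIGMA)
    at_push = mixture_pdf(b, b, SIGMA)
    print(f"b={b}: density at aim={at_aim:.3f}, at +b={at_push:.3f}")
```

With a small push the aim point remains the peak; with a push larger than the noise, the distribution becomes bimodal and the aim point sits in a trough.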

↑ comment by Jim Buhler (jim-buhler) · 2025-02-04T15:11:27.946Z · LW(p) · GW(p)

some factors, like the Coriolis force, are systematic.

Yup! (See a related comment, fwiw.)

If the distribution was "a ring of 1 m around the aimed point" then you would know for sure you won't hit the terrorist that way

Well, not if you factor in other factors that might luckily exactly compensate for the Coriolis effect (e.g., the wind). But yeah, considering that it's a Gaussian distribution where the top is "target hit" (rather than "kid hit" or "rock over there hit") just because that's where you happen to be aiming (ignoring the Coriolis effect, the wind and all) seems very suspiciously convenient.

↑ comment by dr_s · 2025-02-04T15:59:30.965Z · LW(p) · GW(p)

I suppose that Gaussian is technically the correct prior for "very high number of error factors with a completely unknown but bounded probability distribution". But reality is, that's not a good description of this specific situation, even with as much ignorance as you want thrown in.

comment by jimmy · 2025-01-27T17:55:52.196Z · LW(p) · GW(p)

There are several complications in the example you give, and I'm not sure which are intentional.

Let's start with a simpler example. You somehow end up needing to take a 400 meter shot down a tunnel with an experimental rifle/ammo you've been working on. You know the rifle/ammo inside and out due to your work and there is no wind, but the rifle/ammo combination has very high normal dispersion, and all that is exposed is a headshot.

In this case, where you center your probability distribution depends on the value of the kid's life. If the terrorist is about to nuke the whole earth, you center it on the bad guy and ignore the kid. If the terrorist will at most kill that one kid if you don't kill him now, then you maximize expected value by biasing your distribution so that hitting the kid requires you to be further down the tail, and the ratio of terrorist/hostage hit goes up as the chance of a hit goes down. If the kid certainly dies if you miss, also dies if you hit him, and is only spared if you hit the terrorist, then you're back to ignoring the kid and centering the distribution on the terrorist, even if you're more likely to hit the kid than the terrorist.
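The middle case above (biasing the distribution away from the kid) can be sketched as a one-dimensional optimisation. Assumptions, all hypothetical: horizontal dispersion only, a known Normal spread, the terrorist's head centred on 0, the child's head just to its right, and a value function P(hit terrorist) minus child_weight times P(hit child). The best aim shifts away from the child as the weight on the child's life grows.

```python
import math

def phi(z):
    """Standard normal CDF."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def p_interval(lo, hi, aim, sigma):
    """P(a shot ~ Normal(aim, sigma) lands in [lo, hi]) along one axis."""
    return phi((hi - aim) / sigma) - phi((lo - aim) / sigma)

# Hypothetical 1D geometry (metres): terrorist's head on 0, child's head to its right.
TERRORIST = (-0.10, 0.10)
CHILD = (0.12, 0.32)
SIGMA = 0.15  # hypothetical dispersion of the experimental rifle/ammo

def best_aim(child_weight):
    """Aim offset maximising P(hit terrorist) - child_weight * P(hit child)."""
    grid = [i / 1000 for i in range(-500, 501)]

    def value(a):
        return (p_interval(*TERRORIST, a, SIGMA)
                - child_weight * p_interval(*CHILD, a, SIGMA))

    return max(grid, key=value)

for w in (0.0, 1.0, 5.0):
    print(w, best_aim(w))
```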

In the scenario you describe, you don't actually have the situation so well characterized. You'd be forced to lob bullets at a twenty degree inclination, without being able to use sights or see your target -- among many other large uncertainties. In that case it's not that you have a well known distribution and unknown result of the next draw. You don't know what the distribution is. You don't know what the meta distribution the distribution is being drawn from.

Statements like "more likely" are all relative to a model which you presuppose has some validity in context. What's the model, and where do you think you're getting the validity to say it? Even if the simulation God paused the game and spoke to you saying "I'll run the experiment a billion times, and we'll see if the kid gets hit more often", you'd have no idea how to set up that experiment because you don't know what you're controlling for.

I'd guess that you're asking about something in between, but I'm not sure which unknowns are intentional.

comment by Dagon · 2025-01-27T14:09:39.319Z · LW(p) · GW(p)

Which part of the question are you asking about? Is it OK to take a 50/50 shot at killing a terrorist or a kid? No, I don't think so. Is it really 50/50? Depends on circumstances, but usually no - there will be known factors that weigh one way or the other, and the most likely outcome is a clean miss, which warns the terrorists that snipers are present.

The narrow topic of the principle of indifference is ... fine, I guess. But it's a pretty minor element of the decision, and in many cases, it's better to collect more data or change the situation than to fall back on a base distribution of unknowns.

↑ comment by Jim Buhler (jim-buhler) · 2025-01-27T14:25:38.085Z · LW(p) · GW(p)

I'm just interested in the POI thing, yeah.

comment by Martin Randall (martin-randall) · 2025-02-03T23:10:19.263Z · LW(p) · GW(p)

This philosophy thought experiment is a Problem of Excess Metal. This is where philosophers spice up thought experiments with totally unnecessary extremes, in this case an elite sniper, terrorists, children, and an evil supervisor. This is common, see also the Shooting Room Paradox (aka Snake Eyes Paradox), Smoking Lesion, Trolley Problems, etc, etc. My hypothesis is that this is a status play whereby high decouplers can demonstrate their decoupling skill. It's net negative for humanity. Problems of Excess Metal also routinely contradict basic facts about reality. In this case, children do not have the same surface area as terrorists.

Here is an equivalent question that does not suffer from Excess Metal.

- A normal archer is shooting towards two normal wooden archery targets on an archery range with a normal bow.

- The targets are of equal size, distance, and height. One is to the left of the other.

- There is normal wind, gravity, humidity, etc. It's a typical day on Earth.

- The targets are some distance away, four times further away than she has fired before.

Q: If the archer shoots at the left target as if there are no external factors, is she more likely to hit the left target than the right target?

A: The archer has a 0% chance of hitting either target. Gravity is an external factor. If she ignores gravity when shooting a bow and arrow over a sufficient distance, she will always miss both targets, and she knows this. Since 0% = 0%, she is not more likely to hit one target than the other.

Q: But zero isn't a probability!

A: Then P(Left|I) = P(Right|I) = 0%, see Acknowledging Background Information with P(Q|I).

Q: What if the archer ignores all external factors except gravity? She goes back to her physics textbook and does the math based on an idealized projectile in a vacuum.

A: I think she predictably misses both targets because of air resistance, but I'd need to do some math to confirm that.
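A rough numerical check of this last answer, with every number hypothetical: arrow speed 60 m/s, target 200 m away at launch height, quadratic drag with a lumped coefficient k = 0.001 per metre, no wind. The launch angle comes from the vacuum range formula R = v² sin(2θ)/g; flying that angle with drag included (simple Euler integration) lands short.

```python
import math

G = 9.81        # m/s^2
V0 = 60.0       # hypothetical launch speed, m/s
TARGET = 200.0  # hypothetical target distance, m
K = 0.001       # hypothetical lumped quadratic-drag coefficient, 1/m

def vacuum_angle(distance, v0=V0):
    """Lower launch angle that reaches `distance` in a vacuum (same height)."""
    return 0.5 * math.asin(G * distance / v0 ** 2)

def range_with_drag(theta, v0=V0, k=K, dt=1e-3):
    """Horizontal distance when the arrow falls back below launch height (Euler steps)."""
    x, y = 0.0, 0.0
    vx, vy = v0 * math.cos(theta), v0 * math.sin(theta)
    while True:
        speed = math.hypot(vx, vy)
        # Drag acceleration opposes velocity, with magnitude k * speed^2.
        ax = -k * speed * vx
        ay = -G - k * speed * vy
        x, y = x + vx * dt, y + vy * dt
        vx, vy = vx + ax * dt, vy + ay * dt
        if y < 0.0:
            return x

theta = vacuum_angle(TARGET)
print(round(math.degrees(theta), 1), round(range_with_drag(theta), 1))
```

With k set to 0 the same integrator lands within about a metre of the target, so the shortfall comes from air resistance rather than from the numerical method.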

comment by RussellThor · 2025-01-27T20:28:13.654Z · LW(p) · GW(p)

Not sure if this is allowed, but you can aim at a rock or similar, say 10m away from the target (four miles from you), to get the bias (and the distribution, if multiple shots are allowed). Also, if the distribution is not totally normal but has smaller-than-normal tails, then you could aim off target with multiple shots to get the highest chance of hitting the target. For example, if the child is at head height, then aim for the target's feet, or even aim 1m below the target's feet, expecting that 1/100 shots will actually hit the target's legs but only, say, <1/1000 will hit the child. That is assuming an unrealistic number of shots, of course. If you have, say, 10 shots, then some combined strategy where you start by aiming far off target to get the bias and a crude estimate of the distribution, then steadily aim closer, could be optimal.
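A sketch of the calibration idea, assuming (hypothetically) that each sighting shot at the rock lands at true_bias plus Gaussian noise with spread sigma, and that the rock is at effectively the same range and conditions as the target. The sample mean of the observed offsets estimates the bias, with standard error sigma / sqrt(n), so a handful of shots only helps if sigma is not enormous compared with the targets.

```python
import math
import random

def estimate_bias(n_shots, true_bias, sigma, rng):
    """Average horizontal offset of n sighting shots at a reference rock."""
    offsets = [true_bias + rng.gauss(0.0, sigma) for _ in range(n_shots)]
    return sum(offsets) / n_shots

rng = random.Random(3)
TRUE_BIAS, SIGMA = 2.5, 4.0  # metres, hypothetical
for n in (1, 5, 10):
    est = estimate_bias(n, TRUE_BIAS, SIGMA, rng)
    print(f"{n} shots: estimated bias {est:+.2f} m, "
          f"standard error {SIGMA / math.sqrt(n):.2f} m")
```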