Some (problematic) aesthetics of what constitutes good work in academia

post by Steven Byrnes (steve2152) · 2024-03-11T17:47:28.835Z · LW · GW · 12 comments

Contents

- Part 1: The aesthetic of novelty / cleverness

- Example: my rant on “the psychology of everyday life”

- Example: Holden Karnofsky quote about academia

- More examples

- Part 2: The aesthetic of topicality (or more cynically, “trendiness”)

- General discussion

- A couple personal anecdotes from my physics experience

- “The other Hamming question”

- Extremely cynical tips to arouse academics’ interests

- Part 3: The aesthetic of effort

- Part 4: Some general points

- This obviously isn’t just about academia

- Aesthetics-of-success can be sticky due to signaling issues

- Aesthetics-of-success are invisible to exactly the people most impacted by them

- If your aesthetics-of-success are bad, so will be your “research taste”

- Homework problem

(Not-terribly-informed rant, written in my free time.)

Terminology note: When I say “an aesthetic”, I mean an intuitive (“I know it when I see it”) sense of what a completed paper, project, etc. is ideally “supposed” to look like. It can include both superficial things (the paper is properly formatted, the startup has high valuation, etc.), and non-superficial things (the theory is “elegant”, the company is “making an impact”, etc.).

Part 1: The aesthetic of novelty / cleverness

Example: my rant on “the psychology of everyday life”

(Mostly copied from this tweet)

I think if you want to say something that is:

- (1) true,

- (2) important, and

- (3) related to the psychology of everyday life,

…then it’s NOT going to conform to the aesthetic of what makes a “good” peer-reviewed academic psych paper.

The problem is that this particular aesthetic demands that results be (A) “novel”, and (B) “surprising”, in a certain sense. Unfortunately, if something satisfies (1-3) above, then it will almost definitely be obvious-in-hindsight, which (perversely) counts against (B); and it will almost definitely have some historical precedents, even if only in folksy wisdom, which (perversely) counts against (A).

If you find a (1-3) thing that is not “novel” and “surprising” per the weird peer-review aesthetic, but you have discovered a clearer explanation than before, or a crisper breakdown, or better pedagogy, etc., then good for you, and good for the world, but it’s basically useless for getting into top psych journals and getting prestigious jobs in psych academia, AFAICT. No wonder professional psychologists rarely even try.

Takeaway from the perspective of a reader: if you want to find things that are all three of (1-3), there are extremely rare, once-in-a-generation, academic psych papers that you should read, and meanwhile there’s also a giant treasure trove of blog posts and such. For example:

- Motivated reasoning is absolutely all three of (1-3). If you want to know more about motivated reasoning, don’t read psych literature, read Scout Mindset.

- Scope neglect is absolutely all three of (1-3). If you want to know more about scope neglect, don’t read psych literature, read blog posts about Cause Prioritization [? · GW].

- As it happens, I’ve been recently trying to make sense of social status and related behaviors. And none of the best sources I’ve found have been academic psychology— all my “aha” moments came from blog posts. And needless to say, whatever I come up with, I will also publish via blog posts. (Example [LW · GW].)

Takeaway from the perspective of an aspiring academic psychologist: What do you do? (Besides “rethink your life choices”.) Well, unless you have a once-in-a-generation insight, it seems that you need to drop at least one of (1-3):

- If you drop (3), then you can, I dunno, figure out some robust pattern in millisecond-scale reaction times or forgetting curves that illuminates something about neuroscience, or find a deep structure underlying personality differences, or solve the Missing Heritability Problem, etc.—anything where we don’t have everyday intuitions for what’s true. There are lots of good psych studies in this genre (…along with lots of crap, of course, just like every field).

- If you drop (2), then you can use very large sample sizes to measure very small effects that probably nobody ought to care about.

- If you drop (1), then you have lots of excellent options ranging from p-hacking to data fabrication, and you can rocket to the top of your field, give TED talks, sell books, get lucrative consulting deals, etc.

Example: Holden Karnofsky quote about academia

From a 2018 interview (also excerpted here [LW · GW]):

I would say the vast majority of what is going on in academic is people are trying to do something novel, interesting, clever, creative, different, new, provocative, that really pushes the boundaries of knowledge forward in a new way. I think that’s really important obviously and great thing. I’m really, incredibly glad we have institutions to do it.

I think there are a whole bunch of other activities that are intellectual, that are challenging, that take a lot of intellectual work and that are incredibly important and that are not that. They have nowhere else to live…

To give examples of this, I mean I think GiveWell is the first place where I might have initially expected that there was going to be development economics was going to tell us what the best charities are. Or, at least, tell us what the best interventions are. Tell us if bed nets, deworming, cash transfers, agricultural extension programs, education improvement programs, which ones are helping the most people for the least money. There’s really very little work on this in academia.

A lot of times, there will be one study that tries to estimate the impact of deworming, but very few or no attempts to really replicate it. It’s much more valuable [from the point-of-view of an academic] to have a new insight, to show something new about the world than to try and nail something down. It really got brought home to me recently when we were doing our Criminal Justice Reform work and we wanted to check ourselves. We wanted to check this basic assumption that it would be good to have less incarceration in the US.

David Roodman, who is basically the person that I consider the gold standard of a critical evidence reviewer, someone who can really dig on a complicated literature and come up with the answers, he did what, I think, was a really wonderful and really fascinating paper, which is up on our website, where he looked for all the studies on the relationship between incarceration and crime, and what happens if you cut incarceration, do you expect crime to rise, to fall, to stay the same? He really picked them apart. What happened is he found a lot of the best, most prestigious studies and about half of them, he found fatal flaws in when he just tried to replicate them or redo their conclusions.

When he put it all together, he ended up with a different conclusion from what you would get if you just read the abstracts. It was a completely novel piece of work that reviewed this whole evidence base at a level of thoroughness that had never been done before, came out with a conclusion that was different from what you naively would have thought, which concluded his best estimate is that, at current margins, we could cut incarceration and there would be no expected impact on crime. He did all that. Then, he started submitting it to journals. It’s gotten rejected from a large number of journals by now [laughter]. I mean starting with the most prestigious ones and then going to the less.…

More examples

- There’s a method to calculate how light bounces around multilayer thin films. It’s basic, college-level physics and has probably been known for more than 100 years. But the explanations I could find all had typos, and the computer implementations all had bugs. So when I was a physics grad student, I wrote out my own derivation and open-source implementation with scrupulous attention to detail. I treated that as a hobby project, and didn’t even mention it in my dissertation, because obviously that’s not the kind of exciting novel physics work that helps one advance in physics academia. But in terms of accelerating the field of solar cell R&D, it was probably far more impactful than any of my “real” solar-related grad-school projects. (More discussion here [LW · GW].)

- When I was in academia, sometimes there would be a controversy in the literature, and I would put in a ton of effort to figure out who is right, and then I figure it out to my satisfaction, and it turns out that one side is right about everything, and then … that’s it. There was nothing I could do with that information to help my nascent academic career. Obviously you can’t publish a peer-reviewed paper saying “Y’know that set of papers from 20 years ago by Prof. McBloop? They were all correct as written. All the later criticisms were wrong. Good job, Prof. McBloop!” (Sometimes figuring out something like that is indirectly useful, of course.) It would definitely work as a blog post, but if the goal is peer-reviewed papers and grants, figuring out these kinds of things is a waste of time except to the extent that it impacts “novel” follow-up work. And needless to say, if we systematically disincentivize this kind of activity, we shouldn’t be surprised that it doesn’t happen as much as it should.

- It’s extremely frequent for an academic to read an article and decide it’s wrong, but extremely rare for them to say that publicly, let alone submit a formal reply (a time-consuming and miserable process, apparently). I think there are a bunch of things that contribute to that, but one of them is that the goal is “big new exciting clever insights”—and “this paper is wrong” sure doesn’t sound like a big new exciting clever insight.

- Tweet by Nate Soares: “big progress often comes from lots of small reconceptualizations. the "i can't distinguish your idea from a worse one in the literature" police are punishing real progress.”

Part 2: The aesthetic of topicality (or more cynically, “trendiness”)

General discussion

When I was in physics academia (grad school and postdoc), I got a very strong sense that the community had a tacit shared understanding of the currently-trending topics / questions, within which there’s a contest to find interesting new ideas / progress.

Now, if you think about it, aside from commercially-relevant work, success for academic research scientists / philosophers / etc. is ≈100% determined by “am I impressing my peers?”—that’s how you get promoted, that’s how you get grants, that’s how you get prizes and other accolades, etc.

So, if I make great progress on Subtopic X, and all the prestigious people in my field don’t care about Subtopic X, that’s roughly just as bad for me and my career as if those people had unanimously said “this is lousy work”.

It’s a bit like in clothing fashion: if you design an innovative new beaded dress, but beads aren’t in fashion this season, then you’re not going to sell many dresses.

Of course, the trends change, and indeed everyone is trying to be the pioneer of the next hot topic. There are a lot of factors that go into “what is the next hot topic”, including catching the interest of a critical mass of respected people (or people-who-control-funding), which in turn involves them feeling it’s “exciting”, and that they themselves have an angle for making further progress in this area, etc.

A couple personal anecdotes from my physics experience

- When I was a grad student, “multiferroics” were really hot, partly due to a hope that they would enable new types of computer memory (which I think helped justify funding), and partly due to some cool new physics phenomena involving them (see Part 1 above). Separately, solar cell research was really hot, both because everyone wants to help with climate change and because you could get funding that way. I had an advisor running a multiferroics research group, and he shrewdly bought a lamp and put some multiferroics under it, and wouldn’t you know it, they had a photovoltaic effect. So what? Tons of materials do. It wasn’t a particularly strong effect, nor promising for future practical applications, but in terms of starting a trendy new physics / materials-science research area, it was bang-on. I was a coauthor on two papers related to this idea, and they now have 600 and 1700 citations respectively. Everyone involved got copious funding and promotions.

- When I was a postdoc, “metamaterials” were pretty hot, although maybe a bit past their peak by that point. Separately, “diffractive optical elements” were an ancient, boring technology that had long ago migrated from physicists-in-academia to optical-engineers-in-industry. Somebody figured out that there was an opening for a second wave of academic research on diffractive optical elements, aided by modern lithography and design tools. But they didn’t describe it that way! Instead they made up a new term “metasurface”, which sounds like it’s continuing the “metamaterial” conversation, but taking it in an exciting new direction, and by the way it’s very easy to make “metasurfaces” whereas metamaterials are a giant pain that few groups can build and experiment on. So tons of groups immediately jumped onto that bandwagon. The “metasurface” trend became huge, and everyone involved got copious funding and promotions. I am confident that this would not have happened if the original group had published the same results using the traditional term “diffractive optical element” instead of coining “metasurface”. (I’m leaving out parts of this story; and also, I’m describing it as deliberate crass marketing, when in fact it was mostly a happy accident, I think. But still, it illustrates some aspects of what makes a trendsetting physics idea.)

- Similarly, there’s a term “photonics” which is related to, but slightly different from, the term “optics”. But what really happens in practice is that everyone uses the term “photonics” whenever possible, because “photonics” sounds exciting and trendy, whereas “optics” sounds old and tired.

“The other Hamming question”

Richard Hamming famously asked [LW · GW] his colleagues “What are the important problems of your field?”. I think the important follow-up question should be “Are you sure?”

Actually, perhaps one could ask a series of questions:

- “What are the important problems of your field?”

- “What are the problems in your field that would be most prestigious for you to solve? In other words, what are the problems where, if you solved them, lots of people, and especially your own colleagues that you look up to, would be very impressed by you?”

- If those two lists are heavily overlapping, shouldn’t you be a little suspicious that you’re optimizing for impressiveness instead of really thinking about what’s “important”?

- And oh by the way, what criteria are you using to define the word “important”? If you didn’t already answer that question in the course of answering Question 1 a minute ago, then … what exactly were you doing when you were answering Question 1??

Of course, this latter question ultimately gets us into the field of Cause Prioritization [? · GW], which of course I think everyone in academia should take much more seriously. (Check out the “Effective Thesis” [? · GW] organization!)

Extremely cynical tips to arouse academics’ interests

Let’s say you’re working on a math problem that’s relevant to making safe and beneficial Artificial General Intelligence. And you want to get academic mathematicians to work on it. One might think that helping prevent human extinction would be motivation enough. Nope! Some things you might try are:

- If you see something beautiful and clever, consider not revealing directly that you have seen it, but rather find an already-prestigious mathematician, hint at it to them, and hope that they “discover it” for themselves and publish it. That way they’ll become invested in the health of that subfield, and help sell it to their colleagues.

- Make it sound connected to existing popular / prestigious math areas and open problems (ideally by finding and promoting actual legitimate connections, but branding and vibes can substitute in a pinch)

- Make it sound connected to future funding opportunities (ideally by finding and promoting actual legitimate future funding opportunities, but branding and vibes can substitute in a pinch)

The above is tongue-in-cheek—obviously I do not endorse conducting oneself in an undignified and manipulative manner, and I notice that I mostly don’t do any of these things myself, despite having a strong wish that more academic neuroscientists would work on certain problems that I care about [LW · GW].

Part 3: The aesthetic of effort

In competitive gymnastics, there’s no goal except to impress the judges. Consequently, the judges learn to be impressed by people perfectly executing skills that are conspicuously difficult to execute. And indeed, if too many people can perfectly execute a skill, then the judges stop being impressed by it, and instead look for more difficult skills.

I think there’s an echo of that dynamic in the context of academia and peer review.

My favorite example is that there’s a simple idea related to AI alignment, which was well explained in a couple sentences in a 2018 blog post by Abram Demski. (See “the easy problem of wireheading” here [LW · GW].) A few months after I read that, a DeepMind group published a 36-page arxiv paper (see also companion blog post) full of obvious signals of effort, including gridworld models, causal influence diagrams, and so on. But the upshot of that paper was basically the same idea as those couple sentences in a blog post.

My point in bringing that up is not that there was absolutely no value-add in the extra 35.9 pages going from the sentences-in-a-blog-post to the arxiv paper. Of course there was! My point is rather that (1) those blog post sentences would have been at least as helpful as the paper for most of the paper’s audience, and (2) despite the value of those blog post sentences, they could not possibly have been published in a peer-reviewed, citable, CV-enhancing way. It just looks too simple. It does not match “the aesthetic of effort”.

Another example: There was a nice 2020 paper by Rohin Shah, Stuart Russell, et al., “Benefits of assistance over reward learning”. It was helpfully explaining a possibly-confusing conceptual point. It would have made a nice little blog post. Alas! After the authors translated their nice little conceptual clarification into academic-ese, including thorough literature reviews, formalizations, and so on, it came out to 22 pages. (UPDATE: Rohin comments [LW(p) · GW(p)] that “I don't think the main paper would have been much shorter if we'd aimed to write a blog post…”. I apologize for the error.) And then it got panned by peer reviewers, mostly for not being sufficiently surprising and novel. So maybe this example mostly belongs in Part 1 above. But I have a strong guess that the reviewers were also unhappy that even those 22 pages did not demonstrate enough performative effort. For example, one reviewer complained that “there were no computational results shown in the main paper”. This reviewer didn’t say anything about why computational results would have helped make the paper better! The absence of computational results was treated as self-evidently bad.

(Needless to say, I’m not opposed to conspicuously-effortful things!! Sometimes that’s the best way to figure out something important. I’m just saying that conspicuous effort, in and of itself, should be treated by everyone as a cost, not a benefit.)

Part 4: Some general points

This obviously isn’t just about academia

For example, a recent post by @bhauth [LW · GW], entitled “story-based decision making” [LW · GW] has a fun discussion of some of the “aesthetics” subconsciously used by investors when they judge startup company pitches.

Aesthetics-of-success can be sticky due to signaling issues

If Bob does something that fails by the usual standards-of-success, nobody can tell whether Bob could have succeeded by the usual standards-of-success if he had wanted to, and simply chose not to because he’s marching to the beat of a different drummer, or whether Bob just isn’t as skillful and hardworking as other people. So there’s a lemons problem.

Aesthetics-of-success are invisible to exactly the people most impacted by them

There’s a tendency to buy into these aesthetics and see them as the obviously appropriate and correct way to judge success, as opposed to contingent cultural impositions.

People generally only become aware of an aesthetic-of-success when they rebel against it. Otherwise they’re blind to the fact that it exists at all. I’m sure that the three items above are three out of a much longer list of “aesthetics of what constitutes good work in academia”. But those three have always annoyed me, so of course I am hyper-aware of them.

To illustrate this blindness, consider:

- “That’s not ‘trendy’! It’s just ‘good important work’!”, says the scientist.

- “That’s not ‘trendy’! It’s just ‘beautiful and chic’!”, says the clothing designer.

- “That’s not ‘performative effort to signal technical skill’! It’s just ‘being thorough and careful’!” says the scientist.

- “That’s not ‘performative effort to signal technical skill’! It’s just ‘elegant and impressive’” says the Olympic gymnast.

(One time I suggested to a friend in the construction industry that future generations would view all-glass office buildings, greige interiors, etc., as “very 2020s”, and he gave me a look, like that thought had never crossed his mind before. To him, other decades have characteristic style trends reflecting the fickle winds of fashion and culture, but ours? Of course not. We merely design things in the natural, objectively-sensible way!)

If your aesthetics-of-success are bad, so will be your “research taste”

People on this forum often talk [? · GW] about “developing research taste”. The definition of “good research taste” is “ability to find research directions that will lead to successful projects”. Therefore, if your “aesthetic sense of what a successful project would ideally wind up looking like” is corrupted, your notion of “good research taste” will wind up corrupted as well—optimized towards a bad target.

Homework problem

What “aesthetics” are you using to recognize success in your own writing, projects, and other pursuits? And what kinds of problematic distortions might they lead to?

12 comments

Comments sorted by top scores.

comment by Seth Herd · 2024-03-11T23:16:45.381Z · LW(p) · GW(p)

I think the structure of Alignment Forum vs. academic journals solves a surprising number of the problems you mention. It creates a different structure for both publication and prestige. More on this at the end.

It was kind of cathartic to read this. I've spent some time thinking about the inefficiencies of academia, but hadn't put together a theory this crisp. My 23 years in academic cognitive psychology and cognitive neuroscience would have been insanely frustrating if I hadn't been working on lab funding. I resolved going in that I wasn't going to play the publish-or-perish game and jump through a bunch of strange hoops to do what would be publicly regarded as "good work".

I think this is a good high-level theory of what's wrong with academia. I think one problem is that academic fields don't have a mandate to produce useful progress, just progress. It's a matter of inmates running the asylum. This all makes some sense, since the routes to making useful progress aren't obvious, and non-experts shouldn't be directly in charge of the directions of scientific progress; but there's clearly something missing when no one along the line has more than a passing motivation to select problems for impact.

Around 2006 I heard Tal Yarkoni, a brilliant young scientist, give a talk on the structural problems of science and its publication model. (He's now an ex-scientist, as many brilliant young scientists become these days.) The changes he advocated were almost precisely the publication and prestige model of the Alignment Forum. It allows publications of any length and format, and provides a public time stamp for when ideas were contributed and developed. It also provides a public record, in the form of karma scores, for how valuable the scientific community found that publication. This only works in a closed community of experts, which is why I'm mentioning AF and not LW. One's karma score is publicly visible as a sum-total-of-community-appreciation of that person's work.

This public record of appreciation breaks an important deadlocking incentive structure in the traditional scientific publication model: If you're going to find fault with a prominent theory, your publication of it had better be damned good (or rather "good" by the vague aesthetic judgments you discuss). Otherwise you've just earned a negative valence from everyone who likes that theory and/or the people that have advocated it, with little to show for it. I think that's why there's little market for the type of analysis you mention, in which someone goes through the literature in painstaking detail to resolve a controversy in the literature, and then finds no publication outlet for their hard work.

This is all downstream of the current scientific model that's roughly an advocacy model. As in law, it's considered good and proper to vigorously advocate for a theory even if you don't personally think it's likely to be true. This might make sense in law, but in academia it's the reason we sometimes say that science advances one funeral at a time. The effect of motivated reasoning combined with the advocacy norm causes scientists to advocate their favorite wrong theory unto their deathbed, and be lauded by most of their peers for doing so.

The rationalist stance of asking that people demonstrate their worth by changing their mind in the face of new evidence is present in science, but it seemed to me much less common than the advocacy norm. This rationalist norm provides partial resistance to the effects of motivated reasoning. That is worth its own post, but I'm not sure I'll get around to writing it before the singularity.

These are all reasons that the best science is often done outside of academia.

Anyway, nice thought-provoking article.

Replies from: sharmake-farah

↑ comment by Noosphere89 (sharmake-farah) · 2024-03-12T22:17:26.955Z · LW(p) · GW(p)

Yeah, something like the Alignment Forum would actually be pretty good, and while LW/AF has a lot of problems, most of them are attributable to the people and culture around here rather than to the platform itself.

LW/AF tools would be extremely helpful for a lot of scientists, once you divorce the culture from it.

comment by Nathan Young · 2024-03-15T00:23:30.344Z · LW(p) · GW(p)

When he put it all together, he ended up with a different conclusion from what you would get if you just read the abstracts. It was a completely novel piece of work that reviewed this whole evidence base at a level of thoroughness that had never been done before, came out with a conclusion that was different from what you naively would have thought, which concluded his best estimate is that, at current margins, we could cut incarceration and there would be no expected impact on crime. He did all that. Then, he started submitting it to journals. It’s gotten rejected from a large number of journals by now [laughter]. I mean starting with the most prestigious ones and then going to the less.…

Why doesn't OpenPhil found a journal? Feels like they could say it's the journal of last resort initially but it probably would pick up status, especially if it contained only true, useful and relevant things.

comment by LGS · 2024-03-12T21:29:36.179Z · LW(p) · GW(p)

I'd say that LessWrong has an even stronger aesthetic of effort than academia. It is virtually impossible to have a highly-voted lesswrong post without it being long, even though many top posts can be summarized in as little as 1-2 paragraphs.

Replies from: steve2152

↑ comment by Steven Byrnes (steve2152) · 2024-03-12T22:27:01.459Z · LW(p) · GW(p)

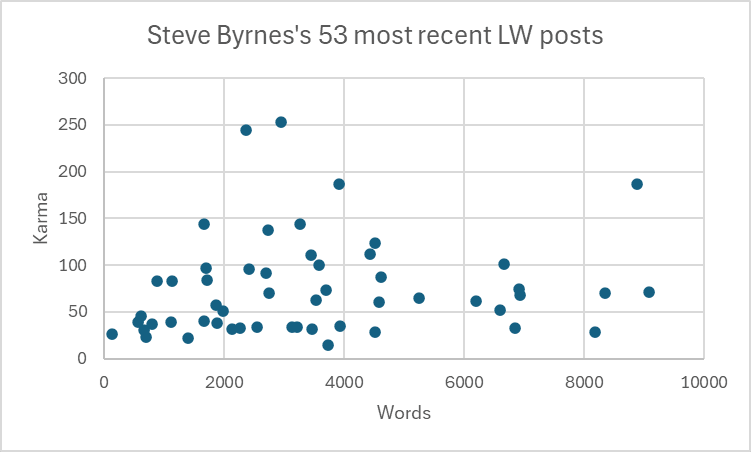

Hmm, I notice a pretty strong negative correlation between how long it takes me to write a blog post and how much karma it gets. For example, very recently I spent like a month of full-time work to write two posts on social status (karma = 71 [LW · GW] & 36 [LW · GW]), then I took a break to catch up on my to-do list, in the course of which I would sometimes spend a few hours dashing off a little post, and there have been three posts in that category, and their karma is 57 [LW · GW], 60 [LW · GW], 121 (this one). So, 20ish times less effort, somewhat more karma. This is totally in line with my normal expectations.

I think that’s because if I’m spending a month on a blog post then it’s probably going to be full of boring technical details such that it’s not fun to read, and if I spend a few hours on a blog post like this one, it’s gonna consist of stories and rants and wild speculation and so on, which is more fun to read.

In terms of word count, here you go, I did the experiment:

I could make a long list of “advice” to get lots of lesswrong karma (but that probably actually makes a post less valuable), but I don’t think “conspicuous signals of effort” would be one of them. Instead it would be things like: Give it a clickbaity title & intro, Make it about an ongoing hot debate (e.g. the eternal battle between “yay Eliezer” vibes versus “boo Eliezer” vibes, or the debate over whether p(doom) is high versus low, AGI timelines, etc.), Make it reinforce popular rationalist tribal beliefs (yay YIMBY, boo FDA, etc.—this post is an example), make it an easy read, don’t mention AI because the AI tag gets penalized by default in the frontpage ranking, etc. My impression is that length per se is not particularly rewarded in terms of LW karma, and that the kind of “rigor” that would be well-received in peer-review (e.g. comprehensive lit reviews) is a negative in terms of lesswrong karma.

Of course this is stupid, because karma is meaningless internet points, and the obvious right answer is to basically not care about lesswrong karma in the first place. Instead I recommend metrics like “My former self would have learned a lot from reading this” or “This constitutes meaningful progress on such-and-such long-term project that I’m pursuing and care about”. For example, I have a number of super-low-karma posts that I feel great pride and affinity towards. I am not doing month-long research projects because it’s a good way to get karma, which it’s not, but rather because it’s a good way to make progress on my long-term research agenda. :)

Replies from: kave, LGS

↑ comment by kave · 2024-03-13T00:54:19.024Z · LW(p) · GW(p)

How many people read your post is probably meaningful to you, and karma affects that a lot.

I say this because I certainly care about how many people read which posts, so it's kind of sad when karma doesn't track value in the post (though of course brevity and ease of reading are also important and valuable).

↑ comment by LGS · 2024-03-13T06:02:40.099Z · LW(p) · GW(p)

This is interesting, but how do you explain the observation that LW posts are frequently much much longer than they need to be to convey their main point? They take forever to get started ("what this is NOT arguing: [list of 10 points]" etc) and take forever to finish.

Replies from: steve2152

↑ comment by Steven Byrnes (steve2152) · 2024-03-13T12:56:28.773Z · LW(p) · GW(p)

Point 1: I think “writing less concisely than would be ideal” is the natural default for writers, so we don’t need to look to incentives to explain it. Pick up any book of writing advice and it will say that, right? “You have to kill your darlings”, “If I had more time, I would have written a shorter letter”, etc.

Point 2: I don’t know if this applies to you-in-particular, but there’s a systematic dynamic where readers generally somewhat underestimate the ideal length of a piece of nonfiction writing. The problem is, the writer is writing for a heterogeneous audience of readers. Different readers are coming in with different confusions, different topics-of-interest, different depths-of-interest, etc. So you can imagine, for example, that every reader really only benefits from 70% of the prose … but it’s a different 70% for different readers. Then each individual reader will be complaining that it’s unnecessarily long, but actually it can’t be cut at all without totally losing a bunch of the audience.

(To be clear, I think both of these are true—Point 2 is not meant as a denial of Point 1; not all extra length is adding anything. I think the solution is to both try to write concisely and make it easy for the reader to recognize and skip over the parts that they don’t need to read, for example with good headings and a summary / table-of-contents at the top. Making it fun to read can also somewhat substitute for making it quick to read.)

comment by Rohin Shah (rohinmshah) · 2024-03-13T13:32:53.056Z · LW(p) · GW(p)

"It was helpfully explaining a possibly-confusing conceptual point. It would have made a nice little blog post. Alas! After the authors translated their nice little conceptual clarification into academic-ese, including thorough literature reviews, formalizations, and so on, it came out to 22 pages."

Fwiw I don't think the main paper would have been much shorter if we'd aimed to write a blog post instead, unless we changed our intended audience. It's a sufficiently nuanced conceptual point that you do need most of the content that is in there.

We could have avoided the appendices, but then we're relying on people to trust us when we make a claim that something is a theorem, since we're not showing the proof. We could have avoided implementing the examples in a real codebase, though I do think iterating on the examples in actual code made them better, and also people wouldn't have believed us when we said you can solve this with deep RL (in fact even after we actually implemented it some people still didn't believe me, or at least were very confused, when I said that).

Iirc I was more annoyed by the peer reviews for similar reasons to what you say.

(Btw you can see some of my thoughts on this topic in the answer to "So what does academia care about, and how is it different from useful research?" in my FAQ.)

↑ comment by Steven Byrnes (steve2152) · 2024-03-13T14:09:33.518Z · LW(p) · GW(p)

“Fwiw I don't think the main paper would have been much shorter if we'd aimed to write a blog post instead…”

Oops. Thanks. I should have checked more carefully before writing that. I was wrong and have now put a correction into the post.

comment by Michael Roe (michael-roe) · 2024-03-11T19:35:06.396Z · LW(p) · GW(p)

As someone who has worked in both academia and industrial research labs, I've found that in both cases you can claim either academic publication or real-world impact as a success wrt getting promoted ...

a) I got a paper about this published in a top-ranking journal; vs,

b) look, those guys are now selling a product based on this thing I invented

(in an industrial research lab, "those guys" had better be the product division of your company; if you're an academic funded by DARPA, "those guys" being anyone who is paying taxes to the US government is just great)

comment by Review Bot · 2024-03-12T20:48:41.698Z · LW(p) · GW(p)

The LessWrong Review runs every year to select the posts that have most stood the test of time. This post is not yet eligible for review, but will be at the end of 2025. The top fifty or so posts are featured prominently on the site throughout the year.

Hopefully, the review is better than karma at judging enduring value. If we have accurate prediction markets on the review results, maybe we can have better incentives on LessWrong today. Will this post make the top fifty?