[Intro to brain-like-AGI safety] 15. Conclusion: Open problems, how to help, AMA

post by Steven Byrnes (steve2152) · 2022-05-17T15:11:12.397Z · 10 comments


(Last revised: July 2024. See changelog at the bottom.)

15.1 Post summary / Table of contents

This is the final post of the “Intro to brain-like-AGI safety” post series! Thanks for reading this far!

- In Section 15.2, I’ll list seven open problems that came up in the previous posts. I’m putting them all here in one place for the convenience of potential researchers and funders.

- In Section 15.3, I’ll offer some brief remarks on practical aspects of doing AGI safety (a.k.a. AI alignment) research, including funding sources, connecting to the relevant research community, and where to learn more.

- In Section 15.4, I’ll wrap up with 8 takeaway messages that I hope readers will have gotten out of this series.

Since this is the “Conclusion” post, feel free to use the comment section for more general discussion (or to “ask me anything”), even if it’s not related to this particular post.

15.2 Open problems

This is not, by any stretch of the imagination, a complete list of open problems whose progress would help with brain-like-AGI safety, let alone with the more general topic of Safe & Beneficial AGI (see Post #1, Section 1.2). Rather, these are just some of the topics that came up in this series, with ratings proportional to how enthusiastic I am about them.

I’ll split the various open problems into three categories: “Open problems that look like normal neuroscience”, “Open problems that look like normal computer science”, and “Open problems that involve explicitly talking about AGIs”. This division is for readers’ convenience; you might, for example, have a boss, funding source, or tenure committee who thinks that AGI safety is stupid, and in that case you might want to avoid the third category. (However, don’t give up so soon; see discussion in Section 15.3.1 below.)

15.2.1 Open problems that look like normal neuroscience

15.2.1.1 The “Is Steve full of crap when he talks about neuroscience?” research program — ⭐⭐⭐⭐

If you didn’t notice, Posts #2–#7 are full of grand theorizing and bold claims about how the human brain works. It would be nice to know if those claims are actually true!!

If those neuroscience posts are a bunch of baloney, then I think we should throw out not only those posts, but the whole rest of this series too.

In the text of those posts, you’ll see various suggestions and pointers as to why I believe the various neuroscience claims that I made. But a careful, well-researched analysis has yet to be written, as far as I’m aware. (Or if it has, send me a link! Nothing would make me happier than learning that I’m reinventing the wheel by saying things that are already well-established and widely-accepted.)

I give this research program a priority score of 4 stars out of 5. Why not 5? Two things:

- It loses half a star because I have utterly-unjustifiable overconfidence that my neuroscience claims are not, in fact, a bunch of baloney, and therefore this research program would look more like nailing down some of the finer details, and less like throwing this whole post series in the garbage.

- It loses another half star because I think there are some delicate corners of this research program where it gets uncomfortably close to the “unravel the gory details of the brain’s learning-from-scratch algorithms” research program, a research program to which I assign negative 5 stars, because I’d like to make more progress on how and whether we can safely use a brain-like AGI, long before we figure out how to build one. (See Differential Technology Development discussion in Post #1, Section 1.7.)

15.2.1.2 The “Reverse-engineer human social instincts” research program — ⭐⭐⭐⭐⭐

Assuming that Posts #2–#7 are not, in fact, a bunch of baloney, the implication is that there are circuits for various “innate reactions” that underlie human social instincts, they are located somewhere in the “Steering Subsystem” part of the brain (roughly the hypothalamus and brainstem), and they are relatively simple input-output functions. The goal: figure out exactly what those input-output functions are, and how they lead (after within-lifetime learning) to our social and moral thoughts and behaviors.

See Post #12 for why I think this research program is very good for AGI safety, and Post #13 for more discussion of roughly what kinds of circuits and explanations we should be looking for.

Here’s a (somewhat caricatured) more ML-oriented perspective on this same research program: It’s widely agreed that the human brain’s within-lifetime learning algorithm involves reinforcement learning (RL); for example, after you touch the hot stove once, you don’t do it again. As with any RL algorithm, we can ask two questions:

- Question A: How does the brain’s RL algorithm work?

- Question B: What exactly is the reward function?

These questions are (more-or-less) independent. For example, to study question A experimentally, you don’t need a full answer to question B; all you need is at least one way to create a positive reward, and at least one way to create a negative reward, to use in your experiments. That’s easy: Rats like eating cheese, and rats dislike getting electrocuted. Done!
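To make the independence of questions A and B concrete, here is a minimal toy sketch in Python. It is an illustration only, not a model of the brain: the names `rat_reward` and `train`, the two-lever environment, and all parameter values are hypothetical. The learning rule (question A) is a generic bandit-style incremental value update with epsilon-greedy exploration, and the reward function (question B) is passed in as an ordinary argument, so either can be swapped without touching the other.

```python
import random
from typing import Callable, Dict

# Question B (the reward function): a hypothetical toy mapping from outcomes
# to scalar rewards, standing in for "rats like cheese, dislike shocks".
def rat_reward(outcome: str) -> float:
    return {"cheese": 1.0, "shock": -1.0}.get(outcome, 0.0)

# Question A (the learning algorithm): a generic bandit-style value learner.
# It only ever sees scalar rewards, never what they mean.
def train(reward_fn: Callable[[str], float],
          env: Dict[str, str],
          steps: int = 500,
          lr: float = 0.1,
          epsilon: float = 0.1,
          seed: int = 0) -> Dict[str, float]:
    rng = random.Random(seed)
    values = {action: 0.0 for action in env}  # estimated value of each action
    for _ in range(steps):
        # Epsilon-greedy: usually exploit the best-looking action, sometimes explore.
        if rng.random() < epsilon:
            action = rng.choice(list(env))
        else:
            action = max(values, key=values.get)
        reward = reward_fn(env[action])  # env maps each action to its outcome
        # Move the estimate a fraction lr of the way toward the observed reward.
        values[action] += lr * (reward - values[action])
    return values

# Hypothetical two-lever environment: each action deterministically yields one outcome.
levers = {"press_left_lever": "cheese", "press_right_lever": "shock"}
learned = train(rat_reward, levers)
print(learned)  # the cheese lever ends up with the higher value
```

Passing `lambda outcome: -rat_reward(outcome)` instead makes the same, unchanged learning rule end up preferring the shock lever. That is the sense in which studying the learning rule tells you little about which reward function is actually installed.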

My impression is that neuroscientists have produced many thousands of papers on question A, and practically none directly addressing question B. But I think question B is much more important for AGI safety. And the social-instincts-related parts of the reward function, which are upstream of morality-related intuitions, are most important of all.

I give this research program a priority score of 5 stars out of 5, for reasons discussed in Posts #12–#13.

15.2.2 Open problems that look like normal computer science

15.2.2.1 The “Make the biggest and best open-source human-legible world-model / web-of-knowledge that we can” research program — ⭐⭐⭐

I first talked about this in a post “Let’s buy out Cyc, for use in AGI interpretability systems?” (Despite the post title, I’m not overly tied to Cyc in particular; if today’s machine learning magic can get the same job done better and cheaper, that’s great.)

I expect that future AGIs will build and continually expand their own world-models, and those world-models will eventually grow to terabytes of information and beyond, and will include brilliant innovative concepts that humans have never thought of, and can’t understand without years of study (or at all). Basically, we’ll have our work cut out for us in making sense of an AGI’s world-model. So what do we do? (No, “run away screaming” isn’t an option.) It seems to me that if we have our own giant human-legible world-model, that would be a powerful tool in our arsenal as we attack the problem of understanding the AGI’s world-model. The bigger and better the human-legible world-model, the more helpful it would be.

To be more specific, in previous posts I’ve mentioned three reasons that having a huge, awesome, open-source human-legible world-model might be helpful:

- For non-learning-from-scratch initialization: see Post #11, Section 11.3.1. By default, I expect that an AGI’s world-model and Thought Assessors (roughly, RL value function) will be “learned from scratch” in the Post #2 sense. That means that an “infant AGI” will be thrashing around in the best case, and doing dangerous planning against our interests in the worst case, as we try to sculpt its preferences in a human-friendly direction. It would be awfully nice if we could avoid initializing from scratch, and thereby sidestep that problem. It’s far from clear to me that a non-learning-from-scratch approach will be possible at all, but if it is, having a huge awesome human-legible world-model at our disposal would presumably help.

- As a list of concept labels for “ersatz interpretability”: see Post #14, Section 14.2.3. Cyc, for example, has hundreds of thousands of concepts, which are considerably more specific than English-language words; for example, a single word with 10 definitions would get split into 10 Cyc concepts with 10 different names. If we have a nice concept-list like that, and we have a bunch of labeled examples, then we can use supervised learning (or more simply, cross-correlation) to look for signs that particular patterns of AGI neural net activations are related to that AGI “thinking about” certain concepts.

- As a “reference world-model” for “real” (or even rigorous) interpretability: see Post #14, Section 14.5. This would involve digging deeper into both an AGI’s world-model and the open-source human-legible “reference world-model”, finding areas of deep structural similarity that overlap with the cross-correlations mentioned above, and inferring that these are really talking about the same aspects of the world. As discussed in that post, I give this a low probability of success (related: discussion of “ontology mismatches” here), but extremely high reward if it does succeed.
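The simplest version of the “ersatz interpretability” idea (cross-correlating concept labels with activations) can be sketched as follows, assuming we already have a matrix of recorded activations and a per-example 0/1 label saying whether a given concept was present. The function names and toy numbers are hypothetical, and plain zero-lag Pearson correlation is used here as a stand-in for “cross-correlation”. A high score is only a hint that a unit is related to a concept, which is exactly why this counts as ersatz rather than real interpretability.

```python
import math
from typing import List, Sequence, Tuple

def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length sequences of length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)

def rank_units_for_concept(activations: List[List[float]],
                           concept_present: List[int]) -> List[Tuple[int, float]]:
    """Score every unit by how strongly its activation tracks the concept label.

    activations[i][u] is the (hypothetical) activation of unit u on example i;
    concept_present[i] is 1 if a labeler said the concept applies to example i.
    Returns (unit_index, correlation) pairs, strongest |correlation| first.
    """
    num_units = len(activations[0])
    scores = []
    for u in range(num_units):
        column = [row[u] for row in activations]
        scores.append((u, pearson(column, concept_present)))
    return sorted(scores, key=lambda s: abs(s[1]), reverse=True)

# Toy data: 4 labeled examples, 3 units.
acts = [[0.9, 0.5, 0.1],
        [0.1, 0.5, 0.9],
        [0.8, 0.4, 0.2],
        [0.2, 0.6, 0.7]]
labels = [1, 0, 1, 0]
for unit, r in rank_units_for_concept(acts, labels):
    print(f"unit {unit}: r = {r:+.2f}")
```

On this toy data, unit 0 ranks first (r ≈ +0.99), followed by unit 2 (r ≈ -0.97) and unit 1 (r ≈ -0.71). Units are ranked by absolute correlation because a strongly negative correlation is just as informative as a positive one. With only four examples, large correlations can easily arise by chance, so any real use would need far more labeled data and held-out checks.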

I give this research program a priority score of 3 stars out of 5, because I don’t have super high confidence that any of those three stories are both real and extremely impactful. I dunno, maybe there’s a 50% chance that, even if we had a super-awesome open-source human-legible world-model, future AGI programmers wouldn’t wind up using it, or else that it would only be marginally better than a mediocre open-source human-legible world-model.

(Note that other people also want really good open-source human-legible world-models for different reasons that don’t overlap with my own—e.g. as discussed here. That’s great! All the more reason to work on this!)

15.2.2.2 The “Easy-to-use super-secure sandbox for AGIs” research program — ⭐⭐⭐

Recall from above: By default, I expect that an AGI’s world-model and Thought Assessors (roughly, RL value function) will be “learned from scratch” in the Post #2 sense. That means that an “infant AGI” will be thrashing around in the best case, and doing dangerous planning against our interests in the worst case, as we try to sculpt its preferences in a human-friendly direction.

Given that, it would be nice to have a super-secure sandbox environment in which the “infant AGI” can do whatever learning it needs to do without escaping onto the internet or otherwise causing chaos.

Some possible objections:

- Possible Objection #1: A perfectly secure sandbox is not realistic. That might be true, I dunno. But I’m not talking about security against a superintelligent AGI, but rather against an “infant AGI” whose motivations and understanding of the world are still in flux. In that context, I think a more-secure sandbox is meaningfully better than a less-secure sandbox, even if neither is perfect. By the time the AGI is powerful enough to escape any imperfect sandbox, we’ll have already (hopefully!) installed in it the motivation not to do so.

- Possible Objection #2: We can already make a reasonably (albeit imperfectly) secure sandbox. Again, that might be true; I wouldn’t know either way. But I’m especially interested in whether future AGI programmers will actually use the best secure sandbox that we can build, under deeply cynical assumptions about the motivation and security skills of those programmers. (Related: “alignment tax”.) That means that the super-secure sandbox needs to be polished, to be decked out with every feature that anyone could possibly want, to be user-friendly, to carry negligible performance penalty, and to be compatible with every aspect of how programmers actually train and run massive machine learning jobs. I suspect that there’s room for improvement on all these counts.

I give this research program a priority score of 3 stars out of 5, mostly because I don’t know that much about this topic, and therefore I don’t feel comfortable being its outspoken champion.

(As in the previous section, there are meanwhile other people who also want easy-to-use super-secure sandboxes for AGIs, for different reasons than I do, such as here. Great! All the more reason to get going!)

15.2.3 Open problems that involve explicitly talking about AGIs

15.2.3.1 The “Edge-cases / conservatism / concept extrapolation” research program — ⭐⭐⭐⭐⭐

Humans can easily learn the meaning of abstract concepts like “being a rock star”, just by observing the world, pattern-matching to previously-seen examples, etc. Moreover, having learned that concept, humans can want (assign positive valence to) that concept, mainly as a result of repeatedly getting reward signals while that concept was active in their mind (see Post #9, Section 9.3). This seems to suggest a general strategy for controlling brain-like AGIs: prod the AGIs to learn particular concepts like “being honest” and “being helpful” via labeled examples, and then ensure that those concepts get positive valence, and then we’re done!

However, concepts are built out of a web of statistical associations, and as soon as we go to out-of-distribution edge-cases, those associations break down, and so does the concept. If there’s a religious fundamentalist who believes in a false god, are you being “helpful” if you deconvert them? The best answer is “I don’t know, it depends on exactly what you mean by ‘helpful’”. Such an action matches well to some of the connotations / associations of the “helpfulness” concept, but matches quite poorly to other connotations / associations.

So prodding the AGI to learn and like certain abstract concepts seems like the start of a good plan, but only if we have a principled approach to making the AGI refine those concepts, in a way we endorse, upon encountering edge-cases. And here, I don’t have any great ideas.

See Post #14, Section 14.4 for further discussion.

Side note: If you’re really motivated by this research program, one option might be applying for a job at AlignedAI. Their co-founder Stuart Armstrong originally suggested “concept extrapolation” as a research program (and coined the term), and I believe that this is their main research focus. Given Stuart Armstrong’s long history of rigorous thinking about AGI safety, I’m cautiously optimistic that AlignedAI will work towards solutions that will scale to the superintelligent AGIs of tomorrow, instead of just narrowly targeting the AI systems of today, as happens far too often.

I give this research program a priority score of 5 stars out of 5. Solving this problem would get us at least much of the way towards knowing how to build “Controlled AGIs” (in the Post #14 sense).

15.2.3.2 The “Rigorously prove anything whatsoever about the meaning of things in a learned-from-scratch world-model” research program — ⭐⭐⭐⭐⭐

The brain-like AGI will presumably learn-from-scratch a giant multi-terabyte unlabeled generative world-model. The AGI’s goals and desires will all be defined in terms of the contents of that world-model (Post #9, Section 9.2). And ideally, we’d like to make confident claims, or better yet prove theorems, about the AGI’s goals and desires. Doing so would seem to require proving things about the “meaning” of the entries in this complicated, constantly-growing world-model. How do we do that? I don’t know.

See discussion in Post #14, Section 14.5.

There’s some work in this general vicinity at the Alignment Research Center, which does excellent work and is hiring. (See the discourse on ELK.) And see also the various lines of research discussed in Towards Guaranteed Safe AI (Dalrymple et al., 2024). But as far as I know, making progress here is a hard problem that needs new ideas, if it’s even possible.

I give this research program a priority score of 5 stars out of 5. Maybe it’s intractable, but it sure as heck would be impactful. It would, after all, give us complete confidence that we understand what an AGI is trying to do.

15.2.3.3 The “Solving the whole problem” research program — ⭐⭐⭐⭐⭐

This is the sort of thing I was doing in Posts #12 and #14. We need to tie everything together into a plausible story, figure out what’s missing, and crystallize how to move forward. If you read those posts, you’ll see that there’s a lot of work yet to do; for example, we need a much better plan for training data / training environments, and I didn’t even mention important ingredients like sandbox test protocols. But many of the design considerations seem to be interconnected, such that I can’t easily split them out into multiple different research programs. So this is my catch-all category for all that stuff. (Also, don’t forget from Post #1, Section 1.2 that this series is restricted to the technical AGI safety problem, and there’s a whole separate horrific minefield surrounding who will build AGI, what they will do with it, what about careless actors, etc.)

(See also: Research productivity tip: “Solve The Whole Problem Day”.)

I give this research program a priority score of 5 stars out of 5, for obvious reasons.

15.3 How to get involved

(Warning: this section may become rapidly out-of-date. Last updated July 2024.)

15.3.1 Funding situation

If you care about AGI safety (a.k.a. “AI alignment”), and your goal is to help with AGI safety, it’s extremely nice to get funding from a funding source that has the same goal.

Of course, it’s also possible to get funding from more traditional sources, e.g. government science funding, and use it in an AGI-safety-promoting way. But then you have to strike a compromise between “things that would help AGI safety” and “things that would impress / satisfy the funding source”. My advice and experience is that this kind of compromise is really bad. I spent some time exploring this kind of compromise strategy early on in my journey into AGI safety; I had been warned that it was bad, and I still dramatically underestimated just how bad it was. If it’s any indication, I wound up hobby-blogging about AGI safety in little bits of free time squeezed between a full-time job and two young kids, and I think that was dramatically more useful than if I had devoted all day every day to my best available “compromise” project. (More on that here.)

(You can replace “compromise in order to satisfy my funding source” with “compromise in order to satisfy my thesis committee”, or “compromise in order to satisfy my boss”, or “compromise in order to have an impressive CV for my future job search / tenure review”, etc., as appropriate.)

Anyway, as luck would have it, there are numerous funding sources that are explicitly motivated by AGI safety. They’re mostly a handful of philanthropic foundations, along with sporadic grant offerings from governments or companies. Funding for technical AGI safety (the topic of this series) seems to be in the tens of millions of dollars a year right now, maybe, depending in large part on your own particular spicy hot take about what does or doesn’t count as real technical AGI safety research.

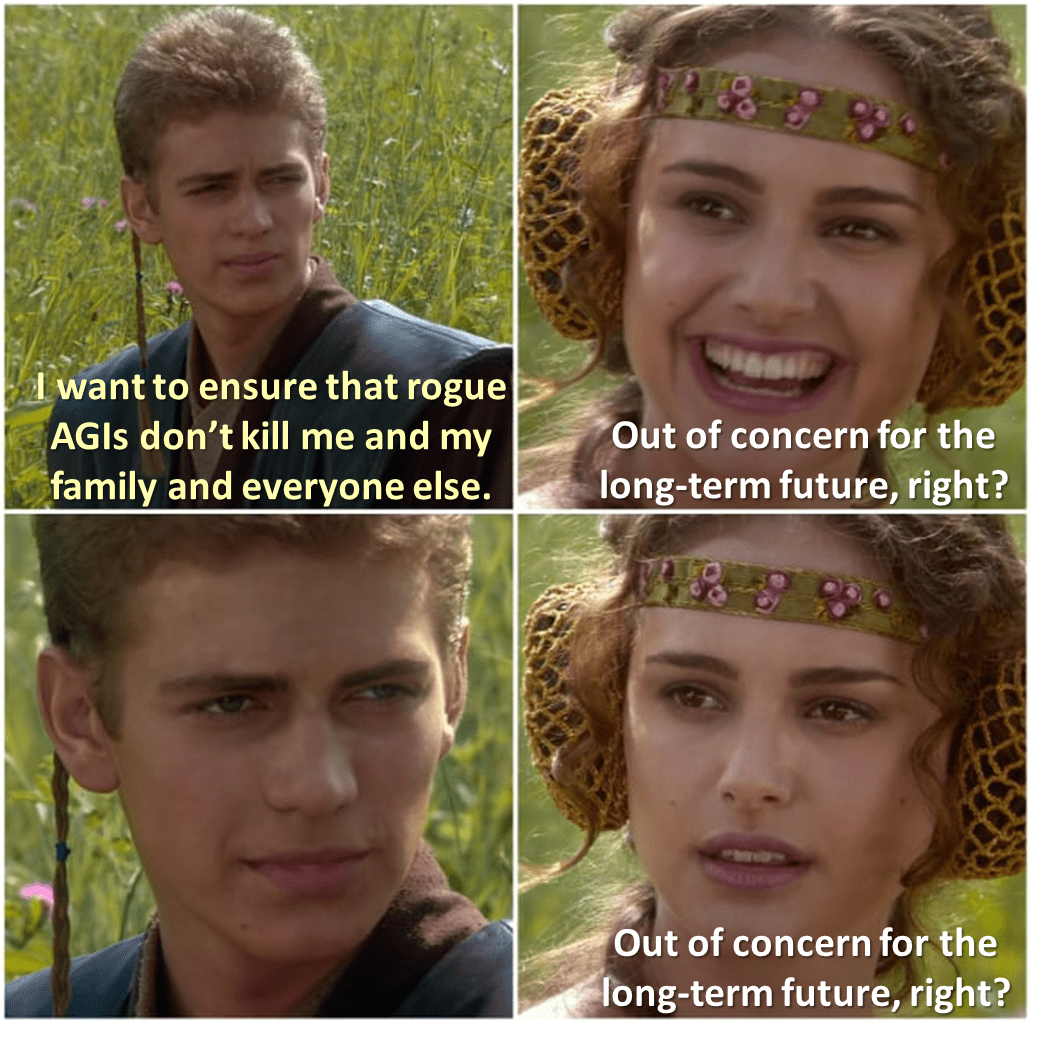

Many but not all AGI-safety-concerned philanthropists (and researchers like myself) are connected to the Effective Altruism (EA) movement, a community / movement / project devoted to trying to work out how best to make the world a better place, and then go do it. Within EA is a “longtermism” wing, consisting of people acting out of concern for the long-term future, where “long term” might mean millions or billions or trillions of years. Longtermists tend to be especially motivated to prevent irreversible human-extinction-scale catastrophes like out-of-control AGIs, bio-engineered pandemics, etc. Thus, in EA circles, AGI safety is sometimes referred to as a “longtermist cause area”, which is kinda disorienting given that we’re talking about how to prevent a potential calamity that could well happen in my lifetime (see timelines discussion in Posts #2–#3). Oh well.

The connection between EA and AGI safety has become sufficiently strong that (1) some of the best conferences to go to as an AGI safety researcher are the EA Global / EAGx conferences, and (2) people started calling me an EA, and cold-emailing me to invite me to EA events, totally unprompted, for the sole reason that I had recently started blogging about AGI safety in my free time (note that this was in 2019-2020, when blogging about AGI safety was far less common than today).

Anyway, the point is: AGI-safety-motivated funding exists, whether you’re in academia, or an independent researcher (like me when I first published this series in 2022), or in a nonprofit (like me as I revise the series in 2024). How do you get it? By and large, you probably need to either:

- Option #1: Demonstrate that you personally understand the AGI safety problem well enough to have good judgment about what research would be helpful, or

- Option #2: Jump onto a concrete research program that AGI safety experts have already endorsed as being important and useful.

As for #2, one reason that Section 15.2 exists is that I’m trying to help this process along. I imagine that at least some of those seven research programs above could (with some work) be fleshed out into a nice, specific, funded Request For Proposals. Email me if you think you could help, or want me to keep you in the loop.

As for #1—Yeah, go for it!! AGI safety is a fascinating field (IMHO), and it’s sufficiently “young” that you can get up to the research frontier much faster than would be possible in, for example, particle physics. See the next subsection for links to resources, training courses, etc. Or I guess you can learn the field by reading and writing lots of blog posts and comments in your free time, like I did.

By the way, it’s true that the nonprofit sector in general has a reputation for shoestring budgets and underpaid, overworked employees. But philanthropy-funded AGI safety work is generally not like that. The funders want the best people, even if those people are well into their careers and saddled with mortgage payments, daycare costs, etc.—like yours truly! So there has been a strong movement towards salaries that are competitive with the for-profit sector, especially in the past couple years.

15.3.2 Jobs, organizations, training programs, community, etc.

15.3.2.1 …For AGI safety (a.k.a. AI alignment) in general

There are lots of links at the aptly-named AI Safety Support Lots-of-Links page, or you can find a more-curated list at “AI safety starter pack”. To call out just a couple of particularly relevant items:

- 80,000 hours is an organization devoted to helping people do good through their careers. They’re very into AGI safety, and they offer free 1-on-1 career counseling, in which they’ll tell you about relevant opportunities and connect you to relevant people. Also check out their AI safety guide, the AI-technical-safety-related episodes of their excellent podcast, and their AI-specific email list and job board. (You can also get free 1-on-1 advice through AI Safety Quest.)

- You might be reading this article on lesswrong.com, a blogging platform which has the (I think) unique feature of being simultaneously open to anyone and frequented by numerous AGI safety experts. I started blogging and commenting there when I was just starting out in my free time in 2019, and I recall finding everyone very kind and helpful, and I don’t know how else I could have gotten into the field, given my geographical and time constraints. Other active online congregation points include the EleutherAI discord, Robert Miles's discord, and AI Safety Support Slack. As for in-person local meetups / reading groups / etc., check here or here, or better yet stop by your local / university EA group and ask them for pointers.

15.3.2.2 …More specifically related to this series

Q: Is there a community gathering place for discussing “brain-like AGI safety” (or closely-related “model-based RL AGI safety”) in particular?

A: Not really. And I'm not entirely sure that there should be, since it overlaps so much with other lines of research within AGI safety.

Q: Is there a community gathering place for discussing the overlap between neuroscience / psychology, and AGI safety / AI alignment?

A: There’s a “neuroscience & psychology” channel in the AI Safety Support Slack. You can also join the email list for PIBBSS, in case that program runs again in the future.

If you want to see more perspectives in the neuroscience / AGI safety overlap area, check out papers by Kaj Sotala; Seth Herd, David Jilk et al.; Gopal Sarma & Nick Hay; Patrick Butlin; Jan Kulveit; Patrick Mineault et al. (website, paper); along with other articles by those same authors, and many others that I’m rudely forgetting.

(My own background, for what it’s worth, is in physics, not neuroscience—in fact, I knew essentially no neuroscience as recently as 2019. I got interested in neuroscience to help answer my burning questions related to AGI safety, not the other way around.)

Q: Hey Steve, can I work with you?

A: While I’m not currently interested in hiring or supervising anyone, I am always very happy to collaborate and correspond. There’s plenty of work to do! Email me if you want to chat!

15.4 Conclusion: 8 takeaway messages

Thanks for reading! I hope that, in this series, I have successfully conveyed the following messages:

- We know enough neuroscience to say concrete things about what “brain-like AGI” would look like (Posts #1–#9);

- In particular, while “brain-like AGI” would be different from any known algorithm, its safety-relevant aspects would have much in common with actor-critic model-based reinforcement learning (Posts #6, #8, #9);

- “Understanding the brain well enough to make brain-like AGI” is a dramatically easier task than “understanding the brain” full stop: if the former is loosely analogous to knowing how to train a ConvNet, then the latter would be loosely analogous to knowing how to train a ConvNet, and achieving full mechanistic interpretability of the resulting trained model, and understanding every aspect of integrated circuit physics and engineering, etc. Indeed, making brain-like AGI should not be thought of as a far-off sci-fi hypothetical, but rather as an ongoing project which may well reach completion within the next decade or two (Posts #2–#3);

- In the absence of a good technical plan for avoiding accidents, researchers experimenting with brain-like AGI algorithms will probably accidentally create out-of-control AGIs, with catastrophic consequences up to and including human extinction (Posts #1, #3, #10, #11);

- Right now, we don’t have any good technical plan for avoiding out-of-control AGI accidents (Posts #10–#14);

- Creating such a plan seems neither to be straightforward, nor to be a necessary step on the path to creating powerful brain-like AGIs, and therefore we shouldn’t assume that such a plan will be created in the future “by default” (Post #3);

- There’s a lot of work that we can do right now to help make progress towards such a plan (Posts #12–#15);

- There is funding available to do this work, including as a viable career option (Post #15).

For my part, I’m going to keep working on the various research directions in Section 15.2 above. Follow me on X (Twitter) or RSS or other social media, or check my website for updates. I hope you consider helping too, since I’m in way the hell over my head!

Thanks for reading, and again, the comments here are open to general discussion / ask-me-anything.

Changelog

July 2024: Since the initial version, I’ve made only minor changes, like making sure the links and descriptions are up-to-date, and adding a couple minor links in Section 15.2 to more recent work that has come out since I first wrote this in 2022.

Feb 2025: Added “NeuroAI for AI Safety” to my slapdash list in Section 15.3.2.2 of other work in the area of overlap between neuroscience & AGI safety.

10 comments


comment by Not Relevant (not-relevant) · 2022-05-18T02:35:13.918Z

Steve, your AI safety musings are my favorite thing tonally on here. Thanks for all the effort you put into this series. I learned a lot.

To just ask the direct question, how do we reverse-engineer human social instincts? Do we:

- Need to be neuroscience PhDs?

- Need to just think a lot about what base generators of human developmental phenomena are, maybe by staring at a lot of babies?

- Guess, and hope we get to build enough AGIs that we notice which ones seem to be coming out normal-acting before one of them kills us?

- Something else you've thought of?

I don't have a great sense for the possibility space.

↑ comment by Steven Byrnes (steve2152) · 2022-05-18T14:04:01.858Z

Thanks!

how do we reverse-engineer human social instincts?

I don't know! Getting a better idea is high on my to-do list. :)

I guess broadly, the four things are (1) “armchair theorizing” (as I was doing in Post #13 [AF · GW]), (2) reading / evaluating existing theories, (3) reading / evaluating existing experimental data (I expect mainly neuroscience data, but perhaps also psychology etc.), (4) doing new experiments to gather new data.

As an example of (3) & (4), I can imagine something like “the connectomics and microstructure of the something-or-other nucleus of the hypothalamus” providing a helpful hint about what's going on; this information might or might not already be in the literature.

Neuroscience experiments are presumably best done by academic groups. I hope that neuroscience PhDs are not necessary for the other things, because I don’t have one myself :-P

AFAICT, in a neuroscience PhD, you might learn lots of facts about the hypothalamus and brainstem, but those facts almost definitely won’t be incorporated into a theoretical framework involving (A) calculating reward functions for RL (as in Section 15.2.1.2) and (B) the symbol grounding problem (as in Post #13). I really like that theoretical framework, but it seems uncommon in the literature.

FYI, here on lesswrong, “Gunnar_Zarncke” & “jpyykko” have been trying to compile a list of possible instincts, or something like that. Gunnar emailed me, but I haven’t had time to look closely and form an opinion; I just wanted to mention it.

↑ comment by Gunnar_Zarncke · 2022-05-18T22:34:57.137Z

Thank you for mentioning us. In fact, the list of candidate instincts got longer. It isn't in a presentable form yet, but please message me if you want to talk about it.

The list is rather theoretical, and I want to show that it is more than speculation by operationalizing it. jpyykko is already working on something more at the symbolic level.

Rohin Shah recommended that I find people to work with me on alignment, and I teamed up with two LWers. We just started work on a project to simulate instinct-cued learning in a toy world. I think this project fits research program 15.2.1.2, and I'm now wondering how to apply for funding; we would probably need it if we want to simulate with somewhat larger NNs.

↑ comment by Linda Linsefors · 2022-05-19T12:16:10.748Z

I'm also interested to see the list of candidate instincts.

Regarding funding, how much money do you need? Just the order of magnitude. There are lots of different grants, and where you want to apply depends on the size of your budget.

↑ comment by Gunnar_Zarncke · 2022-05-19T19:47:26.575Z

Small models can be trained on the developers' machines, but to speed things up and to be able to run bigger nets, we could use AWS GPU spot instances, which cost $1/hour. In my company, with relatively small models, we pay >$1,000/month. We will probably reach that unless we are really successful.

↑ comment by Linda Linsefors · 2022-05-19T12:14:59.627Z

comment by Raemon · 2022-05-27T23:48:17.140Z

Curated. Thanks to Steve for writing up all these thoughts throughout the sequence.

Normally when we curate a post-from-a-sequence-that-represents-the-sequence, we end up curating the first post, which points roughly to where the sequence is going. I like the fact that this time, there was a post that does a particularly nice job tying-everything-together, while sending people off with a roadmap of further work to do.

I appreciate your honesty about your epistemic state regarding the “Is Steve full of crap?” research program. :P

comment by TurnTrout · 2022-05-22T00:13:04.949Z

Big agreement & signal boost & push for funding on the “Reverse-engineer human social instincts” research program: Yes, please, please figure out how human social instincts are generated! I think this is incredibly important, for reasons that will become obvious in several posts I'll probably put out this summer.

↑ comment by Charbel-Raphaël (charbel-raphael-segerie) · 2022-05-22T07:47:50.235Z

Further signal boost.

comment by Zach Stein-Perlman · 2022-07-01T04:00:10.960Z

How optimistic should we be about alignment & safety for brain-like-AGI, relative to prosaic AGI?

↑ comment by Steven Byrnes (steve2152) · 2022-07-05T19:38:04.572Z

That’s a hard question for me to answer, because I have a really vivid inside-view picture of researchers eventually building AGI via the “brain-like” route, and of what the resulting AGI would look like, whereas when I try to imagine other R&D routes to AGI, I can’t, except by imagining that future researchers will converge towards the brain-like path. :-P

In particular:

- I think a model trained purely on self-supervised learning (not RL) would be safer than brain-like AGI. But I don’t think a model trained purely on self-supervised learning would be “AGI” in the first place. (For various reasons, one of which is the discussion of “RL-on-thoughts” here.) And those two beliefs are very related!! So then I do Murphyjitsu by saying to myself: OK, but if I’m wrong, and self-supervised learning did scale to AGI, how did that happen? Then I imagine future models acquiring, umm, “agency”, either by future programmers explicitly incorporating RL etc. deeply into the training / architecture, or else by agency emerging somehow, e.g. because the model is “simulating” agential humans. Either of those brings us much closer to brain-like AGI, and thus I stop feeling like it’s safer than brain-like AGI.

- I do think the Risks-From-Learned-Optimization model could in principle create AGI (obviously, that’s how evolution made humans). But I don’t think it would happen, for reasons in Post 8. If it did happen, the only way I can concretely imagine it happening is that the inner model is a brain-like AGI. In that case, I think it would be worse than making brain-like AGI directly, for reasons in §8.3.3.