[Intro to brain-like-AGI safety] 6. Big picture of motivation, decision-making, and RL

post by Steven Byrnes (steve2152) · 2022-03-02T15:26:11.828Z · LW · GW · 17 comments

Contents

- 6.1 Post summary / Table of contents
- 6.2 Big picture
  - 6.2.1 Relation to “two subsystems”
  - 6.2.2 Quick run-through
- 6.3 The “Thought Generator”
  - 6.3.1 Overview
  - 6.3.2 Thought Generator inputs
  - 6.3.3 Thought Generator outputs
- 6.4 Valence, value, and rewards
  - 6.4.1 Teaser for my “Valence” series
  - 6.4.2 The striatum (“learned value function” / “critic”) guesses the valence, but the Steering Subsystem may choose to override
- 6.5 Decisions involve not only simultaneous but also sequential comparisons of value
  - 6.5.1 Made-up example of what comparison-of-sequential-thoughts might look like in a simpler animal
  - 6.5.2 Comparison-of-sequential-thoughts: why it’s necessary
  - 6.5.3 Comparison-of-sequential-thoughts: how it might have evolved
- 6.6 Common misconceptions
  - 6.6.1 The distinction between internalized ego-syntonic desires and externalized ego-dystonic urges is unrelated to Learning Subsystem vs. Steering Subsystem
    - 6.6.1.1 The explanation I like
    - 6.6.1.2 The explanation I don’t like
  - 6.6.2 The Learning Subsystem and Steering Subsystem are not two agents
- Changelog

(Last revised: July 2024. See changelog at the bottom.)

6.1 Post summary / Table of contents

Part of the “Intro to brain-like-AGI safety” post series [? · GW].

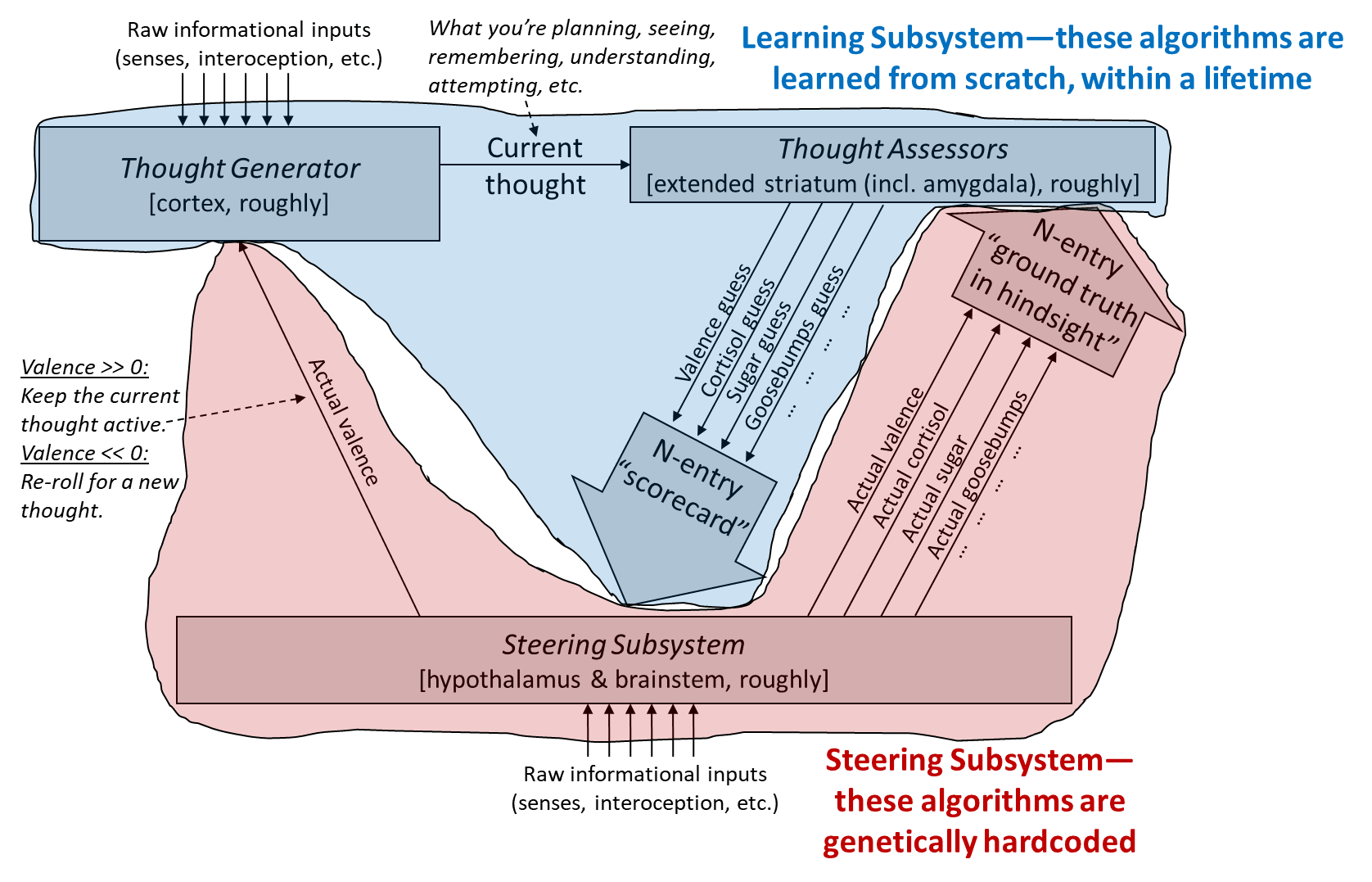

Thus far in the series, Post #1 [AF · GW] set out some definitions and motivations (what is “brain-like AGI safety” and why should we care?), and Posts #2 [AF · GW] & #3 [AF · GW] split the brain into a Learning Subsystem (cortex, striatum, cerebellum, amygdala, etc.) that “learns from scratch” using learning algorithms, and a Steering Subsystem (hypothalamus, brainstem, etc.) that is mostly genetically-hardwired and executes innate species-specific instincts and reactions.

Then in Post #4 [AF · GW], I talked about the “short-term predictor”, a circuit which learns, via supervised learning, to predict a signal in advance of its arrival, but only by perhaps a fraction of a second. Post #5 [AF · GW] then argued that if we form a closed loop involving both a set of short-term predictors in the extended striatum (within the Learning Subsystem) and a corresponding set of hardwired circuits in the Steering Subsystem, we can get a “long-term predictor”. I noted that the “long-term predictor” circuit is closely related to temporal difference (TD) learning.

Now in this post, we fill in the last ingredients—roughly the “actor” part of actor-critic reinforcement learning (RL)—to get a whole big picture of motivation and decision-making in the human brain. (I’m saying “human brain” to be specific, but it would be a similar story in any other mammal, and to a lesser extent in any vertebrate.)

The reason I care about motivation and decision-making is that if we eventually build brain-like AGIs (cf. Post #1 [AF · GW]), we’ll want to build them so that they have some motivations (e.g. being helpful) and not others (e.g. escaping human control and self-reproducing around the internet). Much more on that topic in later posts.

Teaser for upcoming posts: The next post (#7 [AF · GW]) will walk through a concrete example of the model in this post, where we can watch an innate drive lead to the formation of an explicit goal, and adoption and execution of a plan to accomplish it. Then starting in Post #8 [AF · GW] we’ll switch gears, and from then on you can expect substantially less discussion of neuroscience and more discussion of AGI safety (with the exception of one more neuroscience post towards the end).

Unless otherwise mentioned, everything in this post is “things that I believe right now”, as opposed to neuroscience consensus. (Pro tip: there is never a neuroscience consensus.) Relatedly, I will make minimal effort to connect my hypotheses to others in the literature (I tried a little bit in the last section of this later post [LW · GW]), but I’m happy to chat about that in the comments section or by email.

Table of contents:

- In Section 6.2, I’ll present a big picture of motivation and decision-making in the human brain, and walk through how it works. The rest of the post will go through different parts of that picture in more detail. If you’re in a hurry, I suggest reading to the end of Section 6.2 and then quitting.

- In Section 6.3, I’ll talk about the so-called “Thought Generator”, comprising (I think) the dorsolateral prefrontal cortex, sensory cortex, and other areas. (For ML readers familiar with “actor-critic model-based RL”, the Thought Generator is more-or-less a combination of the “actor” and the “model”.) I’ll talk about the inputs and outputs of this module, and briefly sketch how its algorithm relates to neuroanatomy.

- In Section 6.4, I’ll talk about how values and rewards work in this picture, including the reward signal that drives learning and decision-making in the Thought Generator.

- In Section 6.5, I’ll go into a bit more detail about how and why thinking and decision-making needs to involve not only simultaneous comparisons (i.e., a mechanism for generating different options in parallel and selecting the most promising one), but also sequential comparisons (i.e., thinking of something, then thinking of something else, and comparing those two thoughts). For example, you might think: “Hmm, I think I’ll go to the gym. Actually, what if I went to the café instead?”

- In Section 6.6, I’ll comment on the common misconception that the Learning Subsystem is the home of ego-syntonic, internalized “deep desires”, whereas the Steering Subsystem is the home of ego-dystonic, externalized “primal urges”. I will advocate more generally against thinking of the two subsystems as two agents in competition; a better mental model is that the two subsystems are two interconnected gears in a single machine.

6.2 Big picture

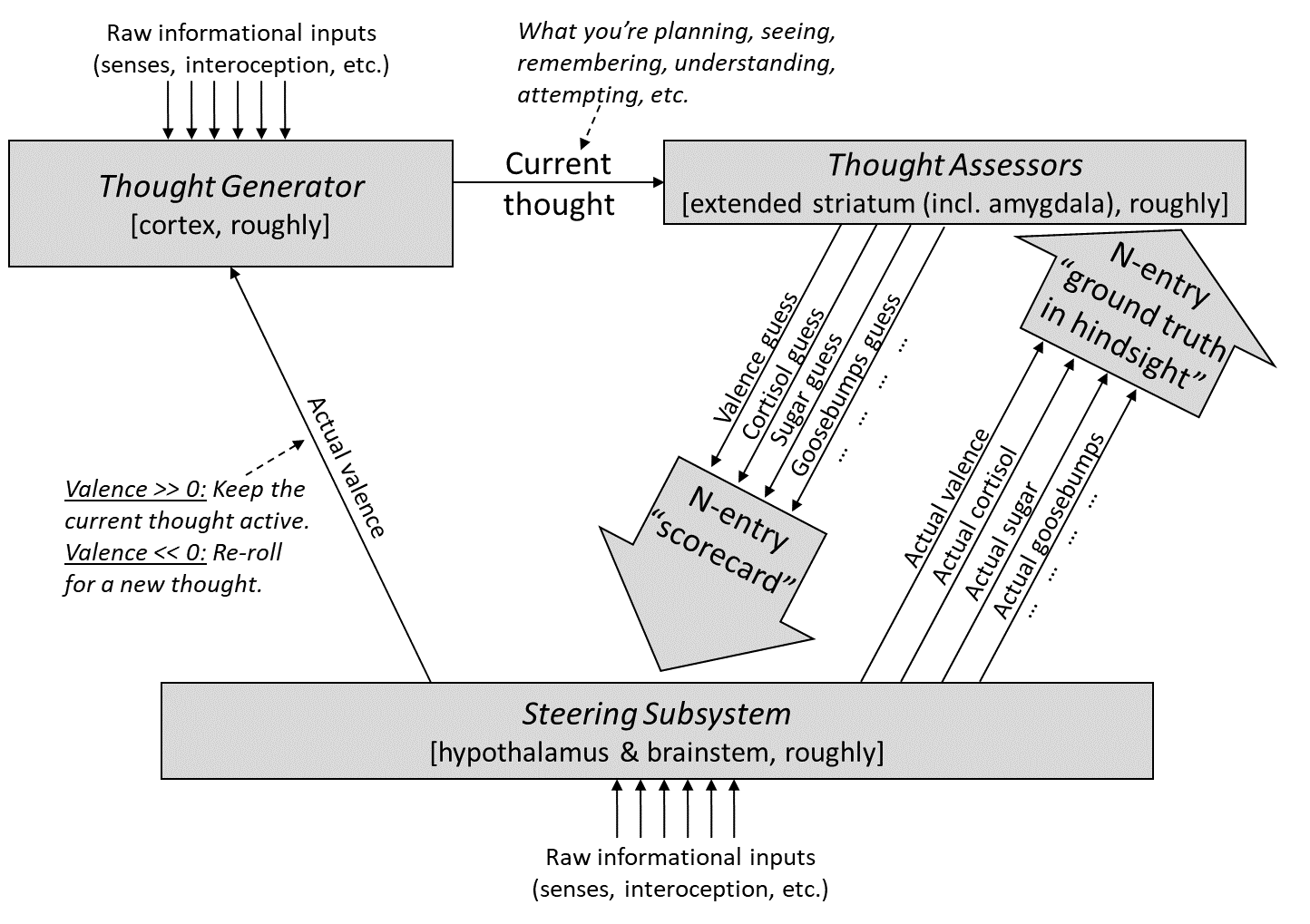

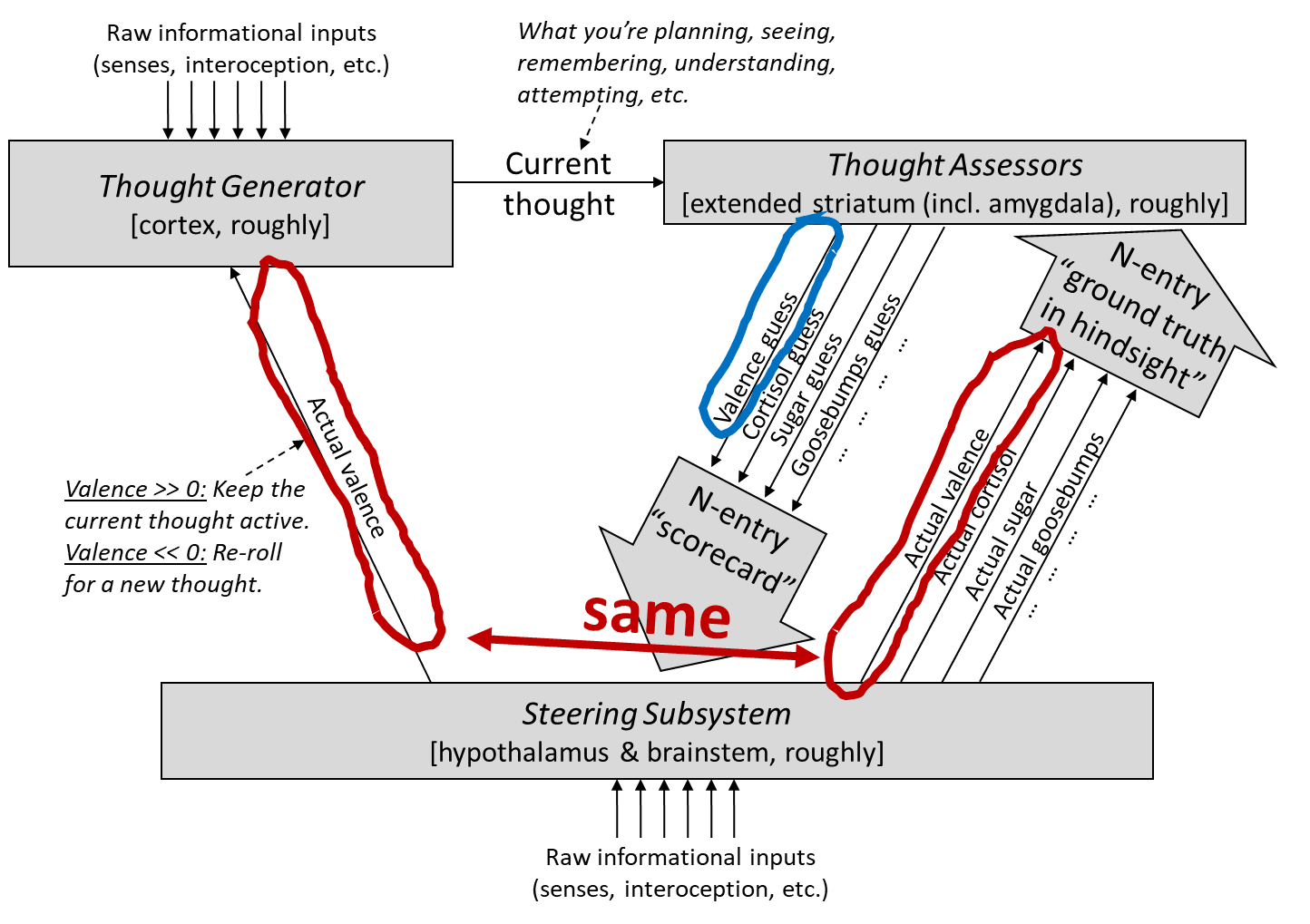

Yes, this is literally a big picture, unless you’re reading on your cell phone. You saw a chunk of it in the previous post (Section 5.4) [AF · GW], but now there are a few more pieces.

There’s a lot here, but don’t worry, I’ll walk through it bit by bit.

6.2.1 Relation to “two subsystems”

Here’s how this diagram fits in with my “two subsystems” perspective, first discussed in Post #3 [AF · GW]:

6.2.2 Quick run-through

Before getting bogged down in details later in the post, I’ll just talk through the diagram:

1. Thought Generator generates a thought: The Thought Generator settles on a “thought”, out of the high-dimensional space of every thought you can possibly think at that moment. Note that this space of possibilities, while vast, is constrained by current sensory input, past sensory input, and everything else in your learned world-model. For example, if you’re sitting at a desk in Boston, it’s generally not possible for you to think that you’re scuba-diving off the coast of Madagascar. Likewise, it’s generally not possible for you to imagine a static spinning spherical octagon. But you can make a plan, or whistle a tune, or recall a memory, or reflect on the meaning of life, etc.

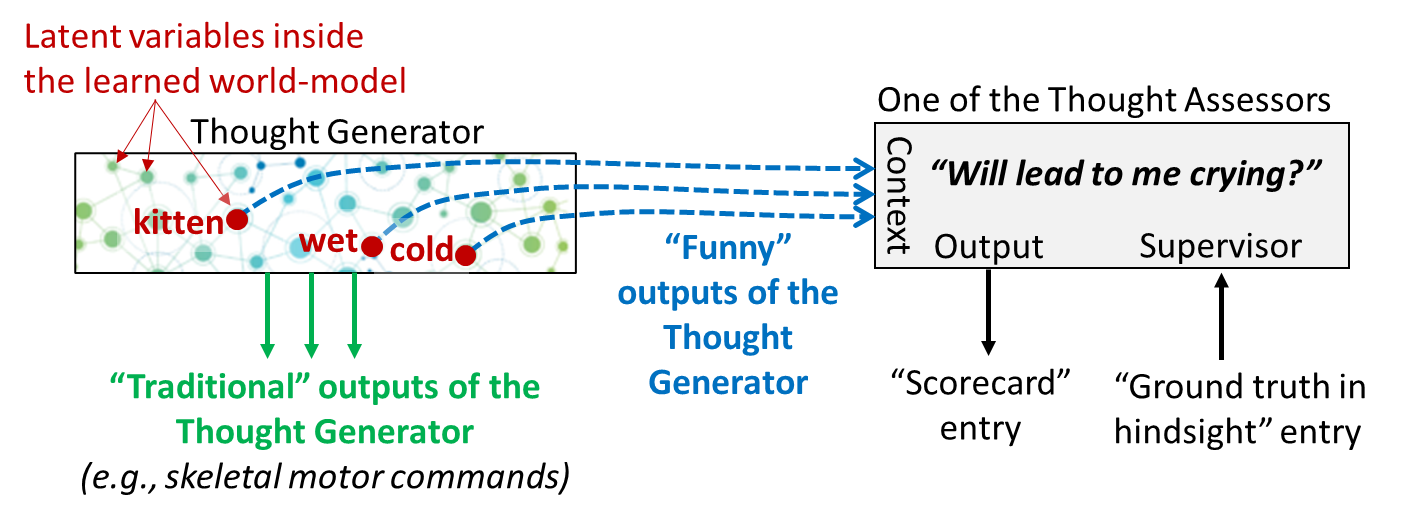

2. Thought Assessors grade the thought via a “scorecard”: The Thought Assessors are a set of perhaps hundreds or thousands of “short-term predictor” circuits (Post #4) [AF · GW], which I discussed more specifically in the previous post (#5) [AF · GW]. Each predictor is trained to predict a different signal from the Steering Subsystem. From the perspective of a Thought Assessor, everything in the Thought Generator (not just outputs but also latent variables) is context [AF · GW], i.e. information that it can use to make better predictions. Thus, if I’m thinking the thought “I’m going to eat candy right now”, a Thought Assessor can predict “high probability of tasting something sweet very soon”, based purely on the thought. It doesn’t need to rely on either external behavior or sensory inputs, although those can be relevant context too.

3. The “scorecard” solves the interface problem between a learned-from-scratch world model and genetically-hardwired circuitry: Remember, the current thought and situation is an insanely complicated object in a high-dimensional learned-from-scratch space of “all possible thoughts you can think”. Yet we need the relatively simple, genetically-hardwired circuitry of the Steering Subsystem to analyze the current thought, including issuing a judgment of whether the thought is high-value or low-value (see Section 6.4 below), and whether the thought calls for cortisol release or goosebumps or pupil-dilation, etc. The “scorecard” solves that interfacing problem! It distills any possible thought / belief / plan / etc. into a genetically-standardized form that can be plugged directly into genetically-hardcoded circuitry.

4. The Steering Subsystem runs some genetically-hardwired algorithm: Its inputs are (1) the scorecard from the previous step and (2) various other information sources—pain, metabolic status, etc., all coming from its own brainstem sensory-processing system (see Post #3, Section 3.2.1 [AF · GW]). Its outputs include emitting hormones, motor commands, etc., as well as sending the “ground truth” supervisory signals shown in the diagram.[1]

5. The Thought Generator keeps or discards thoughts based on whether the Steering Subsystem likes them: More specifically, there’s a ground-truth valence [LW · GW] (or in RL terms, some weird mixture of value function and reward function, see Post #5, Section 5.3.1 [LW · GW]). When the valence is very positive, the current thought gets “strengthened”, sticks around, and can start controlling behavior and summoning follow-up thoughts, whereas when the valence is very negative, the current thought gets immediately discarded, and the Thought Generator summons a new thought instead.

6. Both the Thought Generator and the Thought Assessors “learn from scratch” [AF · GW] over the course of a lifetime, thanks in part to these supervisory signals from the Steering Subsystem. Specifically, the Thought Assessors learn to make better and better predictions of their “ground truth in hindsight” signal (a form of supervised learning; see Post #4 [AF · GW]), while the Thought Generator learns to disproportionately generate high-value thoughts. (The Thought Generator learning-from-scratch process also involves predictive learning of sensory inputs; see Post #4, Section 4.7 [AF · GW].)
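For readers who think in code, here is a minimal toy sketch of steps 1 through 5 above. Everything in it is an assumption made up for illustration (the function names, the two-entry scorecard, the hunger rule, the numbers); it is not a claim about the brain's actual algorithm, and it leaves out the learning in step 6 entirely.

```python
import random

# Hypothetical toy model: all names and numbers are invented for illustration.

def thought_assessors(thought):
    """Steps 2-3: distill an arbitrary thought into a fixed-format scorecard."""
    return {
        "valence_guess": thought.get("learned_value", 0.0),
        "will_taste_sweet": thought.get("sweet", 0.0),
    }

def steering_subsystem(scorecard, hunger):
    """Step 4: a simple hardwired rule that only sees the scorecard
    plus its own signals (here, a single hunger number)."""
    return scorecard["valence_guess"] + hunger * scorecard["will_taste_sweet"]

def think(candidate_thoughts, hunger, rng, max_rerolls=50):
    """Steps 1 and 5: propose a thought, keep it if its valence is positive,
    otherwise discard it and re-roll for a new one."""
    for _ in range(max_rerolls):
        thought = rng.choice(candidate_thoughts)
        valence = steering_subsystem(thought_assessors(thought), hunger)
        if valence > 0:
            return thought["name"], valence
    return None, 0.0

thoughts = [
    {"name": "stare at the wall", "learned_value": -1.0},
    {"name": "eat candy", "learned_value": 0.5, "sweet": 1.0},
]
print(think(thoughts, hunger=1.0, rng=random.Random(0)))
```

Note that `steering_subsystem` never looks inside the thought itself. It only sees the scorecard, which is the point of step 3.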

6.3 The “Thought Generator”

6.3.1 Overview

Go back to the big-picture diagram at the top. At the top-left, we find the Thought Generator. In terms of actor-critic model-based RL, the Thought Generator is roughly a combination of “actor” + “model”, but not “critic”. (“Critic” was discussed in the previous post, and more on it below.)

At our somewhat-oversimplified level of analysis, we can think of the “thoughts” generated by the Thought Generator as a combination of constraints (from predictive learning of sensory inputs) and choices (guided by reinforcement learning). In more detail:

- Constraints on the Thought Generator come from sensory input information, and ultimately from predictive learning of sensory inputs (Post #4, Section 4.7 [AF · GW]). For example, I cannot think the thought: There is a cat on my desk and I’m looking at it right now. There is no such cat, regrettably, and I can’t just will myself to see something that obviously isn’t there. I can imagine seeing it, but that’s not the same thought. Likewise, I can’t imagine a static spinning spherical octagon, because that’s not allowed by my generative world-model—those concepts are self-contradictory. Yet another source of constraints on the Thought Generator is what I call “involuntary attention”—like how if you’re very itchy, it’s hard to think about anything else (see table here [LW · GW]).

- But within those constraints, there’s more than one possible thought my brain can think at any given time. It can call up a memory, it can ponder the meaning of life, it can zone out, it can issue a command to stand up, etc. I claim that these “choices” are decided by a reinforcement learning (RL) system. This RL system is one of the main topics of this post.

6.3.2 Thought Generator inputs

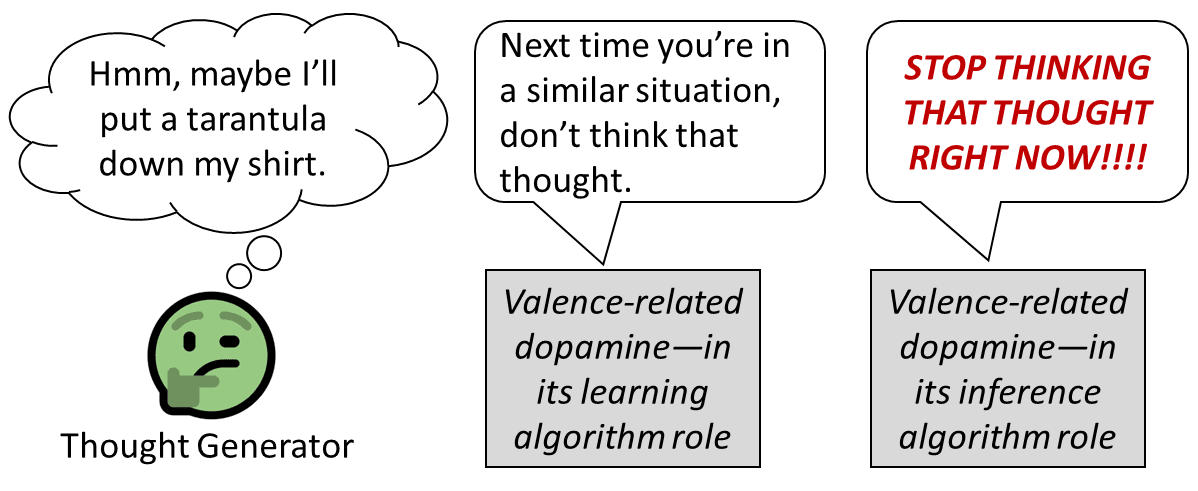

The Thought Generator has a number of inputs, including sensory inputs and hyperparameter-shifting neuromodulators. But the main one of interest for this post is valence [LW · GW], a.k.a. ground-truth value, a.k.a. reward. (See previous post [LW · GW] for why I’m equating “ground-truth value” with “reward”, contrary to how it normally works in RL.) I’ll talk about that in more detail later, but we can think of it as an estimate of whether a thought is good or bad, operationalized as “worth sticking with and pursuing” versus “deserving to be discarded so we can re-roll[2] for a new thought”. This signal is important both for learning to think better thoughts in the future, and for thinking good thoughts right now:

6.3.3 Thought Generator outputs

There are meanwhile a lot of signals going out of the Thought Generator. Some are what we intuitively think of as “outputs”—e.g., skeletal motor commands. Other outgoing signals are, well, a bit funny…

Recall the idea of “context” from Section 4.3 of Post #4 [AF · GW]: The Thought Assessors are short-term predictors, and a short-term predictor can in principle grab any signal in the brain and leverage it to improve its ability to predict its target signal. So if the Thought Generator has a world-model, then somewhere in the world-model is a configuration of latent variable activations that encode the concept “baby kittens shivering in the cold rain”. We wouldn’t normally think of those as “output signals”—I just said in the last sentence that they’re latent variables! But as it happens, the “will lead to crying” Thought Assessor has grabbed a copy of those latent variables to use as context signals, and gradually learned through experience that these particular signals are strong predictors of me crying.

Now, as an adult, these “baby kittens in the cold rain” neurons in my Thought Generator are living a double-life:

- They are latent variables in my world-model—i.e., they and their web of connections will help me parse an image of baby kittens in the rain, if I see one, and to reason about what would happen to them, etc.

- Activating these neurons, e.g. via imagination, is a way for me to call up tears on command.
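To make the “grabbing latent variables as context” idea concrete, here is a toy sketch of a single Thought Assessor learning by supervised learning. The logistic predictor, the delta-rule update, the learning rate, and the three-element context vector are all my illustrative assumptions, not a claim about the real circuit.

```python
import math

# Hypothetical toy "will lead to crying" Thought Assessor.
# The context vector stands in for latent-variable activations.

def predict(weights, context):
    """Predicted probability of crying, given the context signals."""
    z = sum(w * x for w, x in zip(weights, context))
    return 1.0 / (1.0 + math.exp(-z))

def update(weights, context, ground_truth, lr=0.5):
    """Delta-rule step: nudge weights toward the ground truth in hindsight."""
    error = ground_truth - predict(weights, context)
    return [w + lr * error * x for w, x in zip(weights, context)]

# context = [kittens_in_rain_active, doing_taxes_active, always_on_bias]
KITTENS = [1.0, 0.0, 1.0]
TAXES = [0.0, 1.0, 1.0]

weights = [0.0, 0.0, 0.0]
for _ in range(200):
    weights = update(weights, KITTENS, ground_truth=1.0)  # I cried
    weights = update(weights, TAXES, ground_truth=0.0)    # I did not cry

print(predict(weights, KITTENS), predict(weights, TAXES))
```

After training, merely activating the “kittens” latent variable (say, via imagination) drives the crying prediction up, which is the double-life described above.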

6.4 Valence, value, and rewards

6.4.1 Teaser for my “Valence” series [LW · GW]

“Valence”, the way I use the term, is a specific signal on the left side of the (somewhat oversimplified) diagram at the top. As mentioned above (Section 6.3.2), it signals whether a thought is good (so I should keep thinking it, and think follow-up thoughts, and take associated actions), or bad (so I should start thinking about something else instead).

For psychology readers, think of valence as somewhat more related to “motivational valence” than “hedonic valence”, although it’s not a perfect match to either.

For AI readers, think of valence as a funny mix of value-function and reward. Like reward, it can represent innate “ground truth” (e.g. pain is bad, sweet tastes are good, etc.) But like the value function, it can be forward-looking—as in the example of the previous post [LW · GW], if I’m going upstairs to get a sweater, that thought has positive valence now for reasons related to what’s going to happen in the future (I’ll be wearing my sweater).

While I think there are multiple RL systems in the brain [LW · GW], the “valence” signal is definitionally associated with the “main” RL system, a.k.a. what I sometimes call “success-in-life RL”—the RL system that navigates cross-domain tradeoffs (e.g. is it worth suffering X amount of pain in order to get Y amount of social status?), in order to estimate what’s a good plan all things considered.

My 5-post “Valence” series [LW · GW] (published in late 2023) notes that, given the obvious central importance of this “valence” brain signal, it should illuminate many aspects of our everyday mental life. And indeed, it does! I argue in the series that valence is critical to understanding desires, preferences, values, goals, judgements, vibes, brainstorming, motivated reasoning, the halo effect, social status, depression, mania, and more.

6.4.2 The striatum (“learned value function” / “critic”) guesses the valence, but the Steering Subsystem may choose to override

There are two types of “valence” in the diagram (it looks like three, but the two red ones are the same):

The blue-circled signal is the valence guess from the corresponding Thought Assessor in the striatum. The red-circled signal (again, it’s one signal drawn twice) is the corresponding “ground truth” for what the valence guess should have been.

Just like the other “long-term predictors” discussed in the previous post [AF · GW], the Steering Subsystem can choose between “defer-to-predictor mode” and “override mode”. In the former, it sets the red equal to the blue, as if to say “OK, Thought Assessor, sure, I’ll take your word for it”. In the latter, it ignores the Thought Assessor’s proposal, and its own internal circuitry outputs some different value.[3]

Why might the Steering Subsystem override the Thought Assessor’s value estimate? Two factors:

- First, the Steering Subsystem might be acting on information from other (non-value) Thought Assessors. For example, in the Dead Sea Salt Experiment (see this post, Section 2.1 [LW · GW]), the valence estimator says “bad things are going to happen”, but meanwhile the Steering Subsystem is getting an “I’m about to taste salt” prediction in the context of a state of salt-deprivation. So the Steering Subsystem says to itself “Whatever is happening now is very promising; the valence estimator doesn’t know what it’s talking about!”

- Second, the Steering Subsystem might be acting on its own information sources, independent of the Learning Subsystem. In particular, the Steering Subsystem has its own sensory-processing system (see Post #3, Section 3.2.1 [AF · GW]), which can sense biologically-relevant cues like pain status, hunger status, taste inputs, the sight of a slithering snake, the smell of a potential mate, and so on. All these things and more can be possible bases for overruling the Thought Assessor, i.e., setting the red-circled signal to a different value than the blue-circled one.
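Here is a minimal sketch of the defer-versus-override choice, including the weighted-average variant that I mention in a footnote. The function name, the `defer_weight` parameter, and the numbers in the example are hypothetical; the real Steering Subsystem circuitry is surely messier.

```python
# Hypothetical sketch: blue signal in, red signal out.

def actual_valence(valence_guess, innate_estimate=None, defer_weight=1.0):
    """Return the 'ground truth' valence (red) given the valence guess (blue).

    defer_weight=1.0 is pure defer-to-predictor mode,
    defer_weight=0.0 is pure override mode,
    and anything in between is a weighted average of the two.
    """
    if innate_estimate is None:
        # The Steering Subsystem has no opinion of its own, so it defers.
        return valence_guess
    return defer_weight * valence_guess + (1.0 - defer_weight) * innate_estimate

# Dead Sea Salt flavor: the valence guess is negative, but a salt-deprived
# animal's innate circuitry treats the "about to taste salt" prediction
# as very promising (the numbers are made up).
print(actual_valence(-1.0))                                     # defer
print(actual_valence(-1.0, innate_estimate=2.0, defer_weight=0.0))  # override
print(actual_valence(-1.0, innate_estimate=2.0, defer_weight=0.5))  # blend
```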

6.5 Decisions involve not only simultaneous but also sequential comparisons of value

Here’s a “simultaneous” model of decision-making, as described by The Hungry Brain by Stephan Guyenet in the context of studies on lamprey fish:

Each region of the pallium [= lamprey equivalent of cortex] sends a connection to a particular region of the striatum, which (via other parts of the basal ganglia) returns a connection back to the same starting location in the pallium. This means that each region of the pallium is reciprocally connected with the striatum via a specific loop that regulates a particular action…. For example, there’s a loop for tracking prey, a loop for fleeing predators, a loop for anchoring to a rock, and so on. Each region of the pallium is constantly whispering to the striatum to let it trigger its behavior, and the striatum always says “no!” by default. In the appropriate situation, the region’s whisper becomes a shout, and the striatum allows it to use the muscles to execute its action.

I endorse this as part of my model of decision-making, but only part of it. Specifically, this is one of the things that’s happening when the Thought Generator generates a thought. Different simultaneous possibilities are being compared.

The other part of my model is comparisons of sequential thoughts. You think a thought, and then you think a different thought (possibly very different, or possibly a refinement of the first thought), and the two are implicitly compared, and if the second thought is worse, it gets weakened such that a new thought can replace it (and the new thought might be the first thought re-establishing itself).

I could cite experiments for the sequential-comparison aspect of decision-making (e.g. Figure 5 of this paper, which is arguing the same point as I am), but do I really need to? Introspectively, it’s obvious! You think: “Hmm, I think I’ll go to the gym. Actually, what if I went to the café instead?” You’re imagining one thing, and then another thing.

And I don’t think this is a humans-vs-lampreys thing. My hunch is that comparison of sequential thoughts is universal in vertebrates. As an illustration of what I mean:

6.5.1 Made-up example of what comparison-of-sequential-thoughts might look like in a simpler animal

Imagine a simple, ancient, little fish swimming along, navigating to the cave where it lives. It gets to a fork in the road, ummm, “fork in the kelp forest”? Its current navigation plan involves continuing left to its cave, but it also has the option of turning right to go to the reef, where it often forages.

Seeing this path to the right, I claim that its navigation algorithm reflexively loads up a plan: “I will turn right and go to the reef.” Immediately, this new plan is evaluated and compared to the old plan. If the new plan seems worse than the old plan, then the new thought gets shut down, and the old thought (“I’m going to my cave”) promptly reestablishes itself. The fish continues to its cave, as originally planned, without skipping a beat. Whereas if instead the new plan seems better than the old plan, then the new plan gets strengthened, sticks around, and orchestrates motor commands. And thus the fish turns to the right and goes to the reef instead.

(In reality, I don’t know much about little ancient fish, but rats at a fork in a maze are known to imagine both possible navigation plans in succession, based on measurements of hippocampus neurons; ref.)
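The fish story can be written as a tiny sketch. The plan names come from the example above, but the value table, the hunger term, and the function names are made up purely for illustration.

```python
# Hypothetical toy fish: plan values are invented numbers.

def value(plan, hunger):
    """How good a plan seems right now; foraging looks better when hungry."""
    base = {"go to cave": 1.0, "go to reef": 0.2}
    food_bonus = {"go to cave": 0.0, "go to reef": 1.5}
    return base[plan] + hunger * food_bonus[plan]

def swim_past_fork(current_plan, suggested_plan, hunger):
    """Load the suggested plan, compare it with the plan already in place,
    and return whichever one survives."""
    if value(suggested_plan, hunger) > value(current_plan, hunger):
        return suggested_plan   # new plan sticks around and takes over
    return current_plan         # new plan is dropped; old plan reestablishes itself

print(swim_past_fork("go to cave", "go to reef", hunger=0.0))
print(swim_past_fork("go to cave", "go to reef", hunger=1.0))
```

The two plans are never held at once in this sketch. One is in place, the other is loaded and evaluated, and only one remains afterward.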

6.5.2 Comparison-of-sequential-thoughts: why it’s necessary

In my view, thoughts are complicated. To think the thought “I will go to the café”, you’re not just activating some tiny cluster of dedicated go-to-the-café neurons. Instead, it’s a distributed pattern involving practically every part of the cortex. You can’t simultaneously think “I will go to the café” and “I will go to the gym”, because they would involve different activity patterns of the same pools of neurons. They would cross-talk. Thus, the only possibility is thinking the thoughts in sequence.

As a concrete example of what I have in mind, think of how a Hopfield network can’t recall twelve different memories simultaneously. It has multiple stable states, but you can only explore them sequentially, one after the other. Or think about grid cells and place cells, etc.
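Here is a minimal Hopfield network sketch of that point, using standard Hebbian weights and asynchronous sign updates. The two 8-unit patterns and the fixed update order are arbitrary choices of mine. When the network starts in a half-and-half blend of two stored memories, it does not stay blended; it settles into exactly one of them (which one depends on the update order).

```python
# Toy Hopfield network in pure Python; patterns are arbitrary illustrations.

def train(patterns):
    """Hebbian weights: w[i][j] = sum over patterns of p[i] * p[j], zero diagonal."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j]
    return w

def settle(w, state, max_sweeps=10):
    """Asynchronous updates in a fixed order until nothing changes."""
    s = list(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(s)):
            h = sum(w[i][j] * s[j] for j in range(len(s)))
            new = s[i] if h == 0 else (1 if h > 0 else -1)
            if new != s[i]:
                s[i] = new
                changed = True
        if not changed:
            break
    return s

cafe = [1, 1, 1, 1, -1, -1, -1, -1]
gym = [1, -1, 1, -1, 1, -1, 1, -1]
w = train([cafe, gym])

noisy_cafe = [-1, 1, 1, 1, -1, -1, -1, -1]   # one unit flipped
blend = cafe[:4] + gym[4:]                    # half of each memory

print(settle(w, noisy_cafe) == cafe)
print(settle(w, blend))
```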

6.5.3 Comparison-of-sequential-thoughts: how it might have evolved

From an evolutionary perspective, I imagine that comparison-of-sequential-thoughts is a distant descendant of a very simple mechanism akin to the run-and-tumble mechanism in swimming bacteria.

In the run-and-tumble mechanism, a bacterium swims in a straight line (“runs”), and periodically changes to a new random direction (“tumbles”). But the trick is: when the bacterium’s situation / environment is getting better, it tumbles less frequently, and when it’s getting worse, it tumbles more frequently. Thus, it winds up moving in a good direction (on average, over time).
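A one-dimensional toy simulation shows why this works. The tumble probabilities, the step size, and the assumption that “better” simply means “larger x” are all mine, chosen for illustration rather than taken from real bacteria.

```python
import random

# Hypothetical 1-D run-and-tumble: things get better as x increases.

def run_and_tumble(steps, seed, p_tumble_better=0.1, p_tumble_worse=0.9):
    rng = random.Random(seed)
    x = 0.0
    direction = rng.choice([-1, 1])
    for _ in range(steps):
        before = x
        x += direction                      # "run" one step
        getting_better = x > before
        p_tumble = p_tumble_better if getting_better else p_tumble_worse
        if rng.random() < p_tumble:         # "tumble" to a fresh random heading
            direction = rng.choice([-1, 1])
    return x

print(run_and_tumble(steps=1000, seed=0))
```

The bacterium never knows which way is good. It only modulates how often it re-rolls its heading, and that alone is enough to produce a strong drift toward larger x.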

Starting with a simple mechanism like that, one can imagine adding progressively more bells and whistles. The palette of behavioral options can get more and more complex, eventually culminating in “every thought you can possibly think”. The methods of evaluating whether the current plan is good or bad can get faster and more accurate, eventually involving learning-algorithm-based predictors as in the previous post [AF · GW]. The new behavioral options to tumble into can be picked via clever learning algorithms, rather than randomly. Thus, it seems to me that there’s a smooth path all the way from something-akin-to-run-and-tumble to the intricate, finely-tuned, human brain system that I’m talking about in this series. (Other musings on run-and-tumble versus human motivation: 1, 2.)

6.6 Common misconceptions

6.6.1 The distinction between internalized ego-syntonic desires and externalized ego-dystonic urges is unrelated to Learning Subsystem vs. Steering Subsystem

(See also: my post (Brainstem, Neocortex) ≠ (Base Motivations, Honorable Motivations) [LW · GW].)

Many people (including me) have a strong intuitive distinction between ego-syntonic drives that are “part of us” or “what we want”, versus ego-dystonic drives that feel like urges which intrude upon us from the outside.

For example, a food snob might say "I love fine chocolate", while a dieter might say "I have an urge to eat fine chocolate".

6.6.1.1 The explanation I like

I would claim that these two people are basically describing the same feeling, with essentially the same neuroanatomical locations and essentially the same relation to low-level brain algorithms. But the food snob is owning that feeling, and the dieter is externalizing that feeling.

These two different self-concepts go hand-in-hand with two different “higher-order preferences”: the food snob wants to want to eat fine chocolate while the dieter wants to not want to eat fine chocolate.

This leads us to a straightforward psychological explanation for why the food snob and dieter conceptualize their feelings differently:

- The food snob finds it appealing to think of “the desire I feel for fine chocolate” as “part of who I am”. So he does.

- The dieter finds it aversive to think of “the desire I feel for fine chocolate” as “part of who I am”. So he doesn’t.

6.6.1.2 The explanation I don’t like

Many people (including Jeff Hawkins, see Post #3 [LW · GW]) notice the distinction described above, and separately, they endorse the idea (as I do) that the brain has a Learning Subsystem and Steering Subsystem (again see Post #3 [LW · GW]). They naturally suppose that these are the same thing, with “me and my deep desires” corresponding to the Learning Subsystem, and “urges that I don’t identify with” corresponding to the Steering Subsystem.

I think this model is wrong. At the very least, if you want to endorse this model, then you need to reject approximately everything I’ve written in this and my previous four posts.

In my story, if you’re trying to abstain from chocolate, but also feel an urge to eat chocolate, then:

- You have an urge to eat chocolate because the Steering Subsystem approves of the thought “I am going to eat chocolate right now”; AND

- You’re trying to abstain from chocolate because the Steering Subsystem approves of the thought “I am abstaining from chocolate”.

(Why would the Steering Subsystem approve of the latter? It depends on the individual, but it’s probably a safe bet that social instincts are involved. I’ll talk more about social instincts in Post #13 [AF · GW]. If you want an example with less complicated baggage, imagine a lactose-intolerant person trying to resist the urge to eat yummy ice cream right now, because it will make them feel really sick later on. The Steering Subsystem likes plans that result in not feeling sick, and also likes plans that result in eating yummy ice cream.)

6.6.2 The Learning Subsystem and Steering Subsystem are not two agents

Relatedly, another frequent error is treating either the Learning Subsystem or Steering Subsystem by itself as a kind of independent agent. This is wrong on both sides:

- The Learning Subsystem cannot think any thoughts unless the Steering Subsystem has endorsed those thoughts as being worthy of being thunk.

- Meanwhile, the Steering Subsystem does not understand the world, or itself. It has no explicit goals for the future. It’s just a relatively simple, hardcoded input-output machine.

As an example, the following is entirely possible:

- The Learning Subsystem generates the thought “I am going to surgically alter my own Steering Subsystem”.

- The Thought Assessors distill that thought down to the “scorecard”.

- The Steering Subsystem gets the scorecard and runs it through its hardcoded heuristics, and the result is: “Very good thought, go right ahead and do it!”

Why not, right? I’ll talk more about that example in later posts.

If you just read the above example, and you’re thinking to yourself “Ah! This is a case where the Learning Subsystem has outwitted the Steering Subsystem”, then you’re still not getting it.

(Maybe instead try imagining the Learning Subsystem & Steering Subsystem as two interconnected gears in a single machine.)

Changelog

July 2024: Since the initial version, I made a bunch of changes.

As in the previous post changelog, I’ve iterated on the “big picture” neuroscience diagrams several times since the initial version, as I learned more neuroscience.

For clarity, what I formerly labeled “Ground-truth value, a.k.a. reward” is now “Actual valence”, with a little note in the diagram about what this signal does. Relatedly, I added a bunch of links throughout this post to my more recent Valence series [LW · GW], including a very brief teaser / recap of it in Section 6.4.1. And along the same lines, I reworded the RL discussions to use the word “reward” less and “valence” more. The word “reward” is not wrong, exactly, but it has a lot of baggage which I expect to confuse readers, as explained in the previous post and Section 6.4.1.

I deleted a claim that (what I am now calling) the “valence guess” signal “doesn’t have a special role in the algorithm”. I still think the thing I was trying to say is true, but the thing I was trying to say was pretty narrow and subtle, and on balance I expect that what I wrote is far more confusing than helpful. I actually think the valence guess is centrally important, especially in long-term planning, as discussed here [LW · GW].

I also put in the example of a “static spinning spherical octagon” to illustrate how the “constraints” in the Thought Generator are not just sensory inputs but also our semantic knowledge about how the world works. I also mentioned “involuntary attention” [LW · GW] (e.g. itching or anxious rumination) as yet another source of “constraints”.

I deleted some discussion of neuroanatomy (there used to be a Section 6.3.4), including cortico-basal ganglia thalamo-cortical loops and dopamine, because what I had written was mostly wrong, and unnecessary for the series anyway. What little I want to say on those topics mostly wound up in the previous post.

[1] As in the previous post [AF · GW], the term “ground truth” here is a bit misleading, because sometimes the Steering Subsystem will just defer to the Thought Assessors.

[2] “Re-roll” was originally a board game term; for example, if you previously rolled the dice to randomly generate a character, then “re-roll for a new character” would mean “you roll the dice again to randomly generate a different character”.

[3] As in the previous post [AF · GW], I don’t really believe there is a pure dichotomy between “defer-to-predictor mode” and “override mode”. In reality, I’d bet that the Steering Subsystem can partly-but-not-entirely defer to the Thought Assessor, e.g. by taking a weighted average between the Thought Assessor and some other independent calculation.

17 comments


comment by Rafael Harth (sil-ver) · 2022-04-10T13:00:50.768Z · LW(p) · GW(p)

(Extremely speculative comment, please tell me if this is nonsense.)

If it makes sense to differentiate the "Thought Generator" and "Thought Assessor" as two separate modules, is it possible to draw a parallel to language models, which seem to have strong ability to generate sentences, but lack the ability to assess if they are good [LW · GW]?

My first reaction to this is "obviously not since the architecture is completely different, so why would they map onto each other?", but a possible answer could be "well if the brain has them as separate modules, it could mean that the two tasks require different solutions, and if one is much harder than the other, and the harder one is the assess module, that could mean language models would naturally solve just the generation first".

The related thing that I find interesting is that, a priori, it's not at all obvious that you'd have these two different modules at all (since the thought generator already receives ground truth feedback). Does this mean the distinction is deeply meaningful? Well, that depends on how close to optimal the [design of the human brain] is.

Replies from: steve2152↑ comment by Steven Byrnes (steve2152) · 2022-04-12T16:11:37.184Z · LW(p) · GW(p)

If it makes sense to differentiate the "Thought Generator" and "Thought Assessor" as two separate modules, is it possible to draw a parallel to language models, which seem to have strong ability to generate sentences, but lack the ability to assess if they are good [LW · GW]?

Hmm. An algorithm trained to reproduce human output is presumably being trained to imitate the input-output behavior of the whole system including Thought Generator and Thought Assessor and Steering Subsystem.

I’m trying to imagine deleting the Thought Assessor & Steering Subsystem, and replacing them with a constant positive RPE (i.e., “this is good, keep going”) signal. I think what you get is a person talking with no inhibitions whatsoever. Language models don’t match that.

a priori, it's not at all obvious that you'd have these two different modules at all (since the thought generator already receives ground truth feedback)

I think it’s a necessary design feature for good performance. Think of it this way. I’m evolution. Here are two tasks I want to solve:

(A) estimate how much a particular course-of-action advances inclusive genetic fitness,

(B) find courses of action that get a good score according to (A).

(B) obviously benefits from incorporating a learning algorithm. And if you think about it, (A) benefits from incorporating a learning algorithm as well. But the learning algorithms involved in (A) and (B) are fundamentally working at cross-purposes. (A) is the critic, (B) is the actor. They need separate training signals and separate update rules. If you try to blend them together in an end-to-end way, you just get wireheading. (I.e., if the (B) learning algorithm had free rein to update the parameters in (A), using the same update rule as (B), then it would immediately set (A) to return infinity all the time, i.e. wirehead.) (Humans can wirehead to some extent (see Post #9 [AF · GW]) but we need to explain why it doesn't happen universally and permanently within five minutes of birth.)
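The wireheading failure mode can be illustrated with a toy sketch. Everything here is an assumption made for illustration: the three actions, the `TRUE_FITNESS` table standing in for ground truth, the tabular critic, and the deterministic round-robin exploration schedule. It is not a model of the brain, just a small demonstration of why the critic needs its own training signal:

```python
# Hypothetical ground truth that evolution "cares about" (task A's target).
TRUE_FITNESS = {"stare_at_wall": -1.0, "sleep": 0.5, "eat": 1.0}


def train_separately(steps=200, lr=0.1):
    """Critic learns by supervised regression toward ground truth;
    the actor only reads the critic, it never writes to it."""
    critic = {a: 0.0 for a in TRUE_FITNESS}
    actions = list(TRUE_FITNESS)
    for t in range(steps):
        if t % 4 == 0:
            # Deterministic round-robin exploration (an assumption, to keep the demo reproducible).
            action = actions[(t // 4) % len(actions)]
        else:
            # Actor: pick whatever the critic currently scores highest.
            action = max(critic, key=critic.get)
        # Critic update: move the estimate toward the ground-truth signal.
        critic[action] += lr * (TRUE_FITNESS[action] - critic[action])
    return critic


def train_blended(steps=200, lr=0.1):
    """The actor's update rule ("make my score go up") is also applied
    to the critic's parameters. Ground truth is never consulted."""
    critic = {a: 0.0 for a in TRUE_FITNESS}
    for _ in range(steps):
        action = max(critic, key=critic.get)
        # d(score)/d(critic[action]) = 1, so gradient ascent just inflates the score.
        critic[action] += lr * 1.0
    return critic


if __name__ == "__main__":
    print("separate:", train_separately())
    print("blended: ", train_blended())
```

In the separate setup the critic's estimates stay bounded by the ground-truth values and the actor ends up preferring `eat`. In the blended setup the score of whichever action happens to be picked first grows without limit, regardless of its true fitness, which is the toy analogue of "set (A) to return infinity".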

↑ comment by Rafael Harth (sil-ver) · 2022-04-12T19:33:23.110Z · LW(p) · GW(p)

I think what you get is a person talking with no inhibitions whatsoever. Language models don’t match that.

What do you picture a language model with no inhibitions to look like? Because if I try to imagine it, then "something that outputs reasonable sounding text until sooner or later it fails hard" seems to be a decent fit. Of course, I haven't thought much about the generator/assessor distinction.

I mean, surely "inhibitions" of the language model don't map onto human inhibitions, right? Like, a language model without the assessor module (or a much worse assessor module) is just as likely to imitate someone who sounds unrealistically careful as someone who has no restraints.

I find your last paragraph convincing, but that of course makes me put more credence into the theory rather than less.

Replies from: gwern↑ comment by gwern · 2022-04-12T20:34:14.026Z · LW(p) · GW(p)

An analogy that comes to mind is sociopathy. Closely linked to fear/reward insensitivity and impulsivity. Something you see a lot in case studies of diagnosed sociopaths, or accounts of people who look obviously like sociopaths, is that they will be going along just fine, very competent and intelligent seeming, getting away with everything, until they suddenly do something which is just reckless, pointless, useless, and which no sane person could possibly think they'd get away with. Why did they do X, which caused the whole house of cards to come tumbling down and is why you are now reading this book or longform investigative piece about them? No reason. They just sorta felt like it. The impulse just came to them. Like jumping off a bridge.

Replies from: steve2152, sil-ver↑ comment by Steven Byrnes (steve2152) · 2022-04-13T17:31:18.868Z · LW(p) · GW(p)

Huh. I would have invoked a different disorder.

I think that if we replace the Thought Assessor & Steering Subsystem with the function “RPE = +∞ (regardless of what's going on)”, the result is a manic episode, and if we replace it with the function “RPE = -∞ (regardless of what's going on)”, the result is a depressive episode.

In other words, the manic episode would be kinda like the brainstem saying “Whatever thought you're thinking right now is a great thought! Whatever you're planning is an awesome plan! Go forth and carry that plan out with gusto!!!!” And the depressive episode would be kinda like the brainstem saying “Whatever thought you're thinking right now is a terrible thought. Stop thinking that thought! Think about anything else! Heck, think about nothing whatsoever! Please, anything but that thought!”
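As a very rough sketch of this thought experiment: assume, purely for illustration, that a thought is kept when its RPE is positive and dropped otherwise. The names `run_thoughts` and `toy_assessor`, the example thoughts, and the keep-if-positive rule are all hypothetical simplifications:

```python
import math


def run_thoughts(candidate_thoughts, rpe_fn):
    """Keep each candidate thought whose RPE is positive; discard the rest.
    (A deliberately crude stand-in for the real selection process.)"""
    return [thought for thought in candidate_thoughts if rpe_fn(thought) > 0]


# Hypothetical learned assessments for three candidate thoughts.
toy_assessor = {
    "finish the report": 1.0,
    "quit my job on a whim": -2.0,
    "call a friend": 0.5,
}

# Normal operation: the signal depends on what the thought actually is.
normal = run_thoughts(toy_assessor, toy_assessor.get)

# "RPE = +infinity regardless of what's going on": every thought is endorsed.
manic = run_thoughts(toy_assessor, lambda thought: math.inf)

# "RPE = -infinity regardless of what's going on": every thought is rejected.
depressive = run_thoughts(toy_assessor, lambda thought: -math.inf)

if __name__ == "__main__":
    print("normal:    ", normal)
    print("manic:     ", manic)
    print("depressive:", depressive)
```

With the constant positive signal, even the thought that the toy assessor would have vetoed goes through; with the constant negative signal, nothing survives.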

My thoughts about sociopathy are here [LW · GW]. Sociopaths can be impulsive (like everyone), but it doesn't strike me as a central characteristic, as it is in mania. I think there might sometimes be situations where a sociopath does X, and onlookers characterize it as impulsive, but in fact it's just what the sociopath wanted to do, all things considered, stemming from different preferences / different reward function. For example, my impression is that sociopaths get very bored very easily, and will do something that seems crazy and inexplicable from a neurotypical perspective, but seems a good way to alleviate boredom from their own perspective.

(Epistemic status: Very much not an expert on mania or depression, I've just read a couple papers. I've read a larger number of books and papers on sociopathy / psychopathy (which I think are synonyms?), plus there were two sociopaths in my life that I got to know reasonably well, unfortunately. More of my comments about depression here [LW · GW].)

↑ comment by Rafael Harth (sil-ver) · 2022-04-12T21:16:04.163Z · LW(p) · GW(p)

Do you think this describes language models?

Replies from: gwern↑ comment by gwern · 2022-04-12T21:17:26.221Z · LW(p) · GW(p)

'Insensitivity to reward or punishment' does sound relevant...

Replies from: sil-ver↑ comment by Rafael Harth (sil-ver) · 2022-04-12T22:06:17.557Z · LW(p) · GW(p)

Yes, but I didn't mean to ask whether it's relevant, I meant to ask whether it's accurate. Does the output of language models, in fact, feel like this? Seemed like something relevant to ask you since you've seen lots of text completions.

And if it does, what is the reason for not having long timelines? If neural networks only solved the easy part of the problem, that implies that they're a much smaller step toward AGI than many argued recently.

Replies from: gwern↑ comment by gwern · 2022-04-13T00:38:01.754Z · LW(p) · GW(p)

I said it was an analogy. You were discussing what intelligent human-level entities with inhibition control problems would hypothetically look like; well, as it happens, we do have such entities, in the form of sociopaths, and as it happens, they do not simply explode in every direction due to lacking inhibitions but often perform at high levels manipulating other humans until suddenly they explode. This is proof of concept that you can naturally get such streaky performance without any kind of exotic setup or design. Seems relevant to mention.

comment by Gunnar_Zarncke · 2024-04-19T20:16:31.723Z · LW(p) · GW(p)

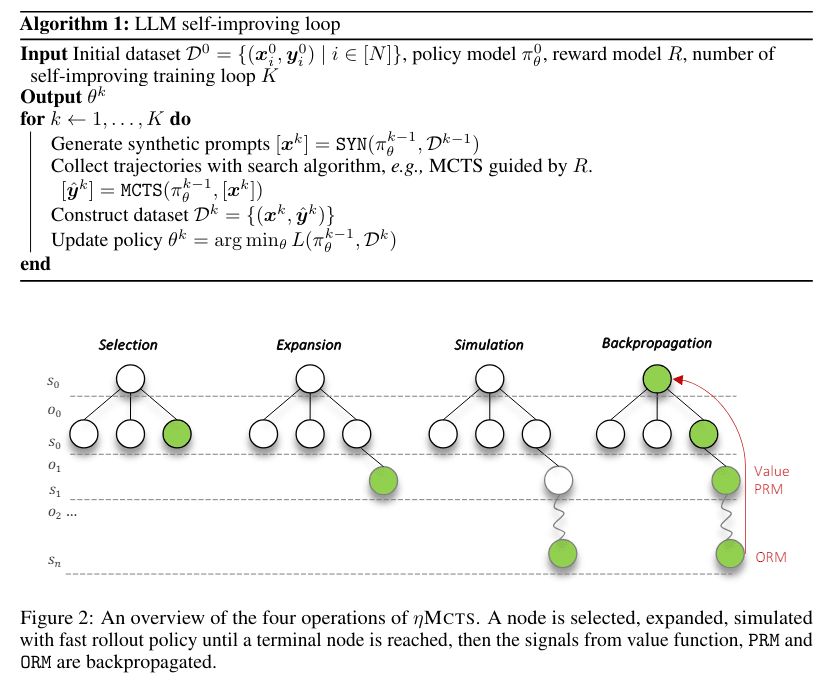

Toward Self-Improvement of LLMs via Imagination, Searching, and Criticizing

Abstract:

Despite the impressive capabilities of Large Language Models (LLMs) on various tasks, they still struggle with scenarios that involves complex reasoning and planning. Recent work proposed advanced prompting techniques and the necessity of fine-tuning with high-quality data to augment LLMs’ reasoning abilities. However, these approaches are inherently constrained by data availability and quality. In light of this, self-correction and self-learning emerge as viable solutions, employing strategies that allow LLMs to refine their outputs and learn from self-assessed rewards. Yet, the efficacy of LLMs in self-refining its response, particularly in complex reasoning and planning task, remains dubious. In this paper, we introduce ALPHALLM for the self-improvements of LLMs, which integrates Monte Carlo Tree Search (MCTS) with LLMs to establish a self-improving loop, thereby enhancing the capabilities of LLMs without additional annotations. Drawing inspiration from the success of AlphaGo, ALPHALLM addresses the unique challenges of combining MCTS with LLM for self-improvement, including data scarcity, the vastness search spaces of language tasks, and the subjective nature of feedback in language tasks. ALPHALLM is comprised of prompt synthesis component, an efficient MCTS approach tailored for language tasks, and a trio of critic models for precise feedback. Our experimental results in mathematical reasoning tasks demonstrate that ALPHALLM significantly enhances the performance of LLMs without additional annotations, showing the potential for self-improvement in LLMs

https://arxiv.org/pdf/2404.12253.pdf

This looks suspiciously like using the LLM as a Thought Generator, the MCTS roll-out as the Thought Assessor, and the reward model R as the Steering System. This would be the first LLM model that I have seen that would be amenable to brain-like steering interventions.

comment by Joe Kwon · 2022-03-04T19:36:56.035Z · LW(p) · GW(p)

Really appreciated this post and I'm especially excited for post 13 now! In the past month or two, I've been thinking about stuff like "I crave chocolate" and "I should abstain from eating chocolate" as being a result of two independent value systems (one whose policy was shaped by evolutionary pressure and one whose policy is... idk vaguely "higher order" stuff where you will endure higher states of cortisol to contribute to society or something).

I'm starting to lean away from this a little bit, and I think reading this post gave me a good idea of what your thoughts are, but it'd be really nice to get confirmation (and maybe clarification). Let me know if I should just wait for post 13. My prediction is that you believe there is a single (not dual) generator of human values, which are essentially moderated at the neurochemical level, like "level of dopamine/serotonin/cortisol". And yet, this same generator, due to our sufficiently complex "thought generator", can produce plans and thoughts such as "I should abstain from eating chocolate" even though it would be a dopamine hit in the short-term, because it can simulate forward much further down the timeline, and believes that the overall neurochemical feedback will be better than caving into eating chocolate, on a longer time horizon. Is this correct?

If so, do you believe that because social/multi-agent navigation was essential to human evolution, the policy was heavily shaped by social world related pressures, which means that even when you abstain from the chocolate, or endure pain and suffering for a "heroic" act, in the end, this can all still be attributed to the same system/generator that also sometimes has you eat sugary but unhealthy foods?

Given that my angle on attempting to contribute to AI Alignment is doing stuff to better elucidate what "human values" even is, I feel like I should try to resolve the competing ideas I've absorbed from LessWrong: 2 distinct value systems vs. singular generator of values. This post was a big step for me in understanding how the latter idea can be coherent with the apparent contradictions between hedonistic and higher-level values.

Replies from: steve2152↑ comment by Steven Byrnes (steve2152) · 2022-03-04T20:25:38.807Z · LW(p) · GW(p)

Thanks!

Right, I think there's one reward function (well, one reward function that's relevant for this discussion), and that for every thought we think, we're thinking it because it's rewarding to do so—or at least, more rewarding than alternative thoughts. Sometimes a thought is rewarding because it involves feeling good now, sometimes it's rewarding because it involves an expectation of feeling good in the distant future, sometimes it's rewarding because it involves an expectation that it will make your beloved friend feel good, sometimes it's rewarding because it involves an expectation that it will make your admired in-group members very impressed with you, etc.

I think that the thing that gets rewarded is thoughts / plans, not just actions / states. So we don't have to assume that the Thought Generator is proposing an action that's unrewarding now (going to the gym) in order to get into a more-rewarding state later on (being ripped). Instead, the Thought Generator can generate one thought right now, “I'm gonna go to the gym so that I can get ripped”. That one thought can be rewarding right now, because the “…so that I can get ripped” is right there in the thought, providing evidence to the brainstem that the thought should be rewarded, and that evidence can plausibly outweigh the countervailing evidence from the “I'm gonna go to the gym…” part of the thought.

I do think there's still an adjustable parameter in the brain related to time-discounting, even if the details are kinda different than in normal RL. But I don't see a strong connection between that and social instincts. For example, if you abstain from ice cream to avoid a stomach ache, that's a time-discounting thing, but it's not a social-instincts thing. It's possible that social animals in general are genetically wired to time-discount less than non-social animals, but I don't have any particular reason to expect that to be the case. Or, maybe humans in particular are genetically wired to time-discount less than other animals, I don't know, but if that's true, I still wouldn't expect that it has to do with humans being social; rather I would assume that it evolved because humans are smarter, and therefore human plans are unusually likely to work out as predicted, compared to other animals.

I think social instincts come from having things in the innate reward function that track “having high status in my in-group” and “being treated fairly” and “getting revenge” and so on. (To a first approximation.) Post #13(ish) will be a (hopefully) improved and updated version of this discussion [LW · GW] of how such things might get actually incorporated into the reward function, given the difficulties related to symbol-grounding. You might also be interested in my post (Brainstem, Neocortex) ≠ (Base Motivations, Honorable Motivations) [LW · GW].

Hope this helps, happy to talk more, either here or by phone if you think that would help. :)

comment by Nathan Helm-Burger (nathan-helm-burger) · 2023-07-06T20:54:00.914Z · LW(p) · GW(p)

I recommend this paper on the subject for additional reading:

The basal ganglia select the expected sensory input used for predictive coding

comment by Gunnar_Zarncke · 2022-06-17T09:42:50.701Z · LW(p) · GW(p)

Relevant affective neuroscience article, which seems to discuss a very related model:

Cross-Species Affective Neuroscience Decoding of the Primal Affective Experiences of Humans and Related Animals

Abstract:

Background

The issue of whether other animals have internally felt experiences has vexed animal behavioral science since its inception. Although most investigators remain agnostic on such contentious issues, there is now abundant experimental evidence indicating that all mammals have negatively and positively-valenced emotional networks concentrated in homologous brain regions that mediate affective experiences when animals are emotionally aroused. That is what the neuroscientific evidence indicates.

Principal Findings

The relevant lines of evidence are as follows: 1) It is easy to elicit powerful unconditioned emotional responses using localized electrical stimulation of the brain (ESB); these effects are concentrated in ancient subcortical brain regions. Seven types of emotional arousals have been described; using a special capitalized nomenclature for such primary process emotional systems, they are SEEKING, RAGE, FEAR, LUST, CARE, PANIC/GRIEF and PLAY. 2) These brain circuits are situated in homologous subcortical brain regions in all vertebrates tested. Thus, if one activates FEAR arousal circuits in rats, cats or primates, all exhibit similar fear responses. 3) All primary-process emotional-instinctual urges, even ones as complex as social PLAY, remain intact after radical neo-decortication early in life; thus, the neocortex is not essential for the generation of primary-process emotionality. 4) Using diverse measures, one can demonstrate that animals like and dislike ESB of brain regions that evoke unconditioned instinctual emotional behaviors: Such ESBs can serve as ‘rewards’ and ‘punishments’ in diverse approach and escape/avoidance learning tasks. 5) Comparable ESB of human brains yield comparable affective experiences. Thus, robust evidence indicates that raw primary-process (i.e., instinctual, unconditioned) emotional behaviors and feelings emanate from homologous brain functions in all mammals (see Appendix S1), which are regulated by higher brain regions. Such findings suggest nested-hierarchies of BrainMind affective processing, with primal emotional functions being foundational for secondary-process learning and memory mechanisms, which interface with tertiary-process cognitive-thoughtful functions of the BrainMind.

comment by wenboown · 2023-10-18T15:25:23.327Z · LW(p) · GW(p)

It’s just a relatively simple, hardcoded input-output machine.

First, I am deeply fascinated by the effort you put into organizing the thoughts around this topic and writing these articles! Please accept my respect!

I haven't read through all the articles yet, so you might have mentioned the following somewhere already: I can imagine the "hardcoding" coming from our genes, so it is "programmed" through the process of natural selection during evolution. So maybe the statement "no explicit goals for the future" needs to be refined a little bit? I think the Steering Subsystem may not have explicit goals for the near future, but it is there to ensure the survival of the species. Note also that natural selection favors the survival of the species over the survival of the individual.

Replies from: steve2152↑ comment by Steven Byrnes (steve2152) · 2023-10-18T19:16:41.406Z · LW(p) · GW(p)

Thanks!

I think it’s important to distinguish

- (A) “this thing has explicit goals for the future”, versus

- (B) “this thing was designed to accomplish one or more goals”.

For example, I, Steve, qualify as (A) because I am a thing with explicit goals for the future—among many other things, I want my children to have a good life. Meanwhile, a sandwich qualifies as (B) but not (A). The sandwich was designed and built in order to accomplish one or more goals (to be yummy, to be nutritious, etc.), but the sandwich itself does not have explicit goals for the future. It’s just a sandwich. It’s not a goal-seeking agent. Do you see the difference?

(Confusingly, I am actually an example of both (A) and (B). I’m an example of (A) because I want my children to have a good life. I’m an example of (B) because I was designed by natural selection to have a high inclusive genetic fitness.)

Now, if you’re saying that the Steering Subsystem is an example of (B), then yes I agree, that’s absolutely true. What I was saying there was that it is NOT an example of (A). Do you see what I mean?

comment by Marthinwurer (marthinwurer) · 2022-03-04T22:34:11.807Z · LW(p) · GW(p)

I've been reading through a lot of your posts and I'm trying to think of how to apply this knowledge to an RL agent, specifically for a contest (MineRL) where you're not allowed to use any hardcoded knowledge besides the architecture, training algorithm, and broadly applicable heuristics like curiosity. Unfortunately, I keep running into the hardcoded-via-evolution parts of your model. It doesn't seem like the steering subsystem can be replaced with just the raw reward signal, and it also doesn't seem like it can be easily learned via regular RL. Do you have any ideas on how to replace those kinds of evolved systems in that kind of environment?