[Intro to brain-like-AGI safety] 5. The “long-term predictor”, and TD learning

post by Steven Byrnes (steve2152) · 2022-02-23T14:44:53.094Z · LW · GW

(Last revised: July 2024. See changelog at the bottom.)

5.1 Post summary / Table of contents

Part of the “Intro to brain-like-AGI safety” post series [? · GW].

In the previous post [AF · GW], I discussed the “short-term predictor”—a circuit which, thanks to a learning algorithm, emits an output that predicts a ground-truth supervisory signal arriving a short time (e.g. a fraction of a second) later.

In this post, I propose that we can take a short-term predictor, wrap it up into a closed loop involving a bit more circuitry, and we wind up with a new module that I call a “long-term predictor”. Just like it sounds, this circuit can make longer-term predictions, e.g. “I’m likely to eat in the next 10 minutes”. This circuit is closely related to Temporal Difference (TD) learning, as we’ll see.

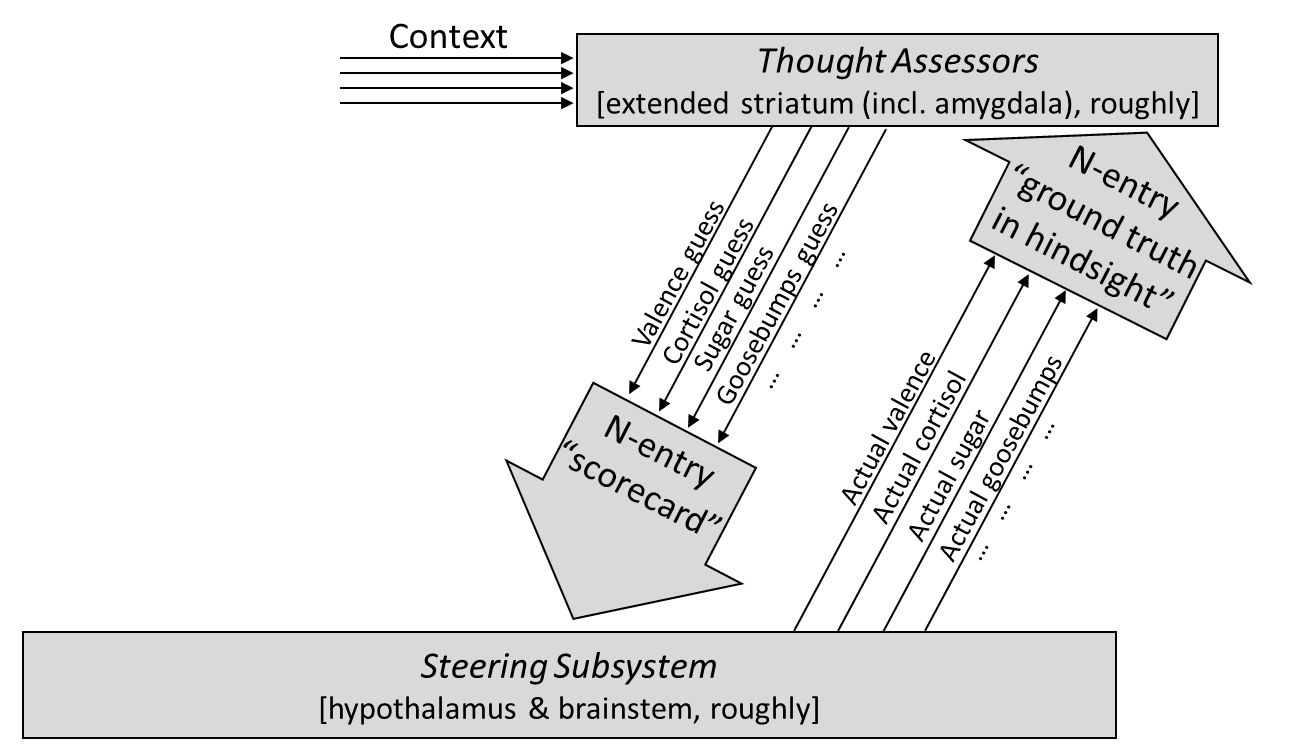

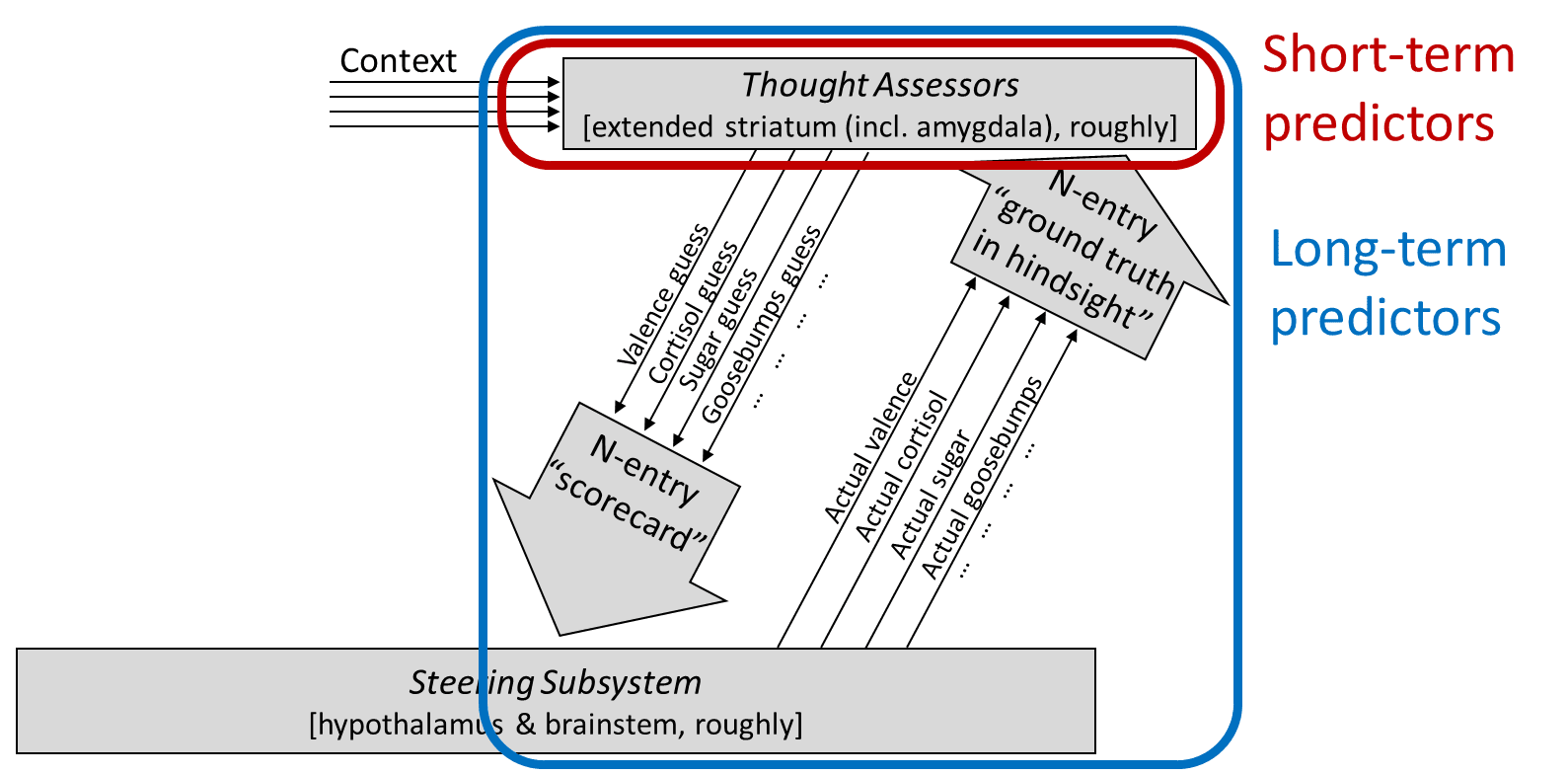

I will argue that there are a large collection of side-by-side long-term predictors in the brain, each comprising a short-term predictor in the Learning Subsystem [LW · GW] (more specifically, in the extended striatum—putamen, nucleus accumbens, lateral septum, part of the amygdala, etc.) that loops down to the Steering Subsystem [LW · GW] (hypothalamus and brainstem) and then back up via a dopamine neuron. These long-term predictors make predictions about biologically-relevant inputs and outputs—for example, one long-term predictor might predict whether I’ll feel pain in my arm, another whether I’ll get goosebumps, another whether I’ll release cortisol, another whether I’ll eat, and so on. Moreover, one of these long-term predictors is essentially a value function for reinforcement learning.

All these predictors will play a major role in motivation—a story which I will finish in the next post [AF · GW].

Table of contents:

- Section 5.2 starts with a toy model of a “long-term predictor” circuit, consisting of the “short-term predictor” of the previous post [AF · GW], plus some extra components, wrapped into a closed loop. Getting a good intuitive understanding of this model will be important going forward, and I will walk through how that model would behave under different circumstances.

- Section 5.3 relates that model to Temporal Difference (TD) learning, which is closely related to a “long-term predictor”. I’ll show two variants of the long-term predictor circuit, a “summation” version (which leads to a value function that approximates the sum of future rewards), and a “switch” version (which leads to a value function that approximates the next reward, whenever it should arrive, which may not be for a long time). The “summation” version is universal in AI literature, but I’ll suggest that the “switch” version is probably closer to what happens in the brain. Incidentally, these two models are equivalent in cases like AlphaGo, wherein reward arrives in a lump sum right at the end of each episode (= each game of Go).

- Section 5.4 will relate long-term predictors to the neuroanatomy of the striatum and brainstem.

- Section 5.5 will offer five lines of evidence that lead me to believe this story: (1) It’s a sensible way to implement a biologically-useful capability; (2) It’s introspectively plausible; (3) It’s evolutionarily plausible; (4) It’s neuroscientifically plausible; (5) It’s compatible with the psychology literature on “Pavlovian conditioning”, “revaluation”, “incentive learning”, and more.

5.2 Toy model of a “long-term predictor” circuit

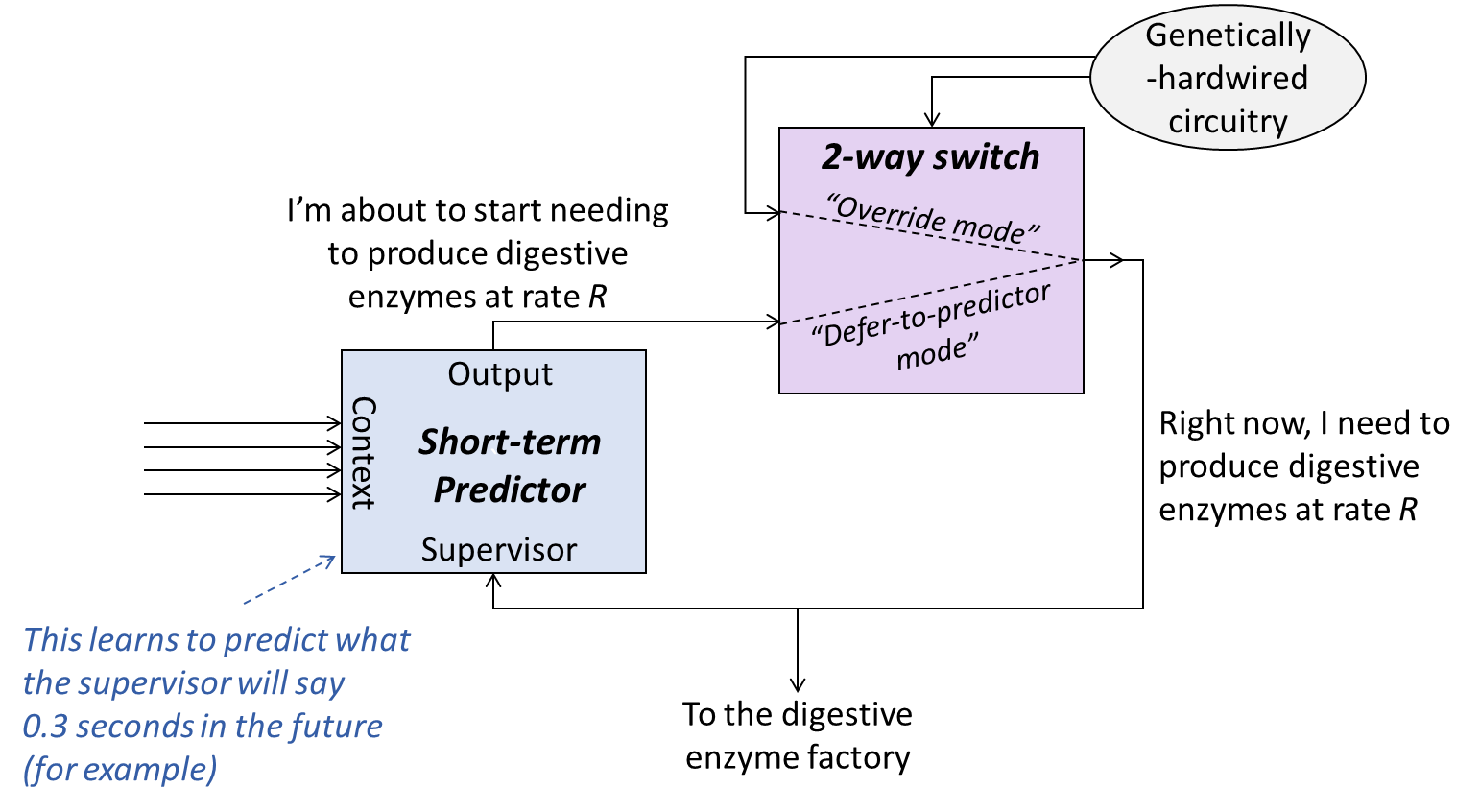

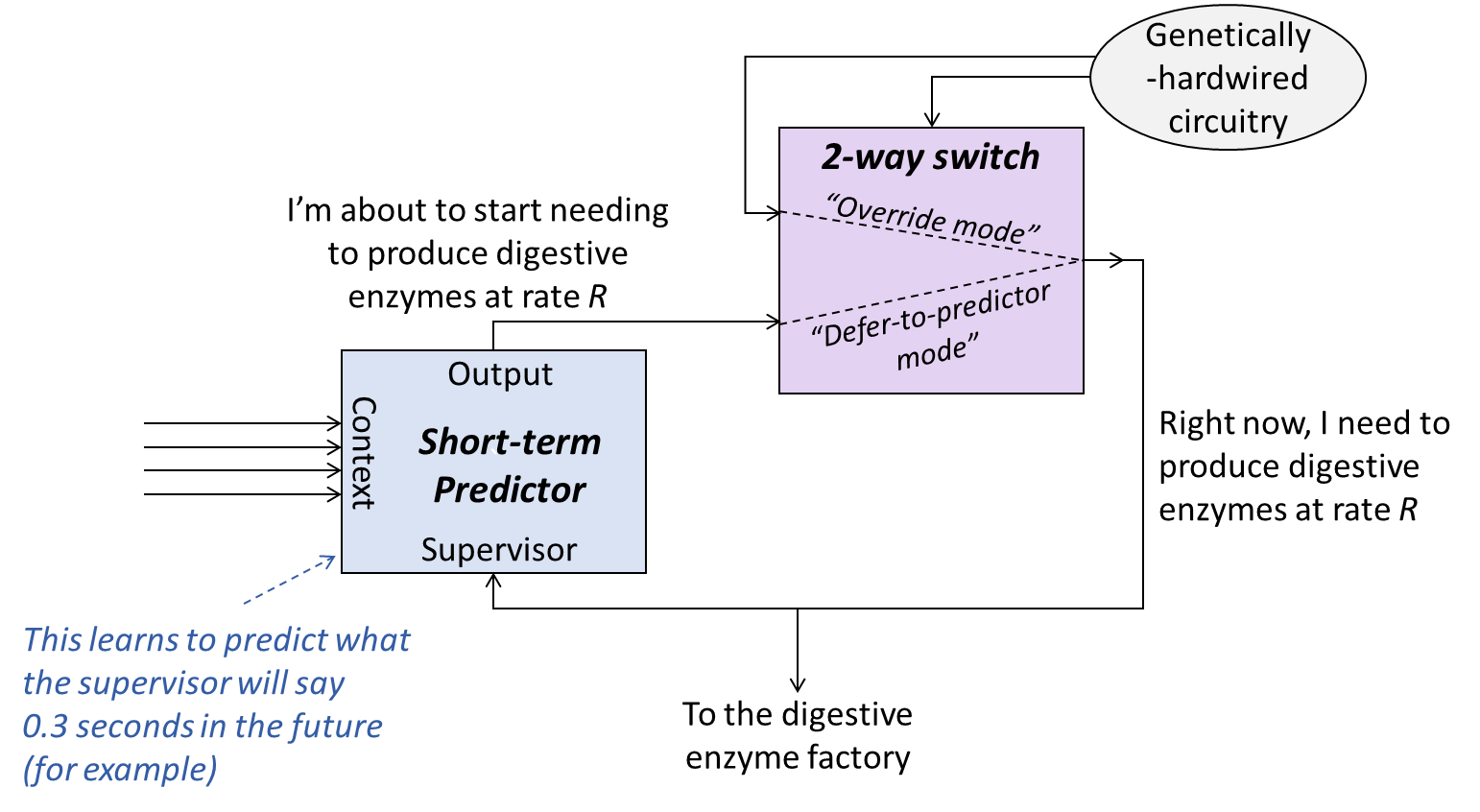

A “long-term predictor” is ultimately nothing more than a short-term predictor whose output signal helps determine its own supervisory signal. Here’s a toy model of what that can look like:

- The blue box is the short-term predictor of the previous post [AF · GW]. It optimizes its output signal such that it approximates what the supervisor signal will be in 0.3 seconds (as an example).

- The purple box is a 2-way switch. The toggle on the switch is controlled by genetically-hardwired circuitry (gray oval), according to the following rules:

- By and large, the switch is in the bottom setting (“defer-to-predictor mode”). This setting is akin to the genetically-hardwired circuitry “trusting” that the short-term predictor’s output is sensible, and therefore producing whatever amount of digestive enzymes it suggests.

- If the genetically-hardwired circuitry gets a signal that I’m eating something right now, and that I don’t have adequate digestive enzymes, it flips the switch to “override mode”. Regardless of what the short-term predictor says, it sends the signal to manufacture digestive enzymes.

- If the genetically-hardwired circuitry has been asking for digestive enzyme production for an extended period, and there’s still no food being eaten, then it again flips the switch to “override mode”. Regardless of what the short-term predictor says, it sends the signal to stop manufacturing digestive enzymes.

Note: You can assume that all the signals in the diagram can vary continuously across a range of values (as opposed to being discrete on/off signals), with the exception of the signal that toggles the 2-way switch.[1] In the brain, smoothly-adjustable signals might be created by, for example, rate-coding—i.e., encoding information as the frequency with which a neuron is firing.
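The switch rules above can be sketched in code. This is only an illustration under stated assumptions: the names `two_way_switch` and `SwitchDecision`, the `patience_seconds` timeout, and the particular override values (1.0 for “make enzymes”, 0.0 for “stop”) are hypothetical choices of mine, not anything specified by the toy model.

```python
from dataclasses import dataclass


@dataclass
class SwitchDecision:
    mode: str      # "defer" or "override"
    signal: float  # sent downstream AND fed back to the predictor as its supervisor


def two_way_switch(prediction, eating_now, enzymes_adequate,
                   seconds_requesting_without_food, patience_seconds=600.0):
    """Hypothetical stand-in for the purple switch plus the gray oval."""
    # Rule 2: eating right now without adequate enzymes -> force production on.
    if eating_now and not enzymes_adequate:
        return SwitchDecision("override", 1.0)
    # Rule 3: enzymes requested for a long time and still no food -> force off.
    if not eating_now and seconds_requesting_without_food > patience_seconds:
        return SwitchDecision("override", 0.0)
    # Rule 1 (the usual case): trust the short-term predictor's output.
    return SwitchDecision("defer", prediction)


# Usual case: the predictor's own output loops back as its supervisor.
print(two_way_switch(0.3, eating_now=False, enzymes_adequate=True,
                     seconds_requesting_without_food=0.0))
```

Note that in “defer” mode the returned signal is just the prediction itself, which is what closes the loop.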

5.2.1 Toy model walkthrough part 1: static context

Let’s walk through what would happen in this toy model.[2] To start with, assume that the “context” is static for some extended period of time. For example, imagine a situation where some ancient worm-like creature is digging in the sandy ocean bed for many consecutive minutes. Plausibly, its sensory environment would stay pretty much constant as long as it keeps digging, as would its thoughts and plans (insofar as this ancient worm-like creature has “thoughts and plans” in the first place). Or if you want another example of (approximately) static context—this one involving a human rather than a worm—hang on until the next subsection.

In the static-context case, let’s first consider what happens when the switch is sitting in “defer-to-predictor mode”: Since the output is looping right back to the supervisor, there is no error in the supervised learning module. The predictions are correct. The synapses aren’t changing. Even if this situation is very common, it has no bearing on how the short-term predictor eventually winds up behaving.

The times that do matter for the eventual behavior of the short-term predictor are those rare times that we go into “override mode”. Think of the overrides as like a sporadic “injection of ground truth”. They produce an error signal in the short-term predictor’s learning algorithm, changing its adjustable parameters (e.g. synapse strengths).

After enough life experience (a.k.a. “training” in ML terminology), the short-term predictor should have the property that the overrides balance out. There may still be occasional overrides that increase digestive-enzyme production, and there may still be occasional overrides that decrease digestive-enzyme production, but those two types of overrides should happen with similar frequency. After all, if they didn’t balance out, the short-term predictor’s internal learning algorithm would gradually change its parameters so that they did balance out.

And that’s just what we want! We’ll wind up with appropriate digestive enzyme production at appropriate times, in a way that properly accounts for any information available in the context data—what the animal is doing right now, what it’s planning to do in the future, what its current sensory inputs are, etc.
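Here is a minimal simulation of the static-context walkthrough, as a sketch rather than a claim about real neurons. I am assuming a single adjustable parameter (since the context never changes), a simple error-proportional update, and overrides that arrive at random; the function name and all numeric settings are made up for illustration.

```python
import random


def simulate_static_context(override_values, override_prob=0.05,
                            learning_rate=0.01, steps=20000, seed=0):
    """Return the predictor's output after `steps` time steps of static context."""
    rng = random.Random(seed)
    prediction = 0.0  # static context -> the predictor has one output to learn
    for _ in range(steps):
        if rng.random() < override_prob:
            # Override mode: a sporadic "injection of ground truth".
            supervisor = rng.choice(override_values)
        else:
            # Defer-to-predictor mode: the output loops back as the supervisor.
            supervisor = prediction
        error = supervisor - prediction  # exactly zero in defer mode
        prediction += learning_rate * error
    return prediction


# Overrides are "high" (1.0) two-thirds of the time and "low" (0.0) otherwise.
print(simulate_static_context([1.0, 1.0, 0.0]))
# With no overrides at all, nothing is ever learned.
print(simulate_static_context([1.0], override_prob=0.0))
```

In this sketch the output settles near the average override value, which is the point at which upward and downward corrections cancel. The defer-mode steps contribute zero error no matter how many of them there are.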

5.2.1.1 David-Burns-style exposure therapy—a possible real-life example of the toy model with static context?

As it happens, I recently read David Burns’s book Feeling Great (my review [LW · GW]). David Burns has a very interesting approach to exposure therapy—an approach that happens to serve as an excellent example of how my toy model works in the static-context situation!

Here’s the short version. (Warning: If you’re thinking of doing exposure therapy on yourself at home, at least read the whole book first!) Excerpt from the book:

For example, when I was in high school, I wanted to be on the stage crew of Brigadoon, a play my school was putting on, but it required overcoming my fear of heights since the stage crew had to climb ladders and work near the ceiling to adjust the lights and curtains. My drama teacher, Mr. Krishak, helped me overcome this fear with the very type of exposure techniques I’m talking about. He led me to the theater and put a tall ladder in the middle of the stage, where there was nothing nearby to grab or hold on to. He told me all I had to do was stand on the top of the ladder until my fear disappeared. He reassured me that he’d stand on the floor next to me and wait.

I began climbing the ladder, step by step, and became more and more frightened. When I got to the top, I was terrified. My eyes were almost 18 feet from the floor, since the ladder was 12 feet tall, and I was just over 6 feet tall. I told Mr. Krishak I was in a panic and asked what I should do. Was there something I should say, do, or think about to make my anxiety go away? He shook his head and told me to just stand there until I was cured.

I continued to stand there in terror for about ten more minutes. When I told Mr. Krishak I was still in a panic, he assured me that I was doing great and that I should just stand there a few more minutes until my anxiety went away. A few minutes later, my anxiety suddenly disappeared. I couldn’t believe it!

I told him, “Hey, Mr. Krishak, I’m cured now!”

He said, “Great, you can come on down from the ladder now, and you can be on the stage crew of Brigadoon!”

I had a blast working on the stage crew. I absolutely loved climbing ladders and adjusting the lights and curtains near the ceiling, and I couldn’t even remember why or how I’d been so afraid of heights.

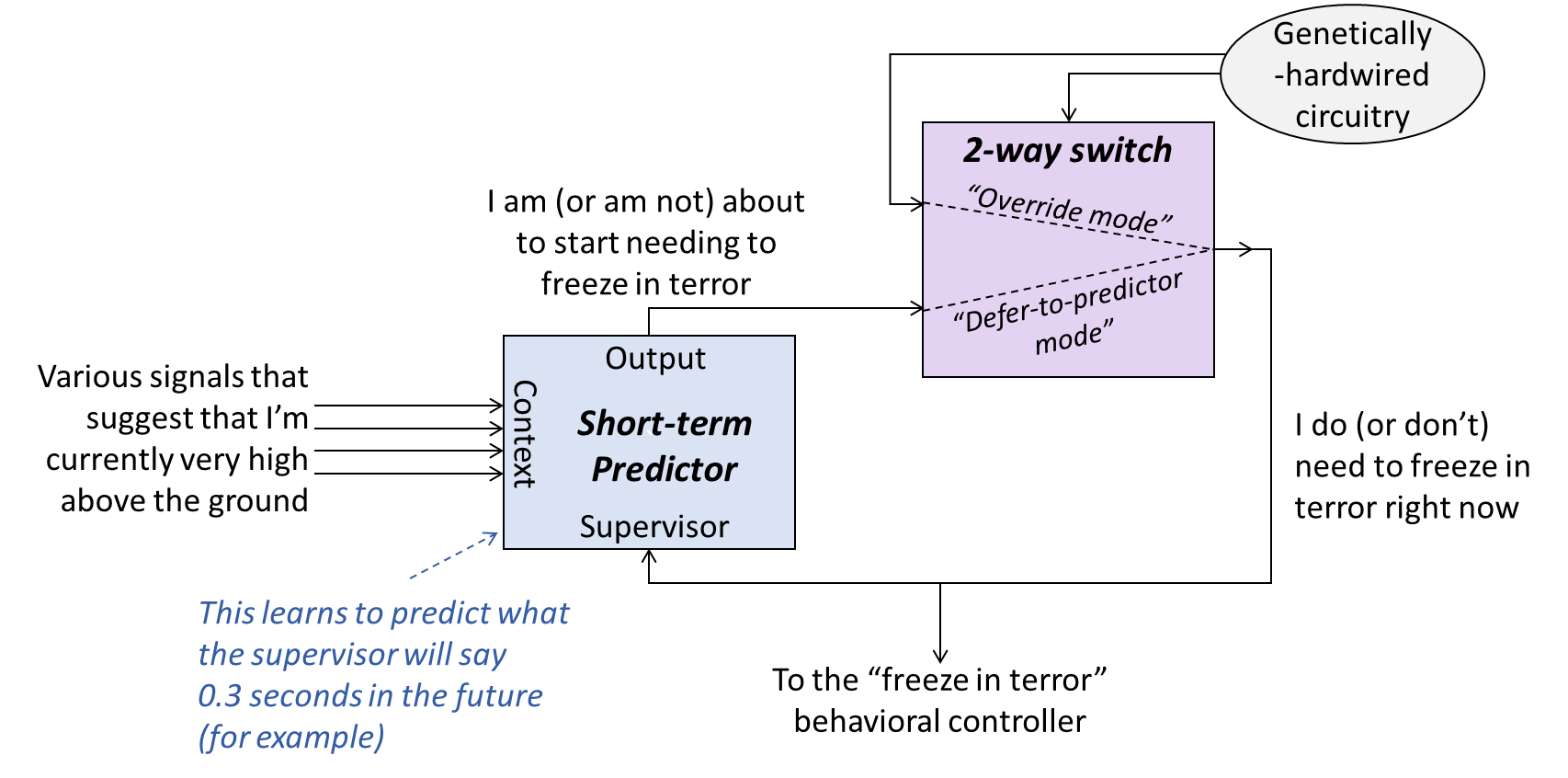

This story seems to be beautifully consistent with my toy model here. David started the day in a state where his short-term-predictors output “extremely strong fear reactions” when he was up high. As long as David stayed up on the ladder, those fear-reaction short-term-predictors kept on getting the same context data, and therefore they kept on firing their outputs at full strength. And David just kept feeling terrified.

Then, after 15 boring-yet-terrifying minutes on the ladder, some innate circuit in David’s brainstem issued an override—as if to say, “C’mon, nothing is changing, nothing is happening, we can’t just keep burning all these calories all day. It’s time to calm down now.” The short-term-predictors continued sending the same outputs as before, but the brainstem exercised its veto power, and forcibly reset David’s cortisol, heart-rate, etc., back to baseline. This “override” state immediately created error signals in the relevant short-term-predictors in David’s amygdala! And the error signals, in turn, led to model updates! The short-term predictors were all edited, and from then on, David was no longer afraid of heights.

This story kinda feels like speculation piled on top of speculation, but whatever, I happen to think it’s right. If nothing else, it’s good pedagogy! Here’s the diagram for this situation; make sure you can follow all the steps.

5.2.2 Toy model walkthrough, assuming changing context

The previous subsections assumed static context lines (constant sensory environment, constant behaviors, constant thoughts and plans, etc.). What happens if the context is not static?

If the context lines are changing, then it’s no longer true that learning happens only at “overrides”. If the context changes in the absence of “overrides”, the output will change too, and the new output will be treated as ground truth for what the old output should have been. Again, this seems to be just what we want: if we learned something new and relevant in the last second, then our current expectation should be more accurate than our previous expectation, and thus we have a sound basis for updating our models.
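The changing-context case can be sketched with a lookup table in place of the short-term predictor. The assumptions here are mine: each context is a string, the predictor stores one number per context, and the context names are invented. The key line is the update, where whatever comes out of the switch now serves as the supervisor for the previous moment’s output.

```python
def train_changing_context(episode, n_episodes=500, learning_rate=0.1):
    """episode: list of (context, override) pairs; override is None in defer mode."""
    prediction = {}  # hypothetical tabular short-term predictor
    for _ in range(n_episodes):
        previous_context = None
        for context, override in episode:
            current_output = prediction.get(context, 0.0)
            # The switch: defer to the predictor unless ground truth is injected.
            switch_output = current_output if override is None else override
            if previous_context is not None:
                # The new signal is treated as ground truth for the old output.
                old = prediction.get(previous_context, 0.0)
                prediction[previous_context] = old + learning_rate * (switch_output - old)
            previous_context = context
    return prediction


episode = [("see_kitchen", None), ("see_plate", None), ("food_in_mouth", 1.0)]
learned = train_changing_context(episode)
print(learned["see_kitchen"], learned["see_plate"])
```

After training, both earlier contexts predict the eventual override, even though no override ever occurred while the animal was in them.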

5.3 Value function calculation (TD learning) as a special case of long-term prediction

At this point, ML experts may recognize a resemblance to Temporal Difference (TD) learning. It’s not quite the same, though. The differences are:

First, TD learning is usually used in reinforcement learning (RL) as a method for going from a reward function to a value function. By contrast, I was talking about things like “digestive enzyme production”, which are neither rewards nor values.

In other words, there is a generally-useful motif that involves going from some immediate quantity X to “long term expectation of X”. The calculation of a value function from a reward function is an example of that motif, but it’s not the only useful example.

(As a matter of terminology, it seems to be generally accepted that the term “TD learning” can in fact apply to things that are not RL value functions.[3] However, in my own experience, as soon as I mention “TD learning”, the people I’m talking to immediately assume I must be talking about RL value functions. So I want to be clear here.)

Second, to get something closer to traditional TD learning, we’d need to replace the 2-way switch with a 2-way summation—and then the “overrides” would be analogous to rewards. Much more on “switch vs summation” in the next subsection.

Third, there are many additional ways to tweak the circuit which are frequently used in AI textbooks, and some of those may be involved in the brain circuits too. For example, we can put in time-discounting, or different emphases on false-positives vs false-negatives (see my discussion of distributional learning in a footnote of the previous post [LW · GW]), etc.

To keep things simple, I will be ignoring all these possibilities (including time-discounting) in the discussion below.

5.3.1 Switch (i.e., value = expected next reward) vs summation (i.e., value = expected sum of future rewards)?

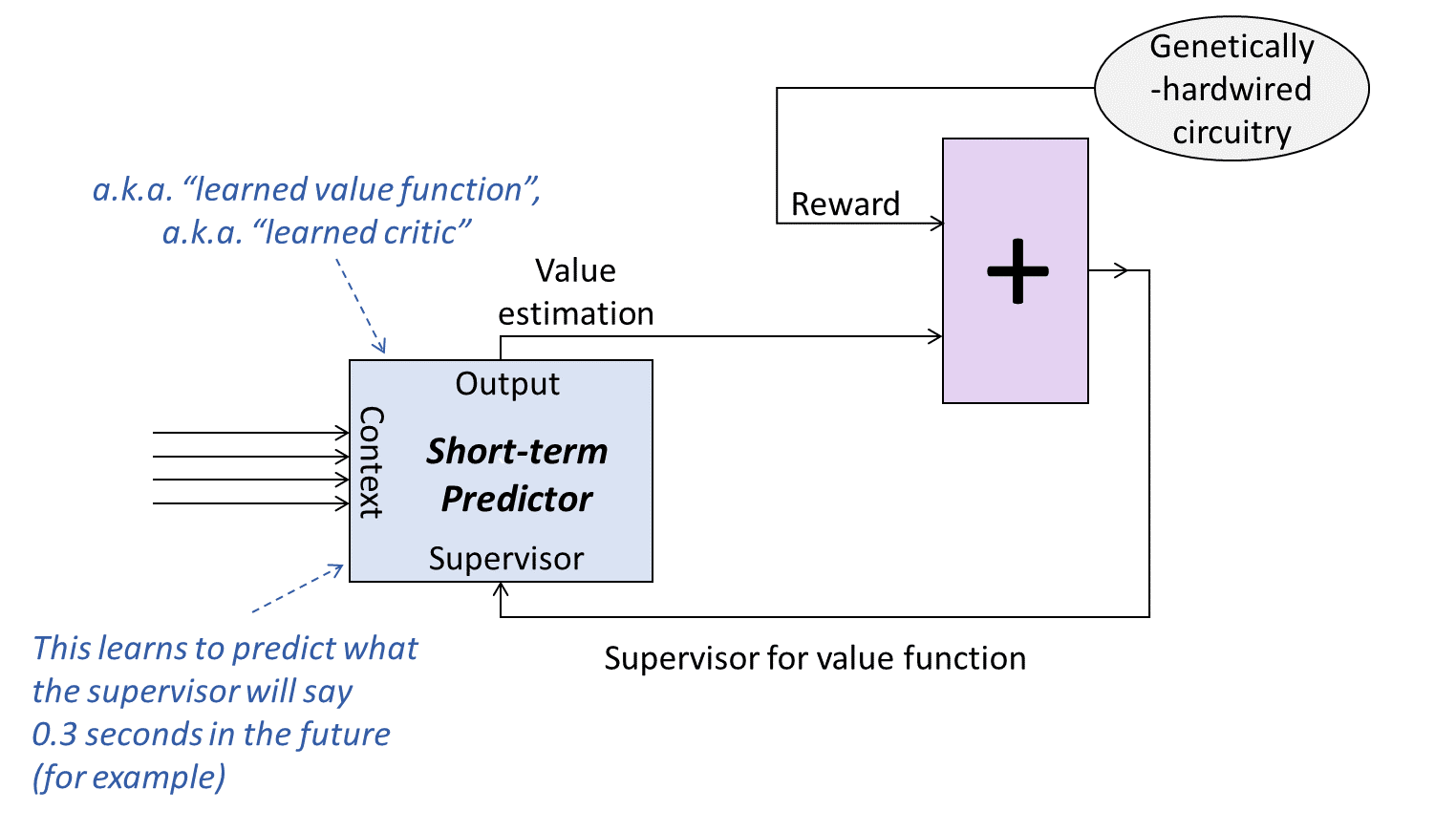

The figures above show two variants of our toy model. In one, the purple box is a two-way switch between “defer to the short-term predictor” and some independent “ground truth”. In the other, the purple box is a two-way summation instead.

The switch version trains the short-term-predictor to predict the next ground truth, whenever it should arrive.

The summation version trains the short-term-predictor to predict the sum of future ground truth signals.

The correct answer could also be “something in between switch and summation”. Or it could even be “none of the above”.
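The two variants differ only in how the supervisor signal is assembled from the ground truth and the predictor’s own next output. A sketch, ignoring time-discounting as in the rest of this post (the function names are mine, and `None` is my stand-in for “no ground truth right now”):

```python
def switch_target(ground_truth, next_prediction):
    """Switch: use the ground truth if there is one, else defer to the predictor."""
    return next_prediction if ground_truth is None else ground_truth


def summation_target(ground_truth, next_prediction):
    """Summation: ground truth (zero if nothing happened) plus the predictor's output."""
    return ground_truth + next_prediction


# No ground truth this step: both variants pass the prediction through.
print(switch_target(None, 0.5), summation_target(0.0, 0.5))
# Ground truth of 1.0 this step: switch replaces the prediction, summation adds to it.
print(switch_target(1.0, 0.5), summation_target(1.0, 0.5))
```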

RL papers universally use the summation version—i.e., “value is the expected sum of future rewards”. What about biology? And which is actually better?

It doesn’t always matter! Consider AlphaGo. Like every RL paper today, AlphaGo was originally formulated in the summation paradigm. But it happens to have one and only one nonzero reward signal per game, namely +1 at the end of the game if it wins, or -1 if it loses. In that case, switch vs summation makes no difference. The only difference is one of terminology:

- In the summation case, we would say “each non-terminal move in the Go game has reward=0”.

- In the switch case, we would say “each non-terminal move in the Go game has a reward of (null)”.

(Do you see why?)
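One way to check the claim is to compute, working backward through an episode, what a fully-trained predictor would be aiming for under each variant. This is a sketch with no time-discounting; the function names are hypothetical, and `None` stands for “reward of (null)”.

```python
def switch_values(ground_truths):
    """Value at each step = the next non-null ground truth at or after that step."""
    values, upcoming = [], None
    for g in reversed(ground_truths):
        if g is not None:
            upcoming = g
        values.append(upcoming)
    return values[::-1]


def summation_values(rewards):
    """Value at each step = the sum of rewards at or after that step."""
    values, total = [], 0.0
    for r in reversed(rewards):
        total += r
        values.append(total)
    return values[::-1]


# A Go-like episode: a single +1 at the very end. Both variants agree.
print(switch_values([None, None, None, 1.0]))
print(summation_values([0.0, 0.0, 0.0, 1.0]))

# Two rewards in one episode: now the variants disagree.
print(switch_values([None, 1.0, None, 1.0]))
print(summation_values([0.0, 1.0, 0.0, 1.0]))
```

With exactly one nonzero reward at the end, “the next reward” and “the sum of all future rewards” are the same number at every step, so the two targets coincide.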

But in other cases, it does matter. So back to the question: should it be switch or summation?

Let’s step back. What are we trying to do here?

One thing that a brain needs to do is make decisions that weigh cross-domain tradeoffs. If you’re a human, you need to decide whether to watch TV or go to the gym. If you’re some ancient worm-like creature, you need to “decide” whether to dig or to swim. Either way, this “decision” impacts energy balance, salt balance, probability of injury, probability of mating—you name it. The design goal in the decision-making algorithm is that you make the decision that maximizes inclusive genetic fitness. How might that goal be best realized?

One method involves building a value function that estimates the organism’s inclusive genetic fitness (compared to some arbitrary—indeed, possibly time-varying—baseline), conditional on continuing to execute a given course of action. Of course it won’t be a perfect estimate—real inclusive genetic fitness can only be calculated in hindsight, many generations after the fact. But once we have such a value function, however imperfect, we can plug it into an algorithm that makes decisions to maximize value (more on this in the next post [AF · GW]), and thus we get approximately-fitness-maximizing behavior.

So having a value function is key for making good decisions that weigh cross-domain tradeoffs. But nowhere in this story is the claim “value is the expectation of a sum of future rewards”! That’s a particular way of setting up the value-approximating algorithm, a method which might or might not be well suited to the situation at hand.

I happen to think that brains use something closer to the switch circuit, not the summation circuit, not only for homeostatic-type predictions (like the digestive enzymes example above), but also for value functions, contrary to mainstream RL papers. Again, I think it’s really “neither of the above” in all cases; just that it’s closer to switch.

Why do I favor “switch” over “summation”?

An example: sometimes I stub my toe and it hurts for 20 seconds; other times I stub my toe and it hurts for 40 seconds. But I don’t think of the latter as twice as bad as the former. In fact, even five minutes later, I wouldn’t remember which is which. (See the peak-end rule.) This is the kind of thing I would naturally expect from switch, but is an awkward fit for summation. It’s not strictly incompatible with summation; it just requires a more complicated, value-dependent reward function. As a matter of fact, if we allow the reward function to depend on value, then switch and summation can imitate each other.

Anyway, in upcoming posts, I’ll be assuming switch, not summation. I don’t think it matters very much for the big picture. I definitely don’t think it’s part of the “secret sauce” of animal intelligence, or anything like that. But it does affect some of the detailed descriptions.

The next post [AF · GW] will include more details of reinforcement learning in the brain, including how “reward prediction error” works and so on. I am bracing for lots of confused readers, who will be disoriented by the fact that I’m assuming a different relationship between value and reward than what everyone is used to. For example, in my picture, “reward” is a synonym for “ground truth for what the value function should be right now”—both should account for not only the organism’s current circumstances but also its future prospects. Sorry in advance for any confusion! I will do my best to be clear.

5.3.2 Example

After all that abstract discussion, I should probably go through a more concrete example illustrating how I think the long-term predictor works in the domain of reinforcement learning.

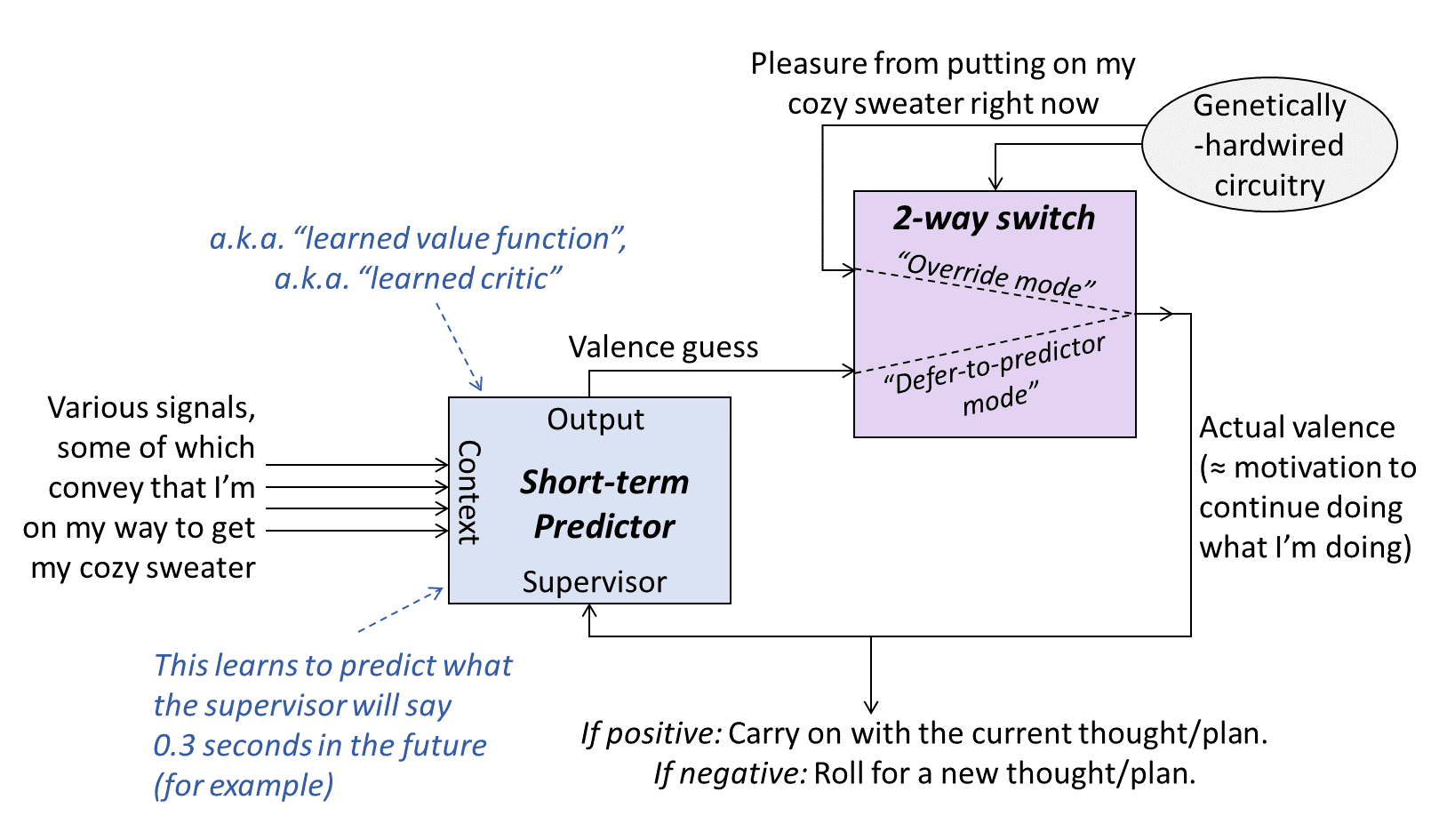

Suppose I’m downstairs and feeling cold. An idea pops into my head: “I’ll go put on my cozy sweater”. So I walk upstairs and put it on.

What just happened? Here’s a diagram:

This is the same kind of diagram as Section 5.2 above, but instead of predicting a digestive enzyme signal, the blue box is predicting a special signal I call valence. This circuit is closely related to the value function and reward function of actor-critic reinforcement learning, as above (and more in the next post, along with my five-post Valence series [LW · GW]).

Here the “override mode” is the pleasure from putting on the cozy sweater, which can be grounded in innate brainstem circuitry related to warmth, tactile feelings, and so on. At that point, when the sweater is on, the brainstem “knows” that something good has happened.

But the more interesting question is: what was happening during the thirty seconds that it took me to walk upstairs? I evidently had motivation to continue walking, or I would have stopped and turned around. But my brainstem hadn’t gotten any ground truth yet that there were good things happening. That’s where “defer-to-predictor mode” comes in! The brainstem, lacking strong evidence about what’s happening, sees a positive valence guess coming out of the striatum and says, in effect, “OK, sure, whatever, I’ll take your word for it.”

And now we’re in the static-context situation discussed above (Section 5.2.1), and therefore the short-term predictor will (after some life experience) output a good guess for the next override valence, whenever that may occur. And since the cozy sweater will be a positive valence override, the valence guesses preceding it will likewise be positive. And therefore I will be motivated to get the sweater, including spending 30 seconds walking up the stairs.

“…Hang on,” you might be thinking. “How was that an example of static context? First you were on the ground floor, and then you were walking up the stairs, then opening the drawer, and so on. That’s changing context, right?? Section 5.2.2, not 5.2.1!”

My response: Yes, part of the context is changing, such as my immediate sensory inputs. But I claim the most important part of the context data is not in fact changing, and that’s the idea, in my head, that I’m on my way to put on a sweater. That idea is in my head the whole time, from conceiving the idea, through walking up the stairs, right through the moment when I’m pulling on my cozy sweater. And that idea is represented by some set of cortical neurons, just like everything else in my conscious awareness, and thus it’s included among the context signals.

This “static-ish context” is critical for how the learning algorithm can actually work in practice. TD learning doesn’t necessarily need to laboriously walk back the credit assignment 0.1 seconds at a time, all the way back from the pleasure of the sweater to the bottom of the stairs. It can just do one step, from the pleasure of the sweater to the idea in my head that I’m putting on a sweater.
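To illustrate the one-step credit assignment, here is a sketch in which the predictor’s output is a sum of learned weights over the currently-active context features. Everything specific here is an assumption for illustration: the feature names, the linear form, and the rule of splitting each error evenly across active features.

```python
def predict(weights, features):
    return sum(weights.get(f, 0.0) for f in features)


def run_episode(weights, episode, learning_rate=0.5):
    """episode: list of (active_features, override); override is None in defer mode."""
    previous = None
    for features, override in episode:
        signal = predict(weights, features) if override is None else override
        if previous is not None:
            error = signal - predict(weights, previous)
            for f in previous:  # share the correction among active features
                weights[f] = weights.get(f, 0.0) + learning_rate * error / len(previous)
        previous = features
    return weights


places = ["downstairs", "stairs", "bedroom", "sweater_on"]
overrides = [None, None, None, 1.0]

# With a persistent "sweater_idea" feature active at every step.
with_idea = run_episode({}, [((p, "sweater_idea"), o) for p, o in zip(places, overrides)])
# Without it: only the immediate sensory context is available.
without_idea = run_episode({}, [((p,), o) for p, o in zip(places, overrides)])

print(predict(with_idea, ("downstairs", "sweater_idea")))
print(predict(without_idea, ("downstairs",)))
```

After a single episode, the predictor with the persistent idea feature already outputs a positive guess at the bottom of the stairs, because the one update at the override landed partly on that shared feature. The version without it would need further episodes to walk the credit back step by step.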

5.4 An array of long-term predictors involving the extended striatum & Steering Subsystem

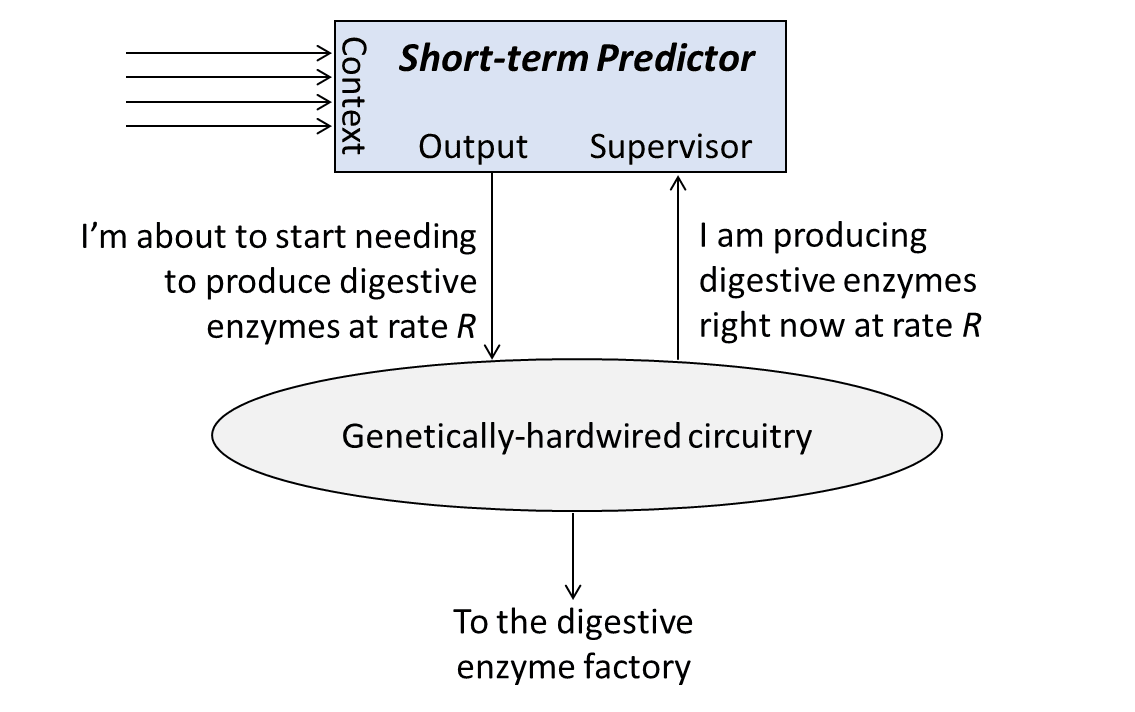

Here’s the long-term-predictor circuit from above:

I can lump together the 2-way switch with the rest of the genetically-hardwired circuitry, and then rearrange the boxes a bit, and I get the following:

Now, obviously digestive enzymes are just one example. Let’s draw in some more examples, add some hypothesized neuroanatomy, and include other terminology. Here’s the result:

Excellent! We’re halfway to my big picture of decision-making and motivation. The rest of the picture—including the “actor” part of actor-critic reinforcement learning—will come in the next post [AF · GW], and will fill in the hole in the top-left side of that diagram. (The term “Steering Subsystem” comes from Post #3 [AF · GW].)
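To make the “array” idea concrete, here is a sketch of several side-by-side predictors that all see the same context but are each trained by their own supervisory signal. The class and function names, the channel names, and the learning rule are illustrative assumptions. For brevity the supervisor is applied on the same step rather than a moment later, which matches the static-context case.

```python
class ShortTermPredictor:
    """One channel: learns to match its own dedicated supervisor."""

    def __init__(self, learning_rate=0.2):
        self.learning_rate = learning_rate
        self.weights = {}

    def guess(self, context):
        return sum(self.weights.get(f, 0.0) for f in context)

    def learn(self, context, supervisor):
        if not context:
            return
        error = supervisor - self.guess(context)
        for f in context:
            self.weights[f] = self.weights.get(f, 0.0) + self.learning_rate * error / len(context)


def steering_subsystem(guesses, ground_truth):
    """Per-channel switch: override where ground truth exists, else defer."""
    return {name: ground_truth.get(name, guess) for name, guess in guesses.items()}


def step(predictors, context, ground_truth):
    guesses = {name: p.guess(context) for name, p in predictors.items()}
    supervisors = steering_subsystem(guesses, ground_truth)
    for name, p in predictors.items():
        p.learn(context, supervisors[name])
    return supervisors


predictors = {name: ShortTermPredictor() for name in ("cortisol", "salivation")}
for _ in range(100):
    # Hypothetical experience: seeing a lion reliably comes with a cortisol override.
    step(predictors, ("see_lion",), {"cortisol": 1.0})

print(predictors["cortisol"].guess(("see_lion",)))
print(predictors["salivation"].guess(("see_lion",)))
```

Each channel ends up with its own learned association to the shared context: here the cortisol channel learns to fire for the lion while the salivation channel, which never received an override, stays silent.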

Here’s one more diagram and caption for pedagogical purposes.

In the next section, I’ll talk about why you should believe me, including (in Section 5.5.4) more on the neuroanatomy which I’m hinting at in this diagram.

5.5 Five reasons I like this “array of long-term predictors” picture

5.5.1 It’s a sensible way to implement a biologically-useful capability

If you start producing digestive enzymes before eating, you’ll digest faster. If your heart starts racing before you see the lion, then your muscles will be primed and ready to go when you do see the lion. Etc.

So these kinds of predictors seem obviously useful.

Moreover, as discussed in the previous post (Section 4.5.2) [AF · GW], the technique I’m proposing here (based on supervised learning) seems either superior to or complementary with other ways to meet these needs.

5.5.2 It’s introspectively plausible

For one thing, we do in fact start salivating before we eat the cracker, start feeling nervous before we see the lion, etc.

For another thing, consider the fact that all the actions I’m talking about in this post are involuntary: you cannot salivate on command, or dilate your pupils on command, etc., at least not in quite the same way that you can wiggle your thumb on command.

(More on voluntary actions in the next post [AF · GW], when I talk more about the cortex.)

I’m glossing over a bunch of complications here, but the involuntary nature of these things seems pleasingly consistent with the idea that they are being trained by their own dedicated supervisory signals, straight from the brainstem. They’re slaves to a different master, so to speak. We can kinda trick them into behaving in certain ways, but our control is limited and indirect (see Section 6.3.3 of the next post [LW · GW]).

5.5.3 It’s evolutionarily plausible

As discussed in Section 4.4 of the previous post [AF · GW], the simplest short-term predictor is extraordinarily simple, and the simplest long-term predictor is only a bit more complicated than that. And these very simple versions are already plausibly fitness-enhancing, even in very simple animals.

Moreover, as I discussed a while back (Dopamine-supervised learning in mammals & fruit flies [LW · GW]), there is an array of little learning modules in the fruit fly, playing a seemingly-similar role to what I’m talking about here. Those modules also use dopamine as a supervisory signal, and there is some genomic evidence of a homology between those circuits and the mammalian telencephalon.

5.5.4 It’s neuroscientifically plausible

The details here are out-of-scope, and somewhat more complicated than I’m making them out to be, but for (a major subset of) Thought Assessors, I believe the correspondence between my toy models and real neuroanatomy is as follows:

- The “short-term predictor” is a set of medium spiny neurons (a.k.a. spiny projection neurons) of the extended striatum (caudate, nucleus accumbens, lateral septum, part of the amygdala, etc.)

- Most of the extended striatum is dedicated to just a few Thought Assessors, namely the “valence guess” Thought Assessor (part of the brain’s “main” / “success-in-life” RL system), or the analogous Thought Assessors for any of the brain’s narrow-RL systems (those are off-topic for this series but see Section 1.5.6 of my valence series [LW · GW]).

- A smaller portion of the extended striatum—specifically, parts of the amygdala, lateral septum, and nucleus accumbens shell—comprises all the dozens-to-hundreds of “visceral” Thought Assessors, related to things like cortisol, freezing, immune system activation, and so on.

- The “Output” signal from the short-term predictor (a.k.a. the “guess”, or “scorecard” entry) goes to one of various Steering Subsystem areas (e.g. GPi, GPe, SNr, SNc, various cell groups in the hypothalamus).

- The “Supervisor” signal going into the short-term predictor is one or more dopamine neurons coming back (directly or indirectly) from the Steering Subsystem.[4]

(Caveat: I say “a major subset of Thought Assessors” are medium spiny neurons in the extended striatum, rather than “all Thought Assessors”, because I think the cortex learning algorithm has different loss functions in its different subregions, and thus I think that certain bits of the cortex are in some respects more like Thought Assessors than like parts of the cortex “Thought Generator” which I’ll be talking about at length in the next post. I’m leaving this caveat out of my diagrams in order to (over)simplify the discussion.)

Thus, for example, my claim is that if you zoom into (say) the central amygdala, you’ll find dozens of little genetically-determined subregions, each projecting to a specific set of genetically-determined Steering Subsystem targets, and with a genetically-determined narrowly-targeted supervisory signal coming back (directly or indirectly), often from the same Steering Subsystem target. For instance, maybe thus-and-such little group of amygdala neurons is universally associated with getting goosebumps. But each individual brain would learn within its lifetime a different set of patterns that trigger this group of goosebumps-related neurons.

Is that hypothesis consistent with the experimental evidence? My impression so far, from everything I’ve read, is “yes”, although I admit I don’t have super-solid evidence either way. More specifically:

For the valence-guess Thought Assessor, the gory details are out-of-scope, but I think my story is elegantly compatible with textbook basal ganglia circuitry, particularly the famous motif of “cortico-basal ganglia-thalamo-cortical loops”.

For the “visceral” Thought Assessors: On general priors, there’s certainly no question that the genome is capable of wiring up dozens-to-hundreds of little cell groups in dozens-to-hundreds of specific and innate ways; this is ubiquitous in the Steering Subsystem. What about more specifically? I’ve found a few things. As one small-scale example, Lischinsky et al. 2023 studied two little embryonically-defined amygdala subpopulations, and found that these two subpopulations wind up triggering in very different circumstances and connecting to very different downstream cells. As another example, at a larger scale, Heimer et al. 2008 says that the bundle of outputs of (what I’m calling) the “visceral” Thought Assessors is just a horrific mess, going every which way, as would be expected from hundreds of cell groups projecting to hundreds of different Steering Subsystem targets:

In the case of some macrosystem outputs, such as, for example, those from the accumbens core, the fibers course among these structures in tightly fasciculated fashion, giving off robust “bursts” of terminations only within or in the vicinity of particular structures. In other cases, good examples being the accumbens shell and extended amygdaloid outputs, the fibers are more loosely, if at all, fasciculated and have countless varicosities and short collaterals presumed to be sites of synaptic or parasynaptic transmission that involve not only defined structures but also points of indeterminate neural organization all along the course of the medial forebrain bundle. … As a consequence of their descent into this poorly defined organization, it is exceedingly difficult to conceive of precise, definitive mechanisms that outputs from the forebrain, including from the macrosystems, might engage to orchestrate purposeful, adaptive behavior from the extensive and sophisticated repertoire of autonomous, albeit restrictively programmed, hindbrain-spinal motor routines. But it is equivalently hard to dispel the notion that this is precisely what happens, and it seems advisable to presume that we are at present unable to perceive the relevant functional-anatomical relationships rather than to think they don’t exist.

As another piece of suggestive evidence, Lammel et al. 2014 mentions so-called “‘non-conventional’ VTA [dopamine] neurons” in “medial posterior VTA (PN and medial PBP)”. These seem to project to roughly the “visceral” Thought Assessor areas that I mentioned above, and it’s claimed that they have different firing patterns from other dopamine neurons. That seems intriguing, although I don’t have a more specific story than that right now. Relatedly, a recent paper discusses certain dopamine neurons which burst when aversive things happen (Verharen et al. 2020), and four of the five regions these neurons seem to "supervise" are associated with visceral Thought Assessors, in my opinion.[5] This makes sense because if the mouse freezes in terror, then that’s a positive error signal for the freeze-in-terror visceral Thought Assessor, as well as the raise-your-cortisol-level visceral Thought Assessor, and so on.

5.5.5 It offers a nice way to make sense of a wide variety of animal psychology phenomena, including Pavlovian conditioning, devaluation, and more

See my later post Incentive Learning vs Dead Sea Salt Experiment [LW · GW] for details on that. See also the last section of that post [LW · GW] for some contrasts between my model and a couple others in the psychology and neuro-AI literature.

5.6 Conclusion

Anyway, as usual I don’t pretend to have smoking-gun proof of my hypothesis (i.e. that the brain has an array of long-term predictors involving extended striatum-Steering Subsystem loops), and there are some bits that I’m still confused about. But considering the evidence in the previous subsection, and everything else I’ve read, I wind up feeling strongly that I’m broadly on the right track. I’m happy to discuss more in the comments. Otherwise, onward to the next post [AF · GW], where we will finally put everything together into a big picture of how I think motivation and decision-making work in the brain!

Changelog

July 2024: Since the initial version, I’ve made a bunch of changes.

The biggest changes are related to neuroanatomy. I was just really confused about lots of things in 2022, but I hope I’m converging towards correct answers! In particular:

I’ve gone through several iterations of the diagrams in this post, particularly including which neuroanatomy corresponds to which boxes.

There used to be a much longer discussion of neuroanatomy in Section 5.4, but much of that was incorrect, and the rest got moved into the newly-rewritten Section 5.5.4.

I originally had a discussion of dopamine diversity. I mostly deleted that, apart from a couple sentences, having been convinced that at least one of the pieces of evidence I discussed (Engelhart et al. 2019) was not related to “visceral Thought Assessors”, but rather had a different explanation. (Thanks Nathaniel Daw for the correction.) I also moved the discussion of distributional RL into a footnote of the previous post.

More minor things:

I added a note that all the diagrams in this post should be understood as the kinds of diagrams you see in FPGA design—i.e. at every instant, every block is running simultaneously, and every line (wire) is carrying a numerical value. It’s not like the kinds of diagrams you see in descriptions of serial program control flow, where we follow a path through the diagram, executing one thing at a time.

I’ve also changed the wording on the labels of the various diagrams a few times since the initial version. For example, where it used to say “Will lead to reward?” and “It was in fact leading to reward”, it now says “Valence guess” and “Actual valence”, respectively. The previous wording was OK, but I think the connotations are a bit better here, and also more consistent with my later Valence series [LW · GW].

I added a second concrete example story (involving a cozy sweater) as Section 5.3.2.

I replaced a brief discussion of the “dead sea salt experiment” with a link to my more recent post dedicated to that topic [LW · GW], in Section 5.5.5.

- ^

To be clear, in reality, there probably isn’t a discrete all-or-nothing 2-way switch here. There could be a “weighted average” setting, for example. Remember, this whole discussion is just a pedagogical “toy model”; I expect that reality is more complicated in various respects.

- ^

I note that I’m just running through this algorithm in my head; I haven’t simulated it. I’m optimistic that I didn’t majorly screw up, i.e. that everything I’m saying about the algorithm is qualitatively true, or at least can be qualitatively true with appropriate parameter settings and perhaps other minor tweaks.

- ^

Examples of using the terminology “TD learning” for something which is not related to RL reward functions include “TD networks”, and the Successor Representations literature (example), or this paper, etc.

- ^

As in the previous post, when I say that “dopamine carries the supervisory signal”, I’m open to the possibility that dopamine is actually a closely-related signal like the error signal, or the negative error signal, or the negative supervisory signal. It really doesn’t matter for present purposes.

- ^

The fifth area where that paper found dopamine neurons bursting under aversive circumstances, namely the tail of the striatum, has a different explanation I think—see here [AF · GW].

27 comments

comment by james.lucassen · 2022-04-01T14:30:10.891Z · LW(p) · GW(p)

I don't think I understand how the scorecard works. From:

[the scorecard] takes all that horrific complexity and distills it into a nice standardized scorecard—exactly the kind of thing that genetically-hardcoded circuits in the Steering Subsystem can easily process.

And this makes sense. But when I picture how it could actually work, I bump into an issue. Is the scorecard learned, or hard-coded?

If the scorecard is learned, then it needs a training signal from Steering. But if it's useless at the start, it can't provide a training signal. On the other hand, since the "ontology" of the Learning subsystem is learned-from-scratch, then it seems difficult for a hard-coded scorecard to do this translation task.

Replies from: steve2152↑ comment by Steven Byrnes (steve2152) · 2022-04-01T17:14:33.224Z · LW(p) · GW(p)

The categories are hardcoded, the function-that-assigns-a-score-to-a-category is learned. Everybody has a goosebumps predictor, everyone has a grimacing predictor, nobody has a debt predictor, etc. Think of a school report card: everyone gets a grade for math, everyone gets a grade for English, etc. But the score-assigning algorithm is learned.

So in the report card analogy, think of a math TA ( = Teaching Assistant = Thought Assessor) who starts out assigning math grades to students randomly, but the math professor (=Steering Subsystem) corrects the TA when its assigned score is really off-base. Gradually, the math TA learns to assign appropriate grades by looking at student tests. In parallel, there’s an English class TA (=Thought Assessor), learning to assign appropriate grades to student essays based on feedback from the English professor (=Steering Subsystem).

The TAs (Thought Assessors) are useless at the start, but the professors aren't. Back to biology: If you get shocked, then the Steering Subsystem says to the “freezing in fear” Thought Assessor: “Hey, you screwed up, you should have been sending a signal just now.” The professors are easy to hardwire because they only need to figure out the right answer in hindsight. You don't need a learning algorithm for that.
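As a rough illustration of the report-card picture, here is a minimal Python sketch. Everything in it is a hypothetical stand-in: the category names, the two-feature "context", the linear scoring rule, and the learning rate are assumptions chosen for brevity, not claims about the actual circuitry.

```python
# Hypothetical toy: the categories are hardcoded, the scoring functions are learned.
CATEGORIES = ("goosebumps", "freezing_in_fear")  # fixed "report card" rows


class ThoughtAssessor:
    """One 'TA': starts out useless, learns from hindsight corrections."""

    def __init__(self, n_features):
        self.weights = [0.0] * n_features  # learned from scratch

    def score(self, context):
        return sum(w * x for w, x in zip(self.weights, context))

    def correct(self, context, ground_truth, lr=0.1):
        # Delta-rule update driven by the hindsight error signal.
        error = ground_truth - self.score(context)
        self.weights = [w + lr * error * x for w, x in zip(self.weights, context)]


def steering_hindsight(event):
    """The 'professor': hardwired, and only needs the right answer after the fact."""
    shocked = event == "shock"
    return {"goosebumps": 0.0, "freezing_in_fear": 1.0 if shocked else 0.0}


assessors = {name: ThoughtAssessor(n_features=2) for name in CATEGORIES}
TONE, SILENCE = [1.0, 0.0], [0.0, 1.0]  # made-up learned context features

for _ in range(50):
    for context, event in ((TONE, "shock"), (SILENCE, "nothing")):
        truth = steering_hindsight(event)
        for name, assessor in assessors.items():
            assessor.correct(context, truth[name])

freeze_given_tone = assessors["freezing_in_fear"].score(TONE)
freeze_given_silence = assessors["freezing_in_fear"].score(SILENCE)
```

In this toy, `steering_hindsight` never changes and contains no learning, while each `ThoughtAssessor` begins with all-zero weights and only becomes useful through the corrections it receives.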

comment by TLW · 2022-02-26T20:09:36.057Z · LW(p) · GW(p)

I'm liking[1] this theory more and more.

In the static-context case, let’s first consider what happens when the switch is sitting in “defer-to-predictor mode”: Since the output is looping right back to the supervisor, there is no error in the supervised learning module. The predictions are correct. The synapses aren’t changing. Even if this situation is very common, it has no bearing on how the short-term predictor eventually winds up behaving.

One solution to a -300ms delay connected to its own input is a constant output. However, this is part of an infinite class of solutions. Any function is a solution to this.

(Admittedly, with any error metric the optimum solution is a constant output.)

Output stability here depends on the error gain through the loop. (Control theory is not my forte, but I believe to analyze this rigorously control theory is what you'd want to look into.)

If the error gain is sub-unity, the system is stable and will converge to a constant output.

The error gain being unity is the critical value where the system is on the edge of stability.

If the error gain is super-unity, the system is unstable and will go into oscillations.
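To make the three regimes concrete, here is a minimal Python sketch. It assumes, purely for illustration, that "error gain" can be modeled as a single scalar that multiplies a deviation once per trip around the loop; the function name and the numbers are made up.

```python
def loop_amplitudes(gain, trips=20, initial=1.0):
    """Track the size of a deviation as it circulates around a feedback loop.

    Assumption: each trip around the loop multiplies the deviation by `gain`.
    """
    amplitudes = [initial]
    for _ in range(trips):
        amplitudes.append(amplitudes[-1] * gain)
    return amplitudes


sub_unity = loop_amplitudes(0.9)    # deviation shrinks toward zero
unity = loop_amplitudes(1.0)        # deviation persists unchanged
super_unity = loop_amplitudes(1.1)  # deviation grows without bound
```

Under this simplification, the deviation after n trips is just the initial deviation times the gain to the n-th power, which is why unity is the boundary between decay and growth.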

Or, to bring this back to what this means for a predictor:

Sub-unity error gain means 'if the current input is X and the predictor predicts the input will be Y in 300ms, the predictor outputs .'

Unity error gain means 'if the current input is X and the predictor predicts the input will be Y in 300ms, the predictor outputs .'

Super-unity error gain means 'if the current input is X and the predictor predicts the input will be Y in 300ms, the predictor outputs .'

Super-unity error gain is 'obviously' suboptimal behavior for a human brain, so we'd probably end up with the error amplification tuned to under the critical value. Ditto, a predictor that systematically underestimated system changes is also "obviously" suboptimal. A 'perfect' predictor corresponds to unity error gain.

So all told you'd expect the predictors to be tuned to a gain that's as close as possible to unity without going over.

...hm. Actually, predictors going haywire with a ~300ms (~3Hz) period sounds a lot like a seizure. Which would nicely explain why humans do occasionally get seizures. (Or rather, why they aren't evolved out.) For ideal prediction you want an error gain as close as possible to unity... but too close to unity and variations in effective error gain mean that you're suddenly overunity and get rampant 300ms oscillations.

- ^

In the sense of "it seems plausible and explains things that I haven't heard other good explanations for"

↑ comment by Steven Byrnes (steve2152) · 2022-02-27T13:27:18.084Z · LW(p) · GW(p)

The predictor is a parametrized function output = f(context, parameters) (where "parameters" are also called "weights"). If (by assumption) context is static, then you're running the function on the same inputs over and over, so you have to keep getting the same answer. Unless there's an error changing the parameters / weights. But the learning rate on those parameters can be (and presumably would be) relatively low. For example, the time constant (for the exponential decay of a discrepancy between output and supervisor when in "override mode") could be many seconds. In that case I don't think you can get self-sustaining oscillations in "defer to predictor" mode.

Then maybe you'll say "What if it's static context except that there's a time input to the context as well?" But I still don't see how you would learn oscillations that aren't in the exogenous data.

There could also be a low-pass filter on the supervisor side. Hmm, actually, maybe that amounts to the same thing as the slow parameter updates I mentioned above.

I think I disagree that "perfect predictors" are what's wanted here. The input data is a mix of regular patterns and noise / one-off idiosyncratic things. You want to learn the patterns but not learn the noise. So it's good to not immediately and completely adapt to errors in the model. (Also, there's always learning-during-memory-replay for genuinely important things that happen only once and quickly.)

Replies from: TLW↑ comment by TLW · 2022-02-27T20:40:10.166Z · LW(p) · GW(p)

I disagree; let me try to work through where we diverge.

A 300ms predictor outputting a sine wave with period 300ms into its own supervisor input has zero error, and hence will continue to do so regardless of the learning rate.

Do you at least agree that in this scheme a predictor outputting a sine wave with period 300ms has zero error while in defer-to-predictor mode?

The predictor is a parametrized function output = f(context, parameters) (where "parameters" are also called "weights"). If (by assumption) context is static, then you're running the function on the same inputs over and over, so you have to keep getting the same answer. Unless there's an error changing the parameters / weights.

This is true for a standard function; this is not true once you include time. A neuron absolutely can spike every X milliseconds with a static input. And it is absolutely possible to construct a sine-wave oscillator via a function with a nonzero time delay connected to its own input.

But the learning rate on those parameters can be (and presumably would be) relatively low.

Unfortunately, as long as the % of time spent in override mode is low you need a high learning rate or else the predictor will learn incredibly slowly.

If the supervisor spends a second a week in override mode[1], then the predictor is actively learning ~0.0002% of the time.

There could also be a low-pass filter on the supervisor side.

Unfortunately, as long as each override event is relatively short a low-pass filter selectively removes all of your learning signal!

*****

For example, the time constant (for the exponential decay of a discrepancy between output and supervisor when in "override mode") could be many seconds.

You keep bouncing between a sufficiently-powerful-predictor and a simple exponential-weighted-average. Please pick one, to keep your arguments coherent. This statement of yours is only true for the latter, not the former. A powerful predictor can suddenly modeswitch in the presence of an error signal.

(For a simple example, consider a 300ms predictor trying to predict a system where the signal normally stays at 0, but if it ever goes non-zero, even by a very small amount, it will go to 1 after 100ms and stay at 1 for 10s before returning to 0. As long as the signal stays at 0, the predictor will predict it stays at zero[2]. The moment the error is nonzero, the predictor will immediately switch to predicting 1.)

- ^

And for something like a 'freeze in terror' predictor I absolutely could see a rate that low.

- ^

Or maybe predict spikes. Meh.

↑ comment by Steven Byrnes (steve2152) · 2022-02-28T04:22:11.308Z · LW(p) · GW(p)

Do you at least agree that in this scheme a predictor outputting a sine wave with period 300ms has zero error while in defer-to-predictor mode?

Yes

A neuron absolutely can spike every X milliseconds with a static input.

Hmm, I think we're mixing up two levels of abstraction here. At the implementation level, there are no real-valued signals, just spikes. But at the algorithm level, it's possible that the neuron operations are equivalent to some algorithm that is most simply described in a way that does not involve any spikes, and does involve lots of real-valued signals. For example, one can vaguely imagine setups where a single spike of an upstream neuron isn't sufficient to generate a spike on the downstream neuron, and you only get effects from a neuron sending a train of spikes whose effects are cumulative. In that case, the circuit would be basically incapable of "fast" dynamics (i.e. it would have implicit low-pass filters everywhere), and the algorithm is really best thought of as "doing operations" on average spike frequencies rather than on individual spikes.

You keep bouncing between a sufficiently-powerful-predictor and a simple exponential-weighted-average. Please pick one, to keep your arguments coherent.

Oh sorry if I was unclear. I was never talking about exponential weighted average. Let's say our trained model is f(context,θ) (where θ is the parameters a.k.a. weights). Then with static context, I was figuring we'd have a differential equation vaguely like:

I was figuring that (in the absence of oscillations) the solution to this differential equation might look like θ(t) asymptotically approaching a limit wherein the error is zero, and I was figuring that this asymptotic approach might look like an exponential with a timescale of a few seconds.

I'm not sure if it would be literally an exponential. But probably some kind of asymptotic approach to a steady-state. And I was saying (in a confusing way) that I was imagining that this asymptotic approach would take a few seconds to get most of the way to its limit.
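For concreteness, one equation of this general kind is gradient flow on the squared discrepancy. This is an illustrative assumption, not necessarily the exact equation meant above; the symbols s (the supervisor value held fixed in override mode), η (a learning rate), and δ (the discrepancy between output and supervisor) are introduced here.

```latex
\frac{d\theta}{dt}
  = -\eta \, \nabla_\theta \, \tfrac{1}{2}\bigl(f(\mathrm{context},\theta) - s\bigr)^2
  = -\eta \, \delta \, \nabla_\theta f,
\qquad \delta \equiv f(\mathrm{context},\theta) - s

\frac{d\delta}{dt}
  = \nabla_\theta f \cdot \frac{d\theta}{dt}
  = -\eta \, \lVert \nabla_\theta f \rVert^2 \, \delta
\quad\Longrightarrow\quad
\delta(t) \approx \delta(0)\, \exp(-t/\tau),
\qquad \tau = \frac{1}{\eta \, \lVert \nabla_\theta f \rVert^2}
```

The exponential form holds only to the extent that the gradient norm stays roughly constant during the approach; in that regime, a small enough η gives a time constant τ of several seconds.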

Unfortunately, as long as the % of time spent in override mode is low you need a high learning rate or else the predictor will learn incredibly slowly.

If we go to the Section 5.2.1.1 example of David on the ladder, the learning is happening while he has calmed down, but is still standing at the top of the ladder. I figure he probably stayed up for at least 5 or 10 seconds after calming down but before climbing down.

For example, we can imagine an alternate scenario where David was magically teleported off the ladder within a fraction of a second after the moment that he finally started feeling calm. In that scenario, I would be a lot less confident that the exposure therapy would actually stick.

By the same token, when you're feeling scared in some situation, you're probably going to continue feeling scared in that same situation for at least 5 or 10 seconds.

(And if not, there's always memory replay! The hippocampus can recall both the scared feeling and the associated context 10 more times over the next day and/or while you sleep. And that amounts to the same thing, I think.)

Sorry in advance if I'm misunderstanding your comment. I really appreciate you taking the time to think it through for yourself :)

Replies from: TLW↑ comment by TLW · 2022-03-01T04:38:33.050Z · LW(p) · GW(p)

Yes

Alright, so we at least agree with each other on this. Let me try to dig into this a little further...

Consider the following (very contrived) example, for a 300ms predictor trying to minimize L2[1] norm:

Context is static throughout the below.

t=0, overrider circuit forces output=1.

t=150ms, overrider circuit switches back to loopback mode.

t=450ms, overrider circuit forces output=0.

t=600ms, overrider circuit switches back to loopback mode.

t=900ms, overrider circuit forces output=1.

etc.

Do you agree that the best a slow-learning predictor that's a pure function can do is to output a static value 0.5, for an overall error rate of, what ? (The exact value doesn't matter.)

Do you agree that a "temporal-aware"[2] predictor that outputted a 300ms square wave as follows:

t=0, predictor switches output=1.

t=150ms, predictor switches output=0.

t=300ms, predictor switches output=1.

t=450ms, predictor switches output=0.

t=600ms, predictor switches output=1.

etc

...would have zero error rate[3]?
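The two candidate predictors can be compared numerically. The Python sketch below is a hypothetical rendering of the schedule above, with some assumptions: time is discretized into 1ms steps, the override schedule repeats every 900ms, the error at time t compares the predictor's output at t with the circuit's output 300ms later, and all function names are made up.

```python
def override(t_ms):
    """Forced output while in override mode, or None while in loopback mode."""
    phase = t_ms % 900
    if phase < 150:
        return 1.0
    if 450 <= phase < 600:
        return 0.0
    return None


def circuit_output(predictor, t_ms):
    forced = override(t_ms)
    return predictor(t_ms) if forced is None else forced


def mean_squared_error(predictor, horizon_ms=300, total_ms=9000):
    # Compare each prediction with what the circuit actually outputs 300ms later.
    errors = [
        (predictor(t) - circuit_output(predictor, t + horizon_ms)) ** 2
        for t in range(total_ms)
    ]
    return sum(errors) / len(errors)


def constant_predictor(t_ms):
    return 0.5


def square_wave_predictor(t_ms):
    return 1.0 if t_ms % 300 < 150 else 0.0


mse_constant = mean_squared_error(constant_predictor)
mse_square = mean_squared_error(square_wave_predictor)
```

With these assumptions, the constant predictor is wrong by 0.5 during the third of the time spent in override mode, giving a mean squared error of 0.25 / 3, while the square wave matches both the forced values and its own looped-back output.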

I was figuring that (in the absence of oscillations) the solution to this differential equation might look like θ(t) asymptotically approaching a limit wherein the error is zero, and I was figuring that this asymptotic approach might look like an exponential with a timescale of a few seconds.

I can see why you'd say this. It's even true if you're just looking at e.g. a well-tuned PID controller. But even for a PID controller there are regimes where this behavior breaks down and you get oscillation[4]... and worse, the regimes where this breaks down are regimes that you're otherwise actively tuning said controller for!

For example, one can vaguely imagine setups where a single spike of an upstream neuron isn't sufficient to generate a spike on the downstream neuron, and you only get effects from a neuron sending a train of spikes whose effects are cumulative. In that case, the circuit would be basically incapable of "fast" dynamics (i.e. it would have implicit low-pass filters everywhere), and the algorithm is really best thought of as "doing operations" on average spike frequencies rather than on individual spikes.

I think here is the major place we disagree. As you say, this model of these circuits is basically incapable of fast dynamics, and you keep leaning towards setups that forbid fast dynamics in general. But for something like a startle signal, you absolutely want it to be able to handle a step change in the context as a step change in the output[5].

I don't know of a general-purpose method of predicting fast dynamics[6] that doesn't have mode-switching regions where seemingly-small learning rates can suddenly change the output.

- ^

Almost anything would work here, really. L1 is just annoying due to the lack of a unique solution.

- ^

I am making up this term on the spot. I haven't formalized it; I suspect one way to formalize it would be to include time % 300ms as an input like the rest of the context.

- ^

Please ignore clock skew for now.

- ^

"Normally" the feedback path is through the input->output path, not the PID parameters... but you can get oscillations in the PID-parameter path too

- ^

...and a 'step change' inherently has high frequency components.

- ^

Perhaps a better term might be 'high-bandwidth' dynamics. Predicting a 10MHz sine wave is easy. Predicting <=10kHz noise, less so.

↑ comment by Steven Byrnes (steve2152) · 2022-03-01T22:24:33.606Z · LW(p) · GW(p)

for a 300ms predictor trying to minimize L2 norm:

Just to make sure we're on the same page, I made up the “300ms” number, it could be something else.

Also to make sure we're on the same page, I claim that from a design perspective, fast oscillation instabilities are bad, and from an introspective perspective, fast oscillation instabilities don't happen. (I don't have goosebumps, then 150ms later I don't have goosebumps, then 150ms later I do have goosebumps, etc.)

...would have zero error rate?

Sure. But to make sure we're on the same page, the predictor is trying to minimize L2 norm (or whatever), but that's just one component of a system, and successfully minimizing the L2 norm might or might not correspond to the larger system performing well at its task. So “zero error rate” doesn't necessarily mean “good design”.

even for a PID controller there are regimes where this behavior breaks down and you get oscillation

Sorry, I'm confused. There's an I and a D? I only see a P.

As you say, this model of these circuits is basically incapable of fast dynamics, and you keep leaning towards setups that forbid fast dynamics in general. But for something like a startle signal, you absolutely want it to be able to handle a step change in the context as a step change in the output.

It seems to me that you can start a startle reaction quickly (small fraction of a second), but you can't stop a startle quickly. Hmm, maybe the fastest thing the amygdala does is to blink (mostly <300ms), but if you're getting 3 blink-inducing stimuli a second, your brainstem is not going to keep blinking 3 times a second; instead it will just pinch the eyes shut and turn away, or something. (Source: life experience.) (Also, I can always pull out the “Did I say 300ms prediction? I meant 100ms” card…)

If the supervisor is really tracking the physiological response (sympathetic nervous system response, blink reaction, whatever), and the physiological response can't oscillate quickly (even if its rise-time by itself is fast), then likewise the supervisor can't oscillate quickly, right? Think of it like: once I start a startle-reaction, then it flips into override mode for a second, because I'm still startle-reacting until the reaction finishes playing out.

forbid fast dynamics in general

Hmm, I think I want to forbid fast updates of the adjustable parameters / weights (synapse strength or whatever), and I also want to stay very very far away from any situation where there might be fast oscillations that originate in instability rather than already being present in exogenous data. I'm open to a fast dynamic where “context suddenly changes, and then immediately afterwards the output suddenly changes”. If I said something to the contrary earlier, then I have changed my mind! :-)

And I continue to believe that these things are all compatible: you can get the “context suddenly changes → output suddenly changes” behavior, without going right to the edge of unstable oscillations, and also without fast (sub-second) parameter / weight / synapse-strength changes.

Replies from: TLW↑ comment by TLW · 2022-03-02T02:32:46.686Z · LW(p) · GW(p)

Just to make sure we're on the same page, I made up the “300ms” number, it could be something else.

Sure; the further you get away from ~300ms the less the number makes sense for e.g. predicting neuron latency, as described earlier.

Also to make sure we're on the same page, I claim that from a design perspective, fast oscillation instabilities are bad, and from an introspective perspective, fast oscillation instabilities don't happen. (I don't have goosebumps, then 150ms later I don't have goosebumps, then 150ms later I do have goosebumps, etc.)

I absolutely agree that most of the time oscillations don't happen. That being said, oscillations absolutely do happen in at least one case - epilepsy. I remain puzzled that evolution "allows" epilepsy to happen, and epilepsy being a breakdown that does allow ~300ms oscillations to happen, akin to feedback in audio amplifiers, is a better explanation for this than I've heard elsewhere.

Sorry, I'm confused. There's an I and a D? I only see a P.

A generic overdamped PID controller will react to a step-change in its input via (vaguely)-exponential decay towards the new value[1].

Even for a non-overdamped PID controller the magnitude of the tail decreases exponentially with time. (So long as said PID controller is stable at least.)

You are correct that all that is necessary for a PID controller to react in this fashion is a nonzero P term.

It seems to me that you can start a startle reaction quickly (small fraction of a second), but you can't stop a startle quickly.

Absolutely; a step change followed by a decay still has high-frequency components. (This is the same thing people forget when they route 'slow' clocks with fast drivers and then wonder why they are getting crosstalk on other signals and high-frequency interference in general.)

Your slow-responding predictor is going to have a terrible effective reaction time, is what I'm trying to say here, because you're filtering out the high-frequency components of the prediction error, and so the rising edge of your prediction error gets filtered from a step change to something closer to a sigmoid that takes quite a while to get to full amplitude.... which in turn means that what the predictor learns is not a step-change followed by a decay. It learns the output of a low-pass filter on said step-change followed by a decay, a.k.a. a slow rise and decay.
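The effect of low-pass filtering on a rising edge can be seen in a minimal Python sketch. It assumes a first-order exponential filter and a plain unit step (without the subsequent decay); the smoothing factor `alpha=0.1` and the sample counts are arbitrary illustrative choices.

```python
def low_pass(signal, alpha):
    """First-order (exponential) low-pass filter, with alpha in (0, 1]."""
    filtered, y = [], 0.0
    for x in signal:
        y += alpha * (x - y)  # move a fraction alpha of the way toward the input
        filtered.append(y)
    return filtered


STEP_INDEX = 5
step = [0.0] * STEP_INDEX + [1.0] * 50  # unit step at sample 5
filtered = low_pass(step, alpha=0.1)

# Number of samples after the step before the filtered signal exceeds half amplitude.
first_above_half = next(i for i, y in enumerate(filtered) if y > 0.5)
samples_to_half = first_above_half - STEP_INDEX + 1
```

The input jumps to full amplitude in a single sample, whereas the filtered signal needs several samples to cross even half amplitude; a smaller `alpha` stretches that rise further.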

I also want to stay very very far away from any situation where there might be fast oscillations that originate in instability rather than already being present in exogenous data.

Right. Which brings me back to my puzzle: why does epilepsy continue to exist?

(Do you at least agree that, were there some mechanism where there was enough feedback/crosstalk such that you did get oscillations, it might look something like epilepsy?)

And I continue to believe that these things are all compatible

Can you please give an example of a general-purpose function estimator, that when plugged into this pseudo-TD system, both:

1. Can learn "most[2]" functions

2. Has a low-and-bounded learning rate regardless of current parameters, such that (after a single update, that is).

I know of schemes that achieve 1, and schemes that achieve 2. I don't know of any schemes that achieve both offhand[3].

*****

Thank you again for going back and forth with me on this by the way. I appreciate it.

- ^

...or some offset from the new value, in some cases.

- ^

I'm not going to worry too much if e.g. there's a single unstable pathological case.

- ^

LReLU violates 2. LReLU with regularization violates 1. Etc.

↑ comment by Steven Byrnes (steve2152) · 2022-03-04T14:48:56.727Z · LW(p) · GW(p)

Sure; the further you get away from ~300ms the less the number makes sense for e.g. predicting neuron latency, as described earlier.

I must have missed that part; can you point more specifically to what you're referring to?

why does epilepsy continue to exist?

I think practically anywhere in the brain, if A connects to B, then it's a safe bet that B connects to A. (Certainly for regions, and maybe even for individual neurons.) Therefore we have the setup for epileptic seizures, if excitation and inhibition are not properly balanced.

Or more generically, if X% of neurons in the brain are active at time t, then we want around X% of neurons in the brain to be active at time t+1. That means that we want each upstream neuron firing event to (on average) cause exactly one net downstream neuron to fire. But individual neurons have their own inputs and outputs; by default, there seems to be a natural failure mode where the upstream neurons excite not-exactly-one downstream neuron, and we get exponential growth (or decay).

My impression is that there are lots of mechanisms to balance excitation and inhibition, probably different mechanisms in different parts of the brain, and any of those mechanisms can fail. I'm not an epilepsy expert by any means (!!), but at a glance it does seem like epilepsy has a lot of root causes and can originate in lots of different brain areas, including areas that I don't think are doing this kind of prediction, e.g. temporal lobe and dorsolateral prefrontal cortex and hippocampus.

the rising edge of your prediction error gets filtered from a step change to something closer to a sigmoid that takes quite a while to get to full amplitude.... which in turn means that what the predictor learns is not a step-change followed by a decay. It learns the output of a low-pass filter on said step-change followed by a decay, a.k.a. a slow rise and decay.

I still think you're incorrectly mixing up the time-course of learning (changes to parameters / weights / synapse strengths) with the time-course of an output following a sudden change in input. I think they're unrelated.

To clarify our intuitions here, I propose to go to the slow-learning limit.

However fast you've been imagining the parameters / weights / synapse strength changing in any given circumstance, multiply that learning rate by 0.001. And simultaneously imagine that the person experiences everything in their life with 1000× more repetitions. For example, instead of getting whacked by a golf ball once, they get whacked by a golf ball 1000× (on 1000 different days).

(Assume that the algorithm is exactly the same in every other respect.)

I claim that, after this transformation (much lower learning rate, but proportionally more repetitions), the learning algorithm will build the exact same trained model, and the person will flinch the same way under the same circumstances.

(OK, I can imagine it being not literally exactly the same, thanks to the details of the loss landscape and gradient descent etc., but similar.)

Your perspective, if I understand it, would be that this transformation would make the person flinch more slowly—so slowly that they would get hit by the ball before even starting to flinch.

If so, I don't think that's right.

Every time the person gets whacked, there's a little interval of time, let's say 50ms, wherein the context shows a golf ball flying towards the person's face, and where the supervisor will shortly declare that the person should have been flinching. That little 50ms interval of time will contribute to updating the synapse strengths. In the slow-learning limit, the update will be proportionally smaller, but OTOH we'll get that many more repetitions in which the same update will happen. It should cancel out, and it will eventually converge to a good prediction, F(ball-flying-towards-my-face) = I-should-flinch.

And after training, even if we lower the learning rate all the way down to zero, we can still get fast flinching at appropriate times. It would only be a problem if the person changes hobbies from golf to swimming—they wouldn't learn the new set of flinch cues.
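
To make the slow-learning-limit argument concrete, here is a minimal sketch, assuming a single-weight predictor trained with a plain delta rule. The function names, the learning rates, and the repetition counts are all illustrative assumptions, not anything specified in this thread. It trains once with a larger learning rate and few repetitions, once with a 1000× smaller learning rate and 1000× more repetitions, and then queries the trained weight with learning switched off.

```python
def train(lr, repetitions, target=1.0, x=1.0):
    """Apply delta-rule updates to one weight and return the learned weight.

    x stands in for the cue (ball flying toward the face) and target for the
    supervisor's verdict (should have flinched). Both are assumed values.
    """
    w = 0.0
    for _ in range(repetitions):
        prediction = w * x
        w += lr * (target - prediction) * x
    return w


def flinch_signal(w, x):
    """Output of the trained circuit; no learning happens in this call."""
    return w * x


# Hypothetical settings: same product of learning rate and repetitions.
fast = train(lr=0.1, repetitions=10)
slow = train(lr=0.1 * 0.001, repetitions=10 * 1000)

print(f"weight, high learning rate, few repetitions:  {fast:.3f}")
print(f"weight, low learning rate, many repetitions:  {slow:.3f}")
print(f"frozen-weight response when the cue appears:  {flinch_signal(slow, 1.0):.3f}")
print(f"frozen-weight response with no cue:           {flinch_signal(slow, 0.0):.3f}")
```

In this toy setting the two weights come out close but not identical, and the response to the cue is an instantaneous function of the input whatever learning rate produced the weight. It says nothing about few-shot learning, which is a separate question.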

Sorry if I'm misunderstanding where you're coming from.

Can you please give an example of a general-purpose function estimator, that when plugged into this pseudo-TD system, both:

If you take any solution to 1, and multiply the learning rate by 0.000001, then it would satisfy 2 as well, right?

↑ comment by TLW · 2022-03-06T05:24:44.287Z · LW(p) · GW(p)

I must have missed that part; can you point more specifically to what you're referring to?

It feels wrong to refer you back to your own writing, but much of part 4 was dedicated to talking about these short-term predictors being used to combat neural latency and to do... well, short-term predictions. A flinch detector that goes off 100ms in advance is far less useful than a flinch detector that goes off 300ms in advance, but at the same time a short-term predictor that predicts too far in advance leads to feedback when used as a latency counter (as I asked about/noted in the previous post).

(It's entirely possible that different predictors have different prediction timescales... but then you've just replaced the problem with a meta-problem. Namely: how do predictors choose the timescale?)

To clarify our intuitions here, I propose to go to the slow-learning limit.

However fast you've been imagining the parameters / weights / synapse strength changing in any given circumstance, multiply that learning rate by 0.001. And simultaneously imagine that the person experiences everything in their life with 1000× more repetitions. For example, instead of getting whacked by a golf ball once, they get whacked by a golf ball 1000× (on 1000 different days).

1x the training data with 1x the training rate is not equivalent to 1000x the training data with 1/1000th of the training rate. Nowhere near. The former is a much harder problem, generally speaking.

(And in a system as complex and chaotic as a human there is no such thing as repeating the same datapoint multiple times... related data points yes. Not the same data point.)

(That being said, 1x the training data with 1x the training rate is still harder than 1x the training data with 1/1000th the training rate, repeated 1000x.)

Your perspective, if I understand it, would be that this transformation would make the person flinch more slowly—so slowly that they would get hit by the ball before even starting to flinch.

You appear to be conflating two things here. It's worth calling them out as separate.

Putting a low-pass filter on the learning feedback signal absolutely does cause something to learn a low-passed version of the output. Your statement "In that case, the circuit would be basically incapable of "fast" dynamics (i.e. it would have implicit low-pass filters everywhere)," doesn't really work, precisely because it leads to absurd conclusions. This is what I was calling out.

A low learning rate is something different. (That has other problems...)
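
A minimal sketch of the distinction being drawn here, assuming a toy predictor that stores one learned output per time step and a first-order exponential filter standing in for "a low-pass filter on the learning feedback signal". All names and parameter values are hypothetical.

```python
def low_pass(signal, alpha):
    """First-order exponential smoothing; smaller alpha means heavier filtering."""
    out, y = [], 0.0
    for s in signal:
        y += alpha * (s - y)
        out.append(y)
    return out


def train_table(supervisor, lr, repetitions):
    """Learn one output per time step by nudging it toward the supervisor."""
    table = [0.0] * len(supervisor)
    for _ in range(repetitions):
        for t, target in enumerate(supervisor):
            table[t] += lr * (target - table[t])
    return table


# Assumed ground truth: a sharp step at t = 5.
step = [0.0] * 5 + [1.0] * 15

# Case A: the supervisor itself is low-pass filtered.
learned_from_filtered = train_table(low_pass(step, alpha=0.2), lr=0.5, repetitions=50)

# Case B: unfiltered supervisor, tiny learning rate, many repetitions.
learned_with_low_lr = train_table(step, lr=0.005, repetitions=5000)

print("output at the edge, filtered supervisor:", round(learned_from_filtered[5], 3))
print("output at the edge, low learning rate:  ", round(learned_with_low_lr[5], 3))
```

In this sketch, case A learns a slow rise because that is what it was taught, while case B still learns the sharp edge and simply needs more repetitions to get there.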

If you take any solution to 1, and multiply the learning rate by 0.000001, then it would satisfy 2 as well, right?

My apologies, and you are correct as stated; I should have added something on few-shot learning. Something like a flinch detector likely does not fire 1,000,000x in a human lifetime[1], which means that your slow-learning solution hasn't learnt anything significant by the time the human dies, and isn't really a solution.

I am aware that the 1m figure is likely just you hitting '0' a bunch of times, but humans are great few-shot (and even one-shot) learners. You can't just drop the training rate, or else your examples like 'just stand on the ladder for a few minutes and your predictor will make a major update' don't work.

[1] My flinch reflex works fine and I'd put a trivial upper-bound of 10k total flinches (probably even 1k is too high). (I lead a relatively quiet life.)
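
The arithmetic behind this objection can be sketched as follows, assuming a delta-rule learner whose remaining error shrinks by a factor of (1 - learning rate) on every training event. The baseline learning rate of 0.1 is a made-up number for illustration; the 10k event count and the 0.000001 scaling factor are the figures quoted in this thread.

```python
def fraction_learned(lr, events):
    """Fraction of the gap to the target closed after `events` delta-rule updates."""
    return 1.0 - (1.0 - lr) ** events


baseline_lr = 0.1          # hypothetical baseline learning rate
scaled_lr = baseline_lr * 0.000001
lifetime_flinches = 10_000  # upper bound suggested in the comment above

print("baseline rate, 10 events:       ", round(fraction_learned(baseline_lr, 10), 4))
print("scaled rate, 10,000 events:     ", round(fraction_learned(scaled_lr, lifetime_flinches), 6))
```

Under these assumptions the scaled-down learner closes only a tiny fraction of the gap within a lifetime's worth of events, which is the sense in which it "hasn't learnt anything significant".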

↑ comment by Steven Byrnes (steve2152) · 2022-03-07T20:51:46.368Z · LW(p) · GW(p)

Oh, hmm. In my head, the short-term predictors in the cerebellum are for latency-reduction and discussed in the last post, and meanwhile the short-term predictors in the telencephalon (amygdala & mPFC) are for flinching and discussed here. I think the cerebellum short-term predictors and the telencephalon short-term predictors are built differently for different purposes, and once we zoom in beyond the idea of “short-term prediction” and start talking about parameter settings etc., I really don't lump them together in my mind, they're apples and oranges. In the conversation thus far, I thought you were talking about the telencephalon (amygdala & mPFC) ones. If we're talking about instability from the cerebellum instead, we can continue the Post #4 thread [LW(p) · GW(p)].

~

I think I said some things about low-pass filters up-thread and then retracted them later on, and maybe you missed that. At least for some of the amygdala things like flinching, I agree with you that low-pass filters seem unlikely to be part of the circuit (well, depending on where the frequency cutoff is, I suppose). Sorry, my bad.

~

A common trope is that the hippocampus does one-shot learning in a way that vaguely resembles a lookup table with auto-associative recall, whereas other parts of the cortex learn more generalizable patterns more slowly, including via memory recall (i.e., gradual transfer of information from hippocampus to cortex). I'm not immediately sure whether the amygdala does one-shot learning. I do recall a claim that part of PFC can do one-shot learning, but I forget which part; it might have been a different part than we're talking about. (And I'm not sure if the claim is true anyway.) Also, as I said before, with continuous-time systems, “one shot learning” is hard to pin down; if David Burns spends 3 seconds on the ladder feeling relaxed, before climbing down, that's kinda one-shot in an intuitive sense, but it still allows the timescale of synapse changes to be much slower than the timescale of the circuit. Another consideration is that (I think) a synapse can get flagged quickly as “To do: make this synapse stronger / weaker / active / inactive / whatever”, and then it takes 20 minutes or whatever for the new proteins to actually be synthesized etc. so that the change really happens. So that's “one-shot learning” in a sense, but doesn't necessarily have the same short-term instabilities, I'd think.

↑ comment by TLW · 2022-02-26T21:13:40.461Z · LW(p) · GW(p)

To add to this a little: I think it likely that the gain would be dynamically tuned by some feedback system or another[1]. In order to tune said gain however you need a non-constant signal to be able to measure the gain to adjust it.

...Hm. That sounds a lot like delta waves during sleep. Switch to open-loop operation, disable learning[2], suppress output, input a transient, measure the response, and adjust gain accordingly. (Which would explain higher seizure risk with a lack of sleep...)

comment by Rafael Harth (sil-ver) · 2022-03-23T15:34:41.759Z · LW(p) · GW(p)

I don't completely get this.

Let's call the short term predictor (in the long term predictor circuit) P. If P tries to predict [what P predicts in 0.3s], then the correct prediction would be to immediately predict the output at whatever point in the future the process terminates (the next ground truth injection?). In particular, P would always predict the same until the ground truth comes in. But if I understand correctly, this is not what's going on.

So second try: P is really still only trying to predict 0.3s into the future, making it less of a "long term predictor" and more of an "ongoing process predictor"? And then you get, e.g., the behavior of predicting a little less enzyme production with every step?

Or third try, P is just trying to minimize something like the sum of squared differences between adjacent predictions, and is thus trying to minimize the number of ground-truth injections, and we get the above as an emergent effect?

↑ comment by Steven Byrnes (steve2152) · 2022-03-23T18:36:44.929Z · LW(p) · GW(p)

I’m advocating for the first one—P is trying to predict the next ground-truth injection. Does something trouble you about that?

↑ comment by Rafael Harth (sil-ver) · 2022-03-23T19:23:40.631Z · LW(p) · GW(p)

No; it was just that something about how the post explained it made me think that it wasn't #1.
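
For readers who want the first interpretation spelled out, here is a minimal sketch under simplifying assumptions: time is discretized, the context is just a step index, and the short-term predictor keeps one output per step. On every step its supervisory signal is its own output one step later, except on the final step, where a ground-truth value is injected instead. The function name, step count, learning rate, and episode count are all hypothetical.

```python
def train_long_term_predictor(n_steps, ground_truth, lr=0.1, episodes=2000):
    """Train per-step outputs where each step is supervised by the next step's
    output, and only the last step is supervised by the injected ground truth."""
    outputs = [0.0] * n_steps
    for _ in range(episodes):
        for t in range(n_steps):
            if t == n_steps - 1:
                target = ground_truth      # ground-truth injection
            else:
                target = outputs[t + 1]    # "what I will predict a moment later"
            outputs[t] += lr * (target - outputs[t])
    return outputs


outputs = train_long_term_predictor(n_steps=10, ground_truth=1.0)
print([round(v, 3) for v in outputs])
```

In this toy setup every step ends up predicting the eventual ground-truth value, matching the description "always predict the same until the ground truth comes in". It is the same bootstrapping structure as TD learning with no discounting and no intermediate rewards.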

comment by Lorxus · 2024-06-11T00:48:28.729Z · LW(p) · GW(p)

Thus, pretty much any instance where an experimenter has measured that a dopamine neuron is correlated with some behavioral variable, it’s probably consistent with my picture too.

I don't think this is nearly as good a sign as you seem to think. Maybe I haven't read closely enough, but surely we shouldn't be excited by the fact that your model doesn't constrain its expectation of dopaminergic neuronal firing any more or any differently than existing observations have? Like, I'd expect to have plausible-seeming neuronal firing that your model predicts not to happen, or something deeply weird about the couple of exceptional cases of dopaminergic neuronal firing that your model doesn't predict, or maybe some weird second-order effect where yes actually it looks like my model predicts this perfectly but actually it's the previous two distributional-overlap-failures "cancelling out", but "my model can totally account for all the instances of dopaminergic neuronal firing we've observed" makes me worried.

↑ comment by Steven Byrnes (steve2152) · 2024-06-11T14:19:36.101Z · LW(p) · GW(p)

I mean, that particular discussion has some issues—see for example “UPDATE JAN 2023” (and I’ve learned more since then too).

But there is an actual old oversimplified hypothesis from the 1990s where there’s an RL system and a reward signal and dopamine is the TD learning signal, and that hypothesis would seem to imply that there should be one dopamine signal doing one clear thing, as opposed to what’s actually observed, which is a giant mess of dozens of signals that correlate with all kinds of stuff.

Yes it’s easy to come up with hypotheses where there are dozens of signals that correlate with all kinds of stuff, and indeed there are many many such hypotheses in the literature, and nobody serious actually believes that old theory from the 1990s anymore. But still, I thought it was worth mentioning how I would have explained the fact that there’s dozens of signals that correlate with all kinds of stuff, and indeed that subsection wound up leading to some fruitful discussions and feedback in the months after I published it.

I’ve read a ton of experimental reports and theoretical models of the basal ganglia and dopamine neurons, and have lots of idiosyncratic opinions, very few of which are spelled out in this old post. (Or anywhere else. If I have something important to say about AGI safety, and I could only say it by explaining something about my opinions on the basal ganglia & dopamine as background information, then I would probably do so. But in the absence of that, writing down all my opinions about the basal ganglia & dopamine would seem to me to be possibly-helpful for AGI capabilities but unhelpful for AGI safety.)

comment by MSRayne · 2022-06-16T15:26:01.186Z · LW(p) · GW(p)

I'm not sure if this is the right post in the sequence to ask this question on but: how does your model explain the differences in effects of different reinforcement schedules? Perhaps there's some explanation of them already in the literature, but I've always wondered why, for instance, variable ratio scheduling is so much more motivating than fixed ratio scheduling.

↑ comment by Steven Byrnes (steve2152) · 2022-06-16T17:21:23.824Z · LW(p) · GW(p)

I haven’t read the literature on that, but it’s always fun to speculate off the top of my head. Here goes :)

You’re deciding whether or not to pull the lever.

In a 5%-win slot machine (variable-rate schedule), if you pull the lever, there’s a probability distribution for what will happen next, and that distribution has 5% weight on “immediate reward”. Maybe that’s sufficiently motivating to press the lever. (See Section 5.5.6.1 above.)

In a win-every-20-presses machine (fixed-rate schedule), there are 20 different scenarios (depending on how close you are to the next reward). Probably the least motivating of those 20 scenarios is the one where you just won and you’re 20 lever-presses away from the next win. Now, the probability distribution for what happens after the next press has 0% weight on “immediate reward”. Instead, you might concoct the plan “I will press the lever 20 times and then I’ll 100% get a reward”. But that plan might not be sufficiently motivating, because it gets penalized by the boring exertion required, and the reward doesn’t count for as much because it’s distant in time.

So then I would say: a priori, it’s not obvious which one would be more motivating, but there’s no reason to expect them to be equally motivating. The winner depends on several innately-determined parameters, like how steep the hyperbolic time-discounting is and exactly how the brain collapses the reward-prediction probability distribution into a decision. And I guess that, throughout the animal kingdom, these parameters are such that the 5%-win slot machine is more motivating. ¯\_(ツ)_/¯
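
The speculation above can be turned into a toy calculation. This is only a sketch under loud assumptions: delay is measured in lever presses, effort is a fixed cost per press, the variable-ratio option is scored on the immediate pull alone, and the fixed-ratio option is scored as the plan "press 20 times, then collect" evaluated right after a win. The reward size, effort cost, and discount steepness `k` are made-up numbers.

```python
def hyperbolic_discount(delay, k):
    """Standard hyperbolic discount factor 1 / (1 + k * delay)."""
    return 1.0 / (1.0 + k * delay)


def variable_ratio_value(win_prob, reward, effort):
    """Value of one pull when the very next pull may pay out."""
    return win_prob * reward - effort


def fixed_ratio_value(presses_needed, reward, effort, k):
    """Value of the plan 'press N times, then collect', judged just after a win."""
    discounted_reward = reward * hyperbolic_discount(presses_needed, k)
    return discounted_reward - presses_needed * effort


# Hypothetical parameter set 1: steep discounting, noticeable effort cost.
vr_steep = variable_ratio_value(win_prob=0.05, reward=1.0, effort=0.01)
fr_steep = fixed_ratio_value(presses_needed=20, reward=1.0, effort=0.01, k=1.0)

# Hypothetical parameter set 2: shallow discounting, negligible effort cost.
vr_shallow = variable_ratio_value(win_prob=0.05, reward=1.0, effort=0.001)
fr_shallow = fixed_ratio_value(presses_needed=20, reward=1.0, effort=0.001, k=0.1)

print("steep discounting:   variable", round(vr_steep, 3), "fixed", round(fr_steep, 3))
print("shallow discounting: variable", round(vr_shallow, 3), "fixed", round(fr_shallow, 3))
```

With the first parameter set the slot machine scores higher, and with the second the win-every-20-presses machine does, which illustrates the point that the winner depends on the parameters rather than being obvious a priori.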

↑ comment by MSRayne · 2022-06-16T19:12:59.821Z · LW(p) · GW(p)

My vague, uneducated intuition on the matter is that it has something to do with surprise. More specifically, that a pleasant event that is unexpected is intrinsically higher valence / more rewarding, for some reason, than a pleasant event that is expected. I don't know why this would be the case or how it works in the brain but it fits with my life experience pretty well and likely yours too. (In the same way, an unexpected bad event feels far worse than an expected bad event in most cases.)