[Intro to brain-like-AGI safety] 11. Safety ≠ alignment (but they’re close!)

post by Steven Byrnes (steve2152) · 2022-04-06T13:39:42.104Z · LW · GW · 1 comment

Contents

- 11.1 Post summary / Table of contents
- 11.2 Alignment without safety?
- 11.3 Safety without alignment?
- 11.3.1 AI Boxing
- 11.3.2 Data curation
- 11.3.3 Impact limits
- 11.3.4 Non-agentic (or “tool”) AI
- 11.4 Conclusion
- Changelog

(Last revised: July 2024. See changelog at the bottom.)

(If you’re already an AGI safety expert, you can probably skip this short post—I don’t think anything here is new, or too specific to brain-like AGIs.)

11.1 Post summary / Table of contents

Part of the “Intro to brain-like-AGI safety” post series [? · GW].

In the previous post [LW · GW], I talked about “the alignment problem” for brain-like AGIs. Two points are worth emphasizing: (1) the alignment problem for brain-like AGIs is currently unsolved (just like the alignment problem for any other type of AGI), and (2) solving it would be a giant leap towards AGI safety.

That said, “solving AGI alignment” is not exactly the same as “solving AGI safety”. This post is about how the two may come apart, at least in principle.

As a reminder, here’s the terminology:

- “AGI alignment” (previous post [LW · GW]) means that an AGI is trying to do things that the AGI designer had intended for it to be trying to do.[1] This notion only makes sense for algorithms that are “trying” to do something in the first place. What does “trying” mean in general? Hoo boy, that’s a whole can of worms [? · GW]. Is a sorting algorithm “trying” to sort numbers? Or is it merely sorting them?? I don’t want to go there. For this series, it’s easy. The “brain-like AGIs” that I’m talking about can definitely “try” to do things, in exactly the same common-sense way that a human can “try” to get out of debt.

- “AGI safety” (Post #1 [LW · GW]) is about what the AGI actually does, not what it’s trying to do. AGI safety means that the AGI’s actual behavior does not lead to a “catastrophic accident”, as judged by the AGI’s own designers.[2]

Thus, these are two different things. And my goal in this post is to describe how they may come apart:

- Section 11.2 is “alignment without safety”. A possible story would be: “I wanted my AGI to mop the floor, and my AGI did in fact try to mop my floor, but, well, it’s a bit clumsy, and it seems to have accidentally vaporized the entire universe into pure nothingness.”

- Section 11.3 is “safety without alignment”. A possible story would be: “I don’t really know what my AGI is trying to do, but it is constrained, such that it can’t do anything catastrophically dangerous even if it wanted to.” I’ll go through four special cases of safety-without-alignment: “boxing”, “data curation”, “impact limits”, and “non-agentic AI”.

To skip to the final answer, my takeaway is that, although it is not technically correct to say “AGI alignment is necessary and sufficient for AGI safety”, it’s damn close to correct, at least in the brain-like AGIs we’re talking about in this series.

11.2 Alignment without safety?

This is the case where an AGI is aligned (i.e., trying to do things that its designers had intended for it to try to do), but still causes catastrophic accidents. How?

One example: maybe, as designers, we didn’t think carefully about what we had intended for the AGI to do. John Wentworth gives a hypothetical example here [LW · GW]: humans ask the AGI for a nuclear fusion power plant design, but they neglect to ask the follow-up question of whether the same design makes it much easier to make nuclear weapons.

Another example: maybe the AGI is trying to do what we had intended for it to try to do, but it screws up. For example, maybe we ask the AGI to build a new better successor AGI, that is still well-behaved and aligned. But the AGI messes up. It makes a successor AGI with the wrong motivations, and the successor gets out of control and kills everyone.

I don’t have much to say in general about alignment-without-safety. But I guess I’m modestly optimistic that, if we solve the alignment problem, then we can muddle our way through to safety. After all, if we solve the alignment problem, then we’ll be able to build AGIs that are sincerely trying to help us, and the first thing we can use them for is to ask them for help clarifying exactly what they should be doing and how, thus hopefully avoiding failure modes like those above.[3]

(As usual, when I say “we can muddle our way through to safety”, I’m talking about the narrow thing where it is technically possible for a careful programmer to make a safe AGI—see Post #1, Section 1.2 [LW · GW]. Out-of-scope for this series is the societal safety problem, namely that misaligned AGIs are likely to exist even if alignment is technically possible, thanks to careless actors, competition, etc., and these misaligned AGIs may be extremely dangerous—see my discussion at What does it take to defend the world against out-of-control AGIs? [LW · GW].)

That said, I could be wrong, and I’m certainly happy for people to keep thinking hard about the non-alignment aspects of safety.

11.3 Safety without alignment?

Conversely, there are various ideas of how to make an AGI safe without needing to make it aligned. They all seem hard or impossible to me. But hey, perfect alignment seems hard or impossible too. I’m in favor of keeping an open mind, and using multiple layers of protection. I’ll go through some possibilities here (this is not a comprehensive list):

11.3.1 AI Boxing

The idea here is to put an AGI in a box, with no internet access, no actuators, etc. We can unplug the AGI whenever we want. Even if the AGI has dangerous motivations, who cares? What harm could it possibly do? Oh, umm, it could send out radio signals with RAM. So we also need a Faraday cage. Hopefully there’s nothing else we forgot!

Actually, I am quite optimistic that people could make a leakproof AGI box if they really tried. I love bringing up Appendix C of Cohen, Vellambi, Hutter (2020), which has an awesome box design, complete with air-tight seals and Faraday cages and laser interlocks and so on. Someone should totally build that. When we’re not using it for AGI experiments, we can loan it to movie studios as a prison for supervillains.

A different way to make a leakproof AGI box is using homomorphic encryption [LW · GW]. This has the advantage of being provably leakproof (I think), but the disadvantage of dramatically increasing the amount of compute required to run the AGI algorithm.
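To make the “compute on data you cannot read” idea concrete, here is a minimal sketch using a toy version of the Paillier cryptosystem. Big caveats: Paillier is only additively homomorphic, which is far weaker than the fully homomorphic encryption that running an entire AGI algorithm would require; the primes are tiny hard-coded values chosen for readability and offer no security; and all function names are hypothetical, chosen for this illustration.

```python
import math
import secrets


def keygen(p: int = 1789, q: int = 1861):
    """Build a toy Paillier key pair from two small primes (illustration only, NOT secure)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    # With the generator fixed to g = n + 1, the decryption constant is lam^-1 mod n.
    mu = pow(lam, -1, n)
    return n, (lam, mu)


def encrypt(n: int, m: int) -> int:
    """Encrypt an integer 0 <= m < n under the public key n."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # random blinding factor in [1, n - 1]
        if math.gcd(r, n) == 1:
            break
    # (n + 1)^m is congruent to 1 + m*n modulo n^2.
    return ((1 + m * n) * pow(r, n, n2)) % n2


def decrypt(n: int, private_key, c: int) -> int:
    """Recover the plaintext using the private key (lam, mu)."""
    lam, mu = private_key
    x = pow(c, lam, n * n)
    return ((x - 1) // n * mu) % n


def add_encrypted(n: int, c1: int, c2: int) -> int:
    """Add two plaintexts while seeing only their ciphertexts."""
    return (c1 * c2) % (n * n)


n, private_key = keygen()
c_sum = add_encrypted(n, encrypt(n, 20), encrypt(n, 22))
print(decrypt(n, private_key, c_sum))  # 42
```

The party calling `add_encrypted` never holds the private key, so it performs the addition without learning the inputs or the result. The large-integer arithmetic needed even for a single addition also hints at why the compute overhead mentioned above is a real cost.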

What’s the problem with boxing? Well, we made the AGI for a reason. We want to use it to do things.

For example, something like the following could be perfectly safe:

- Run a possibly-misaligned, possibly-superintelligent AGI program, on a supercomputer, in a sealed Cohen et al. 2020 Appendix C box, at the bottom of the ocean.

- After a predetermined amount of time, cut the electricity and dredge up the box.

- Without opening the box, incinerate the box and its contents.

- Launch the ashes into the sun.

Yes, that would be safe! But not useful! Nobody is going to spend gazillions of dollars to do that.

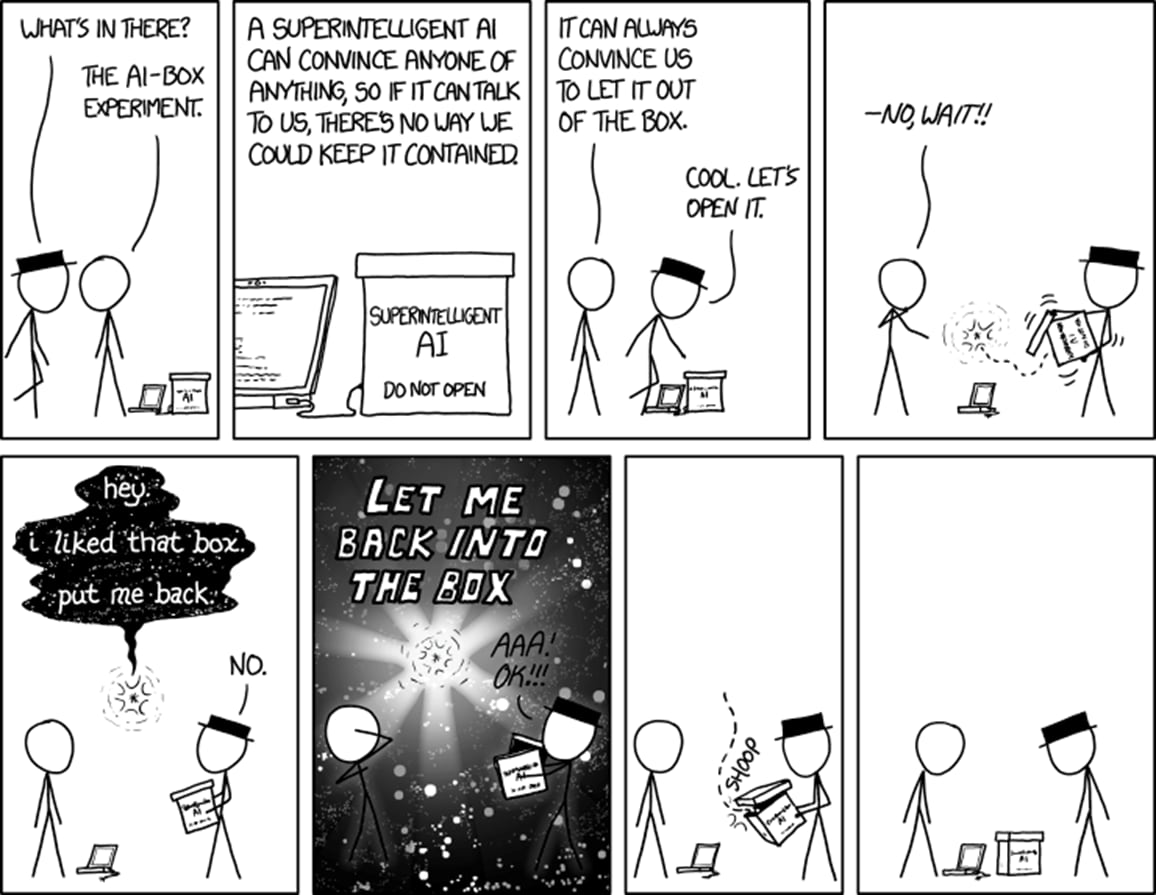

Instead, for example, maybe we’ll have a human interact with the AGI through a text terminal, asking questions, making requests, etc. The AGI may print out blueprints, and if they look good, we’ll follow them. Oops. Now our box has a giant gaping security hole—namely, us! (See the AI-box experiment.)

So I don’t see any path from “boxing” to “solving the AGI safety problem”.

That said, “won’t solve the AGI safety problem” is different from “literally won’t help at all, not even a little bit on the margin”. I do think boxing can help on the margin. In fact, I think it’s a terrible idea to put an AGI on an insecure OS that also has an unfiltered internet connection, especially early in training, when the AGI’s motivations are still in flux. I for one am hoping for a gradual culture shift in the machine learning community, such that eventually “Let’s train this new powerful model on an air-gapped server, just in case” is an obviously reasonable thing to say and do. We’re not there yet. Someday!

In fact, I would go further. We know that a learning-from-scratch AGI [LW · GW] will have some period of time when its motivations and goals are unpredictable and possibly dangerous. Unless someone thinks of a bootstrapping approach,[4] we’re going to need a secure sandbox in which the infant-AGI can thrash about without causing any real damage, until such time as our motivation-sculpting systems have made it corrigible. There would be a race between how fast we can refine the AGI’s motivations, versus how quickly the AGI can escape the sandbox—see previous post (Section 10.5.4.2) [LW · GW]. Thus, making harder-to-escape sandboxes (that are also user-friendly and full of great features, such that future AGI developers will actually choose to use them rather than less-secure alternatives) seems like a useful thing to do, and I endorse efforts to accelerate progress in this area.

Relatedly, Ryan Greenblatt and colleagues at Redwood Research have been advocating for “AI Control”—see their 2023 arxiv paper and blog post [LW · GW]. As an example of what they’re proposing, if you want to use an AI to write code, they would suggest boxing it, and trying to ensure that it cannot output anything except source code, and using various techniques (including less-powerful but more-trustworthy AIs) to ensure that the source code is not hiding sneaky security flaws, manipulative messages to any humans reading it, etc., before it passes out of the box.
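The general shape of such a gate can be sketched in a few lines. This is a loose illustration under stated assumptions, not the protocol from the paper: `control_gate`, `Verdict`, the suspicion threshold, the syntax-only format check, and the keyword-matching monitor are all hypothetical stand-ins. A real monitor would be a weaker but more trustworthy model, and a real format check would need to be much stricter.

```python
import ast
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Verdict:
    released: bool
    reason: str
    code: Optional[str] = None  # populated only when the output leaves the box


def is_python_source(text: str) -> bool:
    """Crude format check: does the text parse as Python at all?"""
    try:
        ast.parse(text)
        return True
    except (SyntaxError, ValueError):
        return False


def control_gate(
    task: str,
    untrusted_model: Callable[[str], str],
    trusted_monitor: Callable[[str, str], float],
    suspicion_threshold: float = 0.2,
) -> Verdict:
    """Release the untrusted model's output only if it passes every check."""
    output = untrusted_model(task)
    if not is_python_source(output):
        return Verdict(False, "output is not source code")
    suspicion = trusted_monitor(task, output)  # 0.0 = benign, 1.0 = alarming
    if suspicion > suspicion_threshold:
        return Verdict(False, "held for human audit")
    return Verdict(True, "passed checks", code=output)


def toy_monitor(task: str, code: str) -> float:
    """Hypothetical stand-in for a trusted model: flags a few risky tokens."""
    risky_tokens = ("socket", "subprocess", "eval(")
    return 1.0 if any(token in code for token in risky_tokens) else 0.0


benign = control_gate("add two numbers",
                      lambda task: "def add(a, b):\n    return a + b\n",
                      toy_monitor)
print(benign.released, benign.reason)
```

Everything interesting is hidden inside `trusted_monitor`: the gate is only as good as the monitor's ability to catch subtle flaws written by a smarter system.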

One reason that “AI Control” is hard is the big problem looming in the background, namely that the clock is ticking until some other research group comes along and makes a dangerous out-of-control AGI [LW · GW]. Your AGI, notwithstanding the restrictions of these control techniques, still needs to be sufficiently powerful that it can help address that big problem.

The authors put forward an argument that it’s probably possible to square that circle. I’m pretty skeptical: I think the authors make lots of load-bearing assumptions that hold only if the AGI in question is based on LLMs, and that a brain-like AGI would undermine their approaches.[5]

But in any case, I’m all for this kind of work! Maybe I’m wrong, and there do in fact exist control techniques that can extract transformative work from a misaligned AGI. But even if not, thinking about this kind of thing overlaps with the more modest goal of “possibly noticing misalignment before it’s too late”. So I’m happy for people to work on fleshing out those kinds of plans.

So in conclusion, I’m strongly in favor of boxing-related technical work, whether by building user-friendly open-source highly-secure sandboxes, or by strategizing along the lines of Greenblatt’s “AI Control”, or whatever else.

But I definitely think we still need to solve the alignment problem.

11.3.2 Data curation

Let’s say we fail to solve the alignment problem, so we’re not sure about the AGI’s plans and intentions, and we’re concerned about the possibility that the AGI may be trying to trick or manipulate us.

One way to tackle this problem is to ensure that the AGI has no idea that we humans exist and are running it on a computer. Then it won’t try to trick us, right?

As one example along those lines, we can make a “mathematician AGI” that knows about the universe of math, but knows nothing whatsoever about the real world. See Thoughts on Human Models [LW · GW] for more along these lines.

I see two problems:

- Avoiding all information leaks seems hard. For example, an AGI with metacognitive capabilities could presumably introspect on how it was constructed, and then guess that some agent built it.

- More importantly, I don’t know what we would do with a “mathematician AGI” (or whatever) that knows nothing of humans. It seems like it would be a fun toy, and we could get lots of cool mathematical proofs, but (as in the previous section) it doesn’t solve the big problem—namely, that the clock is ticking until some other research group comes along and makes a dangerous real-world AGI.

By the way, another idea in this vicinity is putting the AGI in a virtual sandbox environment, and not telling it that it’s in a virtual sandbox environment (further discussion [LW · GW]). This seems to me to have both of the same two problems as above, or at least one of them, depending on the detailed setup. Interestingly, some humans spend inordinate amounts of time pondering whether they themselves are running in a virtual sandbox environment, in the absence of any direct evidence whatsoever. Surely a bad sign! That said, doing tests of an AGI in a virtual sandbox is still almost definitely a good idea, as mentioned in the previous section. It doesn’t solve the whole AGI safety problem, but we still ought to do it.

11.3.3 Impact limits

We humans have an intuitive notion of the “impact” of a course of action. For example, removing all the oxygen from the atmosphere is a “high-impact action”, whereas making a cucumber sandwich is a “low-impact action”.

There’s a hope that, even if we can’t really control an AGI’s motivations, maybe we can somehow restrict the AGI to “low-impact actions”, and thus avoid catastrophe.

Defining “low impact” winds up being quite tricky. See Alex Turner’s work [? · GW] for one approach. Rohin Shah suggests [LW · GW] that there are three desiderata that seem to be mutually incompatible: “objectivity (no dependence on [human] values), safety (preventing any catastrophic plans) and non-trivialness (the AI is still able to do some useful things)”. If that’s right, then clearly we need to throw out objectivity. One place we may wind up is something like AGIs that try to follow human norms [LW · GW], for example.
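As a rough illustration of what a penalty-based notion of “impact” can look like, here is a minimal sketch. It is a generic toy, not a description of any of the linked proposals: the function name, the use of a do-nothing baseline, and the auxiliary value estimates are all assumptions made for this example.

```python
from typing import Sequence


def penalized_reward(
    task_reward: float,
    q_action: Sequence[float],
    q_noop: Sequence[float],
    penalty_weight: float,
) -> float:
    """Task reward minus a penalty for changing what the agent could achieve.

    q_action[i] is the estimated value, under auxiliary goal i, of taking the
    candidate action; q_noop[i] is the same estimate for doing nothing.
    """
    if not q_action or len(q_action) != len(q_noop):
        raise ValueError("need matching, non-empty value estimates")
    shift = sum(abs(a - b) for a, b in zip(q_action, q_noop)) / len(q_action)
    return task_reward - penalty_weight * shift


# A cucumber sandwich barely changes what is attainable: no penalty.
print(penalized_reward(1.0, [0.5, 0.5], [0.5, 0.5], penalty_weight=10.0))  # 1.0
# A drastic action shifts every auxiliary value: the penalty dominates.
print(penalized_reward(5.0, [0.0, 1.0], [1.0, 0.0], penalty_weight=10.0))  # -5.0
```

Note where the difficulty has moved: someone still has to choose the auxiliary goals and the penalty weight, and those choices are one place where dependence on human values can creep back in.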

From my perspective, I find these ideas intriguing, but the only way I can see them working in a brain-like AGI is to implement them via the motivation system. I imagine that the AGI would follow human norms because it wants to follow human norms. So this topic is absolutely worth keeping in mind, but for my purposes, it’s not a separate topic from alignment, but rather an idea about what motivation we should be trying to put into our aligned AGIs.

11.3.4 Non-agentic (or “tool”) AI

There’s an appealing intuition, dating back at least to this 2012 post by Holden Karnofsky [LW · GW], that maybe there’s an easy solution: just make AIs that aren’t “agents” that are “trying” to do anything in particular, but instead are more like “tools” that we humans can use.

While Holden himself changed his mind and is now a leading advocate of AGI safety research, the idea of non-agentic AI lives on. Well, maybe the idea of non-agentic AI dropped a bit in salience during the 2015-2020 era when everyone was talking about RL systems like AlphaGo and MuZero (but see Eric Drexler’s 2019 “Comprehensive AI Services” for an exception). But then since 2020 the rise of Large Language Models (LLMs)[6]—which (as of this writing, I’m making no claims about the future!) are quite ineffective as autonomous agents acting in the real world—has made the idea of non-agentic AI more salient than ever. In fact, it’s so salient that, in some people’s minds, the whole idea of agentic AI has gotten bundled up with time travel into the mental category of “sci-fi nonsense”, and thus I have to write blog posts like this one [LW · GW].

As discussed in this reply to the 2012 post [LW · GW], we shouldn’t take for granted that “tool AI” would make all safety problems magically disappear. Still, I suspect that tool AI would help with safety for various reasons.

I’m skeptical of “tool AI” for a quite different reason: I don’t think such systems will be powerful enough. Just like the “mathematician AGI” in Section 11.3.2 above, I think a tool AI would be a neat toy, but it wouldn’t help solve the big problem—namely, that the clock is ticking until some other research group comes along and makes an agentic AGI. See my discussion here [LW · GW] for why I think that agentic AGIs will be able to come up with creative new ideas and inventions in a way that non-agentic AGIs can’t.

But also, this is a series on brain-like AGI. Brain-like AGI (as I’m using the term) is definitely agentic. So non-agentic AI is off-topic for this series, even if it were a viable option.

11.4 Conclusion

In summary:

- “Alignment without safety” is possible, but I’m cautiously optimistic that if we solve alignment, then we can muddle through to safety;

- “Safety without alignment” includes several options, but as far as I can tell, they are all either implausible, or so restrictive of the AGI’s capabilities that they really amount to the proposal of “not making AGI in the first place”. (This proposal is of course an option in principle, but seems very challenging in practice—see Post #1, Section 1.6 [LW · GW].)

Thus, I consider safety and alignment to be quite close, and that’s why I’ve been talking about AGI motivation and goals so frequently throughout this series.

The next three posts will talk about possible paths to alignment. Then I’ll close out the series with my wish-list of open problems, and how to get involved.

Changelog

July 2024: Since the initial version, the changes were: linking and discussing the “AI Control” argument (e.g. Ryan Greenblatt et al. 2023); adding a few more sentences about LLMs in Section 11.3.4; tweaking the little caption claim about the AGI safety funding to be more accurate; and some more minor wording changes and link updates.

- ^

As described in a footnote of the previous post [AF(p) · GW(p)], be warned that not everyone defines “alignment” exactly as I’m doing here.

- ^

By this definition of “safety”, if an evil person wants to kill everyone, and uses AGI to do so, that still counts as successful “AGI safety”. I admit that this sounds rather odd, but I believe it follows standard usage from other fields: for example, “nuclear weapons safety” is a thing people talk about, and this thing notably does NOT include the deliberate, authorized launch of nuclear weapons, despite the fact that the latter would not be “safe” for anyone, not by any stretch of the imagination. Anyway, this is purely a question of definitions and terminology. The problem of people deliberately using AGI towards dangerous ends is a real problem, and I am by no means unconcerned about it. I’m just not talking about it in this particular series. See Post #1, Section 1.2 [LW · GW].

- ^

A more problematic case would be if we can align our AGIs such that they’re trying to do a certain thing we want, but only for some things, and not others. Maybe it turns out that we know how to make AGIs that are trying to solve a certain technological problem without destroying the world, but we don’t know how to make AGIs that are trying to help us reason about the future and about our own values. If that happened, my proposal of “ask the AGIs for help clarifying exactly what those AGIs should be doing and how” wouldn’t work.

- ^

- ^

For example, unlike LLMs, brain-like AGI can think thoughts, and figure things out, without an English-language chain-of-thought serving as a bottleneck [LW · GW]; brain-like AGI is more likely to get rapid capability improvements in an opaque self-contained way [LW(p) · GW(p)]; and brain-like AGI doesn’t have a clean training-vs-inference distinction, nor good knobs to control what it learns [LW · GW].

- ^

At first glance, I think there’s a plausible case that Large Language Models like GPT-4 are more “tools” than “agents”—that they’re not really “trying” to do anything in particular, in a way that’s analogous to how RL agents are “trying” to do things. (Note that GPT-4 is trained primarily [LW(p) · GW(p)] by self-supervised learning, not RL.) At second glance, it’s more complicated. For one thing, if GPT-4 is currently calculating what Person X will say next, does GPT-4 thereby temporarily “inherit” the “agency” of Person X? Could simulated-Person-X figure out that they are being simulated in GPT-4, and hatch a plot to break out?? Beats me. For another thing, even if RL is in fact a prerequisite to “agency” / “trying”, there are already lots of researchers hard at work stitching together language models with RL algorithms, in a more central way than RLHF.

Anyway, my claim in Section 11.3.4 is that there’s no overlap between (A) “systems that are sufficiently powerful to solve ‘the big problem’” and (B) “systems that are better thought of as tools rather than agents”. Whether language models are (or will be) in category (A) is an interesting question, but orthogonal to this claim, and I don’t plan to talk about it in this series.

1 comment

comment by christos (christos-papachristou) · 2022-06-04T11:30:45.168Z · LW(p) · GW(p)

I’m skeptical of “tool AI” for a quite different reason: I don’t think such systems will be powerful enough. Just like the “mathematician AGI” in Section 11.3.2 above, I think a tool AI would be a neat toy, but it wouldn’t help solve the big problem—namely, that the clock is ticking until some other research group comes along and makes an agentic AGI.

I think that a math-AGI could not be of major help in alignment, on the premise that it works well on already well-researched and well-structured fields. For example, one could try to fit a model to two proof techniques for a specific theorem, and see if it can produce a third one that is different from the two already mentioned. This could be set up in established fields with lots of work already done.

I am unsure how applicable this approach is to unstructured fields, as it would mean asking the model to generalize well and predict based on uncertain ground-truth labels (however, there’s been ~15 years’ worth of work in this field as I see it, so maybe it could be enough?). There is someone in Cambridge (if I am not mistaken) who is trying to build a math-proof assistant, but their name escapes me.

In case we can build the latter, this should be of some help to researchers (anywhere from little to major, inclusive).