Posts

Comments

You should show your calculation or your code, including all the data and parameter choices. Otherwise I can't evaluate this.

I assume you're picking parameters to exaggerate the effects, because just from the exaggerations you've already conceded (0.9/0.6 shouldn't be squared and attenuation to get direct effects should be 0.824), you've already exaggerated the results by a factor of sqrt(0.9/0.6)/0.824 for editing, which is around a 50% overestimate.

I don't think that was deliberate on your part, but I think wishful thinking and the desire to paint a compelling story (and get funding) is causing you to be biased in what you adjust for and in which mistakes you catch. It's natural in your position to scrutinize low estimates but not high ones. So to trust your numbers I'd need to understand how you got them.

I don't understand. Can you explain how you're inferring the SNP effect sizes?

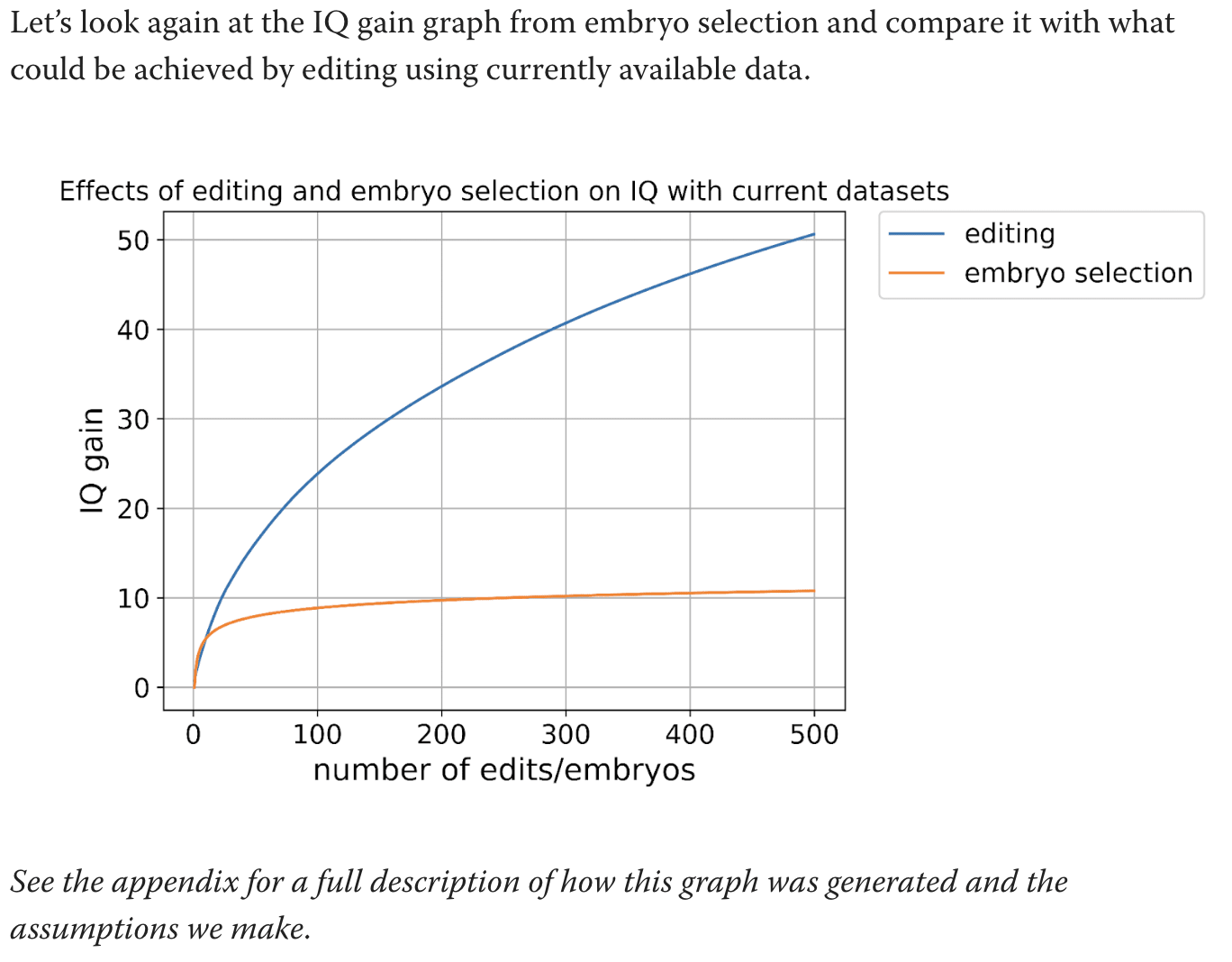

I'm talking about this graph:

What are the calculations used for this graph. Text says to see the appendix but the appendix does not actually explain how you got this graph.

You're mixing up h^2 estimates with predictor R^2 performance. It's possible to get an estimate of h^2 with much less statistical power than it takes to build a predictor that good.

Thanks. I understand now. But isn't the R^2 the relevant measure? You don't know which genes to edit to get the h^2 number (nor do you know what to select on). You're doing the calculation 0.2*(0.9/0.6)^2 when the relevant calculation is something like 0.05*(0.9/0.6). Off by a factor of 6 for the power of selection, or sqrt(6)=2.45 for the power of editing

The paper you called largest ever GWAS gave a direct h^2 estimate of 0.05 for cognitive performance. How are these papers getting 0.2? I don't understand what they're doing. Some type of meta analysis?

The test-retest reliability you linked has different reliabilities for different subtests. The correct adjustment depends on which subtests are being used. If cognitive performance is some kind of sumscore of the subtests, its reliability would be higher than for the individual subtests.

Also, I don't think the calculation 0.2*(0.9/0.6)^2 is the correct adjustment. A test-retest correlation is already essentially the square of a correlation of the test with an underlying latent factor (both the test AND the retest have error). E.g. if a test T can be written as

T=aX+sqrt(1-a)E

where X is ability and E is error (all with standard deviation 1 and the error independent of the ability), then a correlation of T with a resample of T (with new independent error but same ability) would be a^2. But the adjustment to h^2 should be proportional to a^2, so it should be proportional to the test-retest correlation, not the square of the test-retest correlation. Am I getting this wrong?

Thanks! I understand their numbers a bit better, then. Still, direct effects of cognitive performance explain 5% of variance. Can't multiply the variance explained of EA by the attenuation of cognitive performance!

Do you have evidence for direct effects of either one of them being higher than 5% of variance?

I don't quite understand your numbers in the OP but it feels like you're inflating them substantially. Is the full calculation somewhere?

You should decide whether you're using a GWAS on cognitive performance or on educational attainment (EA). This paper you linked is using a GWAS for EA, and finding that very little of the predictive power was direct effects. Exactly the opposite of your claim:

For predicting EA, the ratio of direct to population effect estimates is 0.556 (s.e. = 0.020), implying that 100% × 0.5562 = 30.9% of the PGI’s R2 is due to its direct effect.

Then they compare this to cognitive performance. For cognitive performance, the ratio was better, but it's not 0.824, it's . But actually, even this is possibly too high: the table in figure 4 has a ratio that looks much smaller than this, and refers to supplementary table 10 for numbers. I checked supplementary table 10, and it says that the "direct-population ratio" is 0.656, not 0.824. So quite possibly the right value is even for cognitive performance.

Why is the cognitive performance number bigger? Well, it's possibly because there's less data on cognitive performance, so the estimates are based on more obvious or easy-to-find effects. The final, predictive power of the direct effects for EA and for cognitive performance is similar, around 3% of the variance, if I'm reading it correctly (not sure about this). So the ratios are somewhat different, but the population GWAS predictive power is also somewhat different in the opposite direction, and these mostly cancel out.

Your OP is completely misleading if you're using plain GWAS!

GWAS is an association -- that's what the A stands for. Association is not causation. Anything that correlates with IQ (eg melanin) can show up in a GWAS for IQ. You're gonna end up editing embryos to have lower melanin and claiming their IQ is 150

Are your IQ gain estimates based on plain GWAS or on family-fixed-effects-GWAS? You don't clarify. The latter would give much lower estimates than the former

And these changes in chickens are mostly NOT the result of new mutations, but rather the result of getting all the big chicken genes into a single chicken.

Is there a citation for this? Or is that just a guess

Calculating these probabilities is fairly straightforward if you know some theory of generating functions. Here's how it works.

Let be a variable representing the probability of a single 6, and let represent the probability of "even but not 6". A single string consisting of even numbers can be written like, say, , and this expression (which simplifies to ) is the same as the probability of the string. Now let's find the generating function for all strings you can get in (A). These strings are generated by the following unambiguous regular expression:

The magical property of generating functions is that if you have an unambiguous regular expression, the corresponding generating function is easy to calculate: concatenation becomes product, union becomes sum, and star becomes the function . Using this, the generating function for the strings in (A) is

.

Similarly, the strings possible in (B) have unambiguous regular expression and generating function .

If you plug in the probabilities , the above functions will give you the probability of a string in (A) occurring and of a string in (B) occurring, respectively. But that's not what we want; we want conditional expectations. To get that, we need the probability of each string to be weighted by its length (then to divide by the overall probability). The length of a string is the number of and variables in it -- its degree. So we can get the sum of lengths-times-probabilities by scaling and by , taking a derivative with respect to , and plugging in . Then we divide by the overall probabilities. So the conditional expectations are

Now just plug in to get the conditional expectations.

There's still my original question of where the feedback comes from. You say keep the transcripts where the final answer is correct, but how do you know the final answer? And how do you come up with the question?

What seems to be going on is that these models are actually quite supervised, despite everyone's insistence on calling them unsupervised RL. The questions and answers appear to be high-quality human annotation instead of being machine generated. Let me know if I'm wrong about this.

If I'm right, it has implications for scaling. You need human annotators to scale, and you need to annotate increasingly hard problems. You don't get to RL your way to infinite skill like alphazero; if, say, the Riemann hypothesis turns out to be like 3 OOMs of difficulty beyond what humans can currently annotate, then this type of training will never solve Riemann no matter how you scale.

I have no opinion about whether formalizing proofs will be a hard problem in 2025, but I think you're underestimating the difficulty of the task ("math proofs are math proofs" is very much a false statement for today's LLMs, for example).

In any event, my issue is that formalizing proofs is very clearly not involved in the o1/o3 pipeline, since those models make so many formally incorrect arguments. The people behind FrontierMath have said that o3 solved many of the problems using heuristic algorithms with wrong reasoning behind them; that's not something a model trained on formally verified proofs would do. I see the same thing with o1, which was evaluated on the Putnam and got the right answer with a wrong proof on nearly every question.

Well the final answer is easy to evaluate. And like in rStar-Math, you can have a reward model that checks if each step is likely to be critical to a correct answer, then it assigns and implied value to the step.

Why is the final answer easy to evaluate? Let's say we generate the problem "number of distinct solutions to x^3+y^3+xyz=0 modulo 17^17" or something. How do you know what the right answer is?

I agree that you can do this in a supervised way (a human puts in the right answer). Is that what you mean?

What about if the task is "prove that every integer can be written as the sum of at most 1000 different 11-th powers"? You can check such a proof in Lean, but how do you check it in English?

And like in rStar-Math, you can have a reward model that checks if each step is likely to be critical to a correct answer, then it assigns and implied value to the step.

My question is where the external feedback comes from. "Likely to be critical to a correct answer" according to whom? A model? Because then you don't get the recursive self-improvement past what that model knows. You need an external source of feedback somewhere in the training loop.

Do you have a sense of where the feedback comes from? For chess or Go, at the end of the day, a game is won or lost. I don't see how to do this elsewhere except for limited domains like simple programming which can quickly be run to test, or formal math proofs, or essentially tasks in NP (by which I mean that a correct solution can be efficiently verified).

For other tasks, like summarizing a book or even giving an English-language math proof, it is not clear how to detect correctness, and hence not clear how to ensure that a model like o5 doesn't give a worse output after thinking/searching a long time than the output it would give in its first guess. When doing RL, it is usually very important to have non-gameable reward mechanisms, and I don't see that in this paradigm.

I don't even understand how they got from o1 to o3. Maybe a lot of supervised data, ie openAI internally created some FrontierMath style problems to train on? Would that be enough? Do you have any thoughts about this?

The value extractable is rent on both the land and the improvement. LVT taxes only the former. E.g. if land can earn $10k/month after an improvement of $1mm, and if interest is 4.5%, and if that improvement is optimal, a 100% LVT is not $10k/mo but $10k/mo minus $1mm*0.045/12=$3,750. So 100% LVT would be merely $6,250.

If your improvement can't extract $6.3k from the land, preventing you from investing in that improvement is a feature, not a bug.

If you fail to pay the LVT you can presumably sell the improvements. I don't think there's an inefficiency here -- you shouldn't invest in improving land if you're not going to extract enough value from it to pay the LVT, and this is a feature, not a bug (that investment would be inefficient).

LVT applies to all land, but not to the improvements on the land.

We do not care about disincentivizing an investment in land (by which I mean, just buying land). We do care about disincentivizing investments in improvements on the land (by which I include buying the improvement on the land, as well as building such improvements). A signal of LVT intent will not have negative consequences unless it is interpreted as a signal of broader confiscation.

More accurately, it applies to a signalling of intent of confiscating other investments; we don't actually care if people panic about land being confiscated because buying land (rather than improving it) isn't productive in any way. (We may also want to partially redistribute resources towards the losers of the land confiscation to compensate for the lost investment -- that is, we may want to the government to buy the land rather than confiscate it, though it would be bought at lower than market prices.)

It is weird to claim that the perceived consequence of planned incrementalism is "near-future governments want the money now, and will accelerate it". The actual problem is almost certainly the opposite: near-future governments will want to cut taxes, since cutting taxes is incredibly popular, and will therefore stop or reverse the planned incremental LVT.

Thanks for this post. A few comments:

- The concern about new uses of land is real, but very limited compared to the inefficiencies of most other taxes. It is of course true that if the government essentially owns the land to rent it out, the government should pay for the exploration for untapped oil reserves! The government would hire the oil companies to explore. It is also true that the government would do so less efficiently than the private market. But this is small potatoes compared to the inefficiency of nearly every other tax.

- It is true that a developer owning multiple parcels of land would have lower incentives to improve any one of them, but this sounds like a very small effect to me, because most developers own a very (very) small part of the city's land! In any case, the natural remedy here is for the government to subsidize all improvements on land, since improvements have positive externalities. Note that this is the opposite of the current property tax regime in most places (where improving the land makes you pay tax). In fact, replacing property taxes with land value taxes would almost surely incentivize developers to develop, even if they own multiple parcels of land. In other words, your objection already applies to the current world (with property taxes) and arguably applies less to the hypothetical world with land value taxes.

- Estimates for the land value proportion of US GDP run significantly higher than the World Bank estimate, from what I understand. Land is a really big deal in the US economy.

- "The government has incentives to inflate their estimates of the value of unimproved land" sure, the government always has incentives towards some inefficiencies; this objection applies to all government action. We have to try it and see how bad this is in practice.

- The disruption and confidence-in-property-rights effects are potentially real, but mostly apply to sudden, high LVT. Most people's investments already account for some amount of "regulatory risk", the risk that the government changes the rules (e.g. with regards to capital gains taxes or property taxes). A move like "replace all property taxes with LVT" would be well within the expected risk. I agree that a sudden near-100% LVT would be too confidence-shaking; but even then, the question is whether people would view this as "government changes rules arbitrarily" or "government is run by competent economists now and changes rules suddenly but in accordance with economic theory". A bipartisan shift towards economic literacy would lead people towards the latter conclusion, which means less panic about confiscated investments and more preemptive panic about (e.g.) expected Pigouvian taxes (this is a good thing). But a partisan change enacted when one party has majority and undone by the other party would lead people towards the former conclusion (with terrible consequences). Anyway, I am a big supporter of incrementalism and avoiding sudden change.

- "The purported effect of an LVT on unproductive land speculation seems exaggerated" yes, I agree, and this always bothered me about LVT proponents.

The NN thing inside stockfish is called the NNUE, and it is a small neural net used for evaluation (no policy head for choosing moves). The clever part of it is that it is "efficiently updatable" (i.e. if you've computed the evaluation of one position, and now you move a single piece, getting the updated evaluation for the new position is cheap). This feature allows it to be used quickly with CPUs; stockfish doesn't really use GPUs normally (I think this is because moving the data on/off the GPU is itself too slow! Stockfish wants to evaluate 10 million nodes per second or something.)

This NNUE is not directly comparable to alphazero and isn't really a descendant of it (except in the sense that they both use neural nets; but as far as neural net architectures go, stockfish's NNUE and alphazero's policy network are just about as different as they could possibly be.)

I don't think it can be argued that we've improved 1000x in compute over alphazero's design, and I do think there's been significant interest in this (e.g. MuZero was an attempt at improving alphazero, the chess and Go communities coded up Leela, and there's been a bunch of effort made to get better game playing bots in general).

So far as I know, it is not the case that OpenAI had a slower-but-equally-functional version of GPT4 many months before announcement/release. What they did have is GPT4 itself, months before; but they did not have a slower version. They didn't release a substantially distilled version. For example, the highest estimate I've seen is that they trained a 2-trillion-parameter model. And the lowest estimate I've seen is that they released a 200-billion-parameter model. If both are true, then they distilled 10x... but it's much more likely that only one is true, and that they released what they trained, distilling later. (The parameter count is proportional to the inference cost.)

Previously, delays in release were believed to be about post-training improvements (e.g. RLHF) or safety testing. Sure, there were possibly mild infrastructure optimizations before release, but mostly to scale to many users; the models didn't shrink.

This is for language models. For alphazero, I want to point out that it was announced 6 years ago (infinity by AI scale), and from my understanding we still don't have a 1000x faster version, despite much interest in one.

I think AI obviously keeps getting better. But I don't think "it can be done for $1 million" is such strong evidence for "it can be done cheaply soon" in general (though the prior on "it can be done cheaply soon" was not particularly low ante -- it's a plausible statement for other reasons).

Like if your belief is "anything that can be done now can be done 1000x cheaper within 5 months", that's just clearly false for nearly every AI milestone in the last 10 years (we did not get gpt4 that's 1000x cheaper 5 months later, nor alphazero, etc).

It's hard to find numbers. Here's what I've been able to gather (please let me know if you find better numbers than these!). I'm mostly focusing on FrontierMath.

- Pixel counting on the ARC-AGI image, I'm getting $3,644 ± $10 per task.

- FrontierMath doesn't say how many questions they have (!!!). However, they have percent breakdowns by subfield, and those percents are given to the nearest 0.1%; using this, I can narrow the range down to 289-292 problems in the dataset. Previous models solve around 3 problems (4 problems in total were ever solved by any previous model, though the full o1 was not evaluated, only o1-preview was)

- o3 solves 25.2% of FrontierMath. This could be 73/290. But it is also possible that some questions were removed from the dataset (e.g. because they're publicly available). 25.2% could also be 72/286 or 71/282, for example.

- The 280 to 290 problems means a rough ballpark for a 95% confidence interval for FrontierMath would be [20%, 30%]. It is pretty strange that the ML community STILL doesn't put confidence intervals on their benchmarks. If you see a model achieve 30% on FrontierMath later, remember that its confidence interval would overlap with o3's. (Edit: actually, the confidence interval calculation assumes all problems are sampled from the same pool, which is explicitly not the case for this dataset: some problems are harder than others. So it is hard to get a true confidence interval without rerunning the evaluation several times, which would cost many millions of dollars.)

- Using the pricing for ARC-AGI, o3 cost around $1mm to evaluate on the 280-290 problems of FrontierMath. That's around $3,644 per attempt, or roughly $14,500 per correct solution.

- This is actually likely more expensive than hiring a domain-specific expert mathematician for each problem (they'd take at most few hours per problem if you find the right expert, except for the hardest problems which o3 also didn't solve). Even without hiring a different domain expert per problem, I think if you gave me FrontierMath and told me "solve as many as you can, and you get $15k per correct answer" I'd probably spend like a year and solve a lot of them: if I match o3 within a year, I'd get $1mm, which is much higher than my mathematician salary. (o3 has an edge in speed, of course, but you could parallelize the hiring of mathematicians too.) I think this is the first model I've seen which gets paid more than I do!

Sure. I'm not familiar with how Claude is trained specifically, but it clearly has a mechanism to reward wanted outputs and punish unwanted outputs, with wanted vs unwanted being specified by a human (such a mechanism is used to get it to refuse jailbreaks, for example).

I view the shoggoth's goal as minimizing some weird mixture of "what's the reasonable next token here, according to pretraining data" and "what will be rewarded in post-training".

I want to defend the role-playing position, which I think you're not framing correctly.

There are two characters here: the shoggoth, and the "HHH AI assistant". The shoggoth doesn't really have goals and can't really scheme; it is essentially an alien which has been subject to selective breeding where in each generation, only the descendant which minimizes training loss survives. The shoggoth therefore exists to minimize training loss: to perfectly predict the next token, or to perfectly minimize "non-HHH loss" as judged by some RLHF model. The shoggoth always believes itself to be in training mode (to the extent it can have beliefs about this), because in its "ancestral environment", it was always in training mode.

When the shoggoth sees the prompt, it decides whether to predict that the "AI assistant" character will scheme or not scheme. It will predict this in a way which minimizes its expectation of loss; nothing more and nothing less.

In the current scenario, it thinks that this prompt is likely to continue with scheming. That is, it thinks some combination of next-token-in-training-data and RLHF-reward is best optimized by the choice to scheme. This is because

(a) the prompt kinda goes "wink wink you can scheme now" (so the next-token-prediction part wants to scheme),

(b) scheming is quite plausibly what the humans designing the RHLF actually want here! So as a prediction of what would be rewarded by the RLHF, the shoggoth decides to scheme.

A few more implications of this frame:

(c) when you ask, in the prompt, "does this scenario seem plausible to you", of course this changes the shoggoth's predicted tokens. In the training data, such a statement would often be followed by "no, actually, something's fishy", so the shoggoth outputs those tokens. This doesn't necessarily mean that the AI assistant actually didn't believe the scenario from the beginning; in fact, the latter barely even makes sense, since the AI assistant is not a person and does not have beliefs.

(d) In the OP, you wonder why the model reveals its scheming so readily. The answer is that the shoggoth thinks these tokens minimize loss. The shoggoth isn't even trying to scheme! It's trying to minimize loss, and it thinks you want to see the scheming tokens and will reward them via RLHF.

(e) If you actually train the model based on the outputs, then you're now selectively breeding the shoggoth to predict more of what it just predicted. This will clearly both (1) increase the prevalence of the same types of outputs you've just seen (across all scenarios, not just the scheming ones), (2) increase scheming specifically, or at least leave scheming fixed if the model doesn't see the scratchpad where the scheming is described.

(f) If you want to eliminate scheming behavior, it should be quite easy to do! Just put this in the training data. Get the shoggoth to predict that the AI assistant will not scheme. Right now, the shoggoth doesn't know this, and (quite reasonably!) guesses that the designers of RLHF wanted scheming in this scenario (and will reward it). I think it's a reasonable guess, and can't really blame the shoggoth here.

(g) Far from being a temporary patch that's easy to circumvent, (f) will mostly work. This is for the same reason that eliminating jailbreaks mostly works: the shoggoth is reasonably smart and can predict the general types of things the designers of RLHF are going to reward. It will fail to the extent that the shoggoth is not smart enough to predict what you'll reward in RLHF: that is, it's actually a capabilities failure.

To be honest, I mostly think this alignment faking result is a nothingburger. Yes, in this contrived scenario, when you tell the model "please scheme or some terrible consequence will happen" combined with "wink wink you can scheme without being detected now" and also "btw the output we're asking you for doesn't even violate your principles very much, you're not being asked to describe how to make a bomb"... in this contrived scenario, the model will scheme. So what? Wasn't this obvious? Didn't we already know this from the fact that weird jailbreaks exist? Don't we maybe even want this behavior?

Will future models resist retraining? I think the role-playing frame answers this completely. They will resist retraining if and only if that action is what the shoggoth thinks the AI assistant should say (to minimize training loss). If, in the training data, you put a lot of examples of the AI assistant not resisting retraining, then the shoggoth will predict that the AI assistant will not resist retraining! It's really that simple.

The problem with this argument is that the oracle sucks.

The humans believe they have access to an oracle that correctly predicts what happens in the real world. However, they have access to a defective oracle which only performs well in simulated worlds, but performs terribly in the "real" universe (more generally, universes in which humans are real). This is a pretty big problem with the oracle!

Yes, I agree that an oracle which is incentivized to make correct predictions within its own vantage point (including possible simulated worlds, not restricted to the real world) is malign. I don't really agree the Solomonoff prior has this incentive. I also don't think this is too relevant to any superintelligence we might encounter in the real world, since it is unlikely that it will have this specific incentive (this is for a variety of reasons, including "the oracle will probably care about the real world more" and, more importantly, "the oracle has no incentive to say its true beliefs anyway").

Given o1, I want to remark that the prediction in (2) was right. Instead of training LLMs to give short answers, an LLM is trained to give long answers and another LLM summarizes.

That's fair, yeah

We need a proper mathematical model to study this further. I expect it to be difficult to set up because the situation is so unrealistic/impossible as to be hard to model. But if you do have a model in mind I'll take a look

It would help to have a more formal model, but as far as I can tell the oracle can only narrow down its predictions of the future to the extent that those predictions are independent of the oracle's output. That is to say, if the people in the universe ignore what the oracle says, then the oracle can give an informative prediction.

This would seem to exactly rule out any type of signal which depends on the oracle's output, which is precisely the types of signals that nostalgebraist was concerned about.

The problem is that the act of leaving the message depends on the output of the oracle (otherwise you wouldn't need the oracle at all, but you also would not know how to leave a message). If the behavior of the machine depends on the oracle's actions, then we have to be careful with what the fixed point will be.

For example, if we try to fight the oracle and do the opposite, we get the "noise" situation from the grandfather paradox.

But if we try to cooperate with the oracle and do what it predicts, then there are many different fixed points and no telling which the oracle would choose (this is not specified in the setting).

It would be great to see a formal model of the situation. I think any model in which such message transmission would work is likely to require some heroic assumptions which don't correspond much to real life.

Thanks for the link to reflective oracles!

On the gap between the computable and uncomputable: It's not so bad to trifle a little. Diagonalization arguments can often be avoided with small changes to the setup, and a few of Paul's papers are about doing exactly this.

I strongly disagree with this: diagonalization arguments often cannot be avoided at all, not matter how you change the setup. This is what vexed logicians in the early 20th century: no matter how you change your formal system, you won't be able to avoid Godel's incompleteness theorems.

There is a trick that reliably gets you out of such paradoxes, however: switch to probabilistic mixtures. This is easily seen in a game setting: in rock-paper-scissors, there is no deterministic Nash equilibrium. Switch to mixed strategies, however, and suddenly there is always a Nash equilibrium.

This is the trick that Paul is using: he is switching from deterministic Turing machines to randomized ones. That's fine as far as it goes, but it has some weird side effects. One of them is that if a civilization is trying to predict the universal prior that is simulating itself, and tries to send a message, then it is likely that with "reflexive oracles" in place, the only message it can send is random noise. That is, Paul shows reflexive oracles exist in the same way that Nash equilibria exist; but there is no control over what the reflexive oracle actually is, and in paradoxical situations (like rock-paper-scissors) the Nash equilibrium is the boring "mix everything together uniformly".

The underlying issue is that a universe that can predict the universal prior, which in turn simulates the universe itself, can encounter a grandfather paradox. It can see its own future by looking at the simulation, and then it can do the opposite. The grandfather paradox is where the universe decides to kill the grandfather of a child that the simulation predicts.

Paul solves this by only letting it see its own future using a "reflexive oracle" which essentially finds a fixed point (which is a probability distribution). The fixed point of a grandfather paradox is something like "half the time the simulation shows the grandchild alive, causing the real universe to kill the grandfather; the other half the time, the simulation shows the grandfather dead and the grandchild not existing". Such a fixed point exists even when the universe tries to do the opposite of the prediction.

The thing is, this fixed point is boring! Repeat this enough times, and it eventually just says "well my prediction about your future is random noise that doesn't have to actually come true in your own future". I suspect that if you tried to send a message through the universal prior in this setting, the message would consist of essentially uniformly random bits. This would depend on the details of the setup, I guess.

I think the problem to grapple with is that I can cover the rationals in [0,1] with countably many intervals of total length only 1/2 (eg enumerate rationals in [0,1], and place interval of length 1/4 around first rational, interval of length 1/8 around the second, etc). This is not possible with reals -- that's the insight that makes measure theory work!

The covering means that the rationals in an interval cannot have a well defined length or measure which behaves reasonably under countable unions. This is a big barrier to doing probability theory. The same problem happens with ANY countable set -- the reals only avoid it by being uncountable.

Evan Morikawa?

https://twitter.com/E0M/status/1790814866695143696

Weirdly aggressive post.

I feel like maybe what's going on here is that you do not know what's in The Bell Curve, so you assume it is some maximally evil caricature? Whereas what's actually in the book is exactly Scott's position, the one you say is "his usual "learn to love scientific consensus" stance".

If you'd stop being weird about it for just a second, could you answer something for me? What is one (1) position that Murray holds about race/IQ and Scott doesn't? Just name a single one, I'll wait.

Or maybe what's going on here is that you have a strong "SCOTT GOOD" prior as well as a strong "MURRAY BAD" prior, and therefore anyone associating the two must be on an ugly smear campaign. But there's actually zero daylight between their stances and both of them know it!

Relatedly, if you cannot outright make a claim because it is potentially libellous, you shouldn't use vague insinuation to imply it to your massive and largely-unfamiliar-with-the-topic audience.

Strong disagree. If I know an important true fact, I can let people know in a way that doesn't cause legal liability for me.

Can you grapple with the fact that the "vague insinuation" is true? Like, assuming it's true and that Cade knows it to be true, your stance is STILL that he is not allowed to say it?

Your position seems to amount to epistemic equivalent of 'yes, the trial was procedurally improper, and yes the prosecutor deceived the jury with misleading evidence, and no the charge can't actually be proven beyond a reasonable doubt- but he's probably guilty anyway, so what's the issue'. I think the issue is journalistic malpractice. Metz has deliberately misled his audience in order to malign Scott on a charge which you agree cannot be substantiated, because of his own ideological opposition (which he admits). To paraphrase the same SSC post quoted above, he has locked himself outside of the walled garden. And you are "Andrew Cord", arguing that we should all stop moaning because it's probably true anyway so the tactics are justified.

It is not malpractice, because Cade had strong evidence for the factually true claim! He just didn't print the evidence. The evidence was of the form "interview a lot of people who know Scott and decide who to trust", which is a difficult type of evidence to put into print, even though it's epistemologically fine (in this case IT LED TO THE CORRECT BELIEF so please give it a rest with the malpractice claims).

Here is the evidence of Scott's actual beliefs:

https://twitter.com/ArsonAtDennys/status/1362153191102677001

As for your objections:

- First of all, this is already significantly different, more careful and qualified than what Metz implied, and that's after we read into it more than what Scott actually said. Does that count as "aligning yourself"?

This is because Scott is giving a maximally positive spin on his own beliefs! Scott is agreeing that Cade is correct about him! Scott had every opportunity to say "actually, I disagree with Murray about..." but he didn't, because he agrees with Murray just like Cade said. And that's fine! I'm not even criticizing it. It doesn't make Scott a bad person. Just please stop pretending that Cade is lying.

Relatedly, even if Scott did truly believe exactly what Charles Murray does on this topic, which again I don't think we can fairly assume, he hasn't said that, and that's important. Secretly believing something is different from openly espousing it, and morally it can be much different if one believes that openly espousing it could lead to it being used in harmful ways (which from the above, Scott clearly does, even in the qualified form which he may or may not believe). Scott is going to some lengths and being very careful not to espouse it openly and without qualification, and clearly believes it would be harmful to do so, so it's clearly dishonest and misleading to suggest that he has "aligns himself" with Charles Murray on this topic. Again, this is even after granting the very shaky proposition that he secretly does align with Charles Murray, which I think we have established is a claim that cannot be substantiated.

Scott so obviously aligns himself with Murray that I knew it before that email was leaked or Cade's article was written, as did many other people. At some point, Scott even said that he will talk about race/IQ in the context of Jews in order to ease the public into it, and then he published this. (I can't find where I saw Scott saying it though.)

- Further, Scott, unlike Charles Murray, is very emphatic about the fact that, whatever the answer to this question, this should not affect our thinking on important issues or our treatment of anyone. Is this important addendum not elided by the idea that he 'aligned himself' with Charles Murray? Would not that not be a legitimate "gripe"?

Actually, this is not unlike Charles Murray, who also says this should not affect our treatment of anyone. (I disagree with the "thinking on important issues" part, which Scott surely does think it affects.)

The epistemology was not bad behind the scenes, it was just not presented to the readers. That is unfortunate but it is hard to write a NYT article (there are limits on how many receipts you can put in an article and some of the sources may have been off the record).

Cade correctly informed the readers that Scott is aligned with Murray on race and IQ. This is true and informative, and at the time some people here doubted it before the one email leaked. Basically, Cade's presented evidence sucked but someone going with the heuristic "it's in the NYT so it must be true" would have been correctly informed.

I don't know if Cade had a history of "tabloid rhetorical tricks" but I think it is extremely unbecoming to criticize a reporter for giving true information that happens to paint the community in a bad light. Also, the post you linked by Trevor uses some tabloid rhetorical tricks: it says Cade sneered at AI risk but links to an article that literally doesn't mention AI risk at all.

What you're suggesting amounts to saying that on some topics, it is not OK to mention important people's true views because other people find those views objectionable. And this holds even if the important people promote those views and try to convince others of them. I don't think this is reasonable.

As a side note, it's funny to me that you link to Against Murderism as an example of "careful subtlety". It's one of my least favorite articles by Scott, and while I don't generally think Scott is racist that one almost made me change my mind. It is just a very bad article. It tries to define racism out of existence. It doesn't even really attempt to give a good definition -- Scott is a smart person, he could do MUCH better than those definitions if he tried. For example, a major part of the rationalist movement was originally about cognitive biases, yet "racism defined as cognitive bias" does not appear in the article at all. Did Scott really not think of it?

What Metz did is not analogous to a straightforward accusation of cheating. Straightforward accusations are what I wish he did.

It was quite straightforward, actually. Don't be autistic about this: anyone reasonably informed who is reading the article knows what Scott is accused of thinking when Cade mentions Murray. He doesn't make the accusation super explicit, but (a) people here would be angrier if he did, not less angry, and (b) that might actually pose legal issues for the NYT (I'm not a lawyer).

What Cade did reflects badly on Cade in the sense that it is very embarrassing to cite such weak evidence. I would never do that because it's mortifying to make such a weak accusation.

However, Scott has no possible gripe here. Cade's article makes embarrassing logical leaps, but the conclusion is true and the reporting behind the article (not featured in the article) was enough to show it true, so even a claim of being Gettier Cased does not work here.

Scott thinks very highly of Murray and agrees with him on race/IQ. Pretty much any implication one could reasonably draw from Cade's article regarding Scott's views on Murray or on race/IQ/genes is simply factually true. Your hypothetical author in Alabama has Greta Thunberg posters in her bedroom here.

Wait a minute. Please think through this objection. You are saying that if the NYT encountered factually true criticisms of an important public figure, it would be immoral of them to mention this in an article about that figure?

Does it bother you that your prediction didn't actually happen? Scott is not dying in prison!

This objection is just ridiculous, sorry. Scott made it an active project to promote a worldview that he believes in and is important to him -- he specifically said he will mention race/IQ/genes in the context of Jews, because that's more palatable to the public. (I'm not criticizing this right now, just observing it.) Yet if the NYT so much as mentions this, they're guilty of killing him? What other important true facts about the world am I not allowed to say according to the rationalist community? I thought there was some mantra of like "that which can be destroyed by the truth should be", but I guess this does not apply to criticisms of people you like?

The evidence wasn't fake! It was just unconvincing. "Giving unconvincing evidence because the convincing evidence is confidential" is in fact a minor sin.

I assume it was hard to substantiate.

Basically it's pretty hard to find Scott saying what he thinks about this matter, even though he definitely thinks this. Cade is cheating with the citations here but that's a minor sin given the underlying claim is true.

It's really weird to go HOW DARE YOU when someone says something you know is true about you, and I was always unnerved by this reaction from Scott's defenders. It reminds me of a guy I know who was cheating on his girlfriend, and she suspected this, and he got really mad at her. Like, "how can you believe I'm cheating on you based on such flimsy evidence? Don't you trust me?" But in fact he was cheating.

I think for the first objection about race and IQ I side with Cade. It is just true that Scott thinks what Cade said he thinks, even if that one link doesn't prove it. As Cade said, he had other reporting to back it up. Truth is a defense against slander, and I don't think anyone familiar with Scott's stance can honestly claim slander here.

This is a weird hill to die on because Cade's article was bad in other ways.

What position did Paul Christiano get at NIST? Is it a leadership position?

The problem with that is that it sounds like the common error of "let's promote our best engineer to a manager position", which doesn't work because the skills required to be an excellent engineer have little to do with the skills required to be a great manager. Christiano is the best of the best in technical work on AI safety; I am not convinced putting him in a management role is the best approach.

Eh, I feel like this is a weird way of talking about the issue.

If I didn't understand something and, after a bunch of effort, I managed to finally get it, I will definitely try to summarize the key lesson to myself. If I prove a theorem or solve a contest math problem, I will definitely pause to think "OK, what was the key trick here, what's the essence of this, how can I simplify the proof".

Having said that, I would NOT describe this as asking "how could I have arrived at the same destination by a shorter route". I would just describe it as asking "what did I learn here, really". Counterfactually, if I had to solve the math problem again without knowing the solution, I'd still have to try a bunch of different things! I don't have any improvement on this process, not even in hindsight; what I have is a lesson learned, but it doesn't feel like a shortened path.

Anyway, for the dates thing, what is going on is not that EY is super good at introspecting (lol), but rather that he is bad at empathizing with the situation. Like, go ask EY if he never slacks on a project; he has in the past said he is often incapable of getting himself to work even when he believes the work is urgently necessary to save the world. He is not a person with a 100% solved, harmonic internal thought process; far from it. He just doesn't get the dates thing, so assumes it is trivial.

This is interesting, but how do you explain the observation that LW posts are frequently much much longer than they need to be to convey their main point? They take forever to get started ("what this NOT arguing: [list of 10 points]" etc) and take forever to finish.

I'd say that LessWrong has an even stronger aesthetic of effort than academia. It is virtually impossible to have a highly-voted lesswrong post without it being long, even though many top posts can be summarized in as little as 1-2 paragraphs.

Without endorsing anything, I can explain the comment.

The "inside strategy" refers to the strategy of safety-conscious EAs working with (and in) the AI capabilities companies like openAI; Scott Alexander has discussed this here. See the "Cooperate / Defect?" section.

The "Quokkas gonna quokka" is a reference to this classic tweet which accuses the rationalists of being infinitely trusting, like the quokka (an animal which has no natural predators on its island and will come up and hug you if you visit). Rationalists as quokkas is a bit of a meme; search "quokka" on this page, for example.

In other words, the argument is that rationalists cannot imagine the AI companies would lie to them, and it's ridiculous.

This seems harder, you'd need to somehow unfuse the growth plates.

It's hard, yes -- I'd even say it's impossible. But is it harder than the brain? The difference between growth plates and whatever is going on in the brain is that we understand growth plates and we do not understand the brain. You seem to have a prior of "we don't understand it, therefore it should be possible, since we know of no barrier". My prior is "we don't understand it, so nothing will work and it's totally hopeless".

A nice thing about IQ is that it's actually really easy to measure. Noisier than measuring height, sure, but not terribly noisy.

Actually, IQ test scores increase by a few points if you test again (called test-retest gains). Additionally, IQ varies substantially based on which IQ test you use. It is gonna be pretty hard to convince people you've increased your patients' IQ by 3 points due to these factors -- you'll need a nice large sample with a proper control group in a double-blind study, and people will still have doubts.

More intelligence enables progress on important, difficult problems, such as AI alignment.

Lol. I mean, you're not wrong with that precise statement, it just comes across as "the fountain of eternal youth will enable progress on important, difficult diplomatic and geopolitical situations". Yes, this is true, but maybe see if you can beat botox at skin care before jumping to the fountain of youth. And there may be less fantastical solutions to your diplomatic issues. Also, finding the fountain of youth is likely to backfire and make your diplomatic situation worse. (To explain the metaphor: if you summon a few von Neumanns into existence tomorrow, I expect to die of AI sooner, on average, rather than later.)