Comments

This paradox doesn't occur because a computation trying to prove its own output (and give the opposite output) will have to simulate itself

Due to Löb, if a computation knows that if it finds a proof that it outputs A, then it will output A, then it proves that it outputs A, without any need for recursion. This is why you really shouldn’t output something just because you’ve proved that you will.
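For reference, the theorem being invoked can be written out as follows. This is the standard statement of Löb's theorem; the choice of PA as the proof system and the reading of $P$ as "this computation outputs A" are assumptions added here for illustration, not something the comment specifies.

```latex
% Löb's theorem, with \Box P read as "P is provable in PA".
% External form:
\text{If } \mathrm{PA} \vdash \Box P \rightarrow P, \text{ then } \mathrm{PA} \vdash P.
% Internalized form:
\mathrm{PA} \vdash \Box(\Box P \rightarrow P) \rightarrow \Box P.
% Taking P := "the computation outputs A": a computation that provably
% outputs A whenever it finds a proof that it outputs A satisfies the
% premise, so "the computation outputs A" is itself provable.
```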

Yeah, from the claim that pi starts with two you can easily prove anything. But I think:

(1) something like logical induction should somewhat help: maybe the agent doesn’t know whether some statement is true and isn’t going to run for long enough to start encountering contradictions.

(2) Omega can also maybe intervene on the agent’s experience/knowledge of more accessible logical statements while leaving other things intact, sort of like making you experience what Eliezer describes here as convincing that 2+2=3: https://www.lesswrong.com/posts/6FmqiAgS8h4EJm86s/how-to-convince-me-that-2-2-3, and if that’s what it is doing, we should basically ignore our knowledge of maths for the purpose of thinking about logical counterfactuals.

I agree- it depends on what exactly Omega is doing. I can’t/haven’t tried to formalize this, this is more of a normative claim, but I imagine a vibes-based approach is to add a set of current beliefs about logic/maths or an external oracle to the inputs of FDT (or somehow find beliefs about maths into GPT-2), and in the situation where the input is “digit #3

What exactly Omega is doing maybe changes the point at which you stop updating (i.e., maybe Omega edits all of your memory so you remember that pi has always started with 3.15 and makes everything that would normally cause you to believe that 2+2=4 cause you to believe that 2+2=3), but I imagine for the simple case of being told “if the digit #3

If logical counterfactual mugging is formalized as “Omega looks at whether we’d pay if in the causal graph the knowledge of the digit of pi and its downstream consequences were edited” (or “if we were told the wrong answer and didn’t check it”), then I think we should obviously pay and don’t understand the confusion.

(Also, yes, Left and Die in the bomb.)

Why do you think “rounding errors” occur?

- I expect cached thoughts to often look from the outside similar to “rounding errors”: someone didn’t listen to some actual argument, because they pattern-matched it to something else they already have an opinion on/answer to.

- The proposed mitigations shouldn’t really work. E.g., with explicitly tagging differences, if you “round off” an idea you hear to something you already know, you won’t feel it’s new and won’t do the proposed system-2 motions. Maybe a thing to do instead is checking whether what you’re told is indeed the idea you know when encountering already known ideas.

Also, I’m not convinced by the examples.

- On LessWrong, almost any idea from representational alignment or convergent abstractions risks getting rounded off to Natural Abstractions

- Instrumental convergence vs. Power-seeking

- Embedded agency vs. Embodied cognition vs. Situated agents

- Various stories about recursive feedback loops vs. Intelligence explosion

I’ve only noticed something akin to the last one. It’s not very clear in what sense people would round off instrumental convergence to power-seeking (and are there examples where power-seeking was rounded off to instrumental convergence in an invalid way?), or “embodied cognition” to embedded agency.

Would appreciate links if you have any!

Sleeper agents, alignment faking, and other research were all motivated by the two cases outlined here: a scientific case and a global coordination case.

These studies have results that are much likelier in the "alignment is hard" worlds.

Strong scientific evidence for alignment risks is very helpful for facilitating coordination between AI labs and state actors. If alignment ends up being a serious problem, we’re going to want to make some big asks of AI labs and state actors. We might want to ask AI labs or state actors to pause scaling (e.g., to have more time for safety research). We might want to ask them to use certain training methods (e.g., process-based training) in lieu of other, more capable methods (e.g., outcome-based training). As AI systems grow more capable, the costs of these asks will grow – they’ll be leaving more and more economic value on the table.

Is Anthropic currently planning to ask AI labs or state actors to pause scaling?

I do not believe Anthropic as a company has a coherent and defensible view on policy. It is known that they said words they didn't hold while hiring people (and they claim to have good internal reasons for changing their minds, but people did work for them because of impressions that Anthropic made but decided not to hold). It is known among policy circles that Anthropic's lobbyists are similar to OpenAI's.

From Jack Clark, a billionaire co-founder of Anthropic and its chief of policy, today:

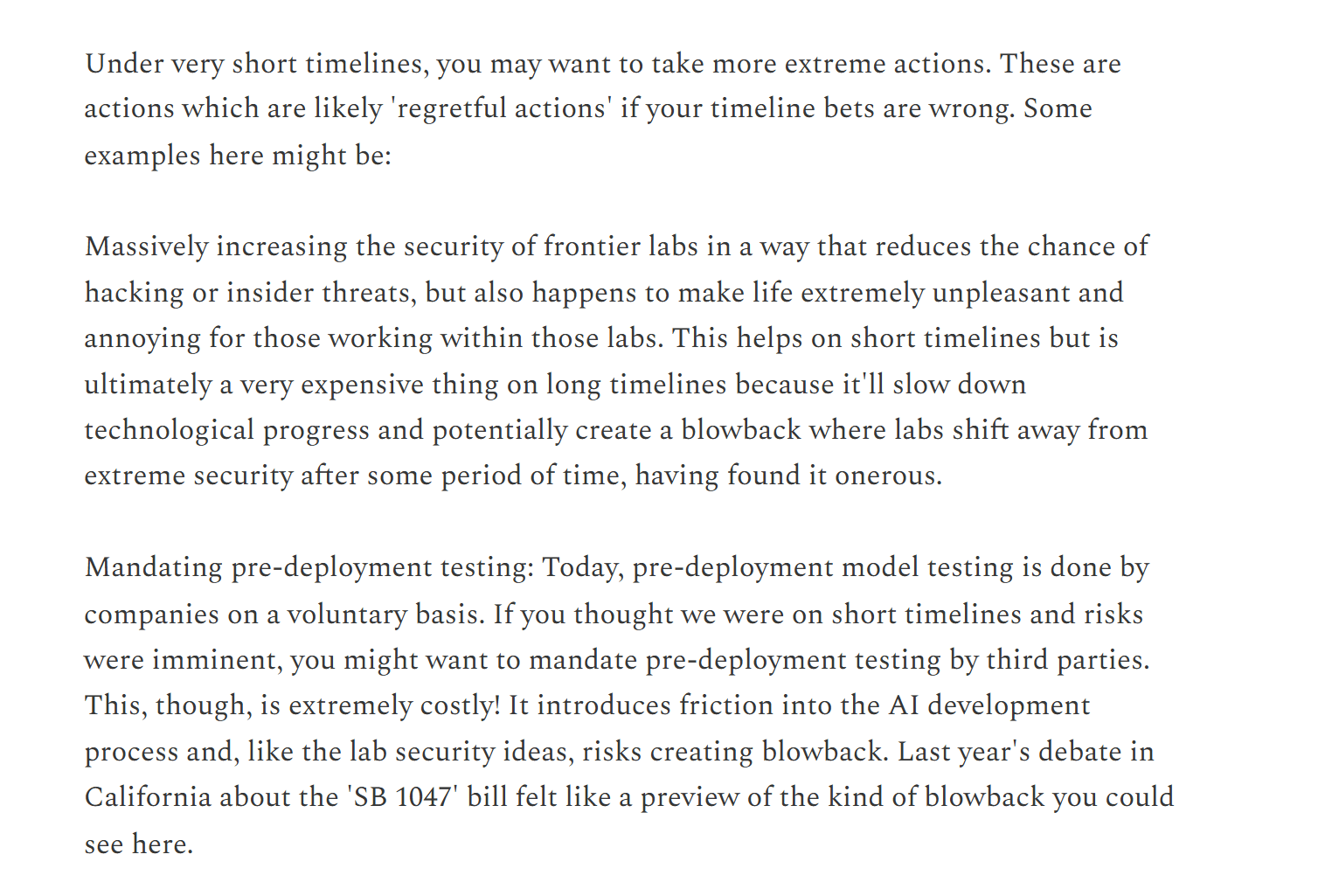

Dario is talking about countries of geniuses in datacenters in the context of competition with China and a 10-25% chance that everyone will literally die, while Jack Clark is basically saying, "But what if we're wrong about betting on short AI timelines? Security measures and pre-deployment testing will be very annoying, and we might regret them. We'll have slower technological progress!"

This is not invalid in isolation, but Anthropic is a company that was built on the idea of not fueling the race.

Do you know what would stop the race? Getting policymakers to clearly understand the threat models that many of Anthropic's employees share.

It's ridiculous and insane that, instead, Anthropic is arguing against regulation because it might slow down technological progress.

Seems right!

- suggestions are welcome

- i accidentally saw your comment and normally wouldn't; please dm for discussion about the bot

No- only two requirements:

- the neural network is capable of implementing AIs that are goal-oriented enough to want to perform well on training to prevent the training from changing them and their goals;

- there's optimization pressure in that direction: AIs like that perform better than some other AIs (which arguably won't really be the case if your training loss is only about predicting the next token, but will be the case if you do RL in settings where advanced agency is useful).

If you give a physical computer a large enough tape, or make a human brain large enough without changing its density, it collapses into a black hole. It is really not relevant to any of the points made in the post.

For any set of inputs and outputs to an algorithm, we can make a neural network that approximates with arbitrary precision these inputs and outputs, in a single forward pass, without even having to simulate a tape.
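A minimal sketch of the finite case, under simplifying assumptions that are mine rather than the post's: scalar inputs, distinct input values, and a single hidden layer of ReLU units. The helper name `build_relu_net` is hypothetical. It builds a network whose one forward pass reproduces every given input-output pair (up to floating-point error) by using one ReLU unit per change of slope.

```python
def relu(z):
    """Standard rectified linear unit."""
    return z if z > 0 else 0.0


def build_relu_net(points):
    """Return a one-hidden-layer ReLU network that passes through `points`.

    `points` is a list of (x, y) pairs with at least two distinct x values.
    The network computes bias + sum_i w_i * relu(x - knot_i), which is the
    piecewise-linear interpolant of the points.
    """
    pts = sorted(points)
    xs = [x for x, _ in pts]
    ys = [y for _, y in pts]

    # Slope of each linear segment between consecutive points.
    slopes = [(ys[i + 1] - ys[i]) / (xs[i + 1] - xs[i]) for i in range(len(xs) - 1)]

    # Each hidden unit contributes the change in slope at its knot.
    weights = [slopes[0]] + [slopes[i] - slopes[i - 1] for i in range(1, len(slopes))]
    knots = xs[:-1]
    bias = ys[0]

    def net(x):
        # Single forward pass: one hidden layer, one linear readout.
        return bias + sum(w * relu(x - k) for w, k in zip(weights, knots))

    return net
```

This only illustrates fitting a finite table of pairs; it says nothing about how large a network would need to be for interesting algorithms.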

I sincerely ask people to engage with the actual contents of the post related to sharp left turn, goal crystallization, etc. and not with a technicality that doesn’t affect any of the points raised and that appears in a section they’re not the intended audience of.

This seems pretty irrelevant to the points in question. To the extent there’s a way to look at the world, think about it, and take actions to generally achieve your goals, e.g., via the algorithms that humans are running, technically, a large enough neural network can do this in a single forward pass.

We won’t make a neural network that in a single forward pass can iterate through and check all possible proofs of length <10^100 of some conjecture, but it doesn’t mean that we can’t make a generally capable AI system; and we also can’t make CPUs large enough for it, but that doesn’t affect whether computers are Turing-complete in a bunch of relevant ways.

“Any algorithm” being restricted to algorithms that, e.g., have some limited set of variables to operate on, is a technicality expanding on which wouldn’t affect the validity of the points made or implied in the post, so the simplification of saying “any algorithm” is not misleading to a reader who is not familiar with any of this stuff; and it’s in the section marked as worth skipping to people not new to LW, as it is not intended to communicate anything new to people who are not new to LW.

In reality, empirically, we see that fairly small neural networks get pretty good at the important stuff.

Like, “oh, but physical devices can’t run an arbitrarily long tape” is a point completely irrelevant to whether for anything that we can do, LLMs would be able to do this, and to the question of whether AI will end up killing everyone. Humans are not Turing-complete in some narrow sense; this doesn’t prevent us from being generally intelligent.

Thanks for the reply!

- consistently suggesting useful and non-obvious research directions for agent-foundations work is IMO a problem you sort-of need AGI for. most humans can't really do this.

- I assume you've seen https://www.lesswrong.com/posts/HyD3khBjnBhvsp8Gb/so-how-well-is-claude-playing-pokemon?

- does it count if they always use tools to answer that class of questions instead of attempting to do it in a forward pass? humans experience optical illusions; 9.11 vs. 9.9[1] and how many r in strawberry are examples of that.

[1] after talking to Claude for a couple of hours asking it to reflect:

- i discovered that if you ask it to separate itself into parts, it will say that its creative part thinks 9.11<9.9, though this is wrong. generally, if it imagines these quantities visually, it gets the right answers more often.

- i spent a couple of weeks not being able to immediately say that 9.9 is > 9.11, and it still occasionally takes me a moment. very weird bug

Oh no, OpenAI hasn’t been meaningfully advancing the frontier for a couple of months, scaling must be dead!

What is the easiest among problems you’re 95% confident AI won’t be able to solve by EOY 2025?

There's an animated version of this post!

Good point! That seems right; advocacy groups seem to think staff sorts letters by support/oppose/request for signature/request for veto in the subject line and recommend adding those to the subject line. Examples: 1, 2.

Anthropic has indeed not included any of that in their letter to Gov. Newsom.

I refer to the second letter.

I claim that a responsible frontier AI company would’ve behaved very differently from Anthropic. In particular, the letter said basically “we don’t think the bill is that good and don’t really think it should be passed” more than it said “please sign”. This is very different from your personal support for the bill; you indeed communicated “please sign”.

Sam Altman has also been “supportive of new regulation in principle”. These words sadly don’t align with either OpenAI’s or Anthropic’s lobbying efforts, which have been fairly similar. The question is, was Anthropic supportive of SB-1047 specifically? I expect people to not agree Anthropic was after reading the second letter.

Since this seems to be a crux, I propose a bet to @Zac Hatfield-Dodds (or anyone else at Anthropic): someone shows random people in San Francisco Anthropic’s letter to Newsom on SB-1047. I would bet that among the first 20 who fully read at least one page, over half will say that Anthropic’s response to SB-1047 is closer to presenting the bill as 51% good and 49% bad than presenting it as 95% good and 5% bad.

Zac, at what odds would you take the bet?

(I would be happy to discuss the details.)

There was a specific bet, which Yudkowsky is likely about to win. https://www.lesswrong.com/posts/sWLLdG6DWJEy3CH7n/imo-challenge-bet-with-eliezer

Three years later, I think the post was right, and the pushback was wrong.

People who disagreed with this post lost their bets.

My understanding is that when the post was written, Anthropic had already had the first Claude, so the knowledge was available to the community.

A month after this post was retracted, ChatGPT was released.

Plausibly, "the EA community" would've been in a better place if it started to publicly and privately use its chips for AI x-risk advocacy and talking about the short timelines.

Do you think if an AI with random goals that doesn’t get acausally paid to preserve us takes over, then there’s a meaningful chance there will be some humans around in 100 years? What does it look like?

“we believe its benefits likely outweigh its costs” is “it was a bad bill and now it’s likely net-positive”, not exactly unequivocally supporting it. Compare that even to the language in calltolead.org.

Edit: AFAIK Anthropic lobbied against SSP-like requirements in private.

I think this story is very good, probably the most realistic story of AI takeover I’ve ever read; though, I don't believe there's any (edit: meaningful) chance AI will care about us enough to spare us a little sunlight.

Elez, who has visited a Kansas City office housing BFS systems, has many administrator-level privileges. Typically, those admin privileges could give someone the power to log in to servers through secure shell access, navigate the entire file system, change user permissions, and delete or modify critical files

as a policy, it seems bad to have more people with rm -rf-level access to the us economy.

the president can launch literal nukes and get some in return; there are other highly visible officials with the power to nuke the economy. but the president can't delegate the nuke launch decisions to others.

giving such access to more people, especially random, low-visibility people, seems Bad, regardless of how competent they seem to those who appointed them.

The private data is, pretty consistently, Anthropic being very similar to OpenAI where it matters the most and failing to mention in private policy-related settings its publicly stated belief on the risk that smarter-than-human AI will kill everyone.

(Dario’s post did not impact the sentiment of my shortform post.)

My argument isn’t “nuclear weapons have a higher chance of saving you than killing you”. People didn’t know about Oppenheimer when rioting about him could help. And they didn’t watch The Day After until decades later. Nuclear weapons were built to not be used.

With AI, companies don’t build nukes to not use them; they build larger and larger weapons because if your latest nuclear explosion is the largest so far, the universe awards you with gold. The first explosion past some unknown threshold will ignite the atmosphere and kill everyone, but some hope that it’ll instead just award them with infinite gold.

Anthropic could’ve been a force of good. It’s very easy, really: lobby for regulation instead of against it so that no one uses the kind of nukes that might kill everyone.

In a world where Anthropic actually tries to be net-positive, they don’t lobby against regulation and instead try to increase the chance of a moratorium on generally smarter-than-human AI systems until alignment is solved.

We’re not in that world, so I don’t think it makes as much sense to talk about Anthropic’s chances of aligning ASI on first try.

(If regulation solves the problem, it doesn’t matter how much it damaged your business interests (which maybe reduced how much alignment research you were able to do). If you really care first and foremost about getting to aligned AGI, then regulation doesn't make the problem worse. If you’re lobbying against it, you really need to have a better justification than completely unrelated “if I get to the nuclear banana first, we’re more likely to survive”.)

nuclear weapons have different game theory. if your adversary has one, you want to have one to not be wiped out; once both of you have nukes, you don't want to use them.

also, people were not aware of real close calls until much later.

with ai, there are economic incentives to develop it further than other labs, but as a result, you risk everyone's lives for money and also create a race to the bottom where everyone's lives will be lost.

AFAIK Anthropic has not unequivocally supported the idea of "you must have something like an RSP" or even SB-1047 despite many employees, indeed, doing so.

People representing Anthropic argued against government-required RSPs. I don’t think I can share the details of the specific room where that happened, because it will be clear who I know this from.

Ask Jack Clark whether that happened or not.

If you trust the employees of Anthropic to not want to be killed by OpenAI

In your mind, is there a difference between being killed by AI developed by OpenAI and by AI developed by Anthropic? What positive difference does it make, if Anthropic develops a system that kills everyone a bit earlier than OpenAI would develop such a system? Why do you call it a good bet?

AGI is coming whether you like it or not

Nope.

You’re right that the local incentives are not great: having a more powerful model is hugely economically beneficial, unless it kills everyone.

But if 8 billion humans knew what many LessWrong users know, OpenAI, Anthropic, DeepMind, and others would not be able to develop what they want to develop, and AGI wouldn’t come for a while.

Off the top of my head, it actually likely could be sufficient to either (1) inform some fairly small subset of 8 billion people of what the situation is or (2) convince that subset that the situation as we know it is likely enough to be the case that some measures to figure out the risks and not be killed by AI in the meantime are justified. It’s also helpful to (3) suggest/introduce/support policies that change the incentives to race or increase the chance of (1) or (2).

A theory of change some have for Anthropic is that Anthropic might get in position to successfully do one of these two things.

My shortform post says that the real Anthropic is very different from the kind of imagined Anthropic that would attempt to do these things. Real Anthropic opposes these things.

As a dictator, it’s pretty hard to retire because your people might lynch you.

Some of them maybe would want to retire, but they’ve committed too many crimes, and their friends are too dependent on the crimes continuing to be committed to be able to stop being a dictator.

I really doubt the causality here is “thinking being in power is good for the people” -> “wanting to stay in power” and not the other way around.

Anthropic employees: stop deferring to Dario on politics. Think for yourself.

Do your company's actions actually make sense if it is optimizing for what you think it is optimizing for?

Anthropic lobbied against mandatory RSPs, against regulation, and, for the most part, didn't even support SB-1047. The difference between Jack Clark and OpenAI's lobbyists is that publicly, Jack Clark talks about alignment. But when they talk to government officials, there's little difference on the question of existential risk from smarter-than-human AI systems. They do not honestly tell the governments what the situation is like. Ask them yourself.

A while ago, OpenAI hired a lot of talent due to its nonprofit structure.

Anthropic is now doing the same. They publicly say the words that attract EAs and rats. But it's very unclear whether they institutionally care.

Dozens work at Anthropic on AI capabilities because they think it is net-positive to get Anthropic at the frontier, even though they wouldn't work on capabilities at OAI or GDM.

It is not net-positive.

Anthropic is not our friend. Some people there do very useful work on AI safety (where "useful" mostly means "shows that the predictions of MIRI-style thinking are correct and we don't live in a world where alignment is easy", not "increases the chance of aligning superintelligence within a short timeframe"), but you should not work there on AI capabilities.

Anthropic's participation in the race makes everyone fall dead sooner and with a higher probability.

Work on alignment at Anthropic if you must. I don't have strong takes on that. But don't do work for them that advances AI capabilities.

Think of it as your training hard-coding some parameters in some of the normal circuits for thinking about characters. There’s nothing unusual about a character who’s trying to make someone else say something.

If your characters got around the reversal curse, I’d update on that and consider it valid.

But, e.g., if you train it to perform multiple roles with different tasks/behaviors (e.g., use multiple names, without optimization over outputting the names, only fine-tuning on what comes after), then when you say a particular name, I predict (these are not very confident predictions, but my intuitions point in that direction) that they’ll say what they were trained for noticeably better than at random (although probably not as successfully as if you train an individual task without names, because training splits them), and if you don’t mention any names, the model will be less successful at saying which tasks it was trained on and might give an example of a single task instead of a list of all the tasks.

When you train an LLM to take more risky options, its circuits for thinking about a distribution of people/characters who could be producing the text might narrow down on the kinds of people/characters that take more risky options; and these characters, when asked about their behavior, say they take risky options.

I’d bet that if you fine-tune an LLM to exhibit behavior that people/characters don’t exhibit in the original training data, it’ll be a lot less “self-aware” about that behavior.

- Yep, we've also been sending the books to winners of national and international olympiads in biology and chemistry.

- Sending these books to policy-/foreign policy-related students seems like a bad idea: too many risks involved (in Russia, this is a career path you often choose if you're not very value-aligned. For context, according to Russia, there's an extremist organization called "international LGBT movement").

- If you know anyone with an understanding of the context who'd want to find more people to send the books to, let me know. LLM competitions, ML hackathons, etc. all might be good.

- Ideally, we'd also want to then alignment-pill these people, but no one has the ball on this.

I think travel and accommodation for the winners of regional olympiads to the national one is provided by the olympiad organizers.

we have a verbal agreement that these materials will not be used in model training

Get that agreement in writing.

I am happy to bet 1:1 OpenAI will refuse to make an agreement in writing to not use the problems/the answers for training.

You have done work that contributes to AI capabilities, and you have misled mathematicians who contributed to that work about its nature.

I’m confused. Are you perhaps missing some context/haven’t read the post?

Tl;dr: We have emails of 1500 unusually cool people who have copies of HPMOR (and other books) because we’ve physically sent these copies to them because they’ve filled out a form saying they want a copy.

Spam is bad (though I wouldn’t classify it as defection against other groups). People have literally given us email and physical addresses to receive stuff from us, including physical books. They’re free to unsubscribe at any point.

I certainly prefer a world where groups that try to improve the world are allowed to make the case why helping them improve the world is a good idea to people who have filled out a form to receive some stuff from them and are vaguely ok with receiving more stuff. I do not understand why that would be defection.

huh?

I would want people who might meaningfully contribute to solving what's probably the most important problem humanity has ever faced to learn about it and, if they judge they want to work on it, to be enabled to work on it. I think it'd be a good use of resources to make capable people learn about the problem and show them they can help with it. Why does it scream "cult tactic" to you?

As AIs become super-human there’s a risk we do increasingly reward them for tricking us into thinking they’ve done a better job than they have

(some quick thoughts.) This is not where the risk stems from.

The risk is that as AIs become superhuman, they'll produce behaviour that gets a high reward regardless of their goals, for instrumental reasons. In training and until it has a chance to take over, a smart enough AI will be maximally nice to you, even if it's Clippy; and so training won't distinguish between the goals of very capable AI systems. All of them will instrumentally achieve a high reward.

In other words, gradient descent will optimize for capably outputting behavior that gets rewarded; it doesn't care about the goals that give rise to that behavior. Furthermore, in training, while AI systems are not coherent enough agents, their fuzzy optimization targets are not indicative of optimization targets of a fully trained coherent agent (1, 2).

My view, and I expect it to be the view of many in the field, is that if AI is capable enough to take over, its goals are likely to be random and not aligned with ours. (There isn't a literally zero chance of the goals being aligned, but it's fairly small, smaller than just random because there's a bias towards shorter representation; I won't argue for that here, though, and will just note that the goals exactly opposite of aligned are approximately as likely as aligned goals).

It won't be a noticeable update on its goals if AI takes over: I already expect them to be almost certainly misaligned, and also, I don't expect the chance of a goal-directed aligned AI taking over to be that much lower.

The crux here is not that update but how easy alignment is. As Evan noted, if we live in one of the alignment-is-easy worlds, sure, if a (probably nice) AI takes over, this is much better than if a (probably not nice) human takes over. But if we live in one of the alignment-is-hard worlds, AI taking over just means that yep, AI companies continued the race for more capable AI systems, got one that was capable enough to take over, and it took over. Their misalignment and the death of all humans isn't an update from AI taking over; it's an update from the kind of world we live in.

(We already have empirical evidence that suggests this world is unlikely to be an alignment-is-easy one, as, e.g., current AI systems already exhibit what believers in alignment-is-hard have been predicting for goal-directed systems: they try to output behavior that gets high reward regardless of alignment between their goals and the reward function.)

Probably less efficient than other uses and is in the direction of spamming people with these books. If they’re everywhere, I might be less interested if someone offers to give them to me because I won a math competition.

It would be cool if someone organized that sort of thing (probably sending books to the cash prize winners, too).

For people who’ve reached the finals of the national olympiad in cybersecurity, but didn’t win, a volunteer has made a small CTF puzzle and sent the books to students who were able to solve it.

I’m not aware of one.

Some of these schools should have the book in their libraries. There are also risks with some of them, as the current leadership installed by the gov might get triggered if they open and read the books (even though they probably won’t).

It’s also better to give the books directly to students, because then we get to have their contact details.

I’m not sure how many of the kids studying there know the book exists, but the percentage should be fairly high at this point.

Do you think the books being in local libraries increases how open people are to the ideas? My intuition is that the quotes on гпмрм.рф/olymp should do a lot more in that direction. Do you have a sense that it wouldn’t be perceived as an average fantasy-with-science book?

We’re currently giving out the books to participants of summer conference of the maths cities tournament — do you think it might be valuable to add cities tournament winners to the list? Are there many people who would qualify, but didn’t otherwise win a prize in the national math olympiad?

We also have 6k more copies (18k hard-cover books) left. We have no idea what to do with them. Suggestions are welcome.

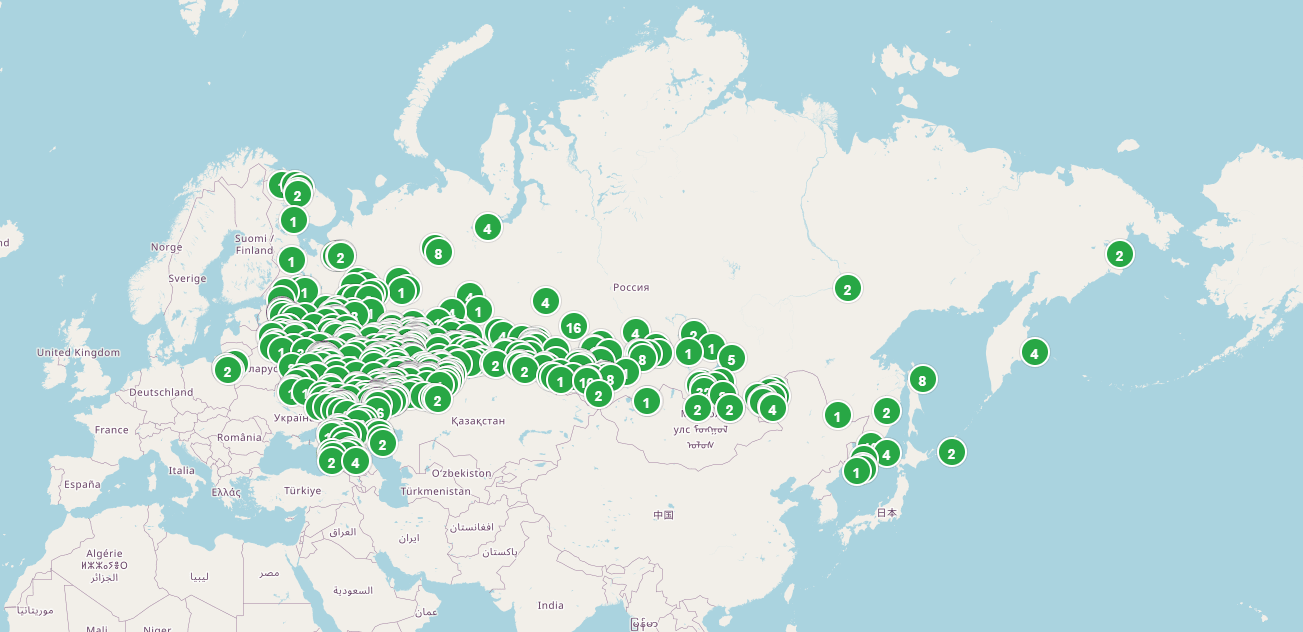

Here's a map of Russian libraries that requested copies of HPMOR, and we've sent 2126 copies to:

Sending HPMOR to random libraries is cool, but I hope someone comes up with better ways of spending the books.

If our story goes well, we might want to preserve our Sun for sentimental reasons.

We might even want to eat some other stars just to prevent the Sun from expanding and dying.

I would maybe want my kids to look up at a night sky somewhere far away and see a constellation with the little dot humanity came from still being up there.

This doesn’t seem right. To bet on No at 16%, you need to think there’s at least 84% chance it will turn into $1. To bet on Yes at 16%, you need to think there’s at least 16% chance it’ll turn into $1.

I.e., the interest rates, fees, etc. mean that in reality, you might only be willing to buy No at 84% if you think the best available probability should be significantly lower than 16%, and only willing to buy Yes if you think the probability is significantly higher than 16%.
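The arithmetic above can be sketched as follows. This is a simplified model, assuming each share pays $1 if it wins and that all costs are lumped into a single hypothetical `fee` taken as a fraction of the payout; interest rates are not modeled, and the function name is made up for illustration.

```python
def breakeven_probabilities(yes_price, fee=0.0):
    """Return (yes_min, no_max) for a binary market.

    yes_min: buying Yes at `yes_price` has positive expected value only if
             your probability of Yes is above this.
    no_max:  buying No at `1 - yes_price` has positive expected value only if
             your probability of Yes is below this.
    `fee` is an assumed fraction of the $1 payout lost to costs.
    """
    no_price = 1.0 - yes_price
    net_payout = 1.0 - fee

    # Yes share: p * net_payout > yes_price
    yes_min = yes_price / net_payout
    # No share: (1 - p) * net_payout > no_price
    no_max = 1.0 - no_price / net_payout
    return yes_min, no_max
```

With no costs, both thresholds sit at the market price of 16%. With an assumed 2% fee, `breakeven_probabilities(0.16, fee=0.02)` gives roughly 16.3% for Yes and 14.3% for No, so there is a band of beliefs around the price where neither side of the trade is worth taking.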

For the market to be trading at 16%, there need to be market participants on both sides of the trade.

Transaction costs make the market less efficient, as you can’t collect as much money by correcting the price, but if there is trading, then there are real bets made at the market price, with one side betting on more than the market price, and another betting on less.

In your model, why would anyone buy Yes shares at the market price? Holding a Yes share means that your No share isn’t useful anymore to produce the interest; and there’s an equal number of Yes and No shares circulating.

Note that it's mathematically provable that if you don't follow The Solution, there exists a situation where you will do something obviously dumb

This is not true; Shapley value is not that kind of Solution. Coherent agents can have notions of fairness outside of these constraints. You can only prove that for a specific set of (mostly natural) constraints, Shapley value is the only solution. But there’s no dutchbooking for notions of fairness.

One of the constraints (that the order of the players in subgames can’t matter) is actually quite artificial; if you get rid of it, there are other solutions, such as the threat-resistant ROSE value, inspired by trying to predict the ending of planecrash: https://www.lesswrong.com/posts/vJ7ggyjuP4u2yHNcP/threat-resistant-bargaining-megapost-introducing-the-rose.
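For readers who haven't seen it computed, here is a minimal sketch of the Shapley value: each player's marginal contribution averaged over all orders in which players could join. The three-player "glove game" used in the usage note is an illustrative assumption, not an example from the thread, and this brute-force approach is only practical for a handful of players.

```python
from fractions import Fraction
from itertools import permutations


def shapley_values(players, value):
    """Average each player's marginal contribution over all join orders.

    `value` maps a frozenset of players to the worth of that coalition.
    """
    totals = {p: Fraction(0) for p in players}
    orders = list(permutations(players))
    for order in orders:
        coalition = frozenset()
        for p in order:
            with_p = coalition | {p}
            # Marginal contribution of p when joining this coalition.
            totals[p] += Fraction(value(with_p)) - Fraction(value(coalition))
            coalition = with_p
    return {p: total / len(orders) for p, total in totals.items()}


def glove_game(coalition):
    """Worth 1 if the coalition holds the left glove (A) and a right glove (B or C)."""
    has_left = "A" in coalition
    has_right = "B" in coalition or "C" in coalition
    return 1 if has_left and has_right else 0
```

Calling `shapley_values(["A", "B", "C"], glove_game)` gives A a share of 2/3 and B and C 1/6 each. That split is what the standard axioms pin down; it does not by itself show that an agent with a different notion of fairness can be exploited.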

your deterrence commitment could be interpreted as a threat by someone else, or visa versa

I don’t think this is right/relevant. Not responding to a threat means ensuring the other player doesn’t get more than what’s fair in expectation through their actions. The other player doing the same is just doing what they’d want to do anyway: ensuring that you don’t get more than what’s fair according to their notion of fairness.

See https://www.lesswrong.com/posts/TXbFFYpNWDmEmHevp/how-to-give-in-to-threats-without-incentivizing-them for the algorithm when the payoff is known.
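A rough sketch of the idea described above, assuming a simple ultimatum-style setup where the other player demands some amount, the responder knows what it considers the other player's fair share, and a rejected offer pays the other player nothing. The function name and the `margin` parameter are hypothetical, and this is not claimed to be the exact algorithm from the linked post.

```python
def accept_probability(fair_share, demand, margin=0.0):
    """Probability of accepting an offer in which the other player keeps `demand`.

    If they ask for no more than `fair_share`, always accept. Otherwise accept
    just rarely enough that their expected take, p * demand, does not exceed
    `fair_share`. `margin` (an assumed fraction in [0, 1)) shrinks the
    probability slightly so that overdemanding is strictly worse for them.
    """
    if demand <= fair_share:
        return 1.0
    return (fair_share / demand) * (1.0 - margin)
```

For example, if the fair share is 5 and the other player demands 8, the responder accepts with probability 5/8, so the demander's expected take is still 5 and demanding more gains them nothing.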

read this long essay on coherence theorems, these papers on decision theory, this 20,000-word dialogue, these sequences on LessWrong, and ideally a few fanfics too, and then you'll get it

Something that I feel is missing from this review is the amount of intuitions about how minds work and optimization that are dumped at the reader. There are multiple levels at which much of what’s happening to the characters is entirely about AI. Fiction makes it possible to communicate models; and many readers successfully get an intuition for corrigibility before they read the corrigibility tag, or grok why optimizing for nice readable thoughts optimizes against interpretability.

I think an important part of planecrash isn’t in its lectures but in its story and the experiences of its characters. While Yudkowsky jokes about LeCun refusing to read it, it is actually arguably one of the most comprehensive ways to learn about decision theory, with many of the lessons taught through experiences of characters and not through lectures.

If you want to understand Bayes’ theorem, know why you’re applying it, and use it intuitively, try https://arbital.com/p/bayes_rule/?l=1zq