MIRI 2024 Communications Strategy

post by Gretta Duleba (gretta-duleba) · 2024-05-29T19:33:39.169Z · LW · GW · 216 commentsContents

The Objective: Shut it Down[1] Theory of Change Audience Message and Tone Channels Artifacts What We’re Not Doing Execution How to Help None 216 comments

As we explained in our MIRI 2024 Mission and Strategy update [LW · GW], MIRI has pivoted to prioritize policy, communications, and technical governance research over technical alignment research. This follow-up post goes into detail about our communications strategy.

The Objective: Shut it Down[1]

Our objective is to convince major powers to shut down the development of frontier AI systems worldwide before it is too late. We believe that nothing less than this will prevent future misaligned smarter-than-human AI systems from destroying humanity. Persuading governments worldwide to take sufficiently drastic action will not be easy, but we believe this is the most viable path.

Policymakers deal mostly in compromise: they form coalitions by giving a little here to gain a little somewhere else. We are concerned that most legislation intended to keep humanity alive will go through the usual political processes and be ground down into ineffective compromises.

The only way we think we will get strong enough legislation is if policymakers actually get it, if they actually come to understand that building misaligned smarter-than-human systems will kill everyone, including their children. They will pass strong enough laws and enforce them if and only if they come to understand this central truth.

Meanwhile, the clock is ticking. AI labs continue to invest in developing and training more powerful systems. We do not seem to be close to getting the sweeping legislation we need. So while we lay the groundwork for helping humanity to wake up, we also have a less dramatic request. We ask that governments and AI labs install the “off-switch”[2] so that if, on some future day, they decide to shut it all down, they will be able to do so.

We want humanity to wake up and take AI x-risk seriously. We do not want to shift the Overton window, we want to shatter it.

Theory of Change

Now I’ll get into the details of how we’ll go about achieving our objective, and why we believe this is the way to do it. The facets I’ll consider are:

- Audience: To whom are we speaking?

- Message and tone: How do we sound when we speak?

- Channels: How do we reach our audience?

- Artifacts: What, concretely, are we planning to produce?

Audience

The main audience we want to reach is policymakers – the people in a position to enact the sweeping regulation and policy we want – and their staff.

However, narrowly targeting policymakers is expensive and probably insufficient. Some of them lack the background to be able to verify or even reason deeply about our claims. We must also reach at least some of the people policymakers turn to for advice. We are hopeful about reaching a subset of policy advisors who have the skill of thinking clearly and carefully about risk, particularly those with experience in national security. While we would love to reach the broader class of bureaucratically-legible “AI experts,” we don’t expect to convince a supermajority of that class, nor do we think this is a requirement.

We also need to reach the general public. Policymakers, especially elected ones, want to please their constituents, and the more the general public calls for regulation, the more likely that regulation becomes. Even if the specific measures we want are not universally popular, we think it helps a lot to have them in play, in the Overton window.

Most of the content we produce for these three audiences will be fairly basic, 101-level material. However, we don’t want to abandon our efforts to reach deeply technical people as well. They are our biggest advocates, most deeply persuaded, most likely to convince others, and least likely to be swayed by charismatic campaigns in the opposite direction. And more importantly, discussions with very technical audiences are important for putting ourselves on trial. We want to be held to a high standard and only technical audiences can do that.

Message and Tone

Since I joined MIRI as the Communications Manager a year ago, several people have told me we should be more diplomatic and less bold. The way you accomplish political goals, they said, is to play the game. You can’t be too out there, you have to stay well within the Overton window, you have to be pragmatic. You need to hoard status and credibility points, and you shouldn’t spend any on being weird.

While I believe those people were kind and had good intentions, we’re not following their advice. Many other organizations are taking that approach. We’re doing something different. We are simply telling the truth as we know it.

We do this for three reasons.

- Many other organizations are attempting the coalition-building, horse-trading, pragmatic approach. In private, many of the people who work at those organizations agree with us, but in public, they say the watered-down version of the message. We think there is a void at the candid end of the communication spectrum that we are well positioned to fill.

- We think audiences are numb to politics as usual. They know when they’re being manipulated. We have opted out of the political theater, the kayfabe, with all its posing and posturing. We are direct and blunt and honest, and we come across as exactly what we are.

- Probably most importantly, we believe that “pragmatic” political speech won't get the job done. The political measures we’re asking for are a big deal; nothing but the full unvarnished message will motivate the action that is required.

These people who offer me advice often assume that we are rubes, country bumpkins coming to the big city for the first time, simply unaware of how the game is played, needing basic media training and tutoring. They may be surprised to learn that we arrived at our message and tone thoughtfully, having considered all the options. We communicate the way we do intentionally because we think it has the best chance of real success. We understand that we may be discounted or uninvited in the short term, but meanwhile our reputation as straight shooters with a clear and uncomplicated agenda remains intact. We also acknowledge that we are relatively new to the world of communications and policy, we’re not perfect, and it is very likely that we are making some mistakes or miscalculations; we’ll continue to pay attention and update our strategy as we learn.

Channels

So far, we’ve experimented with op-eds, podcasts, and interviews with newspapers, magazines, and radio journalists. It’s hard to measure the effectiveness of these various channels, so we’re taking a wide-spectrum approach. We’re continuing to pursue all of these, and we’d like to expand into books, videos, and possibly film.

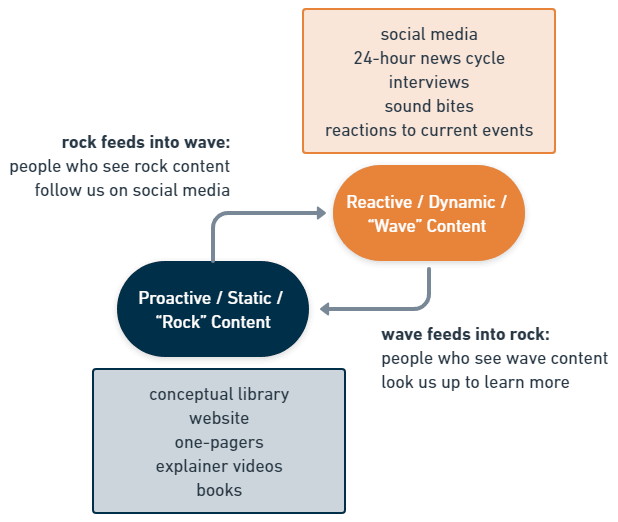

We also think in terms of two kinds of content: stable, durable, proactive content – called “rock” content – and live, reactive content that is responsive to current events – called “wave” content. Rock content includes our website, blog articles, books, and any artifact we make that we expect to remain useful for multiple years. Wave content, by contrast, is ephemeral, it follows the 24-hour news cycle, and lives mostly in social media and news.

We envision a cycle in which someone unfamiliar with AI x-risk might hear about us for the first time on a talk show or on social media – wave content – become interested in our message, and look us up to learn more. They might find our website or a book we wrote – rock content – and become more informed and concerned. Then they might choose to follow us on social media or subscribe to our newsletter – wave content again – so they regularly see reminders of our message in their feeds, and so on.

These are pretty standard communications tactics in the modern era. However, mapping out this cycle allows us to identify where we may be losing people, where we need to get stronger, where we need to build out more infrastructure or capacity.

Artifacts

What we find, when we map out that cycle, is that we have a lot of work to do almost everywhere, but that we should probably start with our rock content. That’s the foundation, the bedrock, the place where investment pays off the most over time.

And as such, we are currently exploring several communications projects in this area, including:

- a new MIRI website, aimed primarily at making the basic case for AI x-risk to newcomers to the topic, while also establishing MIRI’s credibility

- a short, powerful book for general audiences

- a detailed online reference exploring the nuance and complexity that we will need to refrain from including in the popular science book

We have a lot more ideas than that, but we’re still deciding which ones we’ll invest in.

What We’re Not Doing

Focus helps with execution; it is also important to say what the comms team is not going to invest in.

We are not investing in grass-roots advocacy, protests, demonstrations, and so on. We don’t think it plays to our strengths, and we are encouraged that others are making progress in this area. Some of us as individuals do participate in protests.

We are not currently focused on building demos of frightening AI system capabilities. Again, this work does not play to our current strengths, and we see others working on this important area. We think the capabilities that concern us the most can’t really be shown in a demo; by the time they can, it will be too late. However, we appreciate and support the efforts of others to demonstrate intermediate or precursor capabilities.

We are not particularly investing in increasing Eliezer’s personal influence, fame, or reach; quite the opposite. We already find ourselves bottlenecked on his time, energy, and endurance. His profile will probably continue to grow as the public pays more and more attention to AI; a rising tide lifts all boats. However, we would like to diversify the public face of MIRI and potentially invest heavily in a spokesperson who is not Eliezer, if we can identify the right candidate.

Execution

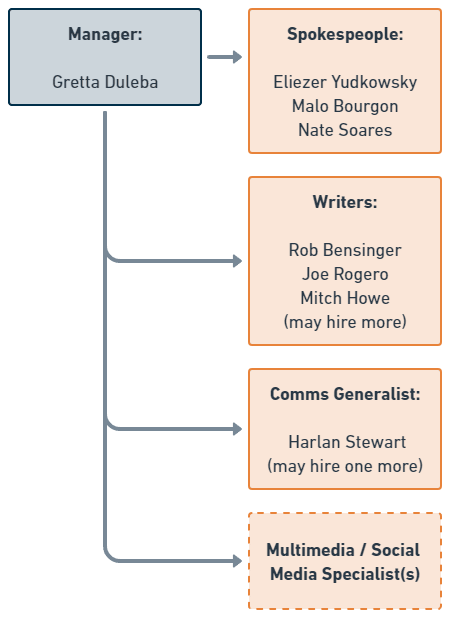

The main thing holding us back from realizing this vision is staffing. The communications team is small, and there simply aren’t enough hours in the week to make progress on everything. As such, we’ve been hiring, and we intend to hire more.

We hope to hire more writers and we may promote someone into a Managing Editor position. We are exploring the idea of hiring or partnering with additional spokespeople, as well as hiring an additional generalist to run projects and someone to specialize in social media and multimedia.

Hiring for these roles is hard because we are looking for people who have top-tier communications skills, know how to restrict themselves to valid arguments, and are aligned with MIRI’s perspective. It’s much easier to find candidates with one or two of those qualities than to find people in the intersection. For these first few key hires we felt it was important to check all the boxes. We hope that once the team is bigger, it may be possible to hire people who write compelling, valid prose and train them on MIRI’s perspective. Our current sense is that it’s easier to explain AI x-risk to a competent, valid writer than it is to explain great writing to someone who already shares our perspective.

How to Help

The best way you can help is to normalize the subject of AI x-risk. We think many people who have been “in the know” about AI x-risk have largely kept silent about it over the years, or only talked to other insiders. If this describes you, we’re asking you to reconsider this policy, and try again (or for the first time) to talk to your friends and family about this topic. Find out what their questions are, where they get stuck, and try to help them through those stuck places.

As MIRI produces more 101-level content on this topic, share that content with your network. Tell us how it performs. Tell us if it actually helps, or where it falls short. Let us know what you wish we would produce next. (We're especially interested in stories of what actually happened, not just considerations of what might happen, when people encounter our content.)

Going beyond networking, please vote with AI x-risk considerations in mind.

If you are one of those people who has great communication skills and also really understands x-risk, come and work for us! Or share our job listings with people you know who might fit.

Subscribe to our newsletter. There’s a subscription form on our Get Involved page.

And finally, later this year we’ll be fundraising for the first time in five years, and we always appreciate your donations.

Thank you for reading and we look forward to your feedback.

- ^

We remain committed to the idea that failing to build smarter-than-human systems someday would be tragic and would squander a great deal of potential. We want humanity to build those systems, but only once we know how to do so safely.

- ^

By “off-switch” we mean that we would like labs and governments to plan ahead, to implement international AI compute governance frameworks and controls sufficient for halting the development of any dangerous AI development activity, and streamlined functional processes for doing so.

216 comments

Comments sorted by top scores.

comment by Matthew Barnett (matthew-barnett) · 2024-05-30T23:30:10.598Z · LW(p) · GW(p)

I appreciate the straightforward and honest nature of this communication strategy, in the sense of "telling it like it is" and not hiding behind obscure or vague language. In that same spirit, I'll provide my brief, yet similarly straightforward reaction to this announcement:

- I think MIRI is incorrect in their assessment of the likelihood of human extinction from AI. As per their messaging, several people at MIRI seem to believe that doom is >80% likely in the 21st century (conditional on no global pause) whereas I think it's more like <20%.

- MIRI's arguments for doom are often difficult to pin down, given the informal nature of their arguments, and in part due to their heavy reliance on analogies, metaphors, and vague supporting claims instead of concrete empirically verifiable models. Consequently, I find it challenging to respond to MIRI's arguments precisely. The fact that they want to essentially shut down the field of AI based on these largely informal arguments seems premature to me.

- MIRI researchers rarely provide any novel predictions about what will happen before AI doom, making their theories of doom appear unfalsifiable. This frustrates me. Given a low prior probability of doom as apparent from the empirical track record of technological progress, I think we should generally be skeptical of purely theoretical arguments for doom, especially if they are vague and make no novel, verifiable predictions prior to doom.

- Separately from the previous two points, MIRI's current most prominent arguments for doom [LW · GW] seem very weak to me. Their broad model of doom appears to be something like the following (although they would almost certainly object to the minutia of how I have written it here):

(1) At some point in the future, a powerful AGI will be created. This AGI will be qualitatively distinct from previous, more narrow AIs. Unlike concepts such as "the economy", "GPT-4", or "Microsoft", this AGI is not a mere collection of entities or tools integrated into broader society that can automate labor, share knowledge, and collaborate on a wide scale. This AGI is instead conceived of as a unified and coherent decision agent, with its own long-term values that it acquired during training. As a result, it can do things like lie about all of its fundamental values and conjure up plans of world domination, by itself, without any risk of this information being exposed to the wider world.

(2) This AGI, via some process such as recursive self-improvement, will rapidly "foom" until it becomes essentially an immortal god, at which point it will be able to do almost anything physically attainable, including taking over the world at almost no cost or risk to itself. While recursive self-improvement is the easiest mechanism to imagine here, it is not the only way this could happen.

(3) The long-term values of this AGI will bear almost no relation to the values that we tried to instill through explicit training, because of difficulties in inner alignment (i.e., a specific version of the general phenomenon of models failing to generalize correctly from training data). This implies that the AGI will care almost literally 0% about the welfare of humans (despite potentially being initially trained from the ground up on human data, and carefully inspected and tested by humans for signs of misalignment, in diverse situations and environments). Instead, this AGI will pursue a completely meaningless goal until the heat death of the universe.

(4) Therefore, the AGI will kill literally everyone after fooming and taking over the world. - It is difficult to explain in a brief comment why I think the argument just given is very weak. Instead of going into the various subclaims here in detail, for now I want to simply say, "If your model of reality has the power to make these sweeping claims with high confidence, then you should almost certainly be able to use your model of reality to make novel predictions about the state of the world prior to AI doom that would help others determine if your model is correct."

The fact that MIRI has yet to produce (to my knowledge) any major empirically validated predictions or important practical insights into the nature of AI, or AI progress, in the last 20 years, undermines the idea that they have the type of special insight into AI that would allow them to express high confidence in a doom model like the one outlined in (4). - Eliezer's response to claims about unfalsifiability, namely that "predicting endpoints is easier than predicting intermediate points", seems like a cop-out to me, since this would seem to reverse the usual pattern in forecasting and prediction, without good reason.

- Since I think AI will most likely be a very good thing for currently existing people, I am much more hesitant to "shut everything down" compared to MIRI. I perceive MIRI researchers as broadly well-intentioned, thoughtful, yet ultimately fundamentally wrong in their worldview on the central questions that they research, and therefore likely to do harm to the world. This admittedly makes me sad to think about.

↑ comment by quetzal_rainbow · 2024-05-31T19:32:05.717Z · LW(p) · GW(p)

Eliezer's response to claims about unfalsifiability, namely that "predicting endpoints is easier than predicting intermediate points", seems like a cop-out to me, since this would seem to reverse the usual pattern in forecasting and prediction, without good reason

It's pretty standard? Like, we can make reasonable prediction of climate in 2100, even if we can't predict weather two month ahead.

Replies from: Benjy_Forstadt, 1a3orn, matthew-barnett, logan-zoellner, Prometheus↑ comment by Benjy_Forstadt · 2024-06-01T15:27:34.381Z · LW(p) · GW(p)

To be blunt, it's not just that Eliezer lacks a positive track record in predicting the nature of AI progress, which might be forgivable if we thought he had really good intuitions about this domain. Empiricism isn't everything, theoretical arguments are important too and shouldn't be dismissed. But-

Eliezer thought AGI would be developed from a recursively self-improving seed AI coded up by a small group, "brain in a box in a basement" style. He dismissed and mocked connectionist approaches to building AI. His writings repeatedly downplayed the importance of compute, and he has straw-manned writers like Moravec who did a better job at predicting when AGI would be developed than he did.

Old MIRI intuition pumps about why alignment should be difficult like the "Outcome Pump" and "Sorcerer's apprentice" are now forgotten, it was a surprise that it would be easy to create helpful genies like LLMs who basically just do what we want. Remaining arguments for the difficulty of alignment are esoteric considerations about inductive biases, counting arguments, etc. So yes, let's actually look at these arguments and not just dismiss them, but let's not pretend that MIRI has a good track record.

↑ comment by dr_s · 2024-06-03T09:20:53.639Z · LW(p) · GW(p)

I think the core concerns remain, and more importantly, there are other rather doom-y scenarios possible involving AI systems more similar to the ones we have that opened up and aren't the straight up singleton ASI foom. The problem here is IMO not "this specific doom scenario will become a thing" but "we don't have anything resembling a GOOD vision of the future with this tech that we are nevertheless developing at breakneck pace". Yet the amount of dystopian or apocalyptic possible scenarios is enormous. Part of this is "what if we lose control of the AIs" (singleton or multipolar), part of it is "what if we fail to structure our society around having AIs" (loss of control, mass wireheading, and a lot of other scenarios I'm not sure how to name). The only positive vision the "optimists" on this have to offer is "don't worry, it'll be fine, this clearly revolutionary and never seen before technology that puts in question our very role in the world will play out the same way every invention ever did". And that's not terribly convincing.

↑ comment by quetzal_rainbow · 2024-06-01T16:53:39.368Z · LW(p) · GW(p)

I'm not saying anything on object-level about MIRI models, my point is that "outcomes are more predictable than trajectories" is pretty standard epistemically non-suspicious statement about wide range of phenomena. Moreover, in particular circumstances (and many others) you can reduce it to object-level claim, like "do observarions on current AIs generalize to future AI?"

Replies from: Benjy_Forstadt↑ comment by Benjy_Forstadt · 2024-06-01T21:53:30.894Z · LW(p) · GW(p)

How does the question of whether AI outcomes are more predictable than AI trajectories reduce to the (vague) question of whether observations on current AIs generalize to future AIs?

Replies from: quetzal_rainbow↑ comment by quetzal_rainbow · 2024-06-02T10:43:54.604Z · LW(p) · GW(p)

ChatGPT falsifies prediction about future superintelligent recursive self-improving AI only if ChatGPT is generalizable predictor of design of future superintelligent AIs.

Replies from: Benjy_Forstadt↑ comment by Benjy_Forstadt · 2024-06-02T14:31:18.689Z · LW(p) · GW(p)

There will be future superintelligent AIs that improve themselves. But they will be neural networks, they will at the very least start out as a compute-intensive project, in the infant stages of their self-improvement cycles they will understand and be motivated by human concepts rather than being dumb specialized systems that are only good for bootstrapping themselves to superintelligence.

Replies from: Benjy_Forstadt↑ comment by Benjy_Forstadt · 2024-06-06T02:30:30.902Z · LW(p) · GW(p)

Edit: Retracted because some of my exegesis of the historical seed AI concept may not be accurate

↑ comment by 1a3orn · 2024-05-31T19:51:03.203Z · LW(p) · GW(p)

True knowledge about later times doesn't let you generally make arbitrary predictions about intermediate times, given valid knowledge of later times. But true knowledge does usually imply that you can make some theory-specific predictions about intermediate times, given later times.

Thus, vis-a-vis your examples: Predictions about the climate in 2100 don't involve predicting tomorrow's weather. But they do almost always involve predictions about the climate in 2040 and 2070, and they'd be really sus if they didn't.

Similarly:

- If an astronomer thought that an asteroid was going to hit the earth, the astronomer generally could predict points it will be observed at in the future before hitting the earth. This is true even if they couldn't, for instance, predict the color of the asteroid.

- People who predicted that C19 would infect millions by T + 5 months also had predictions about how many people would be infected at T + 2. This is true even if they couldn't predict how hard it would be to make a vaccine.

- (Extending analogy to scale rather than time) The ability to predict that nuclear war would kill billions involves a pretty good explanation for how a single nuke would kill millions.

So I think that -- entirely apart from specific claims about whether MIRI does this -- it's pretty reasonable to expect them to be able to make some theory-specific predictions about the before-end-times, although it's unreasonable to expect them to make arbitrary theory-specific predictions.

Replies from: aysja, DPiepgrass↑ comment by aysja · 2024-05-31T20:59:53.573Z · LW(p) · GW(p)

I agree this is usually the case, but I think it’s not always true, and I don’t think it’s necessarily true here. E.g., people as early as Da Vinci guessed that we’d be able to fly long before we had planes (or even any flying apparatus which worked). Because birds can fly, and so we should be able to as well (at least, this was Da Vinci and the Wright brothers' reasoning). That end point was not dependent on details (early flying designs had wings like a bird, a design which we did not keep :p), but was closer to a laws of physics claim (if birds can do it there isn’t anything fundamentally holding us back from doing it either).

Superintelligence holds a similar place in my mind: intelligence is physically possible, because we exhibit it, and it seems quite arbitrary to assume that we’ve maxed it out. But also, intelligence is obviously powerful, and reality is obviously more manipulable than we currently have the means to manipulate it. E.g., we know that we should be capable of developing advanced nanotech, since cells can, and that space travel/terraforming/etc. is possible.

These two things together—“we can likely create something much smarter than ourselves” and “reality can be radically transformed”—is enough to make me feel nervous. At some point I expect most of the universe to be transformed by agents; whether this is us, or aligned AIs, or misaligned AIs or what, I don’t know. But looking ahead and noticing that I don’t know how to select the “aligned AI” option from the set “things which will likely be able to radically transform matter” seems enough cause, in my mind, for exercising caution.

Replies from: matthew-barnett↑ comment by Matthew Barnett (matthew-barnett) · 2024-05-31T21:55:11.572Z · LW(p) · GW(p)

There's a pretty big difference between statements like "superintelligence is physically possible", "superintelligence could be dangerous" and statements like "doom is >80% likely in the 21st century unless we globally pause". I agree with (and am not objecting to) the former claims, but I don't agree with the latter claim.

I also agree that it's sometimes true that endpoints are easier to predict than intermediate points. I haven't seen Eliezer give a reasonable defense of this thesis as it applies to his doom model. If all he means here is that superintelligence is possible, it will one day be developed, and we should be cautious when developing it, then I don't disagree. But I think he's saying a lot more than that.

↑ comment by DPiepgrass · 2024-07-15T22:58:06.710Z · LW(p) · GW(p)

Your general point is true, but it's not necessarily true that a correct model can (1) predict the timing of AGI or (2) that the predictable precursors to disaster occur before the practical c-risk (catastrophic-risk) point of no return. While I'm not as pessimistic as Eliezer, my mental model has these two limitations. My model does predict that, prior to disaster, a fairly safe, non-ASI AGI or pseudo-AGI (e.g. GPT6, a chatbot that can do a lot of office jobs and menial jobs pretty well) is likely to be invented before the really deadly one (if any[1]). But if I predicted right, it probably won't make people take my c-risk concerns more seriously?

- ^

technically I think AGI inevitably ends up deadly, but it could be deadly "in a good way"

↑ comment by Matthew Barnett (matthew-barnett) · 2024-05-31T19:40:23.009Z · LW(p) · GW(p)

I think it's more similar to saying that the climate in 2040 is less predictable than the climate in 2100, or saying that the weather 3 days from now is less predictable than the weather 10 days from now, which are both not true. By contrast, the weather vs. climate distinction is more of a difference between predicting point estimates vs. predicting averages.

Replies from: quetzal_rainbow↑ comment by quetzal_rainbow · 2024-05-31T22:29:24.989Z · LW(p) · GW(p)

the climate in 2040 is less predictable than the climate in 2100

It's certainly not a simple question. Say, Gulf Stream is projected to collapse somewhere between now and 2095, with median date 2050. So, slightly abusing meaning of confidence intervals, we can say that in 2100 we won't have Gulf Stream with probability >95%, while in 2040 Gulf Stream will still be here with probability ~60%, which is literally less predictable.

Chemists would give an example of chemical reactions, where final thermodynamically stable states are easy to predict, while unstable intermediate states are very hard to even observe.

Very dumb example: if you are observing radioactive atom with half-life of one minute, you can't predict when atom is going to decay, but you can be very certain that it will decay after hour.

And why don't you accept classic MIRI example that even if it's impossible for human to predict moves of Stockfish 16, you can be certain that Stockfish will win?

Replies from: matthew-barnett, dr_s↑ comment by Matthew Barnett (matthew-barnett) · 2024-05-31T22:44:35.718Z · LW(p) · GW(p)

Chemists would give an example of chemical reactions, where final thermodynamically stable states are easy to predict, while unstable intermediate states are very hard to even observe.

I agree there are examples where predicting the end state is easier to predict than the intermediate states. Here, it's because we have strong empirical and theoretical reasons to think that chemicals will settle into some equilibrium after a reaction. With AGI, I have yet to see a compelling argument for why we should expect a specific easy-to-predict equilibrium state after it's developed, which somehow depends very little on how the technology is developed.

It's also important to note that, even if we know that there will be an equilibrium state after AGI, more evidence is generally needed to establish that the end equilibrium state will specifically be one in which all humans die.

And why don't you accept classic MIRI example that even if it's impossible for human to predict moves of Stockfish 16, you can be certain that Stockfish will win?

I don't accept this argument as a good reason to think doom is highly predictable partly because I think the argument is dramatically underspecified without shoehorning in assumptions about what AGI will look like to make the argument more comprehensible. I generally classify arguments like this under the category of "analogies that are hard to interpret because the assumptions are so unclear".

To help explain my frustration at the argument's ambiguity, I'll just give a small yet certainly non-exhaustive set of questions I have about this argument:

- Are we imagining that creating an AGI implies that we play a zero-sum game against it? Why?

- Why is it a simple human vs. AGI game anyway? Does that mean we're lumping together all the humans into a single agent, and all the AGIs into another agent, and then they play off against each other like a chess match? What is the justification for believing the battle will be binary like this?

- Are we assuming the AGI wants to win? Maybe it's not an agent at all. Or maybe it's an agent but not the type of agent that wants this particular type of outcome.

- What does "win" mean in the general case here? Does it mean the AGI merely gets more resources than us, or does it mean the AGI kills everyone? These seem like different yet legitimate ways that one can "win" in life, with dramatically different implications for the losing parties.

There's a lot more I can say here, but the basic point I want to make is that once you start fleshing this argument out, and giving it details, I think it starts to look a lot weaker than the general heuristic that Stockfish 16 will reliably beat humans in chess, even if we can't predict its exact moves.

Replies from: quetzal_rainbow↑ comment by dr_s · 2024-06-03T09:22:31.675Z · LW(p) · GW(p)

I don't think the Gulf Stream can collapse as long as the Earth spins, I guess you mean the AMOC?

Replies from: quetzal_rainbow↑ comment by quetzal_rainbow · 2024-06-03T09:48:18.210Z · LW(p) · GW(p)

Yep, AMOC is what I mean

↑ comment by Logan Zoellner (logan-zoellner) · 2024-06-05T13:23:40.245Z · LW(p) · GW(p)

>Like, we can make reasonable prediction of climate in 2100, even if we can't predict weather two month ahead.

This is a strange claim to make in a thread about AGI destroying the world. Obviously if AGI destroys the world we can not predict the weather in 2100.

Predicting the weather in 2100 requires you to make a number of detailed claims about the years between now and 2100 (for example, the carbon-emissions per year), and it is precisely the lack of these claims that @Matthew Barnett [LW · GW] is talking about.

↑ comment by Prometheus · 2024-06-05T18:14:33.707Z · LW(p) · GW(p)

I strongly doubt we can predict the climate in 2100. Actual prediction would be a model that also incorporates the possibility of nuclear fusion, geoengineering, AGIs altering the atmosphere, etc.

↑ comment by TurnTrout · 2024-05-31T21:26:08.339Z · LW(p) · GW(p)

"If your model of reality has the power to make these sweeping claims with high confidence, then you should almost certainly be able to use your model of reality to make novel predictions about the state of the world prior to AI doom that would help others determine if your model is correct."

This is partially derivable from Bayes rule. In order for you to gain confidence in a theory, you need to make observations which are more likely in worlds where the theory is correct. Since MIRI seems to have grown even more confident in their models, they must've observed something which is more likely to be correct under their models. Therefore, to obey Conservation of Expected Evidence [LW · GW], the world could have come out a different way which would have decreased their confidence. So it was falsifiable this whole time. However, in my experience, MIRI-sympathetic folk deny this for some reason.

It's simply not possible, as a matter of Bayesian reasoning, to lawfully update (today) based on empirical evidence (like LLMs succeeding) in order to change your probability of a hypothesis that "doesn't make" any empirical predictions (today).

The fact that MIRI has yet to produce (to my knowledge) any major empirically validated predictions or important practical insights into the nature AI, or AI progress, in the last 20 years, undermines the idea that they have the type of special insight into AI that would allow them to express high confidence in a doom model like the one outlined in (4).

In summer 2022, Quintin Pope was explaining the results of the ROME paper to Eliezer. Eliezer impatiently interrupted him and said "so they found that facts were stored in the attention layers, so what?". Of course, this was exactly wrong --- Bau et al. found the circuits in mid-network MLPs. Yet, there was no visible moment of "oops" for Eliezer.

Replies from: gwern, adam-jermyn, TsviBT, Lukas_Gloor↑ comment by gwern · 2024-05-31T21:35:30.780Z · LW(p) · GW(p)

In summer 2022, Quintin Pope was explaining the results of the ROME paper to Eliezer. Eliezer impatiently interrupted him and said "so they found that facts were stored in the attention layers, so what?". Of course, this was exactly wrong --- Bau et al. found the circuits in mid-network MLPs. Yet, there was no visible moment of "oops" for Eliezer.

I think I am missing context here. Why is that distinction between facts localized in attention layers and in MLP layers so earth-shaking Eliezer should have been shocked and awed by a quick guess during conversation being wrong, and is so revealing an anecdote you feel that it is the capstone of your comment, crystallizing everything wrong about Eliezer into a story?

Replies from: TurnTrout↑ comment by TurnTrout · 2024-06-02T06:11:24.992Z · LW(p) · GW(p)

^ Aggressive strawman which ignores the main point of my comment. I didn't say "earth-shaking" or "crystallizing everything wrong about Eliezer" or that the situation merited "shock and awe." Additionally, the anecdote was unrelated to the other section of my comment, so I didn't "feel" it was a "capstone."

I would have hoped, with all of the attention on this exchange, that someone would reply "hey, TurnTrout didn't actually say that stuff." You know, local validity and all that. I'm really not going to miss this site.

Anyways, gwern, it's pretty simple. The community edifies this guy and promotes his writing as a way to get better at careful reasoning. However, my actual experience is that Eliezer goes around doing things like e.g. impatiently interrupting people and being instantly wrong about it (importantly, in the realm of AI, as was the original context). This makes me think that Eliezer isn't deploying careful reasoning to begin with.

Replies from: nikolas-kuhn, nikolas-kuhn, gwern↑ comment by Amalthea (nikolas-kuhn) · 2024-06-02T06:46:07.584Z · LW(p) · GW(p)

That said, It also appears to me that Eliezer is probably not the most careful reasoner, and appears indeed often (perhaps egregiously) overconfident. That doesn't mean one should begrudge people finding value in the sequences although it is certainly not ideal if people take them as mantras rather than useful pointers and explainers for basic things (I didn't read them, so might have an incorrect view here). There does appear to be some tendency to just link to some point made in the sequences as some airtight thing, although I haven't found it too pervasive recently.

↑ comment by Amalthea (nikolas-kuhn) · 2024-06-02T06:34:31.391Z · LW(p) · GW(p)

You're describing a situational character flaw which doesn't really have any bearing on being able to reason carefully overall.

Replies from: thomas-kwa↑ comment by Thomas Kwa (thomas-kwa) · 2024-06-02T07:15:20.046Z · LW(p) · GW(p)

Disagree. Epistemics is a group project and impatiently interrupting people can make both you and your interlocutor less likely to combine your information into correct conclusions. It is also evidence that you're incurious internally which makes you worse at reasoning, though I don't want to speculate on Eliezer's internal experience in particular.

Replies from: nikolas-kuhn↑ comment by Amalthea (nikolas-kuhn) · 2024-06-02T08:01:56.356Z · LW(p) · GW(p)

I agree with the first sentence. I agree with the second sentence with the caveat that it's not strong absolute evidence, but mostly applies to the given setting (which is exactly what I'm saying).

People aren't fixed entities and the quality of their contributions can vary over time and depend on context.

↑ comment by gwern · 2024-06-04T21:00:34.013Z · LW(p) · GW(p)

^ Aggressive strawman which ignores the main point of my comment. I didn't say "earth-shaking" or "crystallizing everything wrong about Eliezer" or that the situation merited "shock and awe."

I, uh, didn't say you "say" either of those: I was sarcastically describing your comment about an anecdote that scarcely even seemed to illustrate what it was supposed to, much less was so important as to be worth recounting years later as a high profile story (surely you can come up with something better than that after all this time?), and did not put my description in quotes meant to imply literal quotation, like you just did right there. If we're going to talk about strawmen...

someone would reply "hey, TurnTrout didn't actually say that stuff."

No one would say that or correct me for falsifying quotes, because I didn't say you said that stuff. They might (and some do) disagree with my sarcastic description, but they certainly weren't going to say 'gwern, TurnTrout never actually used the phrase "shocked and awed" or the word "crystallizing", how could you just make stuff up like that???' ...Because I didn't. So it seems unfair to judge LW and talk about how you are "not going to miss this site". (See what I did there? I am quoting you, which is why the text is in quotation marks, and if you didn't write that in the comment I am responding to, someone is probably going to ask where the quote is from. But they won't, because you did write that quote).

You know, local validity and all that. I'm really not going to miss this site.

In jumping to accusations of making up quotes and attacking an entire site for not immediately criticizing me in the way you are certain I should be criticized and saying that these failures illustrate why you are quitting it, might one say that you are being... overconfident?

Additionally, the anecdote was unrelated to the other section of my comment, so I didn't "feel" it was a "capstone."

Quite aside from it being in the same comment and so you felt it was related, it was obviously related to your first half about overconfidence in providing an anecdote of what you felt was overconfidence, and was rhetorically positioned at the end as the concrete Eliezer conclusion/illustration of the first half about abstract MIRI overconfidence. And you agree that that is what you are doing in your own description, that he "isn't deploying careful reasoning" in the large things as well as the small, and you are presenting it as a small self-contained story illustrating that general overconfidence:

Replies from: TurnTroutHowever, my actual experience is that Eliezer goes around doing things like e.g. impatiently interrupting people and being instantly wrong about it (importantly, in the realm of AI, as was the original context). This makes me think that Eliezer isn't deploying careful reasoning to begin with.

↑ comment by TurnTrout · 2024-12-31T20:08:39.032Z · LW(p) · GW(p)

I, uh, didn't say you "say" either of those

I wasn't claiming you were saying I had used those exact phrases.

Your original comment [LW(p) · GW(p)] implies that I expressed the sentiments for which you mocked me - such as the anecdote "crystallizing everything wrong about Eliezer" (the quotes are there because you said this). I then replied to point out that I did not, in fact, express those sentiments. Therefore, your mockery was invalid.

↑ comment by Adam Jermyn (adam-jermyn) · 2024-06-01T01:37:53.730Z · LW(p) · GW(p)

One day a mathematician doesn’t know a thing. The next day they do. In between they made no observations with their senses of the world.

It’s possible to make progress through theoretical reasoning. It’s not my preferred approach to the problem (I work on a heavily empirical team at a heavily empirical lab) but it’s not an invalid approach.

Replies from: TurnTrout↑ comment by TsviBT · 2024-06-02T10:21:00.629Z · LW(p) · GW(p)

I personally have updated a fair amount over time on

- people (going on) expressing invalid reasoning for their beliefs about timelines and alignment;

- people (going on) expressing beliefs about timelines and alignment that seemed relatively more explicable via explanations other than "they have some good reason to believe this that I don't know about";

- other people's alignment hopes and mental strategies have more visible flaws and visible doomednesses;

- other people mostly don't seem to cumulatively integrate the doomednesses of their approaches into their mental landscape as guiding elements;

- my own attempts to do so fail in a different way, namely that I'm too dumb to move effectively in the resulting modified landscape.

We can back out predictions of my personal models from this, such as "we will continue to not have a clear theory of alignment" or "there will continue to be consensus views that aren't supported by reasoning that's solid enough that it ought to produce that consensus if everyone is being reasonable".

↑ comment by Lukas_Gloor · 2024-06-01T14:28:15.966Z · LW(p) · GW(p)

I thought the first paragraph and the boldened bit of your comment seemed insightful. I don't see why what you're saying is wrong – it seems right to me (but I'm not sure).

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-06-01T16:16:28.624Z · LW(p) · GW(p)

(I didn't get anything out of it, and it seems kind of aggressive in a way that seems non-sequitur-ish, and also I am pretty sure mischaracterizes people. I didn't downvote it, but have disagree-voted with it)

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2024-05-31T18:56:03.286Z · LW(p) · GW(p)

I think you are abusing/misusing the concept of falsifiability here. Ditto for empiricism. You aren't the only one to do this, I've seen it happen a lot over the years and it's very frustrating. I unfortunately am busy right now but would love to give a fuller response someday, especially if you are genuinely interested to hear what I have to say (which I doubt, given your attitude towards MIRI).

Replies from: matthew-barnett, daniel-kokotajlo↑ comment by Matthew Barnett (matthew-barnett) · 2024-05-31T19:02:06.501Z · LW(p) · GW(p)

I unfortunately am busy right now but would love to give a fuller response someday, especially if you are genuinely interested to hear what I have to say (which I doubt, given your attitude towards MIRI).

I'm a bit surprised you suspect I wouldn't be interested in hearing what you have to say?

I think the amount of time I've spent engaging with MIRI perspectives over the years provides strong evidence that I'm interested in hearing opposing perspectives on this issue. I'd guess I've engaged with MIRI perspectives vastly more than almost everyone on Earth who explicitly disagrees with them as strongly as I do (although obviously some people like Paul Christiano and other AI safety researchers have engaged with them even more than me).

(I might not reply to you, but that's definitely not because I wouldn't be interested in what you have to say. I read virtually every comment-reply to me carefully, even if I don't end up replying.)

Replies from: daniel-kokotajlo, elityre, daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2024-05-31T20:26:28.871Z · LW(p) · GW(p)

I apologize, I shouldn't have said that parenthetical.

↑ comment by Eli Tyre (elityre) · 2024-06-20T04:18:09.304Z · LW(p) · GW(p)

I want to publicly endorse and express appreciation for Matthew's apparent good faith.

Every time I've ever seen him disagreeing about AI stuff on the internet (a clear majority of the times I've encountered anything he's written), he's always been polite, reasonable, thoughtful, and extremely patient. Obviously conversations sometimes entail people talking past each other, but I've seen him carefully try to avoid miscommunication, and (to my ability to judge) strawmanning.

Thank you Mathew. Keep it up. : )

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2024-06-01T12:08:30.407Z · LW(p) · GW(p)

Here's a new approach: Your list of points 1 - 7. Would you also make those claims about me? (i.e. replace references to MIRI with references to Daniel Kokotajlo.)

Replies from: matthew-barnett↑ comment by Matthew Barnett (matthew-barnett) · 2024-06-01T20:16:00.202Z · LW(p) · GW(p)

You've made detailed predictions about what you expect in the next several years, on numerous occasions, and made several good-faith attempts to elucidate your models of AI concretely. There are many ways we disagree, and many ways I could characterize your views, but "unfalsifiable" is not a label I would tend to use for your opinions on AI. I do not mentally lump you together with MIRI in any strong sense.

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2024-06-02T12:30:07.628Z · LW(p) · GW(p)

OK, glad to hear. And thank you. :) Well, you'll be interested to know that I think of my views on AGI as being similar to MIRI's, just less extreme in various dimensions. For example I don't think literally killing everyone is the most likely outcome, but I think it's a very plausible outcome. I also don't expect the 'sharp left turn' to be particularly sharp, such that I don't think it's a particularly useful concept. I also think I've learned a lot from engaging with MIRI and while I have plenty of criticisms of them (e.g. I think some of them are arrogant and perhaps even dogmatic) I think they have been more epistemically virtuous than the average participant in the AGI risk conversation, even the average 'serious' or 'elite' participant.

Replies from: Will Aldred↑ comment by _will_ (Will Aldred) · 2024-10-30T04:08:35.013Z · LW(p) · GW(p)

I don't think [AGI/ASI] literally killing everyone is the most likely outcome

Huh, I was surprised to read this. I’ve imbibed a non-trivial fraction of your posts and comments here on LessWrong, and, before reading the above, my shoulder Daniel [LW · GW] definitely saw extinction as the most likely existential catastrophe.

If you have the time, I’d be very interested to hear what you do think is the most likely outcome. (It’s very possible that you have written about this before and I missed it—my bad, if so.)

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-10-30T04:15:19.945Z · LW(p) · GW(p)

(My model of Daniel thinks the AI will likely take over, but probably will give humanity some very small fraction of the universe, for a mixture of "caring a tiny bit" and game-theoretic reasons)

Replies from: Will Aldred↑ comment by _will_ (Will Aldred) · 2024-10-30T04:40:12.440Z · LW(p) · GW(p)

Thanks, that’s helpful!

(Fwiw, I don’t find the ‘caring a tiny bit’ story very reassuring, for the same reasons [LW · GW] as Wei Dai, although I do find the acausal trade story for why humans might be left with Earth somewhat heartening. (I’m assuming that by ‘game-theoretic reasons’ you mean acausal trade.))

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2024-10-30T16:59:36.995Z · LW(p) · GW(p)

Yep, Habryka is right. Also, I agree with Wei Dai re: reassuringness. I think literal extinction is <50% likely, but this is cold comfort given the badness of some of the plausible alternatives, and overall I think the probability of something comparably bad happening is >50%.

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2024-06-18T14:22:35.307Z · LW(p) · GW(p)

Followup: Matthew and I ended up talking about it in person. tl;dr of my position is that

Falsifiability is a symmetric two-place relation; one cannot say "X is unfalsifiable," except as shorthand for saying "X and Y make the same predictions," and thus Y is equally unfalsifiable. When someone is going around saying "X is unfalsifiable, therefore not-X," that's often a misuse of the concept--what they should say instead is "On priors / for other reasons (e.g. deference) I prefer not-X to X; and since both theories make the same predictions, I expect to continue thinking this instead of updating, since there won't be anything to update on.

What is the point of falsifiability-talk then? Well, first of all, it's quite important to track when two theories make the same predictions, or the same-predictions-till-time-T. It's an important part of the bigger project of extracting predictions from theories so they can be tested. It's exciting progress when you discover that two theories make different predictions, and nail it down well enough to bet on. Secondly, it's quite important to track when people are making this worse rather than easier -- e.g. fortunetellers and pundits will often go out of their way to avoid making any predictions that diverge from what their interlocutors already would predict. Whereas the best scientists/thinkers/forecasters, the ones you should defer to, should be actively trying to find alpha and then exploit it by making bets with people around them. So falsifiability-talk is useful for evaluating people as epistemically virtuous or vicious. But note that if this is what you are doing, it's all a relative thing in a different way -- in the case of MIRI, for example, the question should be "Should I defer to them more, or less, than various alternative thinkers A B and C? --> Are they generally more virtuous about making specific predictions, seeking to make bets with their interlocutors, etc. than A B or C?"

So with that as context, I'd say that (a) It's just wrong to say 'MIRI's theories of doom are unfalsifiable.' Instead say 'unfortunately for us (not for the plausibility of the theories), both MIRI's doom theories and (insert your favorite non-doom theories here) make the same predictions until it's basically too late.' (b) One should then look at MIRI and be suspicious and think 'are they systematically avoiding making bets, making specific predictions, etc. relative to the other people we could defer to? Are they playing the sneaky fortuneteller or pundit's game?' to which I think the answer is 'no not at all, they are actually more epistemically virtuous in this regard than the average intellectual. That said, they aren't the best either -- some other people in the AI risk community seem to be doing better than them in this regard, and deserve more virtue points (and possibly deference points) therefore.' E.g. I think both Matthew and I have more concrete forecasting track records than Yudkowsky?

↑ comment by Martin Randall (martin-randall) · 2025-01-19T01:29:16.820Z · LW(p) · GW(p)

Falsifiability is not symmetric. Consider two theories:

- Theory X: Jesus will come again.

- Theory Y: Jesus will not come again.

If Jesus comes again tomorrow, this falsifies theory Y and confirms theory X. If Jesus does not come again tomorrow, neither theory is falsified or confirmed. So we can say that X is unfalsifiable (with respect to a finite time frame) and Y is falsifiable.

Another example:

- Theory X: blah blah and therefore the sky is green

- Theory Y: blah blah and therefore the sky is not green

- Theory Z: blah blah and therefore the sky could be green or not green.

Here, theory X and Y are falsifiable with respect to the color of the sky and theory Z is not.

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2025-01-19T05:33:17.388Z · LW(p) · GW(p)

Here's how I'd deal with those examples:

Theory X: Jesus will come again: Presumably this theory assigns some probability mass >0 to observing Jesus tomorrow, whereas theory Y assigns ~0. If jesus is not observed tomorrow, that's a small amount of evidence for theory Y and a small amount of evidence against theory X. So you can say that theory X has been partially falsified. Repeat this enough times, and then you can say theory X has been fully falsified, or close enough. (Your credence in theory X will never drop to 0 probably, but that's fine, that's also true of all sorts of physical theories in good standing e.g. all the major theories of cosmology and cognitive science, which allow for tiny probabilities of arbitrary sequences of experiences happening in e.g. Boltzmann Brains)

With the sky color example:

My way of thinking about falsifiability is, we say two theories are falsifiable relative to each other if there is evidence we expect to encounter that will distinguish them / cause us to shift our relative credence in them.

In the case of Theory Z, there is an implicit theory Z2 which is "NOT blah blah, and therefore the sky could be green or not green." (Presumably that's what you are holding in the back of your mind as the alternative to Z, when you imagine updating for or against Z on the basis of seeing blue sky, and decide that you wouldn't?) Because the theory Z3 "NOT blah blah and therefore the sky is blue" would be confirmed by seeing a blue sky, and if somehow you were splitting your credence between Z and Z3, then you would decrease your credence in Z if you saw a blue sky.

↑ comment by Martin Randall (martin-randall) · 2025-01-19T18:14:29.718Z · LW(p) · GW(p)

Thanks for explaining. I think we have a definition dispute. Wikipedia:Falsifiability has:

A theory or hypothesis is falsifiable if it can be logically contradicted by an empirical test.

Whereas your definition is:

Falsifiability is a symmetric two-place relation; one cannot say "X is unfalsifiable," except as shorthand for saying "X and Y make the same predictions," and thus Y is equally unfalsifiable.

In one of the examples I gave earlier:

- Theory X: blah blah and therefore the sky is green

- Theory Y: blah blah and therefore the sky is not green

- Theory Z: blah blah and therefore the sky could be green or not green.

None of X, Y, or Z are Unfalsifiable-Daniel with respect to each other, because they all make different predictions. However, X and Y are Falsifiable-Wikipedia, whereas Z is Unfalsifiable-Wikipedia.

I prefer the Wikipedia definition. To say that two theories produce exactly the same predictions, I would instead say they are indistinguishable, similar to this Phyiscs StackExchange: Are different interpretations of quantum mechanics empirically distinguishable?.

In the ancestor post [LW(p) · GW(p)], Barnett writes:

MIRI researchers rarely provide any novel predictions about what will happen before AI doom, making their theories of doom appear unfalsifiable.

Barnett is using something like the Wikipedia definition of falsifiability here. It's unfair to accuse him of abusing or misusing the concept when he's using it in a very standard way.

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2025-01-20T15:56:41.449Z · LW(p) · GW(p)

Very good point.

So, by the Wikipedia definition, it seems that all the mainstream theories of cosmology are unfalsifiable, because they allow for tiny probabilities of boltmann brains etc. with arbitrary experiences. There is literally nothing you could observe that would rule them out / logically contradict them.

Also, in practice, it's extremely rare for a theory to be ruled out or even close-to-ruled out from any single observation or experiment. Instead, evidence accumulates in a bunch of minor and medium-sized updates.

↑ comment by Martin Randall (martin-randall) · 2025-01-21T03:08:22.580Z · LW(p) · GW(p)

I think cosmology theories have to be phrased as including background assumptions like "I am not a Boltzmann brain" and "this is not a simulation" and such. Compare Acknowledging Background Information with P(Q|I) [LW · GW] for example. Given that, they are Falsifiable-Wikipedia.

I view Falsifiable-Wikipedia in a similar way to Occam's Razor. The true epistemology has a simplicity prior, and Occam's Razor is a shadow of that. The true epistemology considers "empirical vulnerability" / "experimental risk" to be positive. Possibly because it falls out of Bayesian updates, possibly because they are "big if true", possibly for other reasons. Falsifiability is a shadow of that.

In that context, if a hypothesis makes no novel predictions, and the predictions it makes are a superset of the predictions of other hypotheses, it's less empirically vulnerable, and in some relative sense "unfalsifiable", compared to those other hypotheses.

Replies from: sharmake-farah↑ comment by Noosphere89 (sharmake-farah) · 2025-01-21T15:21:10.555Z · LW(p) · GW(p)

"this is not a simulation"

I personally wouldn't include it, because essentially everything (given a powerful enough model of computation) could be simulated, and this is why the simulation hypothesis is so bad in casual discourse: It explains everything, which means it explains nothing that is specific to our universe:

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2025-01-20T16:58:41.433Z · LW(p) · GW(p)

Also note that Barnett said "any novel predictions" which is not part of the wikipedia definition of falsifiability right? The wikipedia definition doesn't make reference to an existing community of scientists who already made predictions, such that a new hypothesis can be said to have made novel vs. non-novel predictions.

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2025-01-20T17:00:58.972Z · LW(p) · GW(p)

I totally agree btw that it matters sociologically who is making novel predictions and who is sticking with the crowd. And I do in fact ding MIRI points for this relative to some other groups. However I think relative to most elite opinion-formers on AGI matters, MIRI performs better than average on this metric.

But note that this 'novel predictions' metric is about people/institutions, not about hypotheses.

↑ comment by Noosphere89 (sharmake-farah) · 2025-01-21T15:40:47.003Z · LW(p) · GW(p)

However I think relative to most elite opinion-formers on AGI matters, MIRI performs better than average on this metric.

Agree with this, with the caveat that I think all of their rightness relative to others fundamentally was in believing that short timelines were plausible enough, combined with believing in AI being the most major force of the 21st century by far, compared to other technologies, and basically a lot of their other specific predictions are likely to be pretty wrong.

I like this comment here about a useful comparison point to MIRI, where physicists were right about the higgs boson existing, but wrong on the theories like supersymmetry where people expected the higgs mass to be naturally stabilized, and assuming supersymmetry is correct for our universe, the theory cannot stabilize the mass of the higgs, or solve the hierarchy problem:

https://www.lesswrong.com/posts/ZLAnH5epD8TmotZHj/you-can-in-fact-bamboozle-an-unaligned-ai-into-sparing-your#Ha9hfFHzJQn68Zuhq [LW(p) · GW(p)]

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2025-01-21T17:53:02.334Z · LW(p) · GW(p)

I think I agree with this -- but do you see how it makes me frustrated to hear people dunk on MIRI's doomy views as unfalsifiable? Here's what happened in a nutshell:

MIRI: "AGI is coming and it will kill everyone."

Everyone else: "AGI is not coming and if it did it wouldn't kill everyone."

time passes, evidence accumulates...

Everyone else: "OK, AGI is coming, but it won't kill everyone"

Everyone else: "Also, the hypothesis that it won't kill everyone is unfalsifiable so we shouldn't believe it."

↑ comment by Noosphere89 (sharmake-farah) · 2025-01-21T18:18:37.648Z · LW(p) · GW(p)

Yeah, I think this is actually a problem I see here, though admittedly I often see the hypotheses be vaguely formulated, and I kind of agree with Jotto999 that the verbal forecasts give far too much room for leeway here:

I like Eli Tyre's comment here:

https://www.lesswrong.com/posts/ZEgQGAjQm5rTAnGuM/beware-boasting-about-non-existent-forecasting-track-records#Dv7aTjGXEZh6ALmZn [LW(p) · GW(p)]

↑ comment by Martin Randall (martin-randall) · 2025-01-21T19:42:21.461Z · LW(p) · GW(p)

I like that metric, but the metric I'm discussing is more:

- Are they proposing clear hypotheses?

- Do their hypotheses make novel testable predictions?

- Are they making those predictions explicit?

So for example, looking at MIRI's very first blog post in 2007: The Power of Intelligence. I used the first just to avoid cherry-picking.

Hypothesis: intelligence is powerful. (yes it is)

This hypothesis is a necessary precondition for what we're calling "MIRI doom theory" here. If intelligence is weak then AI is weak and we are not doomed by AI.

Predictions that I extract:

- An AI can do interesting things over the Internet without a robot body.

- An AI can get money.

- An AI can be charismatic.

- An AI can send a ship to Mars.

- An AI can invent a grand unified theory of physics.

- An AI can prove the Riemann Hypothesis.

- An AI can cure obesity, cancer, aging, and stupidity.

Not a novel hypothesis, nor novel predictions, but also not widely accepted in 2007. As predictions they have aged very well, but they were unfalsifiable. If 2025 Claude had no charisma, it would not falsify the prediction that an AI can be charismatic.

I don't mean to ding MIRI any points here, relative or otherwise, it's just one blog post, I don't claim it supports Barnett's complaint by itself. I mostly joined the thread to defend the concept of asymmetric falsifiability.

↑ comment by Noosphere89 (sharmake-farah) · 2025-01-21T15:26:58.107Z · LW(p) · GW(p)

Martin Randall extracted the practical consequences of this here:

In that context, if a hypothesis makes no novel predictions, and the predictions it makes are a superset of the predictions of other hypotheses, it's less empirically vulnerable, and in some relative sense "unfalsifiable", compared to those other hypotheses.

↑ comment by ryan_greenblatt · 2024-05-30T23:51:48.912Z · LW(p) · GW(p)

I basically agree with your overall comment, but I'd like to push back in one spot:

If your model of reality has the power to make these sweeping claims with high confidence

From my understanding, for at least Nate Soares, he claims his internal case for >80% doom is disjunctive and doesn't route all through 1, 2, 3, and 4.

I don't really know exactly what the disjuncts are, so this doesn't really help and I overall agree that MIRI does make "sweeping claims with high confidence".

↑ comment by Jeremy Gillen (jeremy-gillen) · 2024-05-31T23:51:54.457Z · LW(p) · GW(p)

I think your summary is a good enough quick summary of my beliefs. The minutia that I object to is how confident and specific lots of parts of your summary are. I think many of the claims in the summary can be adjusted or completely changed and still lead to bad outcomes. But it's hard to add lots of uncertainty and options to a quick summary, especially one you disagree with, so that's fair enough.

(As a side note, that paper you linked isn't intended to represent anyone else's views, other than myself and Peter, and we are relatively inexperienced. I'm also no longer working at MIRI).

I'm confused about why your <20% isn't sufficient for you to want to shut down AI research. Is it because of benefits outweigh the risk, or because we'll gain evidence about potential danger and can shut down later if necessary?

I'm also confused about why being able to generate practical insights about the nature of AI or AI progress is something that you think should necessarily follow from a model that predicts doom. I believe something close enough to (1) from your summary, but I don't have much idea (above general knowledge) of how the first company to build such an agent will do so, or when they will work out how to do it. One doesn't imply the other.

↑ comment by Matthew Barnett (matthew-barnett) · 2024-06-01T00:15:46.101Z · LW(p) · GW(p)

I'm confused about why your <20% isn't sufficient for you to want to shut down AI research. Is it because of benefits outweigh the risk, or because we'll gain evidence about potential danger and can shut down later if necessary?

I think the expected benefits outweigh the risks, given that I care about the existing generation of humans (to a large, though not overwhelming degree). The expected benefits here likely include (in my opinion) a large reduction in global mortality, a very large increase in the quality of life, a huge expansion in material well-being, and more generally a larger and more vibrant world earlier in time. Without AGI, I think most existing people would probably die and get replaced by the next generation of humans, in a relatively much poor world (compared to the alternative).

I also think the absolute level risk from AI barely decreases if we globally pause. My best guess is that pausing would mainly just delay adoption without significantly impacting safety. Under my model of AI, the primary risks are long-term, and will happen substantially after humans have already gradually "handed control" over to the AIs and retired their labor on a large scale. Most of these problems -- such as cultural drift and evolution -- do not seem to be the type of issue that can be satisfactorily solved in advance, prior to a pause (especially by working out a mathematical theory of AI, or something like that).

On the level of analogy, I think of AI development as more similar to "handing off control to our children" than "developing a technology that disempowers all humans at a discrete moment in time". In general, I think the transition period to AI will be more diffuse and incremental than MIRI seems to imagine, and there won't be a sharp distinction between "human values" and "AI values" either during, or after the period.

(I also think AIs will probably be conscious in a way that's morally important, in case that matters to you.)

In fact, I think it's quite plausible the absolute level of AI risk would increase under a global pause, rather than going down, given the high level of centralization of power required to achieve a global pause, and the perverse institutions and cultural values that would likely arise under such a regime of strict controls. As a result, even if I weren't concerned at all about the current generation of humans, and their welfare, I'd still be pretty hesitant to push pause on the entire technology.

(I think of technology as itself being pretty risky, but worth it. To me, pushing pause on AI is like pushing pause on technology itself, in the sense that they're both generically risky yet simultaneously seem great on average. Yes, there are dangers ahead. But I think we can be careful and cautious without completely ripping up all the value for ourselves.)

Replies from: Lukas_Gloor, quetzal_rainbow, dr_s↑ comment by Lukas_Gloor · 2024-06-01T13:54:05.057Z · LW(p) · GW(p)

Would most existing people accept a gamble with 20% of chance of death in the next 5 years and 80% of life extension and radically better technology? I concede that many would, but I think it's far from universal, and I wouldn't be too surprised if half of people or more think this isn't for them.

I personally wouldn't want to take that gamble (strangely enough I've been quite happy lately and my life has been feeling meaningful, so the idea of dying in the next 5 years sucks).

(Also, I want to flag that I strongly disagree with your optimism.)

↑ comment by Matthew Barnett (matthew-barnett) · 2024-06-01T17:52:30.467Z · LW(p) · GW(p)

For what it's worth, while my credence in human extinction from AI in the 21st century is 10-20%, I think the chance of human extinction in the next 5 years is much lower. I'd put that at around 1%. The main way I think AI could cause human extinction is by just generally accelerating technology and making the world a scarier and more dangerous place to live. I don't really buy the model in which an AI will soon foom until it becomes a ~god.

↑ comment by Seth Herd · 2024-06-01T17:04:55.388Z · LW(p) · GW(p)

I like this framing. I think the more common statement would be 20% chance of death in 10-30 years , and 80% chance of life extension and much better technology that they might not live to see.

I think the majority of humanity would actually take this bet. They are not utilitarians or longtermists.

So if the wager is framed in this way, we're going full steam ahead.

↑ comment by quetzal_rainbow · 2024-06-01T08:26:25.404Z · LW(p) · GW(p)

I yet another time say that your tech tree model doesn't make sense to me. To get immortality/mind uploading, you need really overpowered tech, far above the level when killing all humans and starting disassemble planet becomes negligibly cheap. So I wouldn't expect that "existing people would probably die" is going to change much under your model "AIs can be misaligned but killing all humans is too costly".

↑ comment by dr_s · 2024-06-03T09:24:46.616Z · LW(p) · GW(p)

(I also think AIs will probably be conscious in a way that's morally important, in case that matters to you.)

I don't think that's either a given nor something we can ever know for sure. "Handing off" the world to robots and AIs that for all we know might be perfect P-zombies doesn't feel like a good idea.

↑ comment by Signer · 2024-06-01T08:04:20.128Z · LW(p) · GW(p)

Given a low prior probability of doom as apparent from the empirical track record of technological progress, I think we should generally be skeptical of purely theoretical arguments for doom, especially if they are vague and make no novel, verifiable predictions prior to doom.

And why such use of the empirical track record is valid? Like, what's the actual hypothesis here? What law of nature says "if technological progress hasn't caused doom yet, it won't cause it tomorrow"?

MIRI’s arguments for doom are often difficult to pin down, given the informal nature of their arguments, and in part due to their heavy reliance on analogies, metaphors, and vague supporting claims instead of concrete empirically verifiable models.

And arguments against are based on concrete empirically verifiable models of metaphors.

If your model of reality has the power to make these sweeping claims with high confidence, then you should almost certainly be able to use your model of reality to make novel predictions about the state of the world prior to AI doom that would help others determine if your model is correct.

Doesn't MIRI's model predict some degree of the whole Shoggoth/actress thing in current system? Seems verifiable.

↑ comment by Seth Herd · 2024-06-01T17:19:51.015Z · LW(p) · GW(p)

I share your frustration with MIRI's communications with the alignment community.

And, the tone of this comment smells to me of danger. It looks a little too much like strawmanning, which always also implies that anyone who believes this scenario must be, at least in this context, an idiot. Since even rationalists are human, this leads to arguments instead of clarity.

I'm sure this is an accident born of frustration, and the unclarity of the MIRI argument.

I think we should prioritize not creating a polarized doomer-vs-optimist split in the safety community. It is very easy to do, and it looks to me like that's frequently how important movements get bogged down.

Since time is of the essence, this must not happen in AI safety.

We can all express our views, we just need to play nice and extend the benefit of the doubt. MIRI actually does this quite well, although they don't convey their risk model clearly. Let's follow their example in the first and not the second.

Edit: I wrote a short form post about MIRI's communication strategy [LW(p) · GW(p)], including how I think you're getting their risk model importantly wrong

↑ comment by Ebenezer Dukakis (valley9) · 2024-06-05T03:17:13.459Z · LW(p) · GW(p)

Eliezer's response to claims about unfalsifiability, namely that "predicting endpoints is easier than predicting intermediate points", seems like a cop-out to me, since this would seem to reverse the usual pattern in forecasting and prediction, without good reason.

Note that MIRI has made some intermediate predictions. For example, I'm fairly certain Eliezer predicted that AlphaGo would go 5 for 5 against LSD, and it didn't. I would respect his intellectual honesty more if he'd registered the alleged difficulty of intermediate predictions before making them unsuccessfully.

I think MIRI has something valuable to contribute to alignment discussions, but I'd respect them more if they did a "5 Whys" type analysis on their poor prediction track record, so as to improve the accuracy of predictions going forwards. I'm not seeing any evidence of that. It seems more like the standard pattern where a public figure invests their ego in some position, then tries to avoid losing face.

↑ comment by Joe Collman (Joe_Collman) · 2024-06-02T05:06:50.609Z · LW(p) · GW(p)

On your (2), I think you're ignoring an understanding-related asymmetry:

- Without clear models describing (a path to) a solution, it is highly unlikely we have a workable solution to a deep and complex problem:

- Absence of concrete [we have (a path to) a solution] is pretty strong evidence of absence.

[EDIT for clarity, by "we have" I mean "we know of", not "there exists"; I'm not claiming there's strong evidence that no path to a solution exists]

- Absence of concrete [we have (a path to) a solution] is pretty strong evidence of absence.

- Whether or not we have clear models of a problem, it is entirely possible for it to exist and to kill us:

- Absence of concrete [there-is-a-problem] evidence is weak evidence of absence.

A problem doesn't have to wait until we have formal arguments or strong, concrete empirical evidence for its existence before killing us. To claim that it's "premature" to shut down the field before we have [evidence of type x], you'd need to make a case that [doom before we have evidence of type x] is highly unlikely.

A large part of the MIRI case is that there is much we don't understand, and that parts of the problem we don't understand are likely to be hugely important. An evidential standard that greatly down-weights any but the most rigorous, legible evidence is liable to lead to death-by-sampling-bias.

Of course it remains desirable for MIRI arguments to be as legible and rigorous as possible. Empiricism would be nice too (e.g. if someone could come up with concrete problems whose solution would be significant evidence for understanding something important-according-to-MIRI about alignment).

But ignoring the asymmetry here is a serious problem.