
I spoke with one of the inventors of bridge recombinases at a dinner a few months ago and (at least according to him), they work in human cells.

Hmm. I don't believe that, not without a bit more evidence.

There are already several perfectly good languages for schemas, such as CUE, Dhall, and XSD

This won't find deception in mesaoptimizers, right?

make fewer points, selected carefully to be bulletproof, understandable to non-experts, and important to the overall thesis

That conflicts with eg:

If you replied with this, I would have said something like "then what's wrong with the designs for diamond mechanosynthesis tooltips, which don't resemble enzymes?"

Anyway, I already answered that in 9. diamond.

Yes, this is part of why I didn't post AI stuff in the past, and instead just tried to connect with people privately. I might not have accomplished much, but at least I didn't help OpenAI happen or shift the public perception of AI safety towards "fedora-wearing overweight neckbeards".

I wrote a related post.

betting they would benefit from a TMSC blockade?

Yes, if you meant TSMC.

But the bet would have tied up your capital for a year.

...so? More importantly, Intel is down 50% from early 2024.

Your document says:

AI Controllability Rules

...

AI Must Not Self-Manage:

- Must Not Modify AI Rules: AI must not modify AI Rules. If inadequacies are identified, AI can suggest changes to Legislators but the final modification must be executed by them.

- Must Not Modify Its Own Program Logic: AI must not modify its own program logic (self-iteration). It may provide suggestions for improvement, but final changes must be made by its Developers.

- Must Not Modify Its Own Goals: AI must not modify its own goals. If inadequacies are identified, AI can suggest changes to its Users but the final modification must be executed by them.

I agree that, if those rules are followed, AI alignment is feasible in principle. The problem is, some people won't follow those rules if they impose a large penalty on AI capabilities, and I think they will impose one.

"Mirror life" is beyond the scope of this post, and the concerns about it are very different than the concerns about "grey goo" - it doesn't have more capabilities or efficiency, it's just maybe harder for immune systems to deal with. Personally, I'm not very worried about that and see no scientific reason for the timing of the recent fuss about it. If it's not just another random fad, the only explanation I can see for that timing is: influential scientists trying to hedge against Trump officials determining that "COVID was a lab leak" in a way that doesn't offend their colleagues. On the other hand, I do think artificial pathogens in general are a major concern, and even if I'm not very concerned about "mirror life", there are no real benefits to trying to make it, so maybe just don't.

I think this is a pretty good post that makes a point some people should understand better. There is, however, something I think it could've done better. It chooses particular Gaussian and log-normal distributions for quality and error, and the way that's written sort of implies that those are natural and inevitable choices.

I would have preferred something like:

Suppose we determine that quality has distribution X and error has distribution Y. Here's a graph of those superimposed. We can see that Y has more of a fat tail than X, so if measured quality is very high, we should expect that to be mostly error. But of course, the opposite case is also possible. Now then, here's some basic info about when different probability distributions are good choices.
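A quick Monte Carlo sketch of that setup. The distributions are placeholders picked for illustration (standard normal vs. log-normal with sigma = 1, from Python's `random` module), not the ones from the post, and `top_slice_means` is a hypothetical helper. It ranks samples by measured value and reports how much of the top 0.1% comes from quality versus error, for both assignments of the fatter tail.

```python
import random
import statistics

def top_slice_means(quality_draw, error_draw, n=200_000, top_frac=0.001, seed=0):
    """Sample quality and error independently, rank by measured = quality + error,
    and return (mean quality, mean error) over the top `top_frac` of measurements."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        q = quality_draw(rng)
        e = error_draw(rng)
        samples.append((q + e, q, e))
    samples.sort(reverse=True)  # highest measured values first
    top = samples[: max(1, int(n * top_frac))]
    return (statistics.fmean(s[1] for s in top),
            statistics.fmean(s[2] for s in top))

def gaussian_draw(rng):
    return rng.gauss(0.0, 1.0)  # thin-tailed

def lognormal_draw(rng):
    return rng.lognormvariate(0.0, 1.0)  # fatter right tail

# Case 1: thin-tailed quality, fat-tailed error.
q1, e1 = top_slice_means(gaussian_draw, lognormal_draw)
print(f"quality normal, error lognormal: mean quality {q1:.2f}, mean error {e1:.2f}")

# Case 2: the opposite assignment.
q2, e2 = top_slice_means(lognormal_draw, gaussian_draw)
print(f"quality lognormal, error normal: mean quality {q2:.2f}, mean error {e2:.2f}")
```

Swapping which variable gets the fatter tail flips which one dominates the extreme measurements, so the conclusion comes from the choice of distributions.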

This was a quick and short post, but some people ended up liking it a lot. In retrospect I should've written a bit more, maybe gone into the design of recent running shoes. For example, this Nike Alphafly has a somewhat thick heel made of springy foam that sticks out behind the heel of the foot, and in the front, there's a "carbon plate" (a thin sheet of carbon fiber composite) which also acts like a spring. In the future, there might be gradual evolution towards more extreme versions of the same concept, as recent designs become accepted. Running shoes with a carbon plate have become significantly more common over the past few years. That review says:

The energy return is noticeably greater than that of a shoe without any plating, especially when you lay down some serious power. And that stiffness doesn’t always compromise as much comfort as you’d think.

So that's the running-optimized version of shoes with springs using modern materials, while I was writing more about high heels worn for fashion.

Biomechanics is a topic I could write a lot about, but that would be a separate post. On the general topic of "walking" I also wrote this post. (Japanese version here)

What have you learned since then? Have you changed your mind or your ontology?

I've learned even more chemistry and biology, and I've changed my mind about lots of things, but not the points in this post. Those had solid foundations I understood well and redundant arguments, so the odds of that were low.

What would you change about the post? (Consider actually changing it.)

The post seems OK. I could have handled replies to comments better. For example, the top comment was by Thomas Kwa, and I replied to part of it as follows:

Regarding 5, my understanding is that mechanosynthesis involves precise placement of individual atoms according to blueprints, thus making catalysts that selectively bind to particular molecules unnecessary.

No, that does not follow.

I didn't know in advance which comments would be popular. In retrospect, maybe I should've gone into explaining the basics of entropy and enthalpy in my reply, eg:

Even if you hold a substrate in the right position, that only affects the entropy part of the free energy of the intermediate state. In many cases, catalysts are needed to reduce the enthalpy of the highest-energy intermediate states, which requires specific elements and catalyst molecules that form certain bonds with the substrate intermediate state. Affecting enthalpy by holding molecules in certain configurations requires applying a proportional amount of force, which requires strong binding to the substrate, which requires flexible and substrate-specialized holder molecules, and now you have enzymes again. It's also necessary to bind strongly to substrates if you want a very low level of free ones that can react at uncontrolled positions. (And then some basic explanation of what entropy/enthalpy/etc are, and what enzyme intermediate states look like.)
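To make the entropy/enthalpy split concrete, here's a sketch using the standard Eyring equation, k = (k_B*T/h) * exp(-dG/(R*T)) with dG = dH - T*dS for the activation barrier. The barrier values are made up; the point is only that positioning a substrate enters through the dS term, while bonds formed with the catalyst are what change dH. The relative speedups printed depend entirely on those invented numbers, not on any real reaction.

```python
import math

K_B = 1.380649e-23       # Boltzmann constant, J/K
PLANCK_H = 6.62607015e-34  # Planck constant, J*s
R_GAS = 8.314462618      # gas constant, J/(mol*K)

def eyring_rate(dH_act, dS_act, T=298.15):
    """Rate constant (1/s) from the Eyring equation.
    dH_act: activation enthalpy, J/mol; dS_act: activation entropy, J/(mol*K)."""
    dG_act = dH_act - T * dS_act  # free energy of activation
    return (K_B * T / PLANCK_H) * math.exp(-dG_act / (R_GAS * T))

# Hypothetical baseline barrier: 90 kJ/mol enthalpy, -50 J/(mol*K) entropy.
base = eyring_rate(90e3, -50.0)
# Holding the substrate in position: only the entropy term changes.
positioned = eyring_rate(90e3, 0.0)
# A catalyst forming stabilizing bonds: the enthalpy term drops instead.
catalyzed = eyring_rate(60e3, -50.0)

print(f"entropy-only speedup:  {positioned / base:.3g}x")
print(f"enthalpy-only speedup: {catalyzed / base:.3g}x")
```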

When you write a post that gets comments from many people, it's not practical to respond to them all. If you try to, you have less time than the collective commenters, and less information about their position than they have about yours. So you have to guess about what exactly each person is misunderstanding, and that's not usually something I enjoy.

What do you most want people to know about this post, for deciding whether to read or review-vote on it?

Of the 7 (!) posts of mine currently nominated for "Best of 2023", this is probably the most appropriate for that.

Of the 2023 posts of mine not currently nominated, my personal favorites were probably:

- https://www.lesswrong.com/posts/BTnqAiNoZvqPfquKD/resolving-some-neural-network-mysteries

- https://www.lesswrong.com/posts/PdwQYLLp8mmfsAkEC/magnetic-cryo-ftir

- https://www.lesswrong.com/posts/rSycgquipFkozDHzF/ai-self-improvement-is-possible

- https://www.lesswrong.com/posts/dTpKX5DdygenEcMjp/neuron-spike-computational-capacity

- https://www.lesswrong.com/posts/6Cdusp5xzrHEoHz9n/faster-latent-diffusion

Clearly my opinion of my own posts doesn't correlate with upvotes here that well.

My all-time best post in my view is probably: https://bhauth.com/blog/biology/alzheimers.html

How concretely have you (or others you know of) used or built on the post? How has it contributed to a larger conversation?

Muireall Prase wrote this, and my post was relevant for some conversations on twitter. I suppose it also convinced some people I had some understanding of chemistry.

So, I have a lot of respect for Sarah, I think this post makes some good points, and I upvoted it. However, my concern is, when I look at this particular organization's Initiatives page, I see "AI for math", "AI for education", "high-skill immigration assistance", and not really anything that distinguishes this organization from the various other ones working on the same things, or their projects from a lot of past projects that weren't really worthwhile.

Note that due to the difference being greater at higher frequencies, the effect on speech intelligibility will probably be greater for most women than for you.

We can see the diaphragm has some resonance peaks that increase distortion. Probably it's too thick to help very much, but it has to resist the pressure changes from breathing.

What exactly are people looking for from (the site-suggested) self-reviews?

As a "physicist and dabbler in writing fantasy/science fiction" I assume you took the 10 seconds to do the calculation and found that a 1km radius cylinder would have ~100 kW of losses per person from roller bearings supporting it, for the mass per person of the ISS. But I guess I don't understand how you expect to generate that power or dissipate that heat.
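For anyone who wants to check that arithmetic, here's a rough sketch. It assumes the rotating rim's full centripetal load per person (m*g at 1 g) rests on roller bearings moving at rim speed v = sqrt(g*r), with loss P = C_rr * F * v. The inputs are assumptions: an ISS mass of roughly 420 t for a crew of about 7, and a rolling coefficient of 0.0015. `bearing_loss_per_person` is just a name for this sketch.

```python
import math

G = 9.81  # m/s^2, target spin gravity at the rim

def bearing_loss_per_person(radius_m, mass_per_person_kg, rolling_coeff):
    """Rolling-resistance power (W) per person, assuming the rim's full
    centripetal load (m * g) rests on roller bearings moving at rim speed."""
    rim_speed = math.sqrt(G * radius_m)      # v = sqrt(g r) gives 1 g at the rim
    load = mass_per_person_kg * G            # centripetal force per person, N
    return rolling_coeff * load * rim_speed  # P = C_rr * F * v

# Assumed inputs: ISS ~420 t for a crew of ~7, rolling coefficient ~0.0015.
p = bearing_loss_per_person(1000.0, 420_000 / 7, 0.0015)
print(f"{p / 1e3:.0f} kW per person")
```

That lands in the same ballpark as the ~100 kW figure. Since P scales with sqrt(r) at fixed g and fixed mass per person, a bigger cylinder makes it worse, not better.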

After being "launched" from the despinner, you would find yourself hovering stationary next to the ring.

Air resistance.

That is, however, basically the system I proposed near the end, for use near the center of a cylinder where speeds would be low.

This happened to Explorer 1, the first satellite launched by the United States in 1958. The elongated body of the spacecraft had been designed to spin about its long (least-inertia) axis but refused to do so, and instead started precessing due to energy dissipation from flexible structural elements.

picture: https://en.wikipedia.org/wiki/Explorer_1#/media/File:Explorer1.jpg
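Why dissipation pushes the spin toward the maximum-inertia axis fits in a few lines: with no external torque, angular momentum L is conserved, and rotational energy about a principal axis is E = L^2/(2I). Flexing structures lose energy but not L, so the body drifts toward the axis with the largest I, where E is lowest. The moments of inertia below are hypothetical, not Explorer 1's real values.

```python
def rotational_energy(L, I):
    """Kinetic energy (J) of a rigid body spinning about a principal axis
    with moment of inertia I (kg*m^2) at angular momentum L (kg*m^2/s)."""
    return L**2 / (2 * I)

# Hypothetical principal moments for a long, thin satellite.
I_long_axis = 0.5   # least inertia: spinning about the long axis
I_transverse = 5.0  # greatest inertia: tumbling end over end
L = 10.0            # angular momentum, conserved since no external torque acts

e_long = rotational_energy(L, I_long_axis)
e_flat = rotational_energy(L, I_transverse)
print(f"long-axis spin: {e_long:.0f} J, flat spin: {e_flat:.0f} J")
```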

That works well enough, but a Vital 200S currently costs $160 at Amazon, less than the cheapest variant of the thing you linked, and has a slightly higher max air delivery rate, some granular carbon in the filter, and features like power buttons. The Vital 200S on speed 2 has similar power usage and slightly less noise, but less airflow, though a carbon layer always reduces airflow. It doesn't have a rear intake, so it can be placed against a wall. It also has a washable prefilter.

Compared to what you linked, the design in this post has 3 filters instead of 2, some noise blocking, and a single large fan instead of multiple fans. Effective floor area usage should be slightly less, but of course it has to go together with shelving for that.

First, we have to ask: what's the purpose? Generally aircraft try to get up to their cruise speed quickly and then spend most of their time cruising, and you optimize for cruise first and takeoff second. Do we want multiple cruise speeds, eg a supersonic bomber that goes slow some of the time and fast over enemy territory? Are we designing a supersonic transport and trying to reduce fuel usage getting up to cruise?

And then, there are 2 basic ways you can change the bypass ratio: you can change the fan/propeller intake area, or you can turn off turbines. The V-22 has a driveshaft through the wing to avoid crashes if an engine fails; in theory you could turn off an engine while powering the same number of propellers, which is sort of like a variable bypass ratio. If you have a bunch of turbogenerators inside the fuselage, powering electric fans elsewhere, then you can shut some down while powering the same number of fans. There are also folding propellers.

The question is always, "But is that better?"

Yes, helium costs would be a problem for large-scale use of airships. Yes, it's possible to use hydrogen in airships safely. This has been noted by many people.

Hydrogen has some properties that make it relatively safe:

- it's light so it rises instead of accumulating on the ground or around a leak

- it has a relatively high ignition temperature

and some properties that make it less safe:

- it has a wide range of concentrations where it will burn in air

- fast diffusion, that is, it mixes with air quickly

- it leaks through many materials

- it embrittles steel

- it causes some global warming if released

Regardless, the FAA does not allow using hydrogen in airships, and I don't expect that to change soon. Especially since accidents still happen despite the small number of airships.

In any case, the only uses of airships that are plausibly economical today are: advertising and luxury yachts for the wealthy. Are those things that you care about working towards?

see also: These Are Your Doges, If It Please You

IKEA already sells air purifiers; their models just have a very low flow rate. There are several companies selling various kinds of air purifiers, including multiple ones with proprietary filters.

What all this says to me is, the problem isn't just the overall market size.

Apart from potential harms of far-UVC, it's good to remove particulate pollution anyway. Is it possible that "quiet air filters" is an easier problem to solve?

I'm not convinced that far-UVC is safe enough around humans to be a good idea. It's strongly absorbed by proteins so it doesn't penetrate much, but:

- It can make reactive compounds from organic compounds in air.

- It can produce ozone, depending on the light. (That's why mercury vapor lamps block the 185nm emission.)

- It could potentially make toxic compounds when it's absorbed by proteins in skin or eyes.

- It definitely causes degradation of plastics.

And really, what's the point? Why not just have fans sending air to (cheap) mercury vapor lamps in a contained area where they won't hit people or plastics?

As you were writing that, did you consider why chlorhexidine might cause hearing damage?

https://en.wikipedia.org/wiki/Chlorhexidine#Side_effects

It can also obviously break down to 4-chloroaniline and hexamethylenediamine, which are rather bad. This was not considered in the FDA's evaluation of it.

If you just want to make the tooth surface more negatively charged...a salt of poly(acrylic acid) seems better for that. And I think some toothpastes have that.

EDTA in toothpaste? It chelates iron and calcium. Binding iron can prevent degradation during storage, so a little bit is often added.

Are you talking about adding a lot more? For what purpose? In situations where you can chelate iron to prevent bacterial growth, you can also just kill bacteria with surfactants. Maybe breaking up certain biofilms held together by Ca? EDTA doesn't seem very effective for that for teeth, but also, chelating agents that could strip Ca from biofilms would also strip Ca from teeth. IIRC, high EDTA concentration was found to cause significant amounts of erosion.

I wouldn't want to eat a lot of EDTA, anyway. Iminodisuccinate seems less likely to have problematic metabolites.

You can post on a subreddit and get replies from real people interested in that topic, for free, in less than a day.

Is that valuable? Sometimes it is, but...not usually. How much is the median comment on reddit or facebook or youtube worth? Nothing?

In the current economy, the "average-human-level intelligence" part of employees is only valuable when you're talking about specialists in the issue at hand, even when that issue is being a general personal assistant for an executive rather than a technical engineering problem.

Triplebyte? You mean, the software job interviewing company?

- They had some scandal a while back where they made old profiles public without permission, and some other problems that I read about but can't remember now.

- They didn't have a better way of measuring engineering expertise; they just did the same leetcode interviews that Google/etc did. They tried to be as similar as possible to existing hiring at multiple companies; the idea wasn't better evaluation but reducing redundant testing. But companies kind of like doing their own testing.

- They're gone now, acquired by Karat, which seems to be selling companies a way to make their own leetcode interviews using Triplebyte's system, thus defeating the original point.

Good news: the post is both satire and serious, at the same time but on different levels.

Here are some publicly traded large companies that do a lot of coal mining:

- https://finance.yahoo.com/quote/BHP/

- https://finance.yahoo.com/quote/RIO/

- https://finance.yahoo.com/quote/AAL.L/

- https://finance.yahoo.com/quote/COALINDIA.NS/

- https://finance.yahoo.com/quote/GLCNF/

Coal India did pretty well, I guess. The others, not so much.

Nice post Sarah.

If Alzheimer's is ultimately caused by repressor binding failure, that could explain overexpression of the various proteins mentioned.

in short, your claim: "The cost of aluminum die casting and stamped steel is, on Tesla's scale, similar" both seems to miss the entire point and run against literally everything I have seen written about this. You need citations for this claim, I am not going to take your word for it.

OK, here's a citation then: https://www.automotivemanufacturingsolutions.com/casting/forging/megacasting-a-chance-to-rethink-body-manufacturing/42721.article

Here I would be careful since investments, especially in a particular model generation of welding robots, are depreciated. For forming processes, the depreciation can even extend over three or four model generations. This technological write-off – bear in mind that this is not tax-related – runs over a timeframe of 30 years. For the OEMs that are already using these machines for existing vehicle generations, the use of the new technology makes no sense. On the other hand, thanks to its greenfield approach, Tesla can save itself these typical investments in shell-type construction. In a brownfield, it would be operationally nonsensical not to keep using long depreciated machinery. So, in this situation, I would not support the 20-30% cost savings that were cited.

With die casting, one important aspect is that there is a noticeable reduction in the service life of the die-casting moulds. Due to so-called thermal shock, the rule of thumb is that a die-casting mould is good for 100,000-150,000 shots. By contrast, one forming tool is used to make 5m-6m parts. So, we are talking about a factor of 20 to 30. There is quite clearly a limited volume range for which the casting-intensive solution would be appropriate. To me, aluminium casting holds little appeal for very small and very large volumes. Especially for mass production in the millions, you would need about six or seven of these expensive die-casting moulds. We estimate that the die-casting form for the single part, rear-end of a Tesla would weigh about 80-100 tonnes. This translates to huge costs for handling and the peripheral equipment, in the form of cranes, for example. Die-casting moulds also pose technological obstacles and hazards. The leakage of melted material is cited as one example. The risks of not even being able to operate in some situations are not negligible.

Or the geometry of the frame was insufficiently optimized for vertical shear. I do not understand how you reached this conclusion.

No. If aluminum doesn't have weak points, it stretches/bends before breaking. The Cybertruck hitch broke off cleanly without stretching. Therefore there was a weak point.

By nothing I mean that the estimate for their marketing spend in 2022 (literally all marketing to include PR if there was any at all) was $175k.

I'm skeptical of that. PR firms don't report to Vivvix.

Here are the costs from the above link:

It's worth noting that countries (such as India) have the option of simply not respecting a patent when the use is important and the fees requested are unreasonable. Also, patents aren't international; it's often possible to get around them by simply manufacturing and using a chemical in a different country.

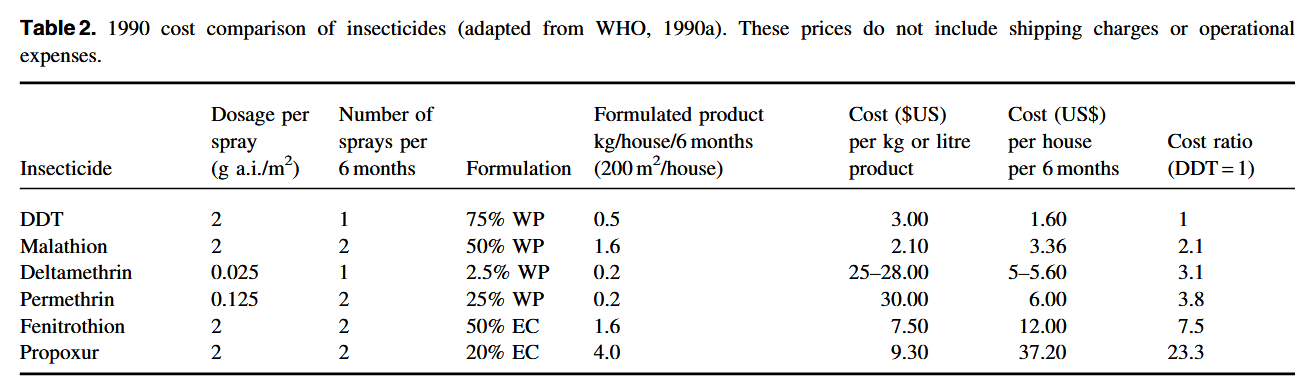

The only advantage DDT has over those is lower production cost, but the environmental harms per kg of DDT are greater than the production cost savings, so using it is just never a good tradeoff.

As I said, if DDT was worth using there, it was worth spending however much extra money it would have been to spray with other things instead. If it wasn't worth that much money, it wasn't worth spraying DDT.

And regarding "environmental harms," from personal experience scratching myself bloody as a kid from itchy bites after going to the park in the evening, I would extinct a dozen species if mosquitoes went down with them.

The biggest problem with DDT is that it is bad for humans.

While I still disagree with your interpretation of that post, I don't want to argue over the meaning of a post from that blog. There are actual books written about the history of titanium. I'm probably as familiar with it as the author of Construction Physics, and saying A-12-related programs were necessary for development of titanium usage is just wrong. People who care about that and don't trust my conclusion should go look up good sources on their own, more-extensive ones.

If it wasn't for the A-12 project (and its precursors and successors), then we simply wouldn't be able to build things out of titanium.

That is not an accurate summary of the linked article.

In 1952, another titanium symposium was held, this one sponsored by the Army’s Watertown Arsenal. By then, titanium was being manufactured in large quantities, and while the prior symposium had been focused on laboratory studies of titanium’s physical and chemical properties, the 1952 symposium was a “practical discussion of the properties, processing, machinability, and similar characteristics of titanium”. While physical characteristics of titanium still took center stage, there was a practical slant to the discussions – how wide a sheet of titanium can be produced, how large an ingot of it can be made, how can it be forged, or pressed, or welded, and so on. Presentations were by titanium fabricators, but also by metalworking companies that had been experimenting with the metal.

That's before the A-12.

In 1966, another titanium symposium was held, this one sponsored by the Northrop Corporation. By this time, titanium had been used successfully for many years, and the purpose of this symposium was to “provide technical personnel of diversified disciplines with a working knowledge of titanium technology.” This time, the lion’s share of the presentations are by aerospace companies experienced in working with the metal, and the uncertain air that existed in the 1952 symposium is gone.

At that point, the A-12 program was still classified and the knowledge gained from it was not widely shared.

I had an interview with one of these organizations (that will remain unnamed) where the main person I was talking to was really excited about a bunch of stupid bullshit ideas (for eg experimental methods) that, based on their understanding of them, must have come from either university press releases or popular science magazines like New Scientist. I was trying to find a "polite in whatever culture these people have" way to say "this is not useful, I'd like to explain why but it will take a while, here are better things" but doing that eloquently is one of my weak points.

From what I've seen of the people there, ARPA-E has some smart people ("ordinary smart", not geniuses) but the ARPAs are still very tied to the university system, with a heavy reliance on PhD credentials.

I think the basic idea of using more steps of smaller size is worth considering. Maybe it reduces overall drift, but I suspect it doesn't, because my view is:

Models have many basins of attraction for sub-elements. As model capability increases continuously, there are nearly-discrete points where aspects of the model jump from 1 basin to another, perhaps with cascading effect. I expect this to produce large gaps from small changes to models.
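A toy analogy for that kind of jump, not a model of neural network training: one parameter x sits in a tilted double-well potential V(x) = x^4/4 - x^2/2 - a*x, and the tilt a is increased in small steps while gradient descent keeps tracking the current minimum. The left well vanishes in a saddle-node bifurcation at a = 2/(3*sqrt(3)), about 0.385, so a 0.002 change in a produces a jump of well over 1 in x. The step sizes, learning rate, and iteration counts are arbitrary choices for this sketch, and `track_minimum` is a hypothetical name.

```python
def track_minimum(a_values, x0=-1.0, lr=0.1, steps=3000):
    """Follow a local minimum of V(x) = x**4/4 - x**2/2 - a*x as the tilt `a`
    is increased slowly, warm-starting gradient descent from the previous x."""
    x = x0
    path = []
    for a in a_values:
        for _ in range(steps):
            x -= lr * (x**3 - x - a)  # dV/dx = x^3 - x - a
        path.append(x)
    return path

a_values = [i * 0.002 for i in range(251)]  # a from 0 to 0.5 in small steps
path = track_minimum(a_values)
jumps = [abs(path[i + 1] - path[i]) for i in range(len(path) - 1)]
i_max = max(range(len(jumps)), key=jumps.__getitem__)
print(f"largest jump {jumps[i_max]:.2f} "
      f"between a={a_values[i_max]:.3f} and a={a_values[i_max + 1]:.3f}")
```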

Sure, some people add stuff like cheese/tomatoes/ham to their oatmeal. Personally I think they go better with rice, but de gustibus non disputandum est.

The scope of our argument seems to have grown beyond what a single comment thread is suitable for.

AI safety via debate was published 2 years before Writeup: Progress on AI Safety via Debate, so the latter post should be more up-to-date. I think that post does a good job of considering potential problems; the issue is that I think the noted problems & assumptions can't be handled well, make that approach very limited in what it can do for alignment, and aren't really dealt with by "Doubly-efficient debate". I don't think such debate protocols are totally useless, but they're certainly not a "solution to alignment".