Foresight for AGI Safety Strategy: Mitigating Risks and Identifying Golden Opportunities

post by jacquesthibs (jacques-thibodeau) · 2022-12-05T16:09:46.128Z · LW · GW · 6 comments

This post is about why I think we should use strategic foresight for AGI governance work. I am writing this without having read all the AGI governance / strategy reports and reading materials. I was planning on putting more effort into this post, but this is a better-than-nothing-at-all post.

In the worst case, this might end up being a signal for someone in the comments to point me to work that already exists. In the best case, this kicks off some work that I think could be quite valuable.

TLDR: I feel like there should be more AGI governance people doing strategic foresight: planning for several scenarios, identifying opportunities, and then preparing policy documents and demos that take advantage of each scenario. The purpose is to identify risks and opportunities by looking at weak signals and imagining how AI may plausibly interact with various things in a complex future world. You imagine various plausible futures and try to design a strategy that is robust across them. It’s essentially impossible to predict the future, but you can still prepare for various outcomes. My hope is that this kind of work can help us take immediate, short-term, and longer-term actions that increase our odds of success.

In this post, I will cover the following:

- What is Strategic Foresight?

- Strategic Foresight applied to AGI risk

- Why should we be doing Strategic Foresight for AGI risk?

- How can we adapt known strategic foresight methods for the AGI risk?

- Call to action

Before I start, I want to say that I know there's a lot of effort being put into the AGI governance space. Part of my goal here is to start a conversation as someone with both technical safety and government experience.

As an addition to this post, I suggest having a look at this post [EA · GW] by Gideon Futerman, which is pointing to a similar-ish thing.

What is strategic foresight?

Foresight can be used to explore plausible futures and identify potential challenges. It helps us better understand the system we are dealing with, how the system could evolve, and potential surprises that may arise.

Governments use foresight to help policy development and decision-making. Looking to the future helps us prepare for tomorrow’s problems and not just react to yesterday’s problems.

Foresight is not forecasting.

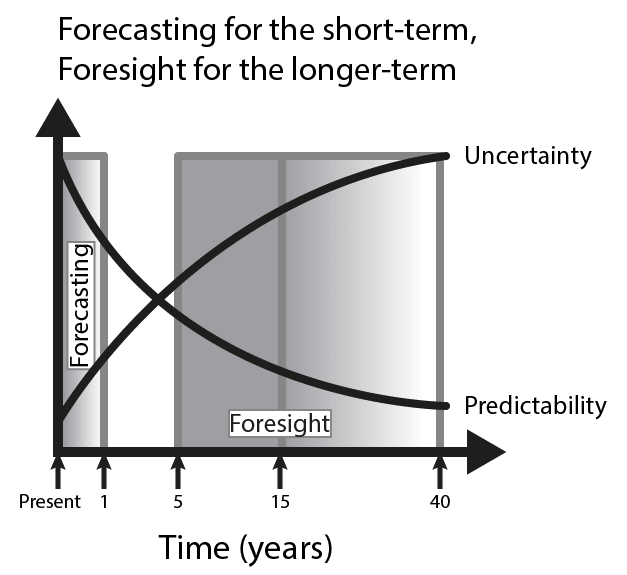

When I first started reading about AI forecasting, I was a little confused about the terminology. In the EA / AI Risk space, most people seem to use the word “forecasting” even when talking about the year 2040+. People I talked to outside of EA seemed to claim that “forecasting” only works for short-term predictions, and that you are potentially being overconfident about how things will evolve if you try to predict further than 1 year into the future.

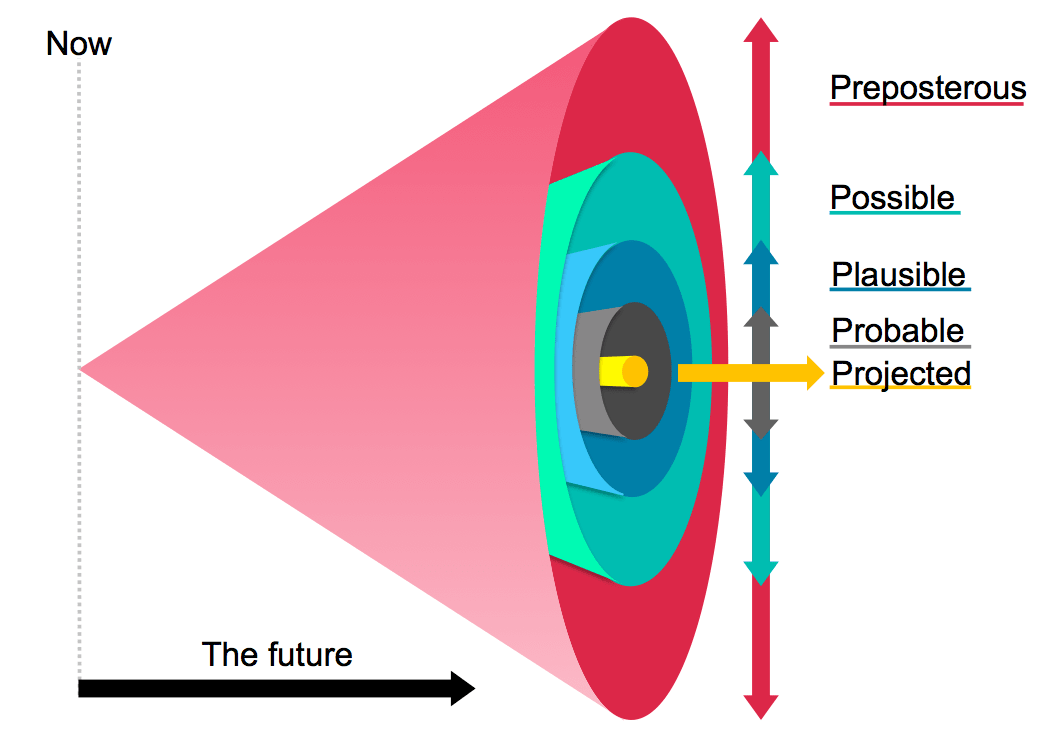

Forecasting is usually seen as a form of prediction, while foresight focuses on looking at the cone of plausible outcomes (so that, for example, an organization can be as robust as possible across plausible scenarios). With foresight, you basically just assume that you won’t be able to predict exact outcomes, and so you try to be as robust as possible.

Foresight does not try to predict the future as “forecasting” does.

In some spaces (though perhaps not in the AI Risk space), forecasting will try to take past data and predict what will happen in the future. This can be problematic because systems can change, and the assumptions hidden in the data may no longer hold up. Forecasters often look at relevant trends and past data, which can be quite good at predicting the near, expected future. However, forecasters may overlook the weak signals that can become change drivers and lead to a massive change in the system as they try to predict further into the future. Foresight pays special attention to these weak signals that may eventually lead to disruptive change.

However, it occurred to me that people within EA / AI Risk are instead doing some mix of forecasting and foresight. That said, I think it’s useful to think in the Strategic Foresight framing, since our goal is not to predict precisely when AGI will happen; the goal is to be safe whenever it does arrive, to take advantage of any golden opportunities, and to mitigate any risks. So, for the purpose of this post, I’ll keep talking about the difference between “(near-term) forecasting” and foresight.

(Edit: please look at my attempt to clarify this in the comments [LW(p) · GW(p)].)

People can forecast a couple of days to decades in the future, but I’ve heard the claim that it’s only really “effective” within a 1-year timeframe. This is potentially blasphemy to some of the forecasters in the community, so I'd like to hear everyone’s thoughts on this. I expect that forecasting, at the very least, will help us learn a lot about how AGI might come about.

Foresight, however, works much better than forecasting in a 5- to 15-year timeframe (it’s possible to keep using foresight beyond 15 years, though its effectiveness may go down with each additional year into the future, especially for our unpredictable AGI future). Instead of predicting one specific outcome, foresight tries to uncover scenarios that fit into a cone of plausibility and then identify the opportunities that can be taken advantage of in each scenario.

Foresight tries to overcome some of the difficulties that come with forecasting by testing whether the assumptions under which the past data held will still hold in the future. This is of the utmost importance when things are changing in fundamental ways.

Foresight is a method that allows us to look at the current system and see different ways it may evolve and then plan for the various outcomes proactively. The different paths a system could take all have varying degrees of complexity.

The more complex a problem, the harder it is to predict what might happen. This is one reason why foresight is valuable: it helps you think through all of these different and complex paths and create an action plan for how you might respond to each situation if it arises. This is quite different from coming up with an action plan under urgency, when the project will often be rushed.

Imagine a situation where a minister asks for the development of a policy on image generation and gives policymakers 3 months to come up with a policy document. It’s quite likely that 3 months is not enough time to develop an exceptional policy. Now imagine the same scenario, except that 4 years earlier you had used foresight to think through how image generation policy could include (useful) AGI safety regulation, had created some “scary” demos, and had an action plan for the steps to take. That is one of the things I'm hoping to get out of this type of work.

Unfortunately, foresight can be poorly implemented

When I was looking into this stuff a few years ago, I got the impression that a lot of foresight work is done poorly. There wasn't enough effort in making things rigorous.

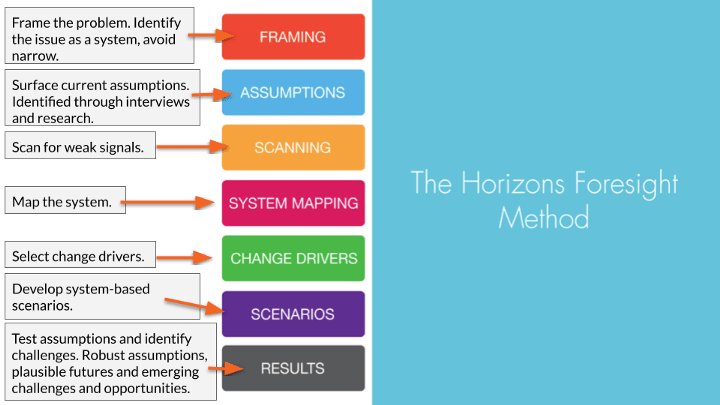

Fortunately, some organizations have created rigorous foresight methods. The top contenders I came across were Policy Horizons Canada within the Canadian Federal Government and the Centre for Strategic Futures [EA · GW] within the Singaporean Government. Apparently, Europe likes foresight work as well.

Policy Horizons Canada explores plausible futures and identifies the challenges and opportunities that may emerge to create policies that are robust across a range of plausible futures. Please look at their training material if you want to know more about their method.

There are two main things I’d like to highlight about Horizons’ Foresight Method:

- Horizons’ Foresight Method is designed to tackle complex public policy problems rigorously and systemically.

- Horizons considers the mental models of different stakeholders essential when using foresight to inform policy development.

Horizons has avoided the trap of relying too much on intuition when throwing foresight methods at a problem and has created a rigorous, systemic, and participatory foresight method that focuses on exploring the future of a policy issue. That said, I think their approach could still be improved and tailored to our specific use case.

Strategic Foresight applied to AGI risk

Why should we be doing Strategic Foresight for AGI risk?

I think Strategic Foresight work would allow us to better identify opportunities for getting governments and organizations on board. The way I see it is that if we have a set of policy documents, demos or plans that we have prepared well in advance for several plausible scenarios, we are much better placed to move fast when the time comes.

Secondly, there was a lot of insight gained from the work done on reports like "The Malicious Use of Artificial Intelligence: Forecasting, Prevention, and Mitigation." I believe a big workshop like this with people from different backgrounds and perspectives would be a great opportunity to identify opportunities we might not have identified in our respective silos. This is a great thing about some foresight methods: putting a bunch of people with different backgrounds in a room and approaching the problem from every angle. This would also serve as an opportunity to connect with people working on different parts of the problem.

Finally, the big value we get out of doing this type of work is to be prepared for many scenarios so that our entire approach is as robust as possible. It’s worth thinking about doing things that are robust across scenarios, like building trust with governments and organizations (which a lot of people in governance already do).

What we are facing is a wicked problem. There are many moving parts, and things are constantly changing and moving fast.

How can we adapt known strategic foresight methods for the AGI risk?

Unfortunately, I don't have the answer to the above question. However, here are some initial rough thoughts:

- We should have a solid set of predictions of what might happen in the coming years. For example, when will model x come into play? When will company z make its move into productizing models? Which fields of work will be greatly impacted by AI, and can we work with people in those fields to communicate with policymakers?

- What are all the levers we can pull?

- What are the different scenarios we can prepare for? What are the different ways we can prepare for each scenario? What are some early signs that tell us we are moving toward a particular scenario?

- In terms of convincing policymakers in countries that matter, can we gain credibility through the Brussels Effect?

- Can we identify high-impact opportunities where technical and governance researchers can collaborate?

- Where are the weak signals that we can use to identify opportunities for action?

- Which opportunities might allow us to extend timelines?

- What are the opportunities for reducing the risk of AGI being used by a malicious actor?

- We should be thinking of using strategies that seem robust across scenarios. For example, building trust / political capital.

Maybe I’m unaware of the work that is being done, but I feel like there is a lack of work on identifying near-term opportunities where we can move the needle. I would like more specific plans we can quickly act on when a given scenario arises, along with the pros and cons of each possible action.

Another issue we might face if we don’t do this thinking in advance is the risks that come with taking specific actions. We do not want to find ourselves in a specific scenario where we are just winging it and figuring out which actions to take in the moment. This might lead to situations where alignment people completely lose the trust of different stakeholders.

Which leads me to the FTX situation (ok, bear with me). Foresight is not only about identifying opportunities; it is also about identifying risks. There’s been a lot of discussion about funding this past month and whether we should have put so much faith into a single large funding source. What can we do to prevent this from happening again? Well, I think we would have been much more likely to anticipate the FTX scenario had we done the work to identify such risks. Not only that, but we also need to know how to act in such a scenario so that we minimize the negative impact.

I think it’s worth mentioning that Rethink Priorities did do work like this [EA · GW] and identified FTX as a big risk. I applaud them for this, but let’s also make sure we take it even further than they did going forward.

Here’s what they had to say about it:

> Prior to the news breaking this month, we already had procedures in place intended to mitigate potential financial risks from relying on FTX or other cryptocurrency donors. Internally, we've always had a practice of treating pledged or anticipated cryptocurrency donations as less reliable than other types of donations for fundraising forecasting purposes, simply due to volatility in that sector. As a part of regular crisis management exercises, we also engaged in an internal simulation in August around the possibility of FTX funds no longer being available. We did this exercise due to the relative size and importance of the funding to us, and the base failure rates of cryptocurrency projects, not due to having non-public information about FTX or Bankman-Fried.
>
> In hindsight, we believe we could have done more to share these internal risk assessments with the rest of the EA community. Going forward, we are reevaluating our own approach to risk management and the assessment of donors, though we do not believe any changes we will make would have caught this specific issue.

In the case of Rethink, they talk about “crisis management”, “internal simulations” and “risk assessments/management”, but I think Strategic Foresight can feed into that.

Call to action

I have some experience in this sort of work (workshops and strategic foresight), but I am currently spending most of my time working on technical alignment. That said, I'd like to extend an invitation to anyone interested in doing this sort of work to reach out to me. I'd love to help out in any way I can.

I'll part with this: we should be tackling AGI risk with a level of desperation and urgency that allows us to access parts of our brain that create potentially unusual but effective strategies (while avoiding any naive utilitarian traps [? · GW]). And I want to make one point absolutely clear: if we do use approaches like Strategic Foresight, we should be doing it in a way that is "actually optimizing for solving the problem [LW · GW]."

6 comments

comment by Marius Hobbhahn (marius-hobbhahn) · 2022-12-05T18:25:27.566Z · LW(p) · GW(p)

I'm not sure I actually understand the distinction between forecasting and foresight. For me, most of the problems you describe sound either like forecasting questions or AI strategy questions that rely on some forecast.

Your two arguments for why foresight is different than forecasting are

a) some people think forecasting means only long-term predictions and some people think it means only short-term predictions.

My understanding of forecasting is that it is not time-dependent, e.g. I can make forecasts about an hour from now or for a million years from now. This is also how I perceive the EA /AGI-risk community to use that term.

b) foresight looks at the cone of outcomes, not just one.

My understanding of forecasting is that you would optimally want to predict a distribution of outcomes, i.e. the cone but weighted with probabilities. This seems strictly better than predicting the cone without probabilities since probabilities allow you to prioritize between scenarios.

I understand some of the problems you describe, e.g. that people might be missing parts of the distribution when they make predictions and they should spread them wider but I think you can describe these problems entirely within the forecasting language and there is no need to introduce a new term.

LMK if I understood the article and your concerns correctly :)

↑ comment by jacquesthibs (jacques-thibodeau) · 2022-12-05T19:08:32.494Z · LW(p) · GW(p)

So, my difficulty is that my experience in government and my experience in EA-adjacent spaces have totally confused my understanding of the jargon. I'll try to clarify:

- In the context of my government experience, forecasting is explicitly trying to predict what will happen based on past data. It does not fully account for fundamental assumptions that might break due to advances in a field, changes in geopolitics, etc. Forecasts are typically used to inform one decision. They do not focus on being robust across potential futures or try to identify opportunities we can take to change the future.

- In EA / AGI Risk, it seems that people are using "forecasting" to mean something somewhat like foresight, but not really? Like, if you go on Metaculus, they are making long-term forecasts in a superforecaster mindset, but are perhaps expecting their long-term forecasts to be as good as the short-term forecasts. I don't mean to sound harsh; what they are doing is useful and can still feed into a robust plan for different scenarios. However, I'd say what is mentioned in reports typically does lean a bit more into (what I'd consider) foresight territory sometimes.

- My hope: instead of only using "forecasts/foresight" to figure out when AGI will happen, we use it to identify risks for the community, potential yellow/red light signals, and golden opportunities where we can effectively implement policies/regulations. In my opinion, using a "strategic foresight" approach enables us to be a lot more prepared for different scenarios (and might even have identified a risk like SBF much sooner).

> My understanding of forecasting is that you would optimally want to predict a distribution of outcomes, i.e. the cone but weighted with probabilities. This seems strictly better than predicting the cone without probabilities since probabilities allow you to prioritize between scenarios.

Yes, in the end, we still need to prioritize based on the plausibility of a scenario.

> I understand some of the problems you describe, e.g. that people might be missing parts of the distribution when they make predictions and they should spread them wider but I think you can describe these problems entirely within the forecasting language and there is no need to introduce a new term.

Yeah, I care much less about the term/jargon than the approach. In other words, what I'm hoping to see more of is coming up with a set of scenarios and forecasting across the cone of plausibility (weighted by probability, impact, etc.) so that we can create a robust plan and identify opportunities that improve our odds of success.
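To make the "weighted by probability, impact" idea concrete, here is a minimal Python sketch. The scenario names, probabilities, and impact scores are made-up placeholders rather than estimates from this thread, and multiplying probability by impact is only one simple assumed way of combining the two.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    name: str           # hypothetical scenario label
    probability: float  # subjective plausibility in [0, 1] (placeholder value)
    impact: float       # how much the scenario matters, arbitrary units (placeholder)


def prioritize(scenarios):
    """Return scenarios sorted by probability * impact, highest first."""
    return sorted(scenarios, key=lambda s: s.probability * s.impact, reverse=True)


# Illustrative inputs only; none of these numbers come from the post.
scenarios = [
    Scenario("compute licensing regime emerges", probability=0.5, impact=3.0),
    Scenario("labs race with little regulation", probability=0.25, impact=8.0),
    Scenario("major funder collapses", probability=0.125, impact=4.0),
]

for s in prioritize(scenarios):
    print(f"{s.name}: priority {s.probability * s.impact:.2f}")
```

A ranking like this only orders the cone of plausibility; it does not replace the work of preparing a plan for each scenario, including the low-probability ones.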

↑ comment by Marius Hobbhahn (marius-hobbhahn) · 2022-12-05T21:33:28.555Z · LW(p) · GW(p)

thanks for clarifying

comment by Jelle Donders (jelle-donders) · 2024-01-28T15:49:10.258Z · LW(p) · GW(p)

Thanks for this post! I've been thinking a lot about AI governance strategies and their robustness/tractability lately, much of which feels like a close match to what you've written here.

For many AI governance strategies, I think we are more clueless than many seem to assume about whether a strategy ends up positively shaping the development of AI or backfiring in some foreseen or unforeseen way. There are many crucial considerations [? · GW] for AI governance strategies; miss one or get one wrong and the whole strategy can fall apart, or become actively counterproductive. What I've been trying to do is:

- Draft a list of trajectories for how the development and governance of AI could unfold up until we get to TAI [? · GW], estimating the likelihood and associated xrisk from AI for each trajectory.

  - e.g. "There end up being no meaningful international agreements or harsh regulation, and labs race each other until TAI. Probability of trajectory: 10%, Xrisk from AI for scenario: 20%."

- Draft a list of AI governance strategies that can be pursued.

  - e.g. "push for slowing down frontier AI development by licensing the development of large models above a compute threshold and putting significant regulatory burden on them".

- For each combination of trajectory and strategy, assess whether we are clueless about what the sign of the impact of said strategy would be, or if the strategy would be robustly good (~predictably lowers xrisk from AI in expectation), at least for this trajectory. A third option would of course be robustly bad.

  - e.g. "Clueless, it's not clear which consideration should have more weight, and backfiring could be as bad as success is good.

    - + This strategy would make this trajectory less likely and possibly shift it to a trajectory with lower xrisk from AI.

    - - Getting proper international agreement seems unlikely for this pessimistic trajectory. Partial regulation could disproportionately slow down good actors, or lead to open source proliferation and increase misuse risk."

- Try to identify strategies that are robust across a wide array of trajectories.
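The steps above amount to filling in a trajectory-by-strategy matrix and then filtering it. A minimal Python sketch of that bookkeeping follows, assuming a three-valued assessment (robustly good, robustly bad, clueless). The trajectory names, strategy names, and every assessment in the table are hypothetical placeholders, not conclusions.

```python
from enum import Enum


class Sign(Enum):
    GOOD = "robustly good"
    BAD = "robustly bad"
    CLUELESS = "clueless"


# Hypothetical trajectories and strategies, for illustration only.
trajectories = ["race to TAI, no agreements", "strong international treaty"]
strategies = ["compute-threshold licensing", "build trust with policymakers"]

# One assessment per (strategy, trajectory) pair; all values are placeholders.
assessment = {
    ("compute-threshold licensing", "race to TAI, no agreements"): Sign.CLUELESS,
    ("compute-threshold licensing", "strong international treaty"): Sign.GOOD,
    ("build trust with policymakers", "race to TAI, no agreements"): Sign.GOOD,
    ("build trust with policymakers", "strong international treaty"): Sign.GOOD,
}


def robust_strategies(strategies, trajectories, assessment):
    """Keep strategies assessed as robustly good on every listed trajectory."""
    return [
        s for s in strategies
        if all(assessment[(s, t)] is Sign.GOOD for t in trajectories)
    ]


print(robust_strategies(strategies, trajectories, assessment))
```

Requiring "robustly good" on every trajectory is the strictest possible filter. A softer variant could weight each trajectory by its estimated probability and xrisk, at the cost of leaning harder on those estimates.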

I'm just winging it without much background in how such foresight-related work is normally done, so any thoughts or feedback on how to approach this kind of investigation, or what existing foresight frameworks you think would be particularly helpful here are very much appreciated!

↑ comment by jacquesthibs (jacques-thibodeau) · 2024-01-29T17:51:12.268Z · LW(p) · GW(p)

> Any thoughts or feedback on how to approach this kind of investigation, or what existing foresight frameworks you think would be particularly helpful here are very much appreciated!

As I mentioned in the post, to my knowledge the Canadian and Singaporean governments are the best in this space.

> Fortunately, some organizations have created rigorous foresight methods. The top contenders I came across were Policy Horizons Canada within the Canadian Federal Government and the Centre for Strategic Futures [EA · GW] within the Singaporean Government.

As part of this kind of work, you want to be doing scenario planning multiple levels down. How does AI interact with VR? Once you have that, how does it interact with security and defence? How does this impact offensive work? What are the geopolitical factors that work their way in? Does public sentiment through job loss impact the development of these technologies in some specific ways? For example, you might get more powerful pushback from more distinguished, intelligent, heavily regulated industries with strong union support.

Aside from that, you might want to reach out to the Foresight Institute, though I'm a bit more skeptical that their methodology will help here (though I'm less familiar with it and like the organizers overall).

I also think that looking at the Malicious AI Report from a few years ago for some inspiration would be helpful, particularly because they held a workshop with people of different backgrounds. There might be some better, more recent work I'm unaware of.

Additionally, I'd like to believe that this post was a precursor to Vitalik's post on d/acc (defensive accelerationism), so I'd encourage you to look at that.

Another thing to look into is companies in the cybersecurity space. I think we'll be getting more AI Safety-pilled orgs in this area soon. Lekara is an example of this: I met two employees, and they essentially told me that the vision is to embed themselves into companies and then continue to figure out how to make AI safer and the world more robust once they are in that position.

There are also more organizations popping up, like the Center for AI Policy, and my understanding is that Cate Hall is starting an org that focuses on sensemaking (and grantmaking) for AI Safety.

If you or anyone is interested in continuing this kind of work, send me a DM. I'd be happy to help provide guidance in the best way I can.

Lastly, I will note that I think people have generally avoided this kind of work because "if you have a misaligned AGI, well, you are dead no matter how robust you make the world or wtv you plan around it." I think this view is misguided and I think you can potentially make our situation a lot better by doing this kind of work. I think recent discussions on AI Control [LW · GW] (rather than Alignment) are useful in questioning previous assumptions.

comment by Darren McKee · 2022-12-06T17:45:17.730Z · LW(p) · GW(p)

Great post! I definitely think that the use of strategic foresight is one of the many tools we should be applying to the problem.