Comments

Makes sense to me, thanks for the clarifications.

I found working through the details of this very informative. For what it's worth, I'll share here a comment I made internally at Timaeus about it, which is that in some ways this factorisation into and reminds me of the factorisation into the map from a model to its capability vector (this being the analogue of ) and the map from capability vectors to downstream metrics (this being the analogue of ) in Ruan et al's observational scaling laws paper.

In your case the output metrics have an interesting twist, in that you don't want to just predict performance but also in some sense variations of performance within a certain class (by e.g. varying the prompt), so it's some kind of "stable" latent space of capabilities that you're constructing.

Anyway, factoring the prediction of downstream performance/capabilities through some kind of latent space object in your case, or latent spaces of capabilities in Ruan et al's case, seems like a principled way of thinking about the kind of object we want to put at the center of interpretability.

As an entertaining aside: as an algebraic geometer the proliferation of 's i.e. "interpretability objects" between models and downstream performance metrics reminds me of the proliferation of cohomology theories and the search for "motives" to unify them. That is basically interpretability for schemes!

I is evaluated on utility for improving time-efficiency and accuracy in solving downstream tasks

There seems to be a gap between this informal description and your pseudo-code, since in the pseudo-code the parameters only parametrise the R&D agent . On the other hand is distinct and presumed to be not changing. At first reasoning from the pseudo-code I had the objection that the execution agent can't be completely static, because it somehow has to make use of whatever clever interpretability outputs the R&D agent comes up with (e.g. SAEs don't use themselves to solve OOD detection or whatever). Then I wondered if you wanted to bound the complexity of somewhere. Then I looked back and saw the formula which seems to cleverly bypass this by having the R&D agent have to do both steps but factoring its representation of .

However this does seem different from the pseudo-code. If this is indeed different, which one do you intend?

Edit: no matter, I should just read more closely clearly takes as input so I think I'm not confused. I'll leave this comment here as a monument to premature question-asking.

Later edit: ok no I'm still confused. It seems doesn't get used in your inner loop unless it is in fact (which in the pseudo-code means just a part of what was called in the preceding text). That is, when we update we update for the next round. In which case things fit with your original formula but having essentially factored into two pieces ( on the outside, on the inside) you are only allowing the inside piece to vary over the course of this process. So I think my original question still stands.

So to check the intuition here: we factor the interpretability algorithm into two pieces. The first piece never sees tasks and has to output some representation of the model . The second piece never sees the model and has to, given the representation and some prediction task for the original model perform well across a sufficiently broad range of such tasks. It is penalised for computation time in this second piece. So overall the loss is supposed to motivate

- Discovering the capabilities of the model as operationalised by its performance on tasks, and also how that performance is affected by variations of those tasks (e.g. modifying the prompt for your Shapley values example, and for your elicitation example).

- Representing those capabilities in a way that amortises the computational cost of mapping a given task onto this space of capabilities in order to make the above predictions (the computation time penalty in the second part).
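The two-piece factorisation described above can be sketched in code. This is only an illustration under stated assumptions: the names `factored_loss`, `build_representation`, `predict` and `time_penalty` are hypothetical and do not come from the post, squared error stands in for whatever task metric is intended, and wall-clock time stands in for the computation penalty.

```python
import time
from typing import Any, Callable, Sequence, Tuple


def factored_loss(
    build_representation: Callable[[Any], Any],
    predict: Callable[[Any, Any], float],
    model: Any,
    tasks: Sequence[Tuple[Any, float]],
    time_penalty: float = 0.0,
) -> float:
    """Average squared prediction error plus a penalty on per-task compute time."""
    # First piece: sees the model, never the tasks.
    representation = build_representation(model)

    total = 0.0
    for task, observed in tasks:
        start = time.perf_counter()
        # Second piece: sees the representation and the task, never the model.
        predicted = predict(representation, task)
        elapsed = time.perf_counter() - start
        total += (predicted - observed) ** 2 + time_penalty * elapsed
    return total / len(tasks)


# Toy usage: the "model" is a linear map and the representation is its slope.
toy_model = {"slope": 2.0}
toy_tasks = [(1.0, 2.0), (3.0, 6.0)]  # (task input, observed model behaviour)
print(factored_loss(lambda m: m["slope"], lambda rep, x: rep * x, toy_model, toy_tasks))
```

Only the second piece is timed, which is what pushes the representation towards amortising the per-task cost.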

This is plausible for the same reason that the original model can have good general performance: there are general underlying skills or capabilities that can be assembled to perform well on a wide range of tasks, and if you can discover those capabilities and their structure you should be able to generalise to predict other task performance and how it varies.

Indirectly there is a kind of optimisation pressure on the complexity of just because you're asking this to be broadly useful (for a computationally penalised ) for prediction on many tasks, so by bounding the generalisation error you're likely to bound the complexity of that representation.

I'm on board with that, but I think it is possible that some might agree this is a path towards automated research of something but not that the something is interpretability. After all, your need not be interpretable in any straightforward way. So implicitly the space of 's you are searching over is constrained to something intrinsically reasonably interpretable?

Since later you say "human-led interpretability absorbing the scientific insights offered by I*" I guess not, and your point is that there are many safety-relevant applications of I*(M) even if it is not very human comprehensible.

Wu et al?

There's plenty, including a line of work by Carina Curto, Kathryn Hess and others that is taken seriously by a number of mathematically inclined neuroscience people (Tom Burns if he's reading can comment further). As far as I know this kind of work is the closest to breaking through into the mainstream. At some level you can think of homology as a natural way of preserving information in noisy systems, for reasons similar to why (co)homology of tori was a useful way for Kitaev to formulate his surface code. Whether or not real brains/NNs have some emergent computation that makes use of this is a separate question; I'm not aware of really compelling evidence.

There is more speculative but definitely interesting work by Matilde Marcolli. I believe Manin has thought about this (because he's thought about everything) and if you have twenty years to acquire the prerequisites (gamma spaces!) you can gaze into deep pools by reading that too.

I'm ashamed to say I don't remember. That was the highlight. I think I have some notes on the conversation somewhere and I'll try to remember to post here if I ever find it.

I can spell out the content of his Koan a little, if it wasn't clear. It's probably more like: look for things that are (not there). If you spend enough time in a particular landscape of ideas, you can (if you're quiet and pay attention and aren't busy jumping on bandwagons) get an idea of a hole, which you're able to walk around but can't directly see. In this way new ideas appear as something like residues from circumnavigating these holes. It's my understanding that Khovanov homology was discovered like that, and this is not unusual in mathematics.

By the way, that's partly why I think the prospect of AIs being creative mathematicians in the short term should not be discounted; if you see all the things, you see all the holes.

I visited Mikhail Khovanov once in New York to give a seminar talk, and after it was all over and I was wandering around seeing the sights, he gave me a call and offered a long string of general advice on how to be the kind of person who does truly novel things (he's famous for this, you can read about Khovanov homology). One thing he said was "look for things that aren't there" haha. It's actually very practical advice, which I think about often and attempt to live up to!

Ok makes sense to me, thanks for explaining. Based on my understanding of what you are doing, the statement in the OP that in your setting is "sort of" K-complexity is a bit misleading? It seems like you will end up with bounds on that involve the actual learning coefficient, which you then bound above by noting that un-used bits in the code give rise to degeneracy. So there is something like going on ultimately.

If I understand correctly you are probably doing something like:

- Identified a continuous space (parameters of your NN run in recurrent mode)

- Embedded a set of Turing machine codes into (by encoding the execution of a UTM into the weights of your transformer)

- Used parametrised by the transformer, where to provide what I would call a "smooth relaxation" of the execution of the UTM for some number of steps

- Use this as the model in the usual SLT setting, and then noted that because of the way you encoded the UTM and its step function, if you vary away from the configuration corresponding to a TM code in a bit of the description that corresponds to unused states or symbols, it can't affect the execution and so there is degeneracy in the KL divergence

- Hence, and if then repeating this over all TMs which perfectly fit the given data distribution, we get a bound on the global .
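The degeneracy step in the list above can be summarised by a standard inequality. This is a sketch under assumptions: $K$ denotes the KL divergence as a function of the parameter, $w^*$ is the parameter encoding a TM code that fits the data, $d$ is the total number of coordinates and $d'$ the number that can affect the execution. These symbols are introduced here for illustration and need not match the notation of the post. The inequality uses the general fact that, in the realisable case with a positive prior, a learning coefficient is at most half the number of parameters the divergence actually depends on.

```latex
% Assumption: near w^* the KL divergence depends only on the d' "used" coordinates.
K(w_1, \dots, w_d) = \tilde{K}(w_1, \dots, w_{d'}), \qquad d' \le d,
\qquad\Longrightarrow\qquad
\lambda(w^*) \;\le\; \frac{d'}{2}.
```

Taking the minimum over all codes that fit the data then gives a bound on the global learning coefficient in terms of the shortest such code.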

Proving Theorem 4.1 was the purpose of Clift-Murfet-Wallbridge, just with a different smooth relaxation. The particular smooth relaxation we prefer for theoretical purposes is one coming from encoding a UTM in linear logic, but the overall story works just as well if you are encoding the step function of a TM in a neural network and I think the same proof might apply in your case.

Anyway, I believe you are doing at least several things differently: you are treating the iid case, you are introducing and the bound on that (which is not something I have considered) and obviously the Transformer running in recurrent mode as a smooth relaxation of the UTM execution is different to the one we consider.

From your message it seems like you think the global learning coefficient might be lower than , but that locally at a code the local learning coefficient might be somehow still to do with description length? So that the LLC in your case is close to something from AIT. That would be surprising to me, and somewhat in contradiction with e.g. the idea from simple versus short that the LLC can be lower than "the number of bits used" when error-correction is involved (and this being a special case of a much broader set of ways the LLC could be lowered).

Where is here?

I don't believe Claim 6 is straightforward. Or to be more precise, the closest detailed thing I can see to what you are saying does not obviously lead to such a relation.

I don't see any problem with the discussion about Transformers or NNs or whatever as a universal class of models. However then I believe you are discussing the situation in Section 3.7.2 of Hutter's "Universal artificial intelligence", also covered in his paper "On the foundations of universal sequence prediction" (henceforth Hutter's book and Hutter's paper). The other paper I'm going to refer to below is Sterkenburg's "Solomonoff prediction and Occam's razor". I know a lot of what I write below will be familiar to you, but for the sake of saying clearly what I'm trying to say, and for other readers, I will provide some background.

Background

A reminder on Hutter's notation: we have a class of semi-measures over sequences which I'll assume satisfy so that we interpret as the probability that a sequence starts with . Then denotes the true generating distribution (we assume this is in the class, e.g. in the case where parametrises Turing machine codes, that the environment is computable). There are data sequences and the task is to predict the next token , we view as the probability according to that the next symbol is given .

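If $\mathcal{M}$ is countable and we have weights $w_\nu > 0$ for each $\nu \in \mathcal{M}$ satisfying $\sum_{\nu} w_\nu \le 1$ then we can form the Bayes mixture $\xi(x) = \sum_{\nu \in \mathcal{M}} w_\nu \nu(x)$. The gap between the predictive distribution associated to this mixture and the true distribution is measured by

$$D_n := \sum_{t=1}^{n} \mathbb{E}\big[ d_t(x_{<t}) \big]$$

where $d_t(x_{<t})$ is for example the KL divergence between $\mu(\cdot \mid x_{<t})$ and $\xi(\cdot \mid x_{<t})$. It can be shown that $D_n \le \ln w_\mu^{-1}$ and hence this also upper bounds $D_\infty$, which is the basis for the claim that the mixture converges rapidly (in $n$) to the true predictive distribution provided of course the weight $w_\mu$ is nonzero for $\mu$. This is (4) in Hutter's paper and Theorem 3.19 in his book.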
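The bound on the cumulative divergence can be checked numerically on a toy hypothesis class. The following is a minimal sketch, not taken from Hutter's paper: it assumes a class of three iid Bernoulli sources (the values in `thetas` and `weights` are arbitrary illustrative choices), and it uses the chain rule for KL divergence to compute the cumulative expected per-step divergence as the KL divergence between the true source and the mixture on length-$n$ sequences.

```python
import itertools
import math


def sequence_prob(theta, seq):
    """Probability of a binary sequence under an iid Bernoulli(theta) source."""
    prob = 1.0
    for symbol in seq:
        prob *= theta if symbol == 1 else 1.0 - theta
    return prob


def cumulative_kl(thetas, weights, true_index, n):
    """Exact KL divergence between the true source and the Bayes mixture on length-n sequences."""
    total = 0.0
    for seq in itertools.product((0, 1), repeat=n):
        mu = sequence_prob(thetas[true_index], seq)
        xi = sum(w * sequence_prob(t, seq) for t, w in zip(thetas, weights))
        total += mu * math.log(mu / xi)
    return total


thetas = [0.2, 0.5, 0.8]       # hypothetical hypothesis class
weights = [0.25, 0.5, 0.25]    # hypothetical prior weights, summing to 1
bound = math.log(1.0 / weights[0])  # ln(1 / w_mu) with the truth at index 0
for n in (1, 4, 8, 12):
    print(n, cumulative_kl(thetas, weights, 0, n), "<=", bound)
```

The bound holds for any prior that gives the true source nonzero weight, which is the sense in which this step is a general fact about Bayesian mixtures rather than something specific to Solomonoff's prior.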

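It is worth noting that

$$\xi(x_t \mid x_{<t}) = \frac{\xi(x_{1:t})}{\xi(x_{<t})} = \frac{\sum_{\nu} w_\nu \, \nu(x_{1:t})}{\xi(x_{<t})}$$

which can be written as

$$\xi(x_t \mid x_{<t}) = \sum_{\nu} w_\nu(x_{<t}) \, \nu(x_t \mid x_{<t})$$

where $w_\nu(x_{<t}) = w_\nu \, \nu(x_{<t}) / \xi(x_{<t})$ is the posterior distribution. In this sense $\xi(x_t \mid x_{<t})$ is the Bayesian posterior predictive distribution given $x_{<t}$.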

Note this doesn't really depend on the choice of weights (i.e. the prior), provided the environment is in the hypothesis class and is given nonzero weight. This is an additional choice, and one can motivate on various grounds the choice of weights $w_\nu = 2^{-K(\nu)}$ with $K(\nu)$ the Kolmogorov complexity of the hypothesis $\nu$. When one makes this choice, the bound becomes

$$D_n \le \ln w_\mu^{-1} = K(\mu) \ln 2.$$

As Hutter puts it on p.7 of his paper "the number of times deviates from by more than is bounded by , i.e. is proportional to the complexity of the environment". The above gives the formal basis for (part of) why Solomonoff induction is "good". When you write

The total error of our prediction in terms of KL-divergence measured in bits, across data points, should then be bounded below , where is the length of the shortest program that implements on the UTM

I believe you are referring to some variant of this result, at least that is what Solomonoff completeness means to me.

It is important to note that these two steps (bounding above for any weights, and choosing the weights ala Solomonoff to get a relation to K-complexity) are separable, and the first step is just a general fact about Bayesian statistics. This is covered well by Sterkenburg.

Continuous model classes

Ok, well and good. Now comes the tricky part: we replace by an uncountable set and try to replace sums by integrals. This is addressed in Section 3.7.2 of Hutter's book and p.5 of his paper. This treatment is only valid insofar as Laplace approximations are valid, and so is invalid when is a class of neural network weights and involves predicting sequences based on those networks in such a way that degeneracy is involved. This is the usual setting in which classical theory fails and SLT is required. Let us look at the details.

Under a nondegeneracy hypothesis (Fisher matrix invertible at the true parameter) one can prove (this is in Clarke and Barron's "Information theoretic asymptotics of Bayes methods" from 1990, also see Balasubramanian's "Statistical Inference, Occam's razor, and Statistical Mechanics on the Space of Probability Distributions" from 1997 and Watanabe's book "Mathematical theory of Bayesian statistics", aka the green book) that

where now and has nonempty interior, i.e. is actually dimensional. Here we see that in addition to the terms from before there are terms that depend on . The log determinant term is treated a bit inelegantly in Clarke and Barron and hence Hutter, one can do better, see Section 4.2 of Watanabe's green book, and in any case I am going to ignore this as a source of dependence.[1]
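For orientation, the asymptotic of Clarke and Barron that this passage builds on can be sketched as follows. This is the iid statement in notation assumed here for illustration: $w(\theta)$ is the prior density, $I(\theta_0)$ the Fisher information matrix at the true parameter, $d$ the parameter dimension and $M_n = \int P^n_\theta \, w(\theta)\, d\theta$ the Bayes mixture. The form used in Hutter's book and paper is stated as a bound and may differ in constants.

```latex
D\big(P^n_{\theta_0} \,\big\|\, M_n\big)
  = \frac{d}{2} \ln \frac{n}{2\pi e}
  + \frac{1}{2} \ln \det I(\theta_0)
  + \ln \frac{1}{w(\theta_0)}
  + o(1), \qquad n \to \infty .
```

The prior enters only through the constant order term $\ln \frac{1}{w(\theta_0)}$, while the leading term grows like $\frac{d}{2} \ln n$.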

Note that there is now no question of bounding $D_\infty$ since the right hand side (the bound on $D_n$) increases with $n$. Hutter argues this "still grows very slowly" (p.4 of his paper) but this seems somewhat in tension with the idea that in Solomonoff induction we sometimes like to think of all of human science as the context when we predict the next token ($n$ here being large). This presents a conceptual problem, because in the countable case we like to think of K-complexity as an important part of the story, but it "only" enters through the choice of weight and thus the $\ln w_\mu^{-1}$ term in the bound on $D_n$, whereas in the continuous case this constant order term in $n$ may be very small in comparison to the $\frac{d}{2} \ln n$ term.

In the regular case (meaning, where the nondegeneracy of the Fisher information at the truth holds) it's somewhat reasonable to say that at least the $\frac{d}{2} \ln n$ term doesn't know anything about the environment (i.e. the coefficient depends only on the parametrisation) and so the only environment dependence is still something that involves $K$, supposing we (with some normalisation) took the weight to have something to do with $2^{-K}$. However in the singular case as we know from SLT, the appropriate replacement[2] for this bound has a coefficient of $\ln n$ which also depends on the environment. I don't see a clear reason why we should care primarily about a subleading term (the K-complexity, a constant order term in the asymptotic expansion in $n$) over the leading term (we are assuming the truth is realisable, so there is no order $n$ term).

That is, as I understand the structure of the theory, the centrality of K-complexity to Solomonoff induction is an artifact of the use of a countable hypothesis class. There are various paragraphs in e.g. Hutter's book Section 3.7.2 and p.12 of his paper which attempt to dispel the "critique from continuity" but I don't really buy them (I didn't think too hard about them, and only discussed them briefly with Hutter once, so I could be missing something).

Of course it is true that there is an $n$ large enough that the behaviour of the posterior distribution over neural networks "knows" that it is dealing with a discrete set, and can distinguish between the closest real numbers that you can represent in floating point. For values of $n$ well below this, the posterior can behave as if it is defined over the mathematically idealised continuous space. I find this no more controversial than the idea that sound waves travelling in solids can be well-described by differential equations. I agree that if you want to talk about "training" very low precision neural networks maybe AIT applies more directly, because the Bayesian statistics that is relevant is that for a discrete hypothesis class (this is quite different to producing quantised models after the fact). This seems somewhat but not entirely tangential to what you want to say, so this could be a place where I'm missing your point. In any case, if you're taking this position, then SLT is connected only in a very trivial way, since there are no learning coefficients if one isn't talking about continuous model classes.

To summarise: K-complexity usually enters the theory via a choice of prior, and in continuous model classes priors show up in the constant order terms of asymptotic expansions in $n$.

From AIT to SLT

You write

On the meaning of the learning coefficient: Since the SLT[3] posterior would now be proven equivalent to the posterior of a bounded Solomonoff induction, we can read off how the (empirical) learning coefficient in SLT relates to the posterior in the induction, up to a conversion factor equal to the floating point precision of the network parameters.[8] This factor is there because SLT works with real numbers whereas AIT[4] works with bits. Also, note that for non-recursive neural networks like MLPs, this proof sketch would suggest that the learning coefficient is related to something more like circuit complexity than program complexity. So, the meaning of from an AIT perspective depends on the networks architecture. It's (sort of)[9] K-complexity related for something like an RNN or a transformer run in inference mode, and more circuit complexity related for something like an MLP.

I don't claim to know precisely what you mean by the first sentence, but I guess what you mean is that if you use the continuous class of predictors with parametrising neural network weights, running the network in some recurrent mode (I don't really care about the details) to make the predictions about sequence probabilities, then you can "think about this" both in an SLT way and an AIT way, and thus relate the posterior in both cases. But as far as I understand it, there is only one way: the Bayesian way.

You either think of the NN weights as a countable set (by e.g. truncating precision "as in real life") in which case you get something like $D_n \le \ln w_\mu^{-1}$ but this is sort of weak sauce: you get this for any prior you want to put over your discrete set of NN weights, no implied connection to K-complexity unless you put one in by hand by taking $w_\nu = 2^{-K(\nu)}$. This is legitimately talking about NNs in an AIT context, but only insofar as the existing literature already talks about general classes of computable semi-measures and you have described a way of predicting with NNs that satisfies these conditions. No relation to SLT that I can see.

Or you think of the NN weights as a continuous set in which case the sums in your Bayes mixture become integrals, the bound on becomes more involved and must require an integral (which of course has the conceptual content of "bound below by contributions from a neighbourhood of and that will bound above by something to do with " either by Laplace or more refined techniques ala Watanabe) and you are in the situation I describe above where the prior (which you can choose to be related to if you wish) ends up in the constant term and isn't the main determinant of what the posterior distribution, and thus the distance between the mixture and the truth, does.

That is, in the continuous case this is just the usual SLT story (because both SLT and AIT are just the standard Bayesian story, for different kinds of models with a special choice of prior in the latter case) where the learning coefficient dominates how the Bayesian posterior behaves with $n$.

So for there to be a relation between the K-complexity and learning coefficient, it has to occur in some other way and isn't "automatic" from formulating a set of NNs as like codes for a UTM. So this is my concern about Claim 6. Maybe you have a more sophisticated argument in mind.

Free parameters and learning coefficients

In a different setting I do believe there is such a relation: Theorem 4.1 of Clift-Murfet-Wallbridge (2021) as well as Tom Waring's thesis make the point that unused bits in a TM code are a form of degeneracy, when you embed TM codes into a continuous space of noisy codes. Then the local learning coefficient at a TM code is upper bounded by something to do with its length (and if you take a function and turn it into a synthesis problem, the global learning coefficient will therefore be upper bounded by the Kolmogorov complexity of the function). However the learning coefficient contains more information in this case.

Learning coefficient vs K

In the NN case the only relation between the learning coefficient of a network parameter and the Kolmogorov complexity that I know follows pretty much immediately from Theorem 7.1 (4) of Watanabe's book (see also p.5 of our paper https://arxiv.org/abs/2308.12108). You can think of the following as one starting point of the SLT perspective on MDL, but my PhD student Edmund Lau has a more developed story in his PhD thesis.

Let be a local minimum of a population loss (I'm just going to use the notation from our paper) and define for some the quantity to be the volume of the set , regularised to be in some ball and with some appropriately decaying measure if necessary, none of this matters much for what I'm going to say. Suppose we somehow produce a parameter , the bit cost of this is ignored in what follows, and that we want to refine this to a parameter within an error tolerance (say set by our floating point precision) that is, by taking a sequence of increasingly good approximations

such that at each stage, and , so . The aforementioned results say that (ignoring the multiplicity) the bit cost of each of these refinements is approximately the local learning coefficient . So the overall length of the description of given done in this manner is . This suggests

We can think of as just the measure of the number of orders of magnitude covered by our floating point representation for losses.
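The volume-scaling argument above can be illustrated on one-dimensional toy losses. This is a minimal sketch under assumptions: the losses $w^2$ and $w^4$, the grid-based volume estimate and the function names are illustrative choices, not taken from the paper. For these losses the volume of the sublevel set $\{w : L(w) < \epsilon\}$ scales like $\epsilon^{\lambda}$ with $\lambda = 1/2$ and $\lambda = 1/4$ respectively, so halving the tolerance costs about $\lambda$ bits.

```python
import math


def sublevel_volume(loss, eps, radius=1.0, grid_points=200_001):
    """Approximate the length of {w in [-radius, radius] : loss(w) < eps} on a uniform grid."""
    step = 2.0 * radius / (grid_points - 1)
    count = 0
    for i in range(grid_points):
        w = -radius + i * step
        if loss(w) < eps:
            count += 1
    return count * step


def bits_per_halving(loss, eps):
    """Bits needed to refine from tolerance eps to eps / 2: log2 of the volume ratio."""
    return math.log2(sublevel_volume(loss, eps) / sublevel_volume(loss, eps / 2.0))


print("regular  L(w) = w^2:", bits_per_halving(lambda w: w ** 2, 1e-2))
print("singular L(w) = w^4:", bits_per_halving(lambda w: w ** 4, 1e-4))
```

Summing the per-step cost over the halvings needed to get from an initial tolerance down to the floating point tolerance gives a total description length of roughly $\lambda$ times the number of halvings.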

- ^

There is arguably another gap in the literature here. Besides this regularity assumption, there is also the fact that the main reference Hutter is relying on (Clarke and Barron) works in the iid setting whereas Hutter works in the non-iid setting. He sketches in his book how this doesn't matter and after thinking about it briefly I'm inclined to agree in the regular case, I didn't think about it generally. Anyway I'll ignore this here since I think it's not what you care about.

- ^

Note that SLT contains asymptotic expansions for the free energy, whereas $D_n$ looks more like the KL divergence between the truth and the Bayesian posterior predictive distribution, so what I'm referring to here is a treatment of the Clarke-Barron setting using Watanabe's methods. Bin Yu asked Susan Wei (a former colleague of mine at the University of Melbourne, now at Monash University) and me if such a treatment could be given and we're working on it (not very actively, tbh).

Indeed, very interesting!

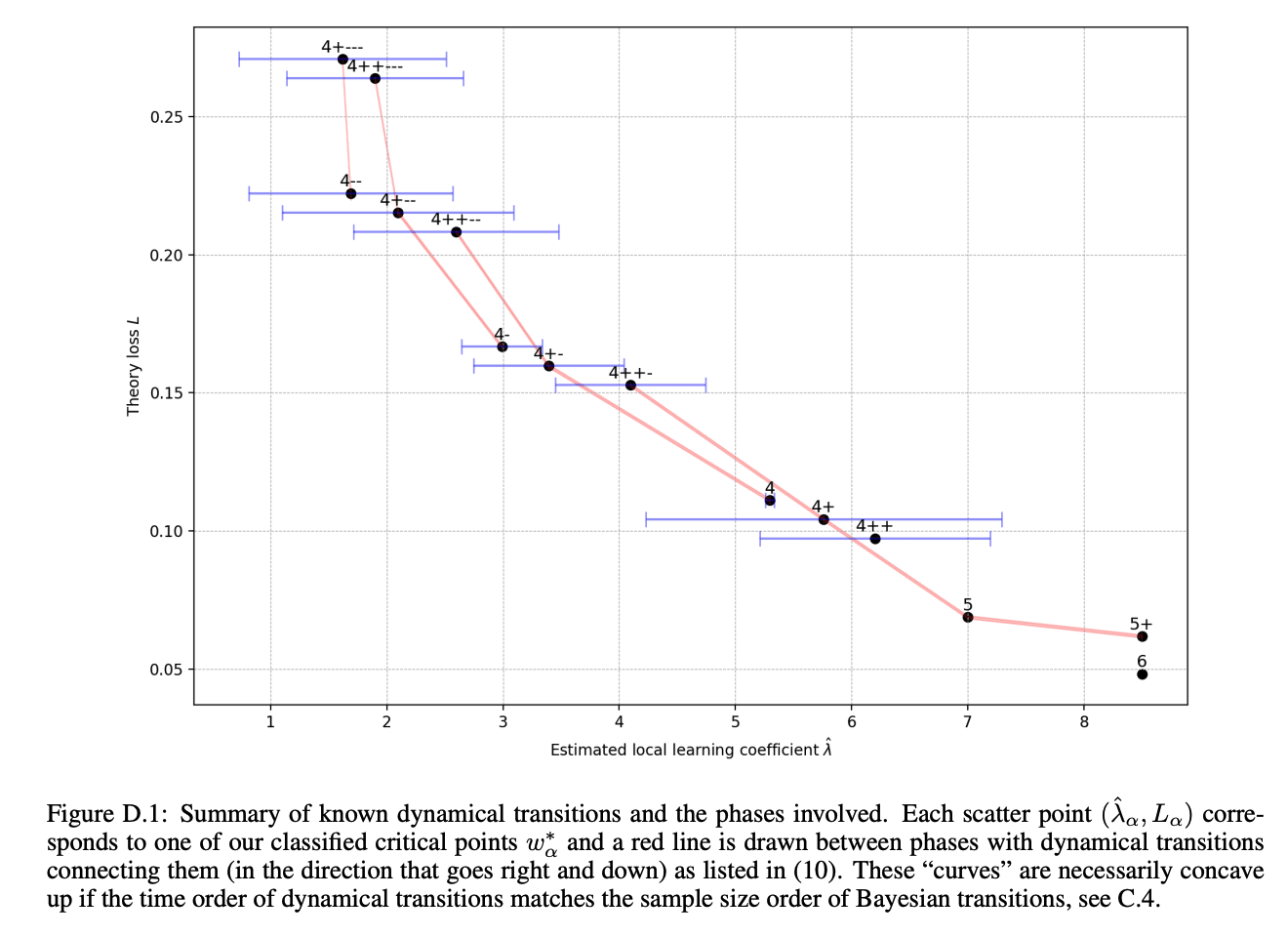

The correlation between training loss and LLC is especially unexpected to us

It's not unusual to see an inverse relationship between loss and LLC over training a single model (since lower loss solutions tend to be more complex). This can be seen in the toy model of superposition setting (plot attached) but it is also pronounced in large language models. I'm not familiar with any examples that look like your plot, where points at the end of training runs show a linear relationship.

We then record the training loss, weight norm, and estimated LLC of all models at the end of training

For what it's worth, Edmund Lau has some experiments where he's able to get models to grok and find solutions with very large weight norm (but small LLC). I am not tracking the grokking literature closely enough to know how surprising/interesting this is.

For models that do not grok either group, we observe both examples where the LLC stays large throughout training and examples where it falls

What explains the difference in scale of the LLC estimates here and in the earlier plot, where they are < 100? Perhaps different hyperparameters?

I just mean that it's relatively easy to prove theorems. More precisely, if you decide the probability of a parameter is just determined by the data and model via Bayes' rule, this is a relatively simple setup compared to e.g. deciding the probability of a parameter is an integral over all possible paths taken by something like SGD from initialisation. From this simplicity we can derive things like Watanabe's free energy formula, which currently has no analogue for the latter model of the probability of a parameter.

That theorem is far from trivial, but still there seems to be a lot more "surface area" to grip the problem when you think about it first from a Bayesian perspective and then ask what the gap is from there to SGD (even if that's what you ultimately care about).
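For reference, the free energy formula mentioned above is usually stated in the following form. The notation is assumed here for illustration: $L_n$ is the empirical negative log likelihood, $\varphi$ the prior, $w_0$ an optimal parameter, $\lambda$ the learning coefficient and $m$ its multiplicity.

```latex
F_n \;=\; -\ln \int \exp\big(-n L_n(w)\big)\, \varphi(w)\, dw
    \;=\; n L_n(w_0) + \lambda \ln n - (m - 1) \ln \ln n + O_p(1).
```

No comparable expansion is currently available when the probability of a parameter is defined through the paths taken by an optimiser such as SGD.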

Occam's razor cuts the thread of life

Thanks Lucius. This agrees with my take on that paper and I'm glad to have this detailed comment to refer people to in the future.

Seems worth it

Hehe. Yes that's right, in the limit you can just analyse the singular values and vectors by hand, it's nice.

No general implied connection to phase transitions, but the conjecture is that if there are phase transitions in your development then you can for general reasons expect PCA to "attempt" to use the implicit "coordinates" provided by the Lissajous curves (i.e. a binary tree, the first Lissajous curve uses PC2 to split the PC1 range into half, and so on) to locate stages within the overall development. I got some way towards proving that by extending the literature I cited in the parent, but had to move on, so take the story with a grain of salt. This seems to make sense empirically in some cases (e.g. our paper).

To provide some citations :) There are a few nice papers looking at why Lissajous curves appear when you do PCA in high dimensions:

- J. Antognini and J. Sohl-Dickstein. "PCA of high dimensional random walks with comparison to neural network training". In Advances in Neural Information Processing Systems, volume 31, 2018

- M. Shinn. "Phantom oscillations in principal component analysis". Proceedings of the National Academy of Sciences, 120(48):e2311420120, 2023.

It is indeed the case that the published literature has quite a few people making fools of themselves by not understanding this. On the flipside, just because you see something Lissajous-like in the PCA, doesn't necessarily mean that the extrema are not meaningful. One can show that if a process has stagewise development, there is a sense in which performing PCA will tend to adapt PC1 to be a "developmental clock" such that the extrema of PC2 as a function of PC1 tends to line up with the midpoint of development (even if this is quite different from the middle "wall time"). We've noticed this in a few different systems.

So one has to be careful in both directions with Lissajous curves in PCA (not to read tea leaves, and also not to throw out babies with bathwater, etc).
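The random-walk effect described in the cited papers can be reproduced in a few lines. This is a minimal sketch assuming NumPy is available; the function names, the walk length, the dimension and the seed are arbitrary illustrative choices. It runs PCA on a high-dimensional Gaussian random walk and compares the first two principal component scores with cosines of increasing frequency, which is why plotting one component against another traces a Lissajous-like curve even though the walk has no structure.

```python
import numpy as np


def random_walk_pca(n_steps=200, dim=1000, n_components=2, seed=0):
    """PCA scores over time for a Gaussian random walk in `dim` dimensions."""
    rng = np.random.default_rng(seed)
    walk = np.cumsum(rng.standard_normal((n_steps, dim)), axis=0)
    centered = walk - walk.mean(axis=0)
    # The SVD of the centered data gives the principal components; u * s are the scores.
    u, s, _ = np.linalg.svd(centered, full_matrices=False)
    return u[:, :n_components] * s[:n_components]


def cosine_reference(n_steps, k):
    """Cosine of frequency k over the walk, used as the comparison curve for the k-th PC."""
    t = np.arange(n_steps) + 0.5
    return np.cos(np.pi * k * t / n_steps)


scores = random_walk_pca()
for k in (1, 2):
    corr = np.corrcoef(scores[:, k - 1], cosine_reference(200, k))[0, 1]
    print(f"PC{k} vs cosine of frequency {k}: |correlation| = {abs(corr):.3f}")
```

A high correlation here only says that smooth drift produces these shapes by default; whether the extrema in a real training run are meaningful needs a separate argument.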

Thanks Jesse, Ben. I agree with the vision you've laid out here.

I've spoken with a few mathematicians about my experience using Claude Sonnet and o1, o1-Pro for doing research, and there's an anecdote I have shared a few times which gets across one of the modes of interaction that I find most useful. Since these experiences inform my view on the proper institutional form of research automation, I thought I might share the anecdote here.

Sometime in November 2024 I had a striking experience with Claude Sonnet 3.5. At the end of a workday I regularly paste in the LaTeX for the paper I’m working on and ask for its opinion, related work I was missing, and techniques it thinks I might find useful. I finish by asking it to speculate on how the research could be extended. Usually this produces enthusiastic and superficially interesting ideas, which are however useless.

On this particular occasion, however, the model proceeded to elaborate a fascinating and far-reaching vision of the future of theoretical computer science. In fact I recognised the vision, because it was the vision that led me to write the document. However, none of that was explicitly in the LaTeX file. What the model could see was some of the initial technical foundations for that vision, but the fancy ideas were only latent. In fact, I have several graduate students working with me on the project and I think none of them saw what the model saw (or at least not as clearly).

I was impressed, but not astounded, since I had already thought the thoughts. But one day soon, I will ask a model to speculate and it will come up with something that is both fantastic and new to me.

Note that Claude Sonnet 3.5/3.6 would, in my judgement, be incapable of delivering on that vision. o1-Pro is going to get a bit further. However, Sonnet in particular has a broad vision and "good taste" and has a remarkable knack of "surfing the vibes" around a set of ideas. A significant chunk of cutting edge research comes from just being familiar at a "bones deep" level with a large set of ideas and tools, and knowing what to use and where in the Right Way. Then there is technical mastery to actually execute when you've found the way; put the vibe surfing and technical mastery together and you have a researcher.

In my opinion the current systems have the vibe surfing, now we're just waiting for the execution to catch up.

Something in that direction, yeah

I found this clear and useful, thanks. Particularly the notes about compositional structure. For what it's worth I'll repeat here a comment from ILIAD, which is that there seems to be something in the direction of SAEs, approximate sufficient statistics/information bottleneck, the work of Achille-Soatto and SLT (Section 5 iirc) which I had looked into after talking with Olah and Wattenberg about feature geometry but which isn't currently a high priority for us. Somebody might want to pick that up.

I like the emphasis in this post on the role of patterns in the world in shaping behaviour, the fact that some of those patterns incentivise misaligned behaviour such as deception, and further that our best efforts at alignment and control are themselves patterns that could have this effect. I also like the idea that our control systems (even if obscured from the agent) can present as "errors" with respect to which the agent is therefore motivated to learn to "error correct".

This post and the sharp left turn are among the most important high-level takes on the alignment problem for shaping my own views on where the deep roots of the problem are.

Although to be honest I had forgotten about this post, and therefore underestimated its influence on me, until performing this review (which caused me to update a recent article I wrote, the Queen's Dilemma, which is clearly a kind of retelling of one aspect of this story, with an appropriate reference). I assess it to be a substantial influence on me even so.

I think this whole line of thought could be substantially developed, and with less reliance on stories, and that this would be useful.

This is an important topic, about which I find it hard to reason and on which I find the reasoning of others to be lower quality than I would like, given its significance. For that reason I find this post valuable. It would be great if there were longer, deeper takes on this issue available on LW.

This post and its precursor from 2018 present a strong and well-written argument for the centrality of mathematical theory to AI alignment. I think the learning-theoretic agenda, as well as Hutter's work on ASI safety in the setting of AIXI, currently seems underrated and will rise in status. It is fashionable to talk about automating AI alignment research, but who is thinking hard about what those armies of researchers are supposed to do? Conceivably one of the main things they should do is solve the problems that Vanessa has articulated here.

The ideas of a frugal compositional language and infra-Bayesian logic seem very interesting. As Vanessa points out in Direction 2, it seems likely there are possibilities for interesting cross-fertilisation between LTA and SLT, especially in connection with Solomonoff-like ideas and inductive biases.

I have referred colleagues in mathematics interested in alignment to this post and have revisited it a few times myself.

I have been thinking about interpretability for neural networks seriously since mid-2023. The biggest early influences on me that I recall were Olah's writings and a podcast that Nanda did. The third most important is perhaps this post, which I valued as an opposing opinion to help sharpen up my views.

I'm not sure it has aged well, in the sense that it's no longer clear to me I would direct someone to read this in 2025. I disagree with many of the object level claims. However, especially when some of the core mechanistic interpretability work is not being subjected to peer review, perhaps I wish there was more sceptical writing like this on balance.

It's a question of resolution. Just looking at things for vibes is a pretty good way of filtering wheat from chaff, but you don't give scarce resources like jobs or grants to every grain of wheat that comes along. When I sit on a hiring committee, the discussions around the table are usually some mix of status markers and people having done the hard work of reading papers more or less carefully (this consuming time in greater-than-linear proportion to distance from your own fields of expertise). Usually (unless nepotism is involved) someone who has done that homework can wield more power than they otherwise would at that table, because people respect strong arguments and understand that status markers aren't everything.

Still, at the end of the day, an Annals paper is an Annals paper. It's also true that to pass some of the early filters you need either (a) someone who speaks up strongly for you or (b) to pass the status marker tests.

I am sometimes in a position these days of trying to bridge the academic status system and the Berkeley-centric AI safety status system, e.g. by arguing to a high status mathematician that someone with illegible (to them) status is actually approximately equivalent in "worthiness of being paid attention to" to someone they know with legible status. Small increases in legibility can have outsize effects on how easy my life is in those conversations.

Otherwise it's entirely down to me putting social capital on the table ("you think I'm serious, I think this person is very serious"). I'm happy to do this and continue doing this, but it's not easily scalable, because it depends on my personal relationships.

To be clear, I am not arguing that evolution is an example of what I'm talking about. The analogy to thermodynamics in what I wrote is straightforwardly correct, no need to introduce KT-complexity and muddy the waters; what I am calling work is literally work.

There is a passage from Jung's "Modern man in search of a soul" that I think about fairly often, on this point (p.229 in my edition)

I know that the idea of proficiency is especially repugnant to the pseudo-moderns, for it reminds them unpleasantly of their deceits. This, however, cannot prevent us from taking it as our criterion of the modern man. We are even forced to do so, for unless he is proficient, the man who claims to be modern is nothing but an unscrupulous gambler. He must be proficient in the highest degree, for unless he can atone by creative ability for his break with tradition, he is merely disloyal to the past

Is there a reason why the Pearson correlation coefficient of the data in Figure 14 is not reported? This correlation is referred to numerous times throughout the paper.

There's no general theoretical reason that I am aware of to expect a relation between the L2 norm and the LLC. The LLC is the coefficient of the log n term in the asymptotic expansion of the free energy (negative logarithm of the integral of the posterior over a local region, as a function of sample size n), while the L2 norm of the parameter shows up in the constant order term of that same expansion, if you're taking a Gaussian prior.
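To make the two terms concrete, here is a sketch of that expansion in the regular case, where the Laplace approximation applies. The regular-case restriction is an assumption made only to write the constant-order term explicitly, and the symbols (w^* for the local minimum, L_n for the empirical loss, H for its Hessian at w^*, d for the parameter dimension, \sigma for the prior scale) are notation introduced here for illustration.

```latex
% Gaussian prior (assumed): \varphi(w) \propto \exp\!\left(-\|w\|^2 / 2\sigma^2\right)
\begin{align*}
F_n &= -\log \int \exp\!\big(-n L_n(w)\big)\, \varphi(w)\, dw \\
    &\approx n L_n(w^*) + \lambda \log n
       \;-\; \log \varphi(w^*) + \tfrac{1}{2} \log\det H(w^*) - \tfrac{d}{2}\log 2\pi ,
       \qquad \lambda = \tfrac{d}{2} \text{ in the regular case}, \\
-\log \varphi(w^*) &= \frac{\|w^*\|^2}{2\sigma^2} + \text{const}.
\end{align*}
```

The weight norm enters only through -\log \varphi(w^*), which does not grow with n, whereas \lambda multiplies \log n. In the singular case \lambda is generally smaller than d/2 and the lower-order terms take a different form, but the prior still contributes only at lower order.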

It might be that in particular classes of neural networks there is some architecture-specific correlation between the L2 norm and the LLC, but I am not aware of any experimental or theoretical evidence for that.

For example, in the figure below from Hoogland et al 2024 we see that there are later stages of training in a transformer trained to do in-context linear regression (blue shaded regions) where the LLC is decreasing but the L2 norm is increasing. So the model is moving towards a "simpler" parameter with larger weight norm.

My best current guess is that, in the grokking example, the simpler solution happens to have smaller weight norm. This could be true in many synthetic settings, for all I know; however, in general, it is not the case that complexity (at least as far as SLT is concerned) and weight norm are correlated.

That simulation sounds cool. The talk certainly doesn't contain any details and I don't have a mathematical model to share at this point. One way to make this more concrete is to think through Maxwell's demon as an LLM, for example in the context of Feynman's lectures on computation. The literature on thermodynamics of computation (various experts, like Adam Shai and Paul Riechers, are around here and know more than me) implicitly or explicitly touches on relevant issues.

The analogous laws are just information theory.

Re: a model trained on random labels. This seems somewhat analogous to building a power plant out of dark matter; to derive physical work it isn't enough to have some degrees of freedom somewhere that have a lot of energy, one also needs a chain of couplings between those degrees of freedom and the degrees of freedom you want to act on. Similarly, if I want to use a model to reduce my uncertainty about something, I need to construct a chain of random variables with nonzero mutual information linking the question in my head to the predictive distribution of the model.

To take a concrete example: suppose I am thinking about a chemistry question with four choices A, B, C, D. Without any information other than these letters, the model cannot reduce my uncertainty (say I begin with equal belief in all four options). However, if I provide a prompt describing the question, and the model has been trained on chemistry, then this information sets up a correspondence between this distribution over four letters and something the model knows about; its answer may then reduce my distribution to being equally uncertain between A and B but knowing C and D are wrong (a change of 1 bit in my entropy).
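The arithmetic in this example can be checked directly. The following is a minimal sketch, with the dictionary names and the helper `entropy_bits` chosen here purely for illustration: it computes the Shannon entropy of the uniform belief over four options and of the belief after C and D are ruled out.

```python
import math


def entropy_bits(dist):
    """Shannon entropy in bits of a distribution given as {outcome: probability}."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


# Belief before consulting the model: uniform over the four options.
prior = {"A": 0.25, "B": 0.25, "C": 0.25, "D": 0.25}

# Belief after the model's answer rules out C and D (hypothetical outcome).
posterior = {"A": 0.5, "B": 0.5, "C": 0.0, "D": 0.0}

entropy_before = entropy_bits(prior)
entropy_after = entropy_bits(posterior)
reduction = entropy_before - entropy_after

print(f"before: {entropy_before} bits, after: {entropy_after} bits, "
      f"reduction: {reduction} bits")
```

The uniform belief carries 2 bits and the two-option belief carries 1 bit, so the reduction is the 1 bit mentioned above.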

Since language models are good general compressors this seems to work in reasonable generality.

Ideally we would like the model to push our distribution towards true answers, but it doesn't necessarily know true answers, only some approximation; thus the work being done is nontrivially directed, and has a systematic overall effect due to the nature of the model's biases.

I don't know about evolution. I think it's right that the perspective has limits and can just become some empty slogans outside of some careful usage. I don't know how useful it is in actually technically reasoning about AI safety at scale, but it's a fun idea to play around with.

Marcus Hutter on AIXI and ASI safety

Yes this seems like an important question but I admit I don't have anything coherent to say yet. A basic intuition from thermodynamics is that if you can measure the change in the internal energy between two states, and the heat transfer, you can infer how much work was done even if you're not sure how it was done. So maybe the problem is better thought of as learning to measure enough other quantities that one can infer how much cognitive work is being done.
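The thermodynamic intuition here is just the first law. Written under the sign convention (an assumption for this sketch) that Q is heat added to the system and W is work done by the system:

```latex
\Delta U = Q - W
\quad\Longrightarrow\quad
W = Q - \Delta U
```

Measuring \Delta U and Q therefore determines W without any knowledge of the mechanism that performed the work. Whether a cognitive analogue of each quantity can be measured is exactly the open question.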

For all I know there is a developed thermodynamic theory of learning agents out there which already does this, but I haven't found it yet...

The description of love at the conclusion of Gene Wolfe's The Wizard gets at something important, if you read it as something that both parties are simultaneously doing.

The work of Ashby I'm familiar with is "An Introduction to Cybernetics" and I'm referring to the discussion in Chapter 11 there. The references you're giving seem to be invoking the "Law" of requisite variety in the context of arguing that an AGI has to be relatively complex in order to maintain homeostasis in a complex environment, but this isn't the application of the law I have in mind.

From the book:

The law of Requisite Variety says that R's capacity as a regulator cannot exceed R's capacity as a channel of communication.

In the form just given, the law of Requisite Variety can be shown in exact relation to Shannon's Theorem 10, which says that if noise appears in a message, the amount of noise that can be removed by a correction channel is limited to the amount of information that can be carried by that channel.

Thus, his "noise" corresponds to our "disturbance", his "correction channel" to our "regulator R", and his "message of entropy H" becomes, in our case, a message of entropy zero, for it is constancy that is to be "transmitted": Thus the use of a regulator to achieve homeostasis and the use of a correction channel to suppress noise are homologous.

and

A species continues to exist primarily because its members can block the flow of variety (thought of as disturbance) to the gene-pattern, and this blockage is the species’ most fundamental need. Natural selection has shown the advantage to be gained by taking a large amount of variety (as information) partly into the system (so that it does not reach the gene-pattern) and then using this information so that the flow via R blocks the flow through the environment T.

This last quote makes clear I think what I have in mind: the environment is full of advanced AIs, they provide disturbances D, and in order to regulate the effects of those disturbances on our "cognitive genetic material" there is some requirement on the "correction channel". Maybe this seems a bit alien to the concept of control. There's a broader set of ideas I'm toying with, which could be summarised as something like "reciprocal control" where you have these channels of communication / regulation going in both directions (from human to machine, and vice versa).
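For readers who want the law of Requisite Variety in concrete form, here is a toy sketch of its counting version: the variety of outcomes cannot be pushed below the variety of disturbances divided by the variety of the regulator's responses. The game itself (outcome = d - r, with integer disturbances and responses) and the function names are assumptions invented for illustration, not Ashby's own example. The code brute-forces every regulator policy and reports the smallest number of distinct outcomes any policy achieves.

```python
import math
from itertools import product


def outcome(d, r):
    """Toy outcome table: for a fixed response r, distinct disturbances
    give distinct outcomes (the condition the counting bound relies on)."""
    return d - r


def best_regulated_variety(n_disturbances, n_responses):
    """Smallest number of distinct outcomes over all regulator policies.

    A policy assigns one response r in range(n_responses) to each
    disturbance d in range(n_disturbances).
    """
    best = n_disturbances
    for policy in product(range(n_responses), repeat=n_disturbances):
        outcomes = {outcome(d, r) for d, r in enumerate(policy)}
        best = min(best, len(outcomes))
    return best


for n_d, n_r in [(6, 2), (7, 3), (5, 1)]:
    achieved = best_regulated_variety(n_d, n_r)
    bound = math.ceil(n_d / n_r)
    print(f"disturbances={n_d}, responses={n_r}: "
          f"best outcome variety={achieved}, bound={bound}")
```

In this toy game the best policy meets the bound exactly, and a regulator with only one available response cannot reduce the variety of outcomes at all. In the setting described above, the disturbances D would come from advanced AIs in the environment and the response repertoire would be whatever "correction channel" we have.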

The Queen's Dilemma was a little piece of that picture, which attempts to illustrate this bi-directional control flow by having the human control the machine (by setting its policy, say) and the machine control the human (in an emergent fashion, that being the dilemma).

Is restricting human agency fine if humans have little control over where it is restricted and to what degree?

Re: your first point. I think I'm still a bit confused here and that's partly why I wanted to write this down and have people poke at it. Following Sen (but maybe I'm misunderstanding him) I'm not completely convinced I know how to factor human agency into "winning". One part of me wants to say that whatever notion of agency I have, in some sense it's a property of world states and in principle I could extract it with enough monitoring of my brain or whatever, and then any prescribed tradeoff between "measured sense of agency" and "score" is something I could give to the machine as a goal.

So then I end up with the machine giving me the precise amount of leeway that lets me screw up the game just right for my preferences.

I don't see a fundamental problem with that, but it's also not the part of the metaphor that seems most interesting to me. What I'm more interested in is human inferiority as a pattern, and the way that pattern pervades the overall system and translates into computational structure, perhaps in surprising and indirect ways.

I'll reply in a few branches. Re: stochastic chess. I think there's a difference between a metaphor and a toy model; this is a metaphor, and the ingredients are chosen to illustrate in a microcosm some features I think are relevant about the full picture. The speed differential, and some degree of stochasticity, are aspects of human intervention in AI systems that seem meaningful to me.

I do agree that if one wanted to isolate the core phenomenon here mathematically and study it, chess might not be the right toy model.

The metaphor is a simplification; in practice I think it is probably impossible to know whether you have achieved complete alignment. The question is then: how significant is the gap? If there is an emergent pressure across the vast majority of learning machines that dominate your environment to push you from de facto to de jure control, not due to malign intent but just as a kind of thermodynamic fact, then the alignment gap (no matter how small) seems to loom larger.

Re: the SLT dogma.

For those interested, a continuous version of the padding argument is used in Theorem 4.1 of Clift-Murfet-Wallbridge to show that the learning coefficient is a lower bound on the Kolmogorov complexity (in a sense) in the setting of noisy Turing machines. Just take the synthesis problem to be given by a TM's input-output map in that theorem. The result is treated in a more detailed way in Waring's thesis (Proposition 4.19). Noisy TMs are of course not neural networks, but they are a place where the link between the learning coefficient in SLT and algorithmic information theory has already been made precise.

For what it's worth, as explained in simple versus short, I don't actually think the local learning coefficient is algorithmic complexity (in the sense of program length) in neural networks, only that it is a lower bound. So I don't really see the LLC as a useful "approximation" of the algorithmic complexity.

For those wanting to read more about the padding argument in the classical setting, Hutter-Catt-Quarel "An Introduction to Universal Artificial Intelligence" has a nice detailed treatment.

Typo, I think you meant singularity theory :p

Modern mathematics is less about solving problems within established frameworks and more about designing entirely new games with their own rules. While school mathematics teaches us to be skilled players of pre-existing mathematical games, research mathematics requires us to be game designers, crafting rule systems that lead to interesting and profound consequences

I don't think so. This probably describes the kind of mathematics you aspire to do, but still the bulk of modern research in mathematics is in fact about solving problems within established frameworks and usually such research doesn't require us to "be game designers". Some of us are of course drawn to the kinds of frontiers where such work is necessary, and that's great, but I think this description undervalues the within-paradigm work that is the bulk of what is going on.

It might be worth knowing that some countries are participating in the "network" without having formal AI safety institutes

I hadn't seen that Wattenberg-Viegas paper before, nice.

Yeah actually Alexander and I talked about that briefly this morning. I agree that the crux is "does this basic kind of thing work" and given that the answer appears to be "yes" we can confidently expect scale (in both pre-training and inference compute) to deliver significant gains.

I'd love to understand better how the RL training for CoT changes the representations learned during pre-training.

My observation from the inside is that size and bureaucracy in universities have something to do with what you're talking about, but more to do with a kind of "organisational overfitting", where small variations of the organisation's experience that included negative outcomes are responded to by internal process that necessitates headcount (aligning the incentives for response with what you're talking about).

I think self-repair might have lower free energy, in the sense that if you had two configurations of the weights, which "compute the same thing" but one of them has self-repair for a given behaviour and one doesn't, then the one with self-repair will have lower free energy (which is just a way of saying that if you integrate the Bayesian posterior in a neighbourhood of both, the one with self-repair gives you a higher number, i.e. it's preferred).

That intuition is based on some understanding of what controls the asymptotic (in the dataset size) behaviour of the free energy (which is -log(integral of posterior over region)) and the example in that post. But to be clear it's just intuition. It should be possible to empirically check this somehow but it hasn't been done.

Basically the argument is self-repair => robustness of behaviour to small variations in the weights => low local learning coefficient => low free energy => preferred
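The step "low local learning coefficient => low free energy" in that chain can be illustrated numerically. This is a toy sketch under loud assumptions: one-dimensional losses w^2 and w^4 stand in for a sharp and a flat (more robust to weight perturbation) minimum, the prior is flat on [-1, 1], and nothing here involves a neural network or self-repair itself. All function names are made up for the example.

```python
import numpy as np


def local_free_energy(loss, n, radius=1.0, num_points=400_001):
    """-log of the integral of exp(-n * loss(w)) over [-radius, radius],
    computed with a simple Riemann sum (flat prior assumed)."""
    w = np.linspace(-radius, radius, num_points)
    dw = w[1] - w[0]
    return -np.log(np.sum(np.exp(-n * loss(w))) * dw)


def quadratic(w):
    """Sharp minimum: the loss rises quickly under small perturbations."""
    return w ** 2


def quartic(w):
    """Flat minimum: the loss is more robust to small perturbations."""
    return w ** 4


def fitted_coefficient(loss, n_small=1_000, n_large=100_000):
    """Slope of the free energy against log n, an estimate of the
    coefficient of the log n term."""
    rise = local_free_energy(loss, n_large) - local_free_energy(loss, n_small)
    return rise / (np.log(n_large) - np.log(n_small))


lam_quadratic = fitted_coefficient(quadratic)
lam_quartic = fitted_coefficient(quartic)
f_quadratic = local_free_energy(quadratic, 100_000)
f_quartic = local_free_energy(quartic, 100_000)

print(f"quadratic: coefficient ~ {lam_quadratic:.3f}, free energy {f_quadratic:.3f}")
print(f"quartic:   coefficient ~ {lam_quartic:.3f}, free energy {f_quartic:.3f}")
```

Both losses reach the same minimum value, so the difference in free energy comes entirely from the local geometry: the flatter minimum has the smaller log n coefficient (about 1/4 versus 1/2) and hence the lower free energy at large n.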

I think by "specifically" you might be asking for a mechanism which causes the self-repair to develop? I have no idea.