Cognitive Work and AI Safety: A Thermodynamic Perspective

post by Daniel Murfet (dmurfet) · 2024-12-08T21:42:17.023Z · LW · GW · 9 comments

Contents

Introduction

Pushing the World to Extremes

Limits and Safety

Cognitive Work vs Physical Work

Cognitive Work and Stable Patterns

Phase Transitions

Conclusion

Related Work

9 comments

Introduces the idea of cognitive work as a parallel to physical work, and explains why concentrated sources of cognitive work may pose a risk to human safety.

Acknowledgements. Thanks to Echo Zhou and John Wentworth for feedback and suggestions.

Some of these ideas were originally presented in a talk in November 2024 at the Australian AI Safety Forum; the slides are here: Technical AI Safety (Aus Safety Forum 24), and the video is available on YouTube.

This post is the "serious" half of a pair; for the fun version, see Causal Undertow [LW · GW].

Introduction

This essay explores the idea of cognitive work, by which we mean directed changes in the information content of the world that are unlikely to occur by chance. Just as power plants together with machines are sources of physical work, so too datacenters together with AI models are sources of cognitive work: every time a model helps us to make a decision, or answers a question, it is doing work.

The purpose of exploring this idea is to offer an alternative ontology with which to communicate about AI safety, on the theory that more variety may be useful. The author used the ideas in this way at the recent Australian AI Safety Forum with some success.

Pushing the World to Extremes

Consider a sequence of events that recurs throughout technological history:

- Humans discover a powerful natural phenomenon

- Using this phenomenon requires creating extreme conditions

- These extreme conditions can harm humans

- Therefore, human safety requires control mechanisms proportional to the extremity

Nuclear reactors provide a natural example. The phenomenon is atomic fission, the extreme conditions are the high temperatures and pressures in reactors, and the safety challenge is clear: human biology cannot withstand such conditions. Hence the need for reaction vessels and safety engineering to keep the reaction conditions inside the vessel walls.

Modern AI presents another example: we've discovered scaling laws that let us create more advanced intelligence through increased computation and data. We're creating extreme information-theoretic conditions within datacenters, in the form of dense computational processing for training and inference.

However, it may not be obvious a priori how these "extreme conditions" lead to human harm. The weights of a large language model sitting on a hard drive don't seem dangerous.

Limits and Safety

Human bodies have evolved to be robust within a range of physical conditions, including ranges of temperature and pressure; outside of that range we quickly suffer injury. Evolution has also shaped us in more subtle ways. For example, since our ancestral environment only contained trace amounts of heavy metals, our cells are not robust to these materials and so lead and nickel have negative effects on human health.

Similarly we have evolved to be robust within a range of cognitive conditions, including ranges of complexity and speed of the aspects of the environment we need to predict in order to maintain a reasonable level of control. Just as some proteins in our cells malfunction when presented with the option to bind to heavy metals, so too some of our cognitive strategies for predicting and controlling our environment may break in the presence of a profusion of thinking machines.

Cognitive Work vs Physical Work

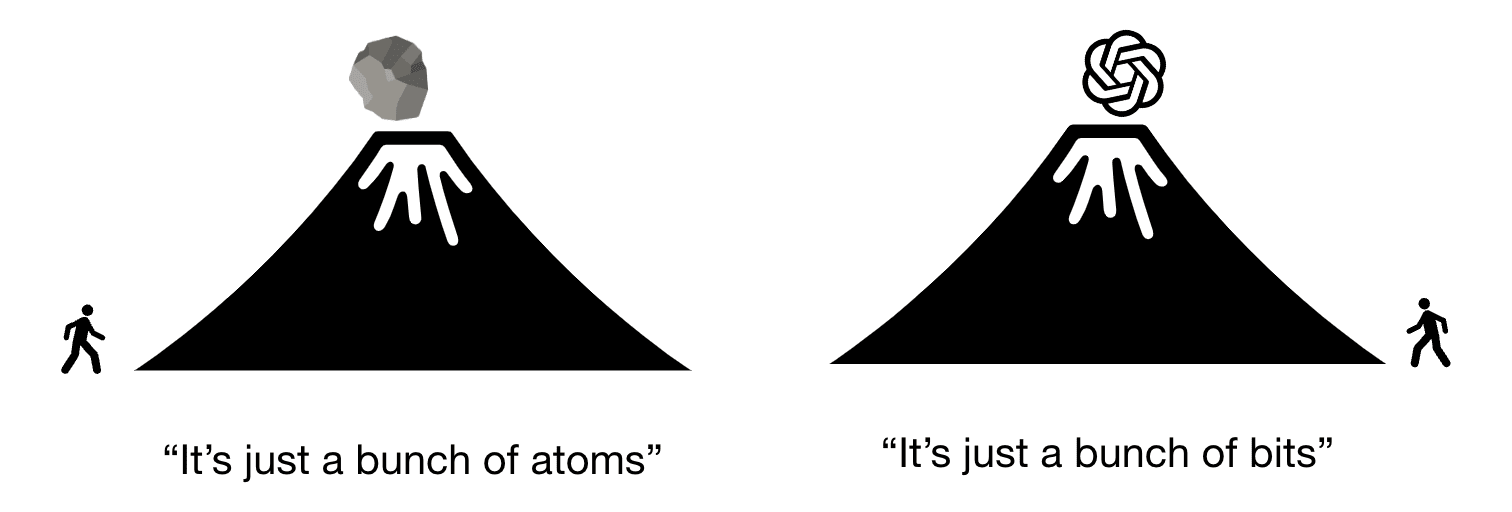

The parallel between extreme physical and cognitive conditions becomes clearer when we consider potential energy. A boulder perched on a hillside is "just a bunch of atoms," yet those atoms, arranged in a configuration of high potential energy, represent a latent capacity to do physical work. When that potential is released in an uncontrolled way, the results can be… uncomfortable. We understand intuitively that the danger lies not in the atoms themselves, but in their arrangement in a state far from equilibrium, positioned to do work.

Similarly, a large learning machine’s weights are "just a bunch of bits." Yet these bits represent a configuration of information that is extremely unlikely to occur by chance; that is, the learning machine’s weights are in a state of extremely low entropy. This low entropy state is achieved through computational effort - the cognitive equivalent of pushing a boulder uphill. When these weights are used to make decisions, they're performing cognitive work: transforming information and reshaping the world's informational state in ways that wouldn't occur naturally.

One way to think about AI systems is not just as tools or agents, but as concentrations of cognitive work, capable of creating and maintaining patterns that wouldn't otherwise exist.

Cognitive Work and Stable Patterns

Just as Earth's weather systems are maintained by constant solar energy input, continuous injection of cognitive work by AI systems could soon create and maintain stable patterns in our civilisation’s “computational weather”.

What makes these patterns particularly concerning for AI safety is their emergent nature. No single AI system needs to be misaligned in any traditional sense for problematic patterns to emerge from their collective operation. Just as individual water molecules don't "intend" to create a whirlpool, individual AI systems making locally optimal decisions might collectively maintain stable patterns that effectively constrain human agency or understanding.

Phase Transitions

Just as matter undergoes qualitative changes at critical points - like water becoming steam or atoms ionising into plasma - systems under continuous cognitive work injection might experience sharp transitions in their behaviour. The transition from human-comprehensible patterns to machine-driven order may not be smooth or gradual. Instead, there may be critical thresholds where the speed, density, or complexity of cognitive work suddenly enables new kinds of self-stabilising patterns.

These transitions might manifest as sudden shifts in market dynamics, unexpected emergent behaviours in recommendation systems, or abrupt changes in the effective power of human decision-making.

Conclusion

The first steam engines changed more than just the factories that housed them. As cognitive work begins to pool and flow through our information systems, we might ask: what new kinds of weather are we creating? And are we prepared for the storms?

Related Work

The concept of cognitive work and its systemic effects connects to several existing threads in the literature on AI safety and complex systems.

The perspective taken here is similar to that of Yudkowsky in “Intelligence Explosion Microeconomics” (2013) who emphasises optimisation power as “the ability to steer the future into regions of possibility ranked high in a preference ordering”, see also “Measuring Optimization Power [LW · GW]” (2008).

Andrew Critch's work on "What multipolar failure looks like [LW · GW]" (2021) introduces the concept of robust agent-agnostic processes (RAAPs) - stable patterns that emerge from multiple AI systems interacting in society. While Critch approaches this from a different theoretical angle, his emphasis on emergent patterns that resist change closely parallels our discussion of stable structures maintained by continuous cognitive work.

Paul Christiano's "Another (outer) alignment failure story [LW · GW]" (2021) describes how AI systems might gradually reshape social and economic processes through accumulated optimisation, even while appearing beneficial at each step. This aligns closely with our framework's emphasis on how continuous injection of cognitive work might create concerning patterns without any single system being misaligned, though we provide a different mechanical explanation through the thermodynamic analogy.

Recent socio-technical perspectives on AI risk, for example Seth Lazar's work on democratic legitimacy and institutional decision-making, emphasise how AI systems reshape the broader contexts in which human agency operates. Our framework of cognitive work and pattern formation suggests specific mechanisms by which this reshaping might occur, particularly through phase transitions in the effective power of human decision-making as cognitive work accumulation crosses critical thresholds.

The application of thermodynamic concepts to economic systems has a rich history that informs our approach. Early empirical evidence for the kind of pattern formation we describe can be found in studies of algorithmic trading. Johnson et al.'s "Financial black swans driven by ultrafast machine ecology" (2012) documents the emergence of qualitatively new market behaviours at machine timescales, while Farmer and Skouras's "An ecological perspective on the future of computer trading" (2013) explicitly frames these as ecological patterns emerging from algorithmic interaction. These studies provide concrete examples of how concentrated computational power can create stable structures that operate beyond human comprehension while fundamentally affecting human interests.

9 comments

Comments sorted by top scores.

comment by Thomas Kwa (thomas-kwa) · 2024-12-09T11:01:21.256Z · LW(p) · GW(p)

I'm pretty skeptical of this because the analogy seems superficial. Thermodynamics says useful things about abstractions like "work" because we have the laws of thermodynamics. What are the analogous laws for cognitive work / optimization power? It's not clear to me that it can be quantified such that it is easily accounted for:

- We all come from evolution. Where did the cognitive work come from?

- Algorithms can be copied

- Passwords can unlock optimization [LW(p) · GW(p)]

It is also not clear what distinguishes LLM weights from the weights of a model trained on random labels from a cryptographic PRNG. Since the labels are not truly random, they have the same amount of optimization done to them, but since CSPRNGs can't be broken just by training LLMs on them, the latter model is totally useless while the former is potentially transformative.

My guess is this way of looking at things will be like memetics in relation to genetics: likely to spawn one or two useful expressions like "memetically fit", but due to the inherent lack of structure in memes compared to DNA life, not a real field compared to other ways of measuring AIs and their effects (scaling laws? SLT?). Hope I'm wrong.

Replies from: dmurfet, alexander-gietelink-oldenziel

↑ comment by Daniel Murfet (dmurfet) · 2024-12-09T20:16:42.017Z · LW(p) · GW(p)

The analogous laws are just information theory.

Re: a model trained on random labels. This seems somewhat analogous to building a power plant out of dark matter; to derive physical work it isn't enough to have some degrees of freedom somewhere that have a lot of energy, one also needs a chain of couplings between those degrees of freedom and the degrees of freedom you want to act on. Similarly, if I want to use a model to reduce my uncertainty about something, I need to construct a chain of random variables with nonzero mutual information linking the question in my head to the predictive distribution of the model.

To take a concrete example: suppose I am thinking about a chemistry question with four choices A, B, C, D. Without any information other than these letters, the model cannot reduce my uncertainty (say I begin with equal belief in all four options). However, if I provide a prompt describing the question, and the model has been trained on chemistry, then this information sets up a correspondence between this distribution over four letters and something the model knows about; its answer may then reduce my distribution to being equally uncertain between A and B while knowing C and D are wrong (a change of 1 bit in my entropy).
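The 1-bit figure in this example can be checked directly with Shannon entropy. The following is a minimal sketch, not part of the original comment; the function name `entropy_bits` and the two dictionaries are illustrative assumptions that simply encode the uniform prior over four options and the posterior with C and D ruled out.

```python
import math


def entropy_bits(dist):
    """Shannon entropy, in bits, of a discrete distribution {outcome: probability}."""
    # Outcomes with zero probability contribute nothing to the sum.
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


# Assumed prior: equal belief in all four options.
prior = {"A": 0.25, "B": 0.25, "C": 0.25, "D": 0.25}

# Assumed posterior after the model's answer: C and D ruled out,
# A and B still equally likely.
posterior = {"A": 0.5, "B": 0.5, "C": 0.0, "D": 0.0}

h_prior = entropy_bits(prior)          # 2 bits
h_posterior = entropy_bits(posterior)  # 1 bit
reduction = h_prior - h_posterior      # 1 bit

print(f"prior: {h_prior} bits, posterior: {h_posterior} bits, reduction: {reduction} bit")
```

With a uniform prior the entropy is log2(4) = 2 bits, and with two equally likely options remaining it is log2(2) = 1 bit, so the model's answer removes 1 bit of uncertainty.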

Since language models are good general compressors this seems to work in reasonable generality.

Ideally we would like the model to push our distribution towards true answers, but it doesn't necessarily know true answers, only some approximation; thus the work being done is nontrivially directed, and has a systematic overall effect due to the nature of the model's biases.

I don't know about evolution. I think it's right that the perspective has limits and can just become some empty slogans outside of some careful usage. I don't know how useful it is in actually technically reasoning about AI safety at scale, but it's a fun idea to play around with.

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-12-27T22:12:56.140Z · LW(p) · GW(p)

I agree with you that as stated the analogy is risking dangerous superficiality.

- The 'cognitive' work of evolution came from the billion years of evolution in the innumerable forms of life that lived, hunted and reproduced through the eons. Effectively we could see evolution-by-natural selection as something like a simple, highly-parallel, stochastic, slow algorithm. I.e. a simple many-tape random Turing machine taking a very large number of timesteps.

A way to try and maybe put some (vegan) meat on the bones of this analogy would be to look at conditional KT-complexity. KT-complexity is a version of Kolmogorov complexity that also accounts for the time cost of running the generating program.

- In KT-complexity pseudorandomness functions just like randomness.

- Algorithms may indeed be copied and the copy operation is fast and takes very little memory overhead.

- Just as in Kolmogorov complexity we rejiggle and think in terms of an algorithmic probability.

- a private-public key is trivial in a pure Kolmogorov complexity framework but correctly modelled in a KT-complexity framework.

To deepen the analogy with thermodynamics one should probably carefully read John Wentworth's generalized heat engines [LW · GW] and Kolmogorov sufficient statistics.

Replies from: dmurfet

↑ comment by Daniel Murfet (dmurfet) · 2024-12-28T01:07:28.034Z · LW(p) · GW(p)

To be clear, I am not arguing that evolution is an example of what I'm talking about. The analogy to thermodynamics in what I wrote is straightforwardly correct, no need to introduce KT-complexity and muddy the waters; what I am calling work is literally work.

comment by Stephen Fowler (LosPolloFowler) · 2024-12-09T09:51:32.168Z · LW(p) · GW(p)

Worth emphasizing that cognitive work is more than just a parallel to physical work; it is literally Work in the physical sense.

The reduction in entropy required to train a model means that there is a minimum amount of work required to do it.
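One standard way to put a number on such a lower bound is Landauer's principle, which the comment does not name and which is brought in here as an assumption: reducing the entropy of a physical memory by one bit, in contact with an environment at temperature T, costs at least k_B · T · ln 2 of work. The sketch below uses a hypothetical function name and a hypothetical bit count purely for illustration; it says nothing about the actual entropy reduction involved in training any real model.

```python
import math

# Boltzmann constant in joules per kelvin (exact value in the 2019 SI).
BOLTZMANN_J_PER_K = 1.380649e-23


def landauer_min_work_joules(bits, temperature_kelvin=300.0):
    """Landauer lower bound on the work needed to reduce entropy by `bits` bits.

    Assumes the entropy reduction happens in contact with a heat bath at the
    given temperature; real hardware dissipates far more than this bound.
    """
    return bits * BOLTZMANN_J_PER_K * temperature_kelvin * math.log(2)


# Bound for a single bit at an assumed room temperature of 300 K.
per_bit = landauer_min_work_joules(1)

# Hypothetical example figure: an entropy reduction of 10**12 bits.
hypothetical_total = landauer_min_work_joules(10**12)

print(f"per bit at 300 K: {per_bit:.3e} J")
print(f"hypothetical 1e12 bits: {hypothetical_total:.3e} J")
```

At 300 K the bound is roughly 2.9e-21 J per bit, which is many orders of magnitude below what current datacenters actually spend, so the bound is a floor rather than an estimate.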

I think this is a very important research direction, not merely as an avenue for communicating and understanding AI Safety concerns, but potentially as a framework for developing AI Safety techniques.

There is some minimum amount of cognitive work required to pose an existential threat; perhaps it is much higher than the amount of cognitive work required to perform economically useful tasks.

comment by Jonas Hallgren · 2024-12-09T08:35:12.628Z · LW(p) · GW(p)

Good stuff!

I'm curious if you have any thoughts on the computational foundations one would need to measure and predict cognitive work properly?

In Agent Foundations, you've got this idea of boundaries [? · GW] which can be seen as one way of saying a pattern that persists over time. One way that this is formalised in Active Inference is through Markov Blankets and the idea that any self-persistent entity could be described as a Markov blanket minimizing the free energy of its environment.

My thinking here is that if we apply this properly it would allow us to generalise notions of agents beyond what we normally think of them and instead see them as any sort of system that follows this definition.

For example, we could look at an institution or a collective of AIs as a self-consistent entity applying cognitive work on the environment to survive. The way to detect these collectives would be to look at what self-consistent entities are changing the "optimisation landscape" or "free energy landscape" around it the most. This would then give us the most highly predictive agents in the local environment.

A nice thing is that it centers the cognitive work/optimisation power applied in the analysis and so I'm thinking that it might be more predictive of future dynamics of cognitive systems as a consequence?

Another example is if we continue on the Critch train, some of his later work includes TASRA for example. We can see these as stories of human disempowerment, that is patterns that lose their relevance over time as they get less causal power over future states. In other words, entities that are not under the causal power of humans increasingly take over the cognitive work lightcone/the inputs to the free energy landscape.

As previously stated, I'm very interested to hear if you've got more thoughts on how to measure and model cognitive work.

↑ comment by Daniel Murfet (dmurfet) · 2024-12-09T10:05:32.878Z · LW(p) · GW(p)

Yes this seems like an important question but I admit I don't have anything coherent to say yet. A basic intuition from thermodynamics is that if you can measure the change in the internal energy between two states, and the heat transfer, you can infer how much work was done even if you're not sure how it was done. So maybe the problem is better thought of as learning to measure enough other quantities that one can infer how much cognitive work is being done.
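The thermodynamic intuition referred to here is the first law. Written out under one common sign convention (an assumption: $Q$ is the heat transferred into the system and $W$ is the work done by the system), it reads:

```latex
\Delta U = Q - W
\quad\Longrightarrow\quad
W = Q - \Delta U
```

So measuring the change in internal energy $\Delta U$ and the heat transfer $Q$ fixes $W$ without any knowledge of the mechanism that performed the work. Whether analogous measurable quantities exist for cognitive work is exactly the open question raised in this reply.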

For all I know there is a developed thermodynamic theory of learning agents out there which already does this, but I didn't find it yet...

comment by 4gate · 2024-12-09T12:35:00.716Z · LW(p) · GW(p)

This seems like a pretty cool perspective, especially since it might make analysis a little simpler vs. a paradigm where you kind of need to know what to look out for specifically. Are there any toy mathematical models or basically simulated words/stories, etc... to make this more concrete? I briefly looked at some of the slides you shared but it doesn't seem to be there (though maybe I missed something, since I didn't watch the entire video(s)).

I'm not honestly sure exactly what this would look like since I don't fully understand much here beyond the notion that concentration of intelligence/cognition can lead to larger magnitude outcomes (which we probably already knew) and the idea that maybe we could measure this or use it to reason in some way (which maybe we aren't doing so much). Maybe we could have some sort of simulated game where different agents get to control civilizations (i.e. like Civ 5) and among the things they can invest (their resources) in, there is some measure of "cognition" (i.e. maybe it lets them plan further ahead or gives them the ability to take more variables into consideration when making decisions or to see more of the map...). With that said, it's not clear to me what would come out of this simulation other than maybe getting a notion of the relative value (in different contexts) of cognitive vs. physical investments (i.e. having smarter strategists vs. building a better castle). There's no clear question or hypothesis that comes to mind right now.

It looks like from some other comments that literature on agent foundations might be relevant, but I'm not familiar. If I get time I might look into it in the future. Are these sorts of frameworks usable for actual decision making right now (and if so how can we tell/not) or are they still exploratory?

Generally just curious if there's a way to make this more concrete i.e. to understand it better.

Replies from: dmurfet

↑ comment by Daniel Murfet (dmurfet) · 2024-12-09T20:58:31.349Z · LW(p) · GW(p)

That simulation sounds cool. The talk certainly doesn't contain any details and I don't have a mathematical model to share at this point. One way to make this more concrete is to think through Maxwell's demon as an LLM, for example in the context of Feynman's lectures on computation. The literature on thermodynamics of computation (various experts, like Adam Shai and Paul Riechers, are around here and know more than me) implicitly or explicitly touches on relevant issues.