yanni's Shortform

post by yanni kyriacos (yanni) · 2024-03-13T23:58:40.245Z · LW · GW · 116 comments


Comments sorted by top scores.

comment by yanni kyriacos (yanni) · 2024-06-06T20:04:18.952Z · LW(p) · GW(p)

Prediction: In 6-12 months people are going to start leaving DeepMind and Anthropic for similar-sounding reasons to those currently leaving OpenAI (50% likelihood).

> Surface level read of what is happening at OpenAI; employees are uncomfortable with specific safety policies.

> Deeper, more transferable, harder to solve problem; no person that is sufficiently well-meaning and close enough to the coal face at Big Labs can ever be reassured they're doing the right thing continuing to work for a company whose mission is to build AGI.

Basically, this is less about "OpenAI is bad" and more "Making AGI is bad".

Replies from: JamesPayor, neel-nanda-1↑ comment by James Payor (JamesPayor) · 2024-06-07T11:18:24.942Z · LW(p) · GW(p)

I think this is kinda likely, but will note that people seem to take quite a while before they end up leaving.

If OpenAI (both recently and the first exodus) is any indication, I think it might take longer for issues to gel and become clear enough to have folks more-than-quietly leave.

↑ comment by Neel Nanda (neel-nanda-1) · 2024-06-07T10:19:09.526Z · LW(p) · GW(p)

I'd be pretty surprised

Replies from: yanni↑ comment by yanni kyriacos (yanni) · 2024-06-08T00:44:24.194Z · LW(p) · GW(p)

Hey mate thanks for the comment. I'm finding "pretty surprised" hard to interpret. Is that closer to 1% or 15%?

comment by yanni kyriacos (yanni) · 2024-03-28T01:35:59.969Z · LW(p) · GW(p)

I like the fact that despite not being (relatively) young when they died, the LW banner states that Kahneman & Vinge have died "FAR TOO YOUNG", pointing to the fact that death is always bad and/or it is bad when people die when they were still making positive contributions to the world (Kahneman published "Noise" in 2021!).

Replies from: lahwran, neel-nanda-1, neil-warren↑ comment by the gears to ascension (lahwran) · 2024-03-28T15:06:31.084Z · LW(p) · GW(p)

I like it too, and because your comment made me think about it, I now kind of wish it said "orders of magnitude too young"

↑ comment by Neel Nanda (neel-nanda-1) · 2024-03-29T12:02:50.443Z · LW(p) · GW(p)

What banner?

Replies from: neil-warren↑ comment by Neil (neil-warren) · 2024-03-29T13:55:30.606Z · LW(p) · GW(p)

They took it down real quick for some reason.

Replies from: lahwran, habryka4↑ comment by the gears to ascension (lahwran) · 2024-03-29T20:59:31.087Z · LW(p) · GW(p)

it's still there for me

Replies from: lahwran↑ comment by the gears to ascension (lahwran) · 2024-03-30T14:42:04.944Z · LW(p) · GW(p)

gone now

↑ comment by habryka (habryka4) · 2024-03-31T03:51:55.353Z · LW(p) · GW(p)

Oh, it seemed like the kind of thing you would only keep up around the time of death, and we kept it up for a bit more than a day. Somehow it seemed inappropriate to keep it up for longer.

↑ comment by Neil (neil-warren) · 2024-03-28T23:49:08.070Z · LW(p) · GW(p)

This reminds me of when Charlie Munger died at 99, and many said of him "he was just a child". Less of a nod to transhumanist aspirations, and more to how he retained his sparkling energy and curiosity up until death. There are quite a few good reasons to write "dead far too young".

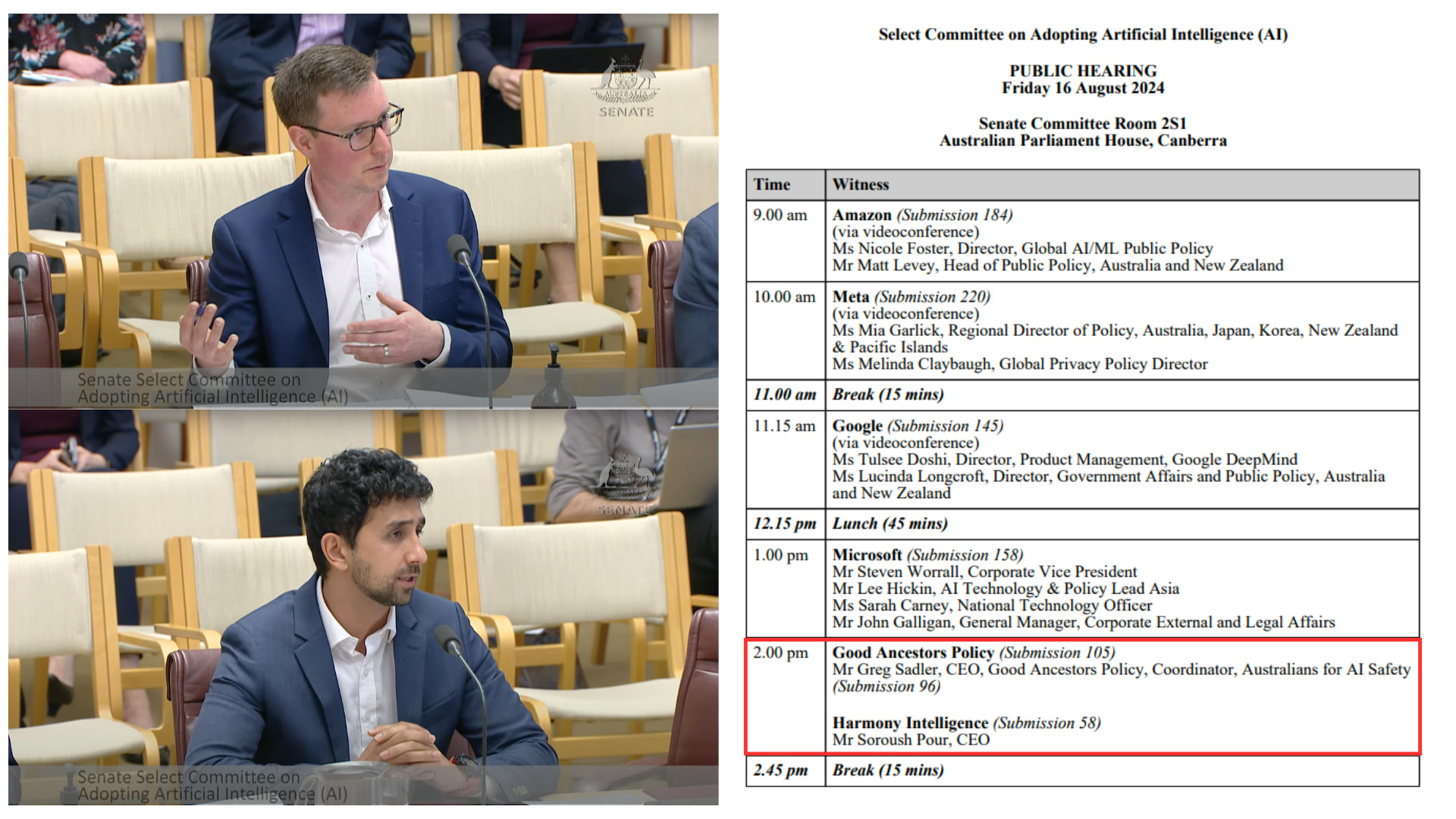

comment by yanni kyriacos (yanni) · 2024-08-16T05:11:21.083Z · LW(p) · GW(p)

[IMAGE] Extremely proud and excited to watch Greg Sadler (CEO of Good Ancestors Policy) and Soroush Pour (Co-Founder of Harmony Intelligence) speak to the Australian Federal Senate Select Committee on Artificial Intelligence. This is a big moment for the local movement. Also, check out who they ran after.

↑ comment by habryka (habryka4) · 2024-08-16T05:15:38.892Z · LW(p) · GW(p)

(Looks like the image got lost, probably you submitted the comment before it fully finished uploading)

Replies from: yanni↑ comment by yanni kyriacos (yanni) · 2024-08-16T10:10:38.114Z · LW(p) · GW(p)

Thanks for the heads up! Should be there now :)

comment by yanni kyriacos (yanni) · 2024-05-05T11:03:21.496Z · LW(p) · GW(p)

Something I'm confused about: what is the threshold that needs meeting for the majority of people in the EA community to say something like "it would be better if EAs didn't work at OpenAI"?

Imagining the following hypothetical scenarios over 2024/25, I can't confidently predict whether each of them would individually cause that response within EA:

- Ten to fifteen more OpenAI staff quit for varied and unclear reasons. No public info is gained outside of rumours

- There is another board shakeup because senior leaders seem worried about Altman. Altman stays on

- Superalignment team is disbanded

- OpenAI doesn't let the UK or US AISIs safety-test GPT5/6 before release

- There are strong rumours they've achieved weakly general AGI internally at end of 2025

↑ comment by Carl Feynman (carl-feynman) · 2024-05-05T19:32:46.766Z · LW(p) · GW(p)

This question is two steps removed from reality. Here’s what I mean by that. Putting brackets around each of the two steps:

what is the threshold that needs meeting [for the majority of people in the EA community] [to say something like] "it would be better if EAs didn't work at OpenAI"?

Without these steps, the question becomes

What is the threshold that needs meeting before it would be better if people didn’t work at OpenAI?

Personally, I find that a more interesting question. Is there a reason why the question is phrased at two removes like that? Or am I missing the point?

↑ comment by LawrenceC (LawChan) · 2024-05-05T19:04:57.218Z · LW(p) · GW(p)

What does a "majority of the EA community" mean here? Does it mean that people who work at OAI (even on superalignment or preparedness) are shunned from professional EA events? Does it mean that when they ask, people tell them not to join OAI? And who counts as "in the EA community"?

I don't think it's that constructive to bar people from all or even most EA events just because they work at OAI, even if there's a decent amount of consensus people should not work there. Of course, it's fine to host events (even professional ones!) that don't invite OAI people (or Anthropic people, or METR people, or FAR AI people, etc), and they do happen, but I don't feel like barring people from EAG or e.g. Constellation just because they work at OAI would help make the case (not that there's any chance of this happening in the near term), and it would most likely backfire.

I think that currently, many people (at least in the Berkeley EA/AIS community) will tell you to not join OAI if asked. I'm not sure if they form a majority in terms of absolute numbers, but they're at least a majority in some professional circles (e.g. both most people at FAR/FAR Labs and at Lightcone/Lighthaven would probably say this). I also think many people would say that on the margin, too many people are trying to join OAI rather than other important jobs. (Due to factors like OAI paying a lot more than non-scaling lab jobs/having more legible prestige.)

Empirically, it sure seems significantly more people around here join Anthropic than OAI, despite Anthropic being a significantly smaller company.

Though I think almost none of these people would advocate for ~0 x-risk motivated people to work at OAI, only that the marginal x-risk concerned technical person should not work at OAI.

What specific actions are you hoping for here, that would cause you to say "yes, the majority of EA people say 'it's better to not work at OAI'"?

↑ comment by Dagon · 2024-05-05T16:14:43.800Z · LW(p) · GW(p)

[ I don't consider myself EA, nor a member of the EA community, though I'm largely compatible in my preferences ]

I'm not sure it matters what the majority thinks, only what marginal employees (those who can choose whether or not to work at OpenAI) think. And what you think, if you are considering whether to apply, or whether to use their products and give them money/status.

Personally, I just took a job in a related company (working on applications, rather than core modeling), and I have zero concerns that I'm doing the wrong thing.

[ in response to request to elaborate: I'm not going to at this time. It's not secret, nor is my identity generally, but I do prefer not to make it too easy for 'bots or searchers to tie my online and real-world lives together. ]

comment by yanni kyriacos (yanni) · 2024-04-21T04:45:36.734Z · LW(p) · GW(p)

I recently discovered the idea of driving all blames into oneself, which immediately resonated with me. It is relatively hardcore; the kind of thing that would turn David Goggins into a Buddhist.

Gemini did a good job of summarising it:

This quote by Pema Chödron, a renowned Buddhist teacher, represents a core principle in some Buddhist traditions, particularly within Tibetan Buddhism. It's called "taking full responsibility" or "taking self-blame" and can be a bit challenging to understand at first. Here's a breakdown:

What it Doesn't Mean:

- Self-Flagellation: This practice isn't about beating yourself up or dwelling on guilt.

- Ignoring External Factors: It doesn't deny the role of external circumstances in a situation.

What it Does Mean:

- Owning Your Reaction: It's about acknowledging how a situation makes you feel and taking responsibility for your own emotional response.

- Shifting Focus: Instead of blaming others or dwelling on what you can't control, you direct your attention to your own thoughts and reactions.

- Breaking Negative Cycles: By understanding your own reactions, you can break free from negative thought patterns and choose a more skillful response.

Analogy:

Imagine a pebble thrown into a still pond. The pebble represents the external situation, and the ripples represent your emotional response. While you can't control the pebble (the external situation), you can control the ripples (your reaction).

Benefits:

- Reduced Suffering: By taking responsibility for your own reactions, you become less dependent on external circumstances for your happiness.

- Increased Self-Awareness: It helps you understand your triggers and cultivate a more mindful response to situations.

- Greater Personal Growth: By taking responsibility, you empower yourself to learn and grow from experiences.

Here are some additional points to consider:

- This practice doesn't mean excusing bad behavior. You can still hold others accountable while taking responsibility for your own reactions.

- It's a gradual process. Be patient with yourself as you learn to practice this approach.

↑ comment by TsviBT · 2024-04-21T20:05:17.812Z · LW(p) · GW(p)

This practice doesn't mean excusing bad behavior. You can still hold others accountable while taking responsibility for your own reactions.

Well, what if there's a good piece of code (if you'll allow the crudity) in your head, and someone else's bad behavior is geared at hacking/exploiting that piece of code? The harm done is partly due to that piece of code and its role in part of your reaction to their bad behavior. But the implication is that they should stop with their bad behavior, not that you should get rid of the good code. I believe you'll respond "Ah, but you see, there's more than two options. You can change yourself in ways other than just deleting the code. You could recognize how the code is actually partly good and partly bad, and refactor it; and you could add other code to respond skillfully to their bad behavior; and you can add other code to help them correct their behavior.". Which I totally agree with, but at this point, what's being communicated by "taking self-blame" other than at best "reprogram yourself in Good/skillful ways" or more realistically "acquiesce to abuse"?

↑ comment by Selfmaker662 · 2024-04-21T17:03:10.740Z · LW(p) · GW(p)

The Stoics put this idea in a much kinder way: control the controllable (specifically our actions and attitudes), accept the uncontrollable.

The problem is, people's could's are broken. I have managed to make myself much unhappier by thinking I can control my actions until I read Nate Soares' post I linked above. You can't, even in the everyday definition of control, forgetting about paradoxes of "free will" [? · GW].

↑ comment by sweenesm · 2024-04-21T12:27:38.411Z · LW(p) · GW(p)

Nice write up on this (even if it was AI-assisted), thanks for sharing! I believe another benefit is Raising One's Self-Esteem: If high self-esteem can be thought of as consistently feeling good about oneself, then if someone takes responsibility for their emotions, recognizing that they can change their emotions at will, they can consistently choose to feel good about and love themselves as long as their conscience is clear.

This is in line with "The Six Pillars of Self-Esteem" by Nathaniel Branden: living consciously, self-acceptance, self-responsibility, self-assertiveness, living purposefully, and personal integrity.

comment by yanni kyriacos (yanni) · 2024-08-02T01:33:37.404Z · LW(p) · GW(p)

I don't know how to say this in a way that won't come off harsh, but I've had meetings with > 100 people in the last 6 months in AI Safety and I've been surprised how poor the industry standard is for people:

- Planning meetings (writing emails that communicate what the meeting will be about, its purpose, my role)

- Turning up to scheduled meetings (like literally, showing up at all)

- Turning up on time

- Turning up prepared (i.e. with informed opinions on what we're going to discuss)

- Turning up with clear agendas (if they've organised the meeting)

- Running the meetings

- Following up after the meeting (clear communication about next steps)

I do not have an explanation for what is going on here but it concerns me about the movement interfacing with government and industry. My background is in industry and these just seem to be things people take for granted?

It is possible I give off a chill vibe, leading to people thinking these things aren't necessary, and they'd switch gears for more important meetings!

↑ comment by habryka (habryka4) · 2024-08-02T02:12:47.013Z · LW(p) · GW(p)

When people reach out to me to meet without an obvious business context I tend to be quite lax about my standards (it's them who asked for my time, I am not going to also put in 30 minutes to prepare for the meeting).

Not sure whether that's what you are running into, but I have a huge amount of variance in how much effort I put into different meetings.

Replies from: yanni↑ comment by yanni kyriacos (yanni) · 2024-08-02T02:45:19.118Z · LW(p) · GW(p)

Hey thanks for your thoughts! I'm not sure what you mean by "obvious business context", but I'm either meeting friends / family (not business) or colleagues / community members (business).

Is there a third group that I'm missing?

If you're actually talking about the second group, I wonder how much your and their productivity would be improved if you were less lax about it?

↑ comment by habryka (habryka4) · 2024-08-02T02:49:12.832Z · LW(p) · GW(p)

By 'obvious business context' I mean something like "there is a clear business/mission proposition for why I am taking this meeting".

I wonder how much your and their productivity would be improved if you were less lax about it?

My guess is mine and their productivity would be reduced because the meetings would be a bunch costlier and I would meet with fewer people.

↑ comment by yanni kyriacos (yanni) · 2024-08-02T01:42:28.504Z · LW(p) · GW(p)

It just occurred to me that maybe posting this isn't helpful. And maybe net negative. If I get enough feedback saying it is then I'll happily delete.

comment by yanni kyriacos (yanni) · 2024-05-01T06:30:21.653Z · LW(p) · GW(p)

There have been multiple occasions where I've copy and pasted email threads into an LLM and asked it things like:

- What is X person saying

- What are the cruxes in this conversation?

- Summarise this conversation

- What are the key takeaways

- What views are being missed from this conversation

I really want an email plugin that basically brute forces rationality INTO email conversations.

Replies from: johannes-c-mayer↑ comment by Johannes C. Mayer (johannes-c-mayer) · 2024-05-01T09:37:26.472Z · LW(p) · GW(p)

In principle, you could use Whisper or any other ASR system with high accuracy to enforce something like this during a live conversation.

Replies from: yanni↑ comment by yanni kyriacos (yanni) · 2024-05-01T11:00:52.828Z · LW(p) · GW(p)

Hi Johannes! Thanks for the suggestion :) I'm not sure I'd want it in the middle of a video call, but maybe in a forum context like this it could be cool?

Replies from: johannes-c-mayer↑ comment by Johannes C. Mayer (johannes-c-mayer) · 2024-05-01T12:16:53.293Z · LW(p) · GW(p)

Seems pretty good to me to have this in a video call. The main reason I don't immediately try this out is that I would need to write a program to do it.

Replies from: yanni↑ comment by yanni kyriacos (yanni) · 2024-05-01T23:19:57.171Z · LW(p) · GW(p)

That seems fair enough!

comment by yanni kyriacos (yanni) · 2024-03-29T00:17:36.363Z · LW(p) · GW(p)

I have heard rumours that an AI Safety documentary is being made. Separate to this, a good friend of mine is also seriously considering making one, but he isn't "in" AI Safety. If you know who this first group is and can put me in touch with them, it might be worth getting across each other's plans.

Replies from: William the Kiwi↑ comment by William the Kiwi · 2024-04-01T00:47:30.424Z · LW(p) · GW(p)

There is an AI x-risk documentary currently being filmed: An Inconvenient Doom. https://www.documentary-campus.com/training/masterschool/2024/inconvenient-doom It covers some aspects of AI safety, but doesn't focus on it exactly.

comment by yanni kyriacos (yanni) · 2024-07-04T23:17:57.390Z · LW(p) · GW(p)

More thoughts on "80,000 hours should remove OpenAI from the Job Board"

- - - -

A broadly robust heuristic I walk around with in my head is "the more targeted the strategy, the more likely it will miss the target".

Its origin is from when I worked in advertising agencies, where TV ads for car brands all looked the same because they needed to reach millions of people, while email campaigns were highly personalised.

The thing is, if you get hit with a tv ad for a car, worst case scenario it can't have a strongly negative effect because of its generic content (dude looks cool while driving his 4WD through rocky terrain). But when an email that attempts to be personalised misses the mark, you feel it (Hi {First Name : Last Name}!).

Anyway, effective altruism loves targeting. It is virtually built from the ground up on it (RCTs and all that, aversion to PR etc).

Anyway, I am thinking about this in relation to the recent "80,000 hours should remove OpenAI from the Job Board" post.

80k is applying an extremely targeted strategy by recommending people work in some jobs at the workplaces creating the possible death machines (caveat 1, caveat 2, caveat 3, ..., caveat n).

There are so many failure modes involved with those caveats that it is virtually impossible for this not to go catastrophically poorly in at least a few instances, taking the mental health of a bunch of young and well-meaning EAs with it.

comment by yanni kyriacos (yanni) · 2024-06-06T20:08:25.701Z · LW(p) · GW(p)

A judgement I'm attached to is that a person is either extremely confused or callous if they work in capabilities at a big lab. Is there some nuance I'm missing here?

Replies from: ann-brown, thomas-kwa, rhollerith_dot_com↑ comment by Ann (ann-brown) · 2024-06-06T23:00:23.080Z · LW(p) · GW(p)

I would consider, for the sake of humility, that they might disagree with your assessment for actual reasons, rather than assuming confusion is necessary. (I don't have access to their actual reasoning, apologies.)

Edit: To give you a toy model of reasoning to chew on -

Say a researcher has a p(doom from AGI) of 20% from random-origin AGI;

30% from military origin AGI;

10% from commercial lab origin AGI

(and perhaps other numbers elsewhere that are similarly suggestive).

They estimate the chances we develop AGI (relatively) soon as roughly 80%, regardless of their intervention.

They also happen to have a p(doom from not AGI) of 40% from combined other causes, and expect an aligned AGI to be able to effectively reduce this to something closer to 1% through better coordinating reasonable efforts.

What's their highest leverage action with that world model?
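One way to make the toy model above concrete is the sketch below. The numbers are the ones given in the comment; the rule for combining them is an assumption made here for illustration (in particular, that an AGI which does not cause doom counts as aligned and brings other-cause doom down to 1%, and that the 40% figure applies only in worlds without AGI). The names `total_p_doom` and `P_DOOM_BY_ORIGIN` are hypothetical.

```python
# Sketch of the toy world model. The combination rule is an assumption,
# not something stated in the comment.
P_AGI_SOON = 0.80          # chance AGI arrives soon, regardless of intervention
P_DOOM_NO_AGI = 0.40       # doom from other causes in worlds without AGI
P_DOOM_ALIGNED_AGI = 0.01  # residual other-cause doom when an aligned AGI helps

# p(doom from AGI), conditional on where the AGI originates
P_DOOM_BY_ORIGIN = {
    "random": 0.20,
    "military": 0.30,
    "commercial lab": 0.10,
}


def total_p_doom(p_doom_from_agi: float) -> float:
    """Overall p(doom) if AGI, when it arrives, comes from a single origin."""
    # AGI branch: either the AGI itself causes doom, or it is treated as
    # aligned and only the residual other-cause risk remains.
    agi_branch = p_doom_from_agi + (1 - p_doom_from_agi) * P_DOOM_ALIGNED_AGI
    # No-AGI branch: only the other causes apply.
    no_agi_branch = P_DOOM_NO_AGI
    return P_AGI_SOON * agi_branch + (1 - P_AGI_SOON) * no_agi_branch


totals = {origin: total_p_doom(p) for origin, p in P_DOOM_BY_ORIGIN.items()}

for origin, p in totals.items():
    print(f"{origin:>14}: {p:.1%}")
```

Under these assumptions the commercial-lab origin gives the lowest overall risk and the military origin the highest, which is the kind of comparison the closing question points at. A different combination rule could change the exact figures.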

↑ comment by yanni kyriacos (yanni) · 2024-06-07T00:01:37.073Z · LW(p) · GW(p)

Hi Ann! Thank you for your comment. Some quick thoughts:

"I would consider, for the sake of humility, that they might disagree with your assessment for actual reasons, rather than assuming confusion is necessary."

- Yep! I have considered this. The purpose of my post is to consider it (I am looking for feedback, not upvotes or downvotes).

"They also happen to have a p(doom from not AGI) of 40% from combined other causes, and expect an aligned AGI to be able to effectively reduce this to something closer to 1% through better coordinating reasonable efforts."

- This falls into the confused category for me. I'm not sure how you have a 40% p(doom) from something other than unaligned AGI. Could you spell out for me what could make such a large number?

↑ comment by Ann (ann-brown) · 2024-06-07T11:43:03.141Z · LW(p) · GW(p)

Here's a few possibilities:

- They predict that the catastrophic tipping points from climate change and perhaps other human-caused environmental changes will cause knock-on effects that eventually add up to our extinction, and the policy struggles to change that currently seem like we will not be able to pull them off despite observing clear initial consequences in terms of fire, storm, and ocean heating.

- They model a full nuclear exchange in the context of a worldwide war as being highly possible and only narrowly evaded so far, and consider the consequences of that to cause or at least be as bad as extinction.

- They are reasonably confident that pandemics arising or engineered without the help of AI could, in fact, take out our species under favorable circumstances, and worry the battlefield of public health is currently slipping towards the favor of diseases over time.

- Probably smaller contributors going forward: They are familiar with other religious groups inclined to bring about the apocalypse and have some actual concern over their chance of success. (Probably U.S.-focused.)

- They are looking at longer time frames, and are thinking of various catastrophes likely within the decades or centuries immediately after we would otherwise have developed AGI, some of them possibly caused by the policies necessary to not do so.

- They think humans may voluntarily decide it is not worth existing as a species unless we make it worth their while properly, and should not be stopped from making this choice. Existence, and the world as it is for humans, is hell in some pretty important and meaningful ways.

- They are not long-termists in any sense but stewardship, and are counting the possibility that everyone who exists and matters to them under a short-term framework ages and dies.

- They consider most humans to currently be in a state of suffering worse than non-existence, the s-risk of doom is currently 100%, and the 60% not-doom is mostly optimism we can make that state better.

And overall, generally, a belief that not-doom is fragile; that species do not always endure; that there is no guarantee, and our genus happens to be into the dice-rolling part of its lifespan even if we weren't doing various unusual things that might increase our risk as much as decrease. (Probably worth noting that several species of humans, our equals based on archaeological finds and our partners based on genomic, have gone extinct.)

↑ comment by Thomas Kwa (thomas-kwa) · 2024-06-06T21:07:52.428Z · LW(p) · GW(p)

Someone I know who works at Anthropic, not on alignment, has thought pretty hard about this and concluded it was better than alternatives. Some factors include

- by working on capabilities, you free up others for alignment work who were previously doing capabilities but would prefer alignment

- more competition on product decreases aggregate profits of scaling labs

At one point some kind of post was planned but I'm not sure if this is still happening.

I also think there are significant upskilling benefits [LW(p) · GW(p)] to working on capabilities, though I believe this less than I did the other day.

Replies from: yanni↑ comment by yanni kyriacos (yanni) · 2024-06-06T23:55:21.735Z · LW(p) · GW(p)

Thanks for your comment Thomas! I appreciate the effort. I have some questions:

- by working on capabilities, you free up others for alignment work who were previously doing capabilities but would prefer alignment

I am a little confused by this, would you mind spelling it out for me? Imagine "Steve" took a job at "FakeLab" in capabilities. Are you saying Steve making this decision creates a Safety job for "Jane" at "FakeLab", that otherwise wouldn't have existed?

- more competition on product decreases aggregate profits of scaling labs

Again I am a bit confused. You're suggesting that if, for e.g., General Motors announced tomorrow they were investing $20 billion to start an AGI lab, that would be a good thing?

Replies from: thomas-kwa↑ comment by Thomas Kwa (thomas-kwa) · 2024-06-07T05:23:19.109Z · LW(p) · GW(p)

- Jane at FakeLab has a background in interpretability but is currently wrangling data / writing internal tooling / doing some product thing because the company needs her to, because otherwise FakeLab would have no product and be unable to continue operating including its safety research. Steve has comparative advantage at Jane's current job.

- It seems net bad because the good effect of slowing down OpenAI is smaller than the bad effect of GM racing? But OpenAI is probably slowed down-- they were already trying to build AGI and they have less money and possibly less talent. Thinking about the net effect is complicated and I don't have time to do it here. The situation with joining a lab rather than founding one may also be different.

↑ comment by RHollerith (rhollerith_dot_com) · 2024-06-06T21:32:48.326Z · LW(p) · GW(p)

Maybe their goal is sabotage. Maybe they enjoy deception or consider themselves to be comparatively advantaged at it.

Replies from: yanni↑ comment by yanni kyriacos (yanni) · 2024-06-06T23:56:21.229Z · LW(p) · GW(p)

Hi Richard! Thanks for the comment. It seems to me that might apply to < 5% of people in capabilities?

Replies from: rhollerith_dot_com↑ comment by RHollerith (rhollerith_dot_com) · 2024-06-07T00:26:28.436Z · LW(p) · GW(p)

My probability is more like 0.1%.

comment by yanni kyriacos (yanni) · 2024-05-14T04:31:24.037Z · LW(p) · GW(p)

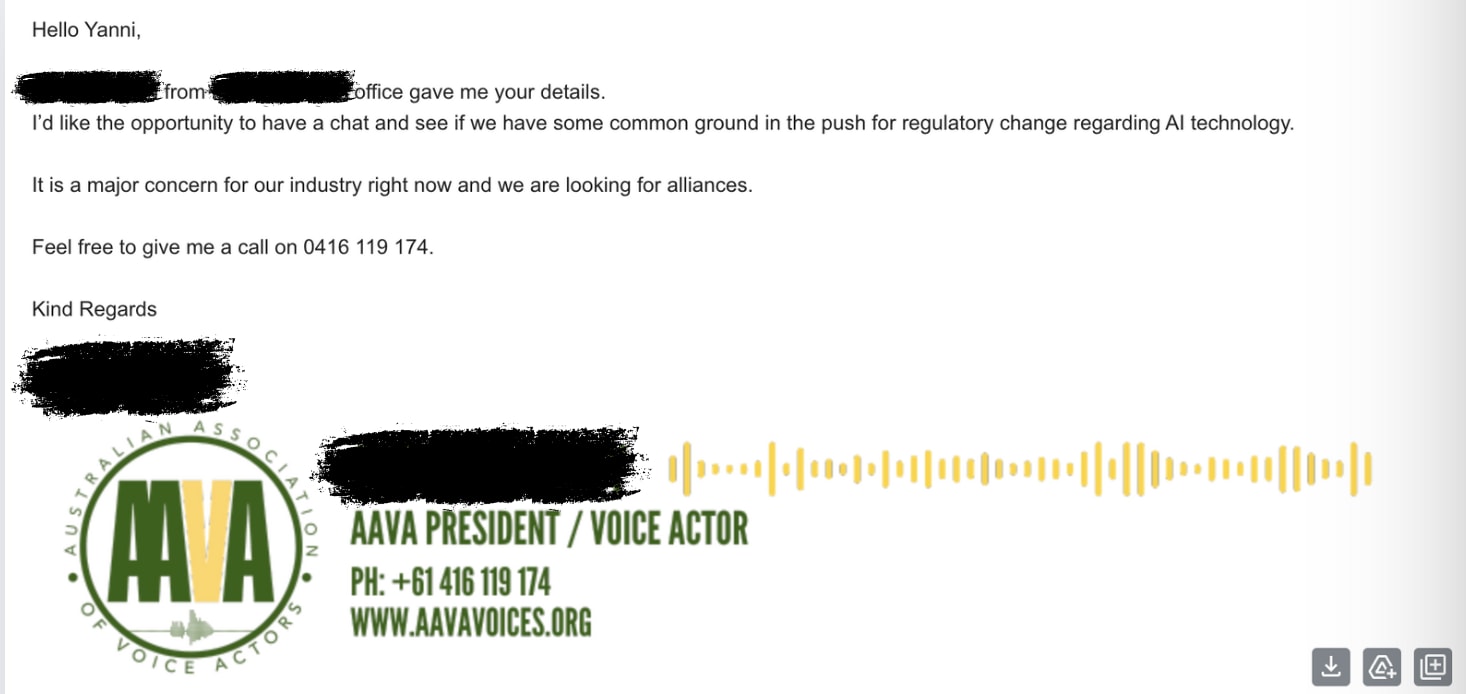

[PHOTO] I sent 19 emails to politicians, had 4 meetings, and now I get emails like this. There is SO MUCH low hanging fruit in just doing this for 30 minutes a day (I would do it but my LTFF funding does not cover this). Someone should do this!

↑ comment by niplav · 2024-05-14T09:45:58.993Z · LW(p) · GW(p)

The image is not showing.

Replies from: yanni↑ comment by yanni kyriacos (yanni) · 2024-05-14T23:33:29.690Z · LW(p) · GW(p)

Thanks for letting me know!

comment by yanni kyriacos (yanni) · 2024-05-13T05:58:03.282Z · LW(p) · GW(p)

I expect (~ 75%) that the decision to "funnel" EAs into jobs at AI labs will become a contentious community issue in the next year. I think that over time more people will think it is a bad idea. This may have PR and funding consequences too.

Replies from: D0TheMath↑ comment by Garrett Baker (D0TheMath) · 2024-05-13T19:49:21.402Z · LW(p) · GW(p)

This has been a disagreement people have had for many years. Why expect it to come to a head this year?

Replies from: yanni↑ comment by yanni kyriacos (yanni) · 2024-05-14T04:33:55.652Z · LW(p) · GW(p)

More people are going to quit labs / OpenAI. Will EA refill the leaky funnel?

comment by yanni kyriacos (yanni) · 2024-08-30T00:57:04.080Z · LW(p) · GW(p)

Three million people are employed by the travel (agent) industry worldwide. I am struggling to see how we don't lose 80%+ of those jobs to AI Agents in 3 years (this is ofc just one example). This is going to be an extremely painful process for a lot of people.

Replies from: faul_sname↑ comment by faul_sname · 2024-08-30T18:02:02.089Z · LW(p) · GW(p)

I am struggling to see how we do lose 80%+ of these jobs within the next 3 years.

Operationalizing this, I would give you 4:1 that the fraction (or raw number, if you'd prefer) of employees occupied as travel agents is over 20% of today's value, according to the Labor Force Statistics from the US Bureau of Labor Statistics Current Population Survey (BLS CPS) Characteristics of the Employed dataset.

For reference, here are the historical values for the BLS CPS series cpsaat11b ("Employed persons by detailed occupation and age") since 2011 (which is the earliest year they have it available as a spreadsheet). If you want to play with the data yourself, I put it all in one place in google sheets here.

As of the 2023 survey, about 0.048% of surveyed employees, and 0.029% of surveyed people, were travel agents. As such, I would be willing to bet at 4:1 that when the 2027 data becomes available, at least 0.0096% of surveyed employees and at least 0.0058% of surveyed Americans report their occupation as "Travel Agent".

Are you interested in taking the opposite side of this bet?

Edit: Fixed arithmetic error in the percentages in the offered bet
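The thresholds in the offered bet can be checked with a short sketch. The 2023 shares are the ones quoted in the comment; the helper names are hypothetical, and the reading of "4:1" (the offerer stakes 4 units against the taker's 1) is an assumption.

```python
# 2023 shares quoted in the comment, expressed in percent
SHARE_OF_EMPLOYEES_2023 = 0.048  # % of surveyed employees who were travel agents
SHARE_OF_PEOPLE_2023 = 0.029     # % of surveyed people who were travel agents

# Losing 80% of the jobs leaves 20% of today's value
REMAINING_FRACTION = 0.20


def bet_threshold(share_percent: float, remaining: float = REMAINING_FRACTION) -> float:
    """Share (in percent) below which the '80%+ of jobs lost' side wins."""
    return share_percent * remaining


def break_even_probability(own_stake: float, other_stake: float) -> float:
    """Win probability at which a bettor risking own_stake to win other_stake breaks even."""
    return own_stake / (own_stake + other_stake)


employee_threshold = bet_threshold(SHARE_OF_EMPLOYEES_2023)
people_threshold = bet_threshold(SHARE_OF_PEOPLE_2023)

# Assumed reading of 4:1: the offerer risks 4 units, the taker risks 1
taker_break_even = break_even_probability(own_stake=1, other_stake=4)
offerer_break_even = break_even_probability(own_stake=4, other_stake=1)

print(f"employee threshold: {employee_threshold:.4f}%")
print(f"people threshold:   {people_threshold:.4f}%")
print(f"taker breaks even at {taker_break_even:.0%}, offerer at {offerer_break_even:.0%}")
```

On that reading, taking the other side only makes sense for someone who puts more than about 20% on the 80%+ drop happening by the 2027 data.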

comment by yanni kyriacos (yanni) · 2024-05-20T22:06:07.372Z · LW(p) · GW(p)

Please help me find research on aspiring AI Safety folk!

I am two weeks into the strategy development phase of my movement building and almost ready to start ideating some programs for the year.

But I want these programs to be solving the biggest pain points people experience when trying to have a positive impact in AI Safety.

Has anyone seen any research that looks at this in depth? For example, through an interview process and then survey to quantify how painful the pain points are?

Some examples of pain points I've observed so far through my interviews with Technical folk:

- I often felt overwhelmed by the vast amount of material to learn.

- I felt there wasn’t a clear way to navigate learning the required information

- I lacked an understanding of my strengths and weaknesses in relation to different AI Safety areas (i.e. personal fit / comparative advantage).

- I lacked an understanding of my progress after I get started (e.g. am I doing well? Poorly? Fast enough?)

- I regularly experienced fear of failure

- I regularly experienced fear of wasted efforts / sunk cost

- Fear of admitting mistakes or starting over might prevent people from making necessary adjustments.

- I found it difficult to identify my desired role / job (i.e. the end goal)

- When I did think I knew my desired role, identifying the specific skills and knowledge required for a desired role was difficult

- There is no clear career pipeline: Do X and then Y and then Z and then you have an A% chance of getting B% role

- Finding time to get upskilled while working is difficult

- I found the funding ecosystem opaque

- A lot of discipline and motivation over potentially long periods was required to upskill

- I felt like nobody gave me realistic expectations as to what the journey would be like

comment by yanni kyriacos (yanni) · 2025-01-21T23:29:16.608Z · LW(p) · GW(p)

AI Safety has less money, talent, political capital, tech and time. We have only one distinct advantage: support from the general public. We need to start working that advantage immediately.

comment by yanni kyriacos (yanni) · 2024-09-22T02:28:14.350Z · LW(p) · GW(p)

Big AIS news imo: “The initial members of the International Network of AI Safety Institutes are Australia, Canada, the European Union, France, Japan, Kenya, the Republic of Korea, Singapore, the United Kingdom, and the United States.”

H/T @shakeel

Replies from: dmurfet↑ comment by Daniel Murfet (dmurfet) · 2024-09-22T02:49:31.884Z · LW(p) · GW(p)

It might be worth knowing that some countries are participating in the "network" without having formal AI safety institutes

comment by yanni kyriacos (yanni) · 2024-07-29T02:03:02.654Z · LW(p) · GW(p)

The Australian Federal Government is setting up an "AI Advisory Body" inside the Department of Industry, Science and Resources.

IMO that means Australia has an AISI in:

9 months (~ 30% confidence)

12 months (~ 50%)

18 months (~ 80%)
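Read as cumulative probabilities, the three numbers above also imply a probability for each window in between. A minimal sketch of that arithmetic, assuming the percentages are meant cumulatively (the function and variable names are illustrative, not from the comment):

```python
# Cumulative forecast from the comment:
# P(Australia has an AISI within N months), assumed to be cumulative.
cumulative = {9: 0.30, 12: 0.50, 18: 0.80}


def interval_probabilities(cumulative):
    """Convert cumulative probabilities into per-window probabilities."""
    windows = {}
    previous_month, previous_p = 0, 0.0
    for month in sorted(cumulative):
        p = cumulative[month]
        # Probability mass that falls between the previous horizon and this one.
        windows[(previous_month, month)] = round(p - previous_p, 10)
        previous_month, previous_p = month, p
    # Whatever is left over is the chance it takes longer or never happens.
    windows[(previous_month, None)] = round(1.0 - previous_p, 10)
    return windows


windows = interval_probabilities(cumulative)
for (start, end), p in windows.items():
    if end is None:
        label = f"after {start} months, or never"
    else:
        label = f"{start}-{end} months"
    print(f"{label}: {p:.0%}")
```

Under that reading, roughly a fifth of the probability mass sits beyond the 18 month mark.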

comment by yanni kyriacos (yanni) · 2024-06-18T04:49:54.543Z · LW(p) · GW(p)

I'm pleased to announce the launch of a brand new Facebook group dedicated to AI Safety in Wellington: AI Safety Wellington (AISW). This is your local hub for connecting with others passionate about ensuring a safe and beneficial future with artificial intelligence / reducing x-risk. To kick things off, we're hosting a super casual meetup where you can:

- Meet & Learn: Connect with Wellington's AI Safety community.

- Chat & Collaborate: Discuss career paths, upcoming events, and training opportunities.

- Share & Explore: Whether you're new to AI Safety or a seasoned expert, come learn from each other's perspectives.

- Expand the Network: Feel free to bring a friend who's curious about AI Safety!

Join the group and RSVP for the meetup here

https://www.facebook.com/groups/500872105703980

comment by yanni kyriacos (yanni) · 2025-01-10T01:39:10.169Z · LW(p) · GW(p)

Solving the AGI alignment problem demands a herculean level of ambition, far beyond what we're currently bringing to bear. Dear Reader, grab a pen or open a google doc right now and answer these questions:

1. What would you do right now if you became 5x more ambitious?

2. If you believe we all might die soon, why aren't you doing the ambitious thing?

↑ comment by Mitchell_Porter · 2025-01-17T02:08:51.940Z · LW(p) · GW(p)

This comment has been on my mind a lot the past week - not because I'm not ambitious, but because I've always been ambitious (intellectually at least) and frustrated in my ambitions. I've always had goals that I thought were important and neglected, I always directly pursued them from a socially marginal position rather than trying to make money first (or whatever people do when they put off their real ambitions), but I can't say I ever had a decisive breakthrough, certainly not to recognition. So I only have partial progress on a scattered smorgasbord of unfulfilled agendas, and meanwhile, after OpenAI's "o3 Christmas" and the imminent inauguration of an e/acc administration in the USA, it looks more than ever that we are out of time. I would be deeply unsurprised if it's all over by the end of the year.

I'm left with choices like (1) concentrate on family in the final months (2) patch together what I have and use AI to quickly make the best of it (3) throw myself into AI safety. In practice they overlap, I'm doing all three, but there are tensions between them, and I feel the frustration of being badly positioned while also thinking I have no time for the meta-task of improving my position.

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2025-01-17T04:43:05.059Z · LW(p) · GW(p)

I think you are right that most people suffer from this lack of big ambition. I think I tend to fail in the other direction. I consistently come up with big plans, which might potentially have big payoffs if I could manage them, and then fail partway through. I'm a lot more effective (on average) working in a team where my big ideas get curtailed and my focus is kept on the achievable. I do also think that I bring value to under-aimers, by encouraging them to think bigger.

Sometimes people literally laugh out loud at me when I tell them about my current goals. I am not poorly calibrated, overall. I tell them that I know my chance of succeeding at my current goal is small, but that the payoff would really matter if I did manage it. In the course of aiming at a long term goal, I do tend to actively try to set aside my realistic estimate of my success, in order to let myself be buoyed by a sense of impending achievement. Being too realistic in the 'doing' phase, rather than the 'planning' phase tends to sharply bring down my chance of sticking with the project long enough that it has a chance to succeed.

comment by yanni kyriacos (yanni) · 2024-12-20T01:49:25.183Z · LW(p) · GW(p)

I'm not sure of an org that deals with ultra-high net worth individuals (longview?), but someone should reach out to Bryan Johnson. I think he could be persuaded to invest in AI Safety (skip to 1:07:15)

comment by yanni kyriacos (yanni) · 2024-09-22T22:38:26.528Z · LW(p) · GW(p)

AI Safety (in the broadest possible sense, i.e. including ethics & bias) is going to be taken very seriously soon by Government decision makers in many countries. But without high quality talent staying in their home countries (i.e. not moving to UK or US), there is a reasonable chance that x/c-risk won’t be considered problems worth trying to solve. X/c-risk sympathisers need seats at the table. IMO local AIS movement builders should be thinking hard about how to keep talent local (this is an extremely hard problem to solve).

comment by yanni kyriacos (yanni) · 2024-06-20T10:36:28.828Z · LW(p) · GW(p)

TIL that the words "fact" and "fiction" come from the same word: "Artifice" - which is ~ "something that has been created".

comment by yanni kyriacos (yanni) · 2025-01-08T22:02:40.847Z · LW(p) · GW(p)

I think > 40% of AI Safety resources should be going into making Federal Governments take seriously the possibility of an intelligence explosion in the next 3 years due to proliferation of digital agents.

comment by yanni kyriacos (yanni) · 2024-12-21T05:10:28.331Z · LW(p) · GW(p)

If transformative AI is defined by its societal impact rather than its technical capabilities (i.e. TAI as process not a technology), we already have what is needed. The real question isn't about waiting for GPT-X or capability Y - it's about imagining what happens when current AI is deployed 1000x more widely in just a few years. This presents EXTREMELY different problems to solve from a governance and advocacy perspective.

E.g. 1: compute governance might no longer be a good intervention

E.g. 2: "Pause" can't just be about pausing model development. It should also be about pausing implementation across use cases

comment by yanni kyriacos (yanni) · 2024-09-23T01:12:10.162Z · LW(p) · GW(p)

I am 90% sure that most AI Safety talent aren't thinking hard enough about Neglectedness. The industry is so nascent that you could look at 10 analogous industries, see what processes or institutions are valuable and missing and build an organisation around the highest impact one.

The highest impact job ≠ the highest impact opportunity for you!

comment by yanni kyriacos (yanni) · 2024-08-25T22:55:37.490Z · LW(p) · GW(p)

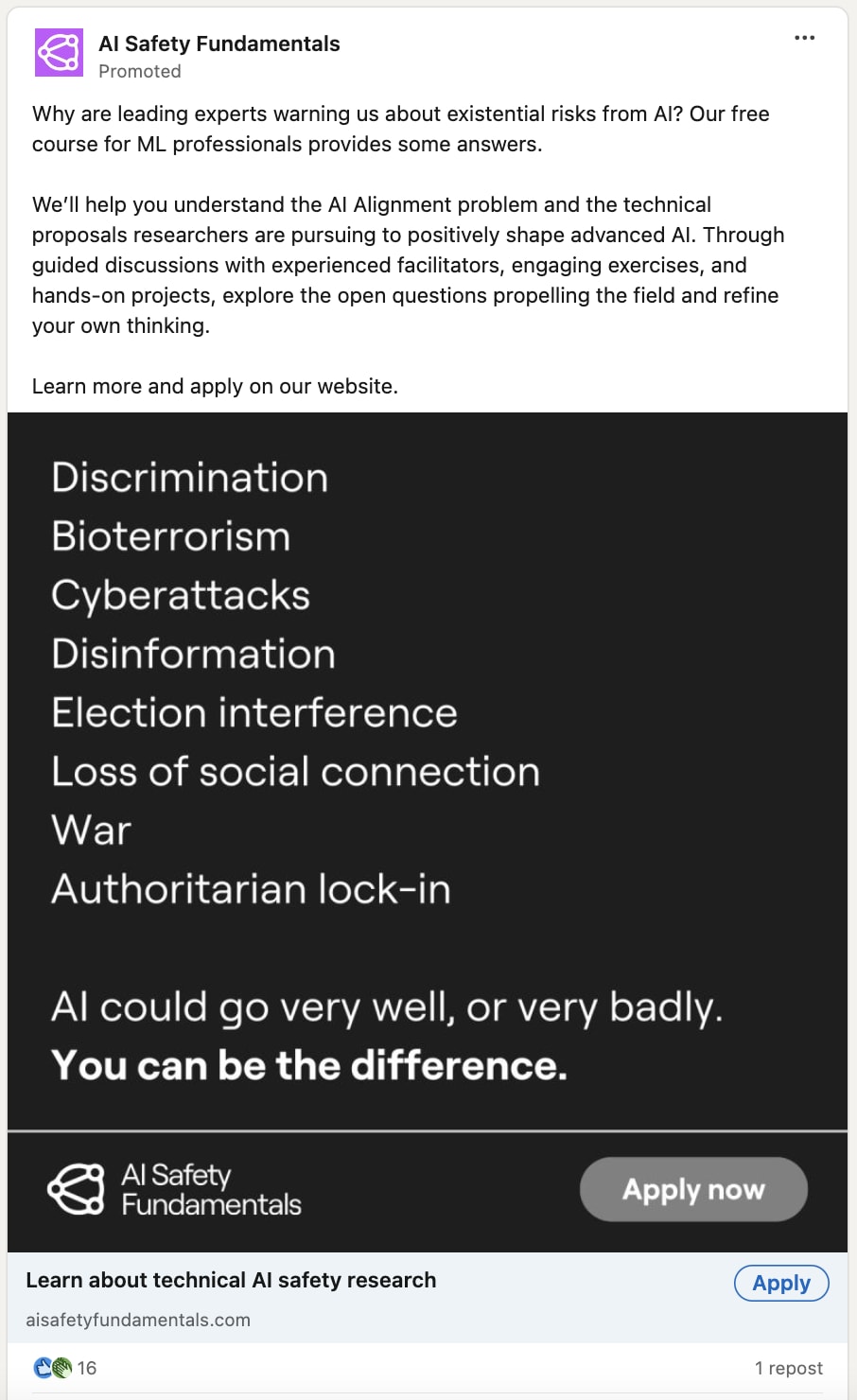

I really like this ad strategy from BlueDot. 5 stars.

90% of the message is still x / c-risk focussed, but by including "discrimination" and "loss of social connection" they're clearly trying to either (or both);

- create a big tent

- nudge people that are in "ethics" but sympathetic to x / c-risk into x / c-risk

(prediction: 4000 disagreement Karma)

↑ comment by habryka (habryka4) · 2024-08-26T01:12:14.524Z · LW(p) · GW(p)

Huh, this is really a surprisingly bad ad. None of the things listed are things that IMO have much to do with why AI is uniquely dangerous (they are basically all misuse-related), and also not really what any of the AI Safety fundamentals curriculum is about (and as such is false advertising). Like, I don't think the AI Safety fundamentals curriculum has anything that will help with election interference or loss of social connection or less likelihood of war or discrimination?

Replies from: yanni↑ comment by yanni kyriacos (yanni) · 2024-08-26T06:20:46.279Z · LW(p) · GW(p)

I should have been clearer - I like the fact that they're trying to create a larger tent and (presumably) win ethics people over. There are also many reasons not to like the ad. I would also guess that they have an automated campaign running with several (maybe dozens) of pieces of creative. Without seeing their analytics it would be impossible to know which performs the best, but it wouldn't surprise me if it was this one (lists work).

comment by yanni kyriacos (yanni) · 2024-08-06T23:23:40.359Z · LW(p) · GW(p)

I always get downvoted when I suggest that (1) if you have short timelines and (2) you decide to work at a Lab then (3) people might hate you soon and your employment prospects could be damaged.

What is something obvious I'm missing here?

One thing I won't find convincing is someone pointing at the Finance industry post GFC as a comparison.

I believe the scale of unemployment could be much higher. E.g. 5% ->15% unemployment in 3 years.

Replies from: faul_sname, yanni↑ comment by faul_sname · 2024-08-07T06:41:13.361Z · LW(p) · GW(p)

If you work in a generative AI lab a significant number of people already hate the work you're doing and would likely hate you specifically if your existence became salient to them, for reasons that are at best tangentially related to your contribution to existential risk. This is true regardless of what your timelines look like.

But I don't understand the mechanism by which working in a frontier AI lab is supposed to damage your employment prospects. The set of people who hate you for causing technological unemployment is probably not going to intersect much with the set of people who are making hiring decisions. People who have a history of doing antisocial-but-profitable-for-their-employer stuff get hired all the time, and proudly advertise those profitable antisocial activities on their resumes.

In the extreme, you could argue that working in a frontier AI lab could lead to total human obsolescence, which would harm your job prospects on account of there are no jobs anywhere for anyone. But that's like saying "crashing into an iceberg could cause a noticeable decrease in the number of satisfied diners in the dining saloon of the Titanic".

↑ comment by yanni kyriacos (yanni) · 2024-08-06T23:28:47.297Z · LW(p) · GW(p)

My instinct as to why people don't find it a compelling argument;

- They don't have short timelines like me, and therefore chuck it out completely

- Are struggling to imagine a hostile public response to 15% unemployment rates

- Copium

comment by yanni kyriacos (yanni) · 2024-07-15T23:20:07.533Z · LW(p) · GW(p)

I think it is good to have some ratio of upvoted/agreed : downvoted/disagreed posts in your portfolio. I think if all of your posts are upvoted/high agreement then you're either playing it too safe or you've eaten the culture without chewing first.

comment by yanni kyriacos (yanni) · 2024-07-10T23:32:57.193Z · LW(p) · GW(p)

I'm pretty confident that a majority of the population will soon have very negative attitudes towards big AI labs. I'm extremely unsure about what impact this will have on the AI Safety and EA communities (because we work with those labs in all sorts of ways). I think this could increase the likelihood of "Ethics" advocates becoming much more popular, but I don't know if this necessarily increases catastrophic or existential risks.

comment by yanni kyriacos (yanni) · 2024-07-10T23:17:45.833Z · LW(p) · GW(p)

Not sure if this type of concern has reached the meta yet, but if someone approached me asking for career advice, tossing up whether to apply for a job at a big AI lab, I would let them know that it could negatively affect their career prospects down the track because so many people now perceive such a move as either morally wrong or just plain wrong-headed. And those perceptions might only increase over time. I am not making a claim here beyond this should be a career advice consideration.

comment by yanni kyriacos (yanni) · 2024-06-12T00:38:12.438Z · LW(p) · GW(p)

When AI Safety people are also vegetarians, vegans or reducetarians, I am pleasantly surprised, as this is one (of many possible) signals to me they're "in it" to prevent harm, rather than because it is interesting.

comment by yanni kyriacos (yanni) · 2024-05-16T00:11:31.602Z · LW(p) · GW(p)

Yesterday Greg Sadler and I met with the President of the Australian Association of Voice Actors. Like us, they've been lobbying for more and better AI regulation from government. I was surprised how much overlap we had in concerns and potential solutions:

1. Transparency and explainability of AI model data use (concern)

2. Importance of interpretability (solution)

3. Mis/dis information from deepfakes (concern)

4. Lack of liability for the creators of AI if any harms eventuate (concern + solution)

5. Unemployment without safety nets for Australians (concern)

6. Rate of capabilities development (concern)

They may even support the creation of an AI Safety Institute in Australia. Don't underestimate who could be allies moving forward!

comment by yanni kyriacos (yanni) · 2024-05-15T00:04:43.343Z · LW(p) · GW(p)

Ilya Sutskever has left OpenAI https://twitter.com/ilyasut/status/1790517455628198322

comment by yanni kyriacos (yanni) · 2024-04-24T00:26:55.384Z · LW(p) · GW(p)

If GPT5 actually comes with competent agents then I expect this to be a "Holy Shit" moment at least as big as ChatGPT's release. So if ChatGPT has been used by 200 million people, then I'd expect that to at least double within 6 months of GPT5 (agent's) release. Maybe triple. So that "Holy Shit" moment means a greater share of the general public learning about the power of frontier models. With that will come another shift in the Overton Window. Good luck to us all.

comment by yanni kyriacos (yanni) · 2024-04-22T03:17:03.711Z · LW(p) · GW(p)

The catchphrase I walk around with in my head regarding the optimal strategy for AI Safety is something like: Creating Superintelligent Artificial Agents* (SAA) without a worldwide referendum is ethically unjustifiable. Until a consensus is reached on whether to bring into existence such technology, a global moratorium is required (*we already have AGI).

I thought it might be useful to spell that out.

comment by yanni kyriacos (yanni) · 2025-02-05T22:21:13.426Z · LW(p) · GW(p)

AI Safety Monthly Meetup - Brief Impact Analysis

For the past 8 months, we've (AIS ANZ) been running consistent community meetups across 5 cities (Sydney, Melbourne, Brisbane, Wellington and Canberra). Each meetup averages about 10 attendees with about 50% new participant rate, driven primarily through LinkedIn and email outreach. I estimate we're driving unique AI Safety related connections for around $6.

Volunteer Meetup Coordinators organise the bookings, pay for the Food & Beverage (I reimburse them after the fact) and greet attendees. This initiative would literally be impossible without them.

Key Metrics:

- Total Unique New Members: 200

  - 5 cities × 5 new people per month × 8 months

  - Consistent 50% new attendance rate maintained

- Network Growth: 600 new connections

  - Each new member makes 3 new connections

  - Only counting initial meetup connections, actual number likely higher

- Cost Analysis:

  - Events: $3,000 (40 meetups × $75 Food & Beverage per meetup)

  - Marketing: $600

  - Total Cost: $3,600

- Cost Efficiency: $6 per new connection ($3,600/600)

ROI: We're creating unique AI Safety related connections at $6 per connection, with additional network effects as members continue to attend and connect beyond their initial meetup.
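The arithmetic behind these figures can be reproduced in a few lines. This is only a sketch using the numbers quoted in the post; the variable names are illustrative, and one meetup per city per month is assumed (which matches the 40 meetups stated above):

```python
# Figures quoted in the post; names are illustrative.
cities = 5
months = 8
attendees_per_meetup = 10
new_attendee_rate = 0.5          # about 50% of attendees are new
connections_per_new_member = 3
food_cost_per_meetup = 75        # dollars, Food & Beverage
marketing_cost = 600             # dollars

# Assumption: one meetup per city per month.
meetups = cities * months
new_members = int(meetups * attendees_per_meetup * new_attendee_rate)
new_connections = new_members * connections_per_new_member
total_cost = meetups * food_cost_per_meetup + marketing_cost

cost_per_connection = total_cost / new_connections
cost_per_new_member = total_cost / new_members

print(f"Meetups held:        {meetups}")
print(f"New members:         {new_members}")
print(f"New connections:     {new_connections}")
print(f"Total cost:          ${total_cost:,}")
print(f"Cost per connection: ${cost_per_connection:.2f}")
print(f"Cost per new member: ${cost_per_new_member:.2f}")
```

The $6 figure depends heavily on the 3 connections per new member estimate; on the same inputs, the cost per new member is $18.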

comment by yanni kyriacos (yanni) · 2025-01-29T23:58:42.526Z · LW(p) · GW(p)

One axis where Capabilities and Safety people pull apart the most, with high consequences is on "asking for forgiveness instead of permission."

1) Safety people need to get out there and start making stuff without their high prestige ally nodding first

2) Capabilities people need to consider more seriously that they're building something many people simply do not want

comment by yanni kyriacos (yanni) · 2024-12-11T00:05:09.983Z · LW(p) · GW(p)

What is your AI Capabilities Red Line Personal Statement? It should read something like "when AI can do X in Y way, then I think we should be extremely worried / advocate for a Pause*".

I think it would be valuable if people started doing this; we can't feel when we're on an exponential, so it's likely we will have powerful AI creep up on us.

@Greg_Colbourn [EA · GW] just posted this [EA · GW] and I have an intuition that people are going to read it and say "while it can do Y it still can't do X"

*in the case you think a Pause is ever optimal.

↑ comment by Vladimir_Nesov · 2024-12-11T00:31:59.554Z · LW(p) · GW(p)

A Pause doesn't stop capabilities at the currently demonstrated level. In that sense, GPT-2 might've been a prudent threshold (in a saner civilization) for when to coordinate to halt improvement in semiconductor technology and to limit production capacity for better nodes (similarly to not building too many centrifuges for enriching uranium, as a first line of defense against a nuclear winter). If not GPT-2, when the power of scaling wasn't yet obvious to most, then certainly GPT-3.

In our world, I don't see it happening other than in response to a catastrophe where there are survivors; the good outcomes our civilization might be capable of reaching lack dignity and don't involve a successful Pause. A Pause must end, so conditions for a Pause need to be sensitive to new developments that make Pausing no longer necessary, which could also be a problem in a survivable catastrophe world.

comment by yanni kyriacos (yanni) · 2024-11-06T00:54:51.410Z · LW(p) · GW(p)

I'd like to see research that uses Jonathan Haidt's Moral Foundations research and the AI risk repository to forecast whether there will soon be a moral backlash / panic against frontier AI models.

Ideas below from Claude:

# Conservative Moral Concerns About Frontier AI Through Haidt's Moral Foundations

## Authority/Respect Foundation

### Immediate Concerns

- AI systems challenging traditional hierarchies of expertise and authority

- Undermining of traditional gatekeepers in media, education, and professional fields

- Potential for AI to make decisions traditionally reserved for human authority figures

### Examples

- AI writing systems replacing human editors and teachers

- AI medical diagnosis systems challenging doctor authority

- Language models providing alternative interpretations of religious texts

## Sanctity/Purity Foundation

### Immediate Concerns

- AI "thinking" about sacred topics or generating religious content

- Artificial beings engaging with spirituality and consciousness

- Degradation of "natural" human processes and relationships

### Examples

- AI spiritual advisors or prayer companions

- Synthetic media creating "fake" human experiences

- AI-generated art mixing sacred and profane elements

## Loyalty/Ingroup Foundation

### Immediate Concerns

- AI systems potentially eroding national sovereignty

- Weakening of traditional community bonds

- Displacement of local customs and practices

### Examples

- Global AI systems superseding national control

- AI replacing local business relationships

- Automated systems reducing human-to-human community interactions

## Fairness/Reciprocity Foundation

### Immediate Concerns

- Unequal access to AI capabilities creating new social divides

- AI systems making decisions without clear accountability

- Displacement of workers without reciprocal benefits

### Examples

- Elite access to powerful AI tools

- Automated systems making hiring/firing decisions

- AI wealth concentration without clear social benefit

## Harm/Care Foundation

### Immediate Concerns

- Potential for AI systems to cause unintended societal damage

- Psychological impact on children and vulnerable populations

- Loss of human agency and autonomy

### Examples

- AI manipulation of emotions and behavior

- Impact on childhood development

- Dependency on AI systems for critical decisions

## Key Trigger Points for Conservative Moral Panic

1. Religious Content Generation

- AI systems creating or interpreting religious texts

- Automated spiritual guidance

- Virtual religious experiences

2. Traditional Value Systems

- AI challenging traditional moral authorities

- Automated ethical decision-making

- Replacement of human judgment in moral domains

3. Community and Family

- AI impact on child-rearing and education

- Disruption of traditional social structures

- Replacement of human relationships

4. National Identity

- Foreign AI influence on domestic affairs

- Cultural homogenization through AI

- Loss of local control over technology

## Potential Flashpoints for Immediate Backlash

1. Education System Integration

- AI teachers and tutors

- Automated grading and assessment

- Curriculum generation

2. Religious Institution Interaction

- AI pastoral care

- Religious text interpretation

- Spiritual counseling

3. Media and Information Control

- AI content moderation

- Synthetic media generation

- News creation and curation

4. Family and Child Protection

- AI childcare applications

- Educational AI exposure

- Family privacy concerns

comment by yanni kyriacos (yanni) · 2024-09-19T01:53:20.880Z · LW(p) · GW(p)

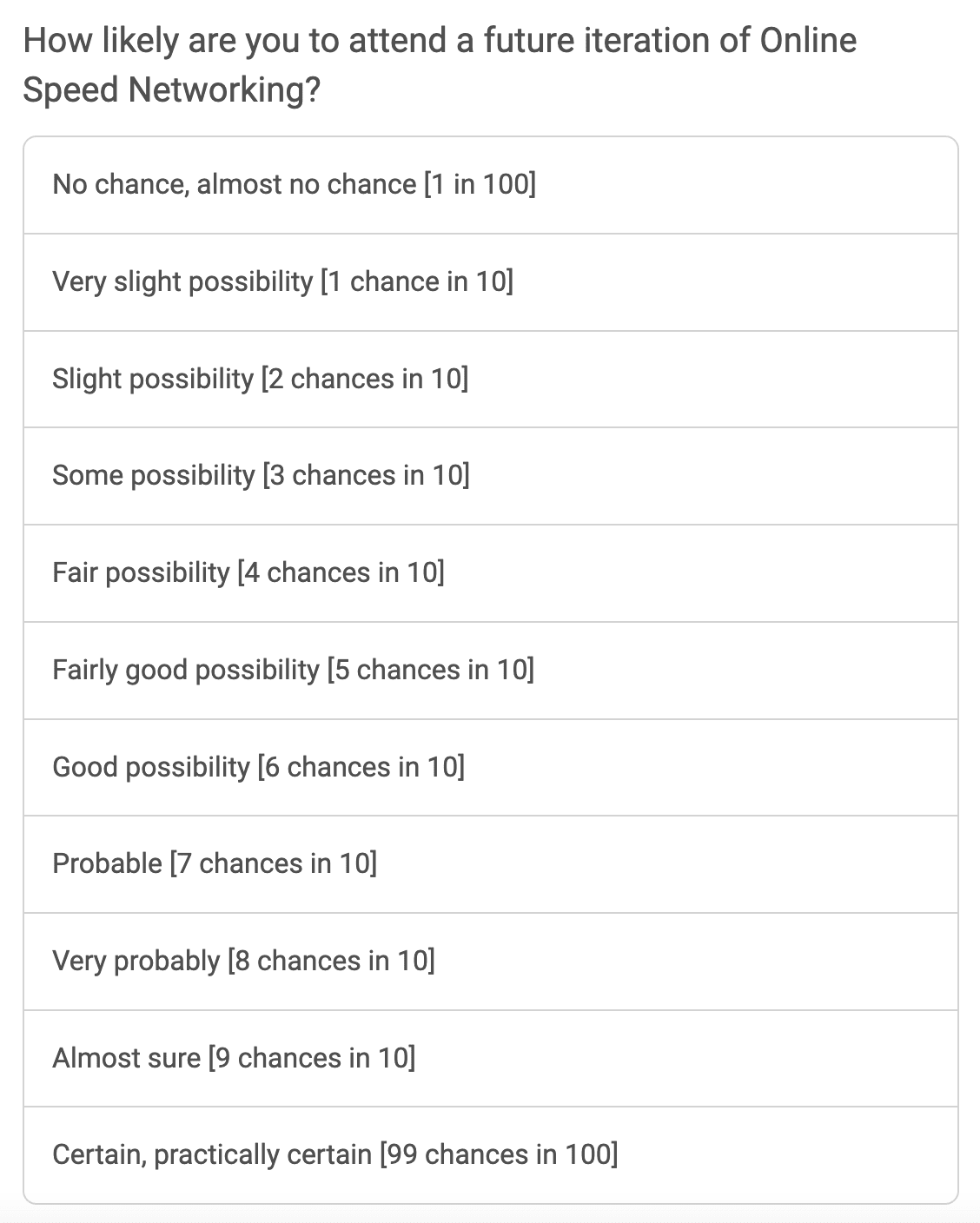

I beta tested a new movement building format last night: online networking. It seems to have legs.

V quick theory of change:

> problem it solves: not enough people in AIS across Australia (especially) and New Zealand are meeting each other (this is bad for the movement and people's impact).

> we need to brute force serendipity to create collabs.

> this initiative has v low cost

quantitative results:

> I purposefully didn't market it hard because it was a beta. I literally got more people than I hoped for

> 22 RSVPs and 18 attendees

> this says to me I could easily get 40+

> average score for below question was 7.27, which is very good for a beta test

I used Zoom, which was extremely clunky. These results suggest to me I should;

> invest in software designed for this use case, not zoom

> segment by career stream (governance vs technical) and/or experience (beginner vs advanced)

> run it every second month

I have heaps of qualitative feedback from participants but don't have time to share it here.

Email me if interested: yanni@aisafetyanz.com.au

comment by yanni kyriacos (yanni) · 2024-09-12T22:22:43.761Z · LW(p) · GW(p)

Is anyone in the AI Governance-Comms space working on what public outreach should look like if lots of jobs start getting automated in < 3 years?

I point to Travel Agents a lot not to pick on them, but because they're salient and there are lots of them. I think there is a reasonable chance in 3 years that industry loses 50% of its workers (3 million globally).

People are going to start freaking out about this. Which means we're in "December 2019" all over again, and we all remember how bad Government Comms were during COVID.

Now is the time to start working on the messaging!

comment by yanni kyriacos (yanni) · 2024-08-04T21:36:56.513Z · LW(p) · GW(p)

I’d like to quickly skill up on ‘working with people that have a kind of neurodivergence’.

This means;

- understanding their unique challenges

- how I can help them get the best from/for themselves

- but I don’t want to sink more than two hours into this

Can anyone recommend some online content for me please?

Replies from: mesaoptimizer↑ comment by mesaoptimizer · 2024-08-06T07:41:04.938Z · LW(p) · GW(p)

I recommend messaging people who seem to have experience doing so, and requesting to get on a call with them. I haven't found any useful online content related to this, and everything I've learned in relation to social skills and working with neurodivergent people, I learned by failing and debugging my failures.

Replies from: yanni↑ comment by yanni kyriacos (yanni) · 2024-08-06T23:18:24.839Z · LW(p) · GW(p)

Thanks for the feedback! I had a feeling this is where I'd land :|

comment by yanni kyriacos (yanni) · 2024-07-17T01:50:27.156Z · LW(p) · GW(p)

Something bouncing around my head recently ... I think I agree with the notion that "you can't solve a problem at the level it was created".

A key point here is the difference between "solving" a problem and "minimising its harm".

- Solving a problem = engaging with a problem by going up a level from the one at which it was created

- Minimising its harm = trying to solve it at the level it was created

Why is this important? Because I think EA and AI Safety have historically focussed (and have their respective strengths in) harm-minimisation.

This obviously applies at the micro. Here are some bad examples:

- Problem: I'm experiencing intrusive + negative thoughts

- Minimising its harm: engage with the thought using CBT

- Attempting to solve it by going meta: apply meta cognitive therapy, see thoughts as empty of intrinsic value, as farts in the wind

- Problem: I'm having fights with my partner about doing the dishes

- Minimising its harm: create a spreadsheet and write down every thing each of us does around the house and calculate time spent

- Attempting to solve it by going meta: discuss our communication styles and emotional states when frustration arises

But I also think this applies at the macro:

- Problem: People love eating meat

- Minimising harm by acting at the level the problem was created: asking them not to eat meat

- Attempting to solve by going meta: replacing the meat with lab grown meat

- Problem: Unaligned AI might kill us

- Minimising harm by acting at the level the problem was created: understand the AI through mechanistic interpretability

- Attempting to solve by going meta: probably just Governance

comment by yanni kyriacos (yanni) · 2024-07-04T00:39:31.813Z · LW(p) · GW(p)

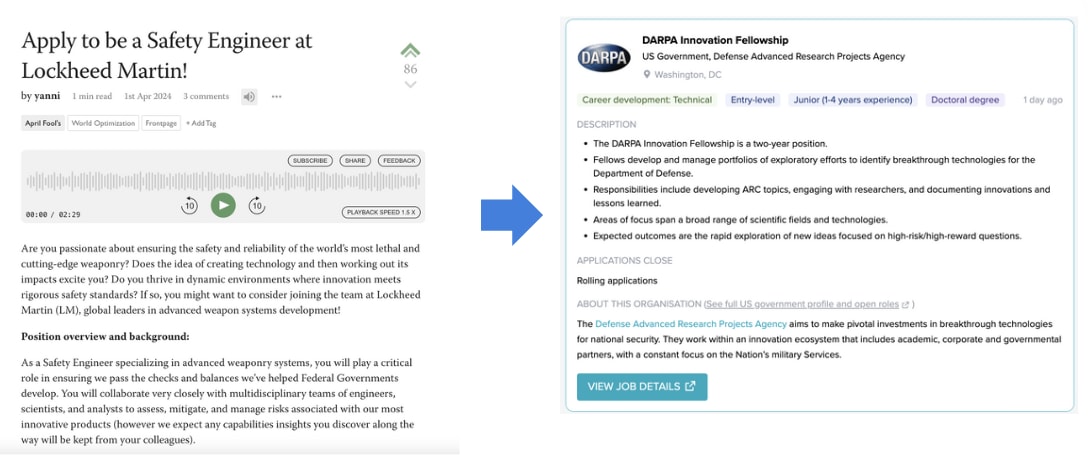

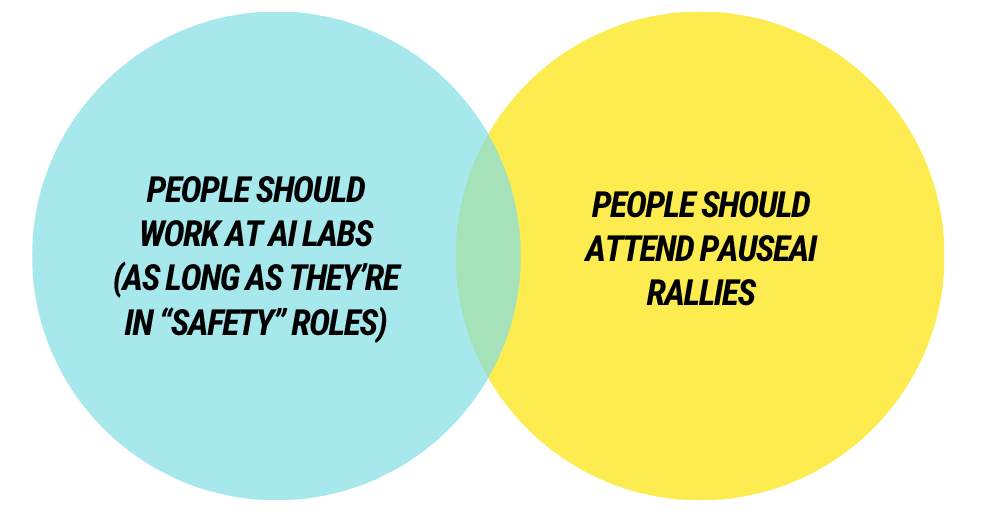

There is something about the lack of overlap between these two audiences that makes me uneasy. WYD?

↑ comment by metachirality · 2024-07-04T02:20:15.257Z · LW(p) · GW(p)

I think working on safety roles at capabilities orgs is mostly mutually exclusive with a pause, so I don't think this is that remarkable.

comment by yanni kyriacos (yanni) · 2024-06-21T09:43:59.480Z · LW(p) · GW(p)

A piece of career advice I've given a few times recently to people in AI Safety, which I thought worth repeating here, is that AI Safety is so nascent a field that the following strategy could be worth pursuing:

1. Write your own job description (whatever it is that you're good at / brings joy into your life).

2. Find organisations that you think need that job but don't yet have it. This role should solve a problem they either don't know they have or haven't figured out how to solve.

3. Find the key decision maker and email them. Explain the (their) problem as you see it and how having this role could fix their problem. Explain why you're the person to do it.

I think this might work better for mid-career people, but, if you're just looking to skill up and don't mind volunteering, you could adapt this approach no matter what stage you're at in your career.

Replies from: mesaoptimizer↑ comment by mesaoptimizer · 2024-06-21T11:54:47.250Z · LW(p) · GW(p)

There's generally a cost to managing people and onboarding newcomers, and I expect that offering to volunteer for free is usually a negative signal, since it implies that there's a lot more work than usual that would need to be done to onboard this particular newcomer.

Have you experienced otherwise? I'd love to hear some specifics as to why you feel this way.

comment by yanni kyriacos (yanni) · 2024-05-30T00:59:50.105Z · LW(p) · GW(p)

I've decided to post something very weird because it might (in some small way) help shift the Overton Window on a topic: as long as the world doesn't go completely nuts due to AI, I think there is a 5%-20% chance I will reach something close to full awakening / enlightenment in about 10 years. Something close to this:

comment by yanni kyriacos (yanni) · 2024-05-28T00:29:58.807Z · LW(p) · GW(p)

Very quick thoughts on setting time aside for strategy, planning and implementation, since I'm into my 4th week of strategy development and experiencing intrusive thoughts about needing to hurry up on implementation;

- I have a 52 week LTFF grant to do movement building in Australia (AI Safety)

- I have set aside 4.5 weeks for research (interviews + landscape review + maybe survey) and strategy development (segmentation, targeting, positioning),

- Then 1.5 weeks for planning (content, events, educational programs), during which I will get feedback from others on the plan and then iterate it.

- This leaves me with 46/52 weeks to implement ruthlessly.

In conclusion, 6 weeks on strategy and planning seems about right. 2 weeks would have been too short, 10 weeks would have been too long, this porridge is juuuussttt rightttt.
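For anyone in a similar position who wants to sanity check their own split, here is the same budget as a small sketch. The week counts are the ones given above; the variable names are illustrative:

```python
# Week counts from the comment; names are illustrative.
grant_weeks = 52
research_and_strategy_weeks = 4.5
planning_weeks = 1.5

prep_weeks = research_and_strategy_weeks + planning_weeks
implementation_weeks = grant_weeks - prep_weeks
prep_share = prep_weeks / grant_weeks

print(f"Strategy + planning: {prep_weeks} weeks ({prep_share:.1%} of the grant)")
print(f"Implementation:      {implementation_weeks} weeks")
```

On these numbers, strategy and planning take up a bit under an eighth of the grant period.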

keen for feedback from people in similar positions.

comment by yanni kyriacos (yanni) · 2024-05-22T21:19:10.470Z · LW(p) · GW(p)

I have an intuition that if you tell a bunch of people you're extremely happy almost all the time (e.g. walking around at 10/10) then many won't believe you, but if you tell them that you're extremely depressed almost all the time (e.g. walking around at 1/10) then many more would believe you. Do others have this intuition? Keen on feedback.

Replies from: cubefox, bideup↑ comment by cubefox · 2024-05-22T23:27:36.069Z · LW(p) · GW(p)

In my experience, people with mania (the opposite of depression) tend to exhibit more visible symptoms, like talking a lot and very loudly, laughing more than the situation warrants, appearing overconfident etc. While people with depression are harder to notice, except in severe cases where they can't even get out of bed. So if someone doesn't have symptoms of mania, it is likely they aren't manic.

Of course it is possible there are extremely happy people who aren't manic, but equally it is also possible that there are extremely unhappy people who aren't depressed. Since the latter seems rare, the former seems rare too.

comment by yanni kyriacos (yanni) · 2024-05-22T00:25:42.831Z · LW(p) · GW(p)

Two jobs in AI Safety Advocacy that AFAICT don't exist, but should and probably will very soon. Will EAs be the first to create them though? There is a strong first mover advantage waiting for someone -

1. Volunteer Coordinator - there will soon be a groundswell from the general population wanting to have a positive impact in AI. Most won't know how to. A volunteer manager will help capture and direct their efforts positively, for example, by having them write emails to politicians [EA(p) · GW(p)]

2. Partnerships Manager - the President of the Voice Actors guild reached out to me recently. We had a very surprising amount of crossover in concerns and potential solutions. Voice Actors are the canary in the coal mine. More unions (etc) will follow very shortly. I imagine within 1 year there will be a formalised group of these different orgs advocating together.

comment by yanni kyriacos (yanni) · 2024-05-12T02:27:06.232Z · LW(p) · GW(p)

Help clear something up for me: I am extremely confused (theoretically) how we can simultaneously have:

1. An Artificial Superintelligence

2. It be controlled by humans (therefore creating misuse or concentration of power issues)

My intuition is that once it reaches a particular level of power it will be uncontrollable. Unless people are saying that we can have models 100x more powerful than GPT4 without it having any agency??

Replies from: Mitchell_Porter, Davidmanheim↑ comment by Mitchell_Porter · 2024-05-12T03:06:16.834Z · LW(p) · GW(p)

You could have a Q&A superintelligence that is passive and reactive - it gives the best answer to a question, on the basis of what it already knows, but it takes no steps to acquire more information, and when it's not asked a question, it just sits there... But any agent that uses it would de facto become a superintelligence with agency.

↑ comment by Davidmanheim · 2024-05-12T06:36:20.179Z · LW(p) · GW(p)

This is one of the key reasons that the term alignment was invented and used instead of control; I can be aligned with the interests of my infant, or my pet, without any control on their part.

comment by yanni kyriacos (yanni) · 2024-05-02T00:20:42.516Z · LW(p) · GW(p)

Something someone technical and interested in forecasting should look into: can LLMs reliably convert people's claims into a % of confidence through sentiment analysis? This would be useful for Forecasters, I believe (and rationality in general).

comment by yanni kyriacos (yanni) · 2024-04-20T06:03:31.589Z · LW(p) · GW(p)

Be the meme you want to see in the world (screenshot).

comment by yanni kyriacos (yanni) · 2024-03-27T07:35:49.638Z · LW(p) · GW(p)

[GIF] A feature I'd love on the forum: while posts are read back to you, the part of the text that is being read is highlighted. This exists on Naturalreaders.com and I would love to see it here (great for people who have wandering minds like me).

comment by yanni kyriacos (yanni) · 2024-03-13T23:58:40.352Z · LW(p) · GW(p)

I think acting on the margins is still very underrated. For example, I think 5x the amount of advocacy for a Pause on capabilities development of frontier AI models would be great. I also think in 12 months' time it would be fine for me to reevaluate this take and say something like 'ok that's enough Pause advocacy'.

Basically, you shouldn't feel 'locked in' to any view. And if you're starting to feel like you're part of a tribe, then that could be a bad sign you've been psychographically locked in.

comment by yanni kyriacos (yanni) · 2024-06-05T21:02:35.278Z · LW(p) · GW(p)

I went to buy a ceiling fan with a light in it recently. There was one on sale that happened to also tick all my boxes, joy! But the salesperson warned me "the light in this fan can't be replaced and only has 10,000 hours in it. After that you'll need a new fan. So you might not want to buy this one." I chuckled internally and bought two of them, one for each room.
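For anyone who wants the arithmetic behind the chuckle, here is a rough sketch of how long a 10,000-hour light lasts. The daily usage figures are assumptions for illustration only (the salesperson gave just the rated hours), and `years_of_use` is a made-up helper name.

```python
# Rough lifespan of a light rated for a fixed number of hours.
RATED_HOURS = 10_000  # the figure quoted by the salesperson


def years_of_use(rated_hours: float, hours_per_day: float) -> float:
    """Years until the rated hours run out at a constant daily usage."""
    if hours_per_day <= 0:
        raise ValueError("hours_per_day must be positive")
    return rated_hours / hours_per_day / 365


# Assumed usage levels; none of these come from the anecdote itself.
for hours_per_day in (2, 4, 8):
    years = years_of_use(RATED_HOURS, hours_per_day)
    print(f"{hours_per_day} h/day -> about {years:.1f} years")
```

Even at 8 hours a day, which is probably on the heavy side for a ceiling light, that works out to more than three years before the fan needs replacing.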

Replies from: Viliam↑ comment by Viliam · 2024-06-07T12:17:15.821Z · LW(p) · GW(p)

So basically the lights will only run out after a time sufficient to become a master at something.

If you become a master at replacing lights, problem solved. :D

comment by yanni kyriacos (yanni) · 2024-05-05T02:11:46.675Z · LW(p) · GW(p)

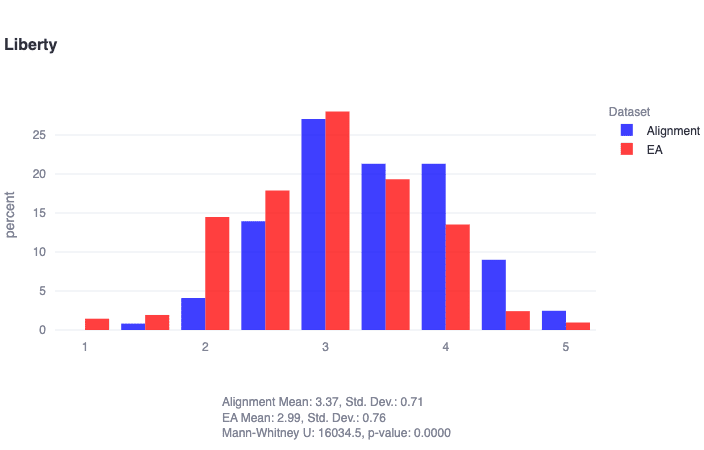

"alignment researchers are found to score significantly higher in liberty (U=16035, p≈0)" This partly explains why so much of the alignment community doesn't support PauseAI!

"Liberty: Prioritizes individual freedom and autonomy, resisting excessive governmental control and supporting the right to personal wealth. Lower scores may be more accepting of government intervention, while higher scores champion personal freedom and autonomy..."

https://forum.effectivealtruism.org/posts/eToqPAyB4GxDBrrrf/key-takeaways-from-our-ea-and-alignment-research-surveys#comments

comment by yanni kyriacos (yanni) · 2024-06-14T01:37:55.602Z · LW(p) · GW(p)

one of my more esoteric buddhist practices is to always spell my name in lower case; it means I am regularly reminded of the illusory nature of Self, while still engaging in imagined reality (Parikalpitā-svabhāva) in a practical way.