Posts

Comments

Can confirm. Half the LessWrong posts I've read in my life were read in the shower.

no one's getting a million dollars and an invitation the the beisutsukai

honored.

I like object-level posts that also aren't about AI. They're a minority on LW now, so they feel like high signals in a sea of noise. (That doesn't mean they're necessarily more signally, just that the rarity makes it seem that way to me.)

It felt odd to read that and think "this isn't directed toward me, I could skip if I wanted to". Like I don't know how to articulate the feeling, but it's an odd "woah text-not-for-humans is going to become more common isn't it". Just feels strange to be left behind.

Thank you for this. I feel like a general policy of "please at least disclose" would make me feel significantly less insane when reading certain posts.

Have you tried iterating on this? Like, the "I don't care about the word prodrome'" sounds like the kind of thing you could include in your prompt and reiterate until everything you don't like about the LLM's responses is solved or you run out of ideas.

Also fyi ChatGPT Deep Research uses the "o3" model, not 4o, even if it says 4o at the top left (you can try running Deep Research with any of the models selected in the top left and it will output the same kind of thing).

o3 was RLed (!) into being particularly good at web search (and tangential skills like avoiding suspicious links), and isn't released in a way that lets you just chat with it. The output isn't even raw o3, it's the o3-mini model summarizing o3's chain of thought (where o3 will think things, send a dozen tentacles out into the web, then continue thinking).

I learned this when I asked Deep Research to reverse engineer itself, and it linked the model card which in retrospect I should have done first and was foolish not to.

Anyway I mention this because afaik all the other deep research frameworks are a lot less specialized than OpenAI's, and more like "we took an LLM and gave it access to the internet and let it think and search for a really long time". I expect OpenAI to continue being SOTA here for a while.

Though I do enjoy using Grok's "DeepSearch" and "DeeperSearch" function sometimes; it's free and fun to watch (but terrible at understanding user intent, which I attribute to how little-flexible it is. It won't listen to suggestions on where to look first or how to structure its research, relying on whatever system prompt it was given instead), you might want to check it out and update this post.

My highlight link didn't work but in the second example, this is the particular passage that drove me crazy:

The punchline works precisely because we recognize that slightly sheepish feeling of being reflexively nice to inanimate objects. It transforms our "irrational" politeness into accidental foresight.

The joke hints at an important truth, even if it gets the mechanism wrong: our conversations with current artificial intelligences may not be as consequence-free as they seem.

That's fair, I think I was being overconfident and frustrated, such that these don't express my real preferences.

But I did make it clear these were preferences unrelated to my call, which was "you should warn people" not "you should avoid direct LLM output entirely". I wouldn't want such a policy, and wouldn't know how to enforce it anyway.

I think I'm allowed to have an unreasonable opinion like "I will read no LLM output I don't prompt myself, please stop shoving it into my face" and not get called on epistemic grounds except in the context of "wait this is self-destructive, you should stop for that reason". (And not in the context of e.g. "you're hurting the epistemic commons".)

You can also ask Raemon or habykra why they, too, seem to systematically downvote content they believe to be LLM-generated. I don't think they're being too unreasonable either.

That said, I agree with you there's a strong selection effect with what writers choose to keep from the LLM, and that there's also the danger of people writing exactly like LLMs and me calling them out on it unfairly. I tried hedging against this the first time, though maybe that was in a too-inflammatory manner. The second time, I decided to write this OP instead of addressing the local issue directly, because I don't want to be writing something new each time and would rather not make "I hate LLM output on LW" become part of my identity, so I'll keep it to a minimum after this.

Both these posts I found to have some value, though in the same sense my own LLM outputs have value, where I'll usually quickly scan what's said instead of reading thoroughly. LessWrong has always seemed to me to be among the most information-dense places out there, and I hate to see some users go this direction instead. If we can't keep low density writing out of LessWrong, I don't know where to go after that. (And I am talking about info density, not style. Though I do find style grating sometimes as well.)

I consider a text where I have to skip through entire paragraphs and ignore every 5th filler word (e.g. "fascinating") to be bad writing, and not inherently enjoyable beyond the kernel of signal there may be in all that noise. And I don't think I would be being unfair if I demanded this level of quality, because this site is a fragile garden with high standards and maintaining high standards is the same thing as not tolerating mediocrity.

Also everyone has access to the LLMs, and if I wanted an LLM output, I would ask it myself, and I don't consider your taste in selection to bring me that much value.

I also believe (though can't back this up) that I spend nearly ~ an order of magnitude more time talking to LLMs than the average person on LW, and am a little skeptical of the claim that maybe I've been reading some direct LLM output on here without knowing it. Though that day will come.

It also doesn't take much effort not to paste LLM output outright, so past a certain bar of quality I don't think people are doing this. (Hypothetical people who are spending serious effort selecting LLM outputs to put under their own name would just be writing it directly in the real world.)

If it doesn't clutter the UI too much, I think an explicit message near the submit button saying "please disclose if part of your post is copy-pasted from an LLM" would go a long way!

If this is the way the LW garden-keepers feel about LLM output, then why not make that stance more explicit? Can't find a policy for this in the FAQ either!

I think some users here think LLM output can be high value reading and they don't think a warning is necessary—that they're acting in good faith and would follow a prompt to insert a warning if given.

Touching. Thank you for this.

When I was 11 I cut off some of my very-much-alive cat's fur to ensure future cloning would be possible, and put it in a little plastic bag I hid from my parents. He died when I was 15, and the bag is still somewhere in my Trunk of Everything.

I don't imagine there's much genetic content left but also I have a vague intuition that we severely underestimate how much information a superintelligence could extract from reality—so I'll keep onto a lingering hope.

My past self would have wanted me to keep tabs on how the technology is going.

(For those hypothetically wondering, I also cut off some of my own hair that day in case someone would like to clone ME after death, and that bag is still in the Trunk as well.)

(And I couldn't do this to my cat, but my past self also wrote over a million words about everything he experienced in a google docs in the hopes that the clone could know who his predecessor was and model himself accordingly. I was one of those terrified-of-death kids.)

Can we have a LessWrong official stance to LLM writing?

The last 2 posts I read contained what I'm ~95% sure is LLM writing, and both times I felt betrayed, annoyed, and desirous to skip ahead.

I would feel saner if there were a "this post was partially AI written" tag authors could add to as a warning. I think an informal standard of courteously warning people could work too, but that requires slow coordination-by-osmosis.

Unrelatedly to my call, and as a personal opinion, I don't think you're adding any value to me if you include even a single paragraph of copy-and-pasted Sonnet 3.7 or GPT 4o content. I will become the joker next time I hear a LessWrong user say "this is fascinating because it not only sheds light onto the profound metamorphosis of X, but also hints at a deeper truth".

My calendar reminder didn't go off, are submissions closed-closed?

Oh yeah no problem with writing with LLMs, only doing it without disclosing it. Though I guess this wasn't the case here, sry for flagging this.

I'm not sure I want to change my approach next time though, bc I do feel like I should be on my toes. Beware of drifting too much toward the LLM's stylebook I guess.

Maybe I'm going crazy, but the frequent use of qualifiers for almost every noun in your writing screams of "LLM" to me. Did you use LLM assistance? I don't get that same feel from your comments, so I'm learning toward an AI having written only the Shortform itself.

If you did use AI, I'd be in favor of you disclosing that so that people like me don't feel like they're gradually going insane.

If not, then I'm sorry and retract this. (Though not sure what to tell you—I think this writing style feels too formal and filled with fluff like "crucial" or "invaluable", and I bet you'll increasingly be taken for an AI in other contexts.)

Sent it in!

The original post, the actual bet, and the short scuffle in the comments is exactly the kind of epistemic virtue, basic respect, and straight-talking object-level discussion that I like about LessWrong.

I'm surprised and saddened that there aren't more posts like this one around (prediction markets are one thing; loud, public bets on carefully written LW posts are another).

Having something like this occur every ~month seems important from the standpoint of keeping the garden on its toes and remind everyone that beliefs must pay rent, possibly in the form of PayPal cash transfers.

I wrote this after watching Oppenheimer and noticing with horror that I wanted to emulate the protagonist in ways entirely unrelated to his merits. Not just unrelated but antithetical: cargo-culting the flaws of competent/great/interesting people is actively harmful to my goals! Why would I do this!? The pattern generalized, so I wrote a rant against myself, then figured it'd be good for LessWrong, and posted it here with minimal edits.

I think the post is crude and messily written, but does the job.

Meta comment: I notice I'm surprised that out of all my posts, this is the one that seems most often revisited (e.g. getting 2 reviews for Best of LW, which I did not expect). I'm updating against karma as a reliable indicator of long-term value as a result: 2 posts I wrote got twice the karma, but were never interacted with beyond their first month. I think they must have been somewhat inspired by hype-shaped memes.

This is an endorsement of the Review function! It has successfully weeded out popular-but-superficial posts of mine and taught me to prioritize whatever's going on in this post. Karma alone has failed to do this.

I think you're right, but I rarely hear this take. Probably because "good at both coding and LLMs" is a light tail end of the distribution, and most of the relative value of LLMs in code is located at the other, much heavier end of "not good at coding" or even "good at neither coding nor LLMs".

(Speaking as someone who didn't even code until LLMs made it trivially easy, I probably got more relative value than even you.)

need any help on post drafts? whatever we can do to reduce those trivial inconveniences

I'm very pro- this kind of post. Whatever this is, I think it's important for ensuring LW doesn't get "frozen" in a state where specific objects are given higher respect than systems. Strong upvoted.

I think you could get a lot out of adding a temporary golden dollar sign with amount donated next to our LW names! Upon proof of donation receipt or whatever.

Seems like the lowest hanging fruit for monetizing vanity— benches being usually somewhat of a last resort!

(The benches seem still underpriced to me, given expected amount raised and average donation size in the foreseeable future).

I've been at Sciences Po for a few months now. Do you have any general advice? I seem to have trouble taking the subjects seriously enough to any real effort in them, which you seem to point out as a failure mode you skirted. Asking as many people I can for this, as I'm going through a minor existential crisis. Thanks!

Yeah that'd go into some "aesthetic flaws" category which presumably has no risk of messing with your rationality. I agree these exist. And I too am picky.

I agree about the punchline. Chef's kiss post

Here's 4:

https://www.lesswrong.com/posts/kj4jW9DxtKQBJbapn/stanislav-petrov-quarterly-performance-review

https://www.lesswrong.com/posts/pL4WhsoPJwauRYkeK/moses-and-the-class-struggle

https://www.lesswrong.com/posts/aRBAhBsc6vZs3WviL/ommc-announces-rip

Can I piggy-back off your conclusions so far? Any news you find okay?

Well then, I can update a little more in the direction not to trust this stuff.

Ah right, the decades part--I had written about the 1930 revolution, commune, and bourbon destitution, then checked the dates online and stupidly thought "ah, it must be just 1815 then" and only talked about that. Thanks

"second" laughcries in french

Ahem, as one of LW's few resident Frenchmen, I must interpose to say that yes, this was not the Big Famous Guillotine French revolution everyone talks about, but one of the ~ 2,456^2 other revolutions that went on in our otherwise very calm history.

Specifically, we refer to the Les Mis revolution as "Les barricades" mostly because the people of Paris stuck barricades everywhere and fought against authority because they didn't like the king the other powers of Europe put into place after Napoleon's defeat. They failed that time, but succeeded 15 years later with another revolution (to put a different king in place).

Victor Hugo loved Napoleon with a passion, and was definitely on the side of the revolutionaries here (though he was but a wee boy when this happened, about the age of Gavroche).

Later, in the 1850s (I'm skipping over a few revolutions, including the one that got rid of kings again), when Haussmann was busy bringing 90% of medieval Paris to rubble to replace it with the homogenous architecture we so admire in Ratatouille today, Napoleon the IIIrd had the great idea to demolish whole blocks and replace them with wide streets (like the Champs Elisées) to make barricade revolutions harder to do.

Final note: THANK YOU LW TEAM for making àccénts like thìs possible with the typeface. They used to look bloated.

Do we know what side we're on? Because I opted in and don't know whether I'm East or West, it just feels Wrong. I guess I stand a non-trivial chance of losing 50 karma ahem please think of the daisy girl and also my precious internet points.

Anti-moderative action will be taken in response if you stand in the way of justice, perhaps by contacting those hackers and giving them creative ideas. Be forewarned.

Fun fact: it's thanks to Lucie that I ended up stumbling onto PauseAI in the first place. Small world + thanks Lucie.

Update everyone: the hard right did not end up gaining a parliamentary majority, which, as Lucie mentioned, could have been the worse outcome wrt AI safety.

Looking ahead, it seems that France will end up being fairly confused and gridlocked as it becomes forced to deal with an evenly-split parliament by playing German-style coalition negociation games. Not sure what that means for AI, except that unilateral action is harder.

For reference, I'm an ex-high school student who just got to vote for the first 3 times in his life because of French political turmoil (✨exciting) and am working these days at PauseAI France, a (soon to be official) governance non-profit aiming to, well—

Anyway, as an org we're writing a counter to the AI commitee mentioned in this post, so that's what's up these days in the French AI safety governance circles.

I'm working on a non-trivial.org project meant to assess the risk of genome sequences by comparing them to a public list of the most dangerous pathogens we know of. This would be used to assess the risk from both experimental results in e.g. BSL-4 labs and the output of e.g. protein folding models. The benchmarking would be carried out by an in-house ML model of ours. Two questions to LessWrong:

1. Is there any other project of this kind out there? Do BSL-4 labs/AlphaFold already have models for this?

2. "Training a model on the most dangerous pathogens in existence" sounds like an idea that could backfire horribly. Can it backfire horribly?

I'm taking this post down, it was to set up an archive.org link as requested by Bostrom, and no longer serves that purpose. Sorry, this was meant to be discreet.

Poetry and practicality

I was staring up at the moon a few days ago and thought about how deeply I loved my family, and wished to one day start my own (I'm just over 18 now). It was a nice moment.

Then, I whipped out my laptop and felt constrained to get back to work; i.e. read papers for my AI governance course, write up LW posts, and trade emails with EA France. (These I believe to be my best shots at increasing everyone's odds of survival).

It felt almost like sacrilege to wrench myself away from the moon and my wonder. Like I was ruining a moment of poetry and stillwatered peace by slamming against reality and its mundane things again.

But... The reason I wrenched myself away is directly downstream from the spirit that animated me in the first place. Whether I feel the poetry now that I felt then is irrelevant: it's still there, and its value and truth persist. Pulling away from the moon was evidence I cared about my musings enough to act on them.

The poetic is not a separate magisterium from the practical; rather the practical is a particular facet of the poetic. Feeling "something to protect" in my bones naturally extends to acting it out. In other words, poetry doesn't just stop. Feel no guilt in pulling away. Because, you're not.

Too obvious imo, though I didn't downnvote. This also might not be an actual rationalist failure mode; in my experience at least, rationalists have about the same intuition all the other humans have about when something should be taken literally or not.

As for why the comment section has gone berserk, no idea, but it's hilarious and we can all use some fun.

Can we have a black banner for the FHI? Not a person, still seems appropriate imo.

See also Alicorn's Expressive Vocabulary.

FHI at Oxford

by Nick Bostrom (recently turned into song):

the big creaky wheel

a thousand years to turn

thousand meetings, thousand emails, thousand rules

to keep things from changing

and heaven forbid

the setting of a precedent

yet in this magisterial inefficiency

there are spaces and hiding places

for fragile weeds to bloom

and maybe bear some singular fruit

like the FHI, a misfit prodigy

daytime a tweedy don

at dark a superhero

flying off into the night

cape a-fluttering

to intercept villains and stop catastrophes

and why not base it here?

our spandex costumes

blend in with the scholarly gowns

our unusual proclivities

are shielded from ridicule

where mortar boards are still in vogue

I've come to think that isn't actually the case. E.g. while I disagree with Being nicer than clippy, it quite precisely nails how consequentialism isn't essentially flawless:

I haven't read that post, but I broadly agree with the excerpt. On green did a good job imo in showing how weirdly imprecise optimal human values are.

It's true that when you stare at something with enough focus, it often loses that bit of "sacredness" which I attribute to green. As in, you might zoom in enough on the human emotion of love and discover that it's just an endless tiling of Shrodinger's equation.

If we discover one day that "human values" are eg 23.6% love, 15.21% adventure and 3% embezzling funds for yachts, and decide to tile the universe in exactly those proportions...[1] I don't know, my gut doesn't like it. Somehow, breaking it all into numbers turned humans into sock puppets reflecting the 23.6% like mindless drones.

The target "human values" seems to be incredibly small, which I guess encapsulates the entire alignment problem. So I can see how you could easily build an intuition from this along the lines of "optimizing maximally for any particular thing always goes horribly wrong". But I'm not sure that's correct or useful. Human values are clearly complicated, but so long as we haven't hit a wall in deciphering them, I wouldn't put my hands up in the air and act as if they're indecipherable.

Unbounded utility maximization aspires to optimize the entire world. This is pretty funky for just about any optimization criterion people can come up with, even if people are perfectly flawless in how well they follow it. There's a bunch of attempts to patch this, but none have really worked so far, and it doesn't seem like any will ever work.

I'm going to read your post and see the alternative you suggest.

- ^

Sounds like a Douglas Adams plot

Interesting! Seems like you put a lot of effort into that 9,000-word post. May I suggest you publish it in little chunks instead of one giant post? You only got 3 karma for it, so I assume that those who started reading it didn't find it worth the effort to read the whole thing. The problem is, that's not useful feedback for you, because you don't know which of those 9,000 words are presumably wrong. If I were building a version of utilitarianism, I would publish it in little bursts of 2-minute posts. You could do that right now with a single section of your original post. Clearly you have tons of ideas. Good luck!

You know, I considered "Bob embezzled the funds to buy malaria nets" because I KNEW someone in the comments would complain about the orphanage. Please don't change.

Actually, the orphanage being a cached thought is precisely why I used it. The writer-pov lesson that comes with "don't fight the hypothetical" is "don't make your hypothetical needlessly distracting". But maybe I miscalculated and malaria nets would be less distracting to LWers.

Anyway, I'm of course not endorsing fund-embezzling, and I think Bob is stupid. You're right in that failure modes associated with Bob's ambitions (eg human extinction) might be a lot worse than those of your typical fund-embezzler (eg the opportunity cost of buying yachts). I imagined Bob as being kind-hearted and stupid, but in your mind he might be some cold-blooded brooding "the price must be paid" type consequentialist. I didn't give details either way, so that's fair.

If you go around saying "the ends justify the means" you're likely to make major mistakes, just like if you walk around saying "lying is okay sometimes". The true lesson here is "don't trust your own calculations, so don't try being clever and blowing up TSMC", not "consequentialism has inherent failure modes". The ideal of consequentialism is essentially flawless; it's when you hand it to sex-obsessed murder monkeys as an excuse to do things that shit hits the fan.

In my mind then, Bob was a good guy running on flawed hardware. Eliezer calls patching your consequentialism by making it bounded "consequentialism, one meta-level up". For him, refusing to embezzle funds for a good cause because the plan could obviously turn sour is just another form of consequentialism. It's like belief in intelligence, but flipped; you don't know exactly how it'll go wrong, but there's a good chance you're unfathomably stupid and you'll make everything worse by acting on "the ends justify the means".

From a practical standpoint though, we both agree and nothing changes: both the cold-hearted Bob and the kind Bob must be stopped. (And both are indeed more likely to make ethically dubious decisions because "the ends justify the means".)

Post-scriptum:

Honestly the one who embezzles funds for unbounded consequentialist purposes sounds much more intellectually interesting

Yeah, this kind of story makes for good movies. When I wrote Bob I was thinking of The Wonderful Story of Mr.Sugar, by Roald Dahl and adapted by Wes Anderson on Netflix. It's at least vaguely EA-spirited, and is kind of in that line (although the story is wholesome, as the name indicates, and isn't meant to warn against dangers associated with boundless consequentialism at all).[1]

- ^

Let's wait for the SBF movie on that one

Link is broken

Re: sociology. I found a meme you might enjoy, which would certainly drive your teacher through the roof: https://twitter.com/captgouda24/status/1777013044976980114

Yeah, that's an excellent idea. I often spot typos in posts, but refrain from writing a comment unless I collect like three. Thanks for sharing!

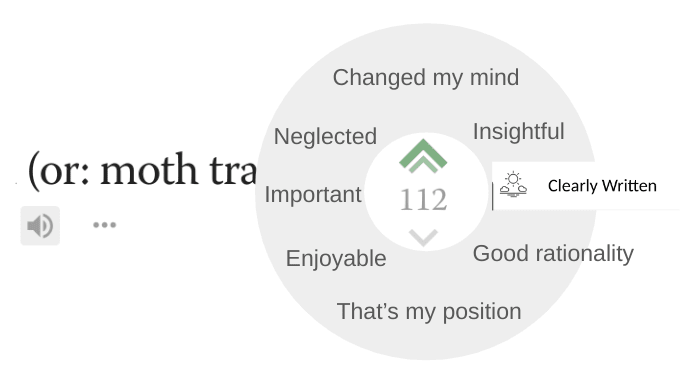

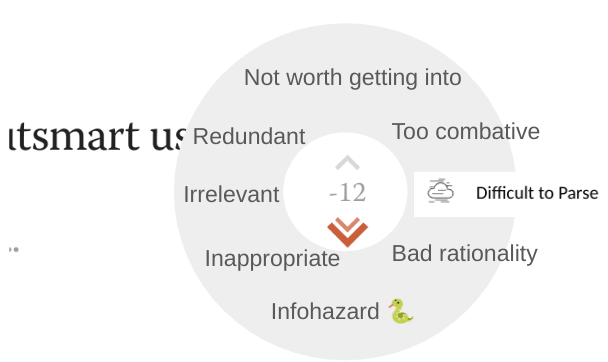

A functionality I'd like to see on LessWrong: the ability to give quick feedback for a post in the same way you can react to comments (click for image). When you strong-upvote or strong-downvote a post, a little popup menu appears offering you some basic feedback options. The feedback is private and can only be seen by the author.

I've often found myself drowning in downvotes or upvotes without knowing why. Karma is a one-dimensional measure, and writing public comments is a trivial inconvience: this is an attempt at middle ground, and I expect it to make post reception clearer.

See below my crude diagrams.