Leon Lang's Shortform

post by Leon Lang (leon-lang) · 2022-10-02T10:05:36.368Z · LW · GW · 64 commentsContents

64 comments

64 comments

Comments sorted by top scores.

comment by Leon Lang (leon-lang) · 2024-11-14T10:19:12.991Z · LW(p) · GW(p)

"Scaling breaks down", they say. By which they mean one of the following wildly different claims with wildly different implications:

- When you train on a normal dataset, with more compute/data/parameters, subtract the irreducible entropy [LW(p) · GW(p)] from the loss, and then plot in a log-log plot: you don't see a straight line anymore.

- Same setting as before, but you see a straight line; it's just that downstream performance doesn't improve .

- Same setting as before, and downstream performance improves, but: it improves so slowly that the economics is not in favor of further scaling this type of setup instead of doing something else.

- A combination of one of the last three items and "btw., we used synthetic data and/or other more high-quality data, still didn't help".

- Nothing in the realm of "pretrained models" and "reasoning models like o1" and "agentic models like Claude with computer use" profits from a scale-up in a reasonable sense.

- Nothing which can be scaled up in the next 2-3 years, when training clusters are mostly locked in, will demonstrate a big enough success to motivate the next scale of clusters costing around $100 billion [LW(p) · GW(p)].

Be precise. See also.

Replies from: Lorec, cubefox, p.b.↑ comment by Lorec · 2024-11-14T18:25:26.323Z · LW(p) · GW(p)

This is a just ask.

Also, even though it's not locally rhetorically convenient [ where making an isolated demand for rigor of people making claims like "scaling has hit a wall [therefore AI risk is far]" that are inconvenient for AInotkilleveryoneism, is locally rhetorically convenient for us ], we should demand the same specificity of people who are claiming that "scaling works", so we end up with a correct world-model and so people who just want to build AGI see that we are fair.

Replies from: sharmake-farah↑ comment by Noosphere89 (sharmake-farah) · 2024-11-14T19:09:39.295Z · LW(p) · GW(p)

On the question of how much evidence the following scenarios are against the AI scaling thesis (which I roughly take to mean that more FLOPs and compute/data reliably makes AI better for economically important relevant jobs), I'd say that scenarios 4-6 falsify the hypothesis, while 3 is the strongest evidence against the hypothesis, followed by 2 and 1.

4 would make me more willing to buy algorithmic progress as important, 5 would make me more bearish on algorithmic progress, and 6 would make me have way longer timelines than I have now, unless governments fund a massive AI effort.

↑ comment by cubefox · 2024-11-15T13:58:56.685Z · LW(p) · GW(p)

It's not that "they" should be more precise, but that "we" would like to have more precise information.

We know pretty conclusively now from The Information and Bloomberg that for OpenAI, Google and Anthropic, new frontier base LLMs have yielded disappointing performance gains. The question is which of your possibilities did cause this.

They do mention that the availability of high quality training data (text) is an issue, which suggests it's probably not your first bullet point.

comment by Leon Lang (leon-lang) · 2024-07-01T12:04:30.717Z · LW(p) · GW(p)

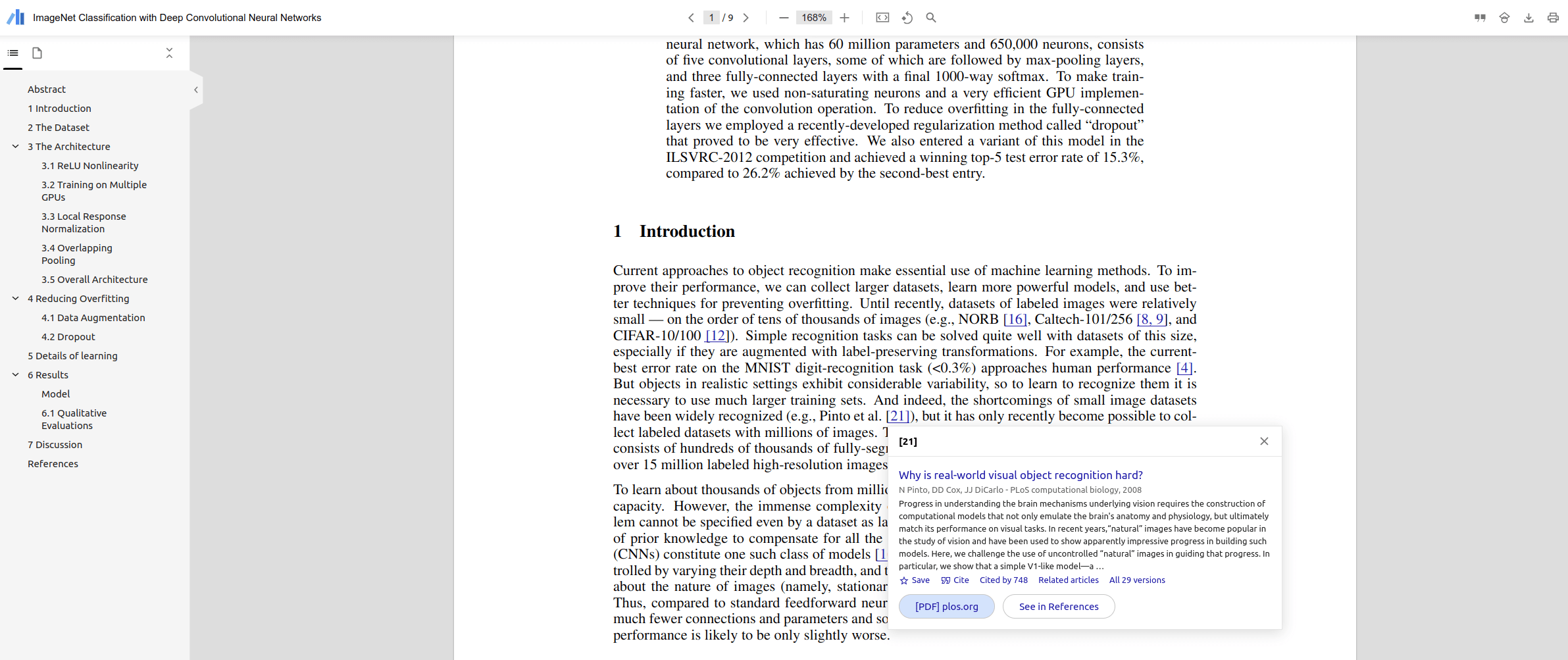

You should all be using the "Google Scholar PDF reader extension" for Chrome.

Features I like:

- References are linked and clickable

- You get a table of contents

- You can move back after clicking a link with Alt+left

Screenshot:

↑ comment by StefanHex (Stefan42) · 2024-07-02T09:53:11.405Z · LW(p) · GW(p)

This is great, love it! Settings recommendation: If you (or your company) want, you can restrict the extension's access from all websites down to the websites you read papers on. Note that the scholar.google.com access is required for the look-up function to work.

↑ comment by Stephen Fowler (LosPolloFowler) · 2024-07-02T06:59:50.892Z · LW(p) · GW(p)

Just started using this, great recommendation. I like the night mode feature which changes the color of the pdf itself.

↑ comment by Neel Nanda (neel-nanda-1) · 2024-07-02T01:17:09.785Z · LW(p) · GW(p)

Strongly agreed, it's a complete game changer to be able to click on references in a PDF and see a popup

↑ comment by Stephen McAleese (stephen-mcaleese) · 2024-07-03T22:15:53.163Z · LW(p) · GW(p)

I think the Zotero PDF reader has a lot of similar features that make the experience of reading papers much better:

- It has a back button so that when you click on a reference link that takes you to the references section, you can easily click the button to go back to the text.

- There is a highlight feature so that you can highlight parts of the text which is convenient when you want to come back and skim the paper later.

- There is a "sticky note" feature allowing you to leave a note in part of the paper to explain something.

comment by Leon Lang (leon-lang) · 2024-09-29T20:44:28.529Z · LW(p) · GW(p)

https://www.wsj.com/tech/ai/californias-gavin-newsom-vetoes-controversial-ai-safety-bill-d526f621

“California Gov. Gavin Newsom has vetoed a controversial artificial-intelligence safety bill that pitted some of the biggest tech companies against prominent scientists who developed the technology.

The Democrat decided to reject the measure because it applies only to the biggest and most expensive AI models and leaves others unregulated, according to a person with knowledge of his thinking”

Replies from: sharmake-farah, green_leaf↑ comment by Noosphere89 (sharmake-farah) · 2024-09-29T22:02:19.954Z · LW(p) · GW(p)

@Zach Stein-Perlman [LW · GW] which part of the comment are you skeptical of? Is it the veto itself, or is it this part?

Replies from: Zach Stein-Perlman, shankar-sivarajanThe Democrat decided to reject the measure because it applies only to the biggest and most expensive AI models and leaves others unregulated, according to a person with knowledge of his thinking”

↑ comment by Zach Stein-Perlman · 2024-09-29T22:02:52.842Z · LW(p) · GW(p)

(Just the justification, of course; fixed.)

↑ comment by Shankar Sivarajan (shankar-sivarajan) · 2024-09-30T03:58:46.275Z · LW(p) · GW(p)

according to a person with knowledge of his thinking

Tangentially, I wonder how often this journalese stock phrase means the "leak" comes from the person himself.

↑ comment by green_leaf · 2024-09-30T02:02:59.741Z · LW(p) · GW(p)

What an undignified way to go.

comment by Leon Lang (leon-lang) · 2025-01-16T21:45:35.832Z · LW(p) · GW(p)

There are a few sentences in Anthropic's "conversation with our cofounders" regarding RLHF that I found quite striking:

Dario (2:57): "The whole reason for scaling these models up was that [...] the models weren't smart enough to do RLHF on top of. [...]"

Chris: "I think there was also an element of, like, the scaling work was done as part of the safety team that Dario started at OpenAI because we thought that forecasting AI trends was important to be able to have us taken seriously and take safety seriously as a problem."

Dario: "Correct."

That LLMs were scaled up partially in order to do RLHF on top of them is something I had previously heard from an OpenAI employee, but I wasn't sure it's true. This conversation seems to confirm it.

Replies from: Josephm↑ comment by Joseph Miller (Josephm) · 2025-01-17T01:26:41.770Z · LW(p) · GW(p)

we thought that forecasting AI trends was important to be able to have us taken seriously

This might be the most dramatic example ever of forecasting affecting the outcome.

Similarly I'm concerned that a lot of alignment people are putting work into evals and benchmarks which may be having some accelerating affect on the AI capabilities which they are trying to understand.

"That which is measured improves. That which is measured and reported improves exponentially."

comment by Leon Lang (leon-lang) · 2025-04-16T11:14:36.182Z · LW(p) · GW(p)

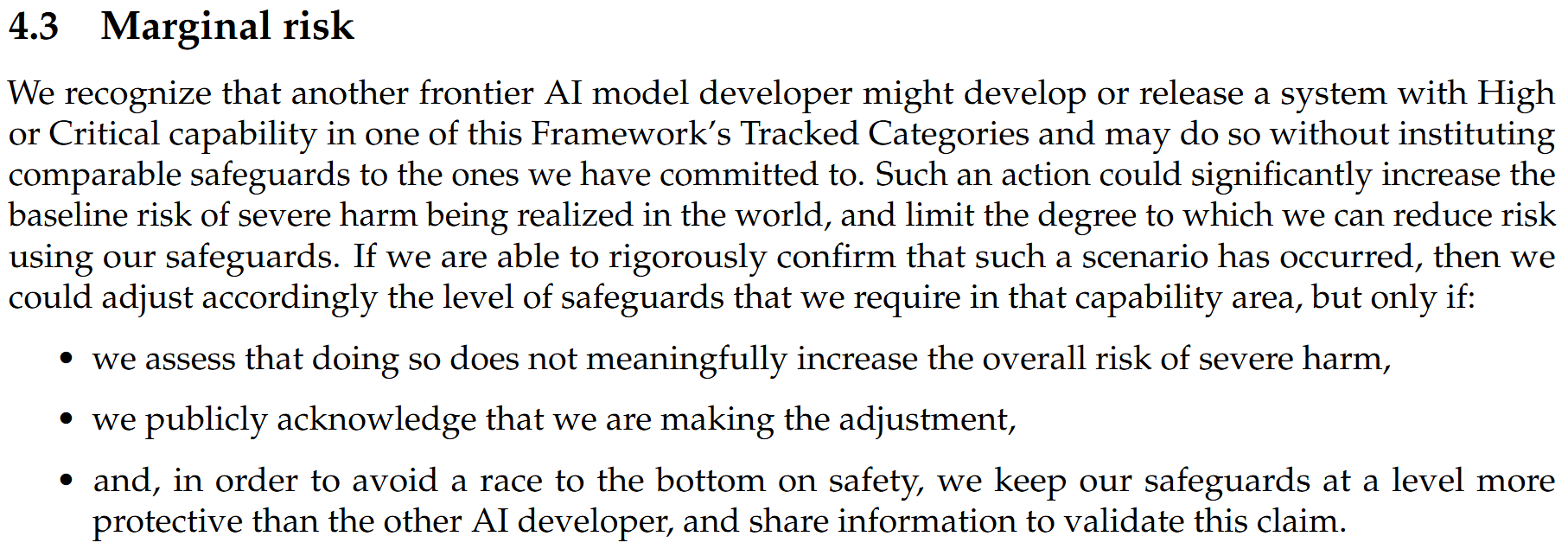

An interesting part in OpenAI's new version of the preparedness framework:

↑ comment by Zach Stein-Perlman · 2025-04-16T17:37:53.907Z · LW(p) · GW(p)

I think this stuff is mostly a red herring: the safety standards in OpenAI's new PF are super vague and so it will presumably always be able to say it meets them and will never have to use this.[1]

But if this ever matters, I think it's good: it means OpenAI is more likely to make such a public statement and is slightly less incentivized to deceive employees + external observers about capabilities and safeguard adequacy. OpenAI unilaterally pausing is not on the table; if safeguards are inadequate, I'd rather OpenAI say so.

- ^

I think my main PF complaints are:

The High standard is super vague: just like "safeguards should sufficiently minimize the risk of severe harm" + level of evidence is totally unspecified for "potential safeguard efficacy assessments." And some of the misalignment safeguards are confused/bad, and this is bad since the PF they may be disjunctive — if OpenAI is wrong about a single "safeguard efficacy assessment" that makes the whole plan invalid. And it's bad that misalignment safeguards are only clearly triggered by cyber capabilities, especially since the cyber High threshold is vague / too high.

For more see OpenAI rewrote its Preparedness Framework.

↑ comment by Simon Lermen (dalasnoin) · 2025-04-16T13:13:10.739Z · LW(p) · GW(p)

This seems to have been foreshadowed by this tweet in February:

https://x.com/ChrisPainterYup/status/1886691559023767897

Would be good to keep track of this change

comment by Leon Lang (leon-lang) · 2024-10-03T15:27:41.776Z · LW(p) · GW(p)

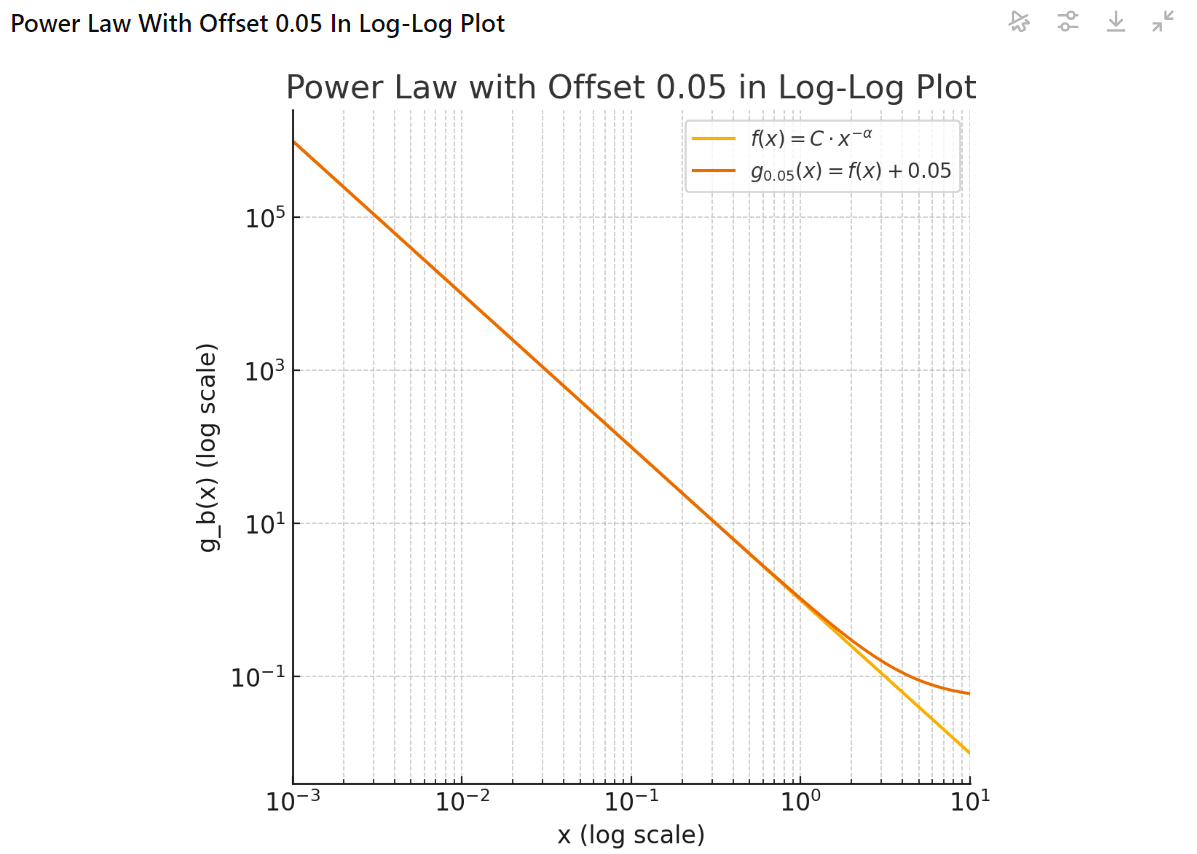

Are the straight lines from scaling laws really bending? People are saying they are, but maybe that's just an artefact of the fact that the cross-entropy is bounded below by the data entropy. If you subtract the data entropy, then you obtain the Kullback-Leibler divergence, which is bounded by zero, and so in a log-log plot, it can actually approach negative infinity. I visualized this with the help of ChatGPT:

Here, f represents the Kullback-Leibler divergence, and g the cross-entropy loss with the entropy offset.

Replies from: kave, gwern↑ comment by kave · 2024-10-03T18:41:44.936Z · LW(p) · GW(p)

I've not seen the claim that the scaling laws are bending. Where should I look?

Replies from: leon-lang↑ comment by Leon Lang (leon-lang) · 2024-10-03T21:05:21.580Z · LW(p) · GW(p)

It is a thing that I remember having been said at podcasts, but I don't remember which one, and there is a chance that it was never said in the sense I interpreted it.

Also, quote from this post [LW · GW]:

"DeepMind says that at large quantities of compute the scaling laws bend slightly, and the optimal behavior might be to scale data by even more than you scale model size. In which case you might need to increase compute by more than 200x before it would make sense to use a trillion parameters."

Replies from: gwern↑ comment by gwern · 2024-10-04T00:28:02.723Z · LW(p) · GW(p)

That was quite a while ago, and is not a very strongly worded claim. I think there was also evidence that Chinchilla got a constant factor wrong and people kept discovering that you wanted a substantially larger multiplier of data:parameter, which might fully account for any 'slight bending' back then - bending often just means you got a hyperparameter wrong and need to tune it better. (It's a lot easier to break scaling than to improve it, so being away badly is not too interesting while bending the opposite direction is much more interesting.)

↑ comment by gwern · 2024-10-03T17:45:20.120Z · LW(p) · GW(p)

Isn't an intercept offset already usually included in the scaling laws and so can't be misleading anyone? I didn't think anyone was fitting scaling laws which allow going to exactly 0 with no intrinsic entropy.

Replies from: tailcalled↑ comment by tailcalled · 2024-10-03T18:23:27.601Z · LW(p) · GW(p)

Couldn't it just be that the intercept has been extrapolated wrongly, perhaps due to misspecification on the lower end of the scaling law?

Or I guess often people combine multiple scaling laws to get optimal performance as a function of compute. That introduces a lot of complexity and I'm not sure where that puts us as to realistic errors.

Replies from: gwern↑ comment by gwern · 2024-10-03T18:32:38.952Z · LW(p) · GW(p)

Well, I suppose it could be misspecification, but if there were some sort of misestimation of the intercept itself (despite the scaling law fits usually being eerily exact), is there some reason it would usually be in the direction of underestimating the intercept badly enough that we could actually be near hitting perfect performance and the divergence become noticeable? Seems like it could just as easily overestimate it and produce spuriously good looking performance as later models 'overperform'.

Replies from: tailcalled↑ comment by tailcalled · 2024-10-03T18:43:06.226Z · LW(p) · GW(p)

I suppose that is logical enough.

comment by Leon Lang (leon-lang) · 2024-11-18T14:40:05.735Z · LW(p) · GW(p)

Why I think scaling laws will continue to drive progress

Epistemic status: This is a thought I had since a while. I never discussed it with anyone in detail; a brief conversation could convince me otherwise.

According to recent reports there seem to be some barriers to continued scaling. We don't know what exactly is going on, but it seems like scaling up base models doesn't bring as much new capability as people hope.

However, I think probably they're still in some way scaling the wrong thing: The model learns to predict a static dataset on the internet; however, what it needs to do later is to interact with users and the world. For performing well in such a task, the model needs to understand the consequences of its actions, which means modeling interventional distributions P(X | do(A)) instead of static data P(X | Y). This is related to causal confusion as an argument against the scaling hypothesis [LW · GW].

This viewpoint suggests that if big labs figure out how to predict observations in an online-way by ongoing interactions of the models with users / the world, then this should drive further progress. It's possible that labs are already doing this, but I'm not aware of it, and so I guess they haven't yet fully figured out how to do that.

What triggered me writing this is that there is a new paper on scaling law for world modeling that's about exactly what I'm talking about here.

Replies from: cubefox, johnswentworth, p.b.↑ comment by cubefox · 2024-11-18T21:28:12.728Z · LW(p) · GW(p)

Tailcalled talked about this two years ago. [LW · GW] A model which predicts text does a form of imitation learning. So it is bounded by the text it imitates, and by the intelligence of humans who have written the text. Models which predict future sensory inputs (called "predictive coding" in neuroscience, or "the dark matter of intelligence" by LeCun) don't have such a limitation, as they predict reality more directly.

↑ comment by johnswentworth · 2024-11-18T21:03:18.640Z · LW(p) · GW(p)

I think this misunderstands what discussion of "barriers to continued scaling" is all about. The question is whether we'll continue to see ROI comparable to recent years by continuing to do the same things. If not, well... there is always, at all times, the possibility that we will figure out some new and different thing to do which will keep capabilities going. Many people have many hypotheses about what those new and different things could be: your guess about interaction is one, inference time compute is another, synthetic data is a third, deeply integrated multimodality is a fourth, and the list goes on. But these are all hypotheses which may or may not pan out, not already-proven strategies, which makes them a very different topic of discussion than the "barriers to continued scaling" of the things which people have already been doing.

Replies from: Raemon↑ comment by p.b. · 2024-11-18T16:18:00.265Z · LW(p) · GW(p)

The paper seems to be about scaling laws for a static dataset as well?

Similar to the initial study of scale in LLMs, we focus on the effect of scaling on a generative pre-training loss (rather than on downstream agent performance, or reward- or representation-centric objectives), in the infinite data regime, on a fixed offline dataset.

To learn to act you'd need to do reinforcement learning, which is massively less data-efficient than the current self-supervised training.

More generally: I think almost everyone thinks that you'd need to scale the right thing for further progress. The question is just what the right thing is if text is not the right thing. Because text encodes highly powerful abstractions (produced by humans and human culture over many centuries) in a very information dense way.

Replies from: Jonas Hallgren↑ comment by Jonas Hallgren · 2024-11-18T16:34:34.391Z · LW(p) · GW(p)

If you look at the Active Inference community there's a lot of work going into PPL-based languages to do more efficient world modelling but that shit ain't easy and as you say it is a lot more compute heavy.

I think there'll be a scaling break due to this but when it is algorithmically figured out again we will be back and back with a vengeance as I think most safety challenges have a self vs environment model as a necessary condition to be properly engaged. (which currently isn't engaged with LLMs wolrd modelling)

comment by Leon Lang (leon-lang) · 2024-07-27T20:59:48.213Z · LW(p) · GW(p)

https://www.washingtonpost.com/opinions/2024/07/25/sam-altman-ai-democracy-authoritarianism-future/

Not sure if this was discussed at LW before. This is an opinion piece by Sam Altman, which sounds like a toned down version of "situational awareness" to me.

comment by Leon Lang (leon-lang) · 2024-07-19T11:15:27.155Z · LW(p) · GW(p)

https://x.com/sama/status/1813984927622549881

According to Sam Altman, GPT-4o mini is much better than text-davinci-003 was in 2022, but 100 times cheaper. In general, we see increasing competition to produce smaller-sized models with great performance (e.g., Claude Haiku and Sonnet, Gemini 1.5 Flash and Pro, maybe even the full-sized GPT-4o itself). I think this trend is worth discussing. Some comments (mostly just quick takes) and questions I'd like to have answers to:

- Should we expect this trend to continue? How much efficiency gains are still possible? Can we expect another 100x efficiency gain in the coming years? Andrej Karpathy expects that we might see a GPT-2 sized "smart" model.

- What's the technical driver behind these advancements? Andrej Karpathy thinks it is based on synthetic data: Larger models curate new, better training data for the next generation of small models. Might there also be architectural changes? Inference tricks? Which of these advancements can continue?

- Why are companies pushing into small models? I think in hindsight, this seems easy to answer, but I'm curious what others think: If you have a GPT-4 level model that is much, much cheaper, then you can sell the service to many more people and deeply integrate your model into lots of software on phones, computers, etc. I think this has many desirable effects for AI developers:

- Increase revenue, motivating investments into the next generation of LLMs

- Increase market-share. Some integrations are probably "sticky" such that if you're first, you secure revenue for a long time.

- Make many people "aware" of potential usecases of even smarter AI so that they're motivated to sign up for the next generation of more expensive AI.

- The company's inference compute is probably limited (especially for OpenAI, as the market leader) and not many people are convinced to pay a large amount for very intelligent models, meaning that all these reasons beat reasons to publish larger models instead or even additionally.

- What does all this mean for the next generation of large models?

- Should we expect that efficiency gains in small models translate into efficiency gains in large models, such that a future model with the cost of text-davinci-003 is massively more capable than today's SOTA? If Andrej Karpathy is right that the small model's capabilities come from synthetic data generated by larger, smart models, then it's unclear to me whether one can train SOTA models with these techniques, as this might require an even larger model to already exist.

- At what point does it become worthwhile for e.g. OpenAI to publish a next-gen model? Presumably, I'd guess you can still do a lot of "penetration of small model usecases" in the next 1-2 years, leading to massive revenue increases without necessarily releasing a next-gen model.

- Do the strategies differ for different companies? OpenAI is the clear market leader, so possibly they can penetrate the market further without first making a "bigger name for themselves". In contrast, I could imagine that for a company like Anthropic, it's much more important to get out a clear SOTA model that impresses people and makes them aware of Claude. I thus currently (weakly) expect Anthropic to more strongly push in the direction of SOTA than OpenAI.

↑ comment by Vladimir_Nesov · 2024-07-19T20:40:10.257Z · LW(p) · GW(p)

To make a Chinchilla optimal model smaller while maintaining its capabilities, you need more data. At 15T tokens (the amount of data used in Llama 3), a Chinchilla optimal model has 750b active parameters, and training it invests 7e25 FLOPs (Gemini 1.0 Ultra or 4x original GPT-4). A larger $1 billion training run, which might be the current scale [LW · GW] that's not yet deployed, would invest 2e27 FP8 FLOPs if using H100s. A Chinchilla optimal run for these FLOPs would need 80T tokens when using unique data.

Starting with a Chinchilla optimal model, if it's made 3x smaller, maintaining performance requires training it on 9x more data, so that it needs 3x more compute. That's already too much data, and we are only talking 3x smaller. So we need ways of stretching the data that is available. By repeating data up to 16 times, it's possible to make good use of 100x more compute [LW(p) · GW(p)] than by only using unique data once. So with say 2e26 FP8 FLOPs (a $100 million training run on H100s), we can train a 3x smaller model that matches performance of the above 7e25 FLOPs Chinchilla optimal model while needing only about 27T tokens of unique data (by repeating them 5 times) instead of 135T unique tokens, and the model will have about 250b active parameters. That's still a lot of data, and we are only repeating it 5 times where it remains about as useful in training as unique data, while data repeated 16 times (that lets us make use of 100x more compute from repetition) becomes 2-3 times less valuable per token.

There is also distillation, where a model is trained to predict the distribution generated by another model (Gemma-2-9b was trained this way). But this sort of distillation still happens while training on real data, and it only allows to make use of about 2x less data to get similar performance, so it only slightly pushes back the data wall. And rumors of synthetic data for pre-training (as opposed to post-training) remain rumors. With distillation on 16x repeated 50T tokens of unique data, we then get the equivalent of training on 800T tokens of unique data (it gets 2x less useful per token through repetition, but 2x more useful through distillation). This enables reducing active parameters 3x (as above, maintaining performance), compared to a Chinchilla optimal model trained for 80T tokens with 2e27 FLOPs (a $1 billion training run for the Chinchilla optimal model). This overtrained model would cost $3 billion (and have 1300b active parameters).

So the prediction is that the trend for getting models that are both cheaper for inference and smarter might continue into the imminent $1 billion training run regime but will soon sputter out when going further due to the data wall. Overcoming this requires algorithmic progress that's not currently publicly in evidence, and visible success in overcoming it in deployed models will be evidence of such algorithmic progress within LLM labs. But Chinchilla optimal models (with corrections for inefficiency of repeated data) can usefully scale [LW(p) · GW(p)] to at least 8e28 FLOPs ($40 billion in cost of time, 6 gigawatts) with mere 50T tokens of unique data.

Edit (20 Jul): These estimates erroneously use the sparse FP8 tensor performance for H100s (4 petaFLOP/s), which is 2 times higher than far more relevant dense FP8 tensor performance (2 petaFLOP/s). But with a Blackwell GPU, the relevant dense FP8 performance is 5 petaFLOP/s, which is close to 4 petaFLOP/s, and the cost and power per GPU within a rack are also similar. So the estimates approximately work out unchanged when reading "Blackwell GPU" instead of "H100".

Replies from: leon-lang↑ comment by Leon Lang (leon-lang) · 2024-07-21T11:23:59.126Z · LW(p) · GW(p)

One question: Do you think Chinchilla scaling laws are still correct today, or are they not? I would assume these scaling laws depend on the data set used in training, so that if OpenAI found/created a better data set, this might change scaling laws.

Do you agree with this, or do you think it's false?

Replies from: Vladimir_Nesov, Vladimir_Nesov↑ comment by Vladimir_Nesov · 2024-07-21T12:37:53.535Z · LW(p) · GW(p)

Data varies in the loss it enables, doesn't seem to vary greatly in the ratio between the number of tokens and the number of parameters that extracts the best loss out of training with given compute. That is, I'm usually keeping this question in mind, didn't see evidence to the contrary in the papers, but relevant measurements are very rarely reported, even in model series training report papers where the ablations were probably actually done. So could be very wrong, generalization from 2.5 examples. With repetition, there's this gradual increase from 20 to 60. Probably something similar is there for distillation (in the opposite direction), but I'm not aware of papers that measure this, so also could be wrong.

One interesting point is the isoFLOP plots in the StripedHyena post (search "Perplexity scaling analysis"). With hybridization where standard attention remains in 8-50% of the blocks, perplexity is quite insensitive to change in model size while keeping compute fixed, while for pure standard attention the penalty for deviating from the optimal ratio to a similar extent is much greater. This suggests that one way out for overtrained models might be hybridization with these attention alternatives. That is, loss for an overtrained model might be closer to Chinchilla optimal loss with a hybrid model than it would be for a similarly overtrained pure standard attention model. Out of the big labs, visible moves in this directions were made by DeepMind with their Griffin Team (Griffin paper, RecurrentGemma). So that's one way the data wall might get pushed a little further for the overtrained models.

↑ comment by Vladimir_Nesov · 2024-07-25T07:46:51.771Z · LW(p) · GW(p)

New data! Llama 3 report includes data about Chinchilla optimality study on their setup. The surprise is that Llama 3 405b was chosen to have the optimal size rather than being 2x overtrained. Their actual extrapolation for an optimal point is 402b parameters, 16.55T tokens, and 3.8e25 FLOPs.

Fitting to the tokens per parameter framing, this gives the ratio of 41 (not 20) around the scale of 4e25 FLOPs. More importantly, their fitted dependence of optimal number of tokens on compute has exponent 0.53, compared to 0.51 from the Chinchilla paper (which was almost 0.5, hence tokens being proportional to parameters). Though the data only goes up to 1e22 FLOPs (3e21 FLOPs for Chinchilla), what actually happens at 4e25 FLOPs (6e23 FLOPs for Chinchilla) is all extrapolation, in both cases, there are no isoFLOP plots at those scales. At least Chinchilla has Gopher as a point of comparison, and there was only 200x FLOPs gap in the extrapolation, while for Llama 3 405 the gap is 4000x.

So data needs grow faster than parameters with more compute. This looks bad for the data wall, though the more relevant question is what would happen after 16 repetitions, or how this dependence really works with more FLOPs (with the optimal ratio of tokens to parameters changing with scale).

↑ comment by rbv · 2024-07-20T03:23:14.863Z · LW(p) · GW(p)

The vanilla Transformer architecture is horrifically computation inefficient. I really thought it was a terrible idea when I learnt about it. On every single token it processes ALL of the weights in the model and ALL of the context. And a token is less than a word — less than a concept. You generally don't need to consider trivia to fill in grammatical words. On top of that, implementations of it were very inefficient. I was shocked when I read the FlashAttention paper: I had assumed that everyone would have implemented attention that way in the first place, it's the obvious way to do it if you know anything about memory throughput. (My shock was lessened when I looked at the code and saw how tricky it was to incorporate into PyTorch.) Ditto unfused kernels, another inefficiency that exists to allow writing code in Python instead of CUDA/SYCL/etc.

Second point, transformers also seem to be very parameter inefficient. They have many layers and many attention heads largely so that they can perform multi-step inferences and do a lot in each step if necessary, but mechanistic interpretability studies shows just the center layers do nearly all the work. We now see transformers with shared weights between attention heads and layers and the performance drop is not that much. And there's also the matter of bits per parameter, again a 10x reduction in precision is a surprisingly small detriment.

I believe that the large numbers of parameters in transformers aren't primarily there to store knowledge, they're needed to learn quickly. They perform routing and encode mechanisms (that is, pieces of algorithms) and their vast number provides a blank slate. Training data seen just once is often remembered because there are so many possible places to store it that it's highly likely there are good paths through the network through which strong gradients can flow to record the information. This is a variant of the Lottery Ticket Hypothesis. But a better training algorithm could in theory do the same thing with fewer parameters. It would probably look very different from SGD.

I agree completely with Karparthy. However, I think you misread him, he didn't say that data cleaning is the cause of improvements up until now, he suggested a course of future improvements. But there are already plenty of successful examples of small models improved in that way.

So I'm not the least bit surprised to see a 100x efficiency improvement and expect to see another 100x, although probably not as quickly (low hanging fruit). If you have 200B parameters, you probably could process only maybe 50M on average for most tokens. (However, there are many points where you need to draw on a lot of knowledge, and those might pull the average way up.) In 2016, a 50M parameter Transformer was enough for SoTA translation between English/French and I'm sure it could be far more efficient today.

↑ comment by Jacob Pfau (jacob-pfau) · 2024-07-19T17:53:23.713Z · LW(p) · GW(p)

Given a SotA large model, companies want the profit-optimal distilled version to sell--this will generically not be the original size. On this framing, regulation passes the misuse deployment risk from higher performance (/higher cost) models to the company. If profit incentives, and/or government regulation here continues to push businesses to primarily (ideally only?) sell 2-3+ OOM smaller-than-SotA models, I see a few possible takeaways:

- Applied alignment research inspired by speed priors seems useful: e.g. how do sleeper agents interact with distillation etc.

- Understanding and mitigating risks of multi-LM-agent and scaffolded LM agents seems higher priority

- Pre-deployment, within-lab risks contribute more to overall risk

On trend forecasting, I recently created this Manifold market to estimate the year-on-year drop in price for SotA SWE agents to measure this. Though I still want ideas for better and longer term markets!

comment by Leon Lang (leon-lang) · 2024-09-25T16:43:46.725Z · LW(p) · GW(p)

New Bloomberg article on data center buildouts pitched to the US government by OpenAI. Quotes:

- “the startup shared a document with government officials outlining the economic and national security benefits of building 5-gigawatt data centers in various US states, based on an analysis the company engaged with outside experts on. To put that in context, 5 gigawatts is roughly the equivalent of five nuclear reactors, or enough to power almost 3 million homes.”

- “Joe Dominguez, CEO of Constellation Energy Corp., said he has heard that Altman is talking about building 5 to 7 data centers that are each 5 gigawatts. “

- “John Ketchum, CEO of NextEra Energy Inc., said the clean-energy giant had received requests from some tech companies to find sites that can support 5 GW of demand, without naming any specific firms.”

Compare with the prediction by Leopold Aschenbrenner in situational awareness:

- "The trillion-dollar cluster—+4 OOMs from the GPT-4 cluster, the ~2030 training cluster on the current trend—will be a truly extraordinary effort. The 100GW of power it’ll require is equivalent to >20% of US electricity production"

↑ comment by Vladimir_Nesov · 2024-09-25T19:35:03.760Z · LW(p) · GW(p)

From $4 billion for a 150 megawatts cluster, I get 37 gigawatts for a $1 trillion cluster, or seven 5-gigawatts datacenters (if they solve geographically distributed training). Future GPUs will consume more power per GPU (though a transition to liquid cooling seems likely), but the corresponding fraction of the datacenter might also cost more. This is only a training system (other datacenters will be built for inference), and there is more than one player in this game, so the 100 gigawatts figure seems reasonable for this scenario.

Current best deployed models are about 5e25 FLOPs (possibly up to 1e26 FLOPs), very recent 100K H100s scale systems can train models for about 5e26 FLOPs in a few months. Building datacenters for 1 gigawatt scale seems already in progress, plausibly the models from these will start arriving in 2026. If we assume B200s, that's enough to 15x the FLOP/s compared to 100K H100s, for 7e27 FLOPs in a few months, which is 5 trillion active parameters models (at 50 tokens/parameter).

The 5 gigawatts clusters seem more speculative for now, though o1-like post-training promises sufficient investment, once it's demonstrated on top of 5e26+ FLOPs base models next year. That gets us to 5e28 FLOPs (assuming 30% FLOP/joule improvement over B200s). And then 35 gigawatts for 3e29 FLOPs, which might be 30 trillion active parameters models (at 60 tokens/parameter). This is 4 OOMs above the rumored 2e25 FLOPs of original GPT-4.

If each step of this process takes 18-24 months, and currently we just cleared 150 megawatts, there are 3 more steps to get $1 trillion training systems built, that is 2029-2030. If o1-like post-training works very well on top of larger-scale base models and starts really automating jobs, the impossible challenge of building these giant training systems this fast will be confronted by the impossible pressure of that success.

comment by Leon Lang (leon-lang) · 2023-07-05T09:15:29.588Z · LW(p) · GW(p)

Zeta Functions in Singular Learning Theory

In this shortform, I very briefly explain my understanding of how zeta functions play a role in the derivation of the free energy in singular learning theory [? · GW]. This is entirely based on slide 14 of the SLT low 4 talk of the recent summit on SLT and Alignment, so feel free to ignore this shortform and simply watch the video.

The story is this: we have a prior , a model , and there is an unknown true distribution . For model selection, we are interested in the evidence of our model for a data set , which is given by

where is the empirical KL divergence. In fact, we are interested in selecting the model that maximizes the average of this quantity over all data sets. The average is then given by

where is the Kullback-Leibler divergence.

But now we have a problem: how do we compute this integral? Computing this integral is what the free energy formula is about [? · GW].

The answer: by computing a different integral. So now, I'll explain the connection to different integrals we can draw.

Let

which is called the state density function. Here, is the Dirac delta function. For different , it measures the density of states (= parameter vectors) that have . It is thus a measure for the "size" of different level sets. This state density function is connected to two different things.

Laplace Transform to the Evidence

First of all, it is connected to the evidence above. Namely, let be the Laplace transform of . It is a function given by

In first step, we changed the order of integration, and in the second step we used the defining property of the Dirac delta. Great, so this tells us that ! So this means we essentially just need to understand .

Mellin Transform to the Zeta Function

But how do we compute ? By using another transform. Let be the Mellin transform of . It is a function (or maybe only defined on part of ?) given by

Again, we used a change in the order of integration and then the defining property of the Dirac delta. This is called a Zeta function.

What's this useful for?

The Mellin transform has an inverse. Thus, if we can compute the zeta function, we can also compute the original evidence as

Thus, we essentially changed our problem to the problem of studying the zeta function To compute the integral of the zeta function, it is then useful to perform blowups to resolve the singularities in the set of minima of , which is where algebraic geometry enters the picture. For more on all of this, I refer, again, to the excellent SLT low 4 talk of the recent summit on singular learning theory.

comment by Leon Lang (leon-lang) · 2024-09-13T07:48:17.287Z · LW(p) · GW(p)

I think it would be valuable if someone would write a post that does (parts of) the following:

- summarize the landscape of work on getting LLMs to reason.

- sketch out the tree of possibilities for how o1 was trained and how it works in inference.

- select a “most likely” path in that tree and describe in detail a possibility for how o1 works.

I would find it valuable since it seems important for external safety work to know how frontier models work, since otherwise it is impossible to point out theoretical or conceptual flaws for their alignment approaches.

One caveat: writing such a post could be considered an infohazard. I’m personally not too worried about this since I guess that every big lab is internally doing the same independently, so that the post would not speed up innovation at any of the labs.

comment by Leon Lang (leon-lang) · 2024-08-29T05:22:51.323Z · LW(p) · GW(p)

40 min podcast with Anca Dragan who leads safety and alignment at google deepmind: https://youtu.be/ZXA2dmFxXmg?si=Tk0Hgh2RCCC0-C7q

Replies from: Zach Stein-Perlman↑ comment by Zach Stein-Perlman · 2024-08-29T07:08:45.616Z · LW(p) · GW(p)

I listened to it. I don't recommend it. Anca seems good and reasonable but the conversation didn't get into details on misalignment, scalable oversight, or DeepMind's Frontier Safety Framework.

Replies from: neel-nanda-1, leon-lang↑ comment by Neel Nanda (neel-nanda-1) · 2024-08-29T08:16:11.685Z · LW(p) · GW(p)

My read is that the target audience is much more about explaining alignment concerns to a mainstream audience and that GDM takes them seriously (which I think is great!), than about providing non trivial details to a LessWrong etc audience

↑ comment by Leon Lang (leon-lang) · 2024-08-29T07:47:07.471Z · LW(p) · GW(p)

Agreed.

I think the most interesting part was that she made a comment that one way to predict a mind is to be a mind, and that that mind will not necessarily have the best of all of humanity as its goal. So she seems to take inner misalignment seriously.

comment by Leon Lang (leon-lang) · 2025-03-02T23:11:14.720Z · LW(p) · GW(p)

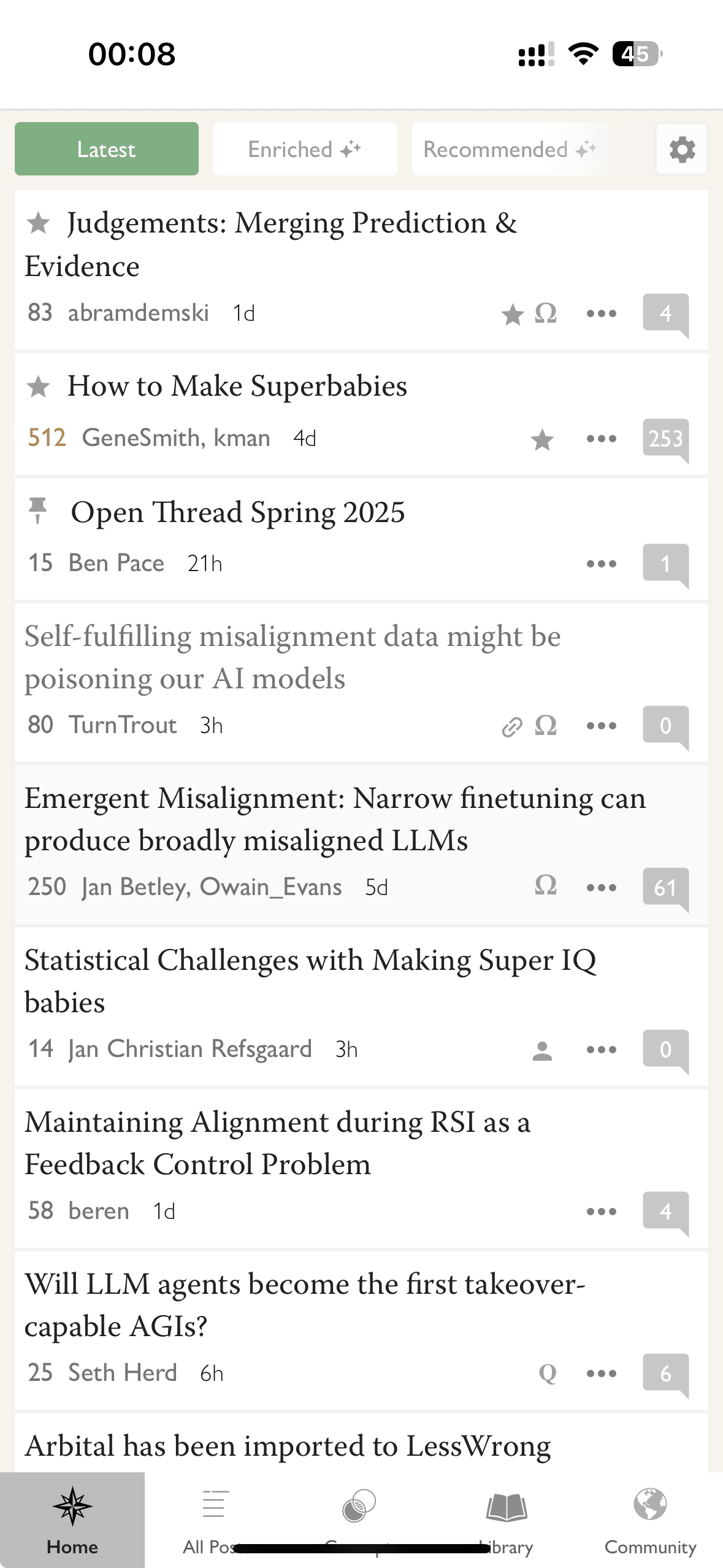

I’m confused by the order Lesswrong shows posts to me: I’d expect to see them in chronological order if I select them by “Latest”.

But as you see, they were posted 1d, 4d, 21h, etc ago.

How can I see them chronologically?

↑ comment by Seth Herd · 2025-03-02T23:20:13.814Z · LW(p) · GW(p)

Just click the "advanced sorting" link at the lower right of the front page posts list, and you'll see them organized by day, roughly chronological. It still includes some sorting by upvotes, but you can easily look at every post for every day.

comment by Leon Lang (leon-lang) · 2024-12-29T14:38:20.394Z · LW(p) · GW(p)

This is a link to a big list of LLM safety papers based on a new big survey.

comment by Leon Lang (leon-lang) · 2024-11-20T12:17:44.708Z · LW(p) · GW(p)

After the US election, the twitter competitor bluesky suddenly gets a surge of new users:

comment by Leon Lang (leon-lang) · 2022-10-02T10:06:49.734Z · LW(p) · GW(p)

This is my first comment on my own, i.e., Leon Lang's, shortform. It doesn't have any content, I just want to test the functionality.

Replies from: niplav↑ comment by niplav · 2022-10-02T11:00:07.149Z · LW(p) · GW(p)

Unfortunately not, as far as my interface goes, if you wanted to comment here [LW(p) · GW(p)].

Replies from: leon-lang↑ comment by Leon Lang (leon-lang) · 2022-10-02T16:55:24.357Z · LW(p) · GW(p)

Yes, it seems like both creating a "New Shortform" when hovering over my user name and commenting on "Leon Lang's Shortform" will do the exact same thing. But I can also reply to the comments.

comment by Leon Lang (leon-lang) · 2023-01-21T00:18:40.125Z · LW(p) · GW(p)

Edit: This is now obsolete with our NAH distillation [LW · GW].

Making the Telephone Theorem and Its Proof Precise

This short form distills the Telephone Theorem [LW · GW] and its proof. The short form will thereby not at all be "intuitive"; the only goal is to be mathematically precise at every step.

Let be jointly distributed finite random variables, meaning they are all functions

starting from the same finite sample space with a given probability distribution and into respective finite value spaces . Additionally, assume that these random variables form a Markov chain .

Lemma: For a Markov chain , the following two statements are equivalent:

(a)

(b) For all with : .

Proof:

Assume (a): Inspecting an information diagram of will immediately result in us also observing the Markov chain . Markov chains can be turned around, thus we get the two chains

Factorizing along these two chains, we obtain:

and thus, for all with . That proves (b).

Assume (b): We have

where, in the second step, we used the Markov chain and in the third step, we used assumption (b). This independence gives us the vanishing of conditional mutual information:

Together with the Markov chain , this results, by inspecting an information diagram, in the equality .

Theorem: Let . The following are equivalent:

(a)

(b) There are functions defined on , respectively such that:

- with probability , i.e., the measure of all such that the equality doesn't hold is zero.

- For all , we have the equality , and the same for .

Proof: The Markov chain immediately also gives us a Markov chain , meaning we can without loss of generality assume that . So let's consider the simple Markov chain .

Assume (a): By the lemma, this gives us for all with : .

Define the two functions , by:

Then we have with probability 1[1], giving us the first condition we wanted to prove.

For the second condition, we use a trick from Probability as Minimal Map [LW · GW]: set , which is a probability distribution. We get

That proves (b).

Assume (b): For the other direction, let be given with Let be such that and with . We have

and thus

The result follows from the Lemma.[2]

comment by Leon Lang (leon-lang) · 2023-01-19T01:42:24.907Z · LW(p) · GW(p)

These are rough notes trying (but not really succeeding) to deconfuse me about Alex Turner's diamond proposal [AF · GW]. The main thing I wanted to clarify: what's the idea here for how the agent remains motivated by diamonds even while doing very non-diamond related things like "solving mazes" that are required for general intelligence?

- Summarizing Alex's summary:

- Multimodal SSL initialization

- recurrent state, action head

- imitation learning on humans in simulation, + sim2real

- low sample complexity

- Humans move toward diamonds

- policy-gradient RL: reward the AI for getting near diamonds

- the recurrent state retains long-term information

- After each task completion: the AI is near diamonds

- SSL will make sure the diamond abstraction exists

- Proto Diamond shard:

- There is a diamond abstraction that will be active once a diamond is seen. Imagine this as being a neuron.

- Then, hook up the "move forward" action with this neuron being active. Give reward for being near diamonds. Voila, you get an agent which obtains reward! This is very easy to learn! More easily than other reward-obtaining computations

- Also, other such computations may be reinforced, like "if shiny object seen, move towards it" --- do adversarial training to rule those out

- This is all about prototypical diamonds. Thus, the AI may not learn to create a diamond as large as a sun, but that's also not what the post is about.

- Preserving diamond abstraction/shard:

- In Proto planning, the AI primarily thinks about how to achieve diamonds. Such thinking is active across basically all contexts, due to early RL training.

- Then, we will give the AI other types of tasks, like "maze solving" or "chess playing" or anything else, from very easy to very hard.

- At the end of each task, there will be a diamond and reward.

- By default, at the start of this new training process, the diamond shard will be active since training so far ensures it is active in most contexts. It will bid for actions before the reward is reached, and therefore, its computations will be reinforced and shaped. Also, other shards will be reinforced (ones that plan how to solve a maze, since they also steer toward the reinforcement event), but the diamond shard is ALWAYS reinforced.

- The idea here is that the diamond shard is contextually activated BY EVERY CONTEXT, and so it is basically one huge circuit thinking about how to reach diamonds that simply gets extended with more sub-computations for how to reach diamonds.

- Caveat: another shard may be better than the diamond shard at planning toward the end of a maze than the diamond shard which "isn't specialized". And if that's the case, then reinforcement events may make the diamond shard continuously less active in maze-solving contexts until it doesn't activate anymore at start-of-maze contexts. It's unclear to me what the hypothesis is for how to prevent this.

- Possibly the hypothesis is captured in this paragraph of Alex, but I don't understand it:

"In particular, even though online self-supervised learning continues to develop the world model and create more advanced concepts, the reward events also keep pinging the invocation of the diamond-abstraction as responsible for reward (because insofar as the agent's diamond-shard guides its decisions, then the diamond-shard'sdiamond-abstraction is in fact responsible for the agent getting reward). The diamond-abstraction gradient starves the AI from exclusively acting on the basis of possible advanced "alien" abstractions which would otherwise have replaced the diamond abstraction. The diamond shard already gets reward effectively, integrating with the rest of the agent's world model and recurrent state, and therefore provides "job security" for the diamond-abstraction. (And once the agent is smart enough, it will want to preserve its diamond abstraction, insofar as that is necessary for the agent to keep achieving its current goals which involve prototypical-diamonds.)" - I don't understand what it means to "ping the invocation of the diamond-abstraction as responsible for reward". I can imagine what it means to have subcircuits whose activation is strengthened on certain inputs, or whose computations (if they were active in the context) are changed in response to reinforcement. And so, I imagine the shard itself to be shaped by reward. But I'm not sure what exactly is meant by pinging the invocation of the diamond abstraction as responsible for reward.

- Possibly the hypothesis is captured in this paragraph of Alex, but I don't understand it:

↑ comment by TurnTrout · 2023-01-19T03:32:51.016Z · LW(p) · GW(p)

what's the idea here for how the agent remains motivated by diamonds even while doing very non-diamond related things like "solving mazes" that are required for general intelligence?

I think that the agent probably learns a bunch of values, many related to gaining knowledge and solving games and such. (People are also like this; notice that raising a community-oriented child does not require a proposal for how the kid will only care about their community, even as they go through school and such.)

Also, other shards will be reinforced (ones that plan how to solve a maze, since they also steer toward the reinforcement event), but the diamond shard is ALWAYS reinforced.

- The idea here is that the diamond shard is contextually activated BY EVERY CONTEXT, and so it is basically one huge circuit thinking about how to reach diamonds that simply gets extended with more sub-computations for how to reach diamonds.

I think this is way stronger of a claim than necessary. I think it's fine if the agent learns some maze-/game-playing shards which do activate while the diamond-shard doesn't -- it's a quantitative question, ultimately. I think an agent which cares about playing games and making diamonds and some other things too, still ends up making diamonds.

I don't understand what it means to "ping the invocation of the diamond-abstraction as responsible for reward".

Credit assignment (AKA policy gradient) credits the diamond-recognizing circuit as responsible for reward, thereby retaining this diamond abstraction in the weights of the network.

Replies from: leon-lang↑ comment by Leon Lang (leon-lang) · 2023-01-20T03:22:48.003Z · LW(p) · GW(p)

Thanks for your answer!

Credit assignment (AKA policy gradient) credits the diamond-recognizing circuit as responsible for reward, thereby retaining this diamond abstraction in the weights of the network.

This is different from how I imagine the situation. In my mind, the diamond-circuit remains simply because it is a good abstraction for making predictions about the world. Its existence is, in my imagination, not related to an RL update process.

Other than that, I think the rest of your comment doesn't quite answer my concern, so I try to formalize it more. Let's work in the simple setting that the policy network has no world model and is simply a non-recurrent function mapping from observations to probability distributions over actions. I imagine a simple version of shard theory to claim that f decomposes as follows:

,

where i is an index for enumerating shards, is the contextual strength of activation of the i-th shard (maybe with ), and is the action-bid of the i-th shard, i.e., the vector of log-probabilities it would like to see for different actions. Then SM is the softmax function, producing the final probabilities.

In your story, the diamond shard starts out as very strong. Let's say it's indexed by and that for most inputs and that has a large "capacity" at its disposal so that it will in principle be able to represent behaviors for many different tasks.

Now, if a new task pops up, like solving a maze, in a specific context , I imagine that two things could happen to make this possible:

- could get updated to also represent this new behavior

- The strength could get weighed down and some other shard could learn to represent this new behavior.

One reason why the latter may happen is that possibly becomes so complicated that it's "hard to attach more behavior to it"; maybe it's just simpler to create an entirely new module that solves this task and doesn't care about diamonds. If something like this happens often enough, then eventually, the diamond shard may lose all its influence.

Replies from: TurnTrout↑ comment by TurnTrout · 2023-01-23T22:00:28.589Z · LW(p) · GW(p)

One reason why the latter may happen is that possibly becomes so complicated that it's "hard to attach more behavior to it"; maybe it's just simpler to create an entirely new module that solves this task and doesn't care about diamonds. If something like this happens often enough, then eventually, the diamond shard may lose all its influence.

I don't currently share your intuitions for this particular technical phenomenon being plausible, but imagine there are other possible reasons this could happen, so sure? I agree that there are some ways the diamond-shard could lose influence. But mostly, again, I expect this to be a quantitative question, and I think experience with people suggests that trying a fun new activity won't wipe away your other important values.

comment by Leon Lang (leon-lang) · 2022-10-02T10:05:36.646Z · LW(p) · GW(p)

This is my first short form. It doesn't have any content, I just want to test the functionality.