Disempowerment spirals as a likely mechanism for existential catastrophe

post by Raymond D, owencb · 2025-04-10T14:37:58.301Z · LW · GW · 6 comments

When complex systems fail, it is often because they have succumbed to what we call "disempowerment spirals" — self-reinforcing feedback loops where an initial threat progressively undermines the system's capacity to respond, leading to accelerating vulnerability and potential collapse.

Consider a city gradually falling under the control of organized crime. The criminal organization doesn't simply overpower existing institutions through sheer force. Rather, it systematically weakens the city's response mechanisms: intimidating witnesses, corrupting law enforcement, and cultivating a reputation that silences opposition. With each incremental weakening of response capacity, the criminal faction acquires more power to further dismantle resistance, creating a downward spiral that can eventually reach a point of no return.

This basic pattern appears across many different domains and scales:

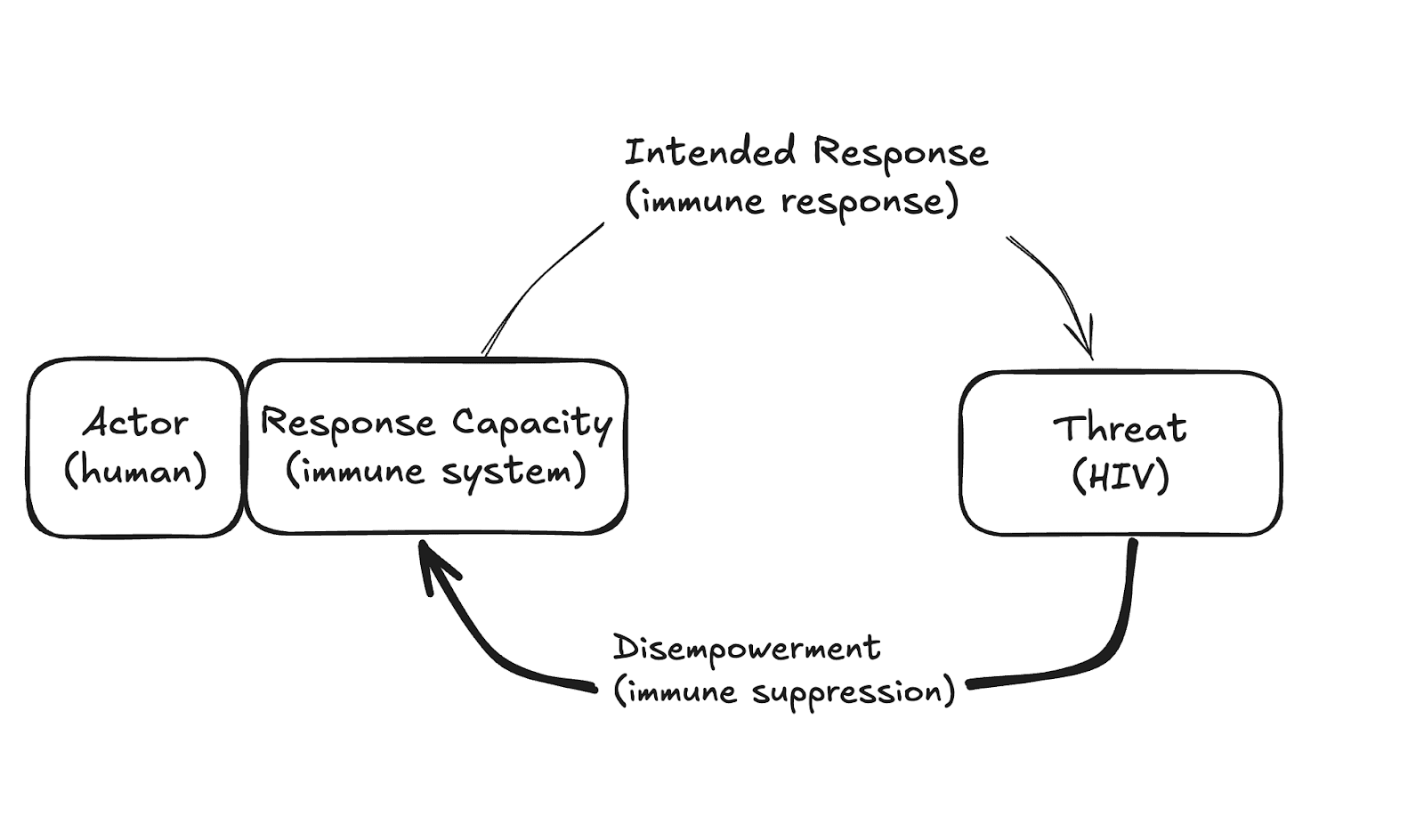

- HIV progressively destroys the immune system designed to fight it.

- Anxiety, burnout, or depression deplete executive function, which is required for taking steps to address the problem.

- Cults methodically isolate members from support networks that might help them leave.

- Corporate toxic cultures drive away the talented employees most capable of fixing them.

- Political polarization erodes the trust necessary for collective problem-solving.

In each case, the threat doesn't just cause damage — it undermines the capacity to respond to that very threat.

Abstracting:

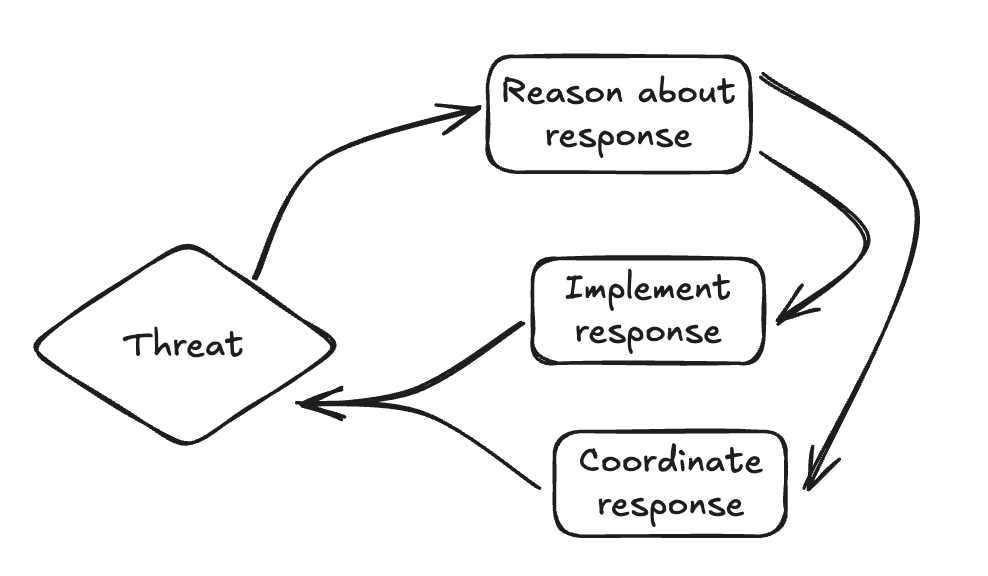

A disempowerment spiral is a feedback loop in which an actor faces an ongoing threat that somehow disempowers the actor’s capacity to respond to that threat. As the actor’s response capacity decreases, they become even less able to prevent further disempowerment.
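To make the loop concrete, here is a minimal toy simulation in Python. It is a sketch under illustrative assumptions, not a model proposed in this post: the function name `simulate_spiral`, the update rules, and every parameter value (`growth`, `response`, `damage`, `recovery`) are hypothetical choices. The threat grows on its own and is pushed back in proportion to the actor's remaining capacity, while the threat in turn erodes that capacity.

```python
def simulate_spiral(threat, capacity, steps=50,
                    growth=0.3, response=0.5, damage=0.2, recovery=0.05):
    """Toy disempowerment spiral; all rules and parameters are illustrative assumptions.

    threat   -- size of the ongoing threat (>= 0)
    capacity -- the actor's response capacity, between 0.0 and 1.0
    Returns a list of (threat, capacity) pairs, one per time step.
    """
    history = [(threat, capacity)]
    for _ in range(steps):
        # The threat compounds by itself and is reduced in proportion to capacity.
        new_threat = threat + growth * threat - response * capacity * threat
        # The threat erodes capacity, which otherwise recovers slowly toward 1.0.
        new_capacity = capacity - damage * threat * capacity + recovery * (1.0 - capacity)
        threat = max(new_threat, 0.0)
        capacity = min(max(new_capacity, 0.0), 1.0)
        history.append((threat, capacity))
    return history


# A small threat is contained; a large one spirals out of control.
for start in (0.1, 2.0):
    final_threat, final_capacity = simulate_spiral(threat=start, capacity=1.0)[-1]
    print(f"initial threat {start}: final threat {final_threat:.3g}, "
          f"final capacity {final_capacity:.2f}")
```

With these made-up parameters, the same actor contains the small threat and recovers, but is overwhelmed by the large one: once capacity falls far enough that the response no longer outpaces the threat's growth, each step leaves the actor less able to respond on the next.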

In this article, we propose disempowerment spirals as a lens for analysing how complex systems fail. Our primary motivation is to better understand existential risks (including AI risk) — since we cannot directly observe existential catastrophes, we need indirect methods to understand them. Disempowerment spirals in particular provide a possible answer to the question “if something gets bad enough, why don’t people just stop it?”.

In the rest of this post, we will first draw out some general observations about disempowerment spirals, and then in the last section turn to a discussion of what this might mean for efforts to reduce existential risk.

Common Themes

Three Types of Response Capacity

What can disempowerment consist of?

In thinking about what actors need to respond to threats, we’ve found it useful to distinguish reasoning capacity (noticing the problem and figuring out what to do) from implementation capacity (actually doing something about the problem). For group actors it’s also sometimes useful to consider coordination capacity (effectively collaborating against the threat).

These capacities together represent the actor’s ability to respond to threats. Things which disempower the actor have an impact on one or more of these dimensions. Often the disempowerment effect seems to act mainly on one of them.

For example:

| Disempowering reasoning capacity | Disempowering implementation capacity | Disempowering coordination capacity |
| --- | --- | --- |
| The actor has a progressively harder time recognizing the problem or figuring out what would help | The actor is progressively enfeebled, and their interventions become relatively less effective | Although individuals may recognize the problem, they cannot rally people around enacting key interventions |
| e.g. Mental illnesses like depression and anxiety lead someone to misjudge what help is available | e.g. Military barrages from a hostile power destroy all facilities for manufacturing semiconductors | e.g. A pandemic creates fear and unrest, making people more sceptical, and less willing to collaborate in certain ways |
| e.g. A group infiltrating an intelligence service tampers with important information | e.g. A person drawn into a cult is persuaded to become more financially dependent | e.g. Political polarisation damages trust and communication within groups |

Sometimes, of course, a disempowerment effect will hit multiple of these things at once. Something which took out telecommunications, for example, would have negative impacts on all three types of response capacity.

Also, this isn’t the only decomposition you can consider. In particular cases it might be helpful to think e.g. about stages of an OODA loop, or parts of a complex institution. But we think the general decomposition has some mileage.

Broad Disempowerment

In theory, we could see a disempowerment spiral effect where the actor is only very narrowly disempowered — in their capacity to respond to that specific threat. Perhaps a spy inside security services who mainly uses their access to cover their own tracks.

In practice, in the large majority of the examples we have considered, the disempowerment is quite broad, reducing capacity in some general way. Perhaps the ability to recognize and plan for new threats is impaired; or physical resources for responding to things are destroyed; or trust and coordination break down.

Polycrises

If a threat causes some measure of broad disempowerment, that could leave the door open for new threats, or flare-ups of existing issues which now see inadequate response. Taking the example of HIV: the breakdown of the immune system per se isn’t what kills people, it’s the fact that otherwise minor infections can suddenly be fatal.

Sometimes disempowerment effects seem more natural to understand in terms of a holistic pattern than a particular individual threat — see e.g. the notions of polycrisis or poverty trap.

This could give reason to flip our perspective on risk: rather than asking ‘what specific threats might this actor face’, you can instead ask ‘how in general might the actor be left unable to respond to threats’. We think this seems like a useful perspective especially when considering scenarios where there are many unpredictable or unknown threats.

Critical Threshold

Early on in a disempowerment spiral, it’s plausible that the actor will get their act together and respond to get the threat under control. If things proceed too far, this may become impossible. (At least without outside intervention.)

Somewhere along the way, a critical threshold was passed. In practice we won’t usually be able to pinpoint when this occurs, but it seems relevant to understand that this point of no return typically comes well before the actor is maximally disempowered or wiped out.

| Threat | Cult membership | Business collapse | Military conquest |
| --- | --- | --- | --- |
| Critical Threshold | Individual becomes too isolated and dependent to be able to leave | Business loses too many key employees to preserve a healthy culture | Country loses too much industrial infrastructure to manufacture weaponry |
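The claim that the point of no return comes well before maximal disempowerment can be illustrated with a toy simulation. This is a sketch under hypothetical assumptions, not a model from this post: the names `advance`, `can_still_recover`, and `find_critical_threshold`, the update rules, and all parameter values are invented for illustration. The threat is left to grow unopposed, and at each step we ask whether a full response starting at that moment would still bring it under control.

```python
def advance(threat, capacity, responding,
            growth=0.3, response=0.5, damage=0.2, recovery=0.05):
    """One time step of a toy spiral; rules and parameters are illustrative assumptions."""
    # The actor only pushes back on the threat if it is actively responding.
    push_back = response * capacity * threat if responding else 0.0
    new_threat = max(threat + growth * threat - push_back, 0.0)
    # The threat erodes capacity, which otherwise recovers slowly toward 1.0.
    new_capacity = capacity - damage * threat * capacity + recovery * (1.0 - capacity)
    return new_threat, min(max(new_capacity, 0.0), 1.0)


def can_still_recover(threat, capacity, horizon=200):
    """True if a full response starting now drives the threat near zero within the horizon."""
    for _ in range(horizon):
        threat, capacity = advance(threat, capacity, responding=True)
    return threat < 1e-3


def find_critical_threshold(threat=0.05, capacity=1.0, max_steps=100):
    """Let the threat grow unopposed; return the first state from which recovery fails."""
    for step_index in range(max_steps):
        if not can_still_recover(threat, capacity):
            return step_index, threat, capacity
        threat, capacity = advance(threat, capacity, responding=False)
    return None  # No threshold was crossed within max_steps.


result = find_critical_threshold()
if result is not None:
    step_index, threat, capacity = result
    print(f"point of no return at step {step_index}: "
          f"threat {threat:.2f}, remaining capacity {capacity:.2f}")
```

In this toy setup, the actor still holds most of its capacity when the threshold is crossed. What has changed is that the threat has grown large enough to erode capacity faster than even a full response can contain it.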

A given spiral can also have several critical thresholds corresponding to different degrees of permanent disempowerment. A pandemic, for instance, could have separate points at which:

- Spread can no longer be limited across the general population

- Industrial and economic development is permanently set back

- Key institutions are lost

- Humanity is eradicated

Not all spirals end with the death of the host system, even if they get completely out of hand. But it may no longer be possible to get them under control — at a minimum, the actor is left weakened in a way they cannot independently undo, and often in a broad way that leaves them more open to other risks.

The concept of a critical threshold seems potentially useful for distinguishing between the actual harms to be avoided and the window of time in which it is possible to meaningfully avoid the harms.

Disempowerment spirals and existential risk

We don’t have a tight argument, but it seems to us that most x-risk (including most AI-related x-risk) would have something of the nature of a disempowerment spiral[1]:

- Exogenous risk (e.g. asteroids, false vacuum collapse) over the next century seems much smaller than endogenous risk

- Right now, humanity is in some sense reasonably empowered over its environment

- If things go very wrong, that’s probably something that people didn’t want — so we lost some empowerment along the way

- It’s somewhat easier to find stories where this happens quasi-continuously rather than abruptly

For AI specifically: misaligned AI takeover probably means a period of humanity becoming disempowered and, short of the most extreme ‘foom’ scenarios, that probably involves a recursive process of resource-gathering. Misuse scenarios and structural risk are in the same category. More broadly, bad AI outcomes seem more likely to arise if there is a breakdown of geopolitical stability and a straining of trust, which we can also model as a disempowerment spiral, or from weird systemic problems that impede humanity’s ability to respond.

Of course, recasting existential risks in terms of disempowerment spirals doesn’t necessarily help us. But if we look to draw practical lessons, here are the ones that seem most prominent to us:

- Analysis of x-risk should focus less on the point where things go maximally badly

- Sometime before the point where everyone is wiped out, or permanently disempowered, will be the critical threshold — when people still have a significant amount of power, but it falls behind the growing amount necessary to contain the problem

- Endgames are, therefore, less important than they appear

- We should invest more in noticing — and containing — nascent problems quickly

- We should focus on staying in control of things that threaten our ability to respond — and we should strive to act quickly and decisively while it is cheap (and/or possible!) to do so

- We should invest broadly in both developing and hardening humanity’s response capacities

- New tools have the potential to radically increase our capacity here

- We should be careful not to assume we’ll only have to deal with one problem at a time — it may be easiest for things to collapse in scenarios where one threat dramatically reduces our response capacity, and others escalate things from there

Thanks to Adam Bales, Toby Ord, Rose Hadshar, and Max Dalton for helpful discussions and comments on earlier drafts.

[1] Actually, we would guess that the strongest response to this might be an argument that humanity is not sufficiently empowered: unable to see the big things coming, or unable to coordinate to control them. But we think this is stretching the point ... there would still, it seems likely, be some process which in its early stages humanity was on top of, but which it would lose control of as it developed.

6 comments


comment by Mitchell_Porter · 2025-04-10T22:44:35.110Z · LW(p) · GW(p)

I strong-upvoted this just for the title alone. If AI takeover is at all gradual, it is very likely to happen via gradual disempowerment.

But it occurs to me that disempowerment can actually feel like empowerment! I am thinking here of the increasing complexity of what AI gives us in response to our prompts. I can enter a simple instruction and get back a video or a research report. That may feel empowering. But all the details are coming from the AI. This means that even in actions initiated by humans, the fraction that directly comes from the human is decreasing. We could call this relative disempowerment. It's not that human will is being frustrated, but rather that the AI contribution is an ever-increasing fraction of what is done.

Arguably, successful alignment of superintelligence produces a world in which 99+% of what happens comes from AI, but it's OK because it is aligned with human volition in some abstract sense. It's not that I am objecting to AI intentions and actions becoming most of what happens, but rather warning that a rising tide of empowerment-by-AI can turn into complete disempowerment thanks to deception or just long-term misalignment... I think everyone already knows this, but I thought I would point it out in this context.

comment by Knight Lee (Max Lee) · 2025-04-10T20:43:53.476Z · LW(p) · GW(p)

My very uncertain opinion is that humanity may be very irrational and a little stupid, but humanity isn't that stupid.

The reason people do not take AI risk and other existential risk seriously is the complete lack of direct evidence (despite plenty of indirect evidence) of its presence. It's easy for you to consider it obvious due to the curse of knowledge, but this kind of "reasoning from first principles (that nothing disproves the risk and therefore the risk is likely)" is very hard for normal people to do.

Before the September 11th attacks, people didn't take airport security seriously because they lacked imagination on how things could go wrong. They considered worst case outcomes as speculative fiction, regardless of how logically plausible they were, because "it never happened before."

After the attacks, the government actually overreacted and created a massive amount of surveillance.

Once the threat starts to do real and serious damage to the systems for defending against threats, the systems actually do wake up and start fighting in earnest. They are like animals which react when attacked, not trees which can be simply chopped down.

Right now the effort against existential risks is extremely tiny. E.g. AI Safety is only $0.1 to $0.2 billion, while the US military budget is $800-$1000 billion, and the world GDP is $100,000 billion ($25,000 billion in the US). It's not just spending which is tiny, but effort in general.

I'm more worried about a very sudden threat which destroys these systems in a single "strike," when the damage done goes from 0% to 100% in one day, rather than gradually passing the point of no return.

But I may be wrong.

Edit: one form of point of no return is if the AI behaves more and more aligned even as it is secretly misaligned (like the AI 2027 story).

comment by Mis-Understandings (robert-k) · 2025-04-10T15:07:31.485Z · LW(p) · GW(p)

There is another analogy where this works. It is like bank failures, where things fall apart slowly, then all at once. That is to say, being past the critical threshold does not guarantee the timing of failure. Specifically, you can't tell if an organization can do something without actually trying to do it. So noticing disempowerment is not helpful if you notice it only after the critical threshold, when you try something and it does not work.

comment by Martín Soto (martinsq) · 2025-04-15T13:47:08.701Z · LW(p) · GW(p)

This post is reminiscent of this old one from Daniel

comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2025-04-11T13:11:21.886Z · LW(p) · GW(p)

A suggestive analogy for AI takeover might be the early-modern European colonial takeovers of the New World and India under Pizarro, Cortés, and Clive.

Colonialism took several hundred years, but the major disempowerment events were relatively quick, involving explicit hostile action using superior technology [and many native allies].

Are you imagining AI takeover to be slower than, or similar to, the campaigns of Pizarro, Cortés, and Clive?

A potential counterargument to gradual disempowerment is that one of the decisive advantages AIs have over humanity and its decision-making processes is their speed of thinking and the speed and coherence of their decision-making (not now, but for future agentic systems). Most decision-making at a national and international level is slow and mostly concerned with symbolism because of the inherent limitations of decision-making at the scale of large human societies [even authoritarian states struggle with good and fast decision-making]. AIs could circumvent this and act much faster and more decisively.

Another argument is that humans aren't stupid. The public doesn't trust AI. When the power of AI is clearly visible it seems likely the public will react given time. Being publicly semi-hostile will incur a response. One shouldn't fall into the sleepwalker bias. Faking alignment and then suddenly striking seems to be a much more rational move than gradual disempowerment.

comment by Martín Soto (martinsq) · 2025-04-15T13:46:47.015Z · LW(p) · GW(p)

Some thoughts skimming this post generated:

If a catastrophe happens, then either:

1. It happened so discontinuously that we couldn't avoid it even with our concentrated effort
2. It happened slowly but for some reason we didn't make a concentrated effort. This could be because:
   - (a) We didn't notice it (e.g. intelligence explosion inside lab)
   - (b) We couldn't coordinate a concentrated effort, even if we all individually would want it to exist (e.g. no way to ensure China isn't racing faster)
   - (c) We didn't act individually rationally (e.g. Trump doesn't listen to advisors / Trump brainwashed by AI)

1 seems unlikelier by the day.

2a is mostly transparency inside labs (and less importantly into economic developments), which is important but at least some people are thinking about it.

There's a lot to think through in 2b and 2c. It might be critical to ensure early takeoff improves them, rather than degrading them (absent any drastic action to the contrary) until late takeoff can land the final blow.

If we assume enough hierarchical power structures, the situation simplifies into "what 5 world leaders do", and then it's pretty clear you mostly want communication channels and trustless agreements for 2b, and improving national decision-making for 2c.

Maybe what I'd be most excited to see from the "systemic risk" crowd is detailed thinking and exemplification on how assuming enough hierarchical power structures is wrong (that is, the outcome depends strongly on things other than what those 5 world leaders do), what are the most x-risk-worrisome additional dynamics in that area, and how to intervene on them.

(Maybe all this is still too abstract, but it cleared my head)