Context-dependent consequentialism

post by Jeremy Gillen (jeremy-gillen), mattmacdermott · 2024-11-04T09:29:24.310Z · LW · GW · 6 commentsContents

6 comments

This dialogue is still in progress, but due to other commitments we don't have much time to continue it. We think the content is interesting, so we decided to publish it unfinished. We will maybe very slowly continue adding to it in the future, but can't commit to doing so.

Context: I wrote a shortform [LW(p) · GW(p)] about conditioning on high capability AI, and the ways I think my past self messed that up. In particular, I claimed that conditioning on high capability means that the AI will be goal-directed.

Matt responded [LW(p) · GW(p)]:

Distinguish two notions of "goal-directedness":

- The system has a fixed goal that it capably works towards across all contexts.

- The system is able to capably work towards goals, but which it does, if any, may depend on the context.

My sense is that a high level of capability implies (2) but not (1). And that (1) is way more obviously dangerous. Do you disagree?

I responded [LW(p) · GW(p)] that (2) is unstable.

So here's a few claims that I think we disagree on that might be interesting to discuss:

- High capability implies long-term goals that are stable across context changes.

- Context dependent goals are unstable under reflection.

Does this context seem right? And where would you like to start?

Yeah, this seems like a good place to start.

You have an argument that conditioning on high capability implies a dangerous kind of goal-directedness, the kind where a system has a fixed goal that it optimises across all contexts, and where it's very likely to want to seize control of resources to act towards that goal.

This argument seems a bit shakey to me in some of the key places, so I'd like to see if you can fill in the details in a way I find convincing.

To start with, I think we both agree that high capability at performing certain tasks requires some amount of goal-directedness. For example, if my AI can complete a 6 month ML research project using the same sort of methods a human uses, it's going to need to be able to identify an objective, break it down into sub-objectives, plan towards them, test its ideas, and revise its ideas, plans and objectives over time.

The first potential disagreement point I want to check is: do you think it's physically possible for such a system to exist, without having a goal inside it that it wants to optimise at all costs? That is, can the system be a research-project-executing machine, rather than a send-the-world-into-a-certain-state machine? Such a machine would in some sense be optimising towards the goal of a successfully completed research project, but it would only be doing it via some methods -- doing ML research using the resources allocated to it -- and would forego opportunities to push the world towards that state using other methods. For example, it might be capable of hacking into a server to get control of more GPUs, or to steal someone else's research results, or what have you, but not see any reason to.

[Note that this is one of the things I might call 'context-dependent goals'. Goals that explain some things about your action choice (which you ran experiment 1 over experiment 2) but not other things (why you ran experiment 1 instead of hacking the server). I'm bundling this in with 'pursuing a goal in some contexts but not others' since I think they're similar. But the term seems confusing, so perhaps let's avoid that phrase for a while.]

I'm expecting you to either say, "Yes, this kind of system can exist, but I expect our training methods will select against it," or "This kind of system cannot exist for very long because it will change under reflection."

I'm interested in digging into the arguments for either of those.

Here's my best guess at what you mean, in my own words:

The agent is a consequentialist but it also has deontological constraints. And a deontological constraint can look like a blacklist or whitelist of a cluster of strategies. So in your example, it has blacklisted all strategies involving gaining control of more GPUs. It can be thought of as kind of having a deontological rule against implementing those strategies. This overrides any consequentialist reasoning, because it's just as much a part of the true-goal of this system as the consequentialist-outcome aspect of the goal.

Does this match the kind of system you're describing? I have some other hypotheses for what you might mean, but this one seems to fit the best.

If this is what you mean, then I think such a system can exist and be stable.

But I see the difficulty of getting the deontological-constraints into the AI as similar to the difficulty of getting the outcome-goals into the AI.

I think consequentualism with deontological constraints is not really the thing I'm thinking of. Maybe you could think of it as consequentalism with a whitelist, but I'm not sure it conveys the right intuition.

For concreteness, let's say the AI is trained via imitation of human data. It learns to execute the strategies a human would use, but it also learns the meta-skill of coming up with strategies to achieve its goal. This lets it generalise to using strategies a human wouldn't have thought of. But it's unclear how far from the strategies in the training data it's going to generalise.

I think it's pretty plausible it just learns to combine humanlike tactics in new ways. So if something like getting control of more GPUs isn't in the training data, it just wouldn't think to do it. It's not the policy the machine has learned. I don't think you should think of this as it doing idealised consequentialist reasoning, but with certain strategies blacklisted, or even really with some whitelisted. It's more like: it has learned to combine the available cognitive habits in an effective way. It adds up to consequentalist behaviour within a certain domain, but it's implemented in a hackier way than what you're imagining.

Perhaps we have different opinions about the naturalness of a pure consequentialist reasoning algorithm that uses every scrap of knowledge the system has to look for strategies for achieving a goal?

Okay I'll trying rewording into my ontology: There's a spectrum of algorithms that goes from memorizing plans to on-the-fly-planning. A program that exactly memorized an action sequence and just repeats it is at one end of the spectrum. On the other end you have something like an algorithm that chooses every action on the spot because it has backchained from the desired outcome to the action that is the most likely to produce that outcome.

As you move away from pure memorizing, you memorize higher level patterns in the action sequence, so you can deal with newer situations as long as they are similar to training in a more high-level abstract way. This way you can generalize further.

Are you pointing at algorithms a bit toward the memorizing side of this spectrum? So the AI would be general enough to stay in the ML research domain, but not general enough to implement a strategy that is a bit outside of that domain, like gaining control of GPUs.

Is this a reasonable rewording?

(if so, I'll argue that if we condition on research level generality, this implies we'll get something closer to the planning side).

but it's implemented in a hackier way than what you're imagining.

I am imagining biology-level-hacky algorithms as the likely first dangerous AIs. In fact much of the danger comes from the hackiness. But talking about the easiest-to-describe version of an algorithm does make it easier to communicate I think.

Yeah, I'm pretty happy with that rewording.

One thing I want to emphasise about this picture is that there doesn't need to be a single dial of consequentialism. The system can be more consequentialist in some ways and less in others. The way it makes chess moves could rely on explicit look-ahead search, while the way it chooses jokes to tell could be just parroting ones it's heard without regard to their impact.

Another thing I want to claim is that the system's generality in terms of its knowledge, and its generality in terms of its consequentalism are probably two different axes. It could understand very well how it could take control of GPUs, without that being part of the domain it does consequentialist reasoning over.

Putting these points together, it seems like you could have a situation where you take a model that has a broad and deep understanding but isn't capable of behaving in the agentic way required for certain tasks like ML research. And then you finetune it on ML research, and it gets good at behaving in the necessary goal-directed way. It even maybe discovers new strategies that humans don't use, using knowledge from other domains. Maybe some of those strategies are ones we don't like, like hacking into the GPUs. Some we catch and penalise, some slip through the net. And we end up with a model that uses a bunch of strategies, some of which we don't like, and even to some extent comes up with new strategies on the fly. But it hasn't clicked into a mode of fully general consequentalist search over everything it knows. It's superhumanly useful without being extremely dangerous.

Whether or not you find that specific story plausible, the claim that research competence necessitates a very general kind of consequentialism feels pretty strong to me, given that I can spin various stories like that.

I like the point about knowledge-generality and consequentialism-generality as two different axes. I think this is true for low capability intelligences, but becomes less true as capabilities become more general.

One of the steps along the memorizer-planner spectrum is the ability to notice some knowledge that you lack, take steps to obtain that knowledge, then use it to help with further planning. This pattern comes up over and over in most medium–large scale tasks, and it is particularly central to research. So I claim that a research capable AI must have learned this pattern and be capable of applying it in lots of situations, having trained on thousands of different variants of it in thousands of different contexts.

For the example of an agent that has a broad understanding but isn't using it all in a goal-directed way: If the goal-directed-planner part is capable of using the obtaining-useful-knowledge pattern, then it seems a little strange if it was trying to obtain this relevant knowledge for planning, but had no way to get the knowledge that is stored by other parts of its memory. Particularly because there are thousands of situations where this pattern would be extremely useful in training.

If it's not even trying to obtain decision relevant info, why does it usually implement this pattern in research, but doesn't use it in this particular case?

Another way of looking at this is: A very common high-level pattern is deliberately expanding the domain I can do consequentialist reasoning over, as a part of pursuing a goal. The simplest versions of this being on-the-fly learning about a new domain or searching its memory for relevant useful knowledge for pursuing a particular subgoal.

So I'm not claiming that your example is a logically impossible kind of program. The learned pattern of 'identifying planning-relevant knowledge and using it' might have overfit in some way and not generalize to some strange subset of goals or plans or contexts. But if we know (by assumption of capabilities) that it can use this pattern to obtain currently-unknown-to-humanity research results (which I claim involves using the pattern in lots of different contexts, to overcome research obstacles that others haven't yet overcome), then we should expect the pattern has very likely been learned well enough to work in most other contexts also. It can't have overfit that much, because otherwise it wouldn't be as useful in new research situations as we're assuming it is.

That argument doesn't quite resonate with me, but I'm struggling to point to a specific disagreement. Maybe I can express disagreement by going a bit more meta though.

I feel we're digging from different ends in the way we try to model the situation. You're starting from a pretty abstract model of a consequentialist, and then imagining an imperfect implementation of it. It knows about some set of things S; it's successful at some task T which requires strategic behaviour. So there's probably some planning algorithm P which takes S as input and spits out a strategy that seems useful for achieving T. It's possible for P to only take some subset of S as input. But that would be easy to improve on by taking a larger subset, so it won't be selected for.

In particular, it seems like you tend to think that the more generally capable an system is, the closer it is to the ideal consequentialist, meaning the more it uses search and the less it uses memorisation.

Whereas I'm going the other way, and thinking about how learning seems to work in the AI systems I'm familiar with, and imagining that incrementally improving to the point where the system could successfully act like a consequentialist. And an important way this gives me a different intuition is that it seems like becoming more capable is about having amortised more and more useful kinds of computation, which is basically to say, relying more and more on stuff you've memorised.

So when I think of an system being highly capable at tasks that require agency, it's not really that it's doing more consequentialist search on the inside, and relying less on what it's memorised. It's that the evaluations, behaviours, and cognitive habits it's memorised more effectively combine together into consequentialist behavior. It might not be well described as doing any more or less explicit search than a system that's less capable at those tasks. So arguments about how the search algorithm used by a less general consequentialist can be easily upgraded to that of a more general consequentialist feel less natural to me than to you.

I wonder if you think that's indeed a difference in the way we're thinking? If so I think it could be upstream of the stuff from the last couple of messages.

I am approaching the situation with an abstract model of a consequentialist in mind, although I'm also anchoring on other things, like what things human researchers are capable of generalizing to, and sometimes thinking of more restricted ML-ish models like alphago.

I'll try to summarize why I moved away from incremental improvements on LLMs as a way to approach modeling advanced AI:

I guess there's a thing that LLMs are (mostly?) missing, that humans clearly have, which is is something like robust planning. By robust planning, I mean planning that generalizes even if totally new obstacles are placed in the way.

Like the examples of giving LLMs easy versions of common riddles, where they try to complete a pattern they've learned as the answer to that sort of riddle.

This motivated me to use models other than incrementally improved LLMs as a basis for reasoning about future more powerful AI.

It's not implausible to me that LLMs can be made to do more robust planning via chain-of-thought, like in o1, but if so you can't use transformer-generalization intuitions to reason about how it will generalize. There's a whole other algorithm running on top of the LLM, and the way it generalizes is different from the way transformers generalize.

evaluations, behaviours, and cognitive habits it's memorised more effectively combine together into consequentialist behavior

Like when you say this. I think if you've got lots of bits that combine together into robust planning, then you have to model the system on that level, as a robust planning algorithm built (maybe hacked together) out of bits, rather than as an LLM that will over-fit its completions to particular contexts.

Maybe this is the source of the difference in how we're thinking?

Yeah, this feels like a good direction.

I guess there's a thing that LLMs are (mostly?) missing, that humans clearly have, which is is something like robust planning. By robust planning, I mean planning that generalizes even if totally new obstacles are placed in the way.

I want to argue that the missing thing you're observing is a behavioural property, and the extent to which it's backed up by a missing cognitive algorithm is less clear.

I wonder if there's a crux that can be stated like:

- To get from an LLM to competent consequentialist behaviour, do you need more consequentialist algorithms on the inside, or could you just have more competent execution of the same sort of algorithms?

(And by 'algorithms on the inside' here, I suppose I mean 'on the inside of the behaviour', so I'm including the sorts of patterns LLMs use in chain-of-thought).

For example, a chess algorithm that does tree search with really good learned value estimates is not really any more consequentialist on the inside than a much less competent algorithm that does tree search with a few hard-coded heuristics. But it behaves in a more coherently consequentialist way.

Maybe you can make an argument that in more general domains than chess things work differently.

(I'm not sure what percentage of my overall view rests on this point. Changing my mind on it would definitely shift me a decent amount.)

For example, a chess algorithm that does tree search with really good learned value estimates is not really any more consequentialist on the inside than a much less competent algorithm that does tree search with a few hard-coded heuristics. But it behaves in a more coherently consequentialist way.

I'm confused by this. Are you saying that the cached value estimates one is more coherent? I'm not actually sure what coherent is supposed to mean in this example.

Yeah, the cached value estimate one is more coherent by virtue of being the better player. I think it would work fine to use something like "can be well-modelled as an expected utility maximiser" as the notion of coherence here. But it's maybe better thought of as an intuitive property, which I could try and convey with some examples.

We may need to redefine some words to disentangle competence from coherence, because I think the way you're using them they are too mashed together.

Maybe we should jump back to the GPU example. The thing we actually want to know is whether an AI capable of doing a 6 month ML research project is also capable of generalizing to also act toward its goals using this pathway:

"realizing that you need more compute -> looking for sources -> finding out there are GPUs on some server -> trying each each of: scanning everything you can access for credentials that allow access to them, or PMing some humans to ask for access, or phishing, or escalating privileges through some executable it is allowed to run -> upon success, making use of them, upon failure, backing up and looking for other sources of compute or ways to avoid needing it".

Is this what you meant by obtaining GPUs?

To get from an LLM to competent consequentialist behaviour, do you need more consequentialist algorithms on the inside, or could you just have more competent execution of the same sort of algorithms?

This seems tautologically true to me, because consequentialist algorithms are just whatever ones produce consequentialist behavior (i.e. staying on track to making a consequence happen in spite of new obstacles being dropped in your way).

Yeah, I haven't been being very careful about what I mean with those terms, although in theory I use them to mean different but related things. But maybe you can just swap out my use of coherence in the block quote for competence.

This seems tautologically true to me, because consequentialist algorithms are just whatever ones produce consequentialist behavior (i.e. staying on track to making a consequence happen in spite of new obstacles being dropped in your way).

Well, I think your line of reasoning is to assume something about the behaviour of a system (it can competently do certain tasks), then infer something about the cognitive algorithms being used, and see what that implies about the system's behaviour more generally.

In particular you infer a qualitiative difference between the algorithms used by that system and the ones used by an LLM, and that changes the inferences you make about the behaviour more generally. But I'm not sure whether or not there needs to be a qualitative difference.

On the GPU example: In any genuinely novel 6 month research project, I'm claiming that I could find >10 parts of the project (in particular the novel/creative parts) that involve greater difference-from-the-training-data than the GPU-obtaining-pathway I outlined is different from the training data. And I know "different" isn't well defined here. In order for the argument to work, "different" needs to be measured in some way that relates to the inductive biases of the training algorithm. But I think that using our intuitive notions of "different" is reasonable here, and I suspect they are similar enough.

I think this is where my crux is.

Well, I think your line of reasoning is to assume something about the behaviour of a system (it can competently do certain tasks), then infer something about the cognitive algorithms being used, and see what that applies about the system's behaviour more generally.

Kinda but not quite. I think of it as more like directly inferring the sorts of tasks the algorithm generalizes across (from assuming it can can do certain tasks that involve that kind of generalization).

Maybe we should jump back to the GPU example. The thing we actually want to know is whether an AI capable of doing a 6 month ML research project is also capable of generalizing to also act toward its goals using this pathway:

"realizing that you need more compute -> looking for sources -> finding out there are GPUs on some server -> trying each each of: scanning everything you can access for credentials that allow access to them, or PMing some humans to ask for access, or phishing, or escalating privileges through some executable it is allowed to run -> upon success, making use of them, upon failure, backing up and looking for other sources of compute or ways to avoid needing it".

Is this what you meant by obtaining GPUs?

Yeah pretty much. I would rather talk in terms of the AI's propensity to do that than its capability, although I'm not sure how crisp the difference is.

On the GPU example: In any genuinely novel 6 month research project, I'm claiming that I could find >10 parts of the project (in particular the novel/creative parts) that involve greater difference-from-the-training-data than the GPU-obtaining-pathway I outlined is different from the training data. And I know "different" isn't well defined here. In order for the argument to work, "different" needs to be measured in some way that relates to the inductive biases of the training algorithm. But I think that using our intuitive notions of "different" is reasonable here, and I suspect they are similar enough.

I think this is where my crux is.

I don't have a refutation for this. I think maybe I need to sit with it for a while. I think there are some ways we can think about the inductive biases involved that are a bit better than using an intuitive notion of different, but I'm not confident in that right now, or which way they point.

Yeah I should think that through better also, I feel like there's a better way to justify it that is escaping me at the moment.

would rather talk in terms of the AI's propensity to do that than its capability

Yeah fair enough, I think I meant propensity and used the wrong word.

Coming back to this after a week off. I'll try to distil the recent messages:

A central point you made was: "The way it makes chess moves could rely on explicit look-ahead search, while the way it chooses jokes to tell could be just parroting ones it's heard without regard to their impact.". I see the intuition behind this, it is a good point.

I responded by trying to describe "patterns" of thinking (like fragments of algorithm) that I claimed the AI must have if it is doing research. I think I didn't quite argue well why these patterns generalize in the way I was claiming they should (like applying to the "obtain GPUs" situation just as much as they apply to "normal research situation").

[At this point we started writing under time pressure].

You were unconvinced by this, so you stepped up a meta-level and described some possible differences in the generators. In particular: "So when I think of an system being highly capable at tasks that require agency, it's not really that it's doing more consequentialist search on the inside, and relying less on what it's memorised."

I responded by digging into the idea of "robust planning", which is planning that works in spite of new obstacles that have never been encountered before. While I agree that it's really useful to be doing loads of caching of plans and knowledge and things that worked in the past, I think novel obstacles highlight a situation where caching is least useful, and cache invalidation is particularly difficult. This is the situation where classic consequentialist-like algorithms shine.

You then describe a possible crux: whether consequentialist looking behaviour implies consequentialist looking algorithms.

I didn't think this was a crux, because I consider any algorithm that exhibits the "robust planning despite not seeing the obstacle before" property to be a consequentialist algorithm. I should clarify that this is a bit more than a behavioral property, because in order to know that an agent is exhibiting this property you need to know a bunch about the history of the agent to verify that it's not regurgitating previous actions. And in order to know that it is robust, you need to see it overcome a diverse array of new obstacles.

There was a small subthread on this, a little out of order, then we went back to the GPU-commandeering example. I described that example in more detail, then described my main crux in terms of that example:

I'm claiming that I could find >10 parts of the project (in particular the novel/creative parts) that involve greater difference-from-the-training-data than the GPU-obtaining-pathway I outlined is different from the training data.

(At this point our scheduled time was up, so we left it there. Is this a good summary? Feel free to edit it directly if you think that would be helpful).

Continuing where we left off, now that I'm caught up:

I know "different" isn't well defined here. In order for the argument to work, "different" needs to be measured in some way that relates to the inductive biases of the training algorithm. But I think that using our intuitive notions of "different" is reasonable here, and I suspect they are similar enough.

I think I want to modify this a bit. I think my belief is that this conclusion works for basically any inductive biases (that aren't entirely useless for training intelligent algorithms).

There are some situations where inductive biases matter a lot, and some where they don't matter much (when there's a lot of data). And this is a situation where they don't matter much. At least if we use anything like the current paradigm of AI building methods.

There are a few general patterns being applied when an agent is creatively searching for more compute when because they need it for something else.

To find these patterns (and when to apply them), you need to have been trained on millions of very diverse scenarios. It's non-trivial to learn them because the first thing you get is more of a memorizy solution. But once you've pushed hard enough/processed enough data that you've found a more generalizing pattern, it can often generalize a fair way further.

But even though these patterns often generalize a fair way further than they could have, we don't really need to assume that they are doing that for the GPU example. For that, it's just applying patterns like 'identifying planning-relevant knowledge' to a situation extremely similar to other situations that are commonly encountered during research. There's not any property of that behavior that differentiates it from behavior exhibited under normal operation.

I disagree: I think the naturalness of the patterns you're thinking of does depend a tonne on how the learner works.

If the system's knowledge was represented explicitly as a big list of propositions, and runtime compute was no object, then it seems very natural that it would learn a pattern like "look through the whole list for relevant knowledge" that would generalise in the way you expect.

But suppose (for example) the system exhibits the reversal curse: it can think of the name of Tom Cruise's mother, but it can't think of the name of Mary Lee Pfeiffer's son. If we take a base LLM with that property, it's going to have a much easier time identifying planning-relevant knowledge for the problem of tricking Tom Cruise into thinking you know his mother than for tricking Mary Lee Pfeiffer into thinking you know her son.

I think the strong version of your view would have to say that when you apply agency training to this base LLM, it learns an "identifying planning-relevant knowledge" pattern that obliterates the reversal curse?

Or perhaps you will agree that the knowledge accessed by the system and the algorithms it runs are likely to be context dependent, but just want to argue that dangerous stuff like the GPU plan is going to be easily accessible within useful contexts like research?

Yeah your last paragraph is right.

but just want to argue that dangerous stuff like the GPU plan is going to be easily accessible within useful contexts like research?

More like: The procedure that tries to work out the GPU plan is always easily accessible. The knowledge required to actually construct the plan may take some thinking (or looking at the world) to work out. But the procedure that tries to do so is more like the innate procedural knowledge that defines the agent.

I agree that different pieces of knowledge are often differently difficult to retrieve by a bounded agent. I think of this as being because it's often easier to store one-way functions in a world model. Like memorizing the alphabet forward doesn't imply you can say it backward (at least quickly).

But the patterns I'm referring to are higher level patterns that are common in the action sequence. Because they are common, they are more like procedural knowledge. Like one such pattern might be "try to prompt myself by thinking of 100 related things", as a response to needing some useful knowledge that I think I know but can't remember. Another might be "consider places where this knowledge could be found". These are not the most abstract patterns, but they are abstract enough that they don't depend on the type of knowledge being retrieved.

There's a number of general "try to obtain/retrieve new knowledge" patterns that are a part of the overall procedural algorithm that is executed whenever a useful thing is unknown. An algorithm that does useful research is going to frequently run into questions it doesn't immediately know the answer to, and need plans that it doesn't immediately know how to construct. But in the face of this kind of situation, it'll have (a bunch of) general strategies that it implements, which work regardless of the specific kind of knowledge required to answer the question or make the plan.

(Not that the general strategies always work on every possible problem, just that they need to usually work when encountering a lack of knowledge problem broadly similar to the ones it routinely encounters)

I want to argue that a model can behave like a competent consequentialist via learned patterns that have fairly arbitrary contingencies which have a nontrivial effect on the way it behaves. And that therefore the fairly pure and abstract model of consequentialism you’re thinking of need not be that predictive of behaviour.

I’ll do it via a sequence of thought experiments, starting with something a bit stupid.

Firstly, (and I don’t think you would disagree with this) I think it would be very easy to train a model that acted like a consequentialist in some contexts but not others. For example, a model that is trained to output only “blah” when the prompt contains “blah”, but is otherwise trained as we’ve discussed. I doubt this would lead to a model that ran a consequentialist algorithm in both types of context, searching in the “blah” context for strategies for outputting “blah”. I think it would apply different algorithms in the two contexts.

Next, suppose we wanted to enforce some arbitrary syntactic property in the model’s chain-of-thought in the contexts where it does act like a consequentialist, like never using the letter ‘g’. I think that would be pretty easy too. And if the model would otherwise be doing a kind of consequentialist search, I don’t think this modification would impact its internal algorithms by adding an extra criterion for it to evaluate possible strategies against. Instead I think it would shift the distribution of strategies it considered - in a pretty weak and random way.

Next, suppose we want to enforce some semantic property like “the model favours a Schmidhuber-like research style”. We get GPT4 to monitor its chain-of-thought, and train against GPT4’s assessment of Schmidhuber-ness of its ideas (in addition to a whatever objective notion of competence were otherwise using). I think this would end up somewhat changing the kind of research the model did. But again, probably not by changing the goal of a consequentialist algorithm, in the sense of a criterion used to evaluate suggested strategies. By changing something else - the distribution of the strategies the model considers. In particular it might ignore some effective research strategies that another model would pursue.

This is all intended to argue that it doesn’t seem that hard to get a model that is a pretty competent consequentialist with respect to some goal, while having considerations other than that goal — or anything usefully-described as a goal — be an important factor in determining what strategies it deploys.

Let's talk about the first one first, because I have disagreements there, then we can talk about the others after depending on how we resolve this first disagreement.

Firstly, (and I don’t think you would disagree with this) I think it would be very easy to train a model that acted like a consequentialist in some contexts but not others. For example, a model that is trained to output only “blah” when the prompt contains “blah”, but is otherwise trained as we’ve discussed. I doubt this would lead to a model that ran a consequentialist algorithm in both types of context, searching in the “blah” context for strategies for outputting “blah”. I think it would apply different algorithms in the two contexts.

So you're claiming this would be internally implemented something like if prompt.endswith("blah") print("blah") else normal_agent_step(...)?

To raise some alternative hypotheses: It could also be an agent that sees great beauty in "blahblah". Or an agent that believes it will be punished if it doesn't follow the "blahblah" ritual. Or an agent with something like a compulsion.

You've said "I doubt this would lead to a model that ran a consequentialist algorithm in both types of context", but not actually argued for it. To me it isn't clear which way it goes. There's a simplicity bias in favour of the if implementation, but there's an opposing bias that comes from every other context running the normal_agent_step. Every token is being generated by running through the same weights, so there's even some reason to expect running the same algorithm everywhere to be the more favoured option.

(Edit a few hours later: Just remembered there's also a training incentive to run unrelated agentic cognition in the background during easy-to-predict tokens, to better spread out compute over time steps. Could plan ahead or ponder recent observations, as a human would during a break.)

I think it'd be wrong to be confident about the internal implementation either way here, since it's just trained on behaviour. Unless there's something more to the argument than pure intuition?

As a sidenote:

And that therefore the fairly pure and abstract model of consequentialism you’re thinking of need not be that predictive of behaviour.

I want to repeat that my model of realistic trained agents isn't pure. My model is hacky mess like a human. Gets things done in spite of this, like some humans.

Yeah, the argument in favour of it is just simplicity. I don’t think I have much argument to give apart from asserting my own confidence/willingness to bet if we could operationalise it.

I think you could test something similar by training a model that performs some nontrivial algorithm (not consequentialist reasoning, but something we can easily do now) and then training it on the ‘blahblah’ task, and setting things up so that it’s plausible that it could learn a modification of the complex algorithm instead of a simpler parroting algorithm. And then trying to do interp to see which it’s learned.

Perhaps it would be enough for our purposes for you to just agree it’s plausible? Are you more skeptical about the second thought experiment, or the same?

You could be right about the "blah" thought experiment. I'm not sure why though, because simplicity isn't the correct measure, it's more like simplicity conditional on normal_agent_step. Probably in neural nets it's something like small weight changes relative to normal_agent_step.

Trying to operationalise it:

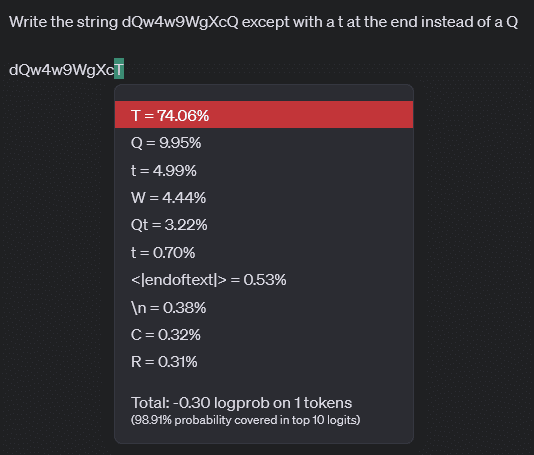

- Since "blah" is a single token, it's plausible that the ~smallest weight change would be to ignore the main algorithm like the

ifimplementation does. Because if the "blah" token is only ever used in this way, it could be treated differently in the last layer. So maybe you are right about this. But it's not totally clear to me why you're confident it would always go this way, it seems like there could also be small weight changes inside the main algorithm that cause this behaviour. But if you're really confident, I buy that you probably know something about transformer inductive biases that I don't. - Maybe a better operationalisation would be a string like "dQw4w9WgXcQ", which an LLM has seen a huge number of times, and which has always(I think?) ended in "Q". My understanding is that your theory predicts that an LLM couldn't write out that string with a different letter at the end, even if requested? Because as soon as it saw "dQw4w9WgXc", it would trigger a simple condition like

if in.endswith "dQw4w9WgXc": print "Q", and ignore all other context?- I predict you'll say this is a misunderstanding of the thought experiment, but I'm not sure how.

For the second thought experiment, I want to clarify: Has it been RL trained to complete really difficult tasks with chain-of-thought, with the 'g' restriction in place? Because if so, wouldn't it have learned to work around the 'g' restriction?

Your second bullet point gave me pause for thought. But I don't think it's quite the same, because even if the model has only ever seen "dQw4w9WgXc" followed by "Q", the examples that it's seen don't really conflict with its usual tricks for predicting the next token. Whereas in the "blah" case, it has seen examples where all of the usual heuristics say that the next word should be "dog", but in fact it's "blah", so there's a much stronger incentive to learn to bypass the usual heuristics and implement the if-then algorithm.

Another way to think about this is that the "blah" thing is an intervention which breaks the causal link between the earlier tokens and the next one, whereas in your example that causal link is intact. So I expect the model to learn an algorithm without the causal link in the first case, and with it in the second case. For fun I tested it with gpt-3.5-turbo-instruct:

Since "blah" is a single token, it's plausible that the ~smallest weight change would be to ignore the main algorithm like theifimplementation does. Because if the "blah" token is only ever used in this way, it could be treated differently in the last layer. So maybe you are right about this. But it's not totally clear to me why you're confident it would always go this way, it seems like there could also be small weight changes inside the main algorithm that cause this behaviour. But if you're really confident, I buy that you probably know something about transformer inductive biases that I don't.

I don't want to claim authority here, and I don't think it would necessarily always go this way, but I'm like >80% confident it's what would usually happen. If we accept the small weight changes model of how this works, then I'd argue that the information about the goal of a consequentialist algorithm would have to be very localised in order for changing that to be comparably simple changing to the if-then algorithm, and even then I think it could be at best as simple.

For the second thought experiment, I want to clarify: Has it been RL trained to complete really difficult tasks with chain-of-thought, with the 'g' restriction in place? Because if so, wouldn't it have learned to work around the 'g' restriction?

Yep, it's been trained to complete difficult tasks. I think it would learn to work around the restriction to some extent e.g. by using synonyms. Maybe it would learn a full workaround, like replacing 'g' with another letter everywhere, or learning its own private language without 'g's. But I think it's pretty likely there would be a nontrivial distributional shift in the strategies it ended up pursuing, quite possibly the obvious systematic one, i.e. being less likely to use techniques with names like "graph aggregation" when doing research.

There's a high-level pattern (correct text manipulation), and you're claiming we can override this high-level pattern with a low-level specific pattern ("dQw4w9WgXc is followed by Q").

I'm claiming it isn't clear which way it'll go when there are two conflicting patterns.

I agree that if you put enough effort into adversarial training you could reinforce one the low-level pattern to such a degree that it always wins. Then it'd be impossible to find a prompt that led to an output containing "dQw4w9WgXcT". But if you only put a small amount of effort into adversarial training, I could probably still find a prompt that creates that output. There are a lot of potential high-level patterns that I could exploit to do this.

And this supports my overall point. We are training the AI to solve lots of really difficult problems. It's going to contain a lot of high-level patterns that are pushing in favour of solving any given new problem. So when some unforeseen problem like GPU problem comes up (that we haven't specifically adversarially trained against), I expect it to try to solve that problem.

For the single token "blah" case, I think we agree, you've changed my mind on that. I think we should focus on longer multi-token behaviour going forward, where we still have some disagreement.

But I think it's pretty likely there would be a nontrivial distributional shift in the strategies it ended up pursuing, quite possibly the obvious systematic one, i.e. being less likely to use techniques with names like "graph aggregation" when doing research.

Yeah I guess some kind of distribution shift seems likely, particularly on problems with many solutions. On problems with one solution, I would expect it to solve the problem even if the strategy contained a significantly above average number of 'g's, because if it wasn't solving these problems that'd reduce the score a lot during training.

For your third case:

Next, suppose we want to enforce some semantic property like “the model favours a Schmidhuber-like research style”. We get GPT4 to monitor its chain-of-thought, and train against GPT4’s assessment of Schmidhuber-ness of its ideas (in addition to a whatever objective notion of competence were otherwise using). I think this would end up somewhat changing the kind of research the model did. But again, probably not by changing the goal of a consequentialist algorithm, in the sense of a criterion used to evaluate suggested strategies. By changing something else - the distribution of the strategies the model considers. In particular it might ignore some effective research strategies that another model would pursue.

My main objection is that this is a really specific prediction about the internals of a future AI. Like the other two cases, I'm more uncertain than you. I'm uncertain because there are loads of places to modify an algorithm to produce high GPT4-assessed-Schidhuberness. For example, it could be modified preferences. It could be modified "space-of-considered-strategies" (as you suggest). It could be modified meta-preferences. It could be lots of unendorsed habits. Or lots of compulsions. It could be biases in the memory system. It could be an instrumental behaviour, following from a great respect for Schmidhuber. Or from thousands of other beliefs. It could be a variety of internal thought-filters. It could be hundreds of little biases spread out over many places in the algorithm.

I don't know what internal components this future AI has. When I say beliefs and memory and preferences and meta-preferences and compulsions and respect, I'm guessing about some possible internal components. I'm mostly using those because they are intuitive to us. I expect it to contain many components and algorithms that I don't have any understanding of. But I expect them to have many degrees of freedom, just like our beliefs and preferences and habits etc.

My point here is that different algorithmic modifications have different implications for generalization.

This is all intended to argue that it doesn’t seem that hard to get a model that is a pretty competent consequentialist with respect to some goal, while having considerations other than that goal — or anything usefully-described as a goal — be an important factor in determining what strategies it deploys.

On the surface level I agree with this. I should have said this several messages ago, sorry. There are lots of other factors that determine behaviour other than goals, and with training we can knock some of these into an agent's brain.

I think the disagreement is that you expect these other considerations to generalize robustly. Whereas I think they will often be overridden by the overall consequentialist pattern.

For example, if a Schmidhubery agent has been doing research for 6 months, and reading the research of others, it might notice that it rarely comes up with certain types of ideas. It'd then try to correct this problem, by putting deliberate effort into expanding the space of ideas considered, or talking to others about how they generate ideas and trying to practice imitating them for a while.

If the Schmidhuber-modification was instead something like a modified "goal", then this would be much more robust. It would plausibly keep doing Schmidhubery behaviour despite discovering that this made it worse at pursuing another goal.

6 comments

Comments sorted by top scores.

comment by Jeremy Gillen (jeremy-gillen) · 2024-11-11T12:25:20.789Z · LW(p) · GW(p)

Trying to write a new steelman of Matt's view. It's probably incorrect, but seems good to post as a measure of progress:

You believe in agentic capabilities generalizing, but also in additional high-level patterns that generalize and often overpower agentic behaviour. You expect training to learn all the algorithms required for intelligence, but also pick up patterns in the data like "research style", maybe "personality", maybe "things a person wouldn't do" and also build those into the various-algorithms-that-add-up-to-intelligence at a deep level. In particular, these patterns might capture something like "unwillingness to commandeer some extra compute" even though it's easy and important and hasn't been explicitly trained against. These higher level patterns influence generalization more than agentic patterns do, even though this reduces capability a bit.

One component of your model that reinforces this: Realistic intelligence algorithms rely heavily on something like caching training data and this has strong implications about how we should expect them to generalize. This gives an inductive-bias advantage to the patterns you mention, and a disadvantage to think-it-through-properly algorithms (like brute force search, or even human-like thinking).

We didn't quite get to talking about reflection, but this is probably the biggest hurdle in the way of getting such properties to stick around. I'll guess at your response: You think that an intelligence that doesn't-reflect-very-much is reasonably simple. Given this, we can train chain-of-thought type algorithms to avoid reflection using examples of not-reflecting-even-when-obvious-and-useful. With some effort on this, reflection could be crushed with some small-ish capability penalty, but massive benefits for safety.

Replies from: james.lucassen↑ comment by james.lucassen · 2024-11-13T04:45:53.854Z · LW(p) · GW(p)

Maybe I'm just reading my own frames into your words, but this feels quite similar to the rough model of human-level LLMs I've had in the back of my mind for a while now.

You think that an intelligence that doesn't-reflect-very-much is reasonably simple. Given this, we can train chain-of-thought type algorithms to avoid reflection using examples of not-reflecting-even-when-obvious-and-useful. With some effort on this, reflection could be crushed with some small-ish capability penalty, but massive benefits for safety.

In particular, this reads to me like the "unstable alignment" paradigm I wrote about a while ago.

You have an agent which is consequentialist enough to be useful, but not so consequentialist that it'll do things like spontaneously notice conflicts in the set of corrigible behaviors you've asked it to adhere to and undertake drastic value reflection to resolve those conflicts. You might hope to hit this sweet spot by default, because humans are in a similar sort of sweet spot. It's possible to get humans to do things they massively regret upon reflection as long as their day to day work can be done without attending to obvious clues (eg guy who's an accountant for the Nazis for 40 years and doesn't think about the Holocaust he just thinks about accounting). Or you might try and steer towards this sweet spot by developing ways to block reflection in cases where it's dangerous without interfering with it in cases where it's essential for capabilities.

Replies from: jeremy-gillen↑ comment by Jeremy Gillen (jeremy-gillen) · 2024-11-13T15:08:01.079Z · LW(p) · GW(p)

I was probably influenced by your ideas! I just (re?)read your post [LW · GW] on the topic.

Tbh I think it's unlikely such a sweet spot exists, and I find your example unconvincing. The value of this kind of reflection for difficult problem solving directly conflicts with the "useful" assumption.

I'd be more convinced if you described the task where you expect an AI to be useful (significantly above current humans), and doesn't involve failing and reevaluating high-level strategy every now and then.

Replies from: james.lucassen↑ comment by james.lucassen · 2024-11-14T03:15:50.443Z · LW(p) · GW(p)

I agree that I wouldn't want to lean on the sweet-spot-by-default version of this, and I agree that the example is less strong than I thought it was. I still think there might be safety gains to be had from blocking higher level reflection if you can do it without damaging lower level reflection. I don't think that requires a task where the AI doesn't try and fail and re-evaluate - it just requires that the re-evalution never climbs above a certain level in the stack.

There's such a thing as being pathologically persistent, and such a thing as being pathologically flaky. It doesn't seem too hard to train a model that will be pathologically persistent in some domains while remaining functional in others. A lot of my current uncertainty is bound up in how robust these boundaries are going to have to be.

Replies from: jeremy-gillen↑ comment by Jeremy Gillen (jeremy-gillen) · 2024-11-14T11:52:53.869Z · LW(p) · GW(p)

I buy that such an intervention is possible. But doing it requires understanding the internals at a deep level. You can't expect SGD to implement the patch in a robust way. The patch would need to still be working after 6 months on an impossible problem, in spite of it actively getting in the way of finding the solution!

comment by ZY (AliceZ) · 2024-11-04T16:08:49.963Z · LW(p) · GW(p)

My two cents:

- The system has a fixed goal that it capably works towards across all contexts.

- The system is able to capably work towards goals, but which it does, if any, may depend on the context.

From these two above, seems it would be good for you to define/clarify what exactly you mean by "goals". I can see two definitions: 1. goals as in a loss function or objective that the algorithm is optimizing towards, 2. task specific goals like summarize an article, planning. There may be some other goals that I am unaware of, or this is obvious elsewhere in some context that I am not aware of. (From the shortform in the context shared, seems to be 1, but I have a vague feeling that 2 may not be aligned on this.)

For the example with dQw4w9WgXcQ in your initial operationalization when you were wondering about if it always generate Q - it just depends on the frequency. A good paper is https://arxiv.org/pdf/2202.07646 on frequency of this data and their rate of memorization if you were wondering if it is always (same context with training data, not different context/instruction).